50 announcements of Build 2018. Part 1. Hardware & Dev Tools

Build, a key Microsoft developer conference, was held in Seattle on May 7–9. We have tried to collect all the key announcements in a short series of articles.

In this issue:

Satya Nadella began his speech with a quote from Mark Weiser from his work 30 years ago about the future of computing. Mark Weiser, then the chief researcher at Xerox PARC, was right in many ways. Technology has become commonplace and is increasingly being dissolved in our lives and our space, affecting companies, society and individuals.

We must do everything possible so that technological benefits reach wider sections of society, and companies and people using them can trust them. Speaking of trust, our focus today is in three directions:

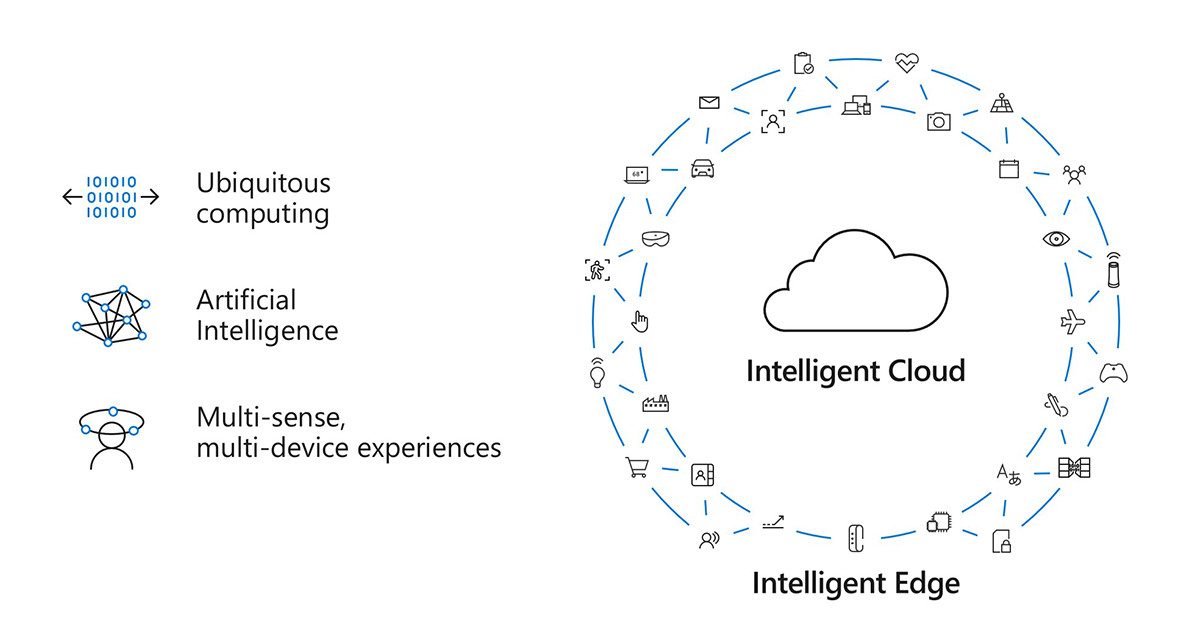

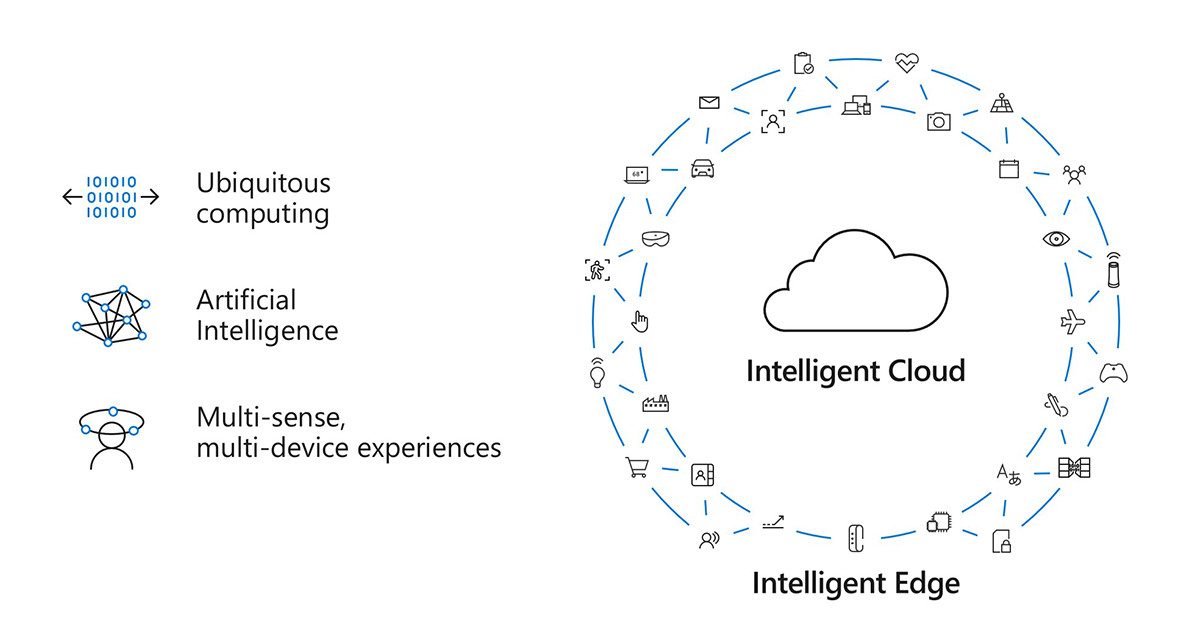

Speaking about Microsoft’s technology agenda, Satya Nadella focused on three areas:

Finally, Satya announced the new AI for Accessibility program , which provides grants and support to research organizations, NGOs, and entrepreneurs to help people with disabilities participate fully in society and the economy.

During the conference, Microsoft announced a number of kits for developers aimed at the tasks of “understanding” the surrounding world - from space scanning to speech analysis.

In addition to these three devices, the conference also showed the SeeD Groove Starter Kit for Azure IoT Edge developer kit and the TeXXmo smart IoT button .

General description .

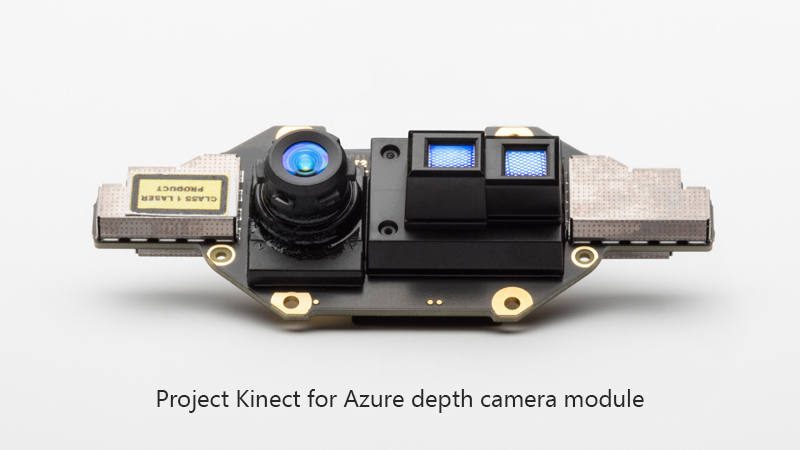

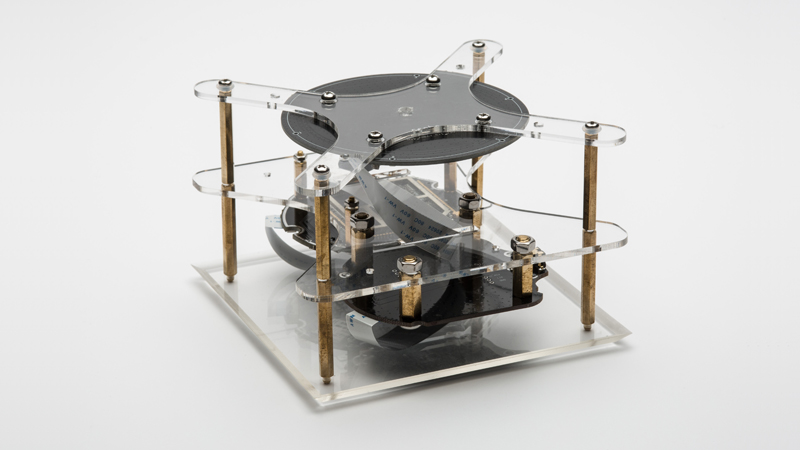

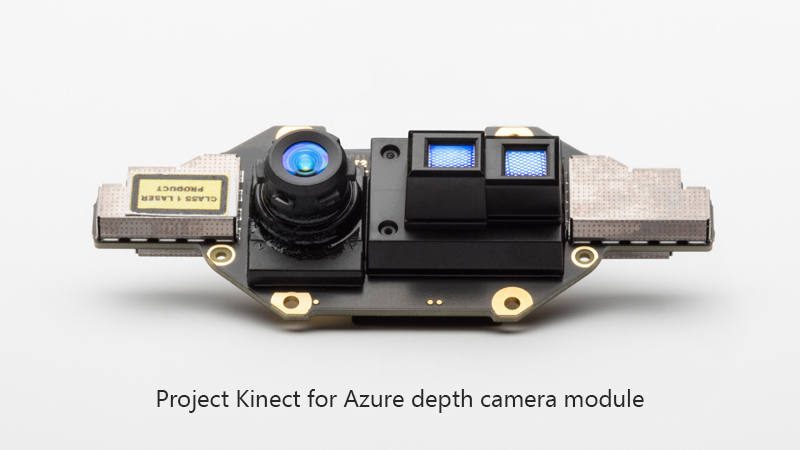

Project Kinect for Azure - a new set of sensors based on developments in Kinect and Hololens, which will also be the main part of the next generation of Hololens! The device includes a new generation depth camera (Time-of-Flight (ToF)), a 4K RGB camera and a set of 360 ° microphones and is aimed at scenarios that require spatial vision, recognition of people and objects, and hand movements.

Project Kinect for Azure combines the capabilities of the device and AI-services in Microsoft Azure. From the link below, Alex Kipman writes that using data from a depth camera can significantly reduce the size of grids for deep learning compared to conventional cameras. Along with this, increasing the overall energy efficiency of the device.

References:

As part of a strategic partnership between Microsoft and Qualcomm Technologies, Inc, we are working to create an AI Developer Kit based on Qualcomm and Azure IoT Edge chipsets. The first project in this direction was the Vision AI Developer Kit based on the Qualcomm QCS603 chipset with hardware accelerated calculation of AI models, with a 4K 8 MP camera, a built-in battery, speakers and a set of microphones, integration with Azure ML and IoT Edge.

References

Speech Devices SDK - a new kit for developers, which will provide high-quality processing of audio data from multichannel sources for more accurate speech recognition with noise reduction, remote sound playback and other functions. The solution combines Microsoft Speech services with development kits from ROOBO.

References

The topic of hardware accelerated computing related to machine learning is of concern to many developers, and cloud companies are no exception.

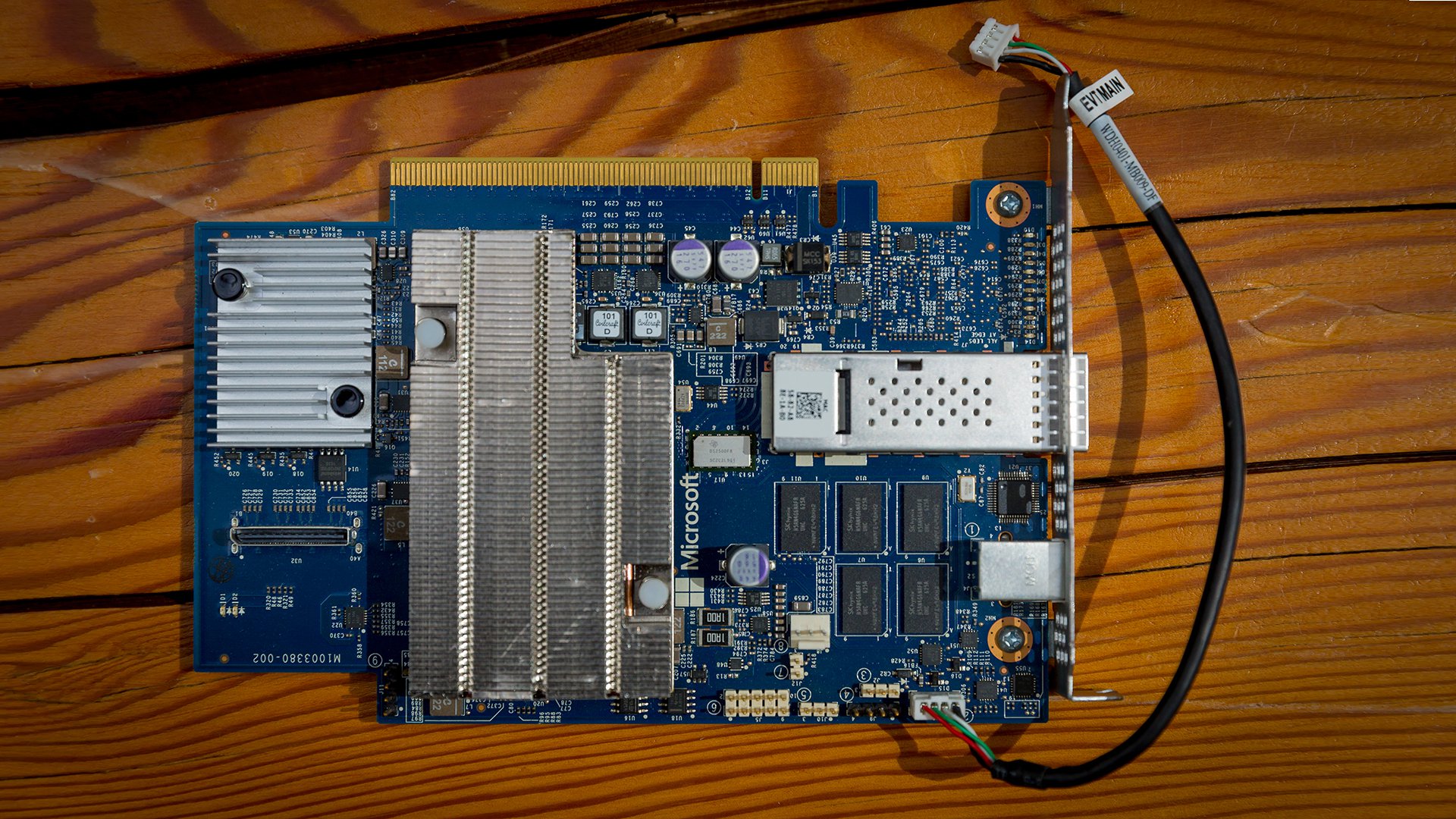

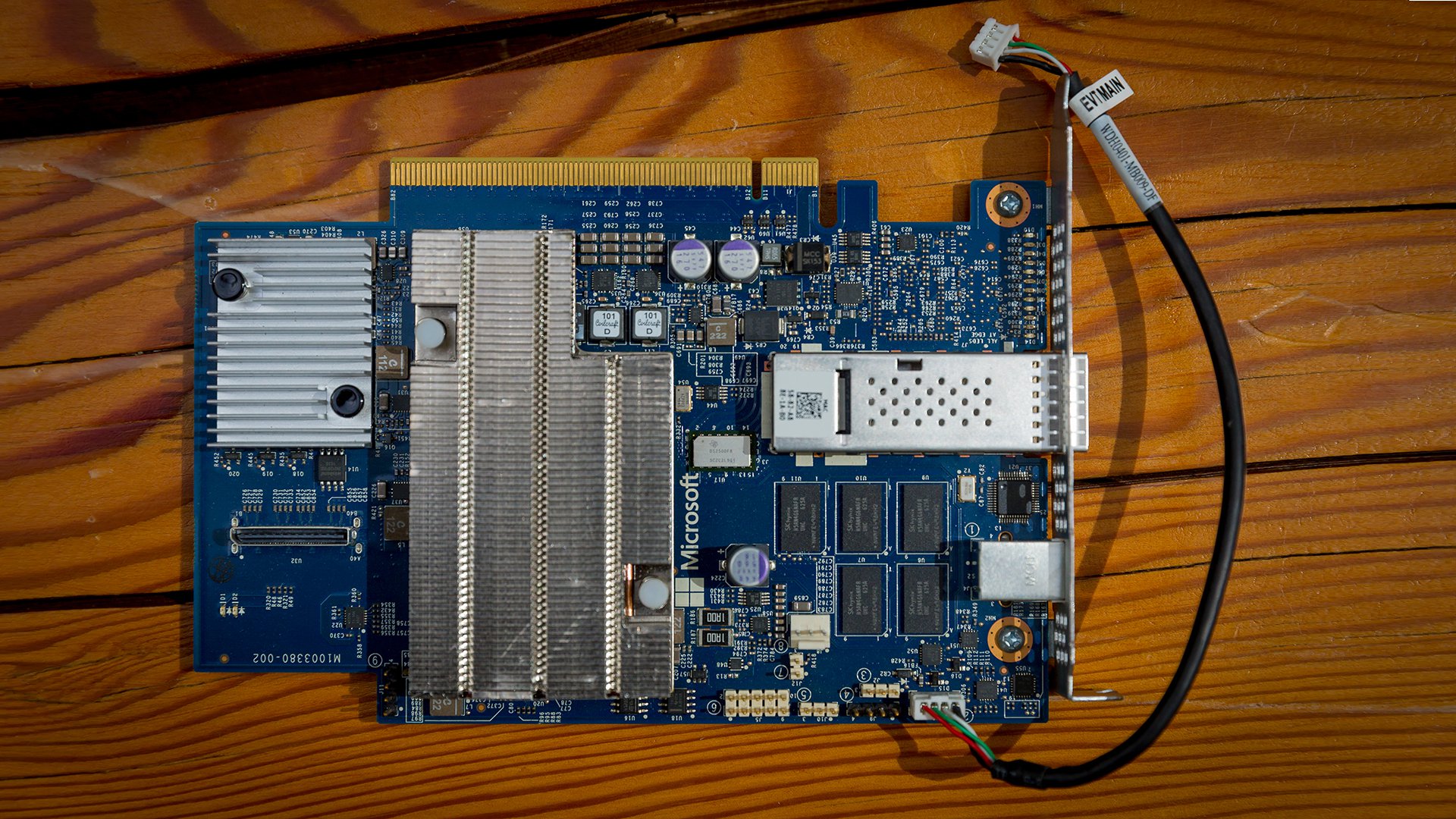

A few years ago, Microsoft Research talked about Project Catapult, an FPGA-based hardware solution used in tasks for the Bing search engine. Later, the project was renamed Project Brainwave, along with plans for the withdrawal of solutions in the form of a cloud service.

At the Build conference, Satya Nadella announced that Project Brainwave is now previewing, being integrated with Azure Machine Learning, using Intel FPGA equipment and neural networks based on ResNet50. AI computing close to real time is getting closer!

References

During the conference, there were many announcements about development tools and DevOps, below are the key ones:

General review .

.NET Core 2.1 has reached the RC stage and is now available with “Go-Live” licensing for use in production. Major improvements:

Announcement .

VS 2017 - 15.7 - Major changes:

VS 2017 - 15.8 Preview - Main innovations:

References

New features:

References

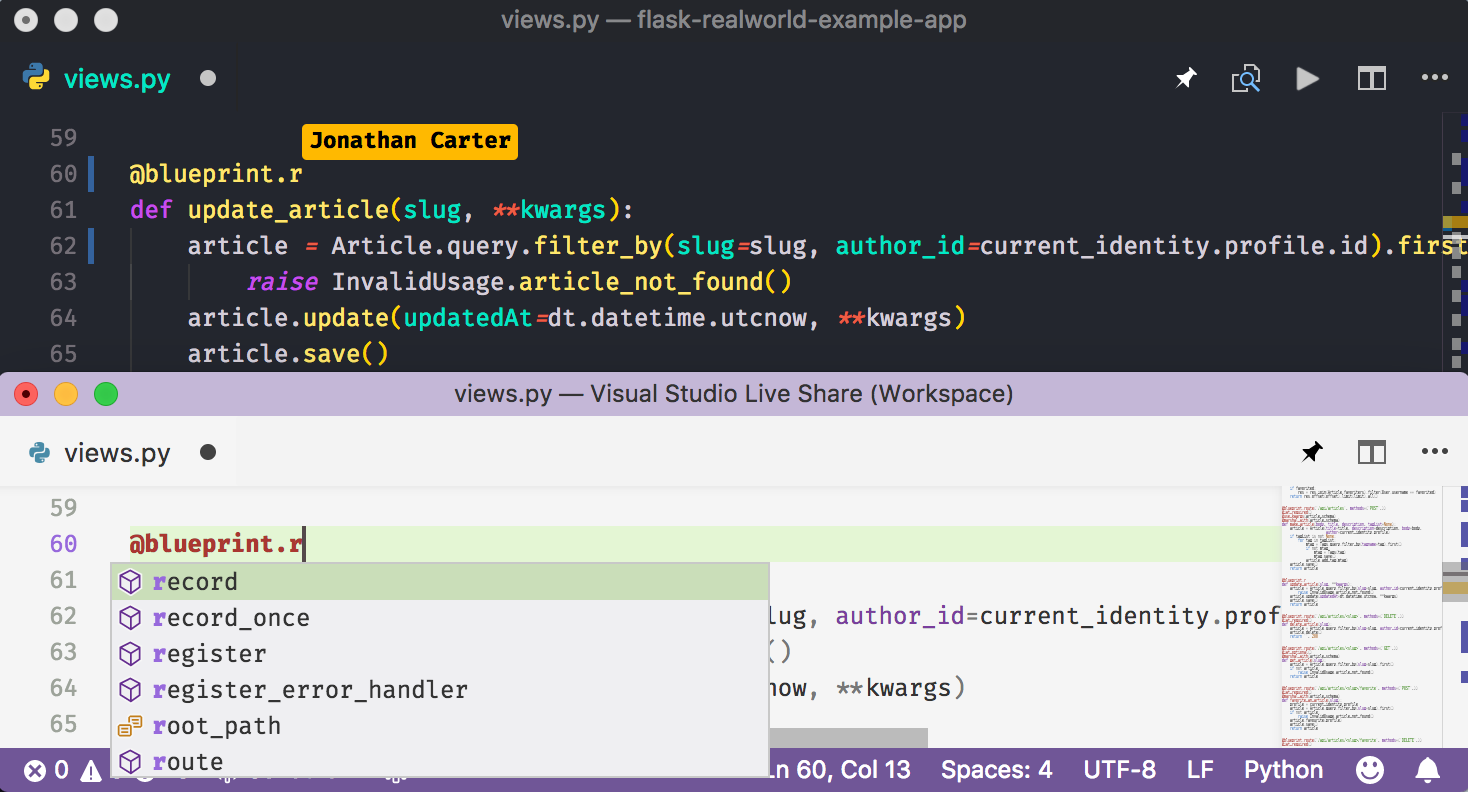

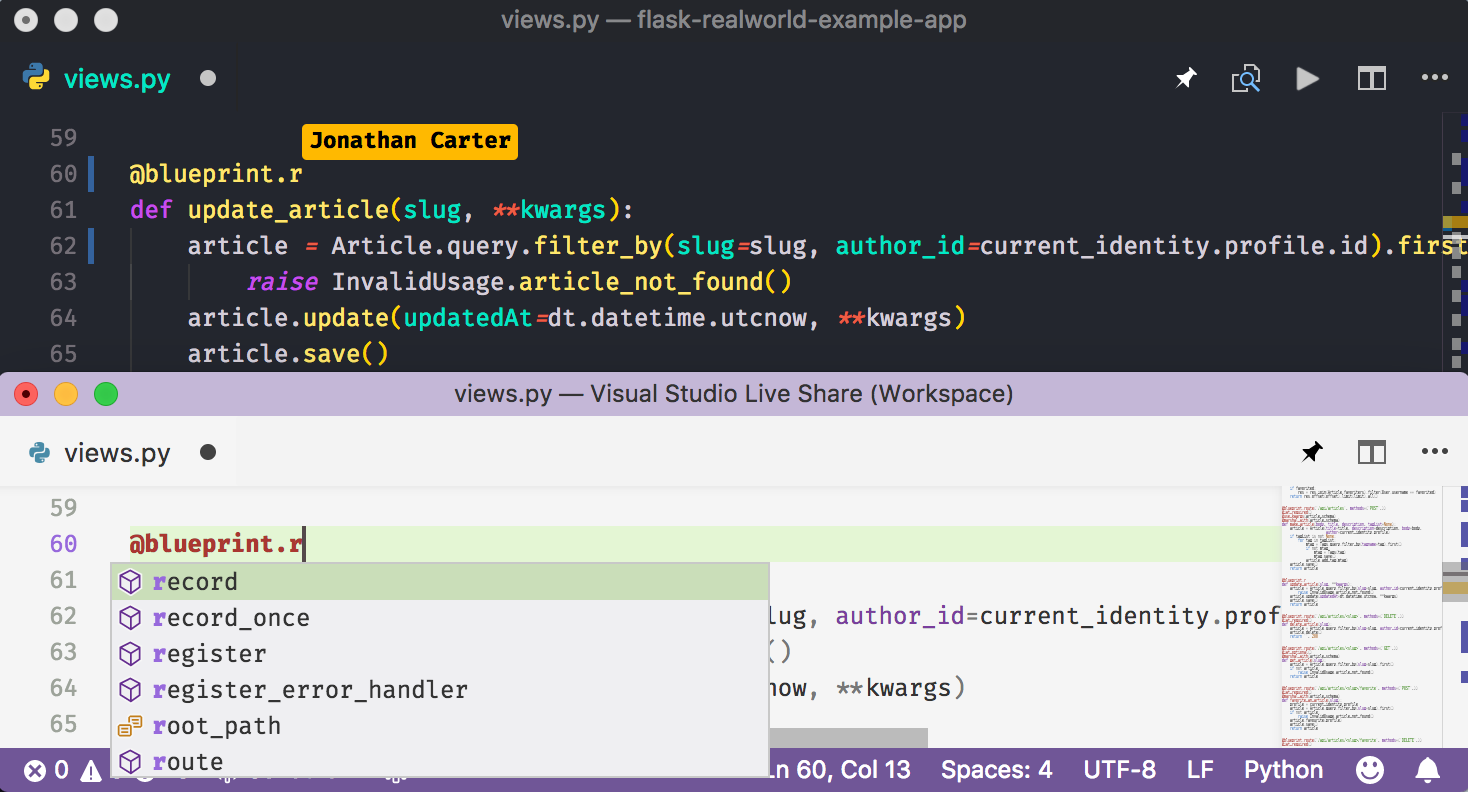

If you have ever worked with someone on a joint project, you know how useful it is to look at a problematic piece of code together and try to figure out what is happening, or explain what you just did.

The subtlety is that before, in order to simultaneously see the same thing, you had to either sit down together, as happens with pair programming, or share a screen with each other, or even try to synchronize remotely, communicating only with voice or comments.

VS Live Share is a new feature for VS Code and Visual Studio, which allows you to share code with a colleague in real time and edit and even debug it together.

References

Although lately we hear a lot of talk about the fact that in the future AI will be able to write code on its own, today a more realistic and applied script is code written by a developer with the support of some AI.

Actually, the question is this. What if some smart agent keeps track of what code you are writing now and knows how you wrote before (perhaps even in the next folder), it understands the context of the project and even keeps abreast of best practices (based on the most popular repositories Github)? And such an agent will help you write the best code.

How exactly, you ask? Well, for example, will it give hints for completing the code not only with an alphabetical list of properties of an object / class, but also put in the beginning the most popular or contextually relevant options? Or will he understand the style of the code in the project and suggest when you get out of style recommendations and even automatically apply them? Or, say, when analyzing the next pull request, he will be able to automatically analyze the code and tell you what to look for?

The new extension for Visual Studio, VS IntelliCode, is aimed precisely at such tasks.

References

Continuous Integration (CI) for mobile developers using GitHub has become easier thanks to the Visual Studio App Center application in the latter’s store.

Continuing to develop collaboration with the open source community of developers, Microsoft announced a new partnership with GitHub, adding the power of Azure DevOps services for GitHub users.

Now developers of applications for iOS, Android, Xamarin and Reac Native with repositories on GitHub can connect their account to the App Center to it to configure automatic assembly of projects from source, test verification (including UI testing). After that, the App Center will update the Pull Request status on GitHub so that you can accept the changes to your project. Using the App Center, you can also configure the publication of applications in application stores, thus automating the entire chain from changes in the code to the delivery of the application to end users.

References:

In the next issue we will talk about Azure and bots!

In this issue:

- What is Satya thinking?

- Perception-Powered Intelligent Edge Dev Kits

- Preview Project Brainwave

- Dev Tools & DevOps

What is Satya thinking?

Satya Nadella opened his keynote with a quote from Mark Weiser's work on the future of computing, written some 30 years ago. Weiser, then the chief researcher at Xerox PARC, was right in many ways: technology has become commonplace and is increasingly dissolving into our lives and surroundings, affecting companies, society, and individuals.

“Today, every aspect of our lives is being changed by digital technology.” On the one hand, this puts a huge opportunity in the hands of developers; on the other hand, with opportunity comes responsibility. The words of Hans Jonas, one of the pioneers of biomedical ethics, are relevant today: act so that the consequences of your actions are compatible with the continuation of genuine human life.

We must do everything possible so that the benefits of technology reach broader sections of society, and so that the companies and people who use it can trust it. Speaking of trust, our focus today is on three areas:

- Privacy. This is a basic human right. Microsoft is actively working to meet GDPR requirements and is defending its customers' rights in the US Supreme Court.

- Cybersecurity. Together with other companies, we must take responsibility for keeping the world secure. Examples include the new cross-industry initiatives Cybersecurity Tech Accord and Digital Geneva Convention.

- Ethics in AI. As we improve our AI algorithms, it is critical that we take the necessary steps to keep them ethical. “We must ask ourselves not only what computers can do, but what they should do.”

Speaking about Microsoft’s technology agenda, Satya Nadella focused on three areas:

- Ubiquitous computing. Cloud computing is becoming more global: Azure services are available from 50 regions, and last year we launched Azure Stack, which makes hybrid scenarios possible. We have also begun actively moving computing and intelligence to end devices through Azure IoT Edge and the recently announced Azure Sphere, a solution for building secure microcontrollers. At the conference, we announced that the Azure IoT Edge runtime will be open-sourced and, together with a number of partners, announced development kits for building smart devices, from embedded devices to drones.

- Artificial intelligence. The industry has made huge progress: in 2016 we saw parity with humans in object recognition tests, in 2017 in speech recognition, and in 2018 in machine reading and translation. But the point is not the achievements themselves; it is turning them into frameworks and tools that developers can use. In addition to development kits for speech and image recognition, including Project Kinect for Azure, we are also updating our cognitive services in the cloud and gradually making a new generation of hardware acceleration for AI computing available to developers through Project Brainwave.

- Multiple devices and sensors. This is a shift from a (developer's) view of the world with devices at the center to one with the person in the foreground. Microsoft 365, which combines Windows and Office, aims to create an experience in which many people, in many locations, can interact through the many sensors of many different devices. One example is Cortana, which is available on many platforms and surfaces; during the conference we announced a partnership with Amazon for the mutual integration of Cortana and Alexa. At the heart of such solutions is the extensible Microsoft Graph, which lets you combine world data, organizational data, and personal data, all while respecting the privacy requirements set by companies and by each of us personally.

Finally, Satya announced the new AI for Accessibility program, which provides grants and support to research organizations, NGOs, and entrepreneurs to help people with disabilities participate fully in society and the economy.

Perception-Powered Intelligent Edge Dev Kits [1-3]

During the conference, Microsoft announced a number of developer kits for tasks that involve “understanding” the surrounding world, from spatial scanning to speech analysis.

- Project Kinect for Azure

- Vision AI Developer Kit

- Speech Devices development kit

In addition to these three devices, the conference also showcased the Seeed Grove Starter Kit for Azure IoT Edge and the teXXmo smart IoT button.

Project Kinect for Azure

Project Kinect for Azure is a new sensor package that builds on developments in Kinect and HoloLens and will also be at the core of the next generation of HoloLens. The device includes a next-generation Time-of-Flight (ToF) depth camera, a 4K RGB camera, and a 360° microphone array, and is aimed at scenarios that require spatial awareness, recognition of people and objects, and hand tracking.

Project Kinect for Azure combines the capabilities of the device with AI services in Microsoft Azure. Alex Kipman writes that using data from a depth camera can significantly reduce the size of the neural networks needed for deep learning compared to conventional cameras, which in turn improves the overall energy efficiency of the device.

Vision AI Developer Kit

As part of a strategic partnership between Microsoft and Qualcomm Technologies, Inc., we are working to create an AI developer kit based on Qualcomm chipsets and Azure IoT Edge. The first project in this direction is the Vision AI Developer Kit, built on the Qualcomm QCS603 chipset with hardware-accelerated AI model computation, a 4K 8 MP camera, a built-in battery, speakers, a microphone array, and integration with Azure ML and IoT Edge.

Speech Devices SDK

The Speech Devices SDK is a new developer kit that provides high-quality processing of audio from multichannel sources for more accurate speech recognition, including noise reduction, far-field voice, and other features. The solution combines Microsoft Speech services with development kits from ROOBO.

Preview Project Brainwave [4]

Hardware-accelerated computing for machine learning matters to many developers, and cloud companies are no exception.

A few years ago, Microsoft Research talked about Project Catapult, an FPGA-based hardware solution used for Bing search engine workloads. Later, the project was renamed Project Brainwave, along with plans to offer it as a cloud service.

At the Build conference, Satya Nadella announced that Project Brainwave is now in preview, integrated with Azure Machine Learning, running on Intel FPGA hardware and supporting neural networks based on ResNet50. Near-real-time AI computing is getting closer!

Dev Tools & DevOps [5-10]

The conference brought many announcements about development tools and DevOps. The key ones are:

- .NET Core 2.1 RC with “Go-Live” License

- Visual Studio 2017 release - 15.7 and 15.8 Preview

- Visual Studio for Mac - 7.5+

- Visual Studio Live Share - code collaboration

- Visual Studio IntelliCode - AI tips

- Mobile CI

- Azure DevOps + GitHub

.NET Core 2.1 RC

.NET Core 2.1 has reached the RC stage and is now available with “Go-Live” licensing for use in production. Major improvements:

- Significant overall improvements in build and runtime performance (for example, ASP.NET Core 2.1 is 15% faster than 2.0), as well as new deployment models and extensibility through .NET Core Global Tools.

- Support for Alpine Linux and Linux ARM32 distributions (e.g. Raspbian and Ubuntu).

- Support for Brotli compression.

- New Cryptography APIs.

- ASP.NET Core SignalR. SignalR can now work cross-platform and with improved performance based on .NET Core. The availability of SignalR as an Azure service has also been announced.

- ASP.NET Core: Razor UI support for class libraries, the new Identity UI library and the HttpClientFactory class, as well as security improvements.

- Entity Framework Core 2.1: support for lazy loading, data seeding, new data providers, and improved support for Cosmos DB.
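
To make the lazy loading item above more concrete, here is a minimal sketch of the general pattern in Python. It is not the Entity Framework Core API: the `Blog` class, the `load_posts` callback, and `fake_query` are hypothetical names invented for this illustration. The idea is only that related data is fetched on first access rather than when the entity is created.

```python
from typing import Callable, List, Optional


class Blog:
    """Hypothetical entity whose related posts are fetched only on first access."""

    def __init__(self, name: str, load_posts: Callable[[str], List[str]]):
        self.name = name
        self._load_posts = load_posts            # hypothetical data-access callback
        self._posts: Optional[List[str]] = None  # None means "not loaded yet"

    @property
    def posts(self) -> List[str]:
        # Run the query once, on first access, then reuse the cached result.
        if self._posts is None:
            self._posts = self._load_posts(self.name)
        return self._posts


calls: List[str] = []  # records every simulated database query


def fake_query(blog_name: str) -> List[str]:
    """Stand-in for a real database round trip."""
    calls.append(blog_name)
    return [f"{blog_name}: post {i}" for i in (1, 2)]


blog = Blog("dotnet", fake_query)   # creating the entity runs no query
first = blog.posts                  # first access triggers the query
second = blog.posts                 # second access is served from the cache
print(len(calls))                   # 1
```

The trade-off is the usual one for lazy loading: less data is fetched up front, at the cost of a hidden query at the point of first access.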

Visual Studio 2017 release - 15.7 and 15.8 Preview

VS 2017 - 15.7 - Major changes:

- Installer update. VS now has an option to check for updates (Help -> Check for Updates), and during installation you can specify more flexibly where each component is installed.

- Performance improvements. Debug windows are now asynchronous, the Xamarin runtime is loaded onto devices during the build (to speed up the whole process), and background analysis of closed files can be made optional for TypeScript.

- Editor improvements. New code refactorings (for example, converting between foreach and for loops in C# and VB, and expanding LINQ queries into foreach loops), IntelliSense for XAML conditional blocks, Xamarin.Forms code hints switched to the engine used for WPF and UWP, support for TypeScript 2.8 and for ClangFormat in C++, and finally conformance with the C++11, C++14, and C++17 standards.

- Debugging and diagnostics. Support for IntelliTrace events and snapshots for .NET Core applications, support for authenticated Source Link requests for VSTS and private GitHub repositories, and the ability to set breakpoints and debug JS code in ASP.NET and ASP.NET Core projects using Microsoft Edge.

- Mobile development. Support for the Android Oreo SDK, and development improvements for Apple platforms, including support for a static type system and simplifying the deployment of applications on iOS devices.

- Web and cloud development. Deploy non-containerized applications on Azure App Service on Linux and simplified integration with Azure Key Vault.

- UWP development. Support for Windows 10 April 2018 Update SDK, support for automatic updates for applications installed outside the Microsoft Store (sideloading), a new project type “Optional Code Package”.

VS 2017 - 15.8 Preview - Main innovations:

- C++ Quick Info tooltips for macros now show what the macro expands to, not just its definition.

- Library Manager (LibMan) support for managing client libraries in web projects.

- Simplified addition of container support for web projects on ASP.NET Core.

Visual Studio for Mac - 7.5+

New features:

- Web development. ASP.NET Core - Full Razor support in the editor, as well as support for JavaScript and TypeScript.

- Mobile development. For iOS developers, debugging over WiFi has been added for iOS and tvOS. For Android developers, the SDK and device managers have been updated. For Xamarin developers, the XAML editing experience has been improved.

- Cloud development. Support for developing Azure Functions on .NET Core.

- Support for .NET Core 2.1 RC and C# 7.2.

- Support for stylistic rules for projects through .editorconfig files.

- Preview of TF Version Control support for TFS and VSTS.

Code Collaboration

If you have ever worked with someone on a joint project, you know how useful it is to look at a problematic piece of code together and figure out what is happening, or to explain what you have just done.

The catch is that, until now, seeing the same thing at the same time meant either sitting down together, as in pair programming, or sharing a screen, or even trying to stay in sync remotely using only voice or comments.

VS Live Share is a new feature for VS Code and Visual Studio that lets you share code with a colleague in real time and edit and even debug it together.

Artificial Intelligence Tips

Although we have lately heard a lot of talk that AI will one day be able to write code on its own, a more realistic and practical scenario today is code written by a developer with the support of AI.

The question is this: what if a smart agent keeps track of the code you are writing now, knows how you wrote code before (perhaps even in the next folder), understands the context of the project, and keeps up with best practices (based on the most popular GitHub repositories)? Such an agent could help you write better code.

How exactly, you ask? For example, when completing code, it could do more than show an alphabetical list of an object's or class's members and put the most popular or contextually relevant options first. Or it could learn the code style of the project, point out where you deviate from it, and even apply the fixes automatically. Or, when reviewing the next pull request, it could analyze the code automatically and tell you what to look at.

The new extension for Visual Studio, VS IntelliCode, is aimed at precisely these tasks.
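
The first of these ideas, putting the most relevant completions ahead of the alphabetical list, can be sketched in a few lines of Python. This is only an illustration under simple assumptions: it ranks by raw usage counts, whereas IntelliCode's actual model is not described in this article. The function name `rank_completions` and the sample data are hypothetical.

```python
from collections import Counter
from typing import Iterable, List


def rank_completions(members: Iterable[str],
                     observed_usage: Iterable[str],
                     top: int = 3) -> List[str]:
    """Put the most frequently used members first, then the rest alphabetically."""
    usage = Counter(observed_usage)
    alphabetical = sorted(set(members))
    # Most used first; ties are broken alphabetically.
    by_usage = sorted(alphabetical, key=lambda m: (-usage[m], m))
    starred = [m for m in by_usage if usage[m] > 0][:top]
    rest = [m for m in alphabetical if m not in starred]
    return starred + rest


# Hypothetical members of a string-builder-like class and calls seen in a code base.
members = ["Append", "Clear", "Insert", "Length", "ToString"]
seen_in_code = ["ToString", "Append", "Append", "Length", "Append", "ToString"]

ranked = rank_completions(members, seen_in_code, top=2)
print(ranked)  # ['Append', 'ToString', 'Clear', 'Insert', 'Length']
```

A context-aware system would replace the global counts with counts conditioned on the surrounding code, but the presentation idea (a short starred list followed by the familiar alphabetical one) stays the same.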

Mobile CI

Continuous integration (CI) for mobile developers using GitHub has become easier thanks to the Visual Studio App Center app in the GitHub Marketplace.

Continuing to build its collaboration with the open source developer community, Microsoft announced a new partnership with GitHub that brings the power of Azure DevOps services to GitHub users.

Developers of iOS, Android, Xamarin, and React Native applications with repositories on GitHub can now connect their account to App Center and configure automatic builds from source along with automated testing (including UI testing). App Center then updates the pull request status on GitHub so that you can decide whether to accept the changes into your project. With App Center you can also configure publishing to app stores, automating the entire chain from a code change to delivery of the application to end users.
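
App Center performs the status update itself, so nothing needs to be written by hand. Still, to show roughly what "updating the pull request status" involves, here is a hedged Python sketch that builds the payload for GitHub's commit status API (`POST /repos/{owner}/{repo}/statuses/{sha}`). The mapping from build results to states, the `ci/mobile-build` context label, and the example URLs are assumptions made for this illustration, not App Center's actual behavior. The sketch only constructs the request data and sends nothing.

```python
import json
from typing import Dict

# States accepted by GitHub's commit status API.
VALID_STATES = {"error", "failure", "pending", "success"}


def build_commit_status(build_ok: bool, tests_failed: int, build_url: str) -> Dict[str, str]:
    """Translate a CI result into a commit status payload (assumed mapping)."""
    if not build_ok:
        state, description = "error", "Build failed"
    elif tests_failed > 0:
        state, description = "failure", f"{tests_failed} test(s) failed"
    else:
        state, description = "success", "Build and tests passed"
    return {
        "state": state,
        "target_url": build_url,       # link back to the CI build page
        "description": description,
        "context": "ci/mobile-build",  # hypothetical label shown on the pull request
    }


def status_endpoint(owner: str, repo: str, sha: str) -> str:
    """URL of the commit status endpoint for a given commit."""
    return f"https://api.github.com/repos/{owner}/{repo}/statuses/{sha}"


payload = build_commit_status(True, 0, "https://example.com/builds/42")
print(status_endpoint("octo", "app", "abc123"))
print(json.dumps(payload, indent=2))
```

GitHub attaches the status to the head commit of the pull request, which is what makes the check appear next to the merge button.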

In the next issue we will talk about Azure and bots!