Introducing the Cloud: How Static Traffic Distribution Methods Work

Load balancing in the cloud of the IaaS provider helps to efficiently use the resources of virtual machines. There are many load balancing methods, but in today's article we will dwell on some of the most popular static methods: round-robin, CMA, and threshold algorithm. Under the cut, we’ll talk about how they are arranged, what are their characteristics and where are they used.

/ Flickr / woodleywonderworks / CC

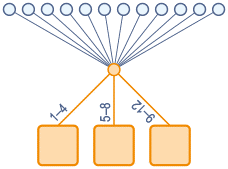

Static load balancing methods imply that the state of individual nodes is not taken into account when distributing traffic. Information about their parameters is "registered" in advance. And it turns out that there is a “ binding ” to a specific server.

Static distribution - binding to one machine.

Server selection can be determined by various factors, for example, the geographical location of the client, or it can be chosen randomly.

This algorithm distributes the load evenly between all nodes. In this case, the tasks do not have priorities: the first node is randomly selected, and the rest are further in order. When the number of servers ends, the queue reverts back to the first.

One implementation of this algorithm is round-robin DNS . In this case, DNS does not respond to requests with a single IP address, but with a list of several addresses. When a user makes a name resolution request for a site, the DNS server assigns a new connection to the first server in the list. This constantly redistributes the load on the server group.

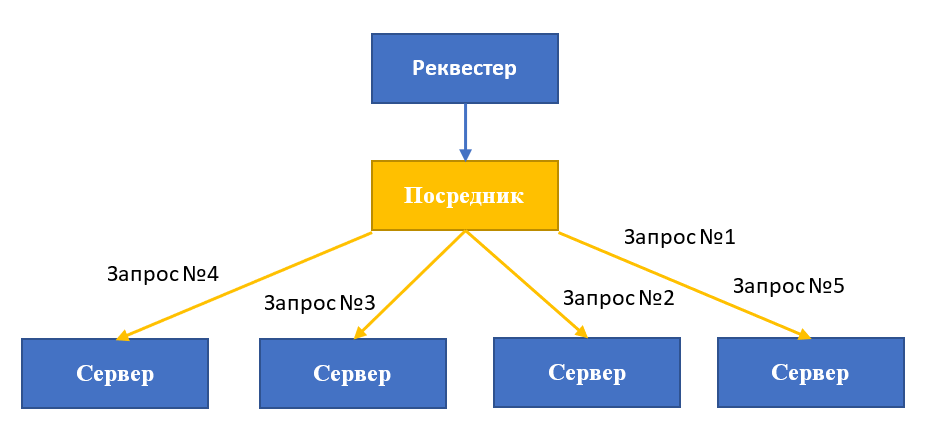

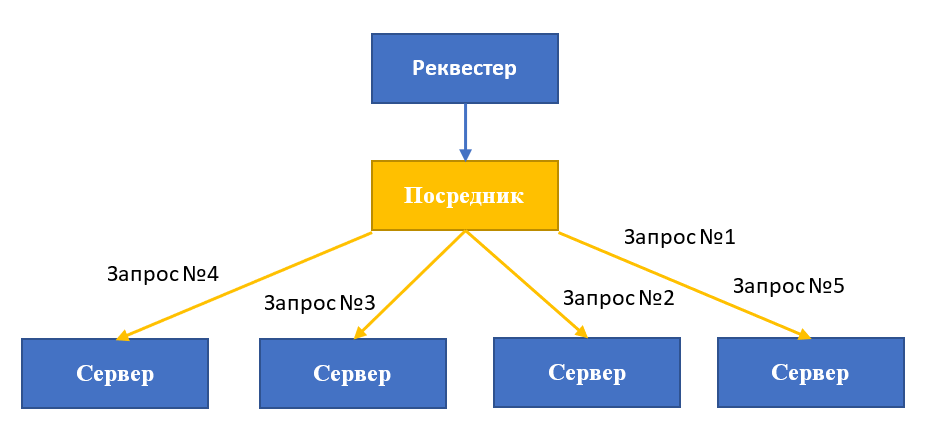

Today, this approach is used to distribute resources both within the data center and between individual data centers. Round-robin usuallyimplemented using reverse proxies, haproxy, apache and nginx. The mediation application receives all incoming messages from external clients, maintains a list of servers and monitors the translation of requests.

Imagine we have deployed two environments with highly available Tomcat 7 application servers and nginx balancers. DNS servers “bind” several IP addresses to the domain name, adding the external addresses of the balancers to the type A resource record . Next, the DNS server forwards the list of IP addresses of the available servers, and the client "tries" them in order and establishes a connection with the first responder.

Note that this approach is not without drawbacks. For example, DNS does not check servers for errors and does not exclude identifiers of disconnected VMs from the list of IP addresses. Therefore, if one of the servers is unavailable, may occur delays in processing requests (about 10-30 seconds).

Another problem is that you need to adjust the cache life of the table with addresses. If the value is too large, clients will not know about the changes in the server group, and if too small, the load on the DNS server will seriously increase.

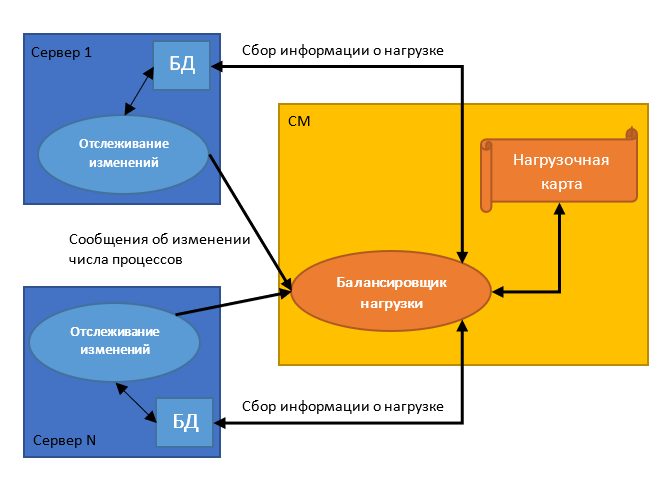

In this algorithm, a central handler (CM) determines the host for the new process by selecting the least loaded server. The central processor makes a choice based on the information that the servers send to it each time when the number of processed tasks changes (for example, when creating a child process or terminating it).

A similar approach is used by IBM in the Guardium solution. The application collects and constantly updates information about all managed modules and creates on its basis the so-called load card. With its help, data flow control is performed. The balancer accepts HTTPS requests from S-TAP , a traffic monitoring tool, by listening on port 8443 and using Transport Layer Security (TLS).

Central Manager Algorithm allows you to more evenly distribute the load, since the decision about the assignment of the process to the server is made at the time of its creation. However, the approach has one serious drawback - this is a large number of interprocess interactions, which leads to the emergence of a “bottleneck”. In this case, the central processor itself is a single point of failure.

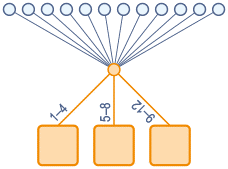

Processes are assigned to hosts immediately when they are created. At the same time, the server can be in one of three states determined by two threshold values - t_upper and t_under.

If the system is in a balanced state, no further action is taken. If an imbalance is observed, the system takes one of two actions: it sends a request to increase the load or, conversely, reduce it.

In the first case, the central server evaluates which tasks that have not yet begun to be performed by other hosts can be delegated to the node. Indian scientists from the University of Guru Gobind Singh Indrastrasta in their study, which they devoted to evaluating the effectiveness of the threshold algorithm in heterogeneous systems, give an example of a function that nodes can use to send a request for increasing the load:

In the second case, the central server can take away from the node those tasks that it has not yet begun to perform, and if possible transfer them to another server. To start the migration process, scientists used the following function:

When load balancing is performed , the servers send a message about changing their process counter to other hosts so that they update their system status information. The advantage of this approach is that the exchange of such messages is rare, because with a sufficient number of server resources, it simply starts a new process at home - this increases productivity.

Static distribution - binding to one machine.

Server selection can be determined by various factors, for example, the geographical location of the client, or it can be chosen randomly.

Round robin

This algorithm distributes the load evenly between all nodes. In this case, the tasks do not have priorities: the first node is randomly selected, and the rest are further in order. When the number of servers ends, the queue reverts back to the first.

One implementation of this algorithm is round-robin DNS . In this case, DNS does not respond to requests with a single IP address, but with a list of several addresses. When a user makes a name resolution request for a site, the DNS server assigns a new connection to the first server in the list. This constantly redistributes the load on the server group.

Today, this approach is used to distribute resources both within the data center and between individual data centers. Round-robin usuallyimplemented using reverse proxies, haproxy, apache and nginx. The mediation application receives all incoming messages from external clients, maintains a list of servers and monitors the translation of requests.

Imagine we have deployed two environments with highly available Tomcat 7 application servers and nginx balancers. DNS servers “bind” several IP addresses to the domain name, adding the external addresses of the balancers to the type A resource record . Next, the DNS server forwards the list of IP addresses of the available servers, and the client "tries" them in order and establishes a connection with the first responder.

Note that this approach is not without drawbacks. For example, DNS does not check servers for errors and does not exclude identifiers of disconnected VMs from the list of IP addresses. Therefore, if one of the servers is unavailable, may occur delays in processing requests (about 10-30 seconds).

Another problem is that you need to adjust the cache life of the table with addresses. If the value is too large, clients will not know about the changes in the server group, and if too small, the load on the DNS server will seriously increase.

Central manager algorithm

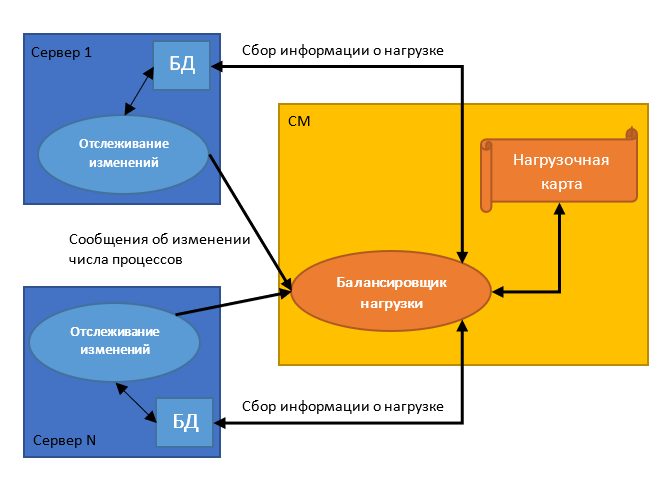

In this algorithm, a central handler (CM) determines the host for the new process by selecting the least loaded server. The central processor makes a choice based on the information that the servers send to it each time when the number of processed tasks changes (for example, when creating a child process or terminating it).

A similar approach is used by IBM in the Guardium solution. The application collects and constantly updates information about all managed modules and creates on its basis the so-called load card. With its help, data flow control is performed. The balancer accepts HTTPS requests from S-TAP , a traffic monitoring tool, by listening on port 8443 and using Transport Layer Security (TLS).

Central Manager Algorithm allows you to more evenly distribute the load, since the decision about the assignment of the process to the server is made at the time of its creation. However, the approach has one serious drawback - this is a large number of interprocess interactions, which leads to the emergence of a “bottleneck”. In this case, the central processor itself is a single point of failure.

Threshold Algorithm

Processes are assigned to hosts immediately when they are created. At the same time, the server can be in one of three states determined by two threshold values - t_upper and t_under.

- Not loaded: load <t_under

- Balanced: t_under ≤ load ≤ t_upper

- Overloaded: load> t_upper

If the system is in a balanced state, no further action is taken. If an imbalance is observed, the system takes one of two actions: it sends a request to increase the load or, conversely, reduce it.

In the first case, the central server evaluates which tasks that have not yet begun to be performed by other hosts can be delegated to the node. Indian scientists from the University of Guru Gobind Singh Indrastrasta in their study, which they devoted to evaluating the effectiveness of the threshold algorithm in heterogeneous systems, give an example of a function that nodes can use to send a request for increasing the load:

UnderLoaded()

{

// validating request made by node

int status = Load_Balancing_Request(Ni);

if (status = = 0 )

{

// checking for non executable jobs from load matrix

int job_status = Load_Matrix_Nxj (No);

if (job_status = =1)

{

// checking suitable node from capability matrix

int node_status=Check_CM();

if (node_status = = 1)

{

// calling load balancer for balancing load

Load_Balancer()

}

} } }

In the second case, the central server can take away from the node those tasks that it has not yet begun to perform, and if possible transfer them to another server. To start the migration process, scientists used the following function:

OverLoaded()

{

// validating request made by node

int status = Load_Balancing_Request(No);

if (status = = 1)

{

// checking load matrix for non executing jobs

int job_status = Load_Matrix_Nxj (No);

if (job_status = =1)

int node_status=Check_CM();

if (node_status = = 1)

{

// calling load balancer to balance load

Load_Balancer()

}

} } }

When load balancing is performed , the servers send a message about changing their process counter to other hosts so that they update their system status information. The advantage of this approach is that the exchange of such messages is rare, because with a sufficient number of server resources, it simply starts a new process at home - this increases productivity.