Resizing images on a website

Good afternoon. I am a developer with more than 10 years of experience. To evaluate the quality of websites' source code, not without a measure of self-irony, I put together a small checklist. Today I'll talk about an item that matters a lot to me: images on websites. I deliberately don't tie this to a specific technology, because this problem has existed, and still exists, everywhere. I would be very grateful if you described your own approaches with your technology stack in the comments; in the end, we are all very similar.

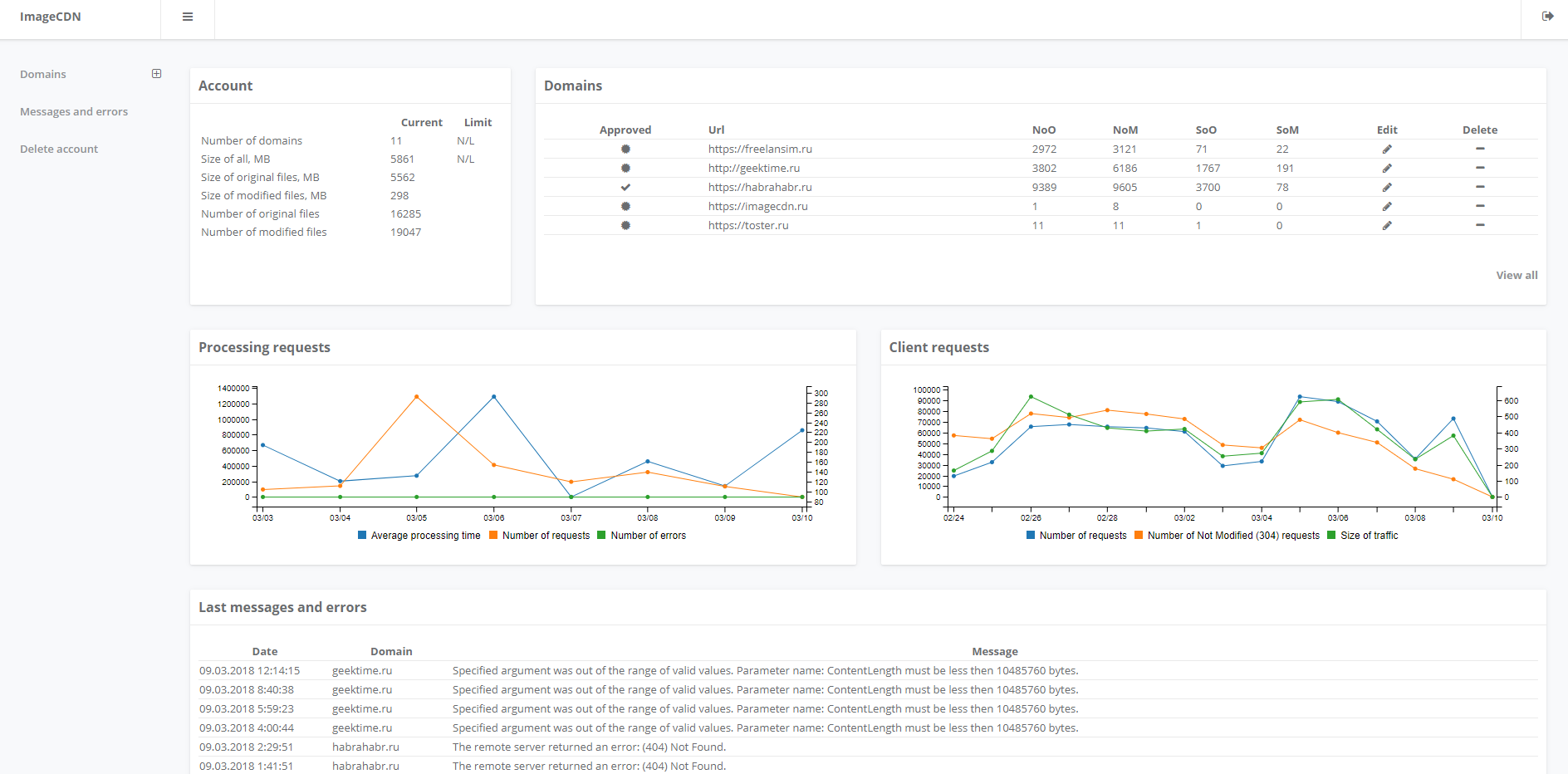

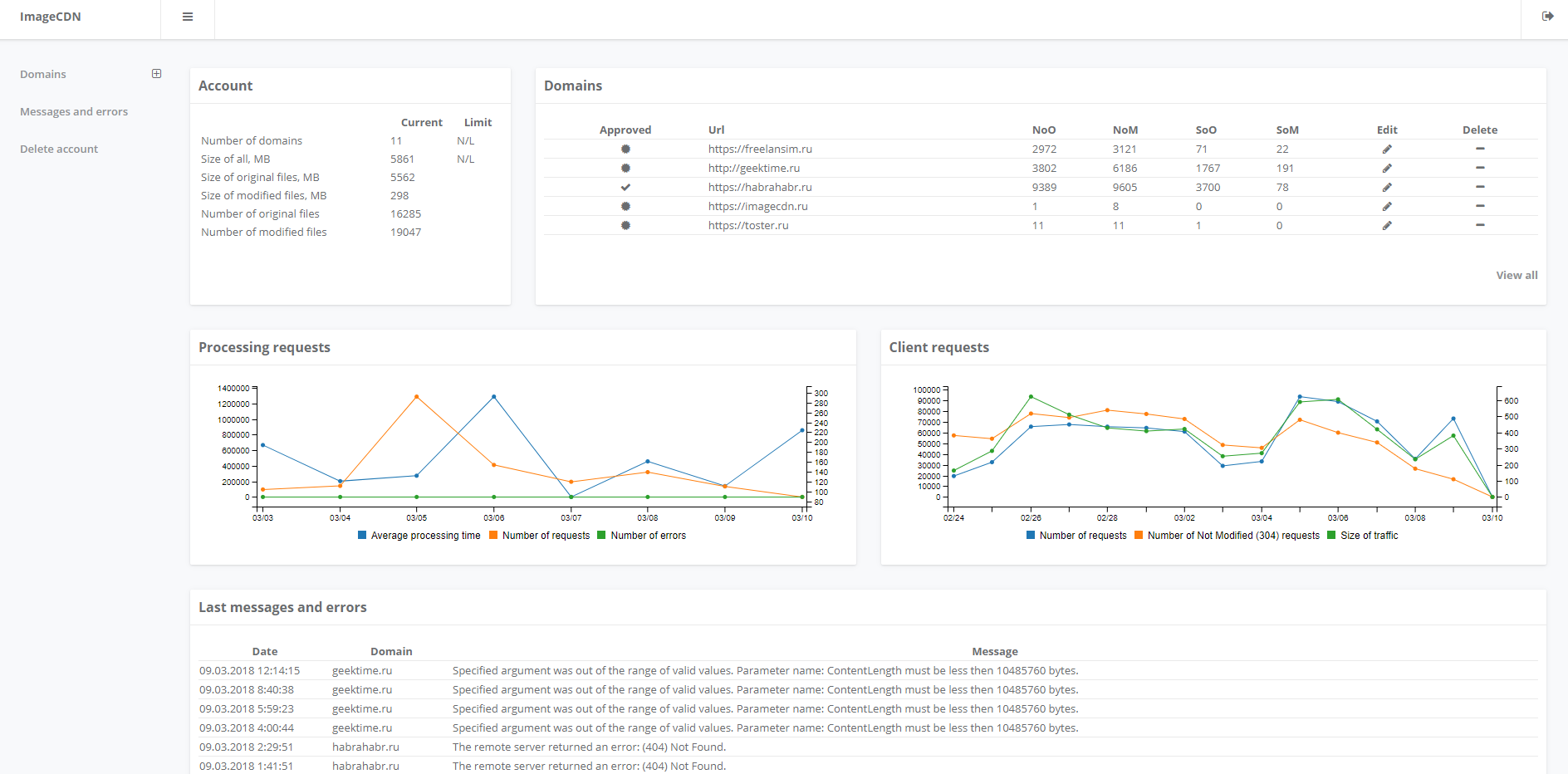

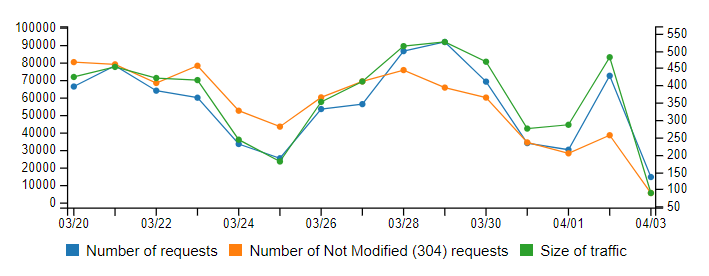

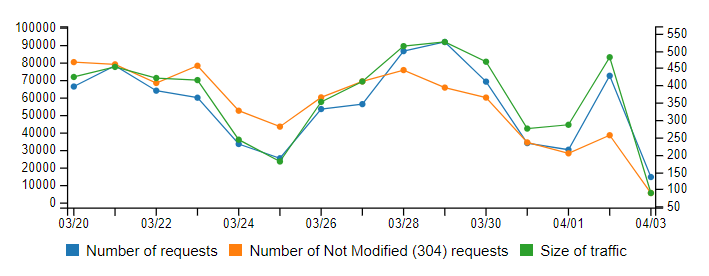

Empirically, I have found that the way a site handles images is a litmus test of its quality. Look at how a developer handles images in five sizes, and you can draw conclusions about the whole project. The reason is understandable: I have not seen a book or article that describes the logic of storing, updating, and serving images (if someone can point one out in the comments, I will be grateful). Everyone reinvented the wheel, writing their own service that stored images in a particular directory structure or in a database. As time went on, the sites we supported evolved: in one place the image sizes changed, in another new functionality required a new size for previously uploaded images. Each such step meant another round of an already familiar procedure: go through all the original images, resize them, and save them with the required parameters. Slow and expensive.
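
To make the cost of that procedure concrete, here is a minimal sketch that only plans the work (the actual resizing and saving are left out). The preset names, widths, and the `ResizeJob` structure are hypothetical; the point is that adding one new size still means revisiting every stored original.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResizeJob:
    """One unit of work: regenerate one derived size of one original."""
    original: str  # path or key of the original image (hypothetical layout)
    preset: str    # name of the size preset (hypothetical)
    width: int     # target width in pixels


def plan_regeneration(originals, presets, only=None):
    """List the resize jobs needed after the set of size presets changes.

    `presets` maps preset name -> target width. If `only` is given, just those
    presets are regenerated, but still for every original: adding a single
    size touches the whole archive.
    """
    names = only if only is not None else list(presets)
    return [ResizeJob(o, name, presets[name]) for o in originals for name in names]


presets = {"thumb": 150, "card": 500, "full": 1200}
originals = [f"uploads/{i}.jpg" for i in range(1000)]

presets["wide"] = 1600  # a new page layout needs one more size
jobs = plan_regeneration(originals, presets, only=["wide"])
print(len(jobs))                                   # 1000
print(len(plan_regeneration(originals, presets)))  # 4000
```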

Manual control will sooner or later lead to a failure, and verifying everything in a test environment costs extra money. I started looking at how others had dealt with the same problem, because it has long been known that if you have a problem, you are not the first to encounter it. Quite quickly I arrived at cloud services for image transformation, caching, and hosting. I was delighted by the simplicity of the approach: with a link to the original image and control parameters in that link, you can get cropped, compressed, inverted, shifted, and transparent images, images with overlaid text and effects, and much, much more. I decided to try one of these services, the one that seemed simplest and most convenient to me. For this, I compiled a list of the features I needed and decided to measure the timing characteristics, because it's no secret to anyone, …
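
The idea of putting the transformation into the link itself can be sketched as follows. This is an illustration only: the host `img.example.com` and the query parameter names (`src`, `w`, `h`, `mode`, `q`) are hypothetical, and every real service defines its own URL syntax.

```python
from urllib.parse import parse_qs, urlencode, urlparse


def transform_url(original, width=None, height=None, mode=None, quality=None,
                  base="https://img.example.com/transform"):
    """Build a link asking a hypothetical image service to transform `original`.

    Only the parameters that are set end up in the query string, so one
    original can be requested in any size without pre-generating anything.
    """
    params = {"src": original}
    if width is not None:
        params["w"] = width
    if height is not None:
        params["h"] = height
    if mode is not None:
        params["mode"] = mode  # e.g. "crop" or "fit" (hypothetical values)
    if quality is not None:
        params["q"] = quality
    return f"{base}?{urlencode(params)}"


url = transform_url("https://example.com/photos/cat.jpg", width=500, mode="fit")
print(url)
# The service side would simply parse the parameters back out of the link:
print(parse_qs(urlparse(url).query))
```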

Here is the list, as I remember it:

- Resize by width, with the height scaled proportionally.

- Resize by height, with the width scaled proportionally.

- Crop the image to specified boundaries.

- Fit the image within the borders of a rectangle.

- Caching and storage of results on the service side, with regular updates based on ETag and MaxAge.

- Time for a new user from Russia to receive the modified image.

- HTTPS availability.

- A reasonable price for the service and transparent billing.
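
The four geometric requirements from the list come down to simple arithmetic on the target size. A minimal sketch, assuming integer pixel sizes and round-to-nearest; the function names are hypothetical, and real services may round or clamp differently.

```python
def by_width(w, h, target_w):
    """Resize to a given width; the height follows proportionally."""
    return target_w, max(1, round(h * target_w / w))


def by_height(w, h, target_h):
    """Resize to a given height; the width follows proportionally."""
    return max(1, round(w * target_h / h)), target_h


def fit(w, h, box_w, box_h):
    """Scale so the whole image fits inside box_w x box_h, keeping proportions."""
    scale = min(box_w / w, box_h / h)
    return max(1, round(w * scale)), max(1, round(h * scale))


def crop(w, h, left, top, right, bottom):
    """Clamp a crop rectangle to the image; return (left, top, right, bottom)."""
    left, right = max(0, left), min(w, right)
    top, bottom = max(0, top), min(h, bottom)
    if left >= right or top >= bottom:
        raise ValueError("crop rectangle lies outside the image")
    return left, top, right, bottom


print(by_width(3000, 2000, 500))              # (500, 333)
print(by_height(3000, 2000, 500))             # (750, 500)
print(fit(3000, 2000, 500, 500))              # (500, 333)
print(crop(3000, 2000, 100, 100, 3500, 900))  # (100, 100, 3000, 900)
```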

I went through the functional requirements: each one was illustrated with a usage example and fully satisfied. There was a brief usage guide and a small control panel for monitoring statistics and topping up the balance.

At the same time, I looked at speed. Frankly, I did not expect good results, since Russia did not appear on the map of processing and storage server locations. But I had noticed before that connections to Europe and the USA are often much faster than to a neighboring city, so I decided to check. The results were acceptable: about 35-60 ms per image when resizing from 3000 px to 500 px wide, with results of 30-150 KB.
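
A measurement like this is easy to reproduce. The sketch below times repeated requests with `time.perf_counter` and reports the median and maximum; `fetch` is any callable you supply, and no real service URL is assumed (the example runs against a stub so it works offline).

```python
import statistics
import time
from urllib.request import urlopen


def measure_latency(fetch, url, runs=10):
    """Call fetch(url) `runs` times; return (median_ms, max_ms, body_sizes)."""
    timings_ms, sizes = [], []
    for _ in range(runs):
        start = time.perf_counter()
        body = fetch(url)  # must return the response body as bytes
        timings_ms.append((time.perf_counter() - start) * 1000)
        sizes.append(len(body))
    return statistics.median(timings_ms), max(timings_ms), sizes


def fetch_with_urllib(url):
    """Download a resource over HTTP(S) and return its body."""
    with urlopen(url, timeout=10) as response:
        return response.read()


# Offline check with a stub fetcher; pass fetch_with_urllib and a real
# transformation URL to measure an actual service.
median_ms, max_ms, sizes = measure_latency(lambda u: b"\xff" * 150_000, "stub://cat.jpg", runs=5)
print(sizes)
```

Only the first request for a given set of parameters is likely to include the processing itself; repeated requests may be answered from the service cache, so vary the parameters when you want to time a cold transformation.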

Caching was based on MaxAge and Last-Modified. ETag was absent and was not passed through from the origin storage server, but I could not think of a situation where it would be needed if Last-Modified is present. MaxAge, when configured properly, propagated to the service without problems. I wondered how the service handles a change of the original image on the storage server. I had three algorithms in mind. In the first, before returning the image, the service goes to the origin storage and checks Last-Modified. In the second, it compares what is stored on the service with what is in the storage in the background at some interval, for example one derived from MaxAge. In the third, the next update is scheduled via MaxAge but happens only when a client makes a request. I decided to find out with a simple experiment: I changed the original and requested the image from the service. The image had not changed, so the service does not access the storage until a certain point. Moreover, if you change the original again, the service still returns the image from its own cache rather than from the storage. Then, by changing MaxAge, I managed to get the original image refreshed in the service cache.
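
The behavior observed in the experiment matches the third option: a cached original is served without contacting the storage until its max-age expires, and only the next request after that triggers a conditional check against Last-Modified. Below is a minimal sketch of that policy, not the service's actual implementation. The `origin` callable is a hypothetical stand-in for an HTTP request with an `If-Modified-Since` header (returning `None` as the body plays the role of "304 Not Modified"), and the clock is injectable so the example runs instantly.

```python
import time
from dataclasses import dataclass


@dataclass
class Entry:
    body: bytes
    last_modified: float  # Last-Modified of the original, as a timestamp
    expires_at: float     # time of fetch + max-age


class LazyRevalidatingCache:
    """Serve cached originals until max-age expires; revalidate only on request."""

    def __init__(self, origin, max_age, clock=time.monotonic):
        self.origin = origin  # callable(key, if_modified_since) -> (body or None, last_modified)
        self.max_age = max_age
        self.clock = clock
        self.entries = {}
        self.origin_calls = 0

    def get(self, key):
        now = self.clock()
        entry = self.entries.get(key)
        if entry is not None and now < entry.expires_at:
            return entry.body  # still fresh: the storage is not contacted
        since = entry.last_modified if entry is not None else None
        self.origin_calls += 1
        body, last_modified = self.origin(key, since)
        if body is None:  # "304 Not Modified": keep the cached copy, renew its lifetime
            entry.expires_at = now + self.max_age
            return entry.body
        self.entries[key] = Entry(body, last_modified, now + self.max_age)
        return body


store = {"cat.jpg": (b"v1", 100.0)}  # hypothetical origin storage: key -> (body, mtime)


def fake_origin(key, since):
    body, mtime = store[key]
    if since is not None and mtime <= since:
        return None, mtime
    return body, mtime


t = [0.0]
cache = LazyRevalidatingCache(fake_origin, max_age=60, clock=lambda: t[0])
print(cache.get("cat.jpg"))         # b'v1'
store["cat.jpg"] = (b"v2", 200.0)   # the original changes in storage
t[0] = 30
print(cache.get("cat.jpg"))         # b'v1': max-age has not expired yet
t[0] = 61
print(cache.get("cat.jpg"))         # b'v2': revalidated on the first request after expiry
```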

In my list, price came last in order, but not in priority. I was unpleasantly surprised by the price (I am quoting today's prices): from $500 for a plan with an SLA, while the plan billed by the number of accesses to original images was also a poor fit, since every 1,000 images were paid for separately. It was also unclear how accesses to an original image are counted. Does a repeated request for a previously transformed image from the service cache count as an access, i.e. a new user without a browser cache requests an image that someone else has already requested and transformed? This question arose because on one of our projects we had more than 150 new original images every day, viewed by at least 5,000 people daily. Requests served from the cache accounted for only about 50% of requests. The answer was simple: once an image is loaded into the service cache, all operations on it are unlimited, and only consumed traffic is restricted. For our number of images, the estimate came to about $150 a month, excluding traffic.
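
Under the rule the service confirmed (an original is counted once, when it first enters the service cache, and later operations on it are free apart from traffic), the billable volume can be sketched like this. The block size of 1,000 comes from the text; billing per started block, the request log, and the function names are assumptions for illustration, and no per-block price is implied.

```python
import math


def billable_originals(request_log, already_cached=()):
    """Count originals that have to be loaded into the service cache.

    Every later request for the same original, whatever size or operation,
    is served from the cache and is not counted again.
    """
    cached = set(already_cached)
    new = 0
    for original in request_log:
        if original not in cached:
            cached.add(original)
            new += 1
    return new


def billing_blocks(originals, block=1000):
    """Number of started blocks of `block` originals (assumed billing rule)."""
    return math.ceil(originals / block)


# 150 new originals a day over a 30-day month, each requested several times.
log = [f"day{d}/img{i}.jpg" for d in range(30) for i in range(150) for _ in range(3)]
monthly = billable_originals(log)
print(monthly, billing_blocks(monthly))  # 4500 5
```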

Everything would have been fine, but 2014 came, and the dollar exchange rate confidently crossed the boundaries of the rational, the good, the eternal. As the saying goes, money beats evil, and we decided to build our own service for transforming images on the fly. That is how an internal project appeared, and recently (about a year ago) my team and I published a beta version for public testing. The service is built on Microsoft .NET technologies, with the NoSQL store Redis for caching original images. Sites from different countries work with us, about 15 in total. One of our clients is a Vietnamese site about popular Vietnamese music; how it found out about our service is still a mystery to us. Daily traffic per site ranges from 50 to 30,000 visitors. Our target audience is relatively small sites (up to 50,000-100,000 visitors a day).

Architecture

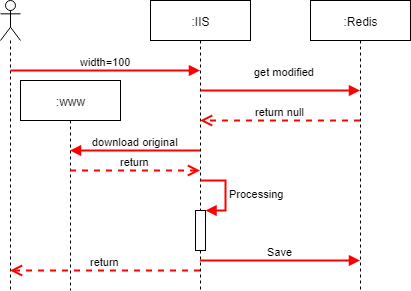

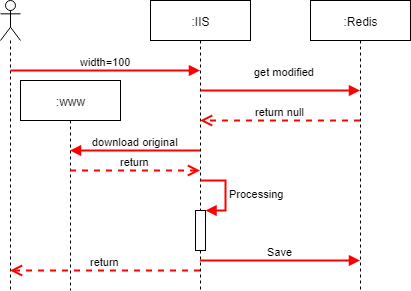

The first version of the project did straightforward processing, with no authorization and complete freedom of action for users of the service. I will say right away that this is a bad idea in every respect except user convenience, and even that only lasts until the service goes down under load from unscrupulous users or users who outright break the law. The diagram shows this best and outlines the main components.

We stored all request statistics and all caching parameters in Redis. Originals and processed files were stored there too, because we wanted maximum content delivery speed with minimal disk involvement. Having launched our prototype, we realized that it was quite viable and covered the requirements of one of our sites (5,000-10,000 users per day) with room to spare. We placed it on our own hardware and started using it. The problems started when we wanted to scale: not because we had hit a limit of the solution, but because other clients began to show interest in us, and we feared we could not cope with the growing load.
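
Keeping originals, processed files, and statistics in one key-value store works only if every processed image has a stable key. A minimal sketch, assuming a hypothetical key layout (`orig:`, `img:`, `stats:` prefixes) and using plain dicts in place of Redis; with a real Redis client these dict operations would correspond to GET, SET, and INCR.

```python
import hashlib
import json


def original_key(src):
    return "orig:" + hashlib.sha1(src.encode()).hexdigest()


def derived_key(src, params):
    """Same source + same parameters -> same key, regardless of parameter order."""
    canonical = json.dumps({"src": src, **params}, sort_keys=True, separators=(",", ":"))
    return "img:" + hashlib.sha1(canonical.encode()).hexdigest()


class InMemoryStore:
    """Stand-in for Redis: one dict for blobs, one for counters."""

    def __init__(self):
        self.blobs = {}
        self.counters = {}

    def incr(self, key):
        self.counters[key] = self.counters.get(key, 0) + 1
        return self.counters[key]


def serve(store, src, params, load_original, transform):
    """Return a processed image, filling the cache on a miss and counting hits/misses."""
    key = derived_key(src, params)
    if key in store.blobs:
        store.incr("stats:hit")
        return store.blobs[key]
    store.incr("stats:miss")
    okey = original_key(src)
    if okey not in store.blobs:  # the original is fetched once and kept
        store.blobs[okey] = load_original(src)
    store.blobs[key] = transform(store.blobs[okey], params)
    return store.blobs[key]


store = InMemoryStore()
fake_load = lambda src: b"ORIGINAL"                       # hypothetical loader
fake_transform = lambda data, p: data + f"@{p['w']}".encode()  # hypothetical "resize"
serve(store, "https://example.com/a.jpg", {"w": 500}, fake_load, fake_transform)
serve(store, "https://example.com/a.jpg", {"w": 500}, fake_load, fake_transform)
print(store.counters)  # {'stats:miss': 1, 'stats:hit': 1}
```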

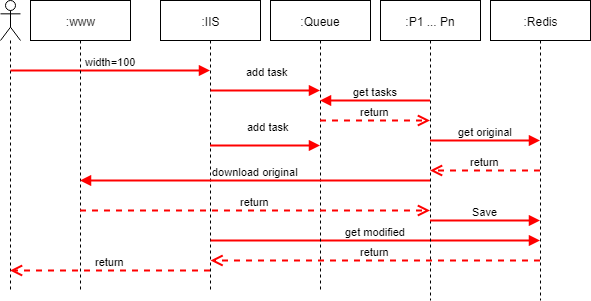

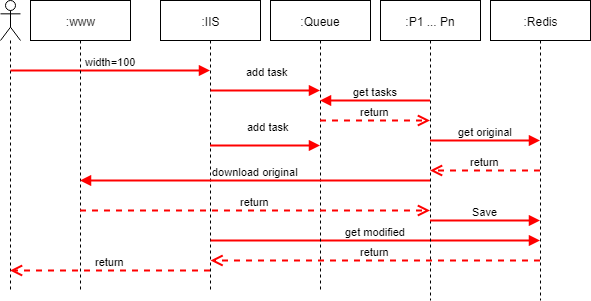

So the second version of the service was born, built on a queue on top of Redis and microservices that listen to events from this queue. The IIS facade remained, but it lost a lot of weight and began proxying requests to the appropriate services. We introduced limits on both the number of files and their size, as well as binding to a domain name, to keep out unscrupulous users and to be able to identify the owner to the relevant authorities if they take an interest. DNS with round robin appeared, along with the ability to return addresses based on the client's estimated location (as a CDN does).
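
The queue-and-listeners pattern can be sketched with the standard library: the facade only validates and enqueues a job, and independent workers pick jobs up. Here `queue.Queue` and threads stand in for a Redis list and separate microservice processes (with Redis, a producer would typically LPUSH and a worker BRPOP); the job format and function names are hypothetical.

```python
import queue
import threading


def worker(jobs, results, name):
    """One processing service: handle jobs until it receives None."""
    while True:
        job = jobs.get()
        if job is None:  # stop signal
            break
        src, width = job
        # Real code would load the original, resize it and store the result.
        results.put((src, width, name))


def facade(jobs, src, width):
    """Thin front end: validate the request, enqueue it, return immediately."""
    if width <= 0:
        raise ValueError("width must be positive")
    jobs.put((src, width))


jobs, results = queue.Queue(), queue.Queue()
workers = [threading.Thread(target=worker, args=(jobs, results, f"w{i}")) for i in range(3)]
for t in workers:
    t.start()

for i in range(10):
    facade(jobs, f"img{i}.jpg", 500)

for _ in workers:
    jobs.put(None)  # one stop signal per worker
for t in workers:
    t.join()

done = [results.get() for _ in range(results.qsize())]
print(len(done))  # 10
```

Because workers only share the queue, more of them can be started (or some stopped) without the facade knowing about it.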

This scheme allowed us to distribute processing services, duplicate them for fault tolerance and performance, and take individual endpoints out for maintenance without stopping the entire system. Moreover, the services are no longer tied to one geographic location.
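
Taking an endpoint out for maintenance without stopping the system relies on clients being directed only to the remaining replicas. A minimal sketch of round-robin selection that prefers replicas in the client's region and skips those marked as down; the addresses and region names are hypothetical, and real DNS-based balancing happens at the resolver level rather than in application code like this.

```python
import itertools
from collections import defaultdict


class EndpointPool:
    def __init__(self, endpoints):
        # endpoints: iterable of (address, region) pairs
        self.by_region = defaultdict(list)
        for address, region in endpoints:
            self.by_region[region].append(address)
        self.down = set()
        self._counters = defaultdict(itertools.count)  # one round-robin counter per region

    def set_down(self, address, down=True):
        """Mark an endpoint as under maintenance, or bring it back."""
        if down:
            self.down.add(address)
        else:
            self.down.discard(address)

    def pick(self, client_region):
        """Round-robin over healthy endpoints, preferring the client's region."""
        candidates = [a for a in self.by_region.get(client_region, []) if a not in self.down]
        if not candidates:  # fall back to any healthy endpoint
            candidates = [a for addrs in self.by_region.values() for a in addrs
                          if a not in self.down]
        if not candidates:
            raise RuntimeError("no healthy endpoints")
        n = next(self._counters[client_region])
        return candidates[n % len(candidates)]


pool = EndpointPool([("10.0.0.1", "eu"), ("10.0.0.2", "eu"), ("10.0.1.1", "asia")])
first_round = [pool.pick("eu") for _ in range(4)]
pool.set_down("10.0.0.1")  # take one replica out for maintenance
after_maintenance = pool.pick("eu")
print(first_round, after_maintenance)
```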

We continue to develop the second version of the service, and we would really like to get feedback from users. Here is our list of possible development directions and new features:

- Drawing text on an image (already implemented)

- Configurable quality per domain, rule, and/or operation (currently a fixed OptimalCompression value)

- Letting users choose the caching plan for original images

- Adding sources other than the site itself (for example, Amazon, or the new Mail service with its cold storage)

- Placing additional services outside the central region
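
The planned per-domain, per-rule, and per-operation quality setting could resolve to a single value with a simple precedence chain. This is only a sketch of one possible design: the precedence order (operation over rule over domain) and all names are assumptions; the only thing taken from the text is that today the value is always OptimalCompression.

```python
from dataclasses import dataclass, field
from typing import Optional, Union

OPTIMAL_COMPRESSION = "OptimalCompression"  # the current fixed behavior, per the text

Quality = Union[int, str]


@dataclass
class QualitySettings:
    """Hypothetical quality overrides at three levels of specificity."""
    by_domain: dict = field(default_factory=dict)     # e.g. "example.com" -> 80
    by_rule: dict = field(default_factory=dict)       # e.g. "thumbnails" -> 60
    by_operation: dict = field(default_factory=dict)  # e.g. "crop" -> 90

    def resolve(self, domain: str, rule: Optional[str], operation: str) -> Quality:
        """Most specific setting wins: operation, then rule, then domain, then default."""
        for table, key in ((self.by_operation, operation),
                           (self.by_rule, rule),
                           (self.by_domain, domain)):
            if key is not None and key in table:
                return table[key]
        return OPTIMAL_COMPRESSION


settings = QualitySettings(by_domain={"example.com": 80},
                           by_rule={"thumbnails": 60},
                           by_operation={"crop": 90})
print(settings.resolve("example.com", "thumbnails", "resize"))  # 60
print(settings.resolve("other.org", None, "resize"))            # OptimalCompression
```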

What is necessary and what is important is for you to decide, so upon registration you get the Early Adopter plan, with 500 MB of storage, up to 5 domains, and 2,000 original images. It stays with you as long as you do not delete your account (you can do this yourself from the control panel at any time). Please send all suggestions to the support contacts or post them in the comments here. Keep in mind that the Early Adopter plan is flexible: if you need a larger scale, just write to support, and there is a good chance they will help you. Also, if you are interested in how a particular part works inside, write to us. I will try to answer all questions, in some cases simply with code examples.
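
The plan limits above, combined with the domain binding introduced in the second version, amount to a few checks before a new original is accepted. A minimal sketch: the three limits are those of the Early Adopter plan, while the check order, the class names, treating 1 MB as 2**20 bytes, and checking the source URL's host against bound domains are assumptions.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse

MB = 1024 * 1024  # assumption: 1 MB = 2**20 bytes


@dataclass(frozen=True)
class Plan:
    storage_bytes: int
    max_domains: int
    max_originals: int


# Limits of the Early Adopter plan as described in the text.
EARLY_ADOPTER = Plan(storage_bytes=500 * MB, max_domains=5, max_originals=2000)


@dataclass
class Account:
    plan: Plan
    domains: set = field(default_factory=set)
    originals: int = 0
    used_bytes: int = 0

    def add_domain(self, domain):
        if domain not in self.domains and len(self.domains) >= self.plan.max_domains:
            raise PermissionError("domain limit reached")
        self.domains.add(domain)

    def accept_original(self, source_url, size_bytes):
        """Admit a new original only from a bound domain and within plan limits."""
        host = urlparse(source_url).hostname
        if host not in self.domains:
            raise PermissionError(f"{host} is not bound to this account")
        if self.originals + 1 > self.plan.max_originals:
            raise PermissionError("original image limit reached")
        if self.used_bytes + size_bytes > self.plan.storage_bytes:
            raise PermissionError("storage limit reached")
        self.originals += 1
        self.used_bytes += size_bytes


account = Account(EARLY_ADOPTER)
account.add_domain("example.com")
account.accept_original("https://example.com/a.jpg", 2 * MB)
try:
    account.accept_original("https://other.org/b.jpg", 1 * MB)
    rejected = None
except PermissionError as err:
    rejected = str(err)
print(rejected)  # other.org is not bound to this account
```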

Thanks!

Contacts. I tried to approach this article objectively and without breaking the rules on topics and advertising, in order to share my thoughts on the simplicity of this approach. Thank you very much for your attention and time. Contacts will be provided in a private message or in the comments, if there is interest.