Richard Hamming: Chapter 6. Artificial Intelligence - 1

- Translation

“The goal of this course is to prepare you for your technical future.”


Hi, Habr. Remember the awesome article “You and Your Work” (+219, 2394 bookmarks, 380k reads)? Hamming (yes, yes, the Hamming of the self-checking and self-correcting Hamming codes) has a whole book based on his lectures. We are translating it, because the man talks sense.

This book is not just about IT; it is a book about the thinking style of incredibly cool people. “This is not just a boost of positive thinking; it describes the conditions that increase the chances of doing great work.”

We have already translated 19 of the 30 chapters, and we are working on a print edition.

Chapter 6. Artificial Intelligence - 1

(Thanks to Alexei Ivannikov for the translation; he responded to my call in the previous chapter.) If you want to help with the translation, layout, and publication of the book, write to me in a private message or at magisterludi2016@yandex.ru

Having looked at the history of computer applications, we now turn to the limits of machines, not so much in terms of computational complexity as in terms of the classes of problems that computers can, or perhaps never can, solve. Before going further, recall that computers manipulate symbols, not information; we cannot simply say, let alone write, a program in terms of “information”. We all believe we know what words mean, but some careful thought on your part will convince you that information is, at best, a fuzzy concept that cannot be given a definition that can be turned into a program.

Although Babbage and Augusta Ada Lovelace speculated about the limits of what computers could do, serious research really began in the late 1940s and early 1950s, notably by Allen Newell and Herbert Simon at RAND. For example, they studied puzzles such as the missionaries and cannibals problem*. Can a computer solve puzzles? And if so, how? They examined the approaches people use to solve such problems and tried to write programs that reproduced the same behavior. Exact agreement was not to be expected, since no two people solve a puzzle by the same chain of reasoning in the same order; instead, the program looked for the same general solution patterns. Rather than simply solving the problem, they tried to model the way people solve puzzles and to compare the behavior of the model with that of human subjects.

(* Missionaries and cannibals is a river-crossing puzzle; in Russian culture the best-known puzzle of this kind is the wolf, the goat, and the cabbage.)
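For contrast with Newell and Simon's goal of imitating human reasoning, here is how the same puzzle yields to blind breadth-first search over states. This is a minimal sketch, assuming the standard version (three missionaries, three cannibals, a boat holding one or two people, missionaries never outnumbered on either bank); the function names are our own.

```python
from collections import deque

TOTAL = 3  # assumed: three missionaries and three cannibals
# Possible boat loads as (missionaries, cannibals): one or two people.
BOAT_LOADS = [(1, 0), (2, 0), (0, 1), (0, 2), (1, 1)]


def is_safe(m: int, c: int) -> bool:
    """m, c = missionaries and cannibals on the starting bank.

    Safe if, on each bank, missionaries are absent or not outnumbered.
    """
    m_far, c_far = TOTAL - m, TOTAL - c
    return (m == 0 or m >= c) and (m_far == 0 or m_far >= c_far)


def solve():
    """Breadth-first search; a state is (m, c, boat_on_start_bank).

    Returns the states along a shortest solution, or None if there is none.
    """
    start, goal = (TOTAL, TOTAL, True), (0, 0, False)
    parent = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            path = []
            while state is not None:  # walk back to the start
                path.append(state)
                state = parent[state]
            return path[::-1]
        m, c, boat_here = state
        sign = -1 if boat_here else 1  # people leave the bank the boat is on
        for dm, dc in BOAT_LOADS:
            nm, nc = m + sign * dm, c + sign * dc
            if 0 <= nm <= TOTAL and 0 <= nc <= TOTAL and is_safe(nm, nc):
                nxt = (nm, nc, not boat_here)
                if nxt not in parent:
                    parent[nxt] = state
                    queue.append(nxt)
    return None


if __name__ == "__main__":
    path = solve()
    print(len(path) - 1, "crossings")
    for m, c, boat_here in path:
        side = "start" if boat_here else "far"
        print(f"start bank: {m}M {c}C, boat on {side} bank")
```

The search finds a shortest solution (11 crossings) by sheer enumeration, which is exactly what it does not tell us: how a person reasons toward the answer.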

They also began developing the concept of the General Problem Solver (GPS). The idea was that, to solve a problem with a computer, you need five universal problem-solving principles plus a set of specific facts from the particular field. Although the method did not work well, it produced valuable byproducts, such as list processing. After the initial study of the problem (which, it was hoped, would greatly ease the programming task), the question was shelved for a decade. When they returned to it, some 50 necessary universal principles were proposed. When that did not work either, a decade later the number of universal principles had reached 500. Today this idea is known as rule-based logic.

A whole field, called expert systems, is currently under development. The principle is to interview experts in a particular field, draw up a set of rules, enter those rules into a program, and obtain an expert program! One problem with this approach is that in many fields, especially medicine, recognized experts are in fact not much more competent than beginners, and this has been documented in many studies! Another problem is that experts rely on their subconscious: when making decisions they draw on experience they cannot consciously articulate. It has been established that it takes about 10 years of intensive work to become an expert. During that time, apparently, a great many patterns become fixed in the mind.
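The “interview experts, write rules, load them into a program” recipe can be sketched as a tiny forward-chaining rule engine. This is a minimal illustration, not how any particular expert system was built; the rules and fact names below are hypothetical placeholders. A rule fires when all of its conditions are known facts, adding its conclusion, and the loop stops when a full pass adds nothing new.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """IF every condition is a known fact THEN add `conclusion` (hypothetical format)."""
    conditions: frozenset
    conclusion: str


def forward_chain(facts, rules):
    """Fire rules repeatedly until no rule adds a new fact; return all known facts."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known


# Hypothetical rules "elicited from an expert" about a car that will not start.
RULES = [
    Rule(frozenset({"engine_silent", "lights_dim"}), "battery_weak"),
    Rule(frozenset({"battery_weak"}), "recommend_charge_battery"),
    Rule(frozenset({"engine_cranks", "no_fuel_smell"}), "fuel_problem"),
    Rule(frozenset({"fuel_problem"}), "recommend_check_fuel_pump"),
]

if __name__ == "__main__":
    print(sorted(forward_chain({"engine_silent", "lights_dim"}, RULES)))
```

Whatever the expert cannot put into words never reaches `RULES`, which is the weakness the paragraph describes.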

In some areas rule-based logic has achieved impressive results, while in seemingly similar areas it has failed completely, which suggests that success depends heavily on luck. At present there is no general rule, and no basic understanding, of when the rule-based method will work, when it will not, and how well it will do.

In Chapter 1 I already developed the idea that perhaps not all of our “knowledge” can be expressed in words (instructions): impossible in the strict sense of impossible, not in the sense that we are too stupid or uneducated to do it. Some of the established properties of expert systems certainly support this position.

After several years of development, the study of the limits of the intellectual abilities of computers acquired the vague name Artificial Intelligence (AI), which has no single meaning. First, it is an attempt to answer the question:

Can machines think?

Although this is a narrower question than artificial intelligence as a whole, it states the problem more clearly and is accessible to most people. The question matters to you: if you believe computers cannot think, you will not use them to the full on your problems; but if you believe computers can think, you will certainly be mistaken! So you cannot afford simply to believe or disbelieve; you must face the uncomfortable question: “To what extent can machines think?”

Note that even this form of the question is not quite right; a more general formulation is: “Is it possible to write a program that will produce ‘thinking’ from a von Neumann machine?” The reason for this qualification is the current trend toward modern neural networks, which may or may not be able to do things a digital computer cannot. We will discuss neural networks later, after reviewing more technical facts.

The AI problem can also be stated as: “Which of the things people can do can a computer do?” Or, in the form I prefer: “Which of the tasks people perform can a computer substantially take over?” Consider pacemakers: machines connected directly to the human nervous system that keep many people alive while automatically performing their routine tasks. People who say they do not want their lives to depend on a machine conveniently forget this example. It seems to me that in the long run machines will be able to make a significant contribution to people's quality of life.

Why is artificial intelligence so important? Let me give a specific example of the need for it. Without going into details (which cannot be examined without first defining “thinking” and “machine”), I believe that in the future we will most likely have machines exploring the surface of Mars. The distance between Earth and Mars is so great that the round-trip signal time can be 20 minutes or more. Therefore such a machine must have reliable local control. Having passed between two rocks and turned slightly, it may find the ground giving way under its front wheels; it then needs quick, “reasonable” action in an uncertain situation. In other words, simple precautions such as backing up, combined with no time to receive instructions from Earth, will not be enough to avoid destruction; some degree of “intelligence” must therefore be programmed into the machine.

This is not the only example; such problems are becoming more and more common as computers are used in high-speed systems. In these cases you cannot rely on a human, often because of the boredom people are subject to. Piloting an aircraft is said to consist of hours of boredom and a few seconds of sheer terror; this is not what people are designed for, although they can do it. Reaction speed matters a great deal. Many modern fast aircraft are inherently unstable, and computers must correct them within milliseconds to keep them stable; no pilot can respond that quickly. A human can only coordinate the overall plan of control and leave the details to the machine.

Earlier I noted the need to understand, at least roughly, what we mean by “machine” and “thinking”. We discussed these terms at Bell Telephone Laboratories in the early 1940s. Some argued that a machine could not have organic parts; I objected that such a definition would exclude wooden parts! That definition was dropped, but to make the point more persuasive I proposed a thought experiment: remove the nervous system from a frog while keeping it alive. If it could be adapted as a storage device, would it be a machine or not? If it were an addressable storage device, how would we tell whether it was a “machine”?

In the same discussions, a Jesuit-trained engineer** defined thinking this way: “Thinking is what people can do and machines cannot.” Apparently this definition satisfied everyone. But do you like it? Is it true? We pointed out to him that, comparing the current level of machines with what improved machines and programming techniques could achieve, the difference between people and machines would shrink in the future.

(** Translator's note: the original says “Jesuit trained engineer”, probably meaning someone educated at a Jesuit high school (https://www.jesuittampa.org/page.cfm?p=1) or a similar institution.)

Undoubtedly, the term “thinking” needs to be clarified. For most people, a useful definition would make thinking something people can do and stones, trees, and similar objects cannot. Opinions differ on whether higher animals belong on the list of thinkers. It is a mistake to claim that “Thinking is what Newton and Einstein did.” By that definition most of us do not think, and that certainly does not suit us! Turing, sidestepping the question itself, argued that if you put a programmed machine at one end of a telegraph line and a person at the other, and an average person cannot tell which is which, this would be proof that the machine (program) “thinks”.

The Turing test is a popular approach, but it does not survive scientific scrutiny, which calls for clarifying simple cases before tackling complex ones. So I asked myself: “How large must the smallest program that can think be?” To be precise: a program such that, if it is split into two parts, neither part can think. I thought about this every night before falling asleep, and after a year of such attempts I concluded that it was the wrong question! Perhaps “thinking” is not a yes-or-no matter but something more nuanced.

Let me step back a little and recall some history of chemistry. It was long believed that organic compounds could be produced only by living things; this was the vitalistic view of living matter, as opposed to nonliving things such as stones and rocks. But in 1828 the chemist Wöhler synthesized urea, a common waste product of the human body. This was the beginning of the synthesis of organic compounds in laboratory flasks. Yet even in the late 1850s most chemists still held the vitalistic theory that organic compounds could be produced only by living things. As you know, chemists now hold the diametrically opposite view: that any compound can be made in the laboratory, though of course there is not, and cannot be, any proof of this. This view formed because of successes in synthesizing organic compounds, and chemists see no reason why they could not synthesize substances that exist in Nature. The views of chemists thus moved from a vitalistic to a non-vitalistic theory of chemistry.

Unfortunately, religion entered the discussion of whether machines can think, and now there are vitalistic and non-vitalistic theories of “machine versus man”. In the Christian religion, the Bible says, “God created man in his own image and likeness.” If we can create a machine in our own image, then in a sense we become like God, and that is a little embarrassing! Most religions, in one form or another, hold that a person is more than a collection of molecules; indeed, that humans differ from animals by possessing a soul and certain other properties. As for the soul, in the late Middle Ages some researchers wanted to detect the soul separating from the dead body: they placed the dead on scales and watched for a sudden change in weight, but saw only the slow loss of weight of a decomposing body. Apparently, the soul...

Even if you accept evolution, you may believe there was a moment in it when God, or the gods, intervened and gave humans an ability that sets them apart from other living beings. This belief in a fundamental difference between humans and the rest of the world leads people to believe that machines can never be like humans in their thinking, at least not until we ourselves become like gods. People such as the Jesuit-trained engineer mentioned earlier define thinking as something machines are incapable of. Usually such claims are not stated so openly but are hidden in a web of reasoning; the meaning, however, remains the same!

Physicists regard you as a collection of molecules in a field of radiant energy, and, in classical physics, as nothing more. The ancient Greek philosopher Democritus (born 460 BC) said, “All is atoms and the void.” Researchers in hard AI take the same position: there is no essential difference between machines and people, so properly programmed machines can do everything people can. All their failed attempts to reproduce thinking in detail on a machine are attributed to programming errors rather than to fundamental limitations of the theory.

The opposite approach to defining AI rests on the assumption that we have self-awareness and consciousness, although we cannot give satisfactory tests proving that they exist. I can make a machine type “I have a soul”, or “I am self-aware”, or “I am conscious”, but such statements from a machine will not impress you. Similar statements from a person are more convincing, because they agree with your own introspection and with the similarity between you and other people that you have inferred from experience over your lifetime. Still, this amounts to a kind of racism about the difference between humans and machines: we are the superior beings!

Discussions of AI have reached a dead end: you can now say anything, but for most people such statements carry no meaning. Let us turn instead to the successes and failures of AI.

AI researchers have always made extravagant claims, most of them unfulfilled. In 1958 Newell and Simon predicted that within 10 years a computer program would become the world chess champion. Unfortunately, like similar unfulfilled predictions by AI researchers, it was made publicly. Still, some striking results were achieved.

I need to step back again to explain the importance of games in AI research. The rules of a game, and what counts as winning or losing, are very clear; in other words, games are in a sense well defined. This does not mean we want to teach machines to play games; we want to test our AI ideas on a good, well-understood test bed in the form of games.

From the very beginning, chess was seen as a good test of AI, since it is widely believed that playing chess undoubtedly requires thinking. Shannon proposed a way to write programs that play chess (we speak of chess-playing computers, but in fact these are just a class of programs). At Los Alamos National Laboratory, acceptable results were achieved on the primitive MANIAC computer using a 6x6 board with the bishops removed. We will return to the history of chess computers a little later.

Let us see how to write a program for a simpler game: three-dimensional tic-tac-toe. We will not consider ordinary two-dimensional tic-tac-toe, since a strategy that guarantees at least a draw is known, and a careful player can never be beaten. We take the view that games with known strategies do not illustrate thinking.
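The claim that ordinary tic-tac-toe is a draw under careful play can itself be checked mechanically. A minimal sketch (our own, not from the text) runs a memoized minimax over every position, scoring +1 for an X win, -1 for an O win, and 0 for a draw:

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board; cells are numbered 0..8 row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str):
    """Return 'X' or 'O' if that player owns a full line, else None."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def value(board: str, to_move: str) -> int:
    """Value under perfect play: +1 X wins, -1 O wins, 0 draw."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if "." not in board:
        return 0  # full board, no line: draw
    nxt = "O" if to_move == "X" else "X"
    scores = [value(board[:i] + to_move + board[i + 1:], nxt)
              for i, cell in enumerate(board) if cell == "."]
    return max(scores) if to_move == "X" else min(scores)


if __name__ == "__main__":
    print(value("." * 9, "X"))
```

The search returns 0 for the empty board: neither side can force a win, which is why a game with a fully known strategy tells us little about thinking.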

A 4x4x4 cube has only 64 cells (small cubes), and 76 straight lines of four cells can be drawn through them. A line is won when all of its cells are filled with the same player's marks. Pay attention to the 8 corner cells and the 8 central cells: more lines pass through them than through the rest. In fact, there is an inversion of the cube that exchanges the central cells with the corner cells while mapping every straight line onto a straight line.
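The counts in this paragraph can be checked by brute force. This minimal sketch enumerates every four-cell straight line in the 4x4x4 cube, counts how many lines pass through each cell, and tests one candidate inversion: swapping coordinate values 0 with 1 and 2 with 3 on every axis. That particular map is our own choice, not necessarily the one Hamming had in mind; the code only checks that it sends lines to lines.

```python
from itertools import product

N = 4  # the board is N x N x N
CELLS = list(product(range(N), repeat=3))
# The 26 unit directions; each line is found once per direction sign and deduplicated.
DIRECTIONS = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]


def all_lines():
    """Every straight line of N cells in the cube, as frozensets of (x, y, z)."""
    lines = set()
    for start in CELLS:
        for d in DIRECTIONS:
            cells = [tuple(s + k * dk for s, dk in zip(start, d)) for k in range(N)]
            if all(0 <= v < N for cell in cells for v in cell):
                lines.add(frozenset(cells))
    return lines


LINES = all_lines()


def lines_through(cell):
    """Number of winning lines that contain `cell`."""
    return sum(1 for line in LINES if cell in line)


# Candidate inversion (our assumption): 0<->1 and 2<->3 on each coordinate.
SWAP = {0: 1, 1: 0, 2: 3, 3: 2}


def invert(cell):
    return tuple(SWAP[v] for v in cell)


if __name__ == "__main__":
    print(len(LINES))  # 76
    counts = {}
    for cell in CELLS:
        counts.setdefault(lines_through(cell), []).append(cell)
    for n, cells in sorted(counts.items()):
        print(f"{len(cells)} cells lie on {n} lines each")
    mapped = {frozenset(invert(c) for c in line) for line in LINES}
    print("inversion maps lines onto lines:", mapped == LINES)
    print("corner (0, 0, 0) ->", invert((0, 0, 0)))  # a central cell
```

Running it shows the 16 “hot” cells (8 corners, 8 central) each lie on 7 lines, while every other cell lies on only 4.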

A program for playing 4x4x4 tic-tac-toe must first find the legal moves. Among these, it should select the moves that occupy the “hot” cells. It must choose randomly among the candidate cells, because otherwise the opponent can discover a weakness in the algorithm and exploit it systematically. Random choice among a substantial set of comparable moves is a central part of game-playing algorithms.
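A minimal sketch of this selection step, assuming a board stored as a dict from (x, y, z) cells to 'X' or 'O' and treating the 8 corner and 8 central cells as the “hot” ones; the names `choose_move` and `is_hot` are hypothetical. The random choice among equally good cells is what keeps an opponent from learning a fixed pattern.

```python
import random
from itertools import product

N = 4
CELLS = list(product(range(N), repeat=3))  # (x, y, z) for every cell


def is_hot(cell):
    """Corner cells (all coordinates 0 or N-1) or central cells (all coordinates inside)."""
    corner = all(v in (0, N - 1) for v in cell)
    central = all(0 < v < N - 1 for v in cell)
    return corner or central


def choose_move(board, rng):
    """Return a random legal move, preferring hot cells; None if the board is full.

    `board` maps occupied cells to 'X' or 'O'; empty cells are simply absent.
    """
    legal = [cell for cell in CELLS if cell not in board]
    if not legal:
        return None
    hot = [cell for cell in legal if is_hot(cell)]
    return rng.choice(hot or legal)


if __name__ == "__main__":
    rng = random.Random()  # unseeded, so repeated games vary
    print(choose_move({(0, 0, 0): "X", (1, 1, 1): "O"}, rng))
```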

We formulate a set of rules that must be applied sequentially:

- If you have three cells filled in a line and the fourth is open, fill it and win.

- If there is no winning move and the opponent has three cells filled in a line, block the open cell.

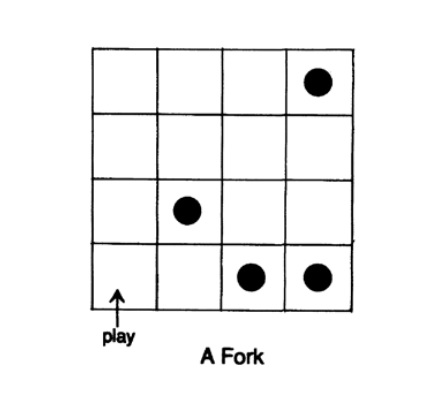

- If a fork has formed (see. Fig. 6.1), then it is necessary to take it. In the next move, the program wins, but the opponent does not.

- If the enemy can form a fork, then it must be blocked.

Figure 6.1
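The four rules translate almost directly into code. A minimal sketch, assuming a board stored as a dict from (x, y, z) cells to 'X' or 'O'; the helper names (`threats`, `fork_moves`, `choose_move`) are hypothetical, and rule 4 is simplified to occupying the cell where the opponent would fork, which does not catch every way of stopping a fork.

```python
import random
from itertools import product

N = 4
CELLS = list(product(range(N), repeat=3))


def _all_lines():
    """All 76 straight lines of four cells in the 4x4x4 cube."""
    dirs = [d for d in product((-1, 0, 1), repeat=3) if any(d)]
    lines = set()
    for s in CELLS:
        for d in dirs:
            line = tuple(tuple(a + k * b for a, b in zip(s, d)) for k in range(N))
            if all(0 <= v < N for cell in line for v in cell):
                lines.add(frozenset(line))
    return [tuple(line) for line in lines]


LINES = _all_lines()


def threats(board, player):
    """Empty cells that would complete a line already holding three of `player`'s marks."""
    found = set()
    for line in LINES:
        empty = [c for c in line if c not in board]
        if len(empty) == 1 and sum(board.get(c) == player for c in line) == N - 1:
            found.add(empty[0])
    return found


def fork_moves(board, player):
    """Empty cells where a move by `player` creates two or more separate threats."""
    forks = []
    for cell in CELLS:
        if cell not in board:
            trial = dict(board)
            trial[cell] = player
            if len(threats(trial, player)) >= 2:
                forks.append(cell)
    return forks


def choose_move(board, me, opponent, rng):
    """Apply the four rules in order, choosing randomly among equally ranked cells."""
    rules = (
        lambda: threats(board, me),           # rule 1: complete our own line and win
        lambda: threats(board, opponent),     # rule 2: block the opponent's three
        lambda: fork_moves(board, me),        # rule 3: create a fork
        lambda: fork_moves(board, opponent),  # rule 4 (simplified): take the opponent's fork cell
    )
    for rule in rules:
        candidates = rule()
        if candidates:
            return rng.choice(sorted(candidates))
    legal = [c for c in CELLS if c not in board]  # no rule applies: vaguer heuristics go here
    return rng.choice(legal) if legal else None
```

The rules are checked lazily and in the order listed, so a winning move is never passed over in favor of a block.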

Apparently there are no further definite rules from which the next move can be computed. So you start looking for a so-called “strong move”, one that keeps room to maneuver and builds toward a winning combination. For example, with two of your cells in a line you can take a third, and the opponent is forced to block that line (but you must take care that the forced block does not give the opponent three in a line, putting you on the defensive). With a sequence of strong moves you may create a fork, and then you win! But all these rules are vague. It seems that if you make strong moves into the “important” cells and keep forcing the opponent to defend, you will control the game, though with no guarantee of victory. If you lose the initiative during a sequence of strong moves, the opponent is likely to seize it, force you to defend, and improve their own chances of winning. A very interesting question is the timing of the attack: too early and you may lose the initiative; too late and the opponent attacks first and wins. As far as I know, no exact rule for choosing the moment of attack can be given.
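A sketch of how the “strong move” idea, including the caution in parentheses, might be coded. It assumes a dict-based board and that no immediate win, block, or fork is available (those are covered by the rules listed earlier); the function names are hypothetical. The sketch deliberately says nothing about when to attack, which the text notes has no exact rule.

```python
from itertools import product

N = 4
CELLS = list(product(range(N), repeat=3))


def _all_lines():
    """All straight lines of four cells in the 4x4x4 cube."""
    dirs = [d for d in product((-1, 0, 1), repeat=3) if any(d)]
    lines = set()
    for s in CELLS:
        for d in dirs:
            line = tuple(tuple(a + k * b for a, b in zip(s, d)) for k in range(N))
            if all(0 <= v < N for cell in line for v in cell):
                lines.add(frozenset(line))
    return [tuple(line) for line in lines]


LINES = _all_lines()


def threats(board, player):
    """Empty cells completing a line that holds three of `player` and one empty cell."""
    found = set()
    for line in LINES:
        empty = [c for c in line if c not in board]
        if len(empty) == 1 and sum(board.get(c) == player for c in line) == N - 1:
            found.add(empty[0])
    return found


def strong_moves(board, me, opponent):
    """Moves that create exactly one threat and whose forced block is 'safe'.

    Safe means the opponent's blocking move does not itself give the opponent
    three in a line with the fourth cell open.
    """
    result = []
    for cell in CELLS:
        if cell in board:
            continue
        after = dict(board)
        after[cell] = me
        mine = threats(after, me)
        if len(mine) != 1:
            continue  # not forcing at all, or already a fork (rule 3 handles that)
        after[next(iter(mine))] = opponent  # the opponent's forced block
        if not threats(after, opponent):
            result.append(cell)
    return result
```

For example, with X on (0,0,0) and (1,0,0) and O on (3,1,0) and (3,2,0), taking (2,0,0) would force O onto (3,0,0), giving O three in a column; the function rejects it and returns only (3,0,0).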

A game-playing program thus works in several stages. First it checks that a move is legal, which is a minor detail. Next it usually applies more or less formal rules, and then comes the stage of applying vague rules. So the program contains many heuristic rules (heuristic: serving to invent or discover), which suggest moves that are likely, but not guaranteed, to lead to victory.

At the beginning of AI research, Arthur Samuel, then at IBM, wrote a checkers-playing program. Checkers was thought to be easier than chess, which had become a real stumbling block. The evaluation formula he wrote included many parameters with weighting factors, for example control of the center of the board, advanced pieces, the position of kings, mobility, blocked pieces, and so on. Samuel made a copy of the program and slightly changed one (or more) of the parameters. Then the program with one evaluation formula played against the program with the other, say, ten times. The program that won more games was considered (with some degree of assumption) the better one. The computer kept adjusting one parameter until it reached a local optimum, and then went on to adjust the other parameters. By repeating this process over and over, the program arrived at a much better evaluation formula, and it played definitely better than Samuel himself. The program even beat the checkers champion of Connecticut!
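Samuel's tuning loop can be sketched as coordinate-wise hill climbing driven by matches between a program and its slightly modified copy. Real checkers games are far too large to include here, so this sketch replaces them with a toy model (an assumption, labeled in the code): each player is a weight vector, and the closer it is to a hidden `IDEAL`, the likelier it is to win a game. `IDEAL`, `SHARPNESS`, the step size, and all function names are hypothetical; only the structure (change one parameter, play ten games, keep the winner, move on to the next parameter) follows the text.

```python
import math
import random

# Toy stand-in for checkers (hypothetical): a player is a list of weights for an
# evaluation formula, and we assume an unknown "ideal" weighting. The closer a
# player's weights are to it, the likelier that player wins a single game.
IDEAL = [3.0, 1.0, 2.0, 0.5]  # e.g. center control, advanced pieces, kings, mobility
SHARPNESS = 20.0  # how strongly skill differences decide one game (assumed)


def skill(weights):
    """Higher is better; exactly 0 at IDEAL."""
    return -sum((w - t) ** 2 for w, t in zip(weights, IDEAL))


def play_game(a, b, rng):
    """True if player `a` beats player `b` in one simulated game."""
    p_a = 1.0 / (1.0 + math.exp(SHARPNESS * (skill(b) - skill(a))))
    return rng.random() < p_a


def wins_match(a, b, games, rng):
    """True if `a` wins a majority of `games` games against `b`."""
    return sum(play_game(a, b, rng) for _ in range(games)) > games / 2


def tune(weights, step=0.5, games=10, rounds=5, rng=None):
    """Samuel-style tuning: nudge one weight at a time, keep the copy if it wins."""
    rng = rng or random.Random()
    current = list(weights)
    for _ in range(rounds):
        for i in range(len(current)):
            for delta in (step, -step):
                while True:  # keep moving weight i this way while the copy keeps winning
                    challenger = list(current)
                    challenger[i] += delta
                    if not wins_match(challenger, current, games, rng):
                        break
                    current = challenger
    return current


if __name__ == "__main__":
    start = [0.0, 0.0, 0.0, 0.0]
    tuned = tune(start, rng=random.Random(42))
    print("before:", start, skill(start))
    print("after: ", tuned, skill(tuned))
```

Because the toy skill function has a single peak, the climb reaches it; with a real game, as the text says, such a search can only promise a local optimum.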

Would it not be fair to say that “the program learned from experience”?

Naturally, you will object that it was a program that taught the computer how to learn. But doesn't a teacher in a course on Euclidean geometry do much the same? Let us think more carefully about what a geometry course really is. At the start of the course the student cannot solve geometry problems; the teacher instills a certain program in the student, and by the end of the course the student can solve such problems. Think about it carefully. If you deny that the machine learned from experience because it was taught by another program (written by a person), is your own situation really different, except that at birth more was stored in you than is stored in a computer when it leaves the assembly line? Are you sure that you are not programmed throughout your life by the events that happen to you?

We are beginning to see that not only the term “thinking” but many related terms, such as machine, learning, information, ideas, decisions (in fact a decision is just a branch; branch points in a program are often called decision points because that makes programmers feel more important), and expert behavior, all become rather murky when we try to apply them to machines. Science traditionally relies on experimental evidence rather than words, and it seems that science will soon do more than philosophy to determine the direction of development. But of course, the future may turn out differently.

In this chapter we have “laid the foundation” for further discussion of AI, and we have established that the topic cannot be ignored. Note that although these terms seem easy to define, they are in fact hard to define: they are poorly explained and need refinement and interpretation. In particular, many have noticed that once a program is written to meet an agreed test of machine learning, originality, creativity, or intelligence, the test itself can only be mechanical. This remains true even when random numbers are used. A machine using random numbers can give two different responses to two identical test inputs, just as the same person rarely plays the same chess game twice. What test of machine learning would you accept? Or do you intend to assert, like the Jesuit-trained engineer mentioned earlier, that learning, creativity, originality, and intelligence are simply not properties a machine can have? Or will you hide this blunt claim behind roundabout reasoning that does not reflect the full depth of the issue?
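The point about random numbers can be made concrete. A program that “randomly” varies its play usually draws on a pseudo-random generator, which is itself a deterministic mechanism. In this sketch (the candidate list and function name are hypothetical), runs with different seeds usually choose differently, yet rerunning with the same seed reproduces the choices exactly.

```python
import random

# Hypothetical candidate moves for a chess-playing program.
CANDIDATES = ["e4", "d4", "c4", "Nf3"]


def choices(seed: int, length: int = 5) -> list:
    """Pick `length` moves 'at random' from CANDIDATES using a seeded generator."""
    rng = random.Random(seed)  # deterministic: the seed fixes every later choice
    return [rng.choice(CANDIDATES) for _ in range(length)]


if __name__ == "__main__":
    print(choices(1))
    print(choices(2))  # a different seed usually gives a different sequence
    print(choices(1) == choices(1))  # the same seed always gives the same sequence
```

Seen from outside, the two runs differ like two games by the same person; seen from inside, each is a fixed mechanical procedure.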

In fact, you cannot grasp the full depth of the AI question without diving in and trying to decide for yourself what it means to be human and what machines can do. Before we examined in detail how the checkers program was built, you may have thought that machines could not learn from their own experience; now you probably think that this is not learning but a clever trick. To advance your understanding of the capabilities and limitations of machines with respect to AI, you must confront your misconceptions. To do this, you need to state your beliefs explicitly and evaluate them critically, weighing the strong and weak arguments. Many learned people argue against AI and many argue for it; you need to set aside your biases in this important area. In the next chapter we will consider more interesting things a machine can do, and before then you should form your own opinion. It would be a mistake to assume that you will not play a substantial part in the inevitable computerization of your organization and of society as a whole.

In many ways, the computer revolution has just begun!

To be continued ...


By the way, we have also started translating another great book, “The Dream Machine: The History of the Computer Revolution”.

Book Contents and Translated Chapters

Foreword


- Intro to The Art of Doing Science and Engineering: Learning to Learn (March 28, 1995) Translation: Chapter 1

- “Foundations of the Digital (Discrete) Revolution” (March 30, 1995) Chapter 2. Fundamentals of the Digital (Discrete) Revolution

- “History of Computers - Hardware” (March 31, 1995) Chapter 3. Computer History - Hardware

- “History of Computers - Software” (April 4, 1995) Chapter 4. History of Computers - Software

- History of Computers - Applications (April 6, 1995) Chapter 5. Computer History - Practical Application

- "Artificial Intelligence - Part I" (April 7, 1995) (in progress)

- "Artificial Intelligence - Part II" (April 11, 1995) (in progress)

- “Artificial Intelligence III” (April 13, 1995) Chapter 8. Artificial Intelligence-III

- “N-Dimensional Space” (April 14, 1995) Chapter 9. N-Dimensional Space

- “Coding Theory - The Representation of Information, Part I” (April 18, 1995) (in progress)

- "Coding Theory - The Representation of Information, Part II" (April 20, 1995)

- “Error-Correcting Codes” (April 21, 1995) (in progress)

- Information Theory (April 25, 1995) (in progress, Alexey Gorgurov)

- Digital Filters, Part I (April 27, 1995) Chapter 14. Digital Filters - 1

- Digital Filters, Part II (April 28, 1995) Chapter 15. Digital Filters - 2

- Digital Filters, Part III (May 2, 1995) Chapter 16. Digital Filters - 3

- Digital Filters, Part IV (May 4, 1995)

- “Simulation, Part I” (May 5, 1995) (in progress)

- "Simulation, Part II" (May 9, 1995) (ready)

- "Simulation, Part III" (May 11, 1995)

- Fiber Optics (May 12, 1995) (in progress)

- Computer Aided Instruction (May 16, 1995) (in progress)

- Mathematics (May 18, 1995) Chapter 23. Mathematics

- Quantum Mechanics (May 19, 1995) Chapter 24. Quantum Mechanics

- Creativity (May 23, 1995). Translation: Chapter 25. Creativity

- “Experts” (May 25, 1995) Chapter 26. Experts

- “Unreliable Data” (May 26, 1995) (in progress)

- Systems Engineering (May 30, 1995) Chapter 28. Systems Engineering

- “You Get What You Measure” (June 1, 1995) Chapter 29. You Get What You Measure

- “How Do We Know What We Know” (June 2, 1995) (in progress)

- Hamming, “You and Your Research” (June 6, 1995). Translation: You and Your Work
