Diving into the depths of C# dynamic

One of the most notable additions in C# 4 is dynamic. Much has been written about it, but the DLR (Dynamic Language Runtime) has usually been left out of the picture. In this article, we will look at the internal structure of the DLR and at how the compiler handles dynamic. We will also define what statically and dynamically typed languages with weak and strong typing are. And, of course, we will not overlook the PIC technique (Polymorphic Inline Cache), which is used, for example, in Google's V8 engine.

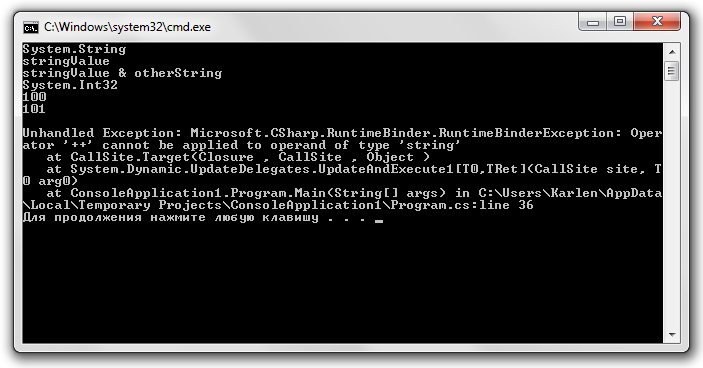

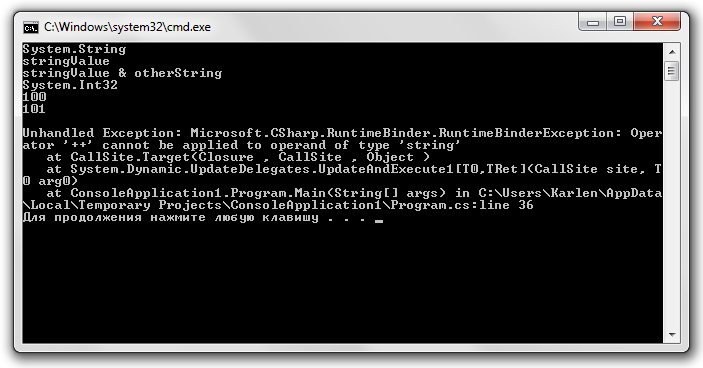

Before moving on, I would like to refresh some terms and concepts.

To avoid repetition, the term "variable" will mean any data object (a variable, a constant or an expression).

By the criterion of type checking, programming languages are usually divided into two groups. In statically typed languages, a variable is bound to a type when it is declared, and the type cannot change later. In dynamically typed languages, a variable is bound to a type when a value is assigned, and the type can change with a later assignment.

C# is an example of a statically typed language, while Python and Ruby are dynamically typed.

By the criterion of type safety policy, languages are divided into weakly typed and strongly typed ones. In a weakly typed language, a variable does not have a strictly defined type. In a strongly typed language, a variable has a strictly defined type that cannot be changed later.

C# 4 dynamic keyword

dynamic makes it possible to write cleaner code and to interoperate with dynamic languages such as IronPython and IronRuby. Even so, C# remains a statically typed language with strong typing.

Before discussing the mechanism of dynamic in detail, let's look at an example:

```csharp
using System;

class Program
{
    static void Main()
    {
        // assign an initial value of type System.String
        dynamic d = "stringValue";
        Console.WriteLine(d.GetType());

        // no exception is thrown at run time
        d = d + "otherString";
        Console.WriteLine(d);

        // assign a value of type System.Int32
        d = 100;
        Console.WriteLine(d.GetType());
        Console.WriteLine(d);

        // no exception is thrown at run time
        d++;
        Console.WriteLine(d);

        d = "stringAgain";

        // an exception (RuntimeBinderException) is thrown at run time:
        // operator '++' cannot be applied to an operand of type 'string'
        d++;
        Console.WriteLine(d); // not reached
    }
}
```

The result of running this code is shown in the screenshot below.

So what do we see? What kind of typing is this?

The typing is strong, and here's why.

The other built-in C# types (for example, string, int and object) map directly to BCL types. dynamic has no such direct counterpart. Instead, it is a special alias for System.Object that carries additional metadata needed for correct late binding.
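The following sketch makes this concrete. The Holder type and the method names are hypothetical and exist only for this illustration. Reflection shows that a field declared as dynamic has the CLR type System.Object. It differs from a plain object field only by the System.Runtime.CompilerServices.DynamicAttribute that the compiler attaches to it.

```csharp
using System;
using System.Reflection;
using System.Runtime.CompilerServices;

// Hypothetical type used only to inspect the metadata the compiler emits.
public class Holder
{
    public dynamic Value;  // declared as dynamic
    public object Plain;   // declared as object
}

public static class DynamicMetadataDemo
{
    static FieldInfo Field(string name) => typeof(Holder).GetField(name);

    // Both fields have the same CLR type: System.Object.
    public static bool BothAreObject() =>
        Field(nameof(Holder.Value)).FieldType == typeof(object) &&
        Field(nameof(Holder.Plain)).FieldType == typeof(object);

    // Only the field declared as dynamic carries the compiler-emitted DynamicAttribute.
    public static bool ValueIsMarked() =>
        Field(nameof(Holder.Value)).IsDefined(typeof(DynamicAttribute), false);

    public static bool PlainIsMarked() =>
        Field(nameof(Holder.Plain)).IsDefined(typeof(DynamicAttribute), false);
}
```

The attribute is what lets the compiler treat the member as dynamic when it is consumed from another assembly. To the runtime, the field is simply an object.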

So, code like this:

```csharp
dynamic d = 100;
d++;
```

is compiled into the following:

```csharp
// Decompiled compiler output: names such as <>p__Sitee are compiler-generated and not valid C# source.
object d = 100;
object arg = d;
if (Program.o__SiteContainerd.<>p__Sitee == null)
{
    Program.o__SiteContainerd.<>p__Sitee = CallSite<Func<CallSite, object, object>>.Create(
        Binder.UnaryOperation(CSharpBinderFlags.None, ExpressionType.Increment, typeof(Program),
            new CSharpArgumentInfo[]
            {
                CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.None, null)
            }));
}
d = Program.o__SiteContainerd.<>p__Sitee.Target(Program.o__SiteContainerd.<>p__Sitee, arg);
```

As you can see, d is declared as a variable of type object. After that, binders from the Microsoft.CSharp library come into play.
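The same steps can also be written by hand. This sketch assumes the delegate type Func<CallSite, object, object> (one object argument and an object result). It uses the public Binder.UnaryOperation factory from Microsoft.CSharp.RuntimeBinder. The class ManualIncrement and the field s_site are hypothetical names that stand in for the compiler-generated o__SiteContainerd and <>p__Sitee.

```csharp
using System;
using System.Linq.Expressions;
using System.Runtime.CompilerServices;
using Microsoft.CSharp.RuntimeBinder;

public static class ManualIncrement
{
    // Plays the role of the compiler-generated <>p__Sitee field (hypothetical name).
    private static CallSite<Func<CallSite, object, object>> s_site;

    // Roughly what "dynamic d = arg; d++;" does, returning the new value of d.
    public static object Increment(object arg)
    {
        // Create the call site lazily, exactly once, as the generated code does.
        if (s_site == null)
        {
            s_site = CallSite<Func<CallSite, object, object>>.Create(
                Binder.UnaryOperation(
                    CSharpBinderFlags.None,
                    ExpressionType.Increment,
                    typeof(ManualIncrement),
                    new[] { CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.None, null) }));
        }

        // Invoke the delegate currently stored in Target, passing the site itself first.
        return s_site.Target(s_site, arg);
    }
}
```

With this sketch, Increment(100) should give 101 and Increment(41L) should give 42L through the same site. Increment("text") should fail with a RuntimeBinderException, just like the last d++ in the earlier example.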

DLR

For each dynamic expression in the code, the compiler generates a separate dynamic call node, which represents the operation itself.

So, for code like

```csharp
dynamic d = 100;
d++;
```

a class of the following form is generated:

```csharp
private static class o__SiteContainerd
{
    // Fields
    public static CallSite<Func<CallSite, object, object>> <>p__Sitee;
}
```

The <>p__Sitee field is of type System.Runtime.CompilerServices.CallSite<T>. Let's look at this class in more detail.

```csharp
// Abridged: only the members discussed in this article
public sealed class CallSite<T> : CallSite where T : class
{
    public T Target;
    public T Update { get; }
    public static CallSite<T> Create(CallSiteBinder binder);
}
```

The Target field has the generic type T, yet it always holds a delegate. So the last line of the example above is not just the operation itself. It is a call to the delegate stored in Target:

```csharp
d = Program.o__SiteContainerd.<>p__Sitee.Target(Program.o__SiteContainerd.<>p__Sitee, arg);
```

The static Create method of the CallSite<T> class looks like this:

```csharp
public static CallSite<T> Create(CallSiteBinder binder)
{
    // T must be a delegate type; otherwise the call site cannot be created
    if (!typeof(T).IsSubclassOf(typeof(MulticastDelegate)))
    {
        throw Error.TypeMustBeDerivedFromSystemDelegate();
    }
    return new CallSite<T>(binder);
}
```
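The check at the top of Create can be observed from user code. The sketch assumes that the internal Error helper produces an ArgumentException, as it does in the .NET sources. The class CreateCheckDemo is a hypothetical name, and the binder is the same C# increment binder used by the generated code.

```csharp
using System;
using System.Linq.Expressions;
using System.Runtime.CompilerServices;
using Microsoft.CSharp.RuntimeBinder;

public static class CreateCheckDemo
{
    static CallSiteBinder MakeBinder() =>
        Binder.UnaryOperation(
            CSharpBinderFlags.None,
            ExpressionType.Increment,
            typeof(CreateCheckDemo),
            new[] { CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.None, null) });

    // string satisfies "where T : class" but does not derive from MulticastDelegate.
    public static bool RejectsNonDelegateType()
    {
        try
        {
            CallSite<string>.Create(MakeBinder());
            return false;
        }
        catch (ArgumentException)
        {
            return true;
        }
    }

    // A delegate type passes the check and a call site is created.
    public static bool AcceptsDelegateType() =>
        CallSite<Func<CallSite, object, object>>.Create(MakeBinder()) != null;
}
```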

The Target field is the L0 cache (there are also L1 and L2 caches). It is used to dispatch calls quickly based on the call history.

Note that the call node is "self-learning", so the DLR must periodically update the value of Target.

To describe how the DLR works, here is Eric Lippert's answer on the subject (freely translated):

First, the runtime determines what kind of object it is dealing with (a COM object or a POCO).

Next, the compiler comes into play. No lexer or parser is needed, so the DLR uses a special version of the C# compiler. It contains only the metadata analyzer, the semantic expression analyzer and a code generator that emits expression trees instead of IL.

The metadata analyzer uses reflection to determine the type of the object. This type is then passed to the semantic analyzer, which decides whether the method can be invoked or the operation performed. Next, an expression tree is built, just as if you had written a lambda expression.

The C# compiler returns the expression tree to the DLR together with a caching policy. The DLR turns the tree into a delegate and stores this delegate in the cache associated with the call node.

For this, the Update property of the CallSite<T> class is used. When the operation stored in the Target field is invoked and the cached code does not match the arguments, the call is redirected to the delegate in the Update property, which calls the binders. The next time the call occurs, a ready-made delegate is used instead of repeating the steps above.
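This caching can be observed directly with a custom binder. CountingDoubleBinder below is hypothetical and not part of any library. It derives from the real System.Runtime.CompilerServices.CallSiteBinder, overrides its Bind method and counts how often the DLR calls it. Each rule it returns is guarded by a type test. When the test fails, control falls through and the DLR goes back through Update, as described above.

```csharp
using System;
using System.Collections.ObjectModel;
using System.Linq.Expressions;
using System.Runtime.CompilerServices;

// Hypothetical binder: doubles a numeric argument and counts how often the DLR asks it to bind.
public sealed class CountingDoubleBinder : CallSiteBinder
{
    public int BindCount { get; private set; }

    public override Expression Bind(object[] args,
                                    ReadOnlyCollection<ParameterExpression> parameters,
                                    LabelTarget returnLabel)
    {
        BindCount++;
        Type argType = args[0].GetType();         // runtime type seen on this call
        ParameterExpression arg = parameters[0];  // statically typed as object

        // Rule test: the rule is valid only while the argument has exactly this runtime type.
        Expression test = Expression.TypeEqual(arg, argType);

        // Rule body: unbox, add the value to itself, box the result back to object.
        Expression value = Expression.Convert(arg, argType);
        Expression result = Expression.Convert(Expression.Add(value, value), typeof(object));

        // When the test fails, control falls through and the DLR goes back to Update.
        return Expression.IfThen(test, Expression.Return(returnLabel, result));
    }
}

public static class CachingDemo
{
    public static (object A, object B, object C, int BindCount) Run()
    {
        var binder = new CountingDoubleBinder();
        var site = CallSite<Func<CallSite, object, object>>.Create(binder);

        object a = site.Target(site, 21);  // first call: Update runs and Bind is called
        object b = site.Target(site, 5);   // same type (int): the cached rule is reused
        object c = site.Target(site, 4L);  // new type (long): Bind is called again
        return (a, b, c, binder.BindCount);
    }
}
```

Run() is expected to return 42, 10 and 8 (a long), with BindCount equal to 2. The second int call is answered by the delegate already sitting in Target and never reaches the binder.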

Polymorphic Inline Cache

Dynamic languages lose performance because of the extra checks and lookups performed at every call site. A naive implementation repeatedly looks up members in class precedence lists, and possibly resolves overloads on the types of method arguments, every time a given line of code is executed. In statically typed languages, or in languages with enough type annotations and type inference, the compiler can generate instructions or runtime calls that are appropriate for each call site. This is possible because static types provide everything needed at compile time.

In practice, the same operation is often performed repeatedly on objects of the same types, so the result of resolving it can be reused. For example, when the expression x + y is first evaluated for integers x and y, you can remember the code fragment or the exact runtime function that adds two integers. Thanks to this cache, later evaluations of the expression no longer need to search for that function or code fragment.
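The idea can be reduced to a toy polymorphic inline cache. AddSite below is a hypothetical class and not the DLR's implementation. It keeps a small list of entries that map operand types to a specialized delegate and checks those entries on each call. Only on a miss does it perform the slow "lookup", here a hard-coded Resolve method standing in for real member lookup and overload resolution.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical toy polymorphic inline cache for the operation "x + y".
// It illustrates the idea only; it is not how the DLR is implemented.
public sealed class AddSite
{
    // Cache entries: operand types -> delegate specialized for those types.
    private readonly List<(Type Left, Type Right, Func<object, object, object> Impl)> _entries =
        new List<(Type Left, Type Right, Func<object, object, object> Impl)>();

    // Number of times the slow lookup had to run.
    public int Misses { get; private set; }

    public object Invoke(object x, object y)
    {
        Type left = x.GetType(), right = y.GetType();

        // Fast path: reuse a delegate learned earlier for the same operand types.
        foreach (var entry in _entries)
        {
            if (entry.Left == left && entry.Right == right)
                return entry.Impl(x, y);
        }

        // Slow path: resolve the operation once for these types, then remember it.
        Misses++;
        Func<object, object, object> impl = Resolve(left, right);
        _entries.Add((left, right, impl));
        return impl(x, y);
    }

    // Stand-in for a real member lookup / overload resolution.
    private static Func<object, object, object> Resolve(Type left, Type right)
    {
        if (left == typeof(int) && right == typeof(int))
            return (a, b) => (int)a + (int)b;
        if (left == typeof(string) && right == typeof(string))
            return (a, b) => (string)a + (string)b;
        throw new InvalidOperationException($"No '+' for {left} and {right}");
    }
}
```

Calling Invoke(1, 2) and then Invoke(3, 4) costs one miss: the second call reuses the int delegate. Invoke("a", "b") adds a second entry. The DLR follows the same principle but differs in the details. Its rules carry their own tests, the most recent rule sits directly in Target (L0), the rules a call site has already seen are kept in its L1 cache, and rules are also shared through a per-binder L2 cache.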

The delegate caching mechanism described above, in which the call node learns and updates itself, is called a Polymorphic Inline Cache. Why?

Polymorphic. The target of a call node can take several forms, depending on the types of the objects used in the dynamic operation.

Inline. An instance of the CallSite class lives exactly at the place of the call itself.

Cache. The mechanism relies on several cache levels (L0, L1, L2).