Organization of a monitoring system

Monitoring is the main thing that the admin has. Admins are needed for monitoring, and monitoring is needed for admins.

Over the past few years, the monitoring paradigm itself has changed. A new era has already arrived, and if now you are monitoring the infrastructure as a set of servers - you are not monitoring almost anything. Because now the "infrastructure" is a multi-level architecture, and there are tools for monitoring each level.

In addition to problems such as "the server crashed", "you need to replace the screw in the raid", now you need to understand the problems of the application level and business level: "the interaction with the microservice has slowed down in such a way", "there are too few messages in the queue for the current time", "execution time database queries in the application are growing, queries are such and such. "

We support about five thousand servers, in a variety of configurations: from systems of three servers with custom docker networks, to large projects with hundreds of servers in Kubernetes. And all this must be somehow monitored, in time to understand that something is broken and quickly repaired. To do this, you need to understand what monitoring is, how it is built in modern realities, how to design it and what it should do. I would like to talk about this.

As it was before

About ten years ago, monitoring was much easier than now. However, the applications were simpler.

Mainly, system indicators were monitored: CPU, memory, disks, network. This was enough, because there was one application running on php, and nothing else was used. The problem is that there is usually little to be said about such indicators. Either works or not. What exactly happens with the application itself is difficult to understand above the level of system indicators.

If the problem was at the application level (not just “the site is down,” but “the site is working, but something is wrong”), then the client wrote or called, reported that there was such a problem, we went and sorted it out, because we ourselves could not notice such problems.

Like now

Now there are completely different systems: with scaling, with autoscaling, microservices, dockers. Systems have become dynamic. Often no one really knows how exactly everything works, on how many servers, how exactly it is deployed. It lives its own life. Sometimes it is not even known what and where is running (if it is Kubernetes, for example).

The complexity of the systems themselves, of course, entailed a greater number of possible problems. There are application metrics, the number of running threads for a Java application, the frequency of garbage collector pauses, the number of events in the queue. It is very important that monitoring also monitors system scaling. Let's say you have Kubernetes HPA. You need to understand how many hearths are running, and metrics should go from each running hearth to the application monitoring system, in apm.

All this needs to be monitored, because all this affects the operation of the system.

And the problems themselves became less obvious.

Conventionally, problems can be divided into two large groups:

Problems of the first kind - the basic, “user functionality,” does not work.

The problems of the second kind - something does not work as it should, and can lead somewhere in the wrong place.

That is, now it is necessary to monitor not only the discrete “works / does not work”, but much more gradations. Which, in turn, allows you to catch the problem before everything collapses.

In addition, we now need to monitor business performance. The business wanted to have charts about money, about how often orders go, how much time has passed since the last order, and so on - this is now also a monitoring task.

Proper monitoring

Design and in general

The idea of what exactly will need to be monitored should be laid down at the time of developing the application and architecture, and it is not so much about server architecture as about the architecture of the application as a whole.

Developers / architects should understand which parts of the system are critical for the functioning of the project and the business, and think in advance that their performance should be checked.

Monitoring should be convenient for the admin, and give an idea of what is happening. The purpose of monitoring is to get an alert on time, according to the schedules, quickly understand what exactly is happening and what needs to be fixed.

Metrics and alerts (alerts)

Alerts should be as clear as possible: the administrator, having received an alert, even if he is not familiar with the system, must understand what this alert is about, what documentation to look at, or at least who to call. There should be clear instructions on what exactly needs to be done and how to solve the problem.

When a problem arises, I really want to understand why it arose. Receiving an alert that the application does not work for you, you would really like to know what other adjacent systems are behaving differently, what other deviations from the norm are. There should be understandable graphs collected in dashboards, from which you will immediately see where the deviation is.

To do this, you need to understand exactly what is normal and what is not normal. That is, there should be sufficient historical information about the state of the system. The task is to cover with alerts all possible deviations from the norm.

When an admin receives an alert, he either needs to know what to do with it, or whom to ask. There should be instructions on how to respond, and it needs to be updated regularly. If everything works for you through the orchestration system, then, probably, everything is fine with you, if all changes occur only through it, including monitoring. The orchestration system allows you to adequately monitor the relevance of monitoring.

Monitoring should be expanded after each accident - if suddenly a problem arose that slipped past the monitoring, then obviously we need to monitor this situation so that the next time the problem is not sudden.

Business performance

It is useful to monitor the time from the last sale, the number of sales for the period. If you posted a release, then what has changed: are there any drawbacks in business indicators? Of course, A / B testing answers this, but I would also like to have graphics. And you need to monitor the actions of the end user: write scripts on phantomjs that repeat the purchase, go through all the stages of the main business process.

Also, you are probably interested to know if the logistics service is working, or if IpGeoBase has fallen again. (Editor’s comment: IpGeoBase is a service that uses a large number of online stores on 1C-Bitrix to determine the location of the user. Most often this is done directly in the page loading code, and when IpGeoBase crashes, dozens of sites stop responding. Someone please , tell programmers that it needs to be processed and timed out, and someone - please ask IpGeoBase not to fall).

You need to understand whether the drawdown on business indicators depends on your system, or on an external one.

Monitoring monitoring

Monitoring itself should also be monitored somehow. There must be some external custom script that will check that monitoring is working fine. Nobody wants to wake up from a call, because your monitoring system has fallen along with the entire data center, and no one has told you about this.

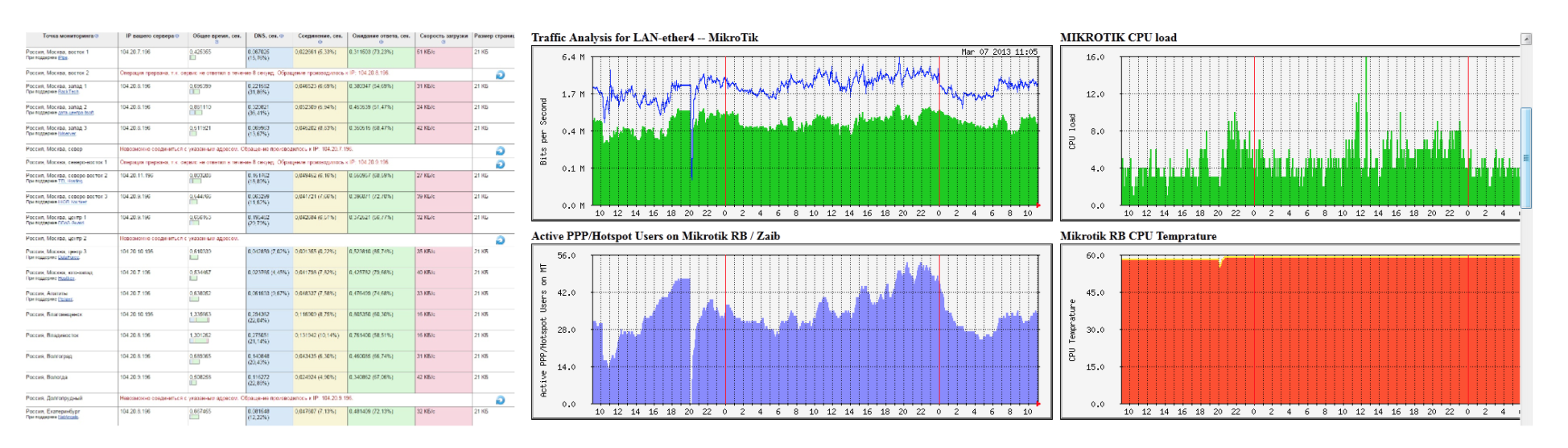

Core tools

In modern systems that scale, you probably use Prometheus, because in principle there are no analogues. In order to view convenient graphs from Prometheus, you need Grafana, because in Prometheus the graphs are so-so. Some APM is also needed. Either it's a proprietary system on Open Trace, jaeger, or something like that. But this is rarely done. Either New Relic or specific stack systems like Dripstat are mainly used. If you do not have one monitoring system, more than one Zabbix, you still need to understand how to collect these metrics and how to distribute alerts; whom to notify, whom to raise, in what order, to whom which alert relates, and what to do with it at all.

Now in order.

Zabbix is not the most convenient system. There are problems with custom metrics, especially if the system is scalable and you need to define roles. And although you can build very custom charts, alerts and dashboards, all this is not very inconvenient and not dynamic. This is a static monitoring system.

Prometheus is a great solution for building a huge number of metrics. It has roughly the same capabilities as Zabbix's custom alerts. You can display charts and build alerts on any wild combinations of several parameters. And this is all very cool, but very inconvenient to watch, so Grafana is added to it. Grafana is very beautiful. But in itself does not really help to monitor systems. But it is convenient to read everything on it. Probably better than graphs.

ELK и Graylog — для сбора логов по событиям в приложении. Может быть полезно для разработчиков, но для подробной аналитики обычно не достаточно.

New Relic — APM, тоже полезный для разработчиков. Есть возможность понять, когда у вас в приложении прямо сейчас что-то идет не так. Понятно, какие из внешних сервисов не очень хорошо работают, или какая из баз медленно отвечает, либо какое системное взаимодействие просаживается.

Свой APM — если вы написали свою систему на Open Tracing, zipkin или jaeger, то, наверное, вы знаете, как именно это должно работать, и что именно, и в какой части кода идет не так. New Relic тоже позволяет это понять, но это не всегда удобно.

Заключение

It is better to think about which indicators should be monitored during the design of the system, think in advance about which parts of the system are critical for its operation and how to verify their operation.

Alerts should not be too many, alerts should be relevant. It should be immediately clear what broke and how to fix it.

To properly monitor business indicators, you need to understand how business processes are organized, what your analysts need, are there enough tools to measure the necessary indicators, and how quickly you can find out if something goes wrong.

In the next post, we will tell you how to properly plan monitoring of modern infrastructure, at all levels: at the system, application and business levels.