Grasp2Vec: Learning to Represent Objects Through Self-Learning Capture

- Transfer

People from a surprisingly early age are already able to recognize their favorite objects and lift them, despite the fact that they are not specifically taught this. According to studies of the development of cognitive abilities, the ability to interact with the objects of the world around us plays a critical role in the development of such abilities as sensation and manipulation of objects — for example, targeted capture. Interacting with the outside world, people can learn by correcting their own mistakes: we know what we have done, and we learn from the results. In robotics, this type of learning with self-correction of errors is actively explored, since it allows robotic systems to learn without a huge amount of training data or manual adjustment.

We're at Google, inspiredthe concept of consistency of objects , we propose the Grasp2Vec system - a simple but effective algorithm for constructing the representation of objects. Grasp2Vec is based on the intuitive understanding that trying to pick up any object will give us some information - if the robot captures the object and picks it up, then the object needs to be in that place before the capture. In addition, the robot knows that if the captured object is in its capture, it means that the object is no longer in the place where it was. Using this form of self-study, the robot can learn to recognize the object due to the visual change of the scene after its capture.

Based on our collaboration with X Robotics , where several robots were simultaneously trained to capture household items using only one camera as an input source, we use robotic capture for “unintentional” capture of objects, and this experience allows us to gain a rich idea of the object. This view can already be used to acquire the ability of "intentional capture", when the robot arm can pick up objects on demand.

Creating a perceptual reward function

In the reinforcement learning platform , the success of a task is measured through the reward function. By maximizing rewards, robots learn various skills to capture from scratch . Creating a reward function is easy when success can be measured by simple sensor readings. A simple example is a button that, by clicking on it, sends a reward directly to the input of a robot .

However, creating a reward function is much more difficult when the success criteria depend on the perceptual understanding of the task. Consider the capture task using an example when the robot is given an image of the desired object held in the capture. After the robot tries to capture the object, it examines the contents of the capture. The reward function for this task depends on the answer to the pattern recognition question: do objects match?

On the left, the grip holds a brush, and on the background you can see several objects (yellow cup, blue plastic block). On the right, the grip holds the cup, and the brush is in the background. If the left image represented the desired result, a good reward function would have to “understand” that these two photos correspond to two different objects.

To solve the problem of recognition, we need a perceptual system that extracts meaningful concepts of objects from unstructured images (not signed by people), and that learns to visualize objects without a teacher. In essence, learning without a teacher works by creating structural assumptions about the data. It is often assumed that images can be compressed to a space with fewer dimensions , and video frames can bepredict by previous . However, without additional assumptions about the contents of the data, this is usually not enough for learning about object concepts that are not related to anything.

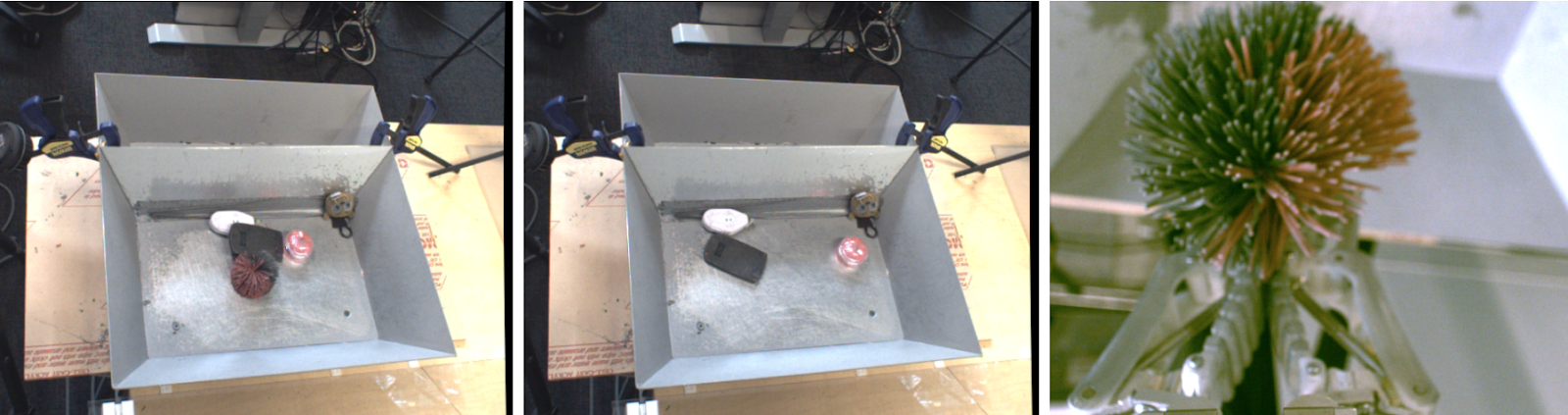

What if we used a robot to physically separate objects during data collection? Robotics offer an excellent opportunity to learn the presentation of objects, since robots can manipulate them, which will give the necessary factors of variation. Our method is based on the idea that capturing an object removes it from the scene. The result is 1) an image of the scene before the capture, 2) an image of the scene after the capture, and 3) a separate view of the captured object.

On the left - objects before capture. In the center - after the capture. On the right is the captured object.

If we consider the built-in function that extracts a “set of objects” from images, it should retain the following subtraction relation:

objects before capture - objects after capture = captured object

We achieve this equality using convolutional architecture and a simple metric learning algorithm. During training, the architecture shown below embeds the images before and after capturing into a dense map of spatial properties . These maps are transformed into vectors through averaged union, and the difference between the “before capture” and “after capture” vectors represents a set of objects. This vector and the corresponding vector representation of this perceived object are equated through the function of N-pairs.

After training, our model naturally has two beneficial properties.

1. Similarity of objects

The cosine coefficient of the distance between the vector inserts allows us to compare objects and determine whether they are identical. This can be used to implement the reward function for training with reinforcements, and allows robots to learn how to use examples without marking up data from humans.

2. Finding targets

We can combine spatial maps of the scene and the embedding of objects to localize the “desired object” in the image space. By performing elementwise multiplication of the maps of spatial features and the vector correspondence of the desired object, we can find all the pixels on the spatial map corresponding to the target object.

Use the Grasp2Vec plugin to localize objects in the scene. Top left - objects in the basket. At the bottom left - the desired object to be captured. The scalar product of the vector of the target object and the spatial features of the image gives us a pixel-by-pixel “activation map” (top right) of the similarity of the specified image segment to the target one. This map can be used to get closer to the target object.

Our method also works when several objects match the target, or even when the target consists of several objects (average of two vectors). For example, in this scenario, the robot detects several orange blocks in the scene.

The resulting "heat map" can be used to plan the approach of the robot to the target object (s). We combine localization from Grasp2Vec and pattern recognition with our “capture everything,” policy, and achieve success in 80% of cases during data collection and in 59% with new objects that the robot has not previously encountered.

Conclusion

In our work, we have shown how the skills of robotic captures can create the data used to train object representations. We can then use representational training to quickly obtain more complex skills, such as capturing by example, while retaining all the unsupervised learning properties in our autonomous capture system.

In addition to our work, several other recent works also explored how unsupervised interaction can be used to obtain representations of objects, by capturing , pushing, and other kinds of interactions.with objects in the environment. We are in joyful anticipation not only that machine learning can give robotics in terms of better perception and control, but also that robotics can give machine learning in terms of new paradigms of independent learning.