Console and Shell Performance

- Translation

There is a good MSR demo from 2012 that shows how latency affects the experience of using a tablet. If you don't want to watch three minutes of video, they essentially built a device that can simulate arbitrary latencies down to a fraction of a millisecond. At 100 ms (0.1 seconds), which is typical for modern tablets, the experience is awful. At 10 ms (0.01 seconds), the latency is noticeable, but you can work normally. Below 1 ms everything is just perfect, as if you were writing in pencil on paper. If you want to try a small version of this yourself, take any Android tablet with a stylus and compare it with the current-generation iPad Pro with the Apple stylus. The Apple device's latency is well above 10 ms, but the difference is still dramatic. It is large enough that I actually use the new iPad Pro to take notes and draw diagrams, while I find Android tablets completely unacceptable as a replacement for pencil and paper.

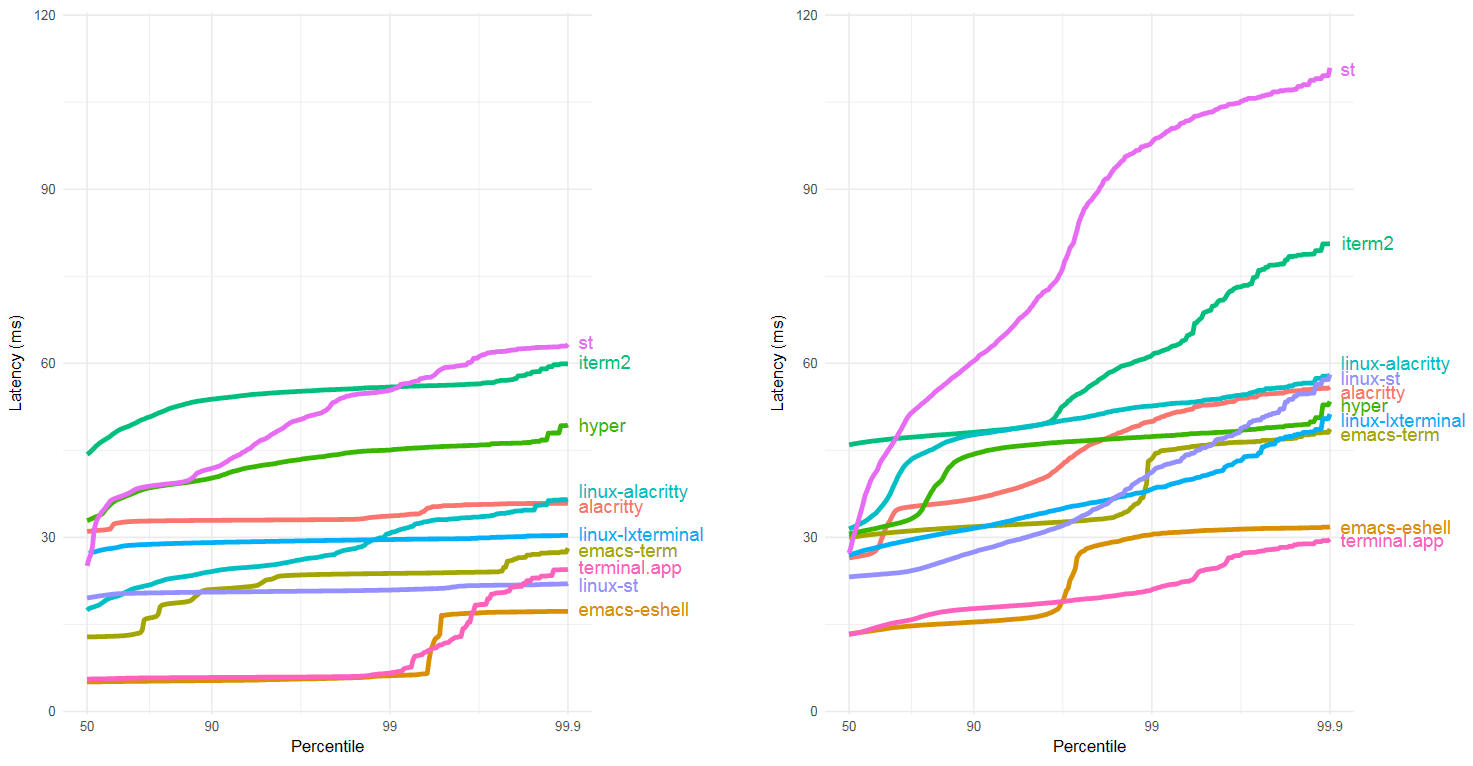

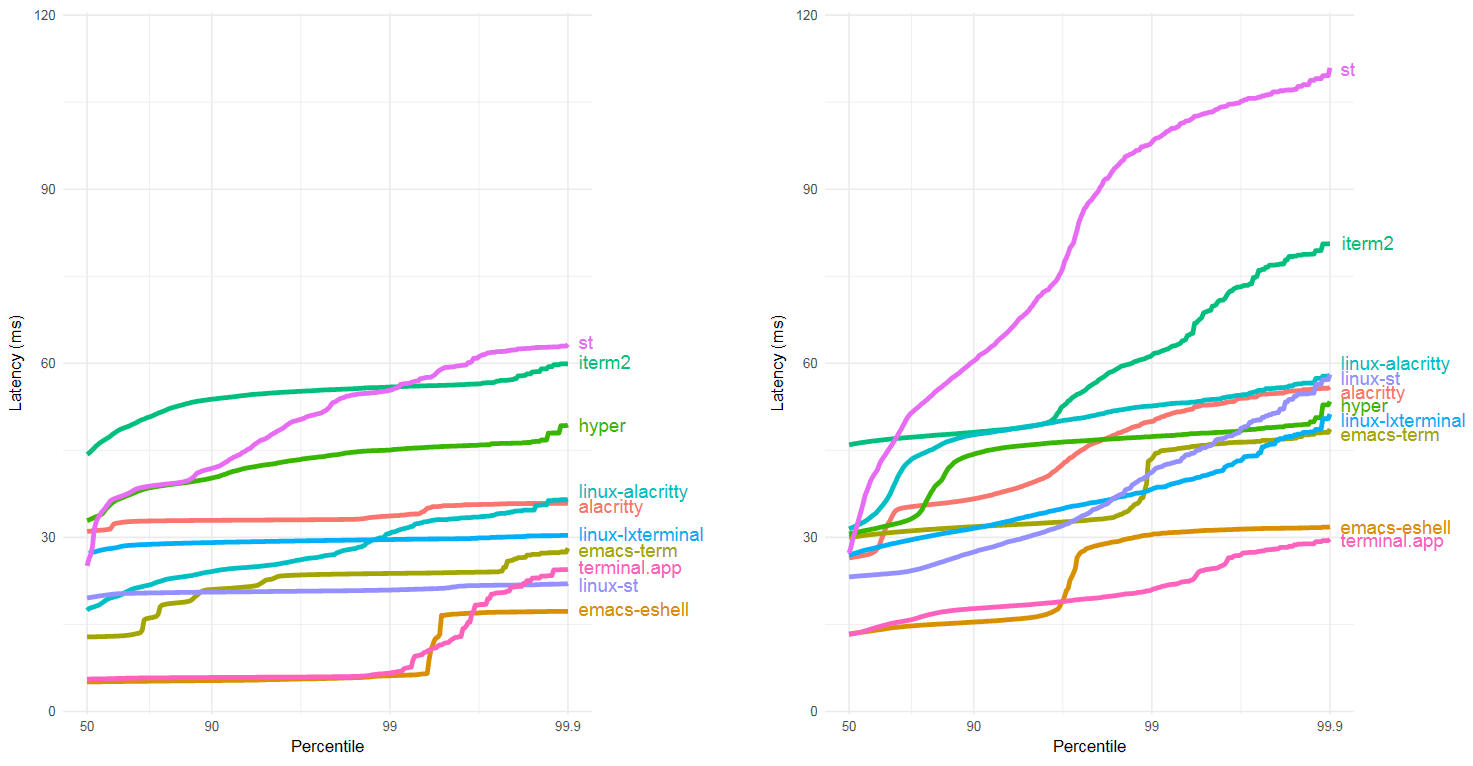

You see something similar with VR headsets at different latencies: 20 ms feels fine, 50 ms feels laggy, and 150 ms is unbearable.

Oddly, I rarely hear complaints about keyboard or mouse input latency. One might think the reason is that keyboard and mouse input is fast, practically instantaneous. People often tell me that's the case, but I think the opposite is true. The idea that computers respond to input quickly, so quickly that people can't perceive the difference, is the most common misconception I've heard from professional programmers.

When people measure actual end-to-end latency in games on typical computer setups, it usually turns out to be in the 100 ms range.

If you look at Robert Menzel's breakdown of latency in a game pipeline, it's easy to see where the 100+ ms comes from:

- ~2 ms (mouse)
- 8 ms (average wait for the game to start processing input)
- 16.6 ms (game simulation)
- 16.6 ms (rendering code)
- 16.6 ms (GPU draws the previous frame while the current frame is buffered)
- 16.6 ms (GPU rendering)
- 8 ms (average vsync misalignment)
- 16.6 ms (frame buffering in the display)
- 16.6 ms (frame redraw)
- 5 ms (pixel switching)
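Adding up the stages is a quick check on the “100+ ms” figure. The sketch below simply sums the numbers in Menzel's list (the stage labels are paraphrased). Each 16.6 ms stage corresponds to one frame at 60 Hz.

```python
# Per-stage latencies in milliseconds, as listed in Robert Menzel's breakdown.
PIPELINE_MS = [
    ("mouse", 2.0),
    ("wait for the game to start processing input (average)", 8.0),
    ("game simulation", 16.6),
    ("rendering code", 16.6),
    ("GPU busy with the previous frame", 16.6),
    ("GPU rendering", 16.6),
    ("vsync misalignment (average)", 8.0),
    ("frame buffering in the display", 16.6),
    ("frame redraw", 16.6),
    ("pixel switching", 5.0),
]


def total_latency_ms(stages):
    """Sum the per-stage latencies of a pipeline, in milliseconds."""
    return sum(ms for _, ms in stages)


if __name__ == "__main__":
    total = total_latency_ms(PIPELINE_MS)
    # Stages that each cost one 60 Hz frame (1000 / 60 is about 16.6 ms).
    frame_bound = sum(ms for _, ms in PIPELINE_MS if ms == 16.6)
    print(f"total: {total:.1f} ms")                   # total: 122.6 ms
    print(f"one-frame stages: {frame_bound:.1f} ms")  # one-frame stages: 99.6 ms
```

Six of the ten stages are whole frames, so most of the total comes from frame pacing rather than from the input devices.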

Note that this assumes a gaming mouse and a fairly decent LCD. In practice, mouse latency and pixel-switching times are often much higher.

You can tune a system to get into the 40 ms range, but the vast majority of users don't. Even those who do are still far from the 10 to 20 ms range where tablets and VR headsets start to feel “right”.

Key-to-screen latency is usually measured in games, because latency matters more to gamers than to most other people. Still, I don't think most other applications have much better latency than games. Games usually do more work per frame than “typical” applications, but they are also much better optimized. Menzel's breakdown gives the game a budget of 33 ms, split evenly between game logic and rendering. What does latency look like in non-game applications? Pavel Fatin measured it in text editors and found latencies ranging from a few milliseconds to hundreds of milliseconds. He also wrote a dedicated measurement application, which we can use to evaluate other programs as well. It uses `java.awt.Robot` to generate keystrokes and capture the screen.
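The measurement approach described above comes down to a short polling loop: inject a keystroke, then capture the screen until the character appears. This is a Python sketch of the idea, not the tool's actual code. `press_key` and `screen_changed` are hypothetical hooks; in a Java tool they would plausibly wrap `java.awt.Robot` methods such as `keyPress` and `createScreenCapture`. `SimulatedTerminal` is a stand-in that lets the loop run without a display.

```python
import time
from typing import Callable


def measure_latency(
    press_key: Callable[[], None],
    screen_changed: Callable[[], bool],
    clock: Callable[[], float] = time.perf_counter,
    timeout_s: float = 1.0,
) -> float:
    """Seconds from injecting a keystroke until a screen capture shows the change.

    press_key and screen_changed are hypothetical hooks: a real tool would
    synthesize a keystroke and grab/compare a region of the screen.
    """
    start = clock()
    press_key()
    while True:
        if screen_changed():
            return clock() - start
        if clock() - start > timeout_s:
            raise TimeoutError("no screen change detected before timeout")


class SimulatedTerminal:
    """Hypothetical stand-in for a console that 'draws' a typed character
    after a fixed delay. Simulated time advances 1 ms per screen capture."""

    def __init__(self, draw_delay_ms: int):
        self.now_ms = 0
        self.draw_delay_ms = draw_delay_ms
        self.pressed_at_ms = None

    def clock(self) -> float:
        return self.now_ms / 1000

    def press_key(self) -> None:
        self.pressed_at_ms = self.now_ms

    def screen_changed(self) -> bool:
        self.now_ms += 1  # each capture "costs" 1 ms of simulated time
        return (
            self.pressed_at_ms is not None
            and self.now_ms - self.pressed_at_ms >= self.draw_delay_ms
        )


if __name__ == "__main__":
    term = SimulatedTerminal(draw_delay_ms=25)
    latency = measure_latency(term.press_key, term.screen_changed, clock=term.clock)
    print(f"{latency * 1000:.0f} ms")  # 25 ms
```

The time spent on each capture is included in the measured latency. A real tool therefore needs captures that are cheap relative to the latencies it is measuring.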

Personally, I wanted to look at the latency of different consoles and shells. First, I spend a significant fraction of my time in a console and usually edit in it, so the input latency I experience is partly attributable to the console. Second, the most commonly cited console benchmark is output speed, usually measured by running `cat` on a large file. It comes up roughly two orders of magnitude more often than anything else. That seems like a rather useless benchmark to me. I can't remember the last time a task I was doing was limited by how fast I could `cat` a file to stdout in the console (unless I'm using eshell in emacs), and I can't imagine a single task for which such a specialized measurement would be useful. The closest thing that might matter to me is how quickly I can interrupt a command with `^C` when I've accidentally sent too much output to stdout. But as the real measurements will show, a console's ability to absorb a lot of stdout output is only weakly related to how quickly it responds to `^C`. The speed of scrolling a full page up or down seems like it should be related, but in actual measurements the two don't correlate much (for example, emacs-eshell scrolls quickly but absorbs stdout extremely slowly). What I do care about is latency, and knowing that a console processes stdout quickly says little about its latency. So let's look at latency in a few consoles to see whether any of them add a noticeable delay. I measured the time from a keypress to the internal screen capture on my laptop for each console.

The graphs show the latency distribution for each console. The vertical axis is latency in milliseconds. The horizontal axis is the percentile: for example, 50 means that 50% of keypresses had a lower latency, so that point is the median keypress. Measurements were taken on macOS unless otherwise noted. The graph on the left is for an unloaded machine, and the one on the right is under load. Looking only at the medians, some consoles do pretty well. terminal.app and emacs-eshell are around 5 ms on an unloaded system, which is low enough that most people won't notice the latency. But most consoles (st, alacritty, hyper and iterm2) are in a range where users may notice extra latency even on an unloaded system. In the tail, say at the 99.9th percentile, every console falls into the range where, according to studies of user perception, the extra latency should be noticeable. For comparison, for some consoles the time between an internally generated keystroke and the result reaching GPU memory exceeds the round-trip time of a packet from Boston to Seattle and back, which is about 70 ms.
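The percentile axis can be reproduced from raw per-keystroke samples. The sketch below uses the nearest-rank definition of a percentile. The article doesn't say which definition the graphs use, so that choice is an assumption, and the sample data is synthetic. The example also shows why tail numbers are noisy. With 10,000 keystrokes per run, as in the setup appendix, only the slowest ten samples lie above the 99.9th percentile.

```python
import math
from fractions import Fraction


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least p% of
    all samples are less than or equal to it."""
    if not samples:
        raise ValueError("need at least one sample")
    p = Fraction(str(p))  # exact decimal arithmetic, so 99.9 stays 999/10
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    # Synthetic latencies in ms: 10,000 keystrokes, ten of them slow.
    latencies = [20] * 9990 + [80] * 10
    print(percentile(latencies, 50))     # 20
    print(percentile(latencies, 99.9))   # 20
    print(percentile(latencies, 99.99))  # 80
```

`Fraction` avoids floating-point rounding in the rank calculation. Without it, a value like 99.9 can produce an off-by-one rank.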

Each console was tested on its own, on a full battery charge and without the AC adapter connected. Measurements under load were taken while compiling Rust, again on a full charge without AC power. For reproducibility, each measurement began 15 seconds after starting a clean Rust build, with all dependencies already downloaded. I left enough time between tests that thermal throttling from one test would not affect the next.

Looking at median latencies under load, the consoles other than emacs-term are not much worse than on an unloaded machine. However, in the tail, at the 90th or 99.9th percentile, every console becomes much less responsive. Switching from macOS to Linux doesn't change the overall picture much, though the effect differs from console to console.

These results are much better than the worst case. With a low battery, and after waiting 10 minutes from the start of compilation to amplify the noise from thermal throttling, latencies can reach hundreds of milliseconds. Even so, every console has tail latency that should be noticeable to a person. Also remember that this is only part of the total end-to-end latency of the input and output pipeline.

Why don't people complain about keyboard-to-screen latency the way they complain about latency when drawing with a stylus or using a VR headset? My theory is that for VR and tablets, people have a lot of experience with a similar “application” that has much lower latency. For tablets, that “application” is pencil and paper. For VR, it's the ordinary world around us, where we also turn our heads, just without a headset. But keyboard-to-screen latency is so high in every application that most people simply take it for granted.

An alternative theory is that keyboard and mouse input is fundamentally different from tablet input in a way that makes latency less noticeable. Even without my data, this seems implausible. When I connect to a remote terminal that adds tens of milliseconds of latency, I feel a noticeable lag when typing. And A/B tests that add extra latency have shown that people do notice latency in the range discussed earlier.

So, to compare the most popular benchmark (stdout throughput) with latency in different consoles, let's measure how quickly each console processes data written to stdout:

| Console | stdout (MB/s) | idle p50 (ms) | load p50 (ms) | idle p99.9 (ms) | load p99.9 (ms) | mem (MB) | ^C |
|---|---|---|---|---|---|---|---|
| alacritty | 39 | 31 | 28 | 36 | 56 | 18 | ok |
| terminal.app | 20 | 6 | 13 | 25 | 30 | 45 | ok |
| st | 14 | 25 | 27 | 63 | 111 | 2 | ok |
| alacritty + tmux | 14 | | | | | | |
| terminal.app + tmux | 13 | | | | | | |
| iterm2 | 11 | 44 | 45 | 60 | 81 | 24 | ok |
| hyper | 11 | 32 | 31 | 49 | 53 | 178 | fail |
| emacs-eshell | 0.05 | 5 | 13 | 17 | 32 | 30 | fail |
| emacs-term | 0.03 | 13 | 30 | 28 | 49 | 30 | ok |
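One way to put a rough number on the claim that stdout throughput says little about latency is to rank-correlate two columns of the table. The sketch below computes a Spearman rank correlation between stdout throughput and idle median latency for the seven consoles that have both values. It does this by taking the Pearson correlation of the ranks, with tied values sharing their average rank. With only seven points this is an illustration, not a statistical test.

```python
import math

# (console, stdout MB/s, idle median latency ms), copied from the table.
ROWS = [
    ("alacritty", 39, 31),
    ("terminal.app", 20, 6),
    ("st", 14, 25),
    ("iterm2", 11, 44),
    ("hyper", 11, 32),
    ("emacs-eshell", 0.05, 5),
    ("emacs-term", 0.03, 13),
]


def average_ranks(values):
    """1-based ranks; tied values share the average of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(xs, ys):
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


if __name__ == "__main__":
    throughput = [row[1] for row in ROWS]
    idle_median = [row[2] for row in ROWS]
    print(f"Spearman rho = {spearman(throughput, idle_median):.2f}")  # Spearman rho = 0.20
```

A value around 0.2 means the two orderings barely agree. Knowing where a console ranks on stdout throughput tells you little about where it ranks on latency.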

The relationship between stdout performance and how fast a console looks is not obvious. In this test, terminal.app looked very bad: while scrolling, the text moved jerkily, as if the screen rarely updated. Hyper and emacs-term also had problems. Emacs-term couldn't keep up with the output at all. After the test finished, it took several seconds to catch up. The status bar showing the number of lines output appeared to be accurate, and its count stopped increasing when the test ended. Hyper fell even further behind and, apart from flickering a couple of times, barely updated the screen. A Hyper Helper process sat at 100% CPU for about two minutes, and the console was completely unresponsive the whole time.

Alacritty was tested with tmux, since it doesn't support scrollback and its documentation says to use tmux for that. For comparison, terminal.app was also tested with tmux. In most consoles tmux doesn't seem to slow down stdout, but alacritty and terminal.app were fast enough that their throughput was actually limited by tmux.

Emacs-eshell is technically not a console, but I tested it anyway because it can sometimes be used as a replacement for one. In fact, Emacs, with both eshell and term, turned out to be so slow that its exact stdout throughput hardly matters. In the past, when using eshell or term, I sometimes had to wait for several thousand lines of text to scroll by after running a command that logged verbosely to stdout or stderr. This happens rarely, so it isn't a big problem for me as long as the pause is around 0.5 or 1 second, and it's not an issue at all in any other console.

On the other hand, I type fast enough to run into tail latency regularly. For example, at 120 words per minute, which is 10 characters per second, a 99.9th-percentile event (1 keystroke in 1000) happens every 100 seconds!
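The arithmetic behind the “every 100 seconds” figure is short enough to write down. The sketch assumes the usual convention of 5 characters per word, which is what turns 120 words per minute into 10 characters per second. It also assumes a steady typing rate, so the result is an average interval, not a guarantee.

```python
from fractions import Fraction

CHARS_PER_WORD = 5  # assumption: the usual typing-test convention


def chars_per_second(wpm):
    """Typing rate in characters per second for a words-per-minute rate."""
    return Fraction(str(wpm)) * CHARS_PER_WORD / 60


def seconds_between_tail_events(wpm, pct):
    """Average seconds between keystrokes slower than the pct-th latency
    percentile, assuming a steady typing rate."""
    tail_fraction = 1 - Fraction(str(pct)) / 100  # e.g. 99.9 -> 1/1000
    return float(1 / (chars_per_second(wpm) * tail_fraction))


if __name__ == "__main__":
    print(float(chars_per_second(120)))            # 10.0
    print(seconds_between_tail_events(120, 99.9))  # 100.0
```

At the 99th percentile the same typing rate hits a slow keystroke every 10 seconds.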

In any case, rather than the `cat` “benchmark,” I care more about whether I can interrupt a process with `^C` if I accidentally run a command that prints millions of lines to the screen instead of thousands. Almost every console passes this test, except hyper and emacs-eshell. Both froze for at least ten minutes, and after ten minutes I killed them instead of waiting for the process to finish.

The table also includes memory usage at startup, since I've seen people use this metric when comparing consoles. It does seem a little strange to me that a console can take 40 MB of memory at startup. But even my three-year-old laptop has 16 GB of RAM, so cutting those 40 MB down to 2 MB won't make a real difference. Heck, even the $300 Chromebook we recently bought has 16 GB of RAM.

Conclusion

Most consoles have fairly high latency. It could be reduced to improve the user experience if developers focused on it rather than on adding features or on other aspects of performance. But when I looked for console benchmarks, I found that when authors measured anything, it was either stdout throughput or memory usage at startup. That's unfortunate, since most “slow” consoles already output stdout several orders of magnitude faster than people can read it. Further stdout optimization therefore has relatively little effect on real-world usability for most users. The same goes for reducing startup memory when a console uses 0.01% of the memory on my old laptop or on a modern cheap one.

If you work in a console, you may be better served by relatively more optimization of latency and interactivity (for example, responsiveness to `^C`) and relatively less optimization of throughput and startup memory usage.

Update. In response to this article, the alacritty project explained where alacritty's latency comes from and described how it could be reduced.

Appendix: negative results

Tmux and latency. I tested tmux with different consoles and found that the difference was within measurement error.

Shell and latency. I tested different shells, but even in the fastest console the difference between them was within measurement error. PowerShell was somewhat hard to test with my setup because it doesn't handle colors correctly. The first character typed appears in the console's configured color, but the rest are yellow regardless of the settings; this bug appears to be slated for a fix. That breaks the image-recognition setup I used. PowerShell also doesn't always put the cursor in the right place: it jumps around the line at random, which also throws off the image recognition. Despite these problems, PowerShell's latency is comparable to that of other shells.

Shell and stdout throughput. As above, the difference between shells is within measurement error.

Single-line vs. multi-line text and throughput. Some text editors struggle with extremely long lines, but console throughput was practically the same whether the file I sent to the console was one such line or was split into 80-character lines.

Queue blocking / dropped input. I ran these tests at an input rate of 10.3 characters per second, and it turned out that the input rate doesn't have much effect on latency. In theory, a console's input queue could fill up, and hyper was the first to break down at very high input rates. However, those rates are far above the typing speed of anyone I know.

Appendix: experimental setup

All tests were run on a mid-2014 dual-core 2.6 GHz 13-inch MacBook Pro with 16 GB of RAM and a 2560 × 1600 screen resolution, running OS X 10.12.5. Some tests were also run on Linux (Lubuntu 16.04) to compare macOS and Linux. Each latency measurement was limited to 10,000 keystrokes.

Latency was measured by pressing the `.` key. Output tests used the default base32 encoding, that is, plain ASCII text. George King pointed out that the kind of text can affect output speed. He noticed that Terminal.app slows down dramatically when printing non-Latin text and suggested three possible reasons: having to load different font pages, having to parse code points outside the Basic Multilingual Plane (BMP), and multibyte Unicode characters.

The first probably comes down to a very complex combination of lazy glyph loading, fallback font computation and glyph page caching, or something along those lines.

The second is somewhat speculative. Terminal.app presumably uses Cocoa's UTF-16-based NSString, which would almost certainly slow down for code points above the BMP because they are stored as surrogate pairs.
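To make the second and third reasons concrete, compare how one character is stored in each encoding. In UTF-16, a code point inside the BMP takes one 16-bit code unit, but a code point above the BMP (most emoji, for example) takes two, called a surrogate pair. In UTF-8, non-ASCII characters take two to four bytes. The sketch below counts both and builds a surrogate pair by hand. It only illustrates the encodings and says nothing about how Terminal.app is actually implemented.

```python
def utf16_code_units(text):
    """Number of UTF-16 code units, i.e. what a UTF-16-based string type stores."""
    return len(text.encode("utf-16-le")) // 2


def utf8_bytes(text):
    """Number of bytes in the UTF-8 encoding."""
    return len(text.encode("utf-8"))


def surrogate_pair(code_point):
    """Split a code point above the BMP into its UTF-16 high and low surrogates."""
    if not 0x10000 <= code_point <= 0x10FFFF:
        raise ValueError("only code points above the BMP need surrogate pairs")
    offset = code_point - 0x10000       # 20 significant bits
    high = 0xD800 + (offset >> 10)      # top 10 bits
    low = 0xDC00 + (offset & 0x3FF)     # bottom 10 bits
    return high, low


if __name__ == "__main__":
    # Latin letter, Cyrillic letter (both in the BMP), emoji (above the BMP).
    for ch in ["A", "\u0416", "\U0001F600"]:
        print(f"U+{ord(ch):04X}: {utf8_bytes(ch)} UTF-8 bytes, "
              f"{utf16_code_units(ch)} UTF-16 code units")
    print([hex(u) for u in surrogate_pair(0x1F600)])  # ['0xd83d', '0xde00']
```

For the emoji, the hand-built pair matches what Python's own UTF-16 encoder produces.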

Consoles were maximized to full screen before the tests. This affects the results: resizing the console window can change performance significantly. For example, with everything else unchanged, you can make hyper much slower than iterm2 just by resizing its window. On macOS, st ran as an X client under XQuartz. To test the hypothesis that XQuartz is inherently slow, I tried runes, another “native” Linux console that runs under XQuartz. It turned out that runes has much lower tail latency than st and iterm2.

Delay tests on an “unloaded” system were carried out immediately after a system reboot. All terminals were open, but text was entered into only one of them.

Tests under load were performed during Rust compilation in the background, 15 seconds after the compilation began.

The console throughput tests were carried out by creating a large file with pseudo-random text:

`timeout 64 sh -c 'cat /dev/urandom | base32 > junk.txt'`

followed by running

`timeout 8 sh -c 'cat junk.txt | tee junk.term_name'`

Terminator and urxvt were not tested, since installing them on macOS is non-trivial and I didn't want to bother getting them to work. Terminator is easy to build from source, but it hangs on startup and never shows a prompt. Urxvt installs via brew, but one of its dependencies (also installed via brew) was the wrong version, which prevented the console from starting.
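The appendix doesn't spell out how the `tee` run in the throughput test becomes the MB/s column. One plausible reading, and it is only an assumption, is that the size of the file `tee` wrote is divided by the 8-second `timeout`. Because `tee` copies each chunk both to the file and to the console, a slow console also holds back the file. A minimal sketch of that calculation follows. The file name `junk.iterm2` in the comment is a hypothetical example, and so is the choice of decimal megabytes.

```python
import os
import sys

BYTES_PER_MB = 1_000_000  # assumption: decimal megabytes; the table doesn't specify


def throughput_mb_per_s(output_path, duration_s=8.0):
    """Average throughput in MB/s, assuming output_path holds what `tee`
    managed to write before `timeout` stopped the run after duration_s seconds."""
    return os.path.getsize(output_path) / duration_s / BYTES_PER_MB


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python throughput.py junk.iterm2
    print(f"{throughput_mb_per_s(sys.argv[1]):.2f} MB/s")
```

Pipe buffering means the file can differ slightly from what the console actually displayed, so treat the result as an approximation.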