Key-value for storing metadata in storage. Testing Embedded Databases

On November 7-8, 2017, at the Highload ++ conference, the researchers at the Reydiks presented a report entitled “Metadata for the cluster: a race of key-value heroes”.

In this article, we presented the main material of the report regarding the testing of key-value databases. “Why should they be tested by the storage manufacturer?”, You ask. The problem arose in connection with the problem of storing metadata. Such "features" as deduplication, peering, thin provisioning, log-structured recording, run counter to the direct addressing mechanism - it becomes necessary to store a large amount of service information.

Introduction

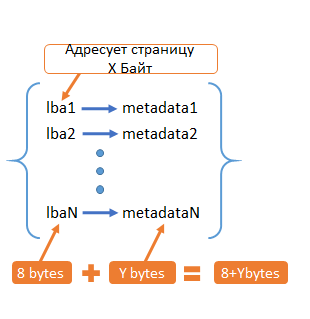

Assume that the entire storage space is divided into pages of size X bytes. Each of them has its own address - LBA (8 Bytes). For each such page, we want to store Y bytes of metadata. Thus, we get the correspondence set lba -> metadata. How many such matches will we have? It all depends on how much data we will store.

Fig. 1. Metadata

For example, with X = 4KB, Y = 16 bytes. We get the following table:

Table 1. The ratio of storage volume and metadata

| Amount of data per node | Number of keys per node | Metadata Scope |

|---|---|---|

| 512TB | 137 billion | 3TB |

| 64TB | 17 billion | 384GB |

| 4TB | 1 billion | 22.3GB |

The amount of metadata is quite large, so it is not possible to store metadata in RAM (or it is not economically feasible). In this regard, the question arises of storing metadata, and with maximum access performance.

Metadata Storage Options

- Key-value database. lba - key, metadata - value

- Direct addressing. Do not store lba = metadata N * Y, not N * (8 + Y) B

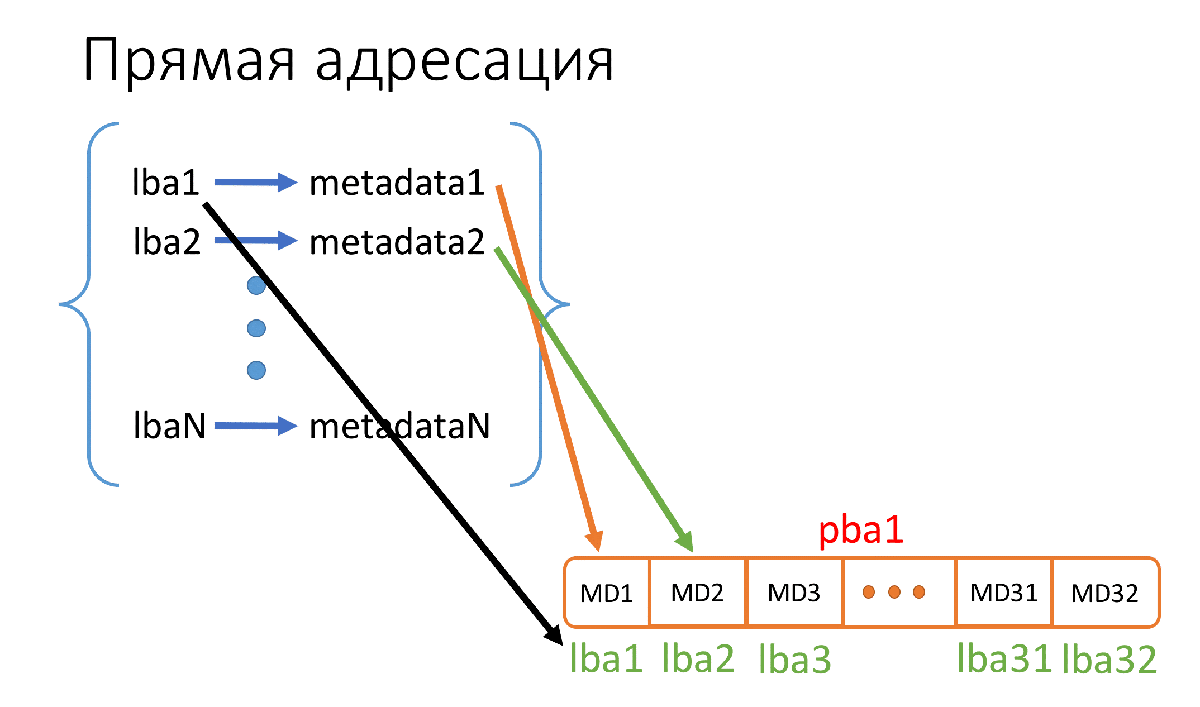

What is direct addressing? This is when we simply place our metadata in order on the drive, starting from the very first sector of the drive. At the same time, we don’t even have to write to which lba metadata corresponds, since everything is in ascending order of lba.

Fig. 2. The principle of direct addressing.

In Figure 2, pba1 is the physical sector (512B) of the drive where we store metadata, and lba1 ... lba32 (there are 32 of them, because 512B / 16B = 32) are the addresses of those pages this metadata matches, and we don’t need to store these addresses.

Storage workload analysis

Based on our workload experience, we will determine what latency and bandwidth requirements we need. Media industry

workload :

- NLE (non-linear editing) - reading and writing several large files in parallel.

- VOD (video on demand) - reading multiple streams, sometimes with jumps. It is possible to record in multiple streams in parallel.

- Transcoding - 16–128 KB random R / W 50/50.

Enterprise Segment Workload :

- 8–64 KB IO.

- Random read / write about 50/50.

- Periodically, Seq Read and Write to hundreds of threads (Boot, Virus Scan).

Workload in High Performance Computing (HPC) :

- 16/32 KB IO.

- Alternating read / write in hundreds and thousands of threads.

From the presented workloads in various market sectors, we will formulate the final performance requirements for All-Flash storage:

- Show:

• Latency: 1–2ms (percentile 99.99%) for flash.

• From 20GB / s, from 300-500k IOPS. - At the following loads:

• Random R / W.

• 50/50 ratio.

• The block size is 8–64K.

To choose which of the dozens of existing databases suits us, it is necessary to conduct tests on selected loads. So we can understand how a particular database copes with a certain amount of data, which provides latency / throughput.

What difficulties arise at the start?

- You will have to select only a few databases for tests - you won’t be able to test everything. How to choose these several? Only subjectively.

- Ideally, you need to carefully configure each database before testing, which can take a huge amount of time. Therefore, we decided to first look at what numbers can be obtained in the standard configuration, and then decide how promising testing is.

- Making tests completely objective and creating the same conditions for each of the databases is quite difficult due to the fundamental differences between the databases.

- Few benchmarks or hard to find. We need exactly unified benchmarks with which you can test any (or almost any) key-value database, or at least a few of the most interesting ones. When testing different databases with different benchmarks, objectivity suffers.

Database key-value types

There are two types of key-value databases:

- Embedded - they are also called “engines”. In fact, this is a library that you can connect in your code and use its functions.

- Dedicated - you can also call them "database server", "NoSQL database". These are separate processes that can most often be accessed by socket. Usually have more features than built-in. For example, replication.

In this article, we will consider testing embedded key-value databases (hereinafter, we will call them “engines”).

How to test?

The first option that can be found is YCSB .

Features of YCSB:

- This benchmark is a kind of industry standard, it is trusted.

- Workloads can be easily configured in configuration files.

- It is written in Java. In this case, this is a minus, because Java is not very fast, and this can introduce distortion into the test results. In addition, the engines are mainly written in C / C ++. This makes writing the YCSB <-> engine driver difficult.

The second option is a benchmark for ioarena engines .

- Written in C.

- Few workloads. Of those that interest us, there is only Random Read. I had to add the necessary workloads in the code.

Fig. 3. Testing options

As a result, we select ioarena for the engines and YCSB for the selected ones.

In addition to workloads in ioarena, we added the option (-a), which allows you to specify when you start separately the number of operations performed on the stream and the number of keys in the database separately.

All changes to ioarena code can be found on GitHub .

Key-value database test parameters

The main workload that interests us is Mix50 / 50. We also decided to look at RR, Mix70 / 30 and Mix30 / 70 to understand which databases are more “fond” of a particular workload.

Testing methodology

We test in 3 stages:

- Filling the database - fill in 1 database stream to the required number of keys.

1.1 Flush Caches! Otherwise, the tests will be dishonest: databases usually write data on top of the file system, so the operating system cache is triggered. It is important to reset it before each test. - Tests for 32 threads - run workloads

2.1 Random Read

• Clear caches!

2.2 Mix70 / 30

• Flush caches!

2.3 Mix50 / 50

• Flush caches!

2.4 Mix30 / 70

• Flush caches! - Tests for 256 threads.

3.1 The same as for 32 threads.

What are we measuring?

- Bandwidth / throughput (IOPS / RPS - who likes which designations more).

- Latency (msec):

• Min.

• Max.

• The mean square value is a more indicative value than the arithmetic mean, because takes into account the quadratic deviation.

• Percentile 99.99.

Test environment

Configuration:

| CPU: | 2x Intel Xeon E5-2620 v4 2.10GHz |

| RAM: | 16GB |

| Disk: | [2x] NVMe HGST SN100 1.5TB |

| OS: | CentOS Linux 7.2 kernel 3.11 |

| FS: | EXT4 |

It is important to note here that such a small amount of RAM is not taken by chance. Thus, the database will not be able to fully fit in the cache on tests with 1 billion keys.

The amount of available RAM was regulated not physically, but programmatically - part was filled artificially with a Python script, and the rest was free for the database and caches.

In some tests, there was a different amount of available memory - this will be discussed separately.

NVMe used one in the tests.

Recording reliability

An important point is the reliability mode of writing data to disk. The recording speed and the probability / volume of losses due to failures depend very much on this.

In general, 3 modes can be distinguished:

- Sync - an honest record to disk before answering the user “OK” to the request for recording. In the event of a failure, everything remains in place until the last committed transaction.

- Lazy - write data to the buffer, respond to the user “OK”, and after a short period of time we dump the buffer to the disk. In the event of a failure, we may lose some of the latest changes.

- Nosync - we do not flush data to disk and just write them to the buffer so that someday (it doesn’t matter when), we flush the buffer to disk. In this mode, there may be large losses in the event of a failure.

In performance, the difference is approximately the same (for example, the MDBX engine):

- Sync = 10k IOPS

- Lazy = 40k IOPS

- Nosync = 300k IOPS

The numbers here are ONLY for an approximate understanding of the difference between the modes.

As a result, lazy mode was chosen as the most balanced for tests. Exceptions will be discussed separately.

Testing embedded key-value db

To test the “engines”, we conducted two testing options: 1 billion keys and 17 billion keys.

Selected "engines":

- RocksDB - everyone knows about him, this is a database from Facebook. LSM index.

- WiredTiger - MongoDB engine. LSM index. Read here .

- Sophia - this engine has its own custom index, which has something in common with LSM-trees, B-trees. You can read here .

- MDBX is a fork of LMDB with improvements in reliability and performance. B + tree as an index.

Test results. 1 billion keys

Filling out

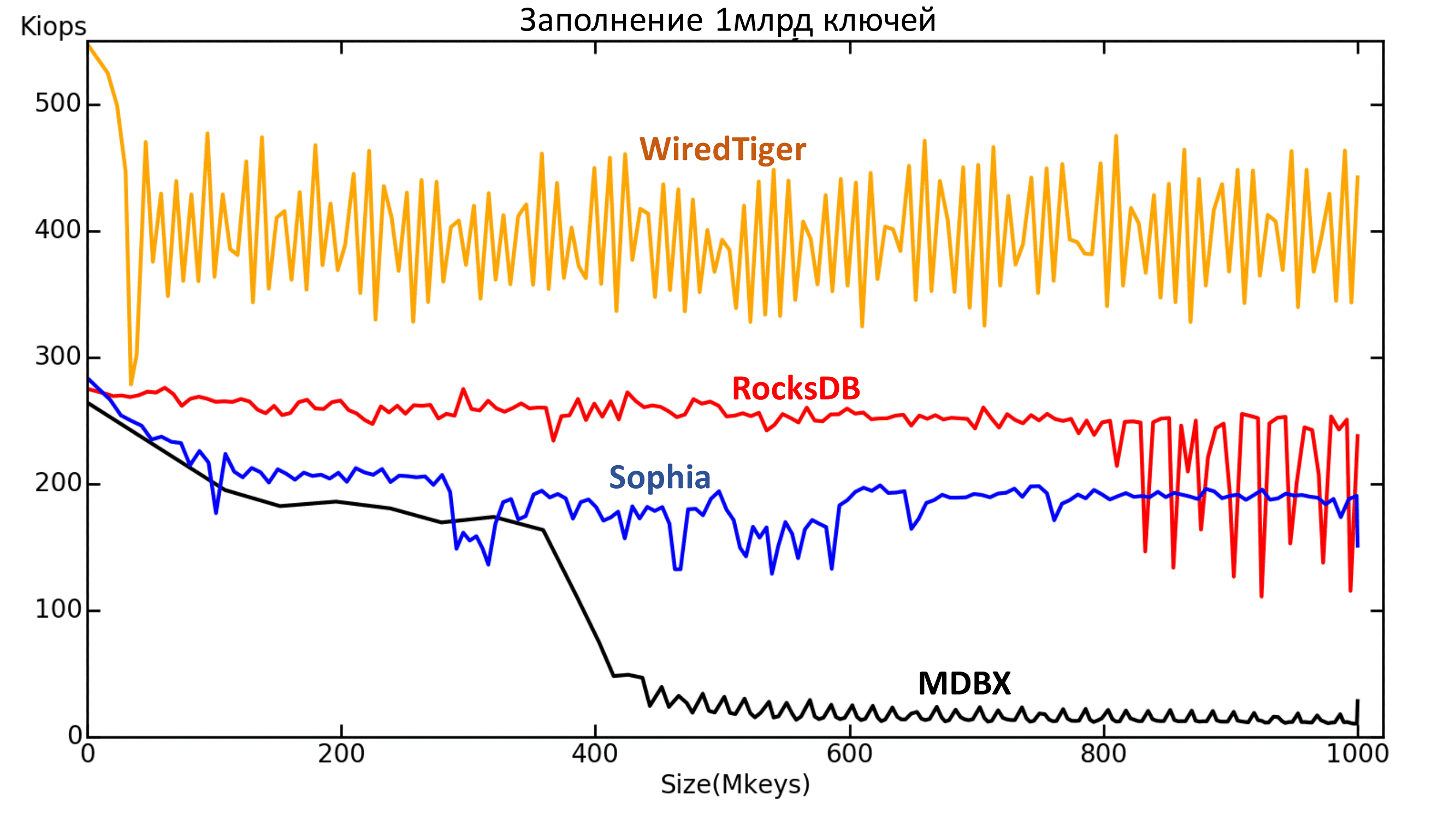

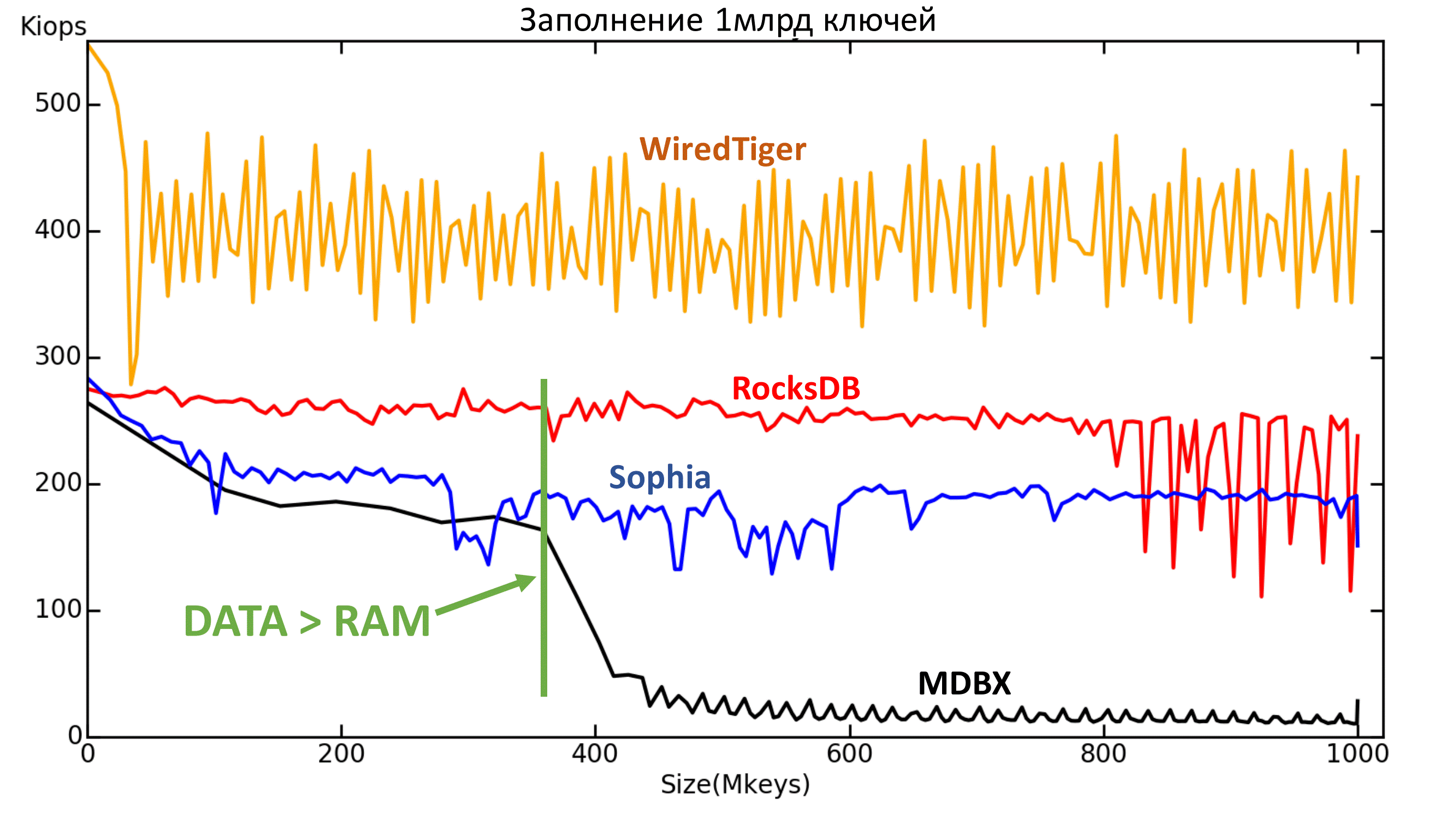

Fig. 4.1. Dependence of the current speed (IOPS) on the number of keys (abscissa axis - millions of keys).

Here all the engines are in a standard configuration. Lazy Reliability Mode, except MDBX. He has too slow a record in Lazy, so Nosync mode was chosen for him, otherwise the filling will take too long. However, it can be seen that from some point on, the recording speed drops to approximately the speed level of the Sync mode.

What can be seen on this chart?

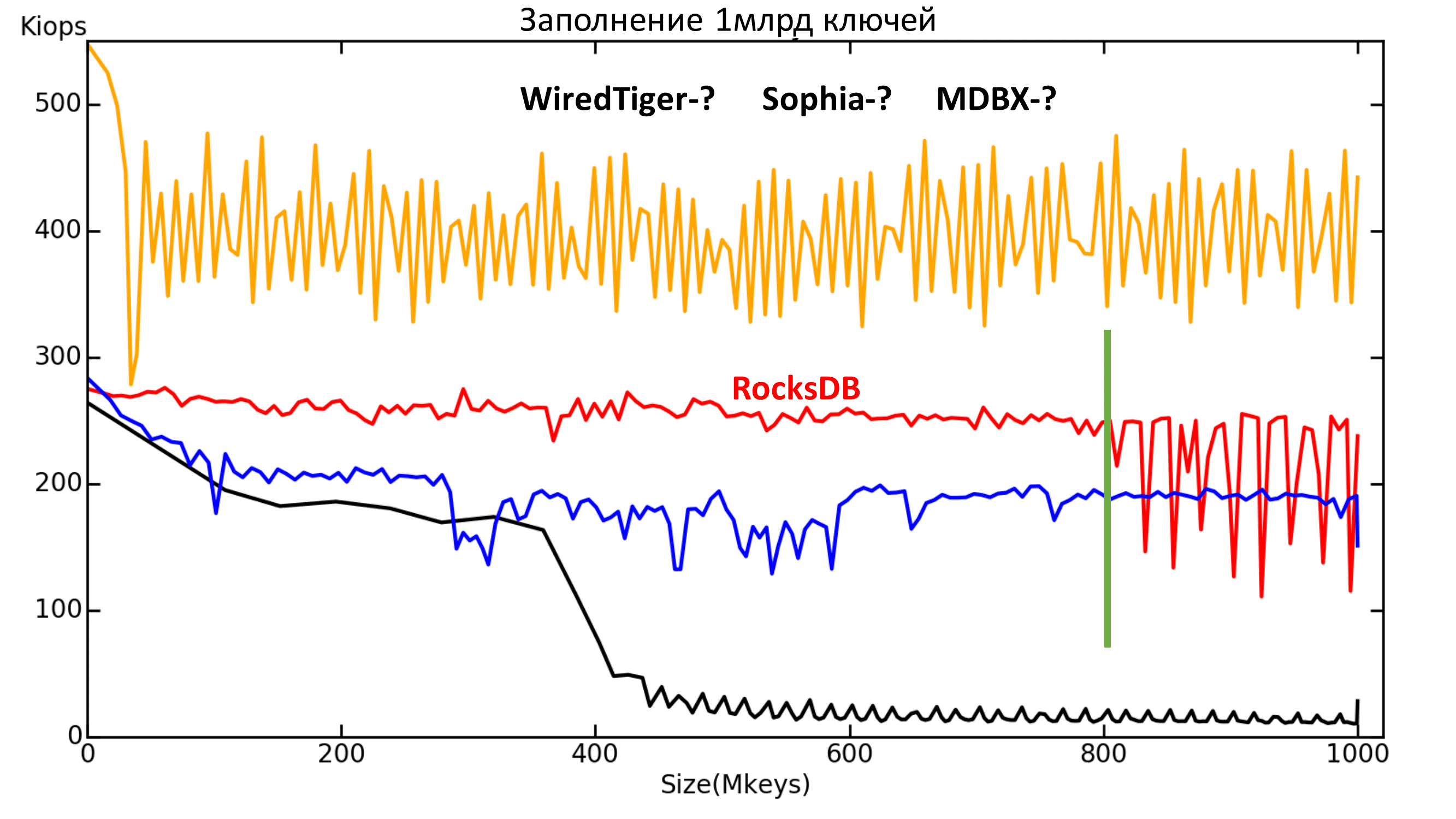

First: something happens to RocksDB after 800 million keys. Unfortunately, the reasons for this were not clarified.

Fig. 4.2. Dependence of the current speed (IOPS) on the number of keys (abscissa axis - millions of keys).

Second: MDBX did not tolerate the moment when there was more data than memory was available.

Fig. 4.3. Dependence of the current speed (IOPS) on the number of keys (abscissa axis - millions of keys).

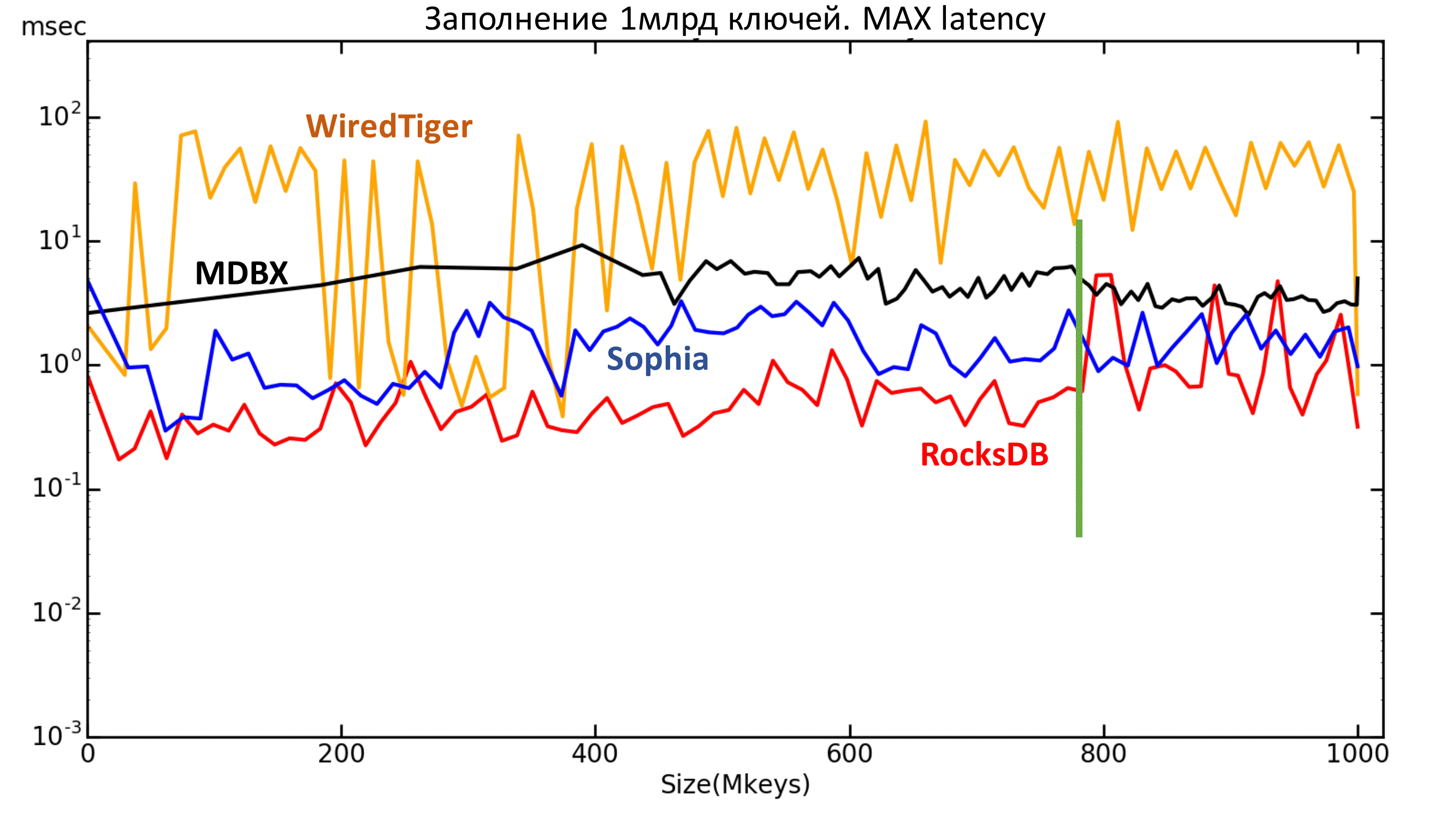

Next, you can look at the graph of the maximum delay. It also shows that RocksDB started crashes after 800 million keys.

Fig. 5 Maximum latency

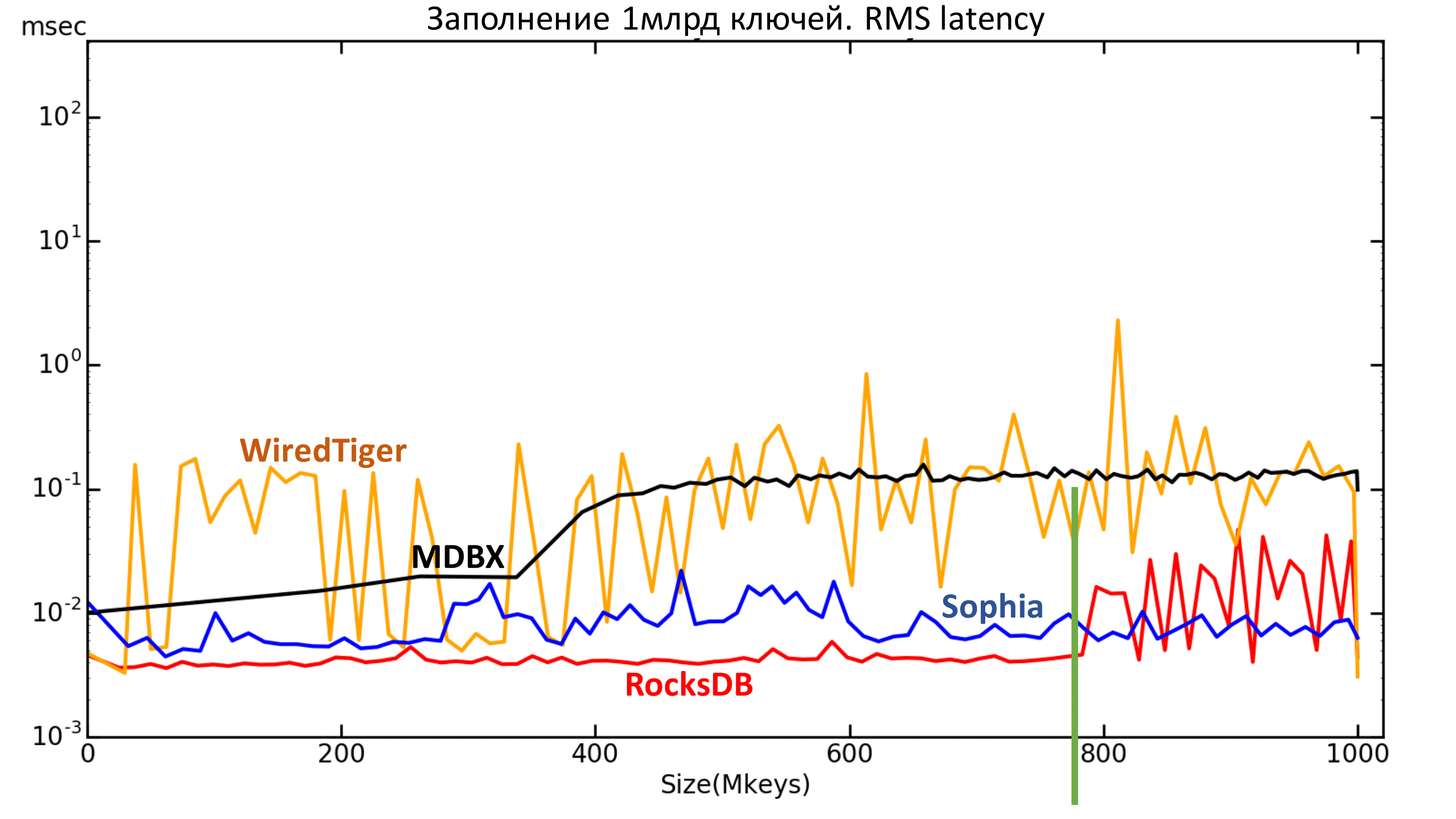

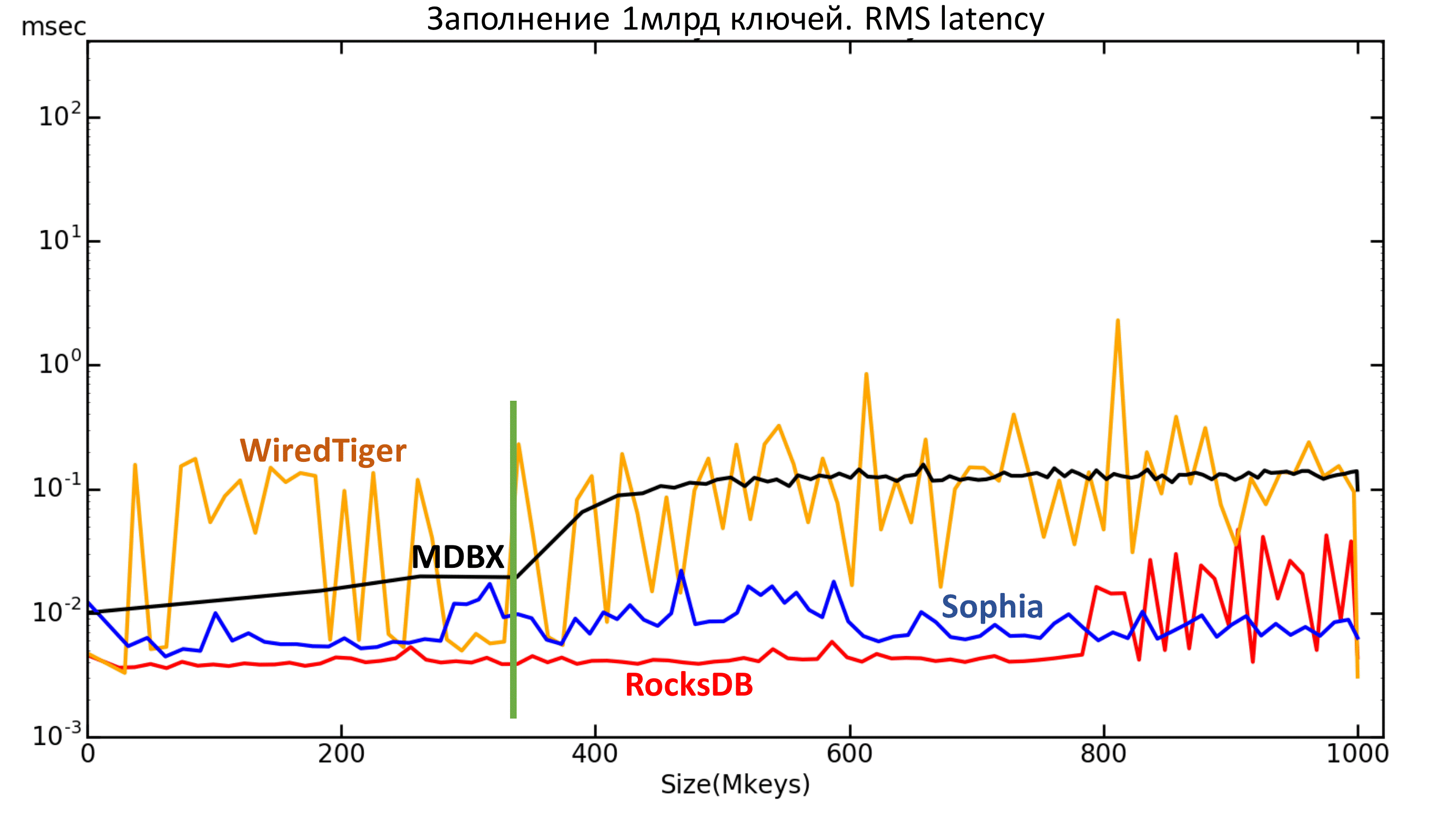

Below is a graph of the rms latency. Here you can also see the very borders for RocksDB and MDBX.

Fig. 6.1. RMS Latency

Fig. 6.2. RMS Latency

Tests

Unfortunately, Sophia performed poorly in all tests. Most likely, she doesn’t “love” a lot of threads (ie 32 or more).

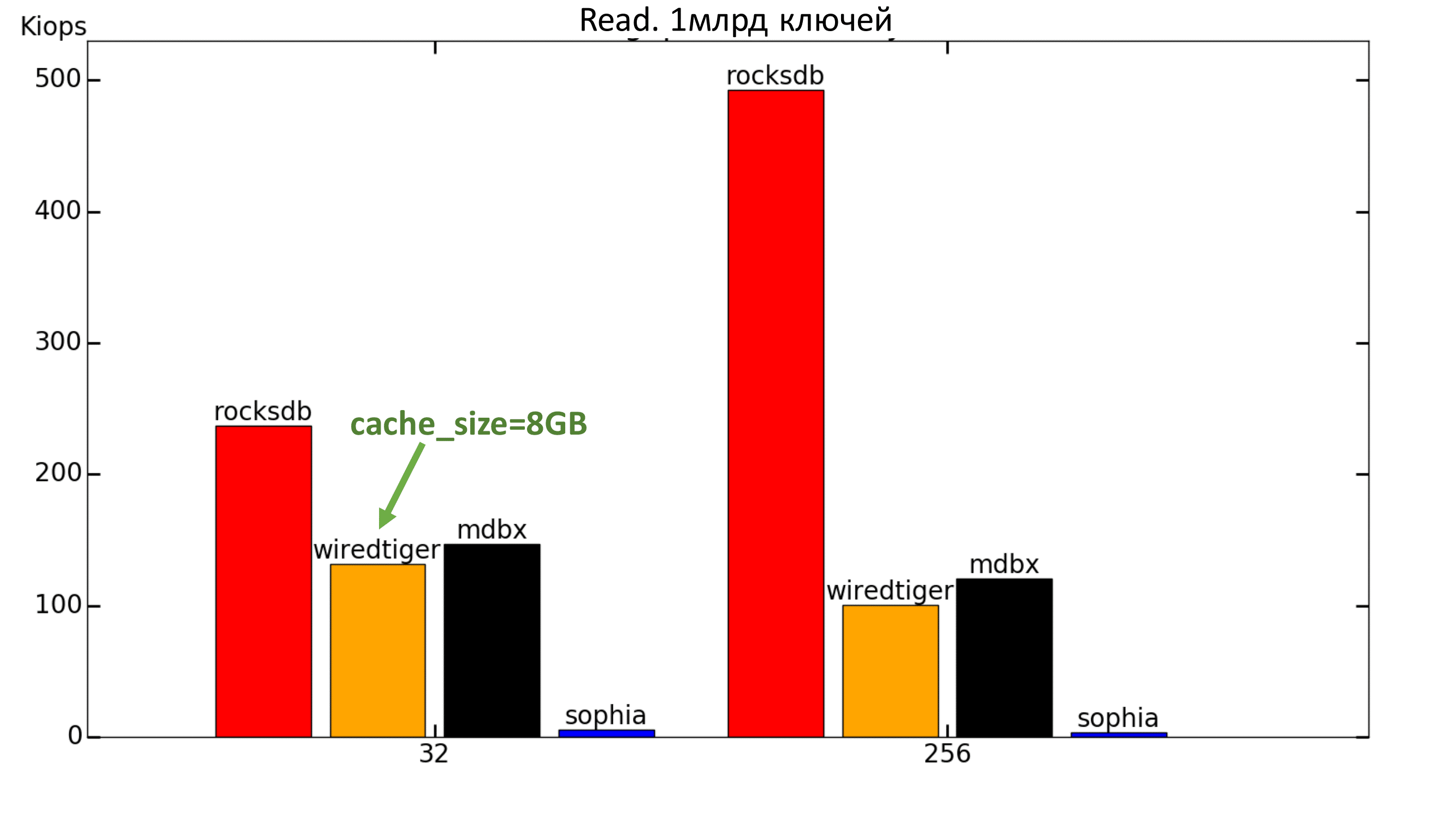

WiredTiger initially showed very low performance at 30 IOPS. It turned out that he has an important cache_size parameter, which is set to 500MB by default. After installing it on 8GB (or even 4GB) everything becomes much better.

For the sake of interest, a test was conducted with the same amount of data, but with available memory> 100GB. In this case, MDBX goes ahead in the read test.

Fig. 7. 100% Read

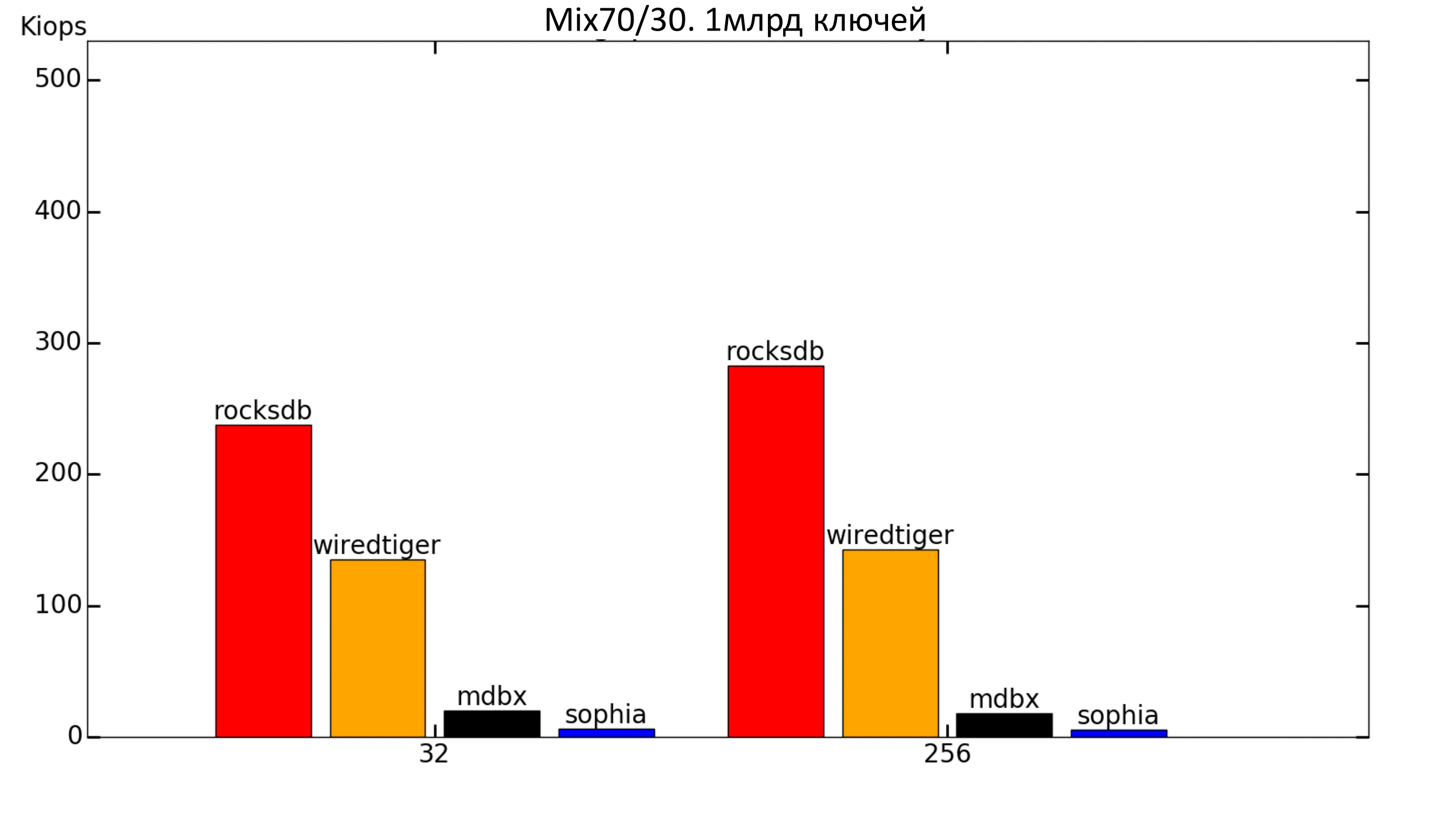

When adding a record to workload, we get a strong drop in MDBX (which is expected, because when filling, the speed was low). WiredTiger has grown, and RocksDB has reduced speed.

Fig. 8. Mix 70% / 30%

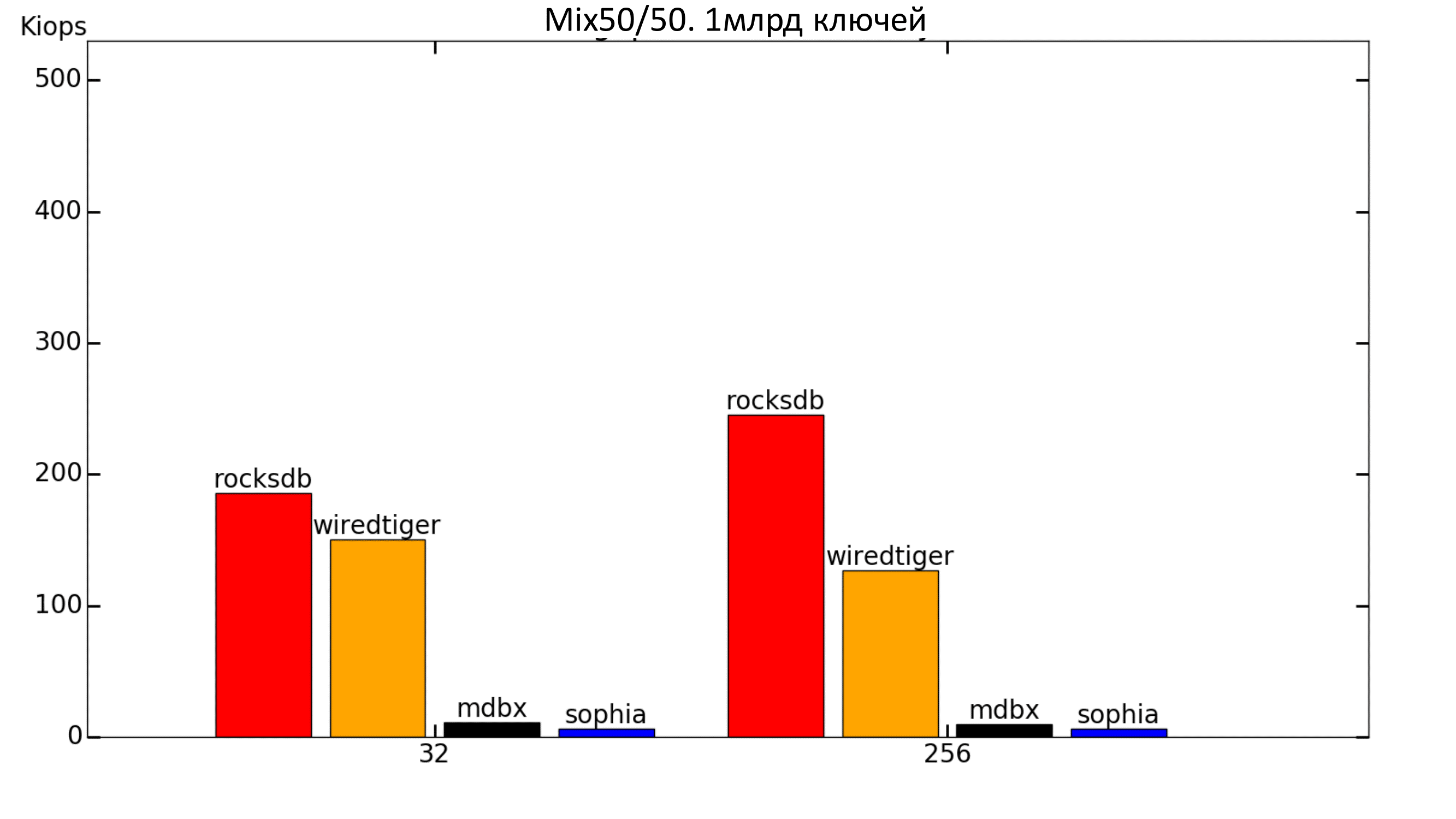

The trend has continued.

Fig. 9. Mix 50% / 50%

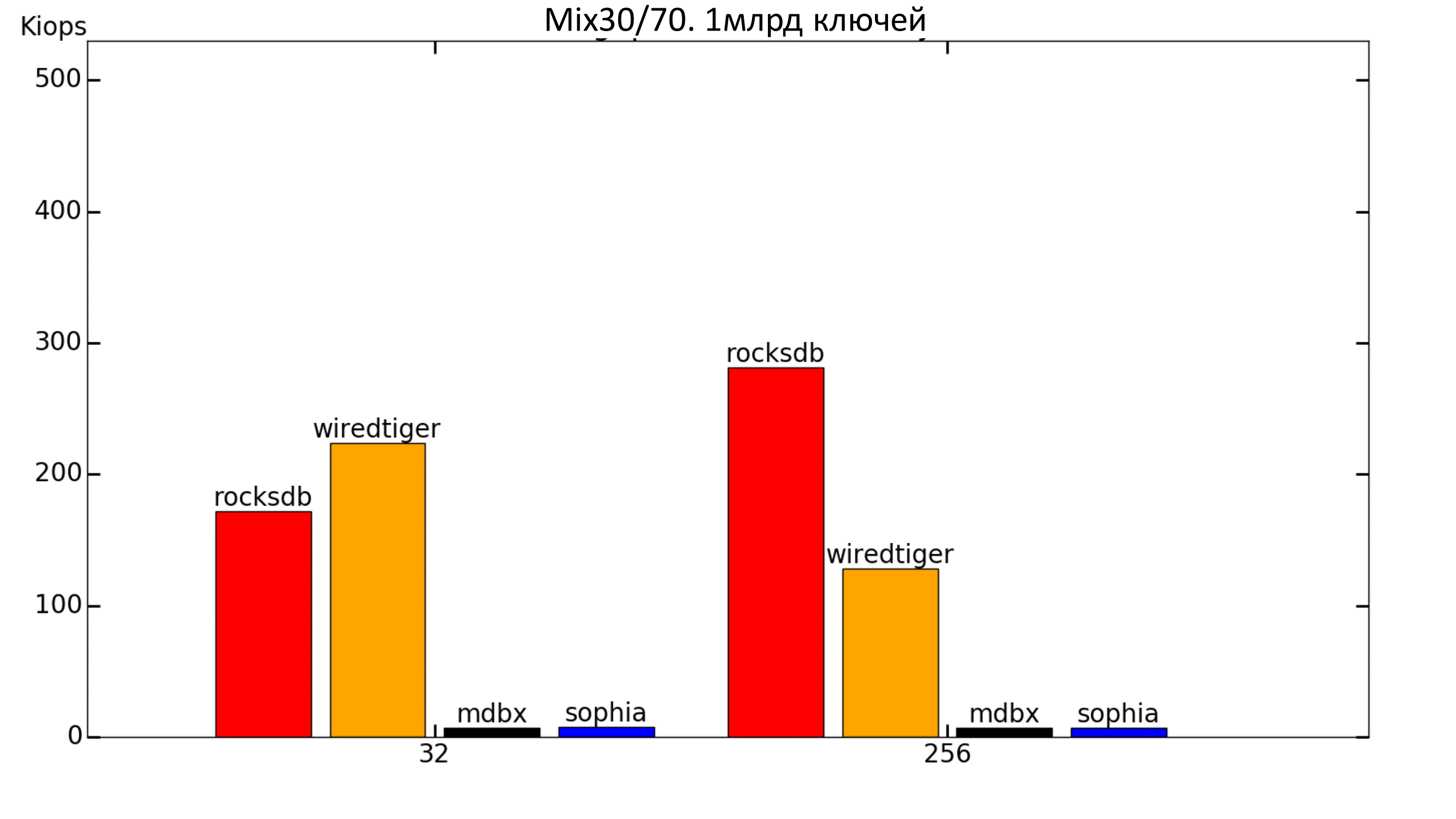

When the recording becomes quite a lot, WiredTiger begins to overtake RocksDB on a small number of streams.

Fig. 10. Mix 30% / 70%

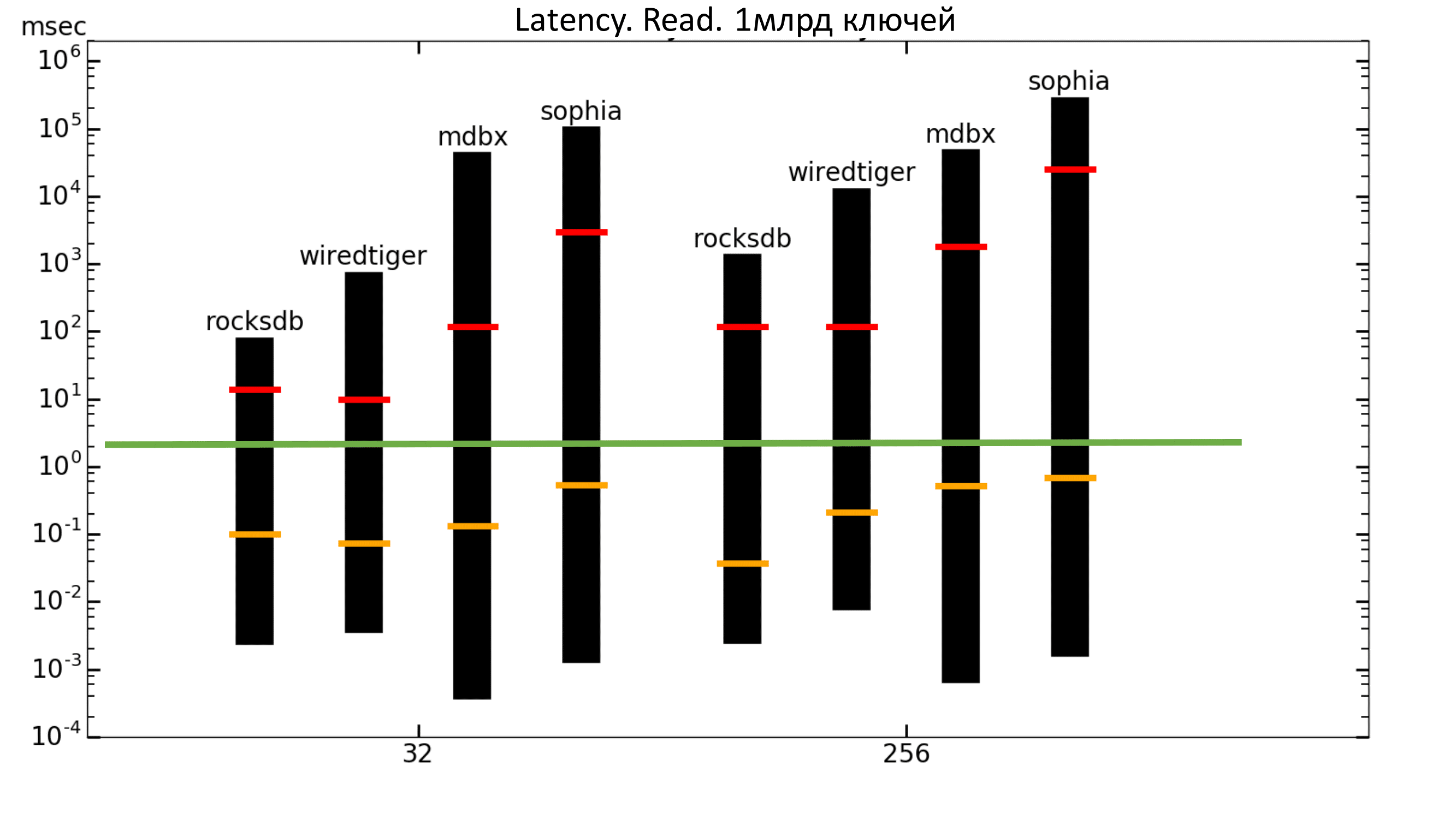

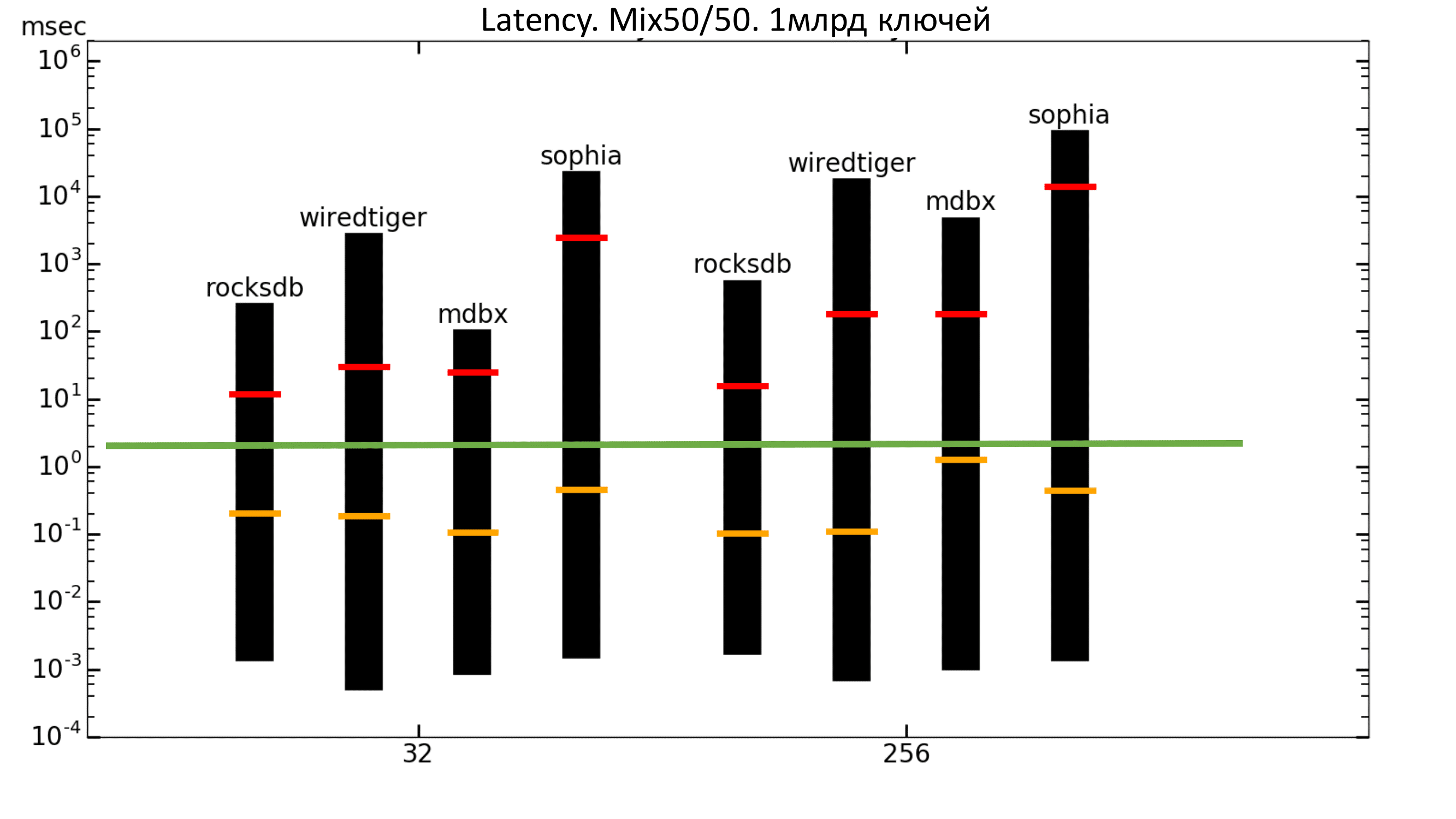

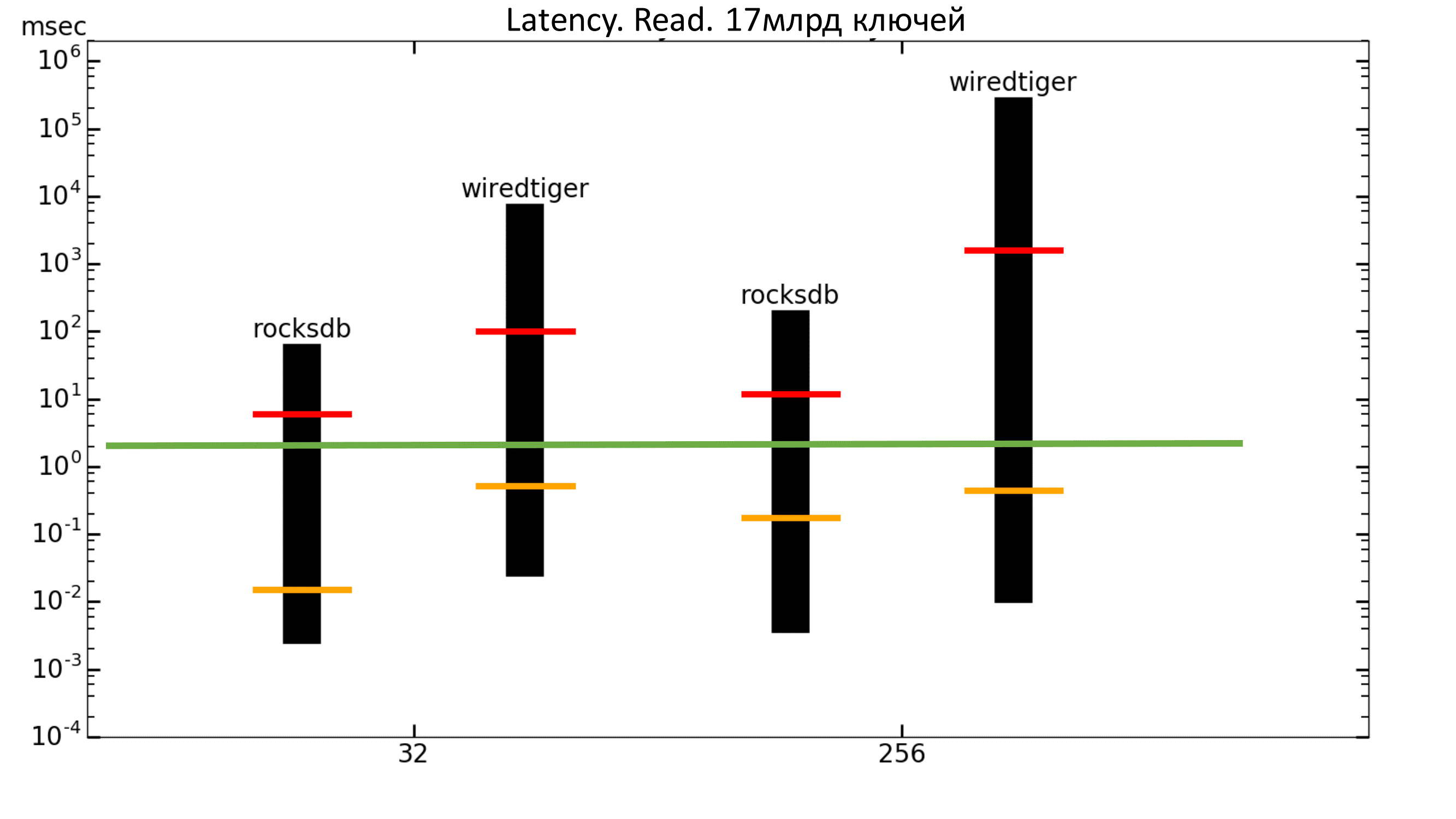

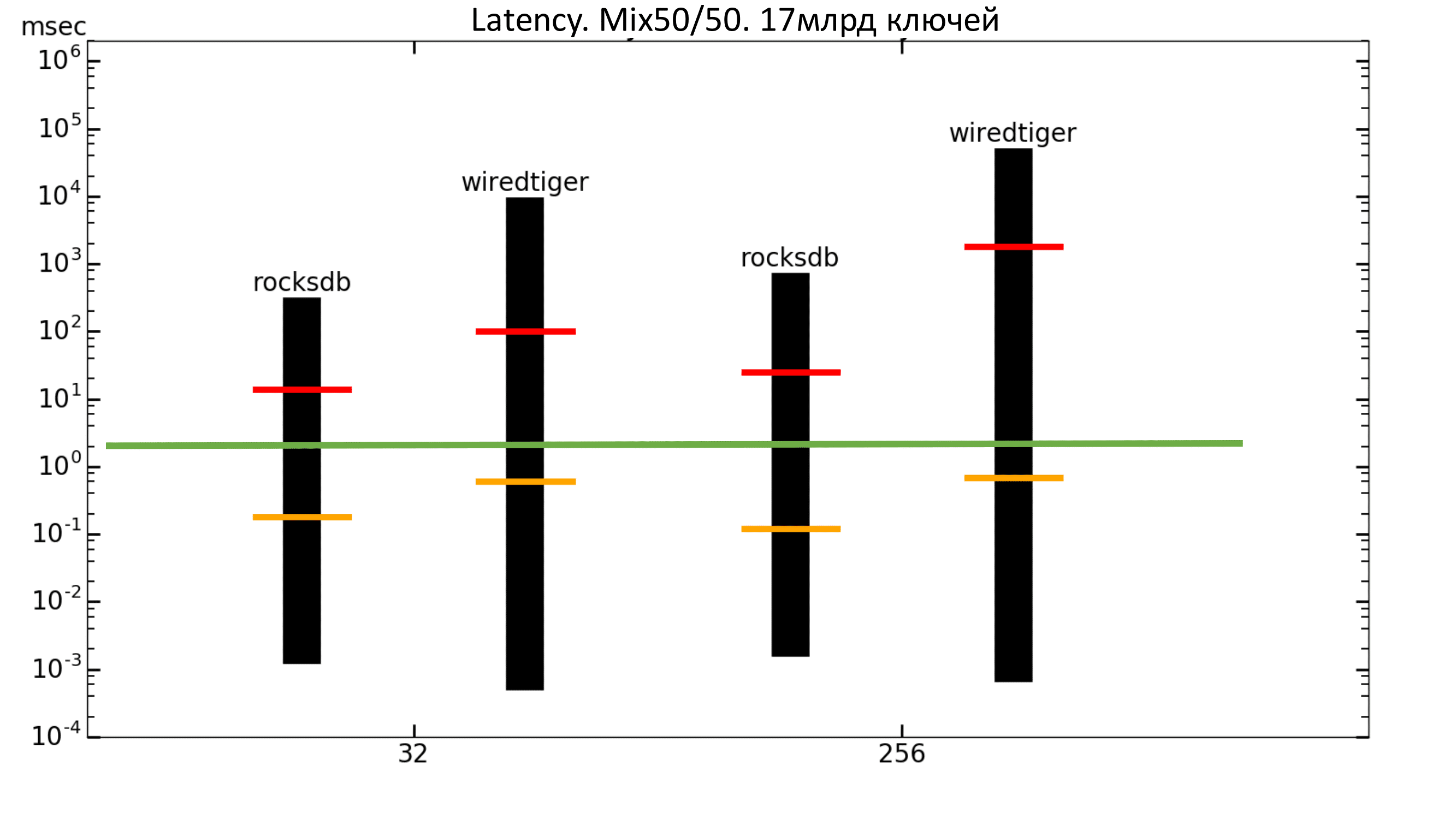

Now you can look at the delay charts. The columns show the minimum and maximum delays, the orange bar is the root-mean-square delay, and the red bar is the 99.99 percentile.

The green bar is about 2 ms. That is, we want the percentile to be no higher than the green bar. In this case, we do not get this (the logarithmic scale).

Fig. 11. Latency Read

Fig. 12. Latency 50% / 50%

Test results. 17 billion keys

Filling out

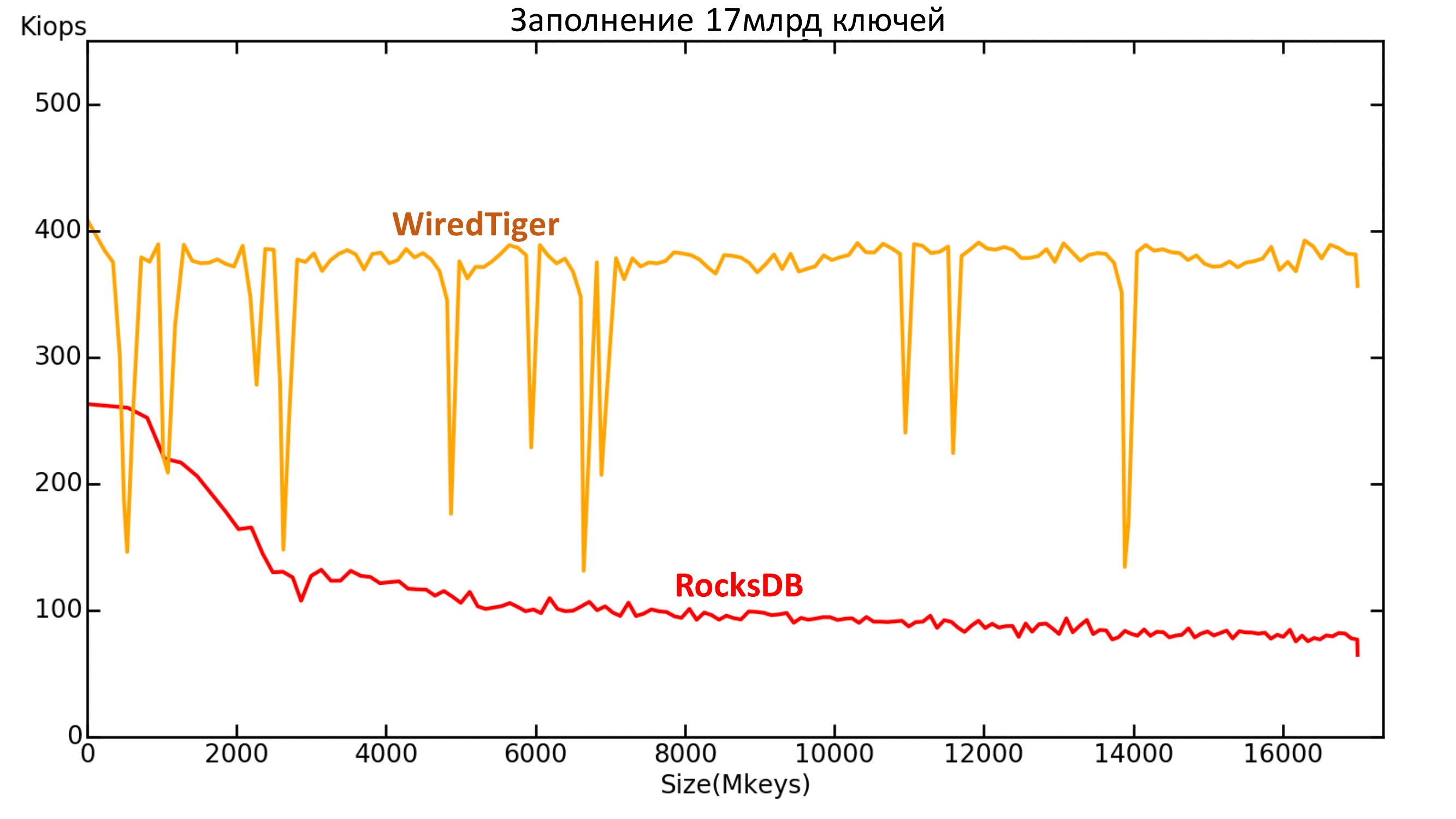

Tests for 17 billion keys were conducted only on RocksDB and WiredTiger, because they were leaders in tests for 1 billion keys.

WiredTiger started having strange attacks, but on the whole it shows itself quite well on filling, plus there is no degradation with an increase in data volume.

But RocksDB eventually went below 100k IOPS. Thus, in the test for 1 billion keys, we did not see the whole picture, so it is important to conduct tests on volumes comparable to real ones!

Fig. 13. Productivity 17 billion keys The

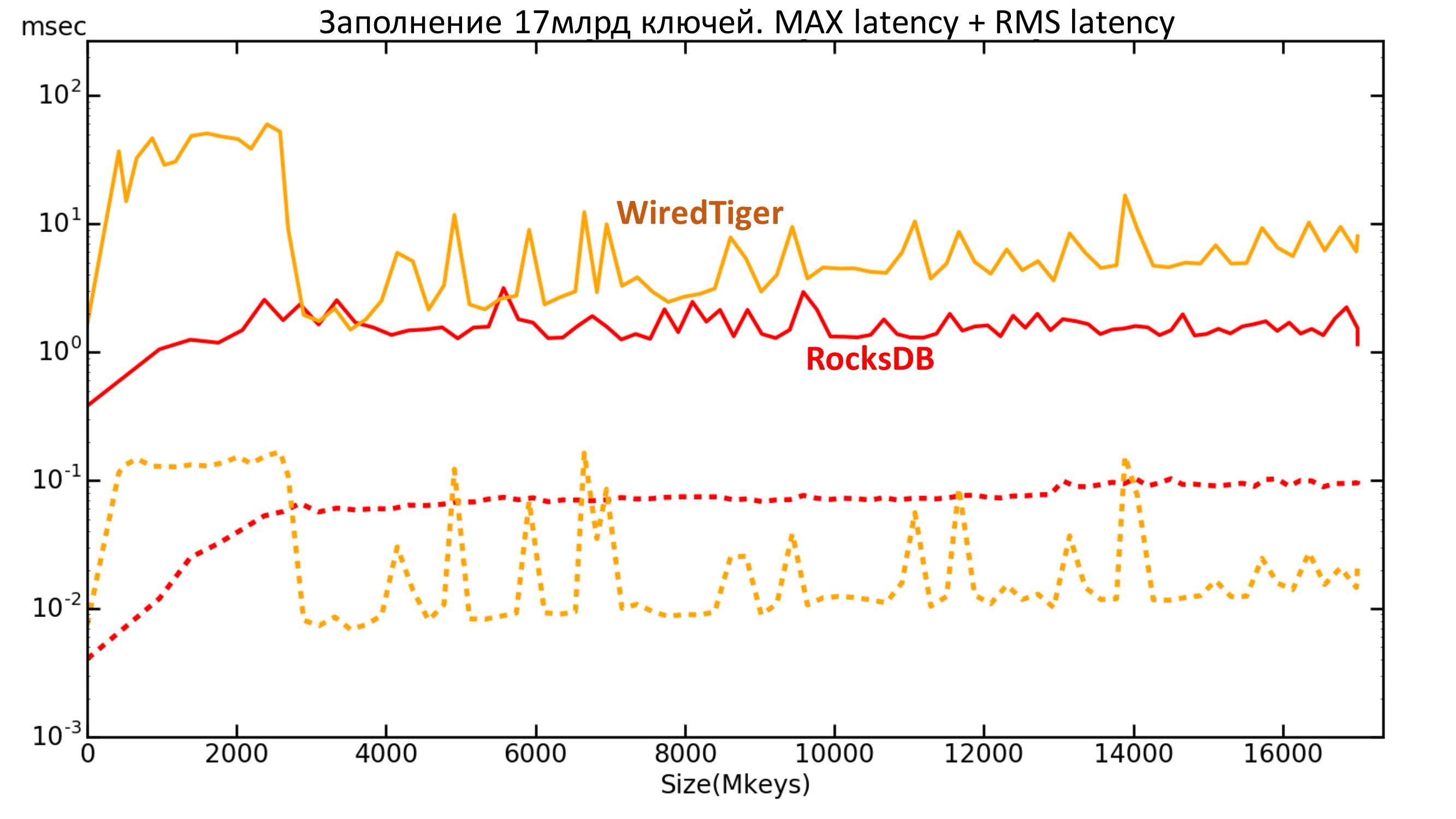

dotted line shows the mean square delay. It can be seen that the maximum delay of WiredTiger is higher, and the mean square is lower than that of RocksDB.

Fig. 14. Latency 17 billion keys

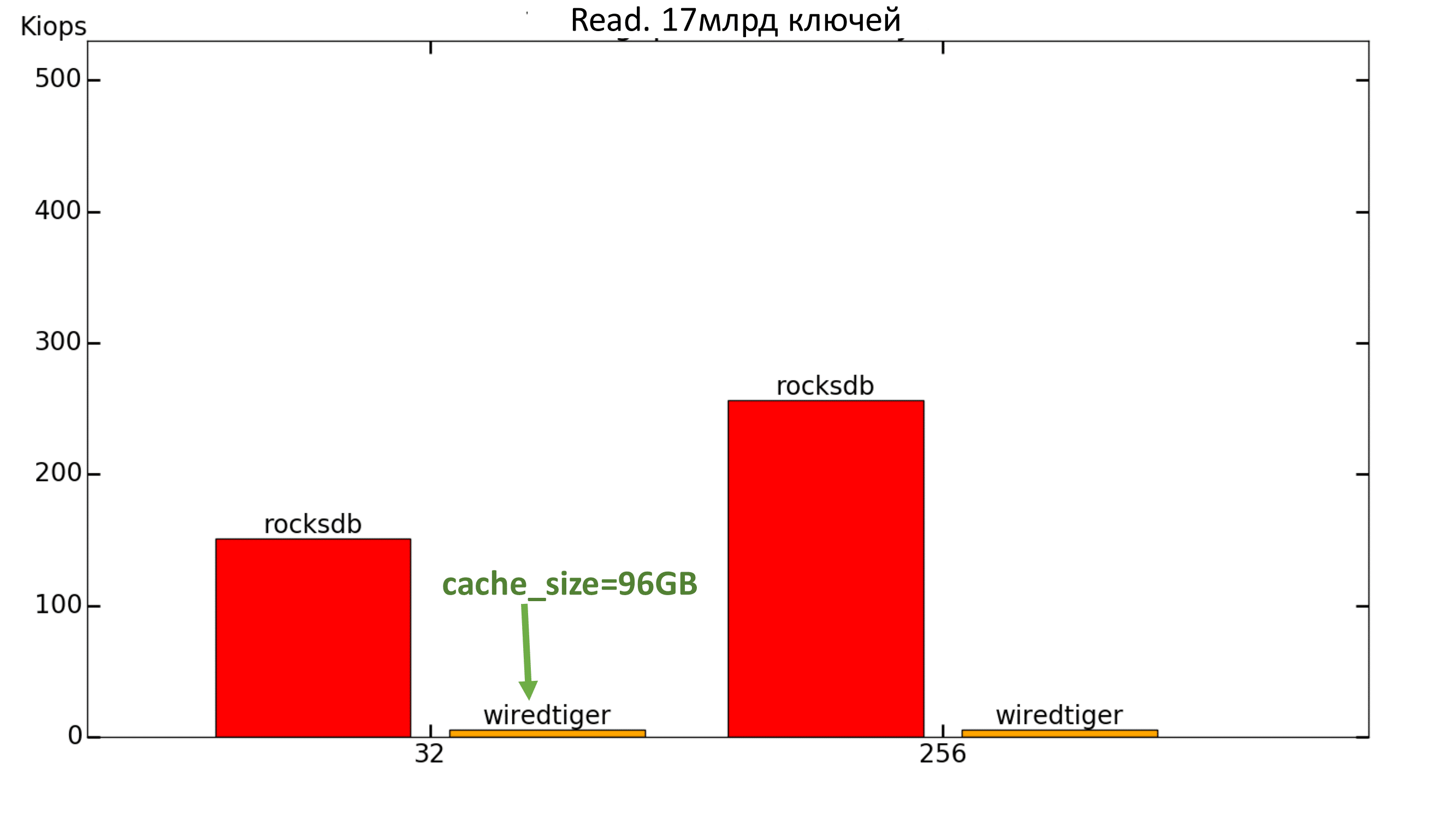

Tests

The same trouble happened with WiredTiger as last time - it showed about 30 IOPS for reading, even with cache_size = 8GB. It was decided to further increase the value of the cache_size parameter, but this did not help either: even with 96GB the speed did not rise above several thousand IOPS, although the allocated memory was not even full.

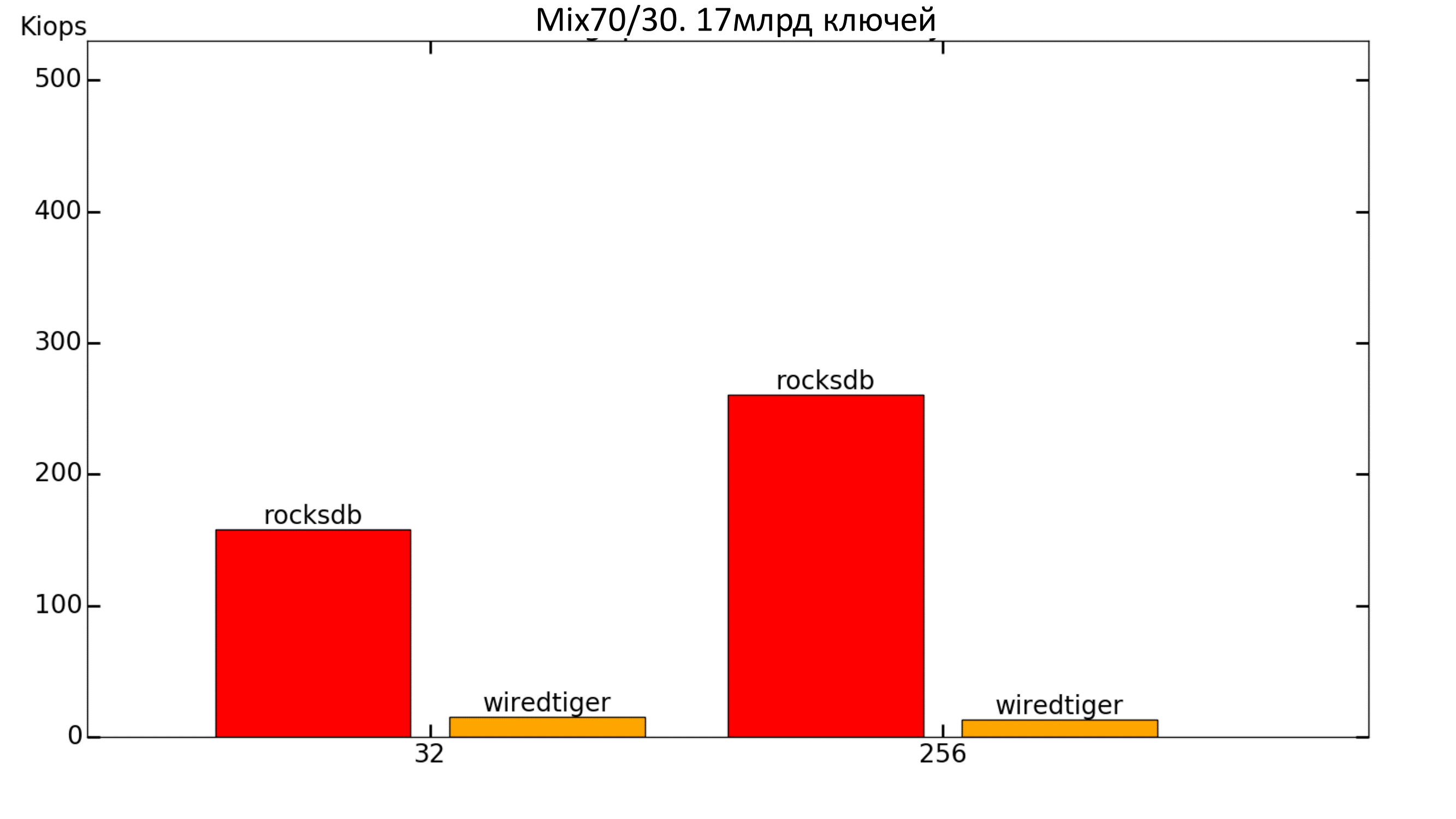

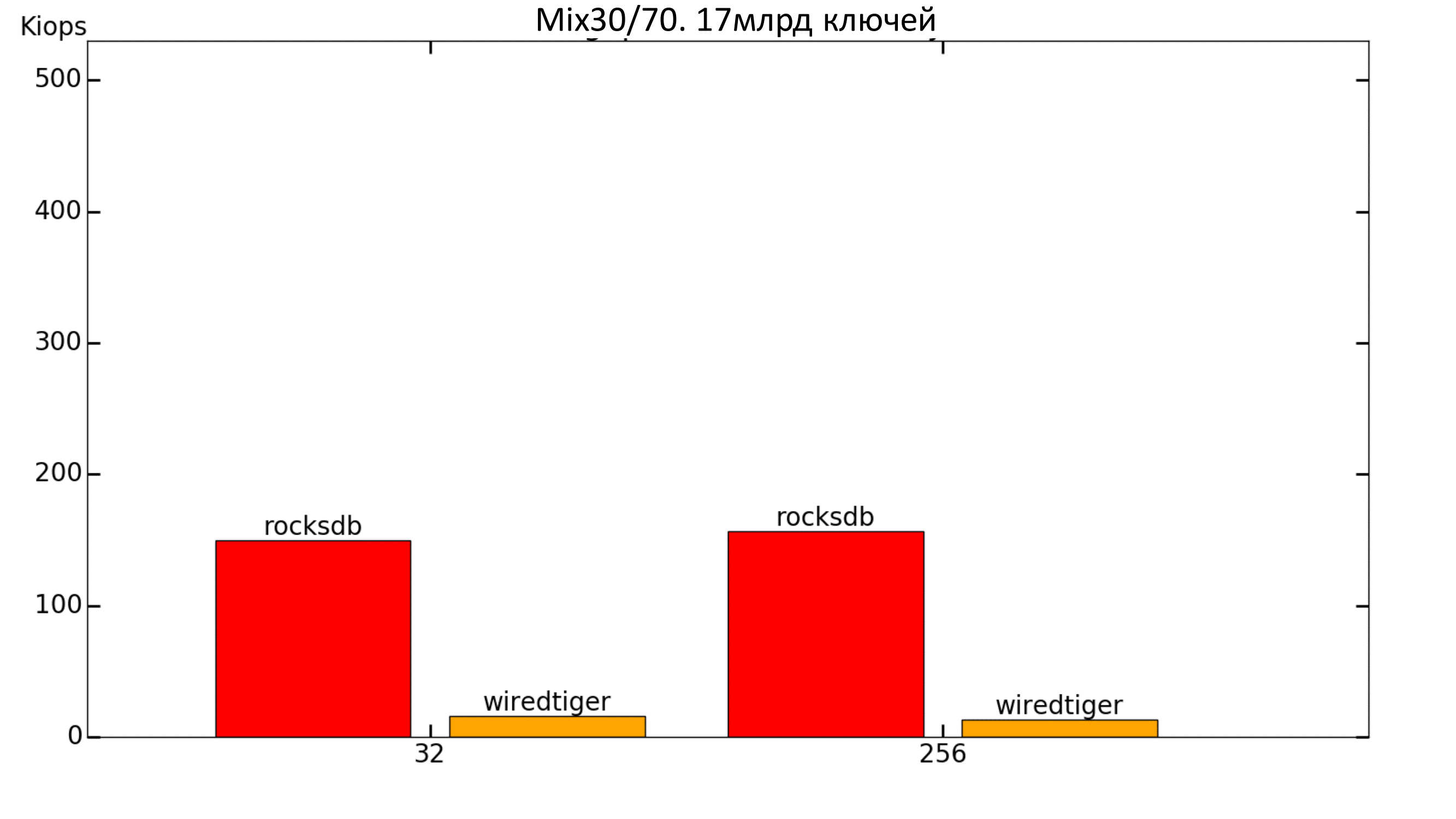

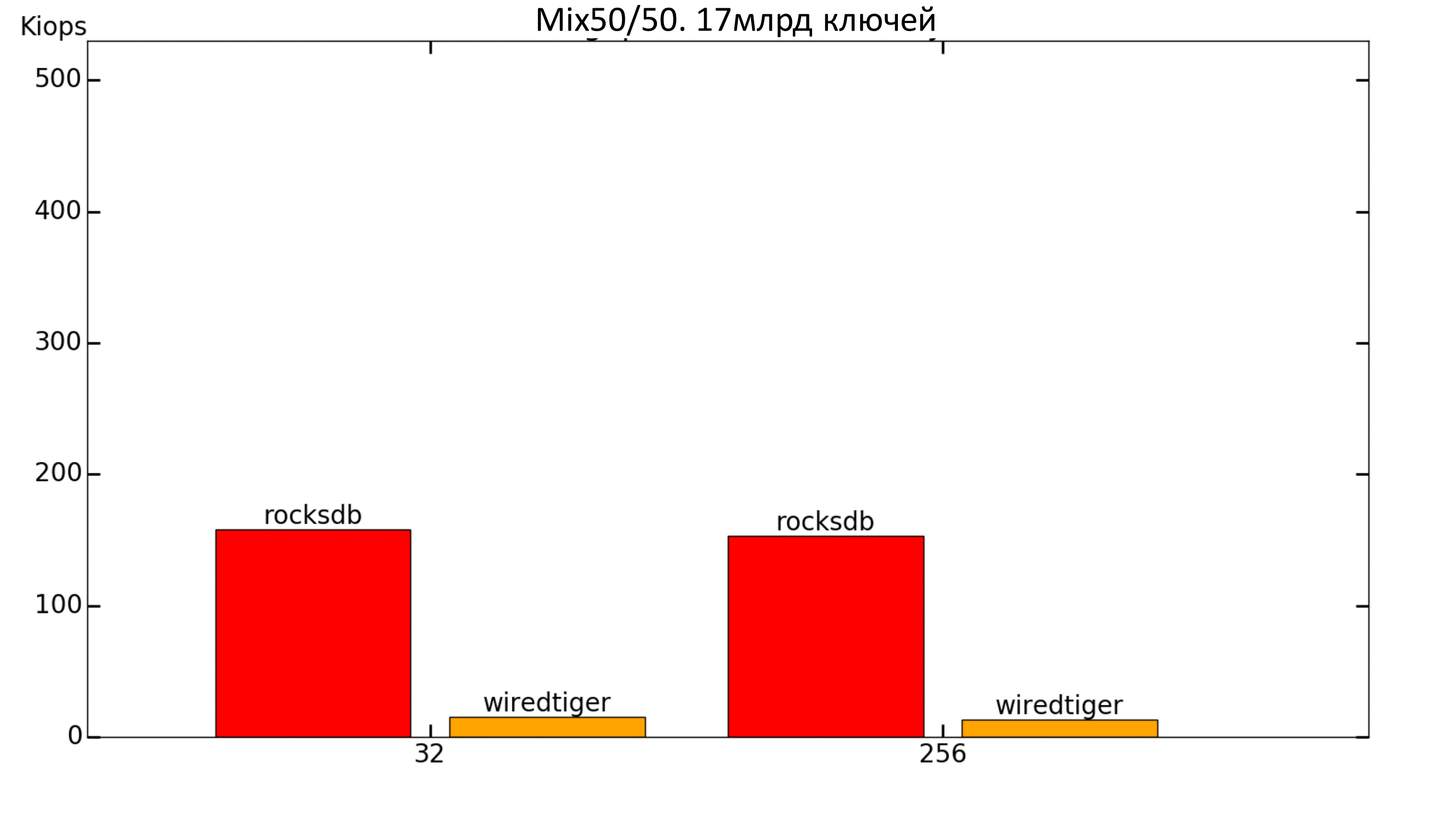

When adding a record to a workload, WiredTiger traditionally rises.

Fig. 15. Productivity 100% Read

Fig. 16. Productivity 70% / 30%

Fig. 17. Productivity 30% / 70%

Fig. 18. Productivity 50% / 50%

Fig. 19. Latency 100% Read

Fig. 20. Latency 50% / 50%

conclusions

From what has been said above, the following conclusions can be drawn:

For a base of 1 billion keys:

- Recording + few streams => WiredTiger

- Write + many threads => RocksDB

- Read + DATA> RAM => RocksDB

- Read + DATA <RAM => MDBX

From which it follows that:

- Mix50 / 50 + many streams + DATA> RAM => RocksDB

For a base of 17 billion keys: RocksDB has a clear leadership .

This is the situation with embedded engines. In the next article, we will talk about the performance of selected key-value databases and draw conclusions about benchmarks.