Automation of development processes: how we at Positive Technologies implemented DevOps ideas

Hi, Habr! In this article I'll explain how we at Positive Technologies created and grew a development automation department, how we put DevOps ideas into development practice, which tools and technologies we used, and how we organized our CI/CD processes. I will also share our successes and plans for the future.

Who we are and what we do

Positive Technologies is a multi-product information security vendor. Our products are delivered in the traditional way (as installed software), as SaaS, as virtual machines and as bare-metal appliances. Our department, informally called the DevOps department, automates and supports the development processes of all our products: the XSpider information security scanner, the MaxPatrol 8 security and compliance monitoring system, the MaxPatrol SIEM solution for managing security events, assets and incidents, the PT ISIM cybersecurity incident management system for industrial control systems, the PT Application Firewall application-level firewall, the PT Application Inspector code analyzer, the PT MultiScanner multi-threaded malware detection system, and all the company's other products.

We believe that how DevOps principles are applied, and what the corresponding automation departments are responsible for, depends heavily on the specifics of a particular company. In our case, those specifics oblige us to deliver a boxed product to end customers and to support several versions of each product at the same time.

At the moment, our department is responsible for the entire pipeline for serial production of the company's products and for the related infrastructure: from building individual product components and integration builds, through sending them for testing on our servers, to delivering updates to the geographically distributed infrastructure of update servers and then to customer infrastructure. Despite the large volume of tasks, only 16 people handle them (10 work on Continuous Integration tasks and 6 on Continuous Delivery tasks), while more than 400 people are involved in product development.

The key principle for us is “DevOps as a Service”. DevOps has long been established in modern IT as an independent engineering discipline, very dynamic and technologically advanced, and we must bring these technologies to our developers and testers quickly, making their work more comfortable, efficient and productive. We also develop internal support tools, regulate and automate development processes, preserve and spread technological knowledge within the company, run technical workshops and do many other useful things.

Our first steps in developing DevOps ideas in the company are described in the article “Mission is feasible: how to develop DevOps in a company with many projects”.

Continuous Integration and Continuous Delivery Model

When we at Positive Technologies decided to develop DevOps ideas, we had to implement them while dozens of teams were working simultaneously on projects, both public and non-public. The projects differed greatly in size, release model and technology stack. We came to understand that centralized solutions and a high level of organization of development automation work better than the “creative chaos” of conventional development. In addition, any automation is meant to reduce the cost of developing and rolling out a product. In companies with a dedicated service for implementing DevOps practices, every tool that is introduced is made reusable at scale, so developers can work more efficiently with ready-made automation templates.

Continuous Integration is just one of the processes connecting developers and end users of products. The Continuous Integration infrastructure at Positive Technologies developed around a bundle of three basic services:

- TeamCity - the main Continuous Integration system;

- GitLab - the source code storage system;

- Artifactory - a system for storing built binary versions of components and products.

Historically, we chose GitLab, TeamCity and Artifactory to store code, run builds and store build artifacts. We started using GitLab long ago because it was more convenient than svn, which we had before; its main advantage was that it made merging changes easier. As for Artifactory, there is no serious alternative for storing binaries (components and installers). You could use file shares or MS SharePoint, but that is an option for those who are not looking for easy ways rather than for automation. We switched to TeamCity after a long period of running builds on MS TFS. The move was tested for a long time and piloted on small products, and only then carried out in full. The main reason was the need for simple standard solutions and for a scalable build and test infrastructure for multi-component products written in various programming languages. TFS could not give us this.

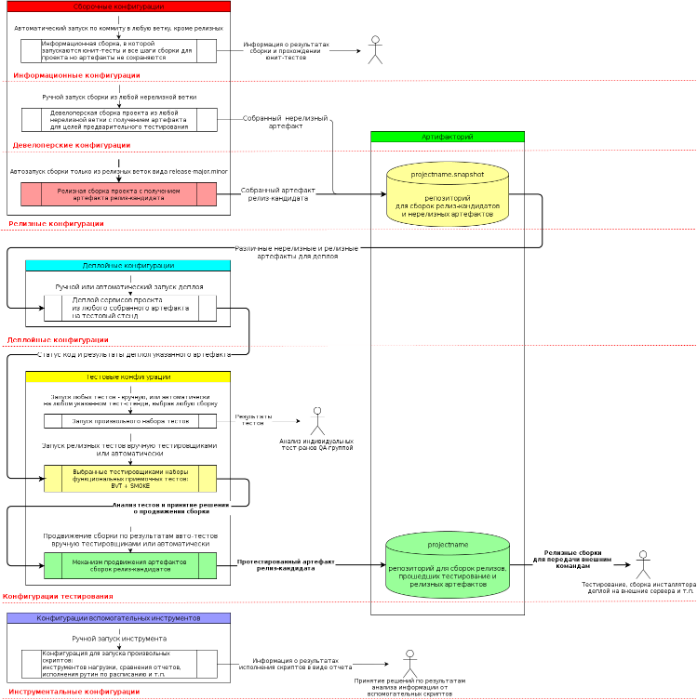

We paid special attention to developing standard projects for the continuous integration system. This let us unify projects around what we call the release build scheme with promotions in TeamCity. All projects look the same: they contain build configurations whose artifacts go into Artifactory, from where they can be taken for deployment, testing and promotion to the project's release repository (see the diagram in Figure 1). We described the terminology and the organization of a typical CI process in more detail in the article “DevOps best practices: recommendations for organizing configurations in TeamCity”.

Figure 1. The basic elements of a typical project for the release build scheme with promotions

We have been developing our Continuous Integration system for more than two years. By now, in addition to the standard configurations for building, deploying, testing and promoting builds, we have supplemented it with our own Continuous Delivery system, SupplyLab. It publishes tested release builds on Global Update Servers (GUS), from where they are distributed through Front Update Servers (FUS) all the way to the customer infrastructure, where they are deployed and updated.

As noted above, all the solutions provided by our DevOps team are standard, scalable and built on templates. Although the teams' requirements for infrastructure, programming languages and build algorithms differ, the general concept of the CI/CD process stays the same (see the diagram in Figure 2):

commit to git - automatic build - deployment to test servers - functional and other kinds of automated testing - promotion of the build to stable - publication on GUS - distribution via FUS to the customer's infrastructure - installation or update of the product on specific servers.

At each of these stages, the DevOps department helps developers solve specific problems:

- provide code storage in GitLab (allocate a project for the team);

- develop a build configuration based on one of the standard templates and make sure the built artifacts are stored in Artifactory;

- implement configurations for deploying artifacts to the testers' servers and help them implement test configurations;

- if testing succeeds, “promote the build”, that is, move it to the store of tested artifacts in Artifactory; a special configuration is created for this as well;

- after that, the build can be published on the company's Global Update server, from where it is automatically distributed to customers' FUS servers;

- using installation scripts based on SaltStack, the build is deployed to a specific server.

We watch each stage through a monitoring system. If something fails, developers can file a request with our service, and we will try to localize the problem and fix it.
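The stage chain above lends itself to a simple model: run the stages in order and stop at the first failure, so that a build which fails auto-testing is never promoted or published. The sketch below is only an illustration in Python. The stage names mirror the chain described in this article, while `run_pipeline`, `example_stages` and the callables are hypothetical stand-ins, not part of TeamCity, Artifactory or SupplyLab.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
# The callables here are hypothetical stand-ins for real CI/CD actions.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed: List[str] = []
    for name, action in stages:
        if not action():
            break  # later stages (promotion, publication) must not run
        completed.append(name)
    return completed


def example_stages(tests_pass: bool) -> List[Stage]:
    """Build the stage chain from the article with a configurable test result."""
    return [
        ("commit", lambda: True),
        ("build", lambda: True),
        ("deploy_to_test", lambda: True),
        ("autotests", lambda: tests_pass),
        ("promote_to_stable", lambda: True),
        ("publish_to_gus", lambda: True),
        ("distribute_via_fus", lambda: True),
        ("install_or_update", lambda: True),
    ]
```

Calling `run_pipeline(example_stages(False))` returns only the stages before auto-testing, which is the property the promotion scheme relies on: nothing untested reaches the stable repository or the update servers.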

Figure 2. The top-level IDEF0 model of Continuous Integration and Continuous Delivery processes at Positive Technologies

For more details on how we built and developed our CI/CD system as of early 2017, see the article “Personal Experience: How Our Continuous Integration System Looks”.

A bit about monitoring

Our team has dedicated people responsible for monitoring: from the request to connect a server or service to monitoring, all the way to the final polished dashboard (graphs, metrics, alerting) handed over to the teams. This group's responsibilities are: setting up and providing monitoring as a service, providing standard templates for monitoring servers and services of various types, designing a hierarchical monitoring system, and forecasting resource shortages.

We use the Zabbix monitoring system. To simplify putting services under monitoring, we split the areas of responsibility between the DevOps team, developers and testers, wrote our own tools for interacting with Zabbix (zabbixtools), and adopted the monitoring as code approach. For example, if a test bench needs to be monitored, the tester assigns it a specific role. Each host has exactly one role, which can contain several profiles for monitoring processes, services, APIs and so on; the DevOps team provides these profiles (see the relationship between Zabbix entities in Figure 3).

Figure 3. Entity relationship model in Zabbix
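The host, role and profile relationship can be written down as a small data model. This is a minimal sketch under our own naming: the `Profile`, `Role` and `Inventory` classes and the example checks are hypothetical illustrations of the rule "one role per host, several profiles per role", not the actual zabbixtools data structures.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Profile:
    """A reusable set of checks, provided by the DevOps team."""
    name: str
    checks: Tuple[str, ...]


@dataclass
class Role:
    """A role groups several profiles; a tester assigns it to a host."""
    name: str
    profiles: List[Profile] = field(default_factory=list)


class Inventory:
    """Maps each host to exactly one role."""

    def __init__(self) -> None:
        self._role_by_host: Dict[str, Role] = {}

    def assign(self, host: str, role: Role) -> None:
        # Enforce the "only one role per host" rule.
        if host in self._role_by_host:
            current = self._role_by_host[host].name
            raise ValueError(f"{host} already has role {current}")
        self._role_by_host[host] = role

    def checks_for(self, host: str) -> List[str]:
        """Flatten the checks of every profile in the host's role."""
        role = self._role_by_host[host]
        return [check for profile in role.profiles for check in profile.checks]
```

With this split, a tester only picks a role for a test bench, and everything that is actually checked on that host follows from the profiles attached to the role.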

To simplify configuration, we built a system in which Zabbix takes as much data as possible about the monitored indicators from the target server itself. To do this, zabbixtools uses the Low Level Discovery (LLD) functionality. This lets you make any monitoring settings on the monitored server itself rather than in the Zabbix admin panel. The settings files are stored and updated through git.
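Zabbix low-level discovery expects a JSON document listing the discovered entities, each keyed by macros of the form `{#NAME}`. Below is a minimal sketch of how a host-side script could turn a local, git-tracked settings list into such a document. The macro names, the settings layout and the `build_lld_payload` function are assumptions made for illustration; this is not the actual zabbixtools code or file format.

```python
import json
from typing import Dict, List, Union


def build_lld_payload(services: List[Dict[str, Union[str, int]]]) -> str:
    """Render locally declared services as Zabbix low-level discovery JSON.

    Each discovered entity is an object whose keys are LLD macros of the
    form {#NAME}. The macro names used here are illustrative assumptions.
    """
    data = [
        {
            "{#SERVICE_NAME}": str(service["name"]),
            "{#SERVICE_PORT}": str(service["port"]),
        }
        for service in services
    ]
    return json.dumps({"data": data}, sort_keys=True)


# Hypothetical settings that would live in a git-tracked file on the host.
LOCAL_SETTINGS = [
    {"name": "nginx", "port": 443},
    {"name": "postgres", "port": 5432},
]
```

A discovery rule on the Zabbix side would then create items and triggers from prototypes for every entry, so adding a service to the settings file on the host is enough to put it under monitoring.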

You can read about the implementation of the monitoring system in the article “Zabbix for DevOps: how we implemented the monitoring system in the development and testing processes”.

Shortcomings of our CI/CD solutions

We record all problems and shortcomings found in the elements of our CI/CD systems in our DevOps knowledge base. It is hard to summarize the problems of every service within one article, so I will note only the most telling ones, using the TeamCity CI system as an example.

At the moment, all build configurations in TeamCity are maintained solely by our department. We change them at the developers' request, so that configurations do not break because someone is unfamiliar with our templates and tools. Our team also controls the environment on the build servers, the TeamCity agents. Developers have no access to them: we restricted it to prevent unauthorized changes on the servers and to make it easier to localize problems and debug builds. Since configurations and environments are guaranteed not to change arbitrarily, a problem can only be in the code or in the network. The downside is that we cannot let developers debug the build process on TeamCity or configure the environment on a build agent by themselves.

Thus, the first problem is that we cannot delegate the build process to the teams using the “build as code” approach. TeamCity has no easy-to-use DSL (a domain-specific language for describing builds). We would like the build description to be stored in the repository next to the product code, as in Travis CI, for example. We are now solving this problem by developing our own build system, CrossBuilder. The plan is that it will let developers write a simple description of the build, store and modify it in their own project, and stay independent of the CI system in use by implementing the build through a plug-in system. In addition, it will allow builds to be run locally through a standalone client.
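To make the “build as code” idea concrete, here is a rough sketch in Python of one build description rendered for different execution targets through a plug-in registry. Everything in it is hypothetical: the `BUILD_SPEC` layout, the `renderer` decorator and the two renderers are our own illustration and do not reflect the actual CrossBuilder format or API. The only external convention used is TeamCity's `blockOpened` / `blockClosed` service messages, which fold build log output.

```python
from typing import Callable, Dict, List

# Hypothetical build description that would be stored next to the product code.
BUILD_SPEC = {
    "name": "example-component",
    "steps": ["python -m pytest", "python -m build"],
}

Renderer = Callable[[dict], List[str]]
RENDERERS: Dict[str, Renderer] = {}


def renderer(target: str) -> Callable[[Renderer], Renderer]:
    """Register a plug-in that renders a spec for one execution target."""
    def register(func: Renderer) -> Renderer:
        RENDERERS[target] = func
        return func
    return register


@renderer("local")
def render_local(spec: dict) -> List[str]:
    """Local runs execute the steps exactly as written."""
    return list(spec["steps"])


@renderer("teamcity")
def render_teamcity(spec: dict) -> List[str]:
    """Wrap each step in TeamCity service messages so the log is folded."""
    lines: List[str] = []
    for index, step in enumerate(spec["steps"], start=1):
        block = f"step-{index}"
        lines.append(f"echo \"##teamcity[blockOpened name='{block}']\"")
        lines.append(step)
        lines.append(f"echo \"##teamcity[blockClosed name='{block}']\"")
    return lines


def render(spec: dict, target: str) -> List[str]:
    """Produce the shell lines for the chosen target from one shared spec."""
    return RENDERERS[target](spec)
```

The point of the design is that the spec is the single source of truth kept in the team's repository: `render(BUILD_SPEC, "local")` gives a developer the same steps the CI server runs, and supporting another CI system means adding one more renderer rather than rewriting build configurations.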

The second problem, making changes to build environments, we solved for Linux the way many other engineers have: by moving to Docker images. The teams themselves are responsible for preparing the images, including storing the Dockerfiles. This allowed us to set up a pool of identical Linux build servers, on any of which any team's build can run in its own environment. For Windows, we are only starting to work out a similar scheme and are currently running experiments.
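The reason identical build servers become possible is that the environment travels with the image rather than with the agent. A minimal sketch of how an agent-side script might compose the container invocation is shown below. The function name, the `/src` mount point and the example image name are assumptions for illustration; only standard `docker run` options (`--rm`, `-v`, `-w`) are used.

```python
from typing import List


def docker_build_command(image: str, source_dir: str, build_cmd: str) -> List[str]:
    """Compose a `docker run` invocation that builds inside a team's image.

    The checkout is bind-mounted at /src (an arbitrary path chosen for this
    sketch) and the container is removed when the build finishes.
    """
    return [
        "docker", "run",
        "--rm",                        # do not keep the container afterwards
        "-v", f"{source_dir}:/src",    # mount the checked-out sources
        "-w", "/src",                  # run the build from the source root
        image,
        "sh", "-c", build_cmd,
    ]
```

On an agent with Docker installed, the resulting list can be passed to `subprocess.run(cmd, check=True)`. For example, `docker_build_command("registry.example.com/team-a/build:1", "/work/checkout", "make all")` runs team A's build in team A's image, and the same agent can run another team's build in a different image a minute later.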

Our toolkit

We have tried many different services and tools, and over time a certain set has taken shape. To solve our department's tasks we use: TeamCity and GitLab CI as CI systems, GitLab for storing code, Artifactory as a binary artifact repository and proxy repository, SaltStack for automating deployment scenarios, Docker for isolated build environments, SonarQube for code quality analysis, UpSource for team code reviews, TeamPass for secure storage of secrets, VMware as a virtualization platform, Zabbix for monitoring our entire infrastructure, TestRail for storing test results, Kubernetes for managing Linux containers, and some others.

For email and messaging, the corporate standard is Outlook / OWA and Skype for Business. In everything else we are not bound by regulations, so everyone uses what is convenient for them: Chrome, Thunderbird (Mozilla), Opera; the file managers Total Commander and FAR, ConEmu and a regular shell; the editors MS Word, Notepad++ and, of course, vim; the ssh clients PuTTY and WinSCP; sniffers and traffic analyzers (tcpdump, windump, wireshark, burpsuite, etc.); among IDEs we love PyCharm, Visual Studio and WebStorm; for virtualization we use vSphere Client and VirtualBox.

Following our company's developers, we automate processes mainly on Linux (Debian, Ubuntu) and Windows (2003-2016). Accordingly, our language expertise matches that of the developers: Python, C#, batch / bash. For developing standard modules, such as meta-scripts for TeamCity, we have a regulation that clearly describes every development step: from the naming of scripts and methods and the code style to the rules for preparing unit tests for a new module and functional testing of the whole devops-tools build (our internal automation scripts). When developing scripts we follow the standard git-flow model with release and feature builds. Before branches are merged, the code in them must pass code review: automatic, using SonarQube, and manual, by colleagues.

Open DevOps Community on GitHub

We do not see any particular advantage in building CI/CD processes on open-source solutions. There is a lot of debate on this topic, and each time you have to choose which is better: a boxed solution tailored to specific purposes, or an “as is” solution that you will have to finish and maintain yourself but that offers ample room for customization. Nevertheless, at the end of our Op! DevOps! 2016 meetup last year we announced that we had been allowed to release part of the code of the company's tools as open source. Back then we had only just announced the Open DevOps Community. This year, at Op! DevOps! 2017, we summed up the interim results of its development.

In this community we are trying to bring the work of different specialists together into a single system of best practices, knowledge, tools and documentation. We want to share what we know with colleagues and learn from their experience. You will probably agree that discussing a hard problem with a colleague who understands it can be very useful. Everything we make is open tooling. We invite everyone to use it, improve it, and share knowledge and approaches in DevOps. If you have ideas or tools for automating anything, let's share them through the Open DevOps Community under an MIT license! It is fashionable, honorable and prestigious.

The goal of the Open DevOps Community is to create open, turnkey solutions for managing the full cycle of development, testing and related processes, as well as the delivery, deployment and licensing of complex, multi-component products.

At the moment the community is at an early stage of its development, but you can already find some useful tools there, written in Python. Yes, we love it.

- crosspm - a universal package manager that downloads packages for building multi-component products according to rules specified in a manifest (more in the video here);

- vspheretools - a tool for managing virtual machines on vSphere directly from the console, which can also be used as an API library in Python scripts (more in the video here);

- YouTrack Python 3 Client Library - Python client for working with YouTrack API;

- TFS API Python client - Python client for working with the MS TFS API;

- Artifactory - Python client for working with the Artifactory binary data warehouse API;

- FuzzyClassificator - a universal neuro-fuzzy classifier of arbitrary objects whose properties can be rated on a fuzzy measurement scale (more in the video and in the article).

Each tool is built automatically in Travis CI and published to the PyPI repository, where it can be found and installed in the standard way, with pip install.

A few more tools are being prepared for publication:

- CrossBuilder - a system for organizing cross-platform builds in the build as code style, similar to Travis CI but independent of the CI system in use (TeamCity, Jenkins, GitLab CI);

- ChangelogBuilder - a release notes generator that describes the changes in a product by collecting and aggregating data from various trackers: TFS, YouTrack, GitLab, Jira (more in the video here);

- pyteamcity - a modified Python client for working with the TeamCity API;

- MSISDK - an SDK for creating msi packages for installers;

- SupplyLab - a system for publishing, storing, delivering, deploying and licensing products and their updates (more in the video).

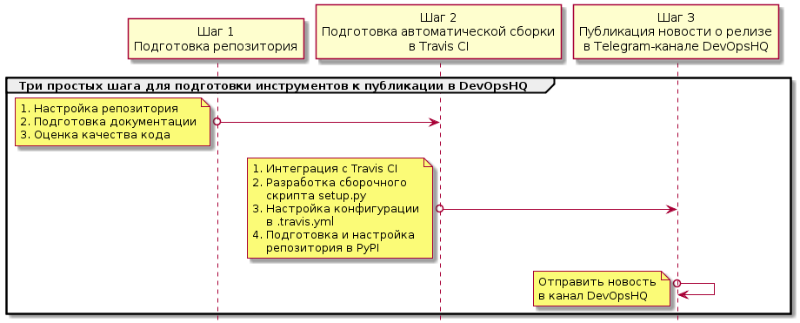

We invite everyone to take part in developing the community! We have a sample project, ExampleProject, which contains the general structure and detailed instructions for creating your own project in the community. Just copy it and build your project by analogy (see the preparation steps in Figure 4).

Figure 4. A few simple steps to prepare your tool for publication in the Open DevOps Community

Retrospective and future plans

2017 is drawing to a close, so we can already take a short retrospective look at the work our team has done to develop DevOps ideas at Positive Technologies:

- 2014 - we realized that we would not get far with the technologies available at the time (svn, SharePoint, TFS), and started researching and piloting new CI/CD systems.

- 2015 - standard scenarios and processes were prepared and configured, and the framework of the DevOps system was built on the TeamCity + GitLab + Artifactory bundle (more in the video).

- 2016 - active growth in the number of build and test configurations (up to +200 per month!), migration of all processes to the standard release build scheme with promotions, and work on the stability and fault tolerance of the build infrastructure.

- 2017 - consolidating our successes and stabilizing project growth, and a qualitative shift toward ease of use of all the services provided by the DevOps team. In a bit more detail:

  - according to statistics, in November 2017 alone, ~110,000 builds were run on our infrastructure for the ~4,800 active build configurations we currently have, with a total duration of ~38 months and an average of 6.5 minutes per build;

  - for the first time, our department started the year with an annual plan; it included non-standard tasks that go beyond the routine a single engineer can handle and that required considerable effort, teamwork and expertise;

  - we completed the move of build environments to Docker and set up two unified pools of build agents, one for Windows and one for Linux, and came close to describing builds and infrastructure in a DSL (for the build as code and infrastructure as code paradigms);

  - we stabilized the SupplyLab update delivery system (some statistics: customers downloaded ~80 TB of updates; 20 product releases and ~2,000 service packs were published);

  - this year we began to look at our production processes in terms of the technological chains involved and the so-called final useful result (CRC).

So why does our company need an automation department? Like other divisions, we work toward the final useful result, so the main goal of implementing DevOps ideas in our production processes is to steadily reduce the cost of producing the CRC.

We see the main function of our DevOps department as the macro-level assembly of individual parts into a single useful product and the reduction of the cost of the chain: production - delivery - deployment of software.

We are a product company, and based on its future needs the following global tasks have been set for our department for 2018:

- Ensuring the stability of development processes through:

  - compliance with the SLA for builds and standard tasks;

  - profiling and optimizing bottlenecks in all areas of work and in team processes;

  - faster localization of problems in complex production chains thanks to more accurate diagnostics and monitoring.

- Regular webinars on existing developments to reuse solutions in products and ensure serial production.

- Moving to serial replication of processes and tools across teams, for example by speeding up the preparation of standard build projects, primarily with CrossBuilder.

- Putting into operation a system for managing release composition and the quality of built components and installers, using the capabilities of CrossPM and DevOpsLab.

- Developing DevOpsLab, a system for automating standard tasks and delegating them to project teams: for example, promoting packages and installers, generating standard build, deployment and test projects, managing project resources, granting permissions, and setting quality labels on components and installers.

- Developing a standard, replicable process for delivering our products through the SupplyLab update system.

- Moving the entire infrastructure to the infrastructure as code paradigm: for example, preparing standard deployment scenarios for virtual machines and their environments, developing a classification of machines by resources consumed, and accounting for and optimizing the use of virtual resources.

Instead of a conclusion

We are sometimes asked what we remember most about our work, and which situations from our practice of implementing DevOps were noteworthy and why. It is hard to single out any one case because, as a rule, what sticks in memory is emergencies and how they were resolved. We do not welcome emergencies; we prefer clearly planned work within an established process, where everything is stable, predictable and expected, and fallback options are in place for force majeure. That is the principle by which we try to build our service year after year.

We are interested in any automation tasks: building CI/CD, ensuring the fault tolerance of release build processes, monitoring and optimizing resources, as well as helping people set up processes in their teams and develop useful tools. What matters to us is a systematic, consistent process of building DevOps as a service - after all, that is the pursuit of the best.

P.S. And here are a few of our Telegram stickers for DevOps.

This article is based on an interview in System Administrator magazine and a talk at Op! DevOps! 2017 (slides).

Author: Timur Gilmullin, head of the development process support group, Positive Technologies