Tech diary: half a year of mobile PvP development

In March 2017, we assembled a small team and started work on a new, promising project. Without going into details, I can say that the task was interesting and tempting: mobile, synchronous, team-based PvP. After 7 months of active development, I wanted to share the technical details with colleagues from other Pixonic projects and departments, so I prepared a presentation for them, which later grew into this article.

Speaking for the technical team, I'll tell you which tasks and problems we ran into, how we solved them, and why. We add functionality to the project iteratively, and at the moment we have implemented: PvP on iOS and Android (both platforms play on the same servers); a set of characters, three dozen game mechanics, and bots; matchmaking; a set of meta features (character customization, leveling, and others). We have also solved the problem of scaling to the whole world.

So let's go.

Disclaimer

I must say right away that the solutions described in this article are already history. They were shaped by many circumstances: the business and game design requirements for the product, deadlines, the team's capabilities, and the fact that some problems were unknown at the start. This is not best practice, but experience, and experience is never superfluous.

Painful, but fun

Even before development started, we could already see some of the difficulties we would definitely have to face. Namely:

- Synchronous PvP. This is never easy: you have to choose and implement a whole range of technologies, and your team may have no experience with any of them. There are many combinations of technologies for solving the problems involved: a smooth picture, hiding latency, cheating, simulation performance (on the server or on a master client), fitting packets into the MTU, and the cost of traffic. Message delivery latency cannot be eliminated, yet messages must be delivered as quickly as possible, and the delivery of one message should not depend on another. For these reasons we could not use TCP (its guaranteed in-order delivery means a single lost segment delays every message behind it), while UDP brings its own set of cases that also have to be handled.

- Mobile platforms. Extra work on performance and platform limits (for example, the maximum amount of RAM an app may use). You also need to keep in mind that you will always have players with unstable internet and poor ping, and you cannot ignore them.

- Availability from anywhere in the world. Ideally, the player should not have to choose a server: the application should automatically determine where the device is and find the best connection point.

- The specifics of the genre. We were mentally prepared for the fact that there would certainly be mechanics no one had implemented before (especially under our technical constraints), and that we would have to become pioneers, bumps and all.

- The breadth of the technology stack. Judging by the functional requirements, at least three subprojects are combined in one project: a game client, a game server, and a set of meta microservices. Keeping the team in sync so that features shipped simultaneously across all of them turned out to be difficult. A separate problem was how to store the code, and how to share and reuse it.
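To put rough numbers on the MTU and traffic-cost concerns above, here is a minimal sketch of the arithmetic. It assumes a 1500-byte Ethernet MTU and minimal IPv4/IPv6 headers, and it deliberately ignores Photon's own protocol overhead; the function names, the 60-byte message, and the 30 Hz send rate are hypothetical illustrations, not values from our project.

```python
ETHERNET_MTU = 1500  # bytes; mobile links may use a smaller MTU
IPV4_HEADER = 20     # minimal IPv4 header, no options
IPV6_HEADER = 40     # fixed IPv6 header
UDP_HEADER = 8

def udp_payload_budget(mtu=ETHERNET_MTU, ip_header=IPV4_HEADER):
    """Largest UDP payload that fits in one IP packet without fragmentation.
    Transport-layer overhead (e.g. Photon's own headers) is NOT subtracted."""
    return mtu - ip_header - UDP_HEADER

def wire_bytes_per_second(payload_bytes, sends_per_second, ip_header=IPV4_HEADER):
    """Approximate traffic in one direction, counting IP and UDP headers per datagram."""
    return (payload_bytes + ip_header + UDP_HEADER) * sends_per_second

if __name__ == "__main__":
    print(udp_payload_budget())                        # 1472
    print(udp_payload_budget(ip_header=IPV6_HEADER))   # 1452
    # A hypothetical 60-byte message sent 30 times per second:
    print(wire_bytes_per_second(60, 30))               # 2640
```

Note how, for small messages, the per-datagram headers are a large share of the total, which is one reason the send rate matters as much as the message size.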

Below, I have tried to describe our situation in a “problem - solution” format.

Code storage and sharing

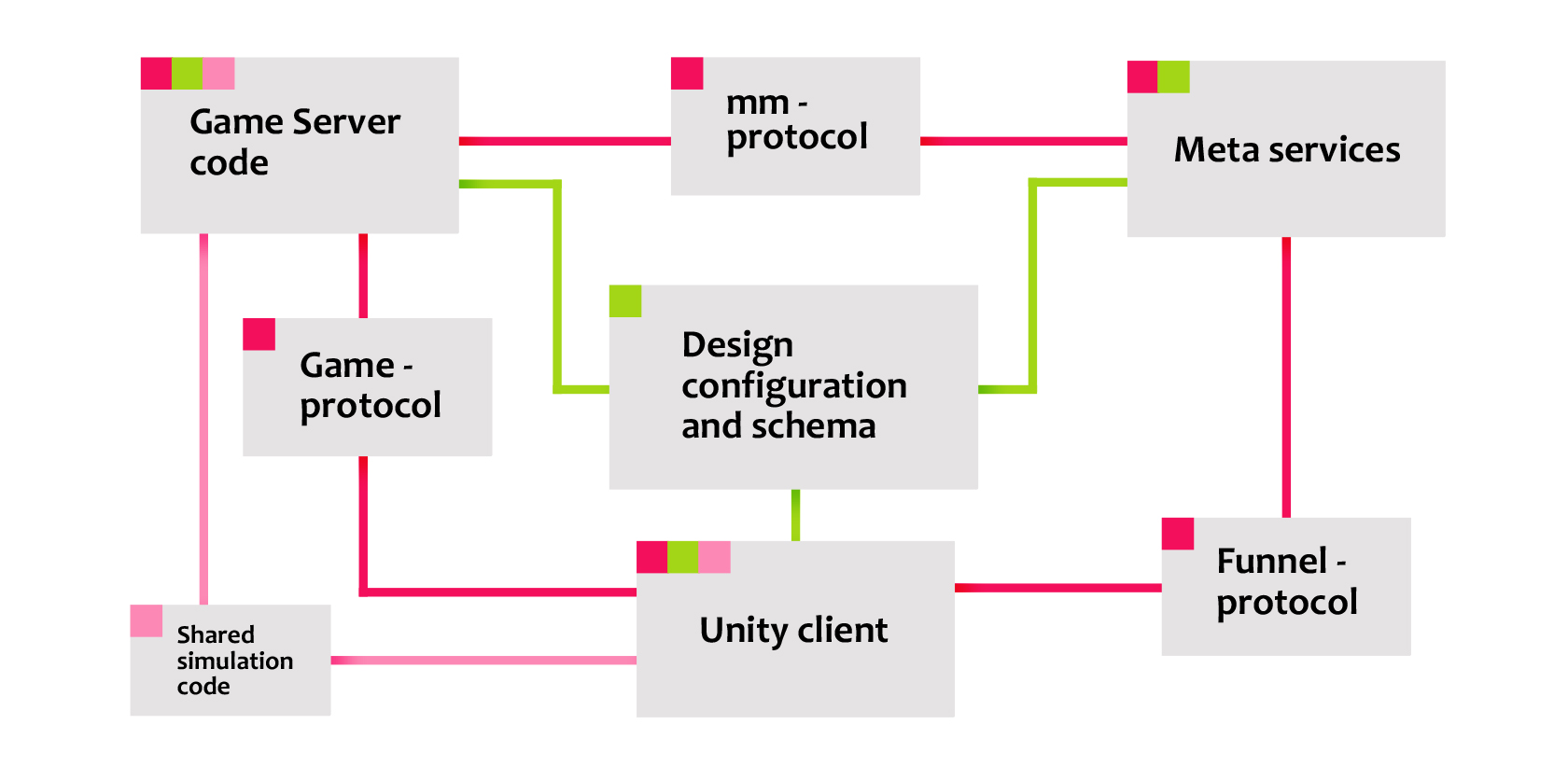

As already mentioned, the project consists of three subprojects:

- a Unity client;

- a Windows game server using Photon (not PUN) as the transport;

- a set of meta-game services (Java).

I decided that keeping them all in one git repository has more drawbacks than benefits, since all CI processes become more expensive and slower. As a result, we have three repositories.

We use protocol buffers for messages between all three subprojects. So we need somewhere to store the .proto files and the code generated from them (incidentally, committing generated files is generally considered bad practice, but in Unity it reduces the number of recompilations when the project is opened, which saves time). Moreover, the messages differ from protocol to protocol: there is no point in reusing packets, since the server and the client need different arguments. The task was to deliver these files to every project. We solved it with git submodules: for each of the three pairs of main projects, we created an additional repository and added it as a submodule to both projects of the pair. That brings the total to six repositories.
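The layout described above can be modeled in a few lines: one shared protocol repository per pair of main projects, each pulled into both projects of its pair as a submodule. This is only a sketch; the repository names are hypothetical, and the simulation and config submodules that appeared later are not modeled.

```python
from itertools import combinations

MAIN_PROJECTS = ["client", "game-server", "meta-services"]  # hypothetical repo names

def protocol_repos(projects):
    """Map each shared protocol repository to the pair of projects that uses it."""
    return {f"proto-{a}--{b}": (a, b) for a, b in combinations(projects, 2)}

def submodules_of(project, repos):
    """Protocol repositories a given main project pulls in as submodules."""
    return sorted(name for name, pair in repos.items() if project in pair)

if __name__ == "__main__":
    repos = protocol_repos(MAIN_PROJECTS)
    print(len(MAIN_PROJECTS) + len(repos))  # 6 repositories in total
    for project in MAIN_PROJECTS:
        print(project, submodules_of(project, repos))
```

Each main project ends up with exactly two protocol submodules, one for each peer it talks to.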

To speed up debugging of the simulation and to be able to run the game simulation without the server, we separated out the simulation code. This opened up a lot of possibilities: for example, profiling hundreds of games launched as a console application with accelerated time, or using the simulation in the Unity client for a local training battle. It is also very convenient for day-to-day development: a programmer creating a new game feature can play it in Unity right away, without even deploying a local server. So that the simulation code could live in both the client and the game server, it also had to be moved into a separate submodule.
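A minimal sketch of why this separation pays off, assuming the simulation only consumes inputs and advances in fixed ticks with no dependency on the network layer or the engine: a console runner can then step it as fast as the CPU allows, which is what "accelerated time" means here. The class, the integer movement model, the bot, and the 30 Hz tick rate are all hypothetical; a real simulation is far richer.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

TICK_RATE = 30  # hypothetical simulation ticks per second

Inputs = Dict[str, int]  # player id -> movement in units per tick

@dataclass
class Simulation:
    """Transport-agnostic simulation: it knows nothing about Photon, sockets,
    or Unity, so the same code can run on the server, in the client,
    or in a console tool."""
    positions: Dict[str, int] = field(default_factory=dict)
    tick: int = 0

    def step(self, inputs: Inputs) -> None:
        # Integer positions keep this toy model exact.
        for player_id, delta in inputs.items():
            self.positions[player_id] = self.positions.get(player_id, 0) + delta
        self.tick += 1

    @property
    def game_time(self) -> float:
        """Simulated seconds elapsed, independent of wall-clock time."""
        return self.tick / TICK_RATE

def run_headless(num_ticks: int, bot: Callable[[int], Inputs]) -> Simulation:
    """Run one game with no sleeping between ticks, i.e. faster than real time."""
    sim = Simulation()
    for _ in range(num_ticks):
        sim.step(bot(sim.tick))
    return sim

if __name__ == "__main__":
    # Hypothetical bot: p1 walks right, p2 walks left.
    def bot(tick: int) -> Inputs:
        return {"p1": 2, "p2": -1}

    # A batch of 100 one-minute games, e.g. for profiling.
    games = [run_headless(60 * TICK_RATE, bot) for _ in range(100)]
    print(games[0].game_time, games[0].positions)  # 60.0 {'p1': 3600, 'p2': -1800}
```

Because nothing in `Simulation` waits for the network, the same stepping code can be driven by real packets on the server, by local input in the Unity client, or by bots in a batch run.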

Some time later, we needed to store and track changes to the game configs, the relevant parts of which are then distributed to the subprojects, so we created a separate submodule that also contains the proto schema for deserializing them.

I have covered the pros; now for the cons:

- We had to train the team to work with submodules; many had no experience with them.

- You have to support a single authentication method in git. For example, to build the client in TeamCity, I want to check out the client repository with its submodules over SSH. On the other hand, it is very costly to explain public and private keys to people with little git experience (for example, artists). This problem was eventually "resolved" by registering the modules in .gitmodules with relative URLs:

```ini
[submodule "Assets/shared-code"]
	path = Assets/shared-code
	url = ../shared-code.git
```

But there is no guarantee that your version of SourceTree will understand this, and all the repositories will have to be hosted on the same git server.

- It may be worth merging the protocol repositories into one and configuring stripping when building the client, to reduce the number of submodules and the overall number of git operations. However, this may bring other difficulties, since all three subproject teams would commit changes there.

- Another important drawback: if you work with git-flow, you have to maintain feature branches in all affected repositories; otherwise, technical debt from integrating lower-level modules into the top-level repositories accumulates.

Example: a programmer develops a feature in his branch of the main repository and in a feature branch of a submodule, and meanwhile new functionality is merged into the submodule's develop branch, which prevents him from simply updating to its latest version: the main module's code has to be changed to support those changes. As a result, the programmer cannot merge his finished feature into develop until he writes an adaptation to the latest version of the submodule, which is often unrelated to his feature. This slowed down integration and repeatedly pulled programmers out of the context of their tasks. So, as mentioned above, you must first make the submodule changes in a feature branch, then adapt the main repository in a feature branch as well, and only then, after reviews and tests pass, merge these branches into the develop branches of their repositories at the same time.

Player Input Loss

Now let's turn to a problem much more directly related to the product. Recall that between the game server and the mobile client we use unreliable UDP, which guarantees neither delivery nor the order of messages. This, of course, causes a number of problems that are critical for the player. A good example is an expensive, powerful rocket and the button that launches it. The player waits for a suitable situation to use this ability and presses the button once, at what he considers the most favorable moment. We must deliver this information to the server reliably and as quickly as possible, so that the moment does not pass. But if the packet is lost or arrives 2 seconds late, our goal is not achieved.

At first, we considered resending data until its receipt was acknowledged, but we wanted the time lost to a dropped packet to be as close as possible to a single client send period. An additional requirement was that a fire button pressed while the character is stunned should still take effect in the server simulation once the character recovers from the stun.

The solution turned out to be inexpensive but effective:

- On every client frame, we record the input and give it a number.

- We store these records in a collection on the client and send the server several of the most recent ones at once (for example, the last ten). Such messages are very small (about 60 bytes from the client on average), so we can afford it.

- The server receives the messages, takes only the part it has not received yet, and adds it to the processing queue. So if a packet from the client never reaches the server, the next packet that does arrive will still contain the entire recent input history.

- For deferred use of a skill once the character is able to act again (for example, after exiting a stun), all the data is already there. The processing logic knows which input frame has been processed for each player, and in certain gameplay situations it will process a pending input as soon as possible. An added advantage of this approach is that "holes" in the input queue will be rare.
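The steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not our production code: the class names, the single `fire` flag standing in for a full input, and the "remember the press until the character can act" rule are hypothetical simplifications.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List

REDUNDANCY = 10  # recent input frames per packet ("the last ten" from the text)

@dataclass(frozen=True)
class InputFrame:
    frame: int   # client input frame number
    fire: bool   # hypothetical: was the fire button pressed this frame?

class ClientInputBuffer:
    """Client side: number every frame's input and keep the last N of them."""
    def __init__(self, redundancy: int = REDUNDANCY):
        self.history: Deque[InputFrame] = deque(maxlen=redundancy)
        self.next_frame = 0

    def record(self, fire: bool) -> None:
        self.history.append(InputFrame(self.next_frame, fire))
        self.next_frame += 1

    def packet(self) -> List[InputFrame]:
        # Every outgoing packet repeats the whole recent history, oldest first.
        return list(self.history)

class ServerInputQueue:
    """Server side: accept only frames not seen before and queue them."""
    def __init__(self):
        self.last_received = -1
        self.pending: Deque[InputFrame] = deque()
        self.deferred_fire = False

    def on_packet(self, frames: List[InputFrame]) -> None:
        for f in frames:
            # Duplicates and late, reordered packets carry nothing new.
            if f.frame > self.last_received:
                self.pending.append(f)
                self.last_received = f.frame

    def process_tick(self, can_act: bool) -> bool:
        """Consume queued frames; return True if a shot is fired this tick.
        A press received while the character cannot act (e.g. stunned) is
        remembered and fired on the first tick it can act again."""
        while self.pending:
            if self.pending.popleft().fire:
                self.deferred_fire = True
        if self.deferred_fire and can_act:
            self.deferred_fire = False
            return True
        return False

if __name__ == "__main__":
    client, server = ClientInputBuffer(), ServerInputQueue()
    for frame in range(12):
        client.record(fire=(frame == 3))   # one press on frame 3
        if 3 <= frame <= 7:
            continue                       # packets sent on frames 3-7 are lost
        server.on_packet(client.packet())
    print(server.last_received)                # 11: the press survived the loss
    print(server.process_tick(can_act=False))  # False: stunned, press deferred
    print(server.process_tick(can_act=True))   # True: fired after the stun
```

A "hole" appears only if more than `REDUNDANCY` consecutive packets are lost, in which case the oldest frames have already dropped out of the client's history before any surviving packet could carry them.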

The problem of a smooth picture on the client

With unreliable delivery, we also face the problem of getting the state of the world from the server back to the client. But more on that later. First, I would like to describe an essential problem that every game team transferring state over the network has to solve.

Imagine a game server: an application that does the same thing a certain number of times per second. It receives input over the network, makes decisions, and sends the state back over the network. Now imagine a client: an application that (among other things) displays the game state a certain number of frames per second (for example, 60). If it simply displays whatever came from the network, it will show each incoming state for 2-3 frames in a row, movement will look jerky, and with uneven delivery, time will also appear to speed up and slow down. To make the display smooth, the client has to interpolate between two states received from the server and display the computed intermediate values over the frames in between.
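Here is a minimal sketch of that interpolation, assuming each server state carries the server time it was produced at and, for brevity, only a 2D position. The `Snapshot` type and the `sample` helper are hypothetical names; real states carry much more than a position.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class Snapshot:
    server_time: float  # seconds on the server clock
    x: float
    y: float

def lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t

def interpolate(older: Snapshot, newer: Snapshot, render_time: float) -> Tuple[float, float]:
    span = newer.server_time - older.server_time
    t = (render_time - older.server_time) / span if span > 0 else 1.0
    t = min(max(t, 0.0), 1.0)  # clamp: interpolate only, never extrapolate
    return lerp(older.x, newer.x, t), lerp(older.y, newer.y, t)

def sample(buffer: List[Snapshot], render_time: float) -> Optional[Tuple[float, float]]:
    """buffer must be sorted by server_time. Returns None when no pair of
    snapshots surrounds render_time, i.e. there is nothing to interpolate."""
    for older, newer in zip(buffer, buffer[1:]):
        if older.server_time <= render_time <= newer.server_time:
            return interpolate(older, newer, render_time)
    return None

if __name__ == "__main__":
    buffer = [Snapshot(0.0, 0.0, 0.0), Snapshot(0.1, 10.0, -5.0)]
    print(sample(buffer, 0.05))  # (5.0, -2.5)
    print(sample(buffer, 0.3))   # None: nothing to interpolate
```

Each rendered frame calls `sample` with its own render time, so several frames between two server states get distinct intermediate positions instead of repeating the same one.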

Moving the client into the past...

But at the current moment we have only one state, with no second one to interpolate towards. What to do? The solution: we shift the client's timeline slightly into the past, so that at render time the next state of the world has, in theory, already had a chance to arrive.

In practice, things are not so rosy: UDP does not guarantee delivery, and if a world state does not reach the client, there will be no data to display for a few frames, and you get a so-called "freeze". Balancing input lag against the packet loss rate, we shift into the past by 2 send periods + half the RTT. That way, even if one packet is lost, the next one has time to arrive. At the same time, if packet reception stops for 2 or more periods, a disconnect is very likely to follow, which is much more understandable to the player than random lags. The player sees the reconnect window, and it does not spoil the gaming experience as much.
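As a worked example of the "2 send periods + half the RTT" rule, here is a small sketch. The 30 Hz send rate is an assumption for illustration only, and `render_time` presumes the client already has some estimate of the current server time (how that estimate is obtained is not shown).

```python
def interpolation_delay(send_period: float, rtt: float) -> float:
    """How far (in seconds) the client renders behind its estimate of the
    current server time: two send periods, so that one lost state can be
    tolerated, plus half the RTT as an estimate of one-way latency."""
    return 2 * send_period + rtt / 2

def render_time(estimated_server_now: float, send_period: float, rtt: float) -> float:
    """Server time at which the client should sample its snapshot buffer."""
    return estimated_server_now - interpolation_delay(send_period, rtt)

if __name__ == "__main__":
    send_period = 1 / 30  # hypothetical 30 Hz state send rate
    for rtt in (0.010, 0.100):
        delay_ms = interpolation_delay(send_period, rtt) * 1000
        print(f"RTT {rtt * 1000:.0f} ms -> delay {delay_ms:.1f} ms")
    # RTT 10 ms -> delay 71.7 ms
    # RTT 100 ms -> delay 116.7 ms
```

Under this assumed send rate, the fixed part of the delay (about 67 ms) dominates on a good connection, while on a 100 ms connection half the RTT adds almost as much again.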

Intermittent Ping Problem

In practice, the interpolation-and-delay scheme does not always work well. A player might start a game on home Wi-Fi with a 10 ms ping, then go outside, take a taxi, and ride around the city on mobile internet with a ping of 100 ms. In that case, if the client relies on the RTT measured at the start of the game, it may constantly lack the time needed for interpolation, even when packets are delivered perfectly, evenly, and without loss.

In our case, we solved this problem as follows:

- For every incoming packet, we analyze its arrival time and the server time it corresponds to.

- If network conditions worsen, we smoothly move even further into the past, by exactly the margin needed to keep the rule:

2 * send period + RTT / 2

The input lag on the client increases, but the picture stays smooth.

The visible problem that remains is that by the time we detect the change, the client is already lagging. We do not move it into the past instantly but over a short period (0.5 s), so during that time it will still be missing 1-2 frames of data. If ping jumps by more than one send period, the player will notice a small (1/30 s) one-time jerk.

The same works in the opposite direction: if ping decreases, the client detects it and moves the display closer to the present, to achieve the best balance between a smooth picture and minimal input lag.
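One way to sketch this adaptive behaviour: track a smoothed RTT, recompute the target delay from the rule above, and move the current delay to it with a linear ramp over 0.5 s in either direction. The exponential smoothing, its weight, the 2 ms retarget threshold, and the class itself are hypothetical choices for illustration; the arrival-time analysis that produces the RTT samples is not shown.

```python
class AdaptiveDelay:
    """Tracks RTT and moves the interpolation delay toward
    2 * send_period + RTT / 2 with a linear ramp instead of jumping."""

    def __init__(self, send_period: float, initial_rtt: float,
                 transition_time: float = 0.5, rtt_smoothing: float = 0.1,
                 retarget_threshold: float = 0.002):
        self.send_period = send_period
        self.transition_time = transition_time        # 0.5 s, as in the text
        self.alpha = rtt_smoothing                    # hypothetical EWMA weight
        self.retarget_threshold = retarget_threshold  # hypothetical, seconds
        self.smoothed_rtt = initial_rtt
        self.current = self.target()
        # Ramp state: no ramp in progress initially.
        self.ramp_from = self.ramp_to = self.current
        self.ramp_elapsed = transition_time

    def target(self) -> float:
        return 2 * self.send_period + self.smoothed_rtt / 2

    def on_rtt_sample(self, rtt: float) -> None:
        # Exponential smoothing so a single outlier barely moves the target.
        self.smoothed_rtt += self.alpha * (rtt - self.smoothed_rtt)

    def update(self, dt: float) -> float:
        """Advance by dt seconds of real time and return the delay to use."""
        target = self.target()
        # Ignore tiny target changes so the ramp isn't restarted every frame.
        if abs(target - self.ramp_to) > self.retarget_threshold:
            self.ramp_from, self.ramp_to, self.ramp_elapsed = self.current, target, 0.0
        self.ramp_elapsed = min(self.ramp_elapsed + dt, self.transition_time)
        k = self.ramp_elapsed / self.transition_time
        self.current = self.ramp_from + (self.ramp_to - self.ramp_from) * k
        return self.current

if __name__ == "__main__":
    delay = AdaptiveDelay(send_period=1 / 30, initial_rtt=0.010, rtt_smoothing=1.0)
    delay.on_rtt_sample(0.100)   # player left home Wi-Fi for mobile internet
    for _ in range(31):          # a bit over 0.5 s of 60 fps frames
        ms = delay.update(1 / 60) * 1000
    print(f"{ms:.1f} ms")        # 116.7 ms
```

The ramp trades a brief shortage of data (the 1-2 frames mentioned above) for the absence of a sudden time jump, and the same code brings the delay back down when the RTT improves.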

Answers to questions

In conclusion, I would like to answer a few questions that you might have.

Why don't we predict the local player's behavior in the simulation instead of fighting input lag?

This decision follows from the genre and the game mechanics. If you are making a first-person shooter with instant effects, where players' actions do not cancel or override one another, then local prediction + lag compensation is perfect for you. If your gameplay involves lots of freezes, knockbacks, and other mechanics that affect players and change their behavior, network artifacts will show up close to 100% of the time. In this respect, I consider the Blizzard Overwatch team the boldest: they found the optimal balance between minimal artifacts and the need for local prediction. But that is on PC, where the average player ping, in theory, allows it. In our case, any stun on the local player would, 100% of the time, turn the predicted movement ahead into a "teleport" back to the starting position.

How will players from different countries with different pings play?

Whoever has the better ping naturally has an advantage, since he has more time to react. Example: an opponent wants to throw a projectile at a player. A player with a lower ping will see the start of the opponent's animation a little earlier and will have a better chance to perform a defensive action. Moreover, his defensive action will reach the server sooner, which further increases his chance of dodging in time.

Who shoots first if both players press the button at the same time, but one has a higher ping?

The server does not take the moment of pressing into account, only the arrival time of the input and its order, so the principle from the previous answer applies.
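To make this concrete, here is a minimal sketch of arrival-order resolution. It assumes each input reaches the server half an RTT after the press and ignores jitter and tick quantization; the function and its tuple format are hypothetical.

```python
from typing import List, Tuple

def resolution_order(presses: List[Tuple[str, float, float]]) -> List[str]:
    """presses: (player, press_time_s, ping_s) triples, all in seconds.

    The server never sees press_time; each input arrives roughly half an
    RTT after the press, and the server handles inputs in arrival order.
    Exact ties fall back to player id only to keep this sketch deterministic."""
    arrivals = sorted((press_time + ping / 2, player) for player, press_time, ping in presses)
    return [player for _, player in arrivals]

if __name__ == "__main__":
    # Both press at t = 1.0 s; A has a 150 ms ping, B has 40 ms.
    print(resolution_order([("A", 1.0, 0.150), ("B", 1.0, 0.040)]))  # ['B', 'A']
```

A player with a higher ping can still win if he presses early enough to offset the extra half-RTT.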

One more thing

I hope this material will be useful to other developers setting out on a similar path. Overall, so many problems and solutions have accumulated on the project over this time that they would be enough for more than one article.

Good luck