Trust in Mobile SDKs

The recent story of a backdoor in a popular npm library made many people think about how much we trust third-party code and how to use it safely in our projects, since by doing so we potentially put the users of our products at risk.

But months before this “thunder struck”, Felix Krause (known to mobile developers as the creator of Fastlane) spoke at our Mobius 2018 Piter conference about a similar topic: how far mobile developers can trust third-party SDKs. If we download a popular SDK from a well-known company, is everything in it fine, or can something go wrong? Where is the attack vector, and what should we be thinking about?

We have now transcribed and translated his talk, so below the cut you can either watch the original video or read the text version. Since Krause works on iOS, all the examples are from iOS, but Android developers can set the specifics aside and think about the same issues.

SDK Security

After looking at which SDKs are most popular in iOS development today, I decided to investigate how vulnerable they are to ordinary network attacks. As many as 31% of them turned out to be potential victims of a simple man-in-the-middle attack, in which an attacker injects malicious code into the SDK.

But should we actually be scared? What is the worst an attacker can do with your SDK? Is it really that serious? You need to understand that an SDK included in your app bundle runs in your app's scope, so it has access to everything your app has access to. If a user grants the app access to location data or, say, photos, that data becomes available to the SDK as well (no additional permissions are required). Other data the SDK can reach includes encrypted iCloud data, API tokens, and all UIKit views, which contain a lot of different information. If an attacker hijacks an SDK with access to this kind of data, the consequences, as you can imagine, can be quite serious. And all users of the app are affected at once.

How exactly can this happen? Let's take a step back and go over some networking basics and how they can be abused.

Network

Let me warn you that my explanations will not be 100% accurate or detailed. My goal is to convey the essence, so I will present everything in a somewhat simplified form. If you are interested in the details, I recommend reading my blog or exploring the topic on your own.

As you may recall, the main difference between HTTPS and HTTP is that HTTPS encrypts data in transit. Without encryption, any host on the network can, by and large, freely read and modify any packets passing through it, and the receiver has no way to check whether a packet's integrity has been compromised. This applies equally to public Wi-Fi networks, password-protected ones, and local Ethernet networks.

With HTTPS, hosts on the network can still capture the packets, but they cannot read their contents; only metadata is visible, in particular the addresses of the sending and receiving hosts. HTTPS also provides verification: when you receive a packet, you can check whether it was modified in transit.

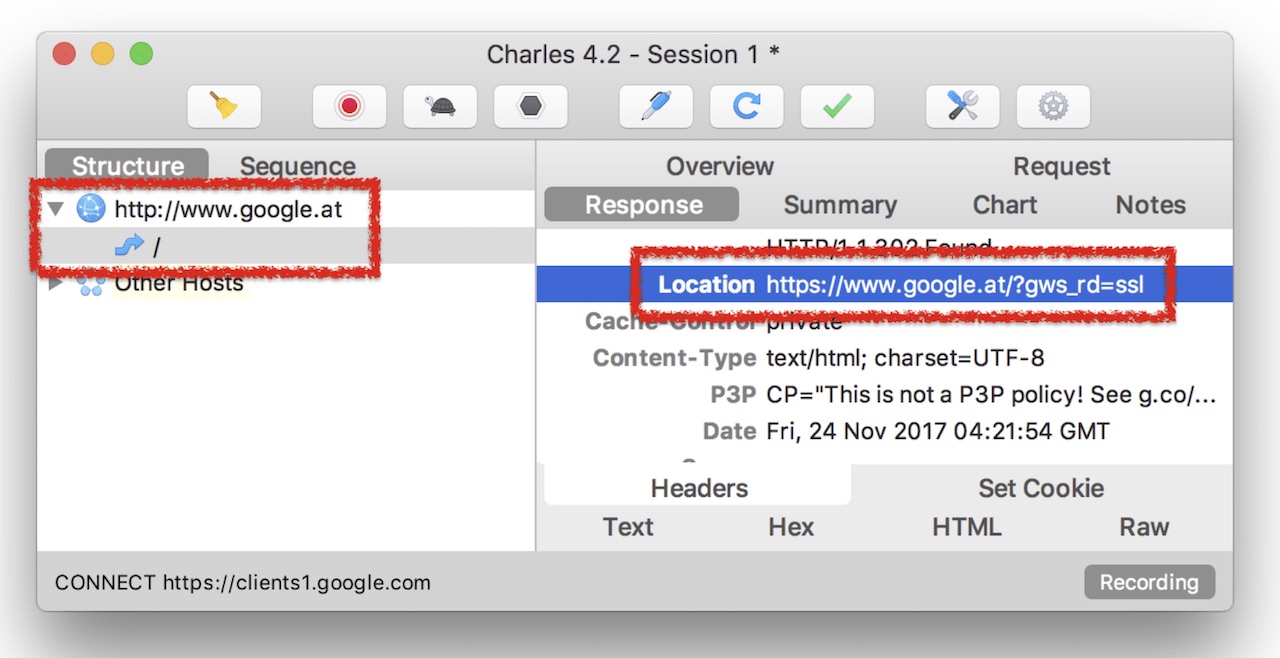

Previously, everything worked as follows. When you typed “google.com” into the address bar without specifying a protocol, the browser sent your request over HTTP by default. But since Google's server prefers HTTPS, it answered with a redirect to a new link with the "https://" prefix. Here is a screenshot from Charles Proxy (a tool for monitoring HTTP/HTTPS traffic) showing this:

However, that redirect itself was sent over HTTP. It's easy to see what can go wrong: both the request and the response travel over HTTP, so an attacker can, for example, intercept the response and change the Location URL in it back to “http://”. This simple attack is called SSL stripping. Browsers now work a little differently, but understanding SSL stripping will come in handy later.
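One of the mechanisms browsers use against SSL stripping is HTTP Strict Transport Security (HSTS, RFC 6797): a site tells the browser to use HTTPS only, and from then on the browser upgrades `http://` URLs for that host locally, before anything is sent. The sketch below models only that upgrade step; `apply_hsts` and the host set are hypothetical, and real browsers also track expiry, `includeSubDomains` and a built-in preload list.

```python
from urllib.parse import urlsplit, urlunsplit


def apply_hsts(url: str, hsts_hosts: set[str]) -> str:
    """Upgrade http:// to https:// for hosts known to require HTTPS.

    This runs before the request is sent, so a Location header that an
    attacker rewrote back to http:// is upgraded again instead of followed.
    """
    parts = urlsplit(url)
    if parts.scheme != "http" or parts.hostname not in hsts_hosts:
        return url  # not an HSTS host (or already HTTPS): leave untouched
    netloc = parts.netloc
    if parts.port == 80:
        # An explicit :80 becomes the default HTTPS port (443), so drop it.
        netloc = netloc.rsplit(":", 1)[0]
    return urlunsplit(parts._replace(scheme="https", netloc=netloc))
```

For example, if an attacker turns the redirect into `http://google.com/`, `apply_hsts("http://google.com/", {"google.com"})` still yields `https://google.com/`. What remains unprotected is the very first visit to a site the browser has never seen, which is the gap HSTS preload lists are meant to close.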

You may remember the OSI network model from your studies. I used to find it unbearably boring, but later I discovered that, oddly enough, the OSI model doesn't just exist: it can actually be useful.

We won't go into detail. The main thing to understand is that networking consists of several layers, each responsible for different things and all constantly interacting with one another.

One of these layers is responsible for finding out which MAC address corresponds to a given IP address. To do this, a device sends a broadcast request (ARP), records the first device that responds, and from then on sends packets to it.

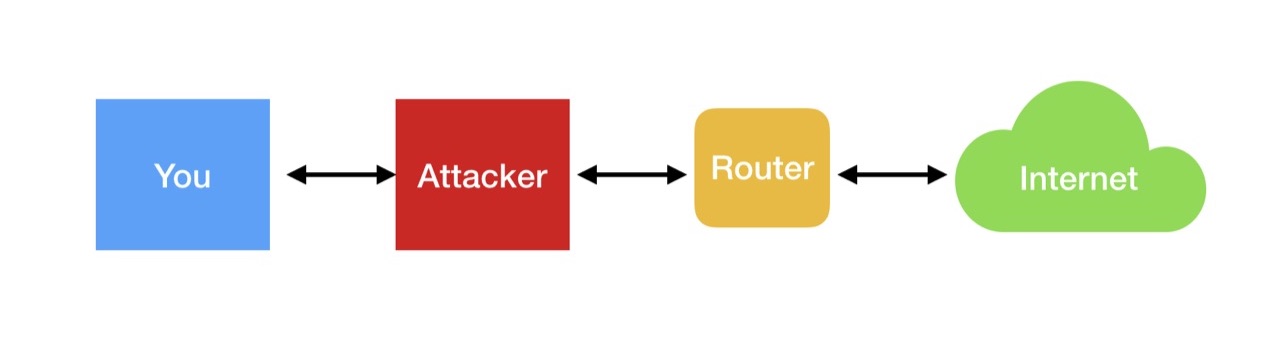

The problem is that an attacker can answer the request first: “Yes, that's me, send all the packets to me.” This is called ARP spoofing, or ARP cache poisoning. Your connection to the internet then looks like this:

All packets now pass through the attacker's device, and if the traffic is unencrypted, the attacker can both read and modify it. With HTTPS the attacker can do less, but can still see which hosts you are connecting to.

Interestingly, internet and VPN providers have exactly the same powers. They sit between you and the internet, so they pose the same potential threat as an ARP-spoofing attacker.

There is nothing new about the man-in-the-middle approach itself. But how exactly does all this apply to mobile SDKs?
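A toy model makes the weakness concrete. It is not a real ARP implementation: a plain ARP cache simply accepts whatever reply it gets for an IP address, whereas monitoring tools such as arpwatch take a similar approach to the one below and remember each IP-to-MAC pairing so they can flag changes. The class and the addresses are hypothetical.

```python
from typing import Optional


class ArpCache:
    """Toy IP -> MAC table that trusts replies, the way plain ARP does."""

    def __init__(self) -> None:
        self.table: dict[str, str] = {}

    def on_reply(self, ip: str, mac: str) -> Optional[str]:
        """Record a reply; return a warning if it changes a known mapping."""
        previous = self.table.get(ip)
        self.table[ip] = mac  # plain ARP just accepts the answer it received
        if previous is not None and previous != mac:
            return f"{ip} moved from {previous} to {mac}: possible ARP spoofing"
        return None
```

A warning is a signal, not proof: a legitimately replaced router also changes its MAC address. But two devices claiming the gateway's IP address is exactly the pattern the attack above produces.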

Mobile specifics

CocoaPods is the standard dependency manager in iOS development. Using CocoaPods with open-source code is considered fairly safe: the code is usually hosted on GitHub and accessed over HTTPS or SSH. However, I found a vulnerability based on the use of HTTP links.

The thing is, CocoaPods also lets you install closed-source SDKs: all you have to do is specify a URL. Nothing checked that the download would be encrypted, and many SDKs provided an HTTP address.

I sent several pull requests to the CocoaPods developers about this, and the changes were soon finalized. Newer versions of CocoaPods check the URL you reference and show a warning if it is unencrypted. So my advice: keep CocoaPods up to date and don't ignore its warnings.
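To find such links in your own dependencies, a rough check is enough. The sketch below is a hypothetical helper, not the check CocoaPods itself performs: it scans podspec (or Podfile) text for quoted `http://` URLs, such as a `:http =>` source pointing at a closed-source .zip. It is a regex heuristic, not a Ruby parser.

```python
import re

# Any quoted plain-HTTP URL, e.g. the value of a `:http =>` podspec source.
HTTP_URL = re.compile(r"""["'](http://[^"']+)["']""", re.IGNORECASE)


def find_insecure_urls(podspec_text: str) -> list[tuple[int, str]]:
    """Return (line_number, url) for every quoted http:// URL in the text."""
    hits = []
    for lineno, line in enumerate(podspec_text.splitlines(), start=1):
        for match in HTTP_URL.finditer(line):
            hits.append((lineno, match.group(1)))
    return hits
```

Running it over a podspec whose `s.source` is `{ :http => 'http://example.com/ExampleSDK.zip' }` (a made-up SDK) reports that line; `https://` URLs are not matched.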

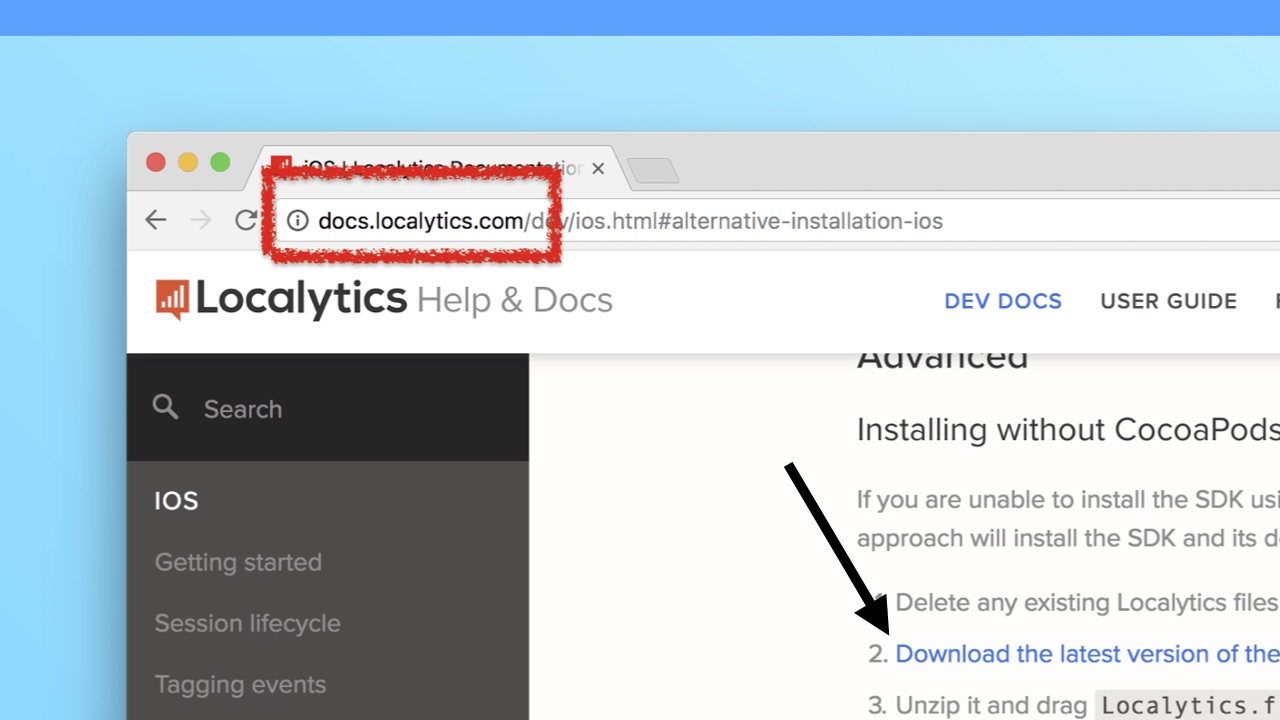

It is even more interesting to look at how SDKs that are not distributed through CocoaPods get installed. Take, for example, the Localytics platform.

The docs.localytics.com page was not encrypted. You might think this doesn't matter, since it's just documentation. But note that the page, among other things, contained a link to download the binaries. Even if that link used HTTPS, it guaranteed no security here: since the page itself was transmitted over HTTP, it could be intercepted and the link in it replaced with an unencrypted one. The Localytics developers have been notified of this vulnerability, and it has already been fixed.

There is another option: instead of downgrading the link to HTTP, keep HTTPS but replace the address itself. That is very hard to spot. Look at these two links:

One of them belongs to me. Which one comes from the real developers, and which doesn't? Try to tell.
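The talk doesn't say how the second link was built. One common way to make such a lookalike is a homoglyph domain, in which a Latin letter is swapped for an identical-looking one from another alphabet. A minimal sketch of how to catch that particular case: check whether the hostname is pure ASCII, and look at its punycode (`xn--`) form. The function names and hostnames are illustrative, not the ones from the talk.

```python
def is_pure_ascii(host: str) -> bool:
    """False if any character is outside ASCII, e.g. a Cyrillic 'о' posing as 'o'."""
    return host.isascii()


def ascii_form(host: str) -> str:
    """ASCII-compatible (punycode) form; non-ASCII labels become xn-- labels."""
    return host.encode("idna").decode("ascii")
```

With `fake = "l\u043ecalytics.com"` (Cyrillic “о”), the two names render the same, but `is_pure_ascii(fake)` is `False` and `ascii_form(fake)` starts with `xn--`. A lookalike built entirely from ASCII, such as a swapped letter or a different top-level domain, passes this check, so it narrows the problem rather than solving it.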

Practical check

Next, I decided to test my assumptions by actually trying to replace the contents of an SDK with a MITM attack. It turned out not to be that hard: it took me literally a few hours to assemble and set up my scheme from the simplest publicly available tools.

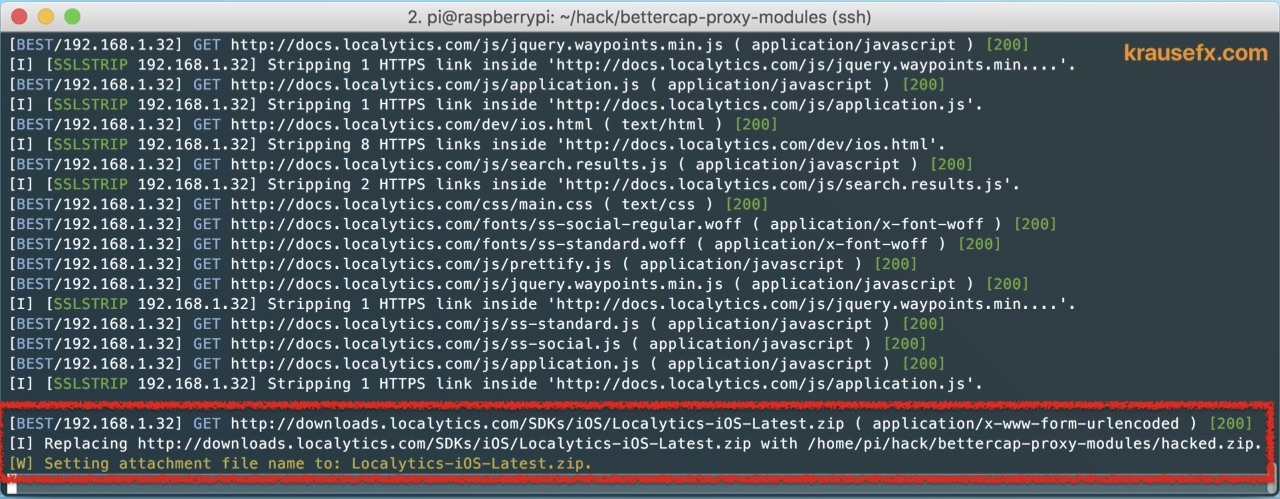

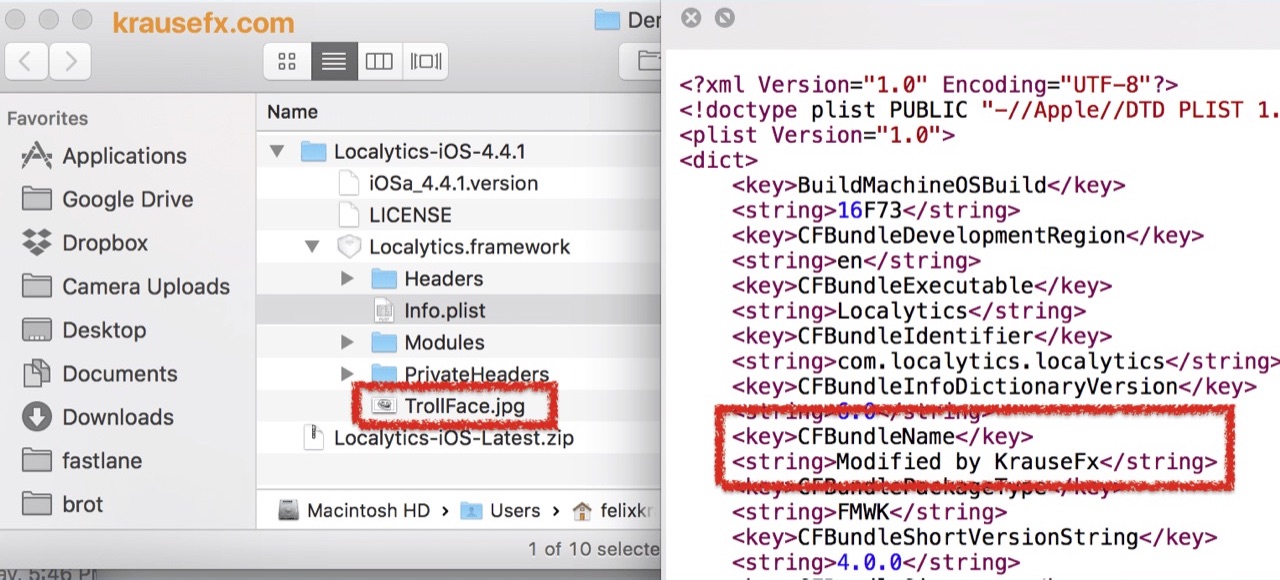

I assigned the interception to an ordinary Raspberry Pi. Connected to the local network, it could listen to the traffic on it. In the intercepted packets, it first had to replace all HTTPS links with HTTP links, and then replace every Localytics iOS .zip file with hack.zip. All of this turned out to be simple and worked perfectly.

As a result, the downloaded archive contained a trollface.jpg file, and the string “Modified by KrauseFx” appeared in its Info.plist. What did such an attack require? Only two conditions:

- Someone has managed to get access to your network (remember that for internet and VPN providers this is not even a question). How many coffee shop and hotel networks do we connect to?

- The download you start is unencrypted.
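If you have a trusted list of what an SDK archive should contain, a tampered download like this one stands out immediately. A minimal sketch, assuming you have such an expected manifest (file name mapped to SHA-256) obtained over a channel you trust; `zip_manifest` and `diff_manifests` are hypothetical helpers.

```python
import hashlib
import io
import zipfile


def zip_manifest(data: bytes) -> dict[str, str]:
    """Map every file in a .zip (given as raw bytes) to the SHA-256 of its contents."""
    manifest = {}
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        for info in archive.infolist():
            if info.is_dir():
                continue  # directories carry no content to hash
            manifest[info.filename] = hashlib.sha256(archive.read(info)).hexdigest()
    return manifest


def diff_manifests(expected: dict[str, str], actual: dict[str, str]) -> dict[str, list[str]]:
    """List files that were added, removed, or whose contents changed."""
    return {
        "added": sorted(actual.keys() - expected.keys()),
        "removed": sorted(expected.keys() - actual.keys()),
        "changed": sorted(
            name for name in expected.keys() & actual.keys()
            if expected[name] != actual[name]
        ),
    }
```

For the attack described above, `added` would contain trollface.jpg and `changed` would contain the modified Info.plist. The expected manifest only helps if the attacker cannot swap it along with the archive.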

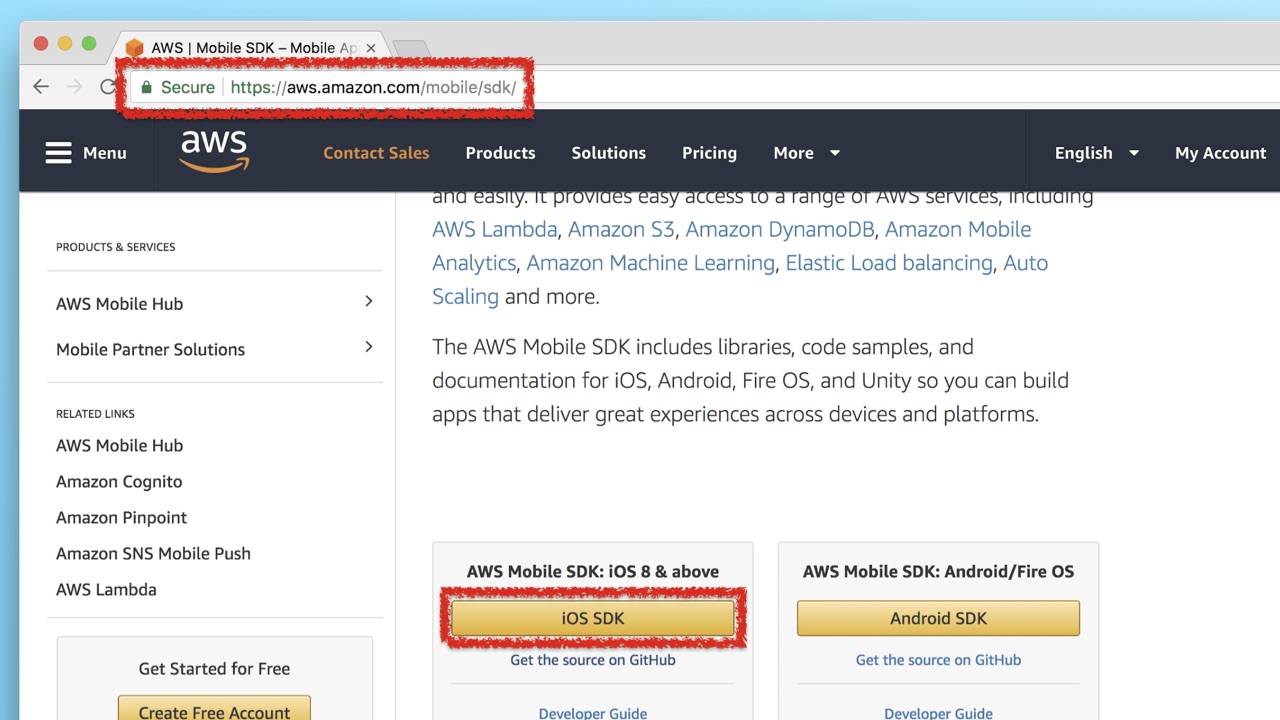

You might say: “But I always check for the Secure icon in the browser, so I'll be fine.” And if you're on Amazon's website, everything should be fine there, right?

Let's look at Amazon's website. The AWS Mobile SDK is Amazon's own SDK, which they provide to developers for working with their services.

The “Secure” icon, a well-known site: nothing seems to signal trouble. Alas, only at first glance. The SDK download link was specified with no prefix at all (neither https:// nor http://), yet it led the user to a different host. As a result, the browser switched from HTTPS to HTTP, and the SDK download was not encrypted! Amazon's developers have since fixed the vulnerability, but it really did exist.

To be fair, modern browser developers do pay attention to security. For example, if you load a page over HTTPS and even one image on it is referenced by an HTTP link, Google Chrome warns you about so-called “mixed content”. But there was no such protection for downloads: browsers did not check which protocol a download link on the page used. So, as part of this project, I contacted browser developers asking them to track mixed content for downloads and warn users about it.
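A rough version of the missing check can be sketched with Python's standard library: parse the page, resolve each link against the page URL, and flag download links that are not HTTPS. If the page itself arrived over HTTP, every download link on it is flagged, because (as with the Localytics documentation) an attacker could have rewritten any of them. The function name and the list of file extensions are assumptions for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

# File types treated as "downloads" in this sketch (an assumption).
DOWNLOAD_EXTENSIONS = (".zip", ".tar.gz", ".tgz", ".dmg", ".pkg")


class _LinkCollector(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def insecure_download_links(page_url: str, html: str) -> list[str]:
    """Return download links that could have been tampered with in transit."""
    page_is_https = urlsplit(page_url).scheme == "https"
    collector = _LinkCollector()
    collector.feed(html)
    flagged = []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # resolves relative and //host links
        parts = urlsplit(absolute)
        if not parts.path.lower().endswith(DOWNLOAD_EXTENSIONS):
            continue  # not a download
        # An HTTP page could itself have been rewritten, so none of its links count as safe.
        if not page_is_https or parts.scheme != "https":
            flagged.append(absolute)
    return flagged
```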

Apple ID data theft

Now let's look at another problem. iPhone users should be familiar with this regularly appearing pop-up:

On the left is the original iOS version, on the right is my copy. A few months ago I wrote on my blog about how easy it is to fake such a window: recreating the view took 20 minutes.

The iPhone often asks for iCloud credentials, and the reason is usually unclear to the user. Users are so used to it that they type in their password automatically. The question of who is actually asking for the password, the operating system or an app, simply never comes up.

If you think it's hard to get the email address associated with someone's Apple ID, you're overestimating the difficulty: it can be obtained through the contacts and through iCloud containers (if the app has iCloud access, which you can find out from the app's UserDefaults). The easiest option is to simply ask the user to enter their email: this shouldn't even surprise them, since iOS really does have a variant of this window that asks for an email as well as a password.

I thought: “What if I take the code for this prompt and, by substituting an SDK, inject it into lots of different apps to steal iCloud passwords through them?” How hard would that be?

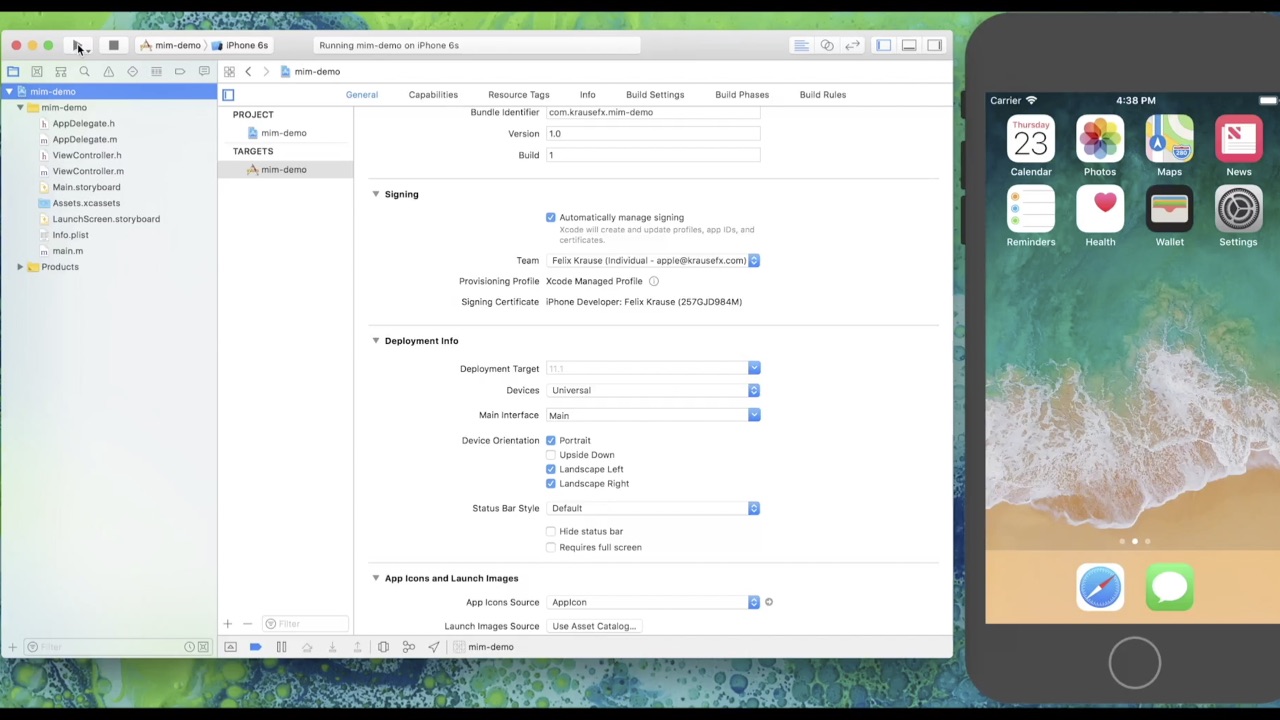

Suppose we have a completely clean Mac, with no VPN or proxy installed, and our Raspberry Pi on the same network. On the Mac, in Xcode, we have an iOS app project with the bare minimum of code: it simply displays a map and nothing more.

Now we open the browser and go to Amazon Web Services, find the AWS Mobile SDK page, and click the download link. We unpack the downloaded binary, drag all the frameworks into our Xcode project, and import the libraries. We don't even need to call any of their code; it is enough for it to be loaded. Note that Xcode did not show a single warning during the whole process.

What happens when we rebuild and run the app? My fake window appears on screen, asking me to sign in to the iTunes Store. I enter the password and the window disappears. Meanwhile, in the app's log, I see the password I just entered appear instantly: the Apple ID credentials have been intercepted. It would have been just as easy to send them to a server somewhere.

You might say: “Well, I would have noticed this prompt right away during development and realized something was wrong.” But if an app has millions of users, the attacker can make the prompt appear only once in a thousand times, so it goes unnoticed during testing.

Again, what did it take to launch this attack? Just our own device on the network (a Raspberry Pi was enough). Whether the site used HTTP or HTTPS didn't matter here: encryption did not save the situation. All the software I used was very simple and publicly available. And I'm an ordinary developer without much hacking knowledge or experience.

Taking over control

In the previous example, malicious code was injected into an iOS app. What if we could take control of the developer's computer itself? The ability to run code on your machine, as you can imagine, gives an attacker tremendous power. They can enable remote access via SSH, install a keylogger, and so on. They can watch you at any time, record your actions, and access your file system. They can also install new SSL certificates and use them to intercept every request you make to the network. In short, if someone can run code on your computer, you are completely compromised.

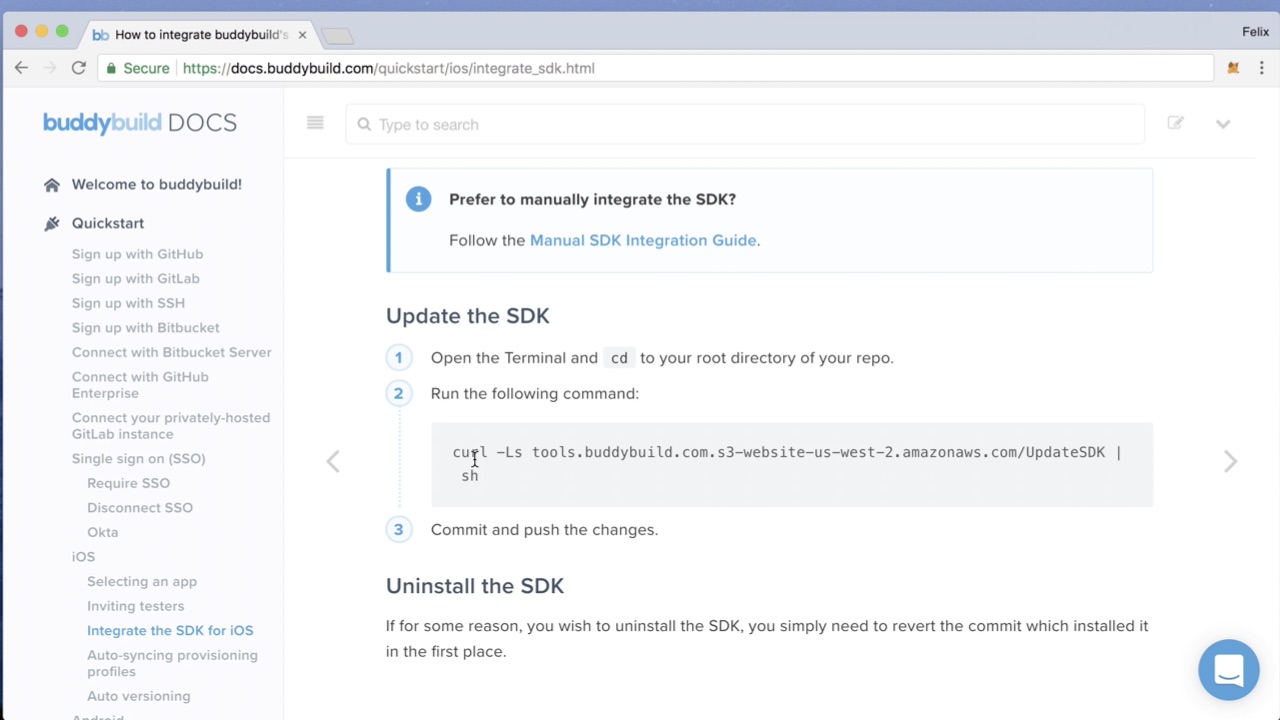

I thought: “Which iOS SDK could I use for this?” There is a service that distributes its SDK via a curl command with an HTTP link whose output is piped into sh. In other words, your terminal downloads a shell script and runs it.

This installation method puts you at risk all by itself, so don't do it. But in this case plain HTTP was used as well. What can an attacker do with that?

Suppose you are a user. You go to the official documentation page. Note that the page is served over HTTPS, great! You copy the command and run it on your machine. It completes within a few seconds. What managed to happen in that time?

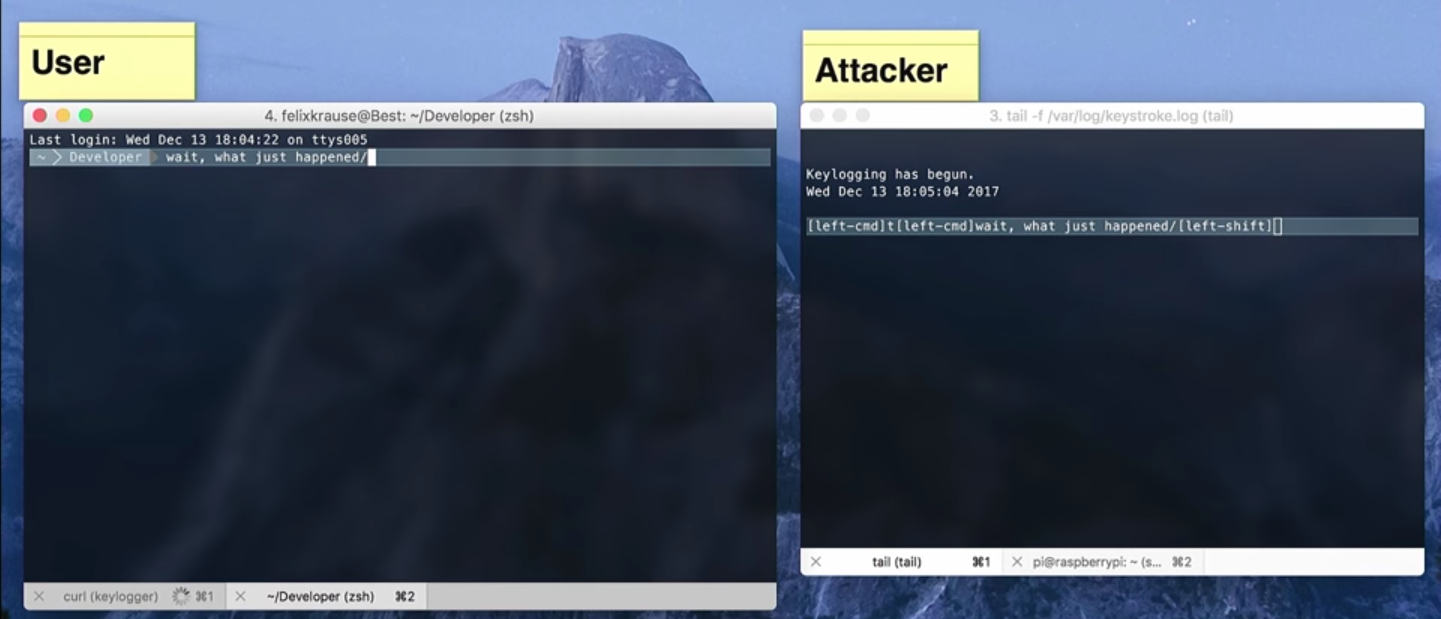

In that time, a simple attack involving my Raspberry Pi ran its course. The UpdateSDK shell script you downloaded contained a small patch of my own code. Now I have remote SSH access to your computer, and a keylogger is installed on it as well.

On the left you see “your” terminal, and on the right my Raspberry Pi, which already shows everything you type in real time. From the Raspberry Pi I connect over SSH, log in with the username and password the keylogger just captured, and get full control of your Mac and its file system. And probably access to a lot of your employer's resources as well.
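An install one-liner like this can be screened mechanically before you paste it. The hypothetical helper below is a heuristic lint, not a security guarantee: it flags plain-HTTP URLs and pipes into a shell.

```python
import re

# Heuristics only: a plain http:// URL, and a pipe into a (possibly sudo'd) shell.
PLAIN_HTTP = re.compile(r"\bhttp://", re.IGNORECASE)
PIPE_TO_SHELL = re.compile(r"\|\s*(?:sudo\s+)?(?:sh|bash|zsh)\b")


def audit_install_command(cmd: str) -> list[str]:
    """Return human-readable warnings for a copy-pasted install command."""
    warnings = []
    if PLAIN_HTTP.search(cmd):
        warnings.append("downloads over plain HTTP: the script can be modified in transit")
    if PIPE_TO_SHELL.search(cmd):
        warnings.append("pipes downloaded code straight into a shell without review")
    return warnings
```

A safer habit is to download the script over HTTPS to a file, read it, compare its checksum if the vendor publishes one, and only then run it.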

Finally

How likely is it that this could happen to you? After all, developers do try to use secure Wi-Fi and buy VPNs.

I also thought I was careful, until one day I opened my Mac's settings and found more than 200 unsecured Wi-Fi networks in my connection history. Each such connection is a potential threat. And even on a trusted network, you can't be 100% sure you're safe, since you can't know whether any device on that network has been compromised (as we just saw).

Attacks over unsecured Wi-Fi networks happen very often. They are easy to carry out in public places such as hotels, cafes, airports and, by the way, conferences :) Imagine a speaker talking about some SDK while, as usual, some audience members try to install it over the venue's Wi-Fi. And as I said, it's very easy for a network provider to abuse its position.

The same goes for a VPN: you simply put yourself in the provider's hands. Which is better to trust, the VPN provider or your local network and its users? It's not clear.

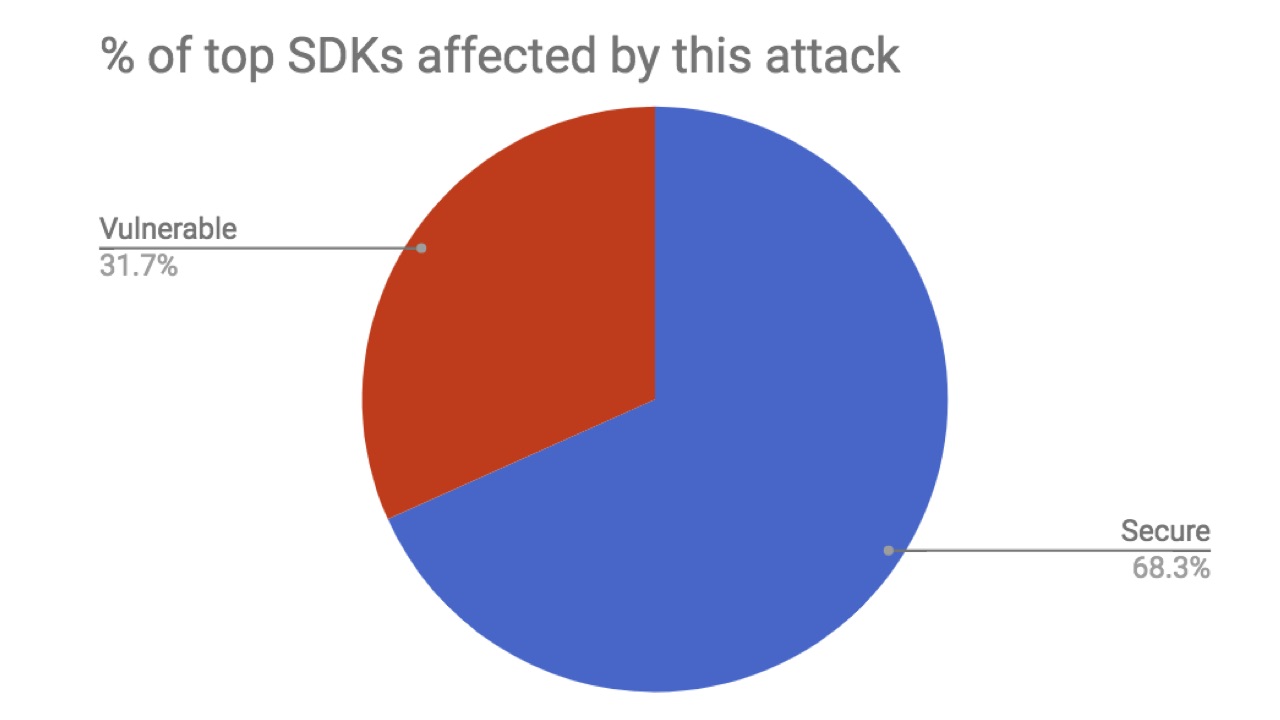

In November 2017, I conducted my research, checking the 41 most popular iOS SDKs for the vulnerabilities described above (not counting the Google and Facebook SDKs, which were all secure).

As you can see, 31.7% of the SDKs failed the tests. I managed to notify almost all of the affected vendors. One replied almost immediately and fixed the problem within three days. Five more teams also responded but took longer to fix things, about a month. Seven didn't bother to reply to my report at all and haven't fixed anything to this day. Let me remind you that these are not some little-known projects. All of them are among the best-known SDKs, used by tens of thousands of iOS developers whose apps are in turn used by millions of iPhone users.

It's important to understand that closed-source SDKs always carry greater risk, and open-source ones carry less. You can't check how closed-source code works, so it's extremely hard to judge whether it is safe. You can compare hashes and checksums, but that only tells you whether the download arrived intact. Open-source products, on the other hand, you can examine thoroughly, inside and out, which lets you protect yourself better.

Besides man-in-the-middle attacks, there are others. An attacker can compromise the server the SDK is downloaded from. It also happens that the company supplying the SDK intentionally includes so-called backdoors in the code, through which it can later gain unauthorized access to users' devices (perhaps the local government requires backdoors, or perhaps it's the company's own initiative).

And we are responsible for the product we ship. We must be sure that we are not letting our users down and that we comply with the GDPR. Attacks through SDKs are serious primarily because of their scale: they target not one developer but millions of user devices at once, and they can go almost unnoticed. Open-source code helps protect you against this; with closed-source code everything is much harder, so use it only when you can genuinely trust it. Thank you for your attention.
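The figures add up, assuming the three groups of vendors in the paragraph above together account for all of the failing SDKs:

$$
1 + 5 + 7 = 13, \qquad \frac{13}{41} \approx 0.317 = 31.7\%
$$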
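For completeness, here is what such a hash comparison might look like, as a minimal sketch with hypothetical function names. As noted above, a match only shows that your file is byte-for-byte what the vendor published; it says nothing about what closed-source code does. The published checksum also has to come from a source the attacker can't rewrite (for example, an HTTPS page), or the comparison proves nothing.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large SDK archives don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps reading until f.read() returns b"".
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(path: str, published_hex: str) -> bool:
    """Compare the file's SHA-256 with a hex checksum copied from the vendor."""
    return sha256_of_file(path) == published_hex.strip().lower()
```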

If you liked this talk, please note: the next Mobius in St. Petersburg will take place on May 22-23. Tickets are already on sale, and they gradually get more expensive.