Buying a Modern Intel Server with NVMe P4800X

In September 2017 I bought a server for personal use, worth $7,680, from the supplier Vise.

It was delivered to the tech.ru data center in Moscow and put into operation.

A photo report is included. The article consists of 5 parts:

In August 2015, circumstances worked out so that I could start making an online game.

I had quite a few long-term projects to choose from. For a number of reasons, I chose an online game.

I started working on it, and after half a year of hard work I had made good progress, but then my savings ran out. Nevertheless, I had gone quite far with it, so I kept refining the game in my spare time alongside my main job. The game needs a lot of server resources to calculate the ratings.

For 10 years I have had a personal server in the hvosting.ua data center in Kiev. I got it by accident: "Do you want a server like this for 750 euros, paying only for the rack space?" I happened to have a spare 750 euros at the time, so I agreed. That server could handle about 500 players online, which is why I had to ask all the players "please don't tell anyone about the game." Even now I would rather not show the current version to anyone: 5 people are actively playing, which is more than enough for the moment.

It became clear that I needed to either rent or buy a new server.

I rejected renting because I have had a positive experience hosting my own server for 10 years.

I also have a negative experience: a successful startup had to be shut down because hosting was too expensive. I had to pay $600 per month for hosting, and at some point the income dropped below $600 per month.

As a result, I could no longer show it in my portfolio, and I think that did enormous damage to my income overall.

These two reasons are why I decided to buy a server rather than rent one.

That way, even if the online game turns out to be financially unviable, it will stay online and remain available for my portfolio, and its upkeep will be affordable even with zero income from the game itself.

In addition, I need the server for several non-profit sites and a TeamSpeak server for a large guild in a well-known online game.

Overview of all the components of this server, still in their boxes; unpacking comes next

I decided that the server should handle 50,000 players online and 1,000,000 registered players.

Once these numbers are reached, the startup will have grown into a business and it will be possible to buy whatever new equipment is needed. Why exactly 50,000 and not 60,000 is beyond the scope of this article.

Choosing the configuration then comes down to picking parameters that meet these targets.

At the start of this story, I knew rather little about server hardware and what I actually needed.

I believe the train of thought and the decision-making logic will interest anyone involved in the topic, or anyone who makes such decisions.

All components in their boxes; unpacking and installation begin now

To reduce financial risk, monthly expenses must be minimal, and since colocation fees grow with the rack space a server occupies, the server has to be 1U in size.

The server side of the game is designed so that almost everything can run multithreaded; the computation of the game world can even be split across several servers.

This means that when choosing between "a few fast cores" and "many moderately fast cores", the second option wins.

After some measurements, I concluded that 50,000 players online need 50 GB of RAM (again, this article is not about why so much).

Unexpectedly, it turned out that the game writes a lot to disk, so the storage has to be an SSD, and a fairly powerful one.

There also has to be an HDD for backups. I am one of those who "already make backups".

Processor manufacturers to choose from: Intel, AMD, MCST.

AMD: I tried to use the official AMD website, but unfortunately it is impossible to make sense of it:

I decided against AMD because of a very inconvenient website and outdated processors.

MCST: this is certainly very interesting; the very existence of Russian-made processors that you can actually buy is remarkable.

Being unable to buy as a private individual is very annoying, but solvable.

It is impossible to study the processor's full specifications, and there are no benchmarks in the public domain, not even synthetic ones. I also note the lack of DDR4 support, but IMHO that is excusable here (for AMD it would not be). I finally decided against MCST when I realized I would not be able to use the "apt-get upgrade" command.

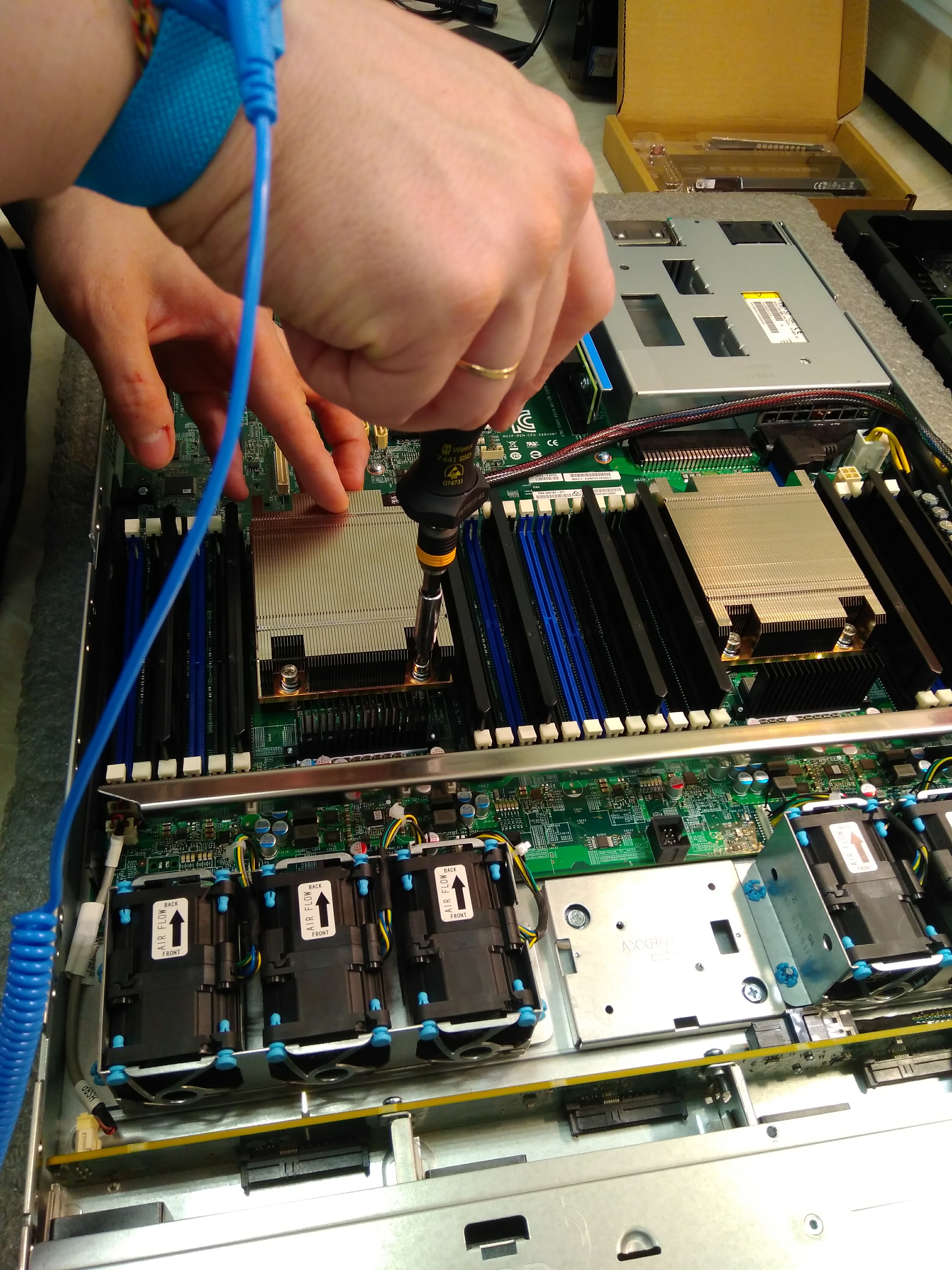

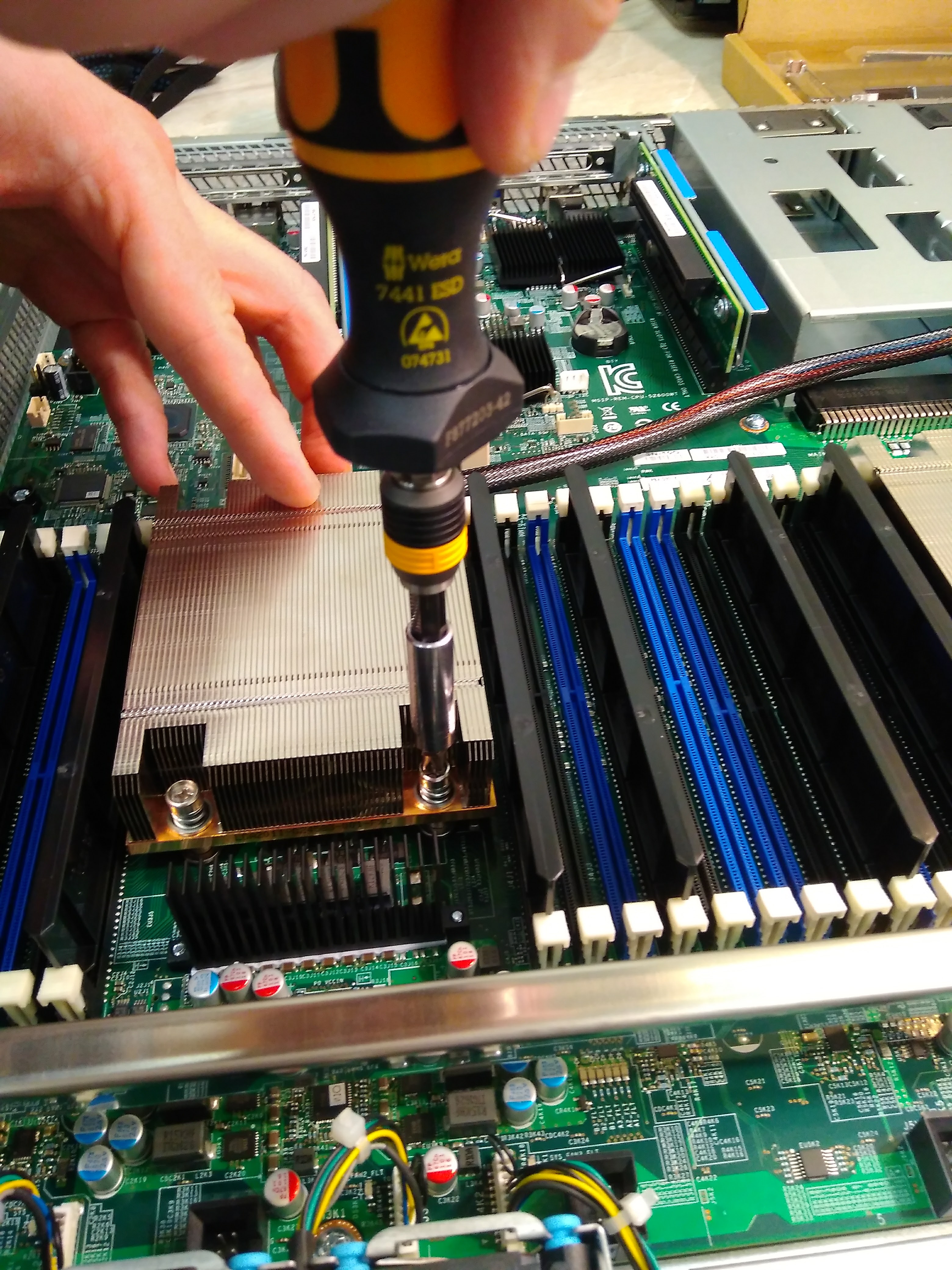

Intel: this is the only option left. Its latest processor line supports DDR4 memory, and Intel's site has a dedicated section listing processor specifications, where I can find every characteristic I care about.

Unscrewing the processor heatsinks

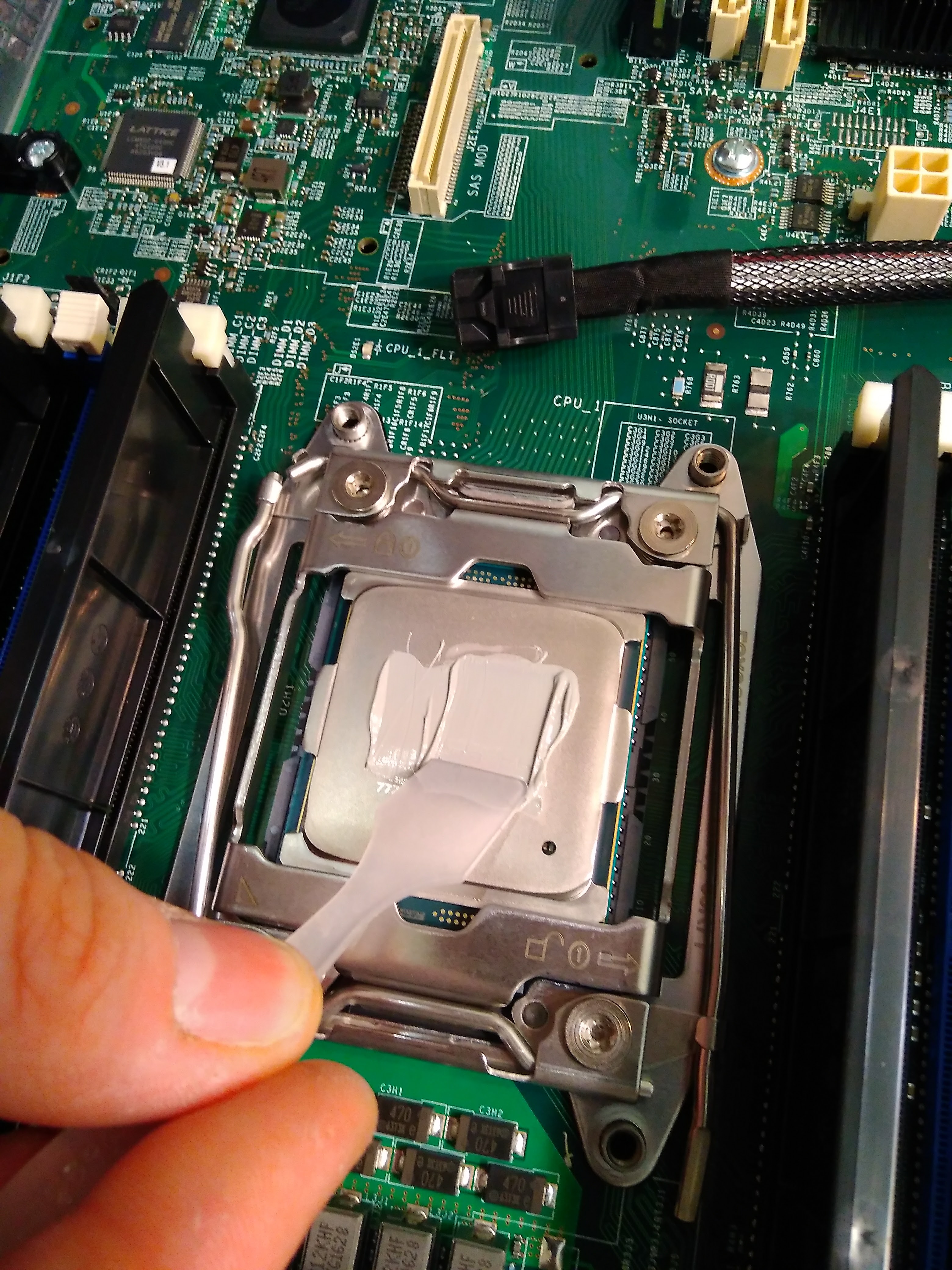

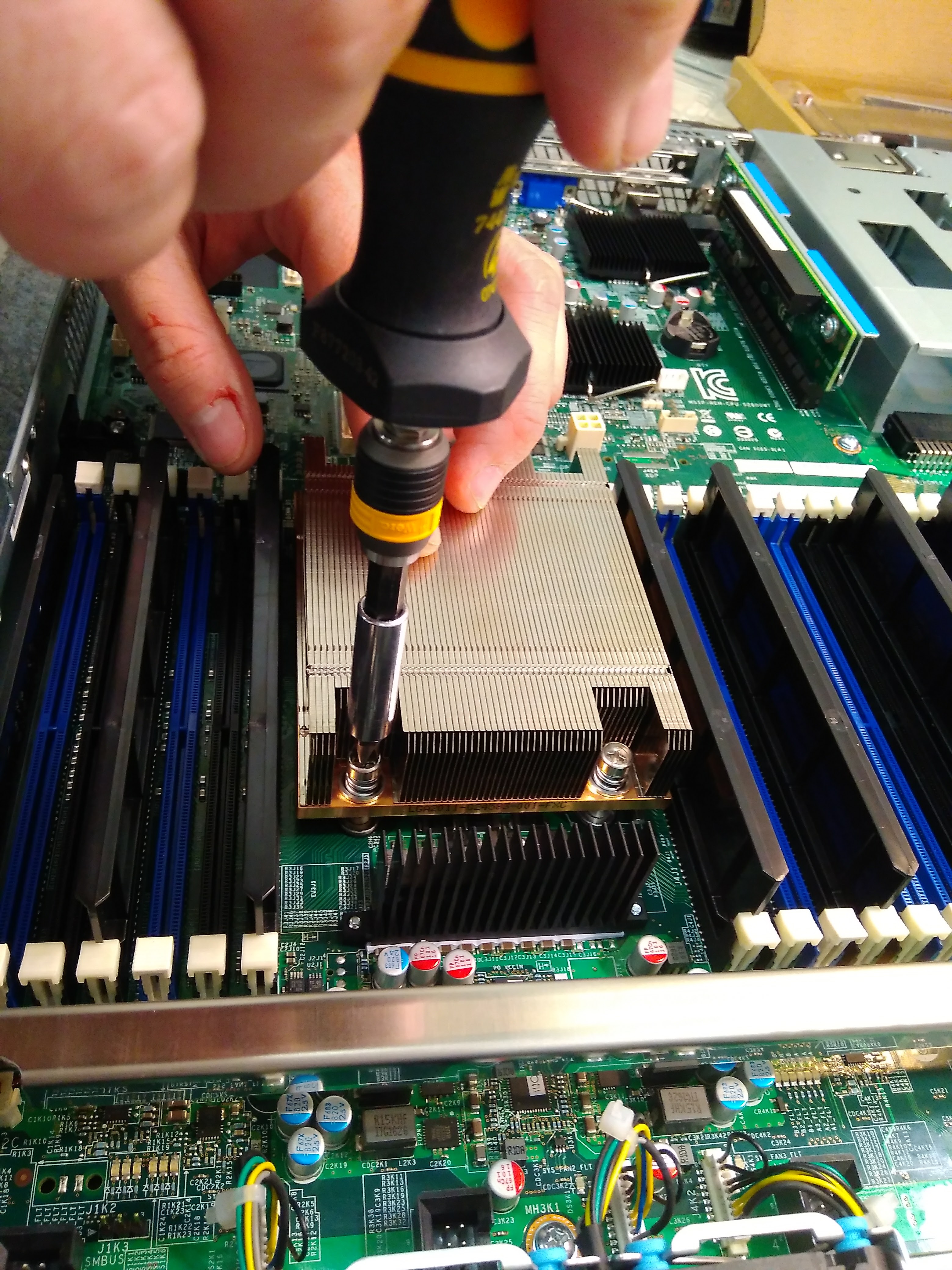

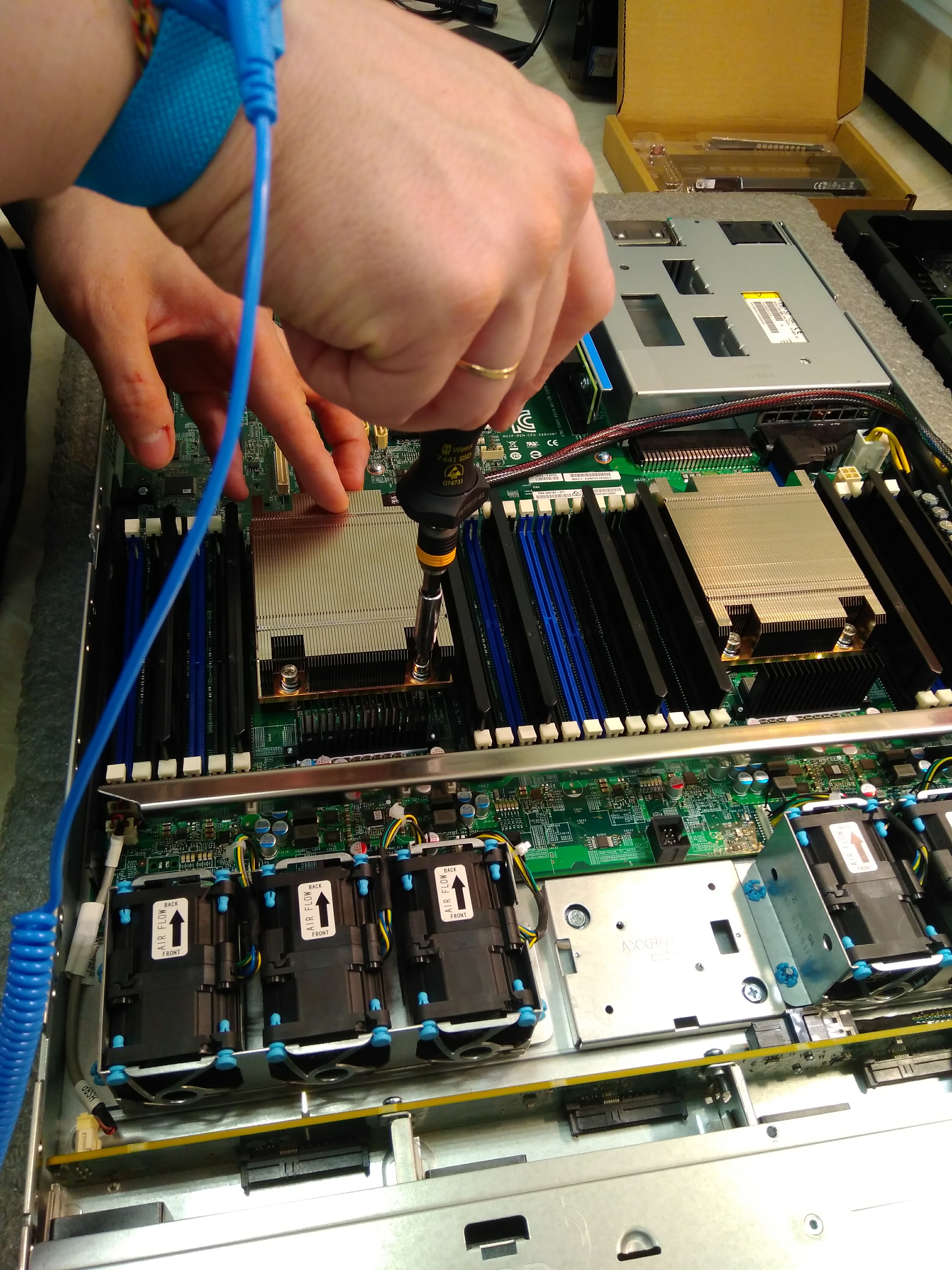

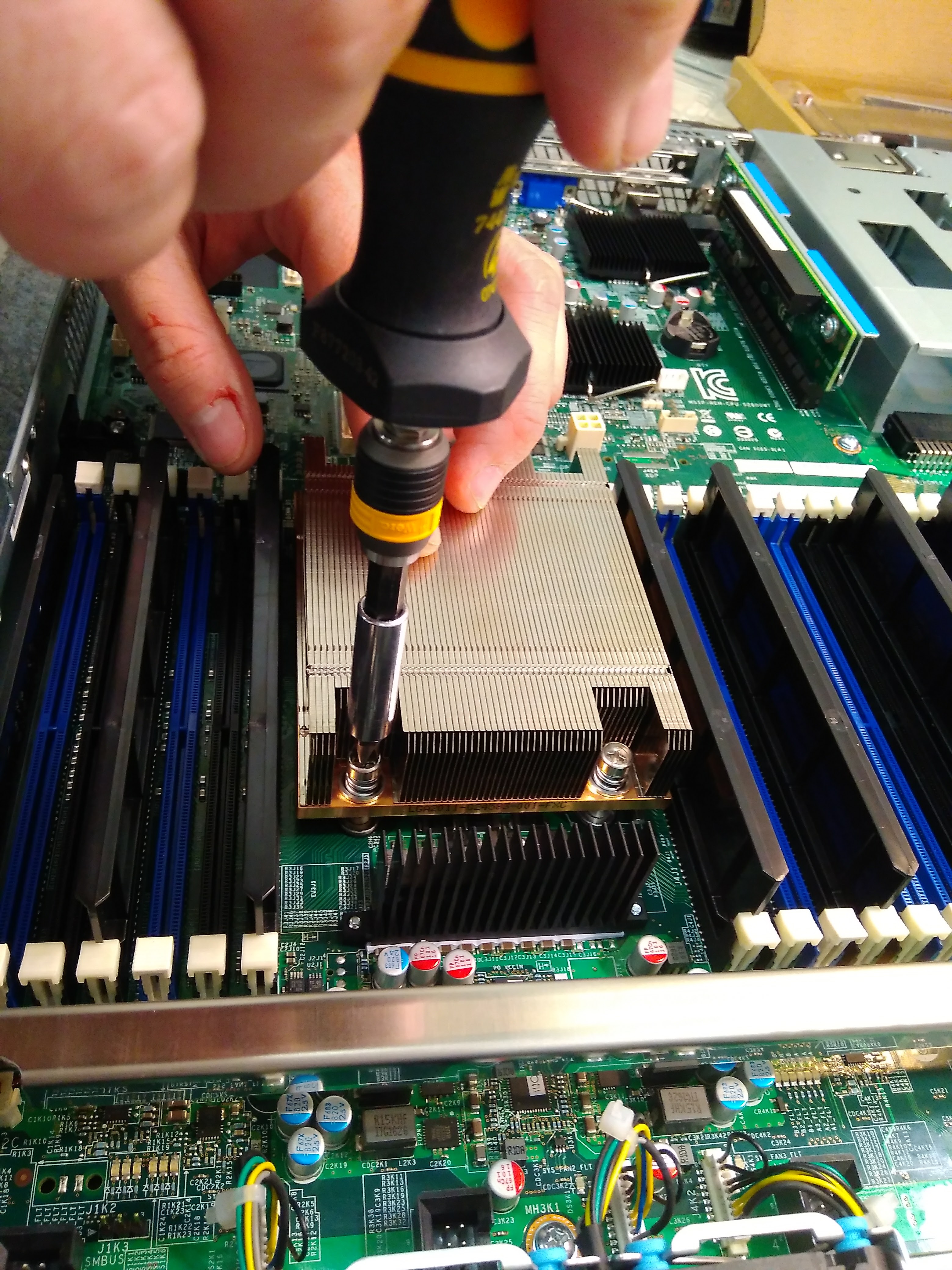

Installing the E5-2630 v4 processor

Close-up of the installed E5-2630 v4 processor

Commercially available servers come in 1-, 2-, 4- and 8-socket configurations.

I decided not to consider buying a multi-socket motherboard and populating it gradually. Why? Buying equipment costs a lot of time, nerves and money; it is not like a home computer.

The 4- and 8-socket options turned out to be incredibly expensive: even the most modest configuration was too expensive for me. Single- and dual-socket systems target different market niches. Here is the list of processors to consider (those starting with "E5-4" are of no interest, since they are for 4-socket systems).

First, the "E5-1" models: these are processors for single-socket systems.

Their high base and turbo frequencies stand out immediately, but their core counts are very low.

For example, the E5-1660 v4 with 8 cores costs $1,113, while the E5-2620 v4, a processor for dual-socket systems with a lower frequency and also 8 cores, costs $422.

That is roughly 2.6 times cheaper, in favor of the dual-socket system! Recall that for my task many weaker cores are more useful than a few strong ones. So I decided to buy a dual-socket system.
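A quick way to check this comparison is to compute the price per core, using only the two prices and core counts quoted above. This is a sketch: the `Cpu` class and its field names are illustrative, and the "system" figures simply assume one E5-1660 v4 versus two E5-2620 v4 in a dual-socket board.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    """Illustrative container; prices are the ones quoted in the article."""
    name: str
    price_usd: float  # price of a single processor
    cores: int        # physical cores per processor
    sockets: int      # how many of these processors the system holds

    def system_price(self) -> float:
        return self.price_usd * self.sockets

    def system_cores(self) -> int:
        return self.cores * self.sockets

    def price_per_core(self) -> float:
        return self.system_price() / self.system_cores()


single = Cpu("E5-1660 v4", 1113, 8, sockets=1)
dual = Cpu("E5-2620 v4", 422, 8, sockets=2)

for cpu in (single, dual):
    print(f"{cpu.sockets} x {cpu.name}: {cpu.system_cores()} cores "
          f"for ${cpu.system_price():.0f} (${cpu.price_per_core():.2f} per core)")
```

Even counting two processors, the dual-socket build gets twice the cores for less money than a single E5-1660 v4.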

Both E5-2630 v4 processors are installed in their sockets.

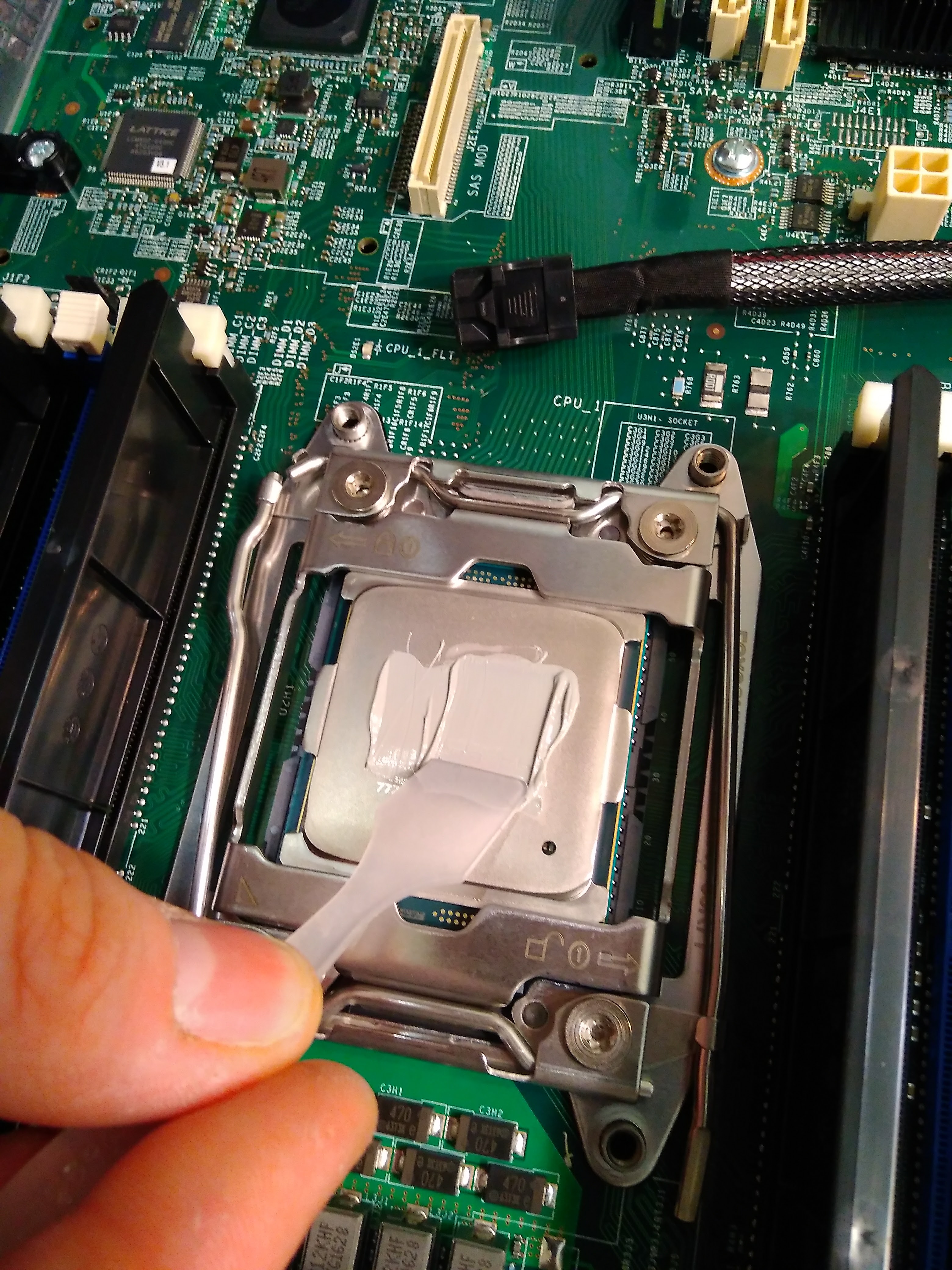

Applying thermal paste to the processor.


Even so, a fairly large selection of processors remains. I look at model suffixes such as "L", "W" or "A".

"L" stands for low power consumption. This does not interest me: if I had my own rack in the data center it would matter, but since I rent only 1U and no data center is going to meter my server's power consumption, it does not.

"W" means higher power consumption, and "A" marks a particularly successful model in the line. It quickly becomes clear that the remaining processors fall into 2 groups: few cores with a high frequency, and many cores with a low frequency.

I drop the first group. What remains (I decided not to consider anything above $1,500 apiece):

E5-2603, E5-2609, E5-2620, E5-2630, E5-2640, E5-2650, E5-2660.

I immediately discarded the weakest processors, E5-2603 and E5-2609, because I can definitely afford two E5-2620.

There are 5 options left: E5-2620, E5-2630, E5-2640, E5-2650, E5-2660.

On closer examination, they can be divided into 2 groups by maximum supported memory frequency:

E5-2620, E5-2630, E5-2640: 2133 MHz

E5-2650, E5-2660: 2400 MHz

Let's compare processors across the two groups:

In frequency, the E5-2630 and E5-2650 are no different. The only differences are +2 cores (and a slightly higher memory frequency), while the price is twice as high (about +$600)! So the second group is out because of price.

Now consider the E5-2630 vs E5-2640:

Only the base frequency differs (+10%), while the price is one and a half times higher. I drop the E5-2640: the overpayment makes no sense to me.

That leaves only E5-2620 vs E5-2630: the price difference is $250, and for that we get +10% frequency and +2 cores.

I am willing to pay that premium for this gain.
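To see what that premium buys, here is a rough sketch that treats "cores times relative clock" as a throughput proxy for a perfectly parallel workload. That proxy is an assumption (it ignores turbo, cache and memory effects), the +10% frequency is the article's figure, and the E5-2630 v4 price is derived as $422 + $250.

```python
def relative_throughput(cores: int, rel_freq: float) -> float:
    """Crude proxy for a perfectly parallel workload: cores x relative clock.

    Assumes throughput scales linearly with both factors, ignoring turbo,
    memory bandwidth and cache effects.
    """
    return cores * rel_freq


# Figures from the article: E5-2620 v4 at $422, and the E5-2630 v4 at
# +$250 with +2 cores and roughly +10% frequency.
E5_2620 = {"price": 422, "cores": 8, "rel_freq": 1.0}
E5_2630 = {"price": 422 + 250, "cores": 10, "rel_freq": 1.1}

base = relative_throughput(E5_2620["cores"], E5_2620["rel_freq"])
upgraded = relative_throughput(E5_2630["cores"], E5_2630["rel_freq"])

throughput_gain = upgraded / base - 1
price_increase = E5_2630["price"] / E5_2620["price"] - 1
# Dollars paid for each extra throughput unit vs. the average on the E5-2620.
marginal_cost = (E5_2630["price"] - E5_2620["price"]) / (upgraded - base)
average_cost = E5_2620["price"] / base

print(f"throughput +{throughput_gain:.1%}, price +{price_increase:.1%}")
print(f"${marginal_cost:.2f} per extra unit vs ${average_cost:.2f} per unit on the E5-2620")
```

Under this proxy the extra capacity costs more per unit than the base chip, which is exactly what a "premium" means; the choice is about absolute capacity per socket rather than value per dollar.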

Final choice: Intel Xeon Processor E5-2630 v4

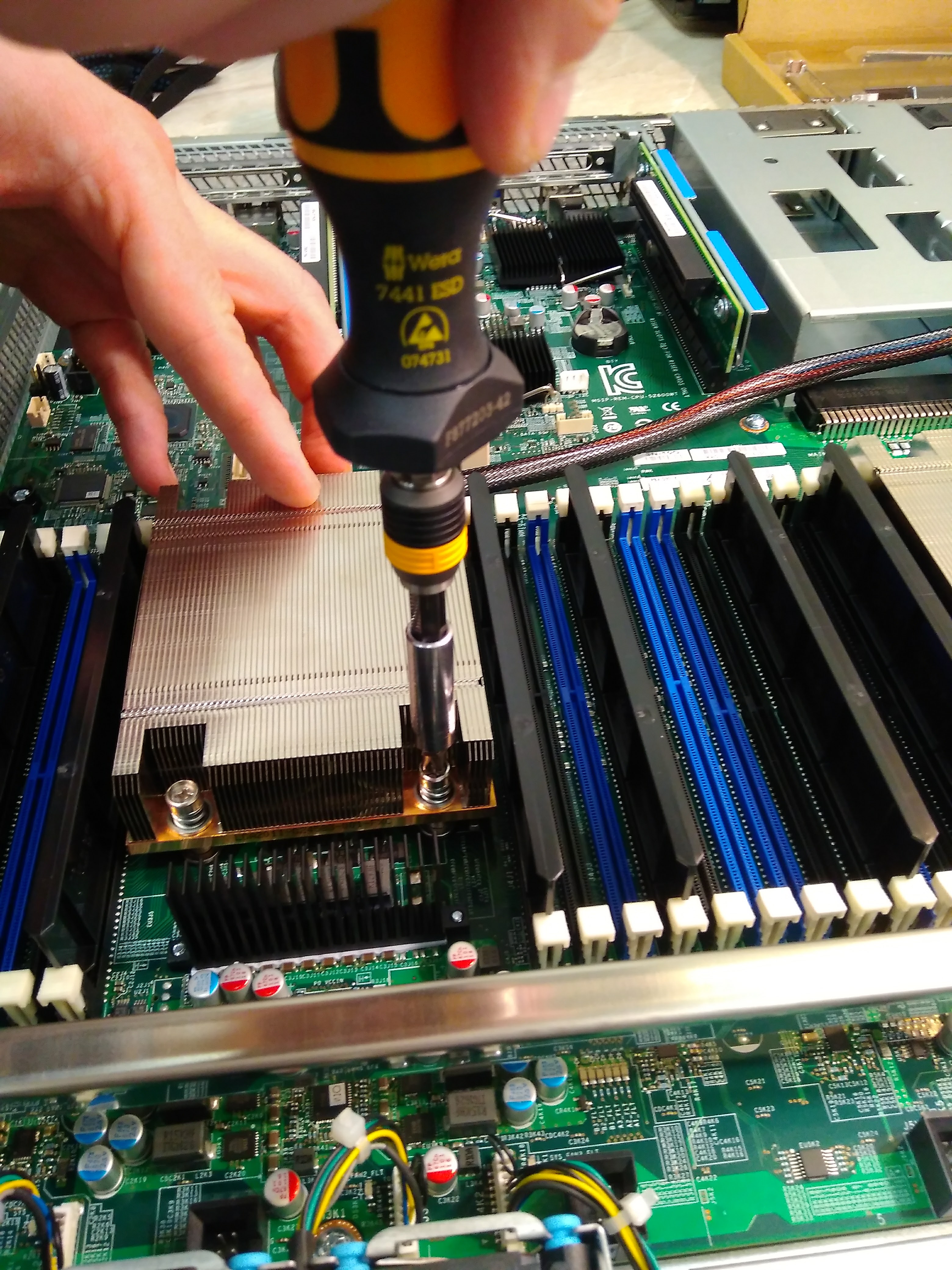

Installing a heatsink on a processor

Screwing the heatsink onto the processor

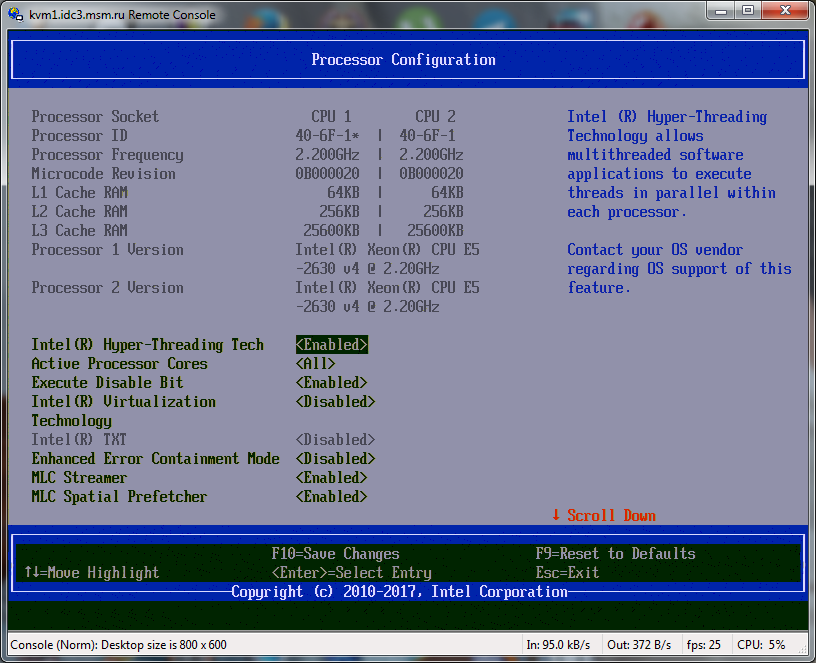

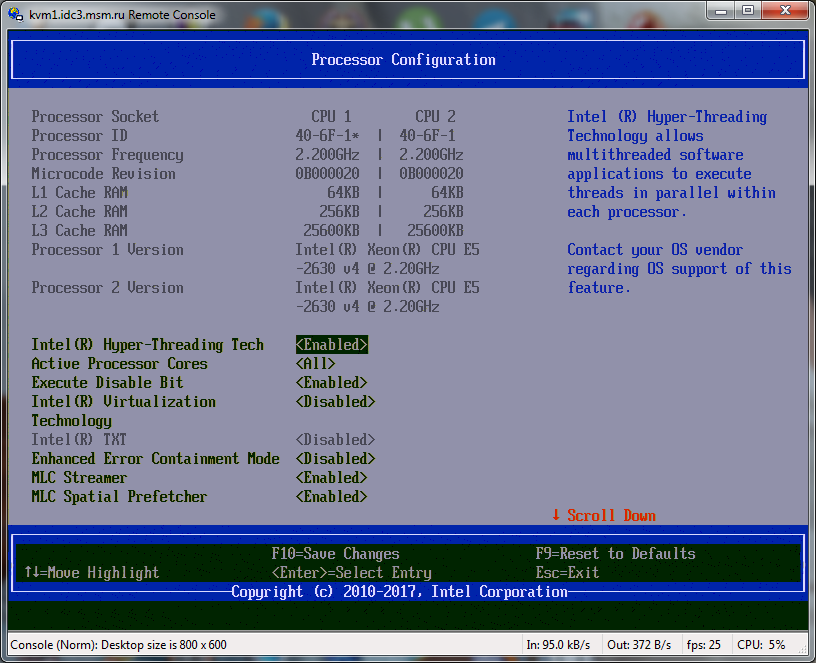

How the processors appear in the BIOS

As a reminder: at the concept stage I decided I needed ~50 GB of memory. Online, I found that building a server with a memory size that is not a power of two is quite acceptable.

Still, so that all memory behaves uniformly (and I don't have to work out which core should get how heavy a task), I decided the memory size should be a power of two.

So I would need 64 GB of memory. The server side of the game is written so that the entire game process is computed on the server.

This makes cheating impossible. However, if there is suddenly not enough memory to hold all online players, it will be a minor disaster: the game simply will not start, and I will have to urgently write a hotfix that can flush intermediate calculations to disk.
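The sizing rule above (take the measured 50 GB and round up to a power of two) can be written down in a few lines. The `max_players` helper is a hypothetical extrapolation that assumes memory use grows linearly with the number of online players, i.e. about 1 MB per player from the article's 50 GB for 50,000 players; it also shows what the 128 GB eventually bought would mean under that assumption.

```python
def next_power_of_two(n: int) -> int:
    """Smallest power of two that is >= n."""
    if n < 1:
        raise ValueError("n must be positive")
    return 1 << (n - 1).bit_length()


PLAYERS_MEASURED = 50_000   # target players online
GB_MEASURED = 50            # article's RAM estimate for that many players


def max_players(installed_gb: int) -> int:
    """Players that fit in RAM, assuming memory use grows linearly per player."""
    return installed_gb * PLAYERS_MEASURED // GB_MEASURED


planned = next_power_of_two(GB_MEASURED)
print(planned)  # 64
for gb in (64, 128):
    print(gb, "GB ->", max_players(gb), "players online (linear estimate)")
```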

It also turned out that installing many memory modules lowers the speed at which the processor communicates with memory.

Therefore, buying 64 GB now and the same amount again later would be much worse than buying 128 GB right away.

The price difference turned out to be affordable. 256 GB would perhaps have been worthwhile too, but I did not have an extra $1,000: the whole reserve eventually went into the SSD.

Installing the memory modules. An attentive reader may notice 2400 memory here. In fact, these modules are only here for the photos; the memory I actually needed arrived at the very last moment.

I already knew that with too many memory modules, memory runs much slower than with fewer. So is the best option a single 128 GB module?

It turns out that processors have "memory channels". The best option is one memory module in each memory channel. This rule also seems to apply to gaming and work computers. It's amazing that bloggers of all kinds don't get this simple idea across to the public.

In my case there are 2 processors with 4 memory channels each, so it is best to install 8 memory modules.

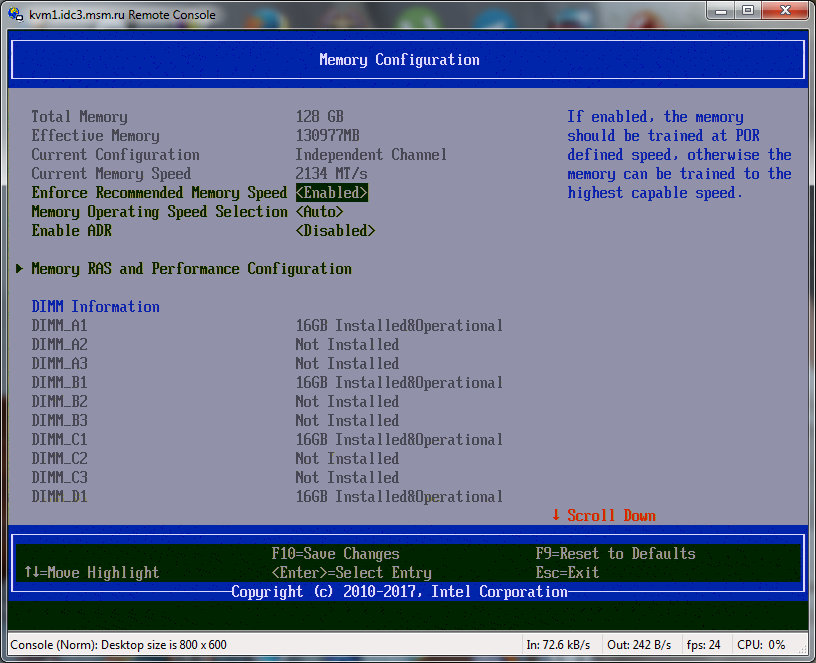

Final choice: 8 × 16 GB
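The one-module-per-channel rule turns directly into a module count and size. A small sketch; the `MemoryLayout` class is illustrative, and the socket and channel counts are the ones given above for two E5-2630 v4 processors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryLayout:
    sockets: int
    channels_per_socket: int
    dimms_per_channel: int = 1  # the "one module per channel" rule

    @property
    def dimm_count(self) -> int:
        return self.sockets * self.channels_per_socket * self.dimms_per_channel

    def dimm_size_gb(self, total_gb: int) -> int:
        """Module size that fills every channel with identical DIMMs."""
        if total_gb % self.dimm_count:
            raise ValueError(
                f"{total_gb} GB does not split evenly across {self.dimm_count} DIMMs"
            )
        return total_gb // self.dimm_count


# Two E5-2630 v4 processors, each with 4 memory channels.
layout = MemoryLayout(sockets=2, channels_per_socket=4)
print(layout.dimm_count, "x", layout.dimm_size_gb(128), "GB")  # 8 x 16 GB
```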

Installing the memory modules

There was not much to choose from. At the moment the only options are 2133 and 2400, but the processor supports only 2133. Does it make any sense to buy 2400?

It turns out that it not only makes no sense but is even harmful in my case: memory access takes 17 clock cycles on 2400 memory and 15 clock cycles on 2133 memory.

And my processor cannot run memory at 2400; it will run 2400 modules at 2133 anyway. However, with an E5-2650 v4 or higher, 2400 memory would, on the contrary, be the better choice.
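The cycle counts become clearer when converted to nanoseconds. A sketch of the standard conversion: DDR transfers data twice per clock, so the clock in MHz is half the transfer rate, and one cycle lasts 1000 / clock_mhz ns. The third case assumes the 2400 module keeps its 17-cycle latency when run at 2133; real modules often store lower-latency profiles for lower speeds, so treat it as a worst case.

```python
def cas_latency_ns(cas_cycles: int, transfer_rate_mts: float) -> float:
    """Absolute CAS latency in nanoseconds.

    DDR memory transfers twice per clock, so the clock in MHz is half the
    transfer rate in MT/s; one cycle lasts 1000 / clock_mhz nanoseconds.
    """
    clock_mhz = transfer_rate_mts / 2
    return cas_cycles * 1000 / clock_mhz


cases = {
    "DDR4-2133, 15 cycles": (15, 2133),
    "DDR4-2400, 17 cycles": (17, 2400),
    # Assumption: the 2400 module keeps 17 cycles when run at 2133.
    "DDR4-2400 module at 2133, 17 cycles": (17, 2133),
}
for label, (cl, rate) in cases.items():
    print(f"{label}: {cas_latency_ns(cl, rate):.2f} ns")
```

At their native speeds both kinds of memory land at about 14 ns; the penalty appears only when the slower processor forces the 2400 module down to 2133 while it still needs 17 cycles.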

The next factor is LRDIMM, a technology that allows more memory to be installed in a server; when every slot is populated, such memory runs faster than regular modules.

LRDIMM is ordinary server memory plus some additional buffer chips, so it costs roughly 20% more.

So I do not need LRDIMM, since I am not filling every slot.

Now the manufacturer. For server memory, it turns out, the manufacturer does not matter. I don't understand how that is possible, but it is a fact: synthetic benchmarks are identical and memtest results are about the same. For Kingston memory I found 2 variants of chip population, but even then there is no functional difference.

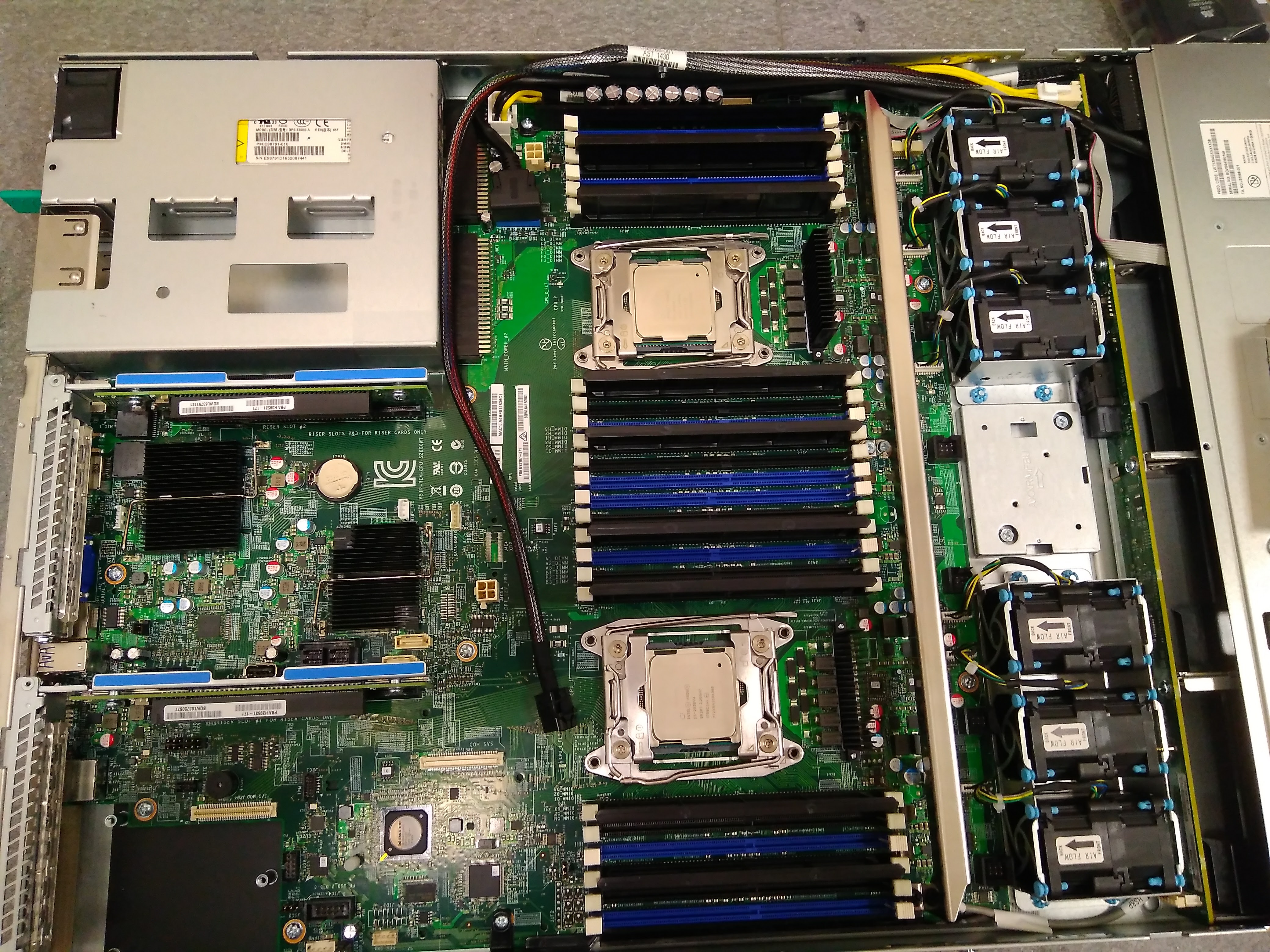

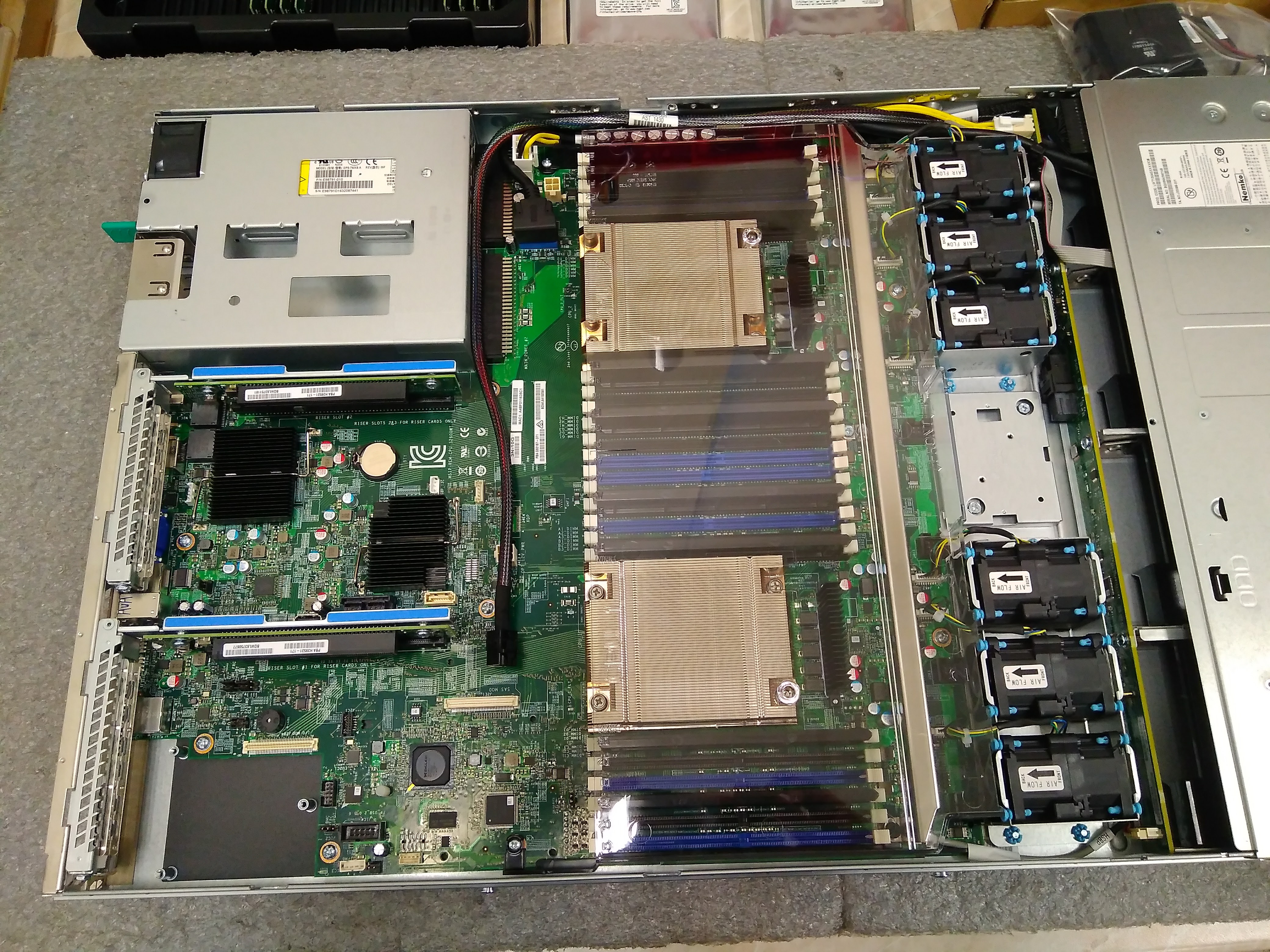

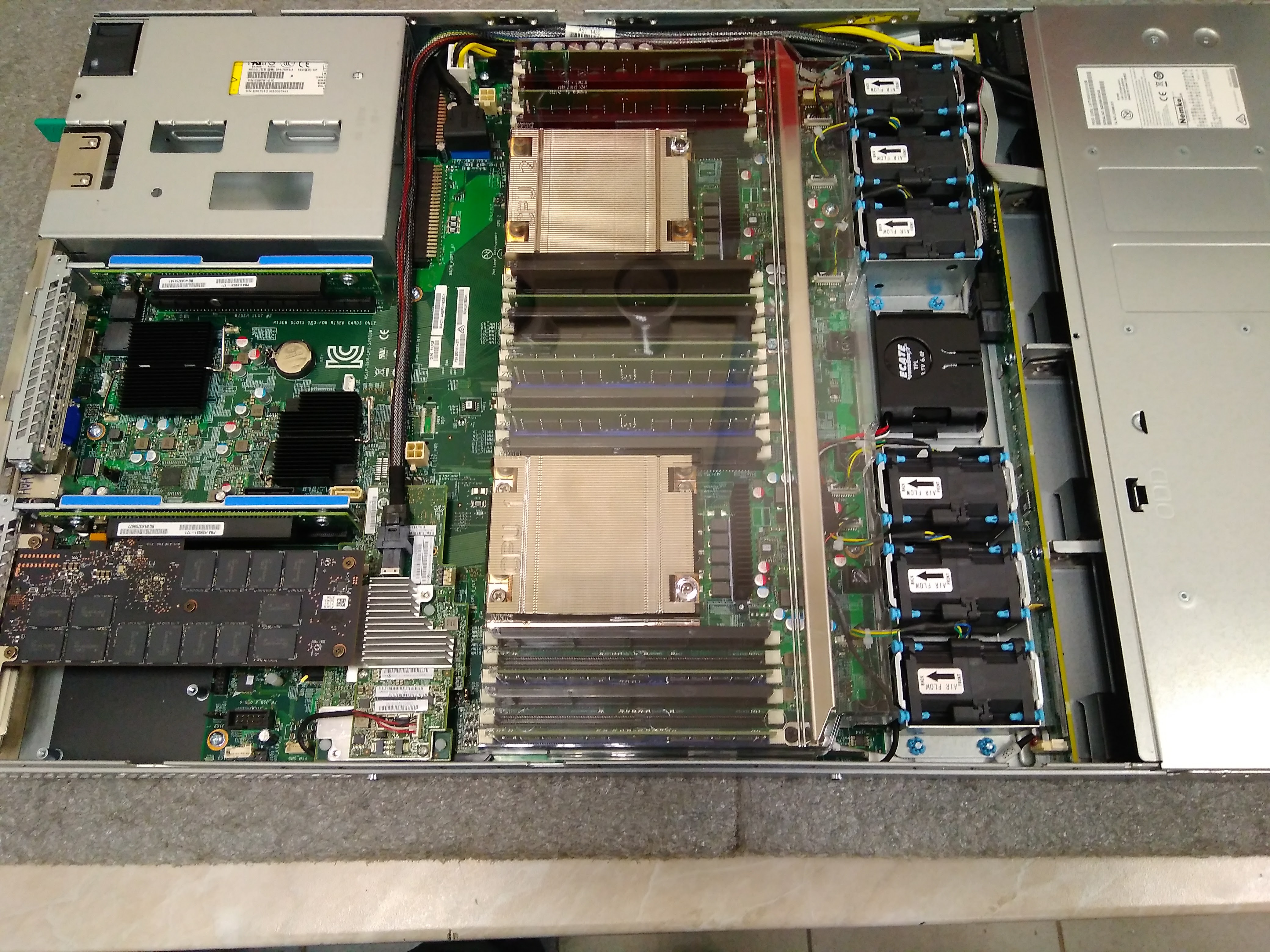

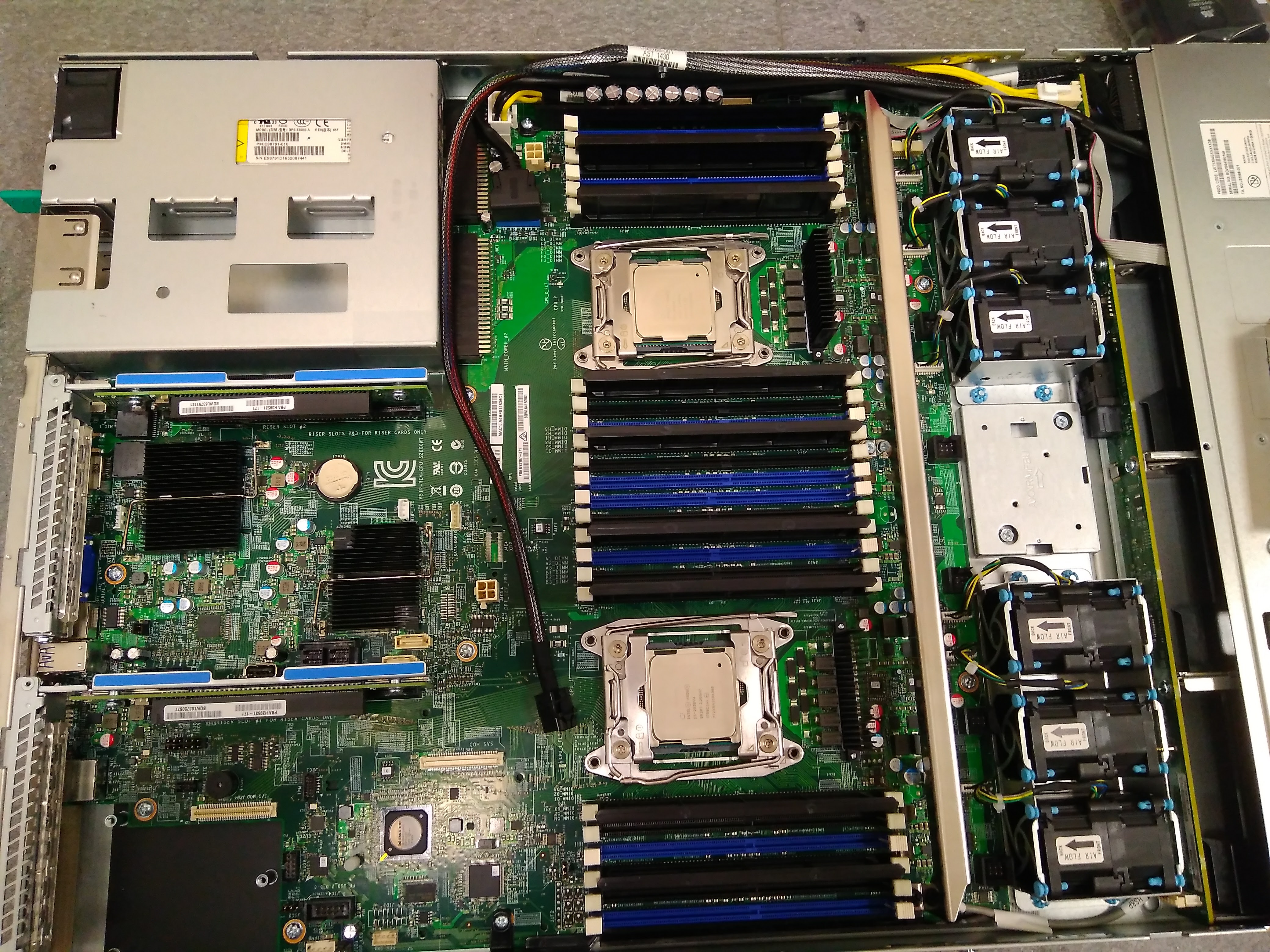

Overall view of the installed processors and memory

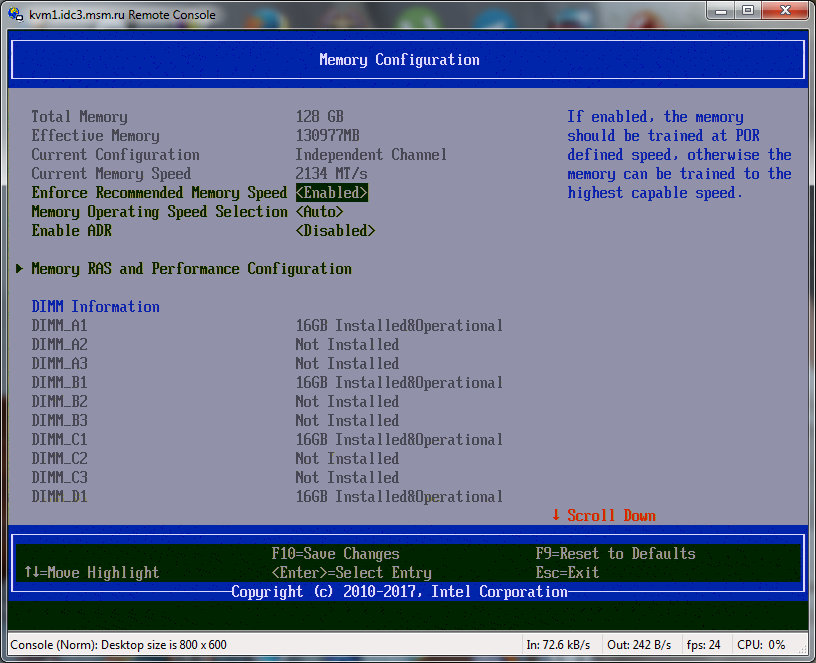

How the memory appears in the BIOS

When I first drew up the server configuration I wanted, it had one 240 GB SSD and one 2 TB HDD.

As I refined my requirements, my understanding of what I really needed changed as well.

I learned that SSDs differ enormously, and that servers should preferably use RAID1.

That there is a new technology, NVMe, with lots of options. And that RAID controllers differ a lot depending on the task.

Let's go step by step, starting with "what does the task need?". I need a 200 GB SSD that can withstand a lot of rewriting, and a 2 TB HDD for reliable backups. "A lot of rewriting" means, by a very rough estimate, 1-2 TB per day. Reliable backups mean a RAID1 configuration. Hardware versus software RAID is a question of effort, skill and responsibility when the array has to be repaired.
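Those two numbers already determine the endurance class needed. Endurance is usually quoted in drive writes per day (DWPD): how many times the full capacity can be rewritten every day over the warranty period. A minimal sketch, assuming decimal units (1 TB = 1000 GB):

```python
def required_dwpd(daily_writes_gb: float, capacity_gb: float) -> float:
    """Drive writes per day (DWPD) needed to absorb a daily write volume."""
    return daily_writes_gb / capacity_gb


DATA_GB = 200  # size of the working set from the article
for daily_tb in (1, 2):
    dwpd = required_dwpd(daily_tb * 1000, DATA_GB)
    print(f"{daily_tb} TB/day on a {DATA_GB} GB drive -> {dwpd:.0f} DWPD")
```

So a 200 GB drive would need a rating of 5 to 10 DWPD, before any headroom.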

From a management point of view, the choice is:

Accordingly, Vise recommended the cheapest modern hardware RAID controller, one that makes it easy to recover from a possible failure.

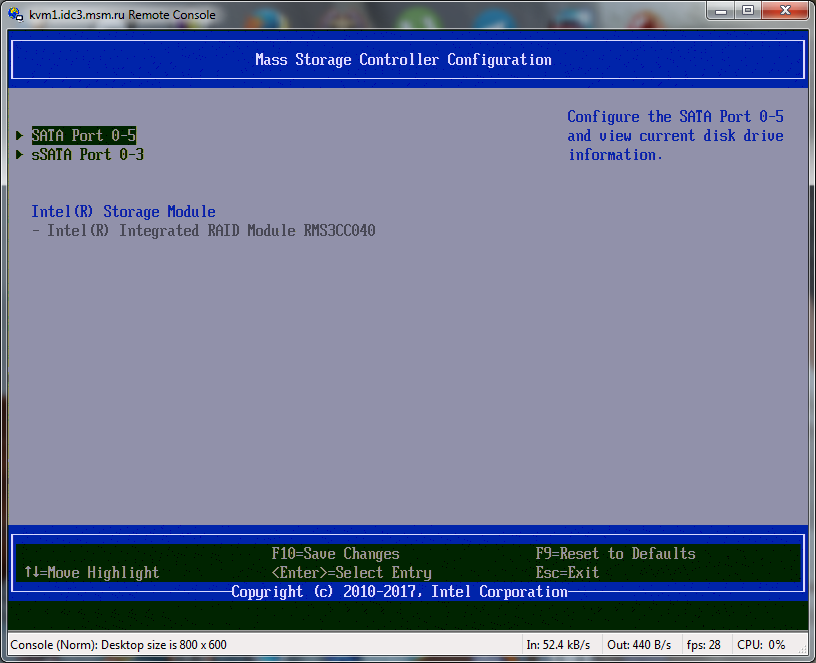

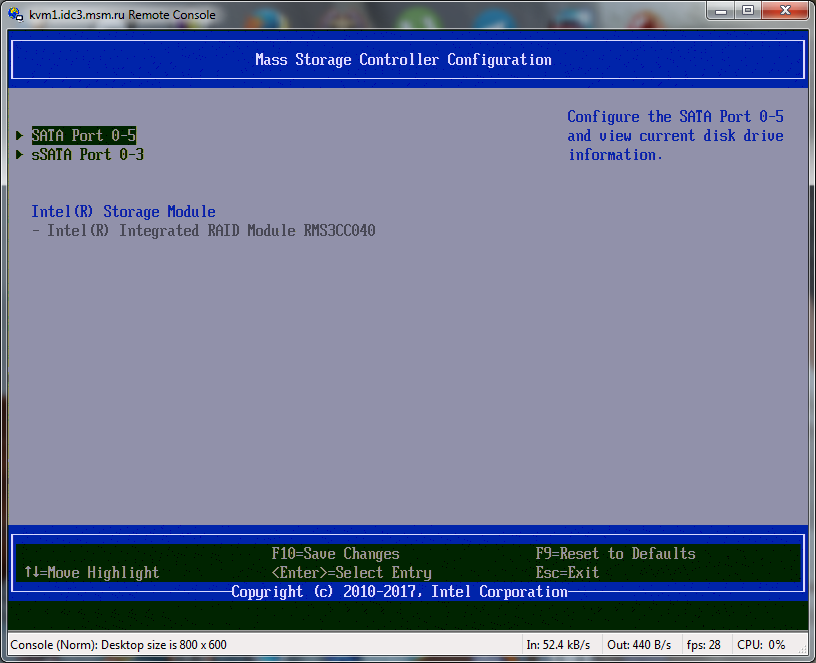

This one: ark.intel.com/en/products/82030/Intel-Integrated-RAID-Module-RMS3CC040

Note that this is a RAID controller for Intel motherboards. If I chose a non-Intel platform, I would have to take a different controller and give up an entire PCI slot for it.

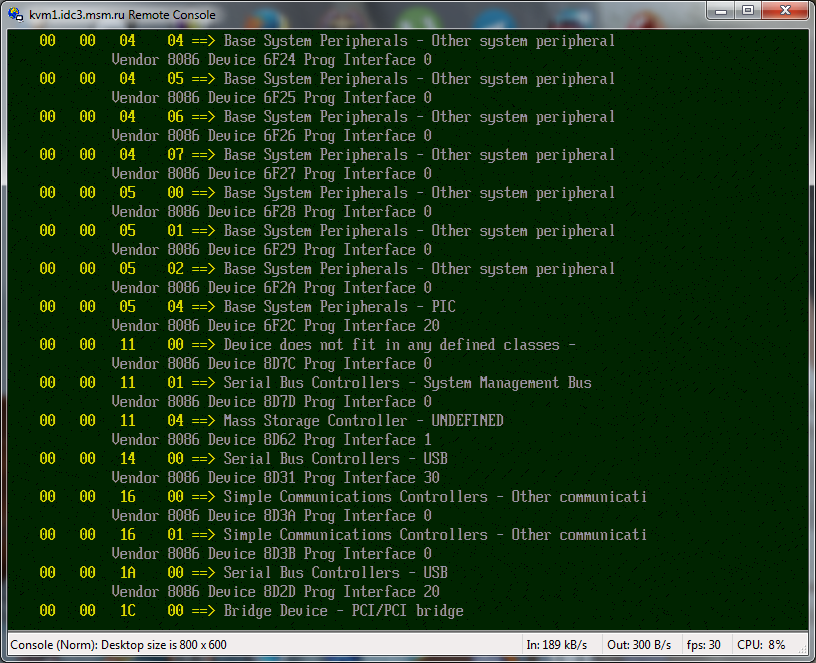

I have no idea what this is: either something encryption-related or something related to the RAID card

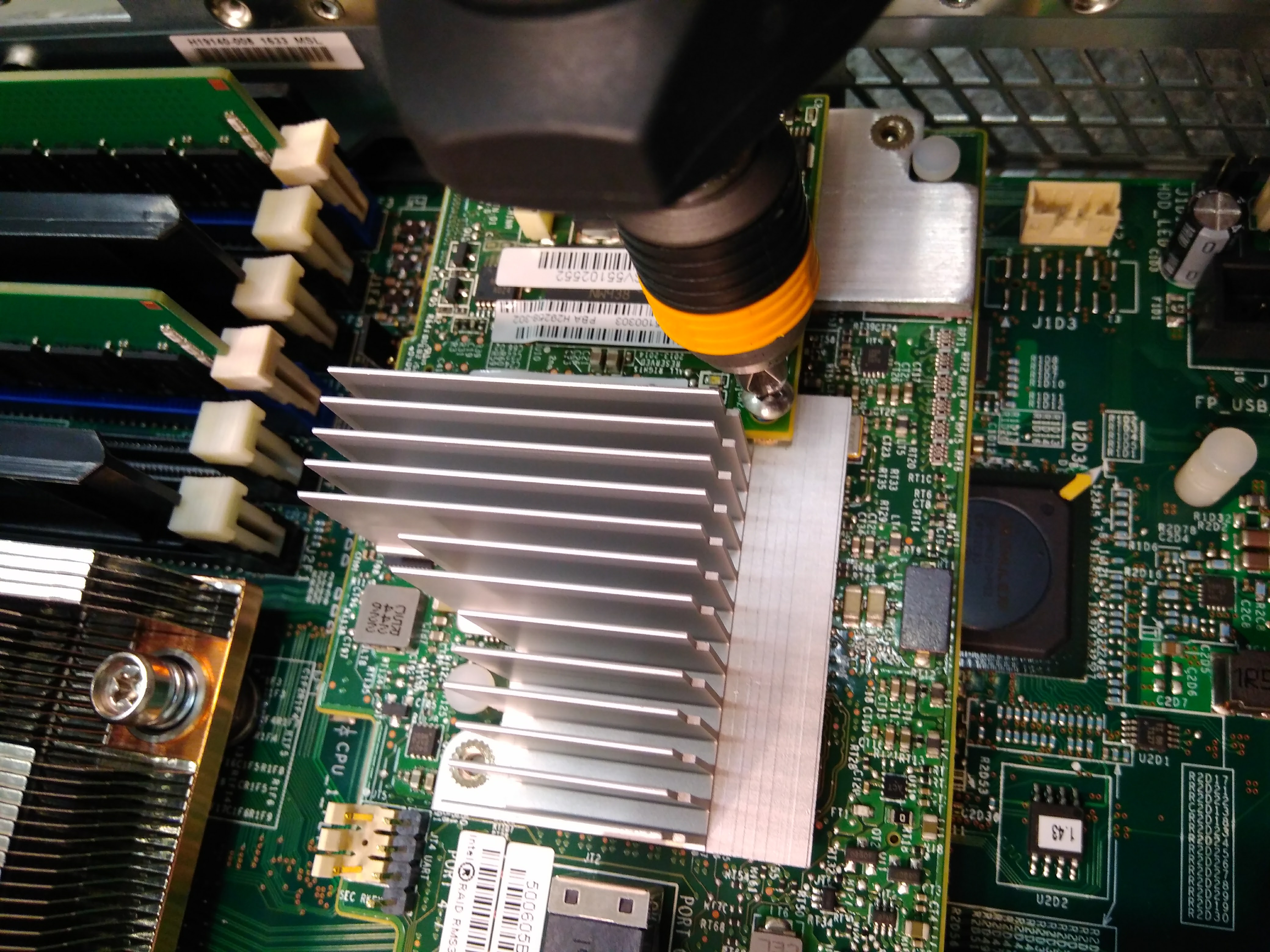

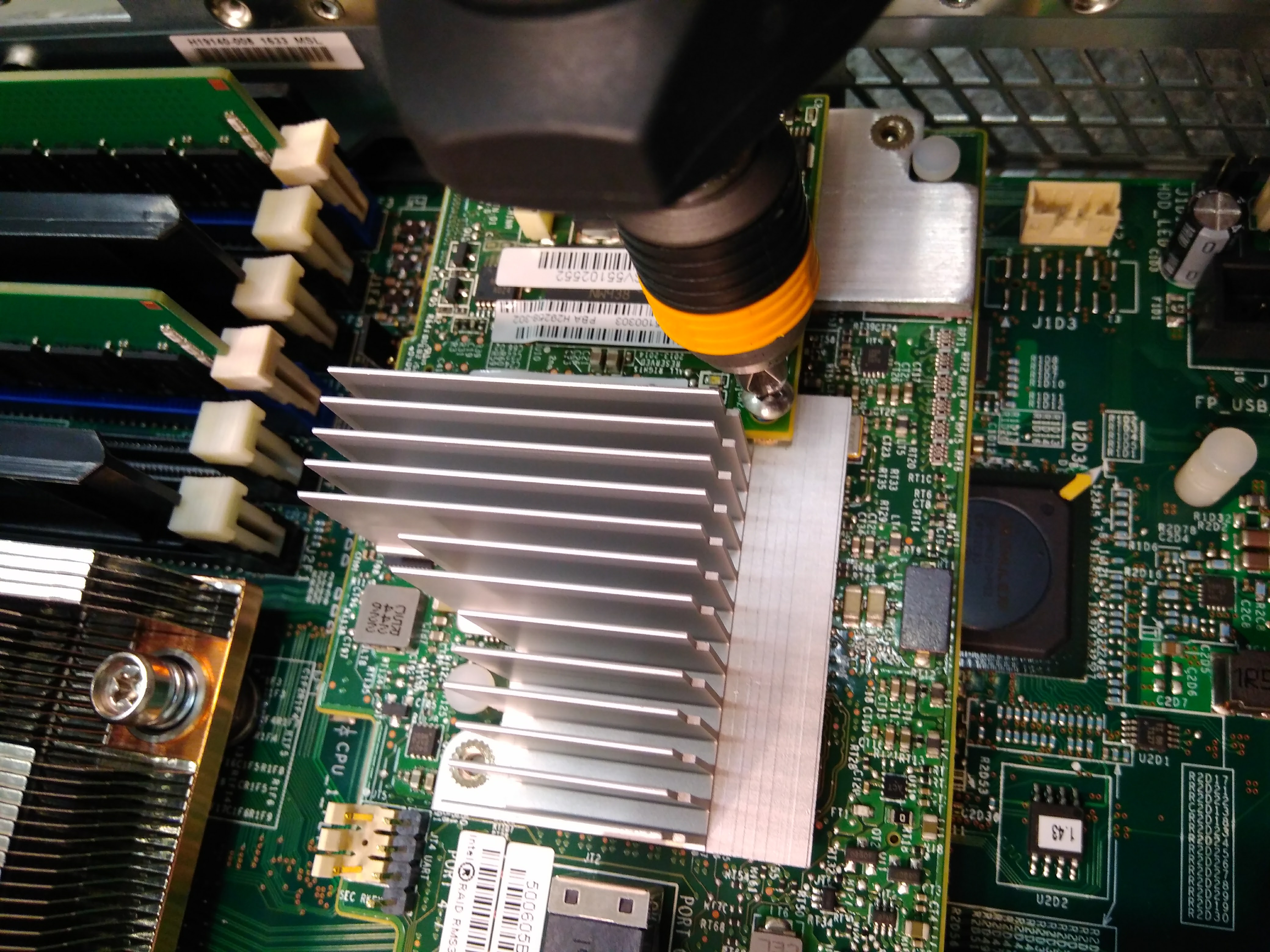

Preparing the RAID card for installation

Installing the RAID card

How the RAID controller appears in the BIOS

I was going to buy Western Digital, because I have had a very positive experience with their HDDs in home computers. But Vise said they could only arrange a replacement within a week (with or without warranty). Instead they offered HGST, for which they said they could arrange a replacement within 24 hours.

I looked online at what people write about HGST reliability; the reviews seemed fine, so I agreed to this manufacturer.

Next, capacity. 2 TB is what I need, fine. And what does more cost? +1 TB is only $70 more, and I would never forgive myself for skimping on capacity if I suddenly needed more space later. And so on up the line: the capacity grows a bit and the price grows a bit. My budget limit fell between 6 TB and 8 TB.

Final choice: 2 × 6 TB HGST HDD.

2 × HGST 6 TB HDDs

Installing part of the RAID controller for the HDD cage

Connecting the HDD cage to the RMS3CC040 RAID card

First option: just install one 200 GB SSD. Then I learned that the larger an SSD is, the more rewrite endurance it has (all else being equal), and the faster this rewriting can be performed.

For example, I have 200 GB of data and a choice between a 240 GB and a 1000 GB drive. All else being equal, the larger drive will have about 4 times the rewrite endurance.
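The reason is that endurance ratings are relative to capacity: rated total writes are capacity × DWPD × days in the warranty period. The sketch below assumes a hypothetical rating of 1 DWPD for both drives and a hypothetical workload that rewrites the 200 GB of data once a day; neither figure comes from a specific product.

```python
def rated_endurance_tb(capacity_gb: float, dwpd: float, years: int = 5) -> float:
    """Total writes (TB) a drive is rated for: capacity x DWPD x days."""
    return capacity_gb * dwpd * 365 * years / 1000


def years_of_life(capacity_gb: float, dwpd: float, daily_writes_gb: float,
                  rated_years: int = 5) -> float:
    """How long the rated endurance lasts at a constant daily write volume."""
    total_gb = rated_endurance_tb(capacity_gb, dwpd, rated_years) * 1000
    return total_gb / (daily_writes_gb * 365)


DWPD = 1            # hypothetical rating, identical for both drives
DAILY_WRITES = 200  # hypothetical: rewrite the 200 GB of data once a day

for capacity in (240, 1000):
    print(f"{capacity} GB: {rated_endurance_tb(capacity, DWPD):.0f} TB rated, "
          f"~{years_of_life(capacity, DWPD, DAILY_WRITES):.1f} years at "
          f"{DAILY_WRITES} GB/day")
```

The same workload spread over a drive about 4.2 times larger lasts about 4.2 times longer, which is the effect described above.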

Experts will forgive me, but to someone making a decision this is not at all obvious.

Next, I realized I should use RAID1, which means 2 such SSDs. True, with backups made at least every hour, losing a single SSD would not be a disaster. But it holds the OS, all the settings and the live database, and restoring them would urgently take a lot of time and effort.

An SSD that can withstand 2 TB of rewriting per day is very expensive, and I also need some headroom on this metric.

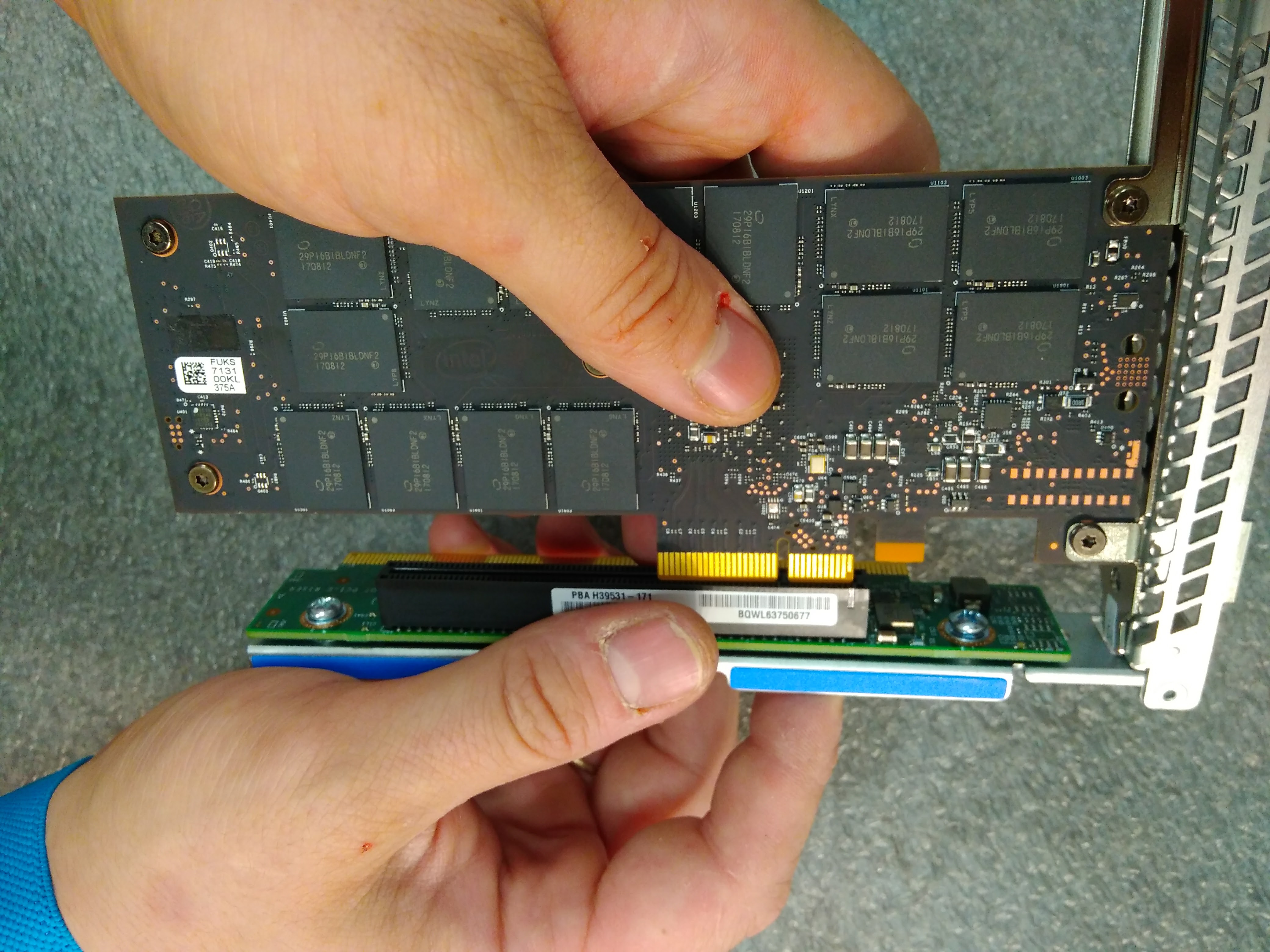

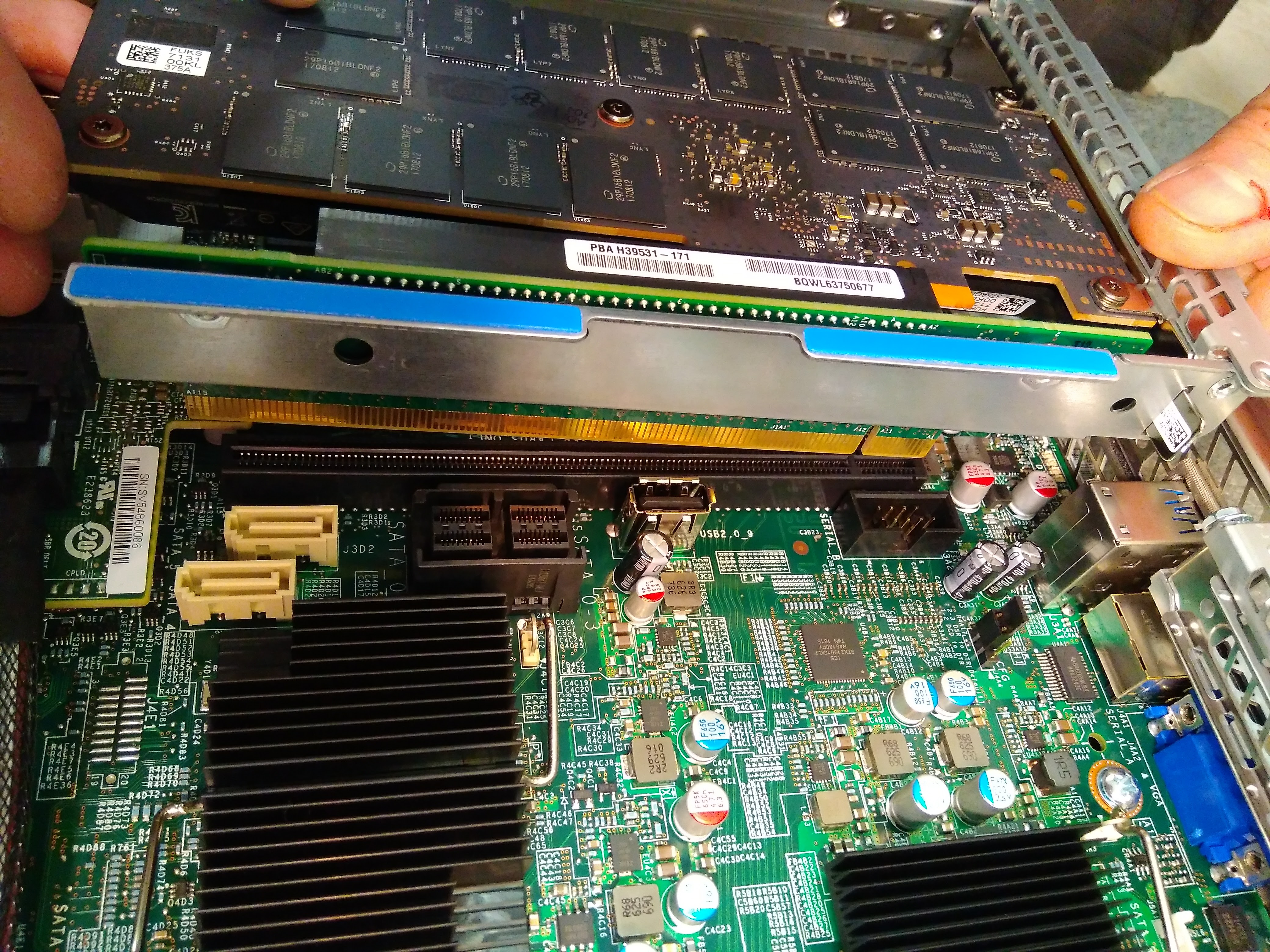

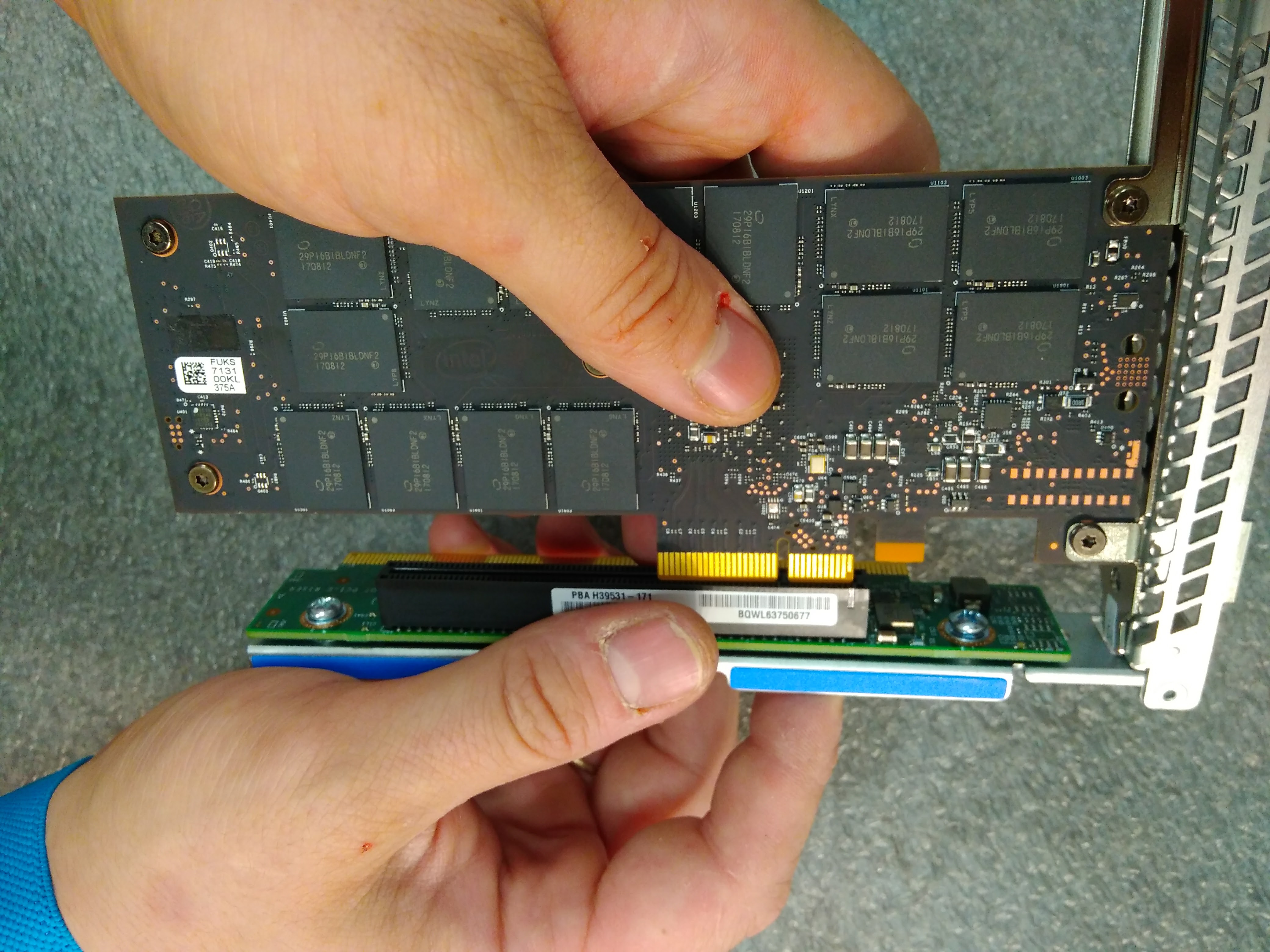

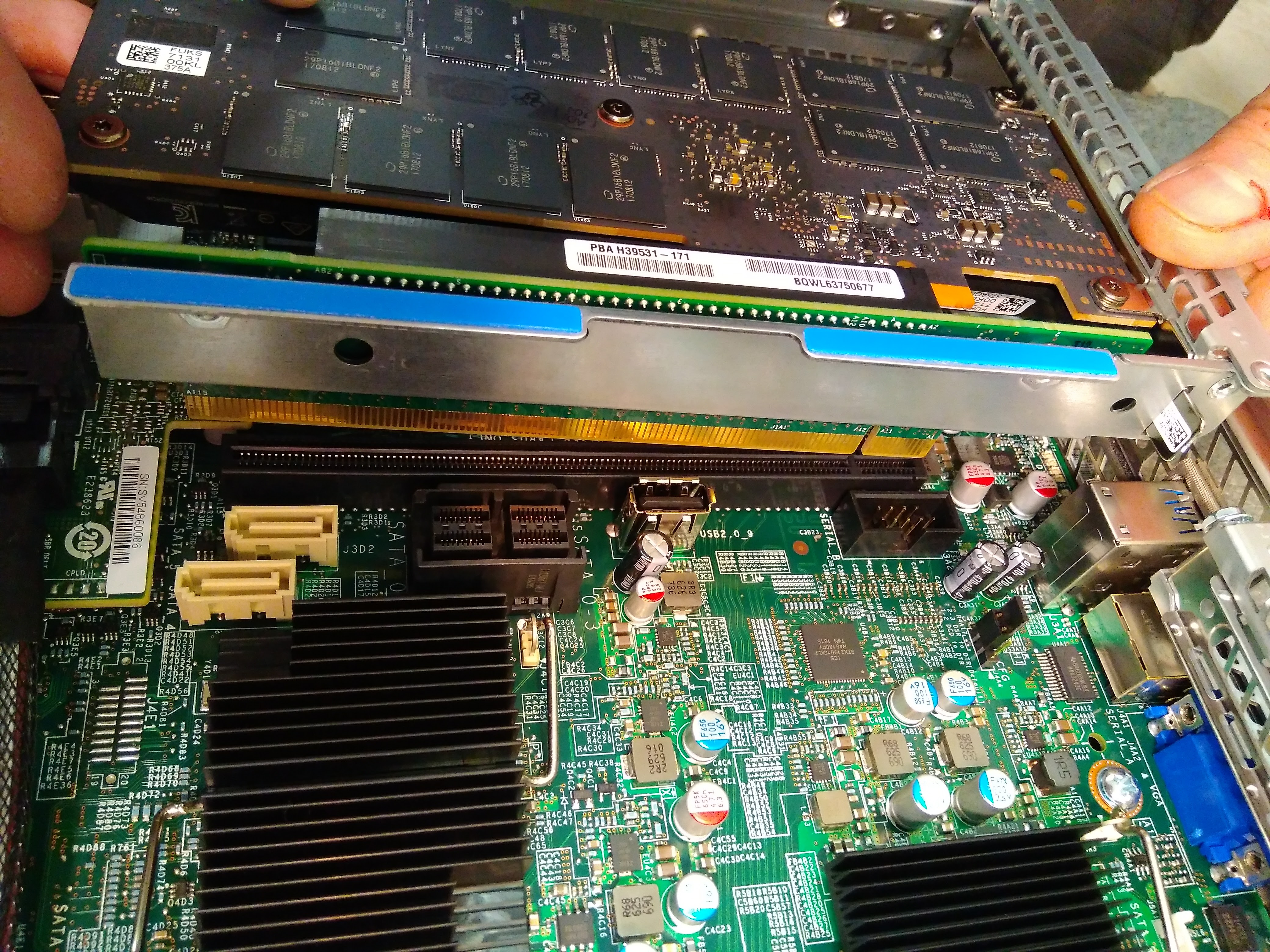

Next, I learned about NVMe. Simply put, it is an SSD connected not in the usual way but through a PCIe slot.

The data path is wider and faster, which matters for my task. The question immediately arose: can these drives be combined into a RAID?

It turns out you can, but such RAID controllers were not on sale; there were only prototypes at an unknown price.

So at the time of purchase this option was unacceptable.

Another factor: say I buy 2 expensive SSDs and combine them into a RAID. Their rewrite endurance is consumed at exactly the same rate. So what do I get? That they fail at the same time?

Then I found out that SSDs most often fail not because their rewrite endurance runs out but because the SSD's controller fails. For that case, RAID does help.
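The "same rate" argument follows from how RAID1 works: every write is mirrored, so each member absorbs the full write volume. A toy model makes this concrete; the `Ssd` and `Raid1` classes, the drive rating (400 GB at 10 DWPD over 5 years) and the 2 TB/day workload are all hypothetical, and the model ignores write amplification and controller failures.

```python
from dataclasses import dataclass


@dataclass
class Ssd:
    name: str
    endurance_gb: float      # total writes the drive is rated for
    written_gb: float = 0.0  # writes absorbed so far

    def write(self, gb: float) -> None:
        self.written_gb += gb

    @property
    def wear(self) -> float:
        """Fraction of the rated endurance already consumed."""
        return self.written_gb / self.endurance_gb


@dataclass
class Raid1:
    members: list[Ssd]

    def write(self, gb: float) -> None:
        # RAID1 mirrors every write, so each member absorbs the full volume.
        for disk in self.members:
            disk.write(gb)


# Hypothetical drives: 400 GB rated for 10 drive writes per day over 5 years.
RATED_GB = 400 * 10 * 365 * 5
array = Raid1([Ssd("ssd0", RATED_GB), Ssd("ssd1", RATED_GB)])

for _ in range(365):
    array.write(2000)  # hypothetical 2 TB of writes per day

print([f"{disk.wear:.1%}" for disk in array.members])
```

Both members wear out in lockstep, so mirroring protects against a sudden controller failure rather than against endurance running out.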

So the choice is between a PCIe SSD (NVMe) and a RAID1 of SSDs, and given the considerations above, the PCIe SSD is definitely better for me. I simply don't have another $2,000 for a backup SSD, and even if I did, I would need a 2U system and another $400 drive cage. Or I could buy SSDs that are 10 times worse.

And yes, I am prepared to trust a well-known brand (Intel in this case), in the hope that the device is reliable enough to last its declared lifetime and endurance without failure.

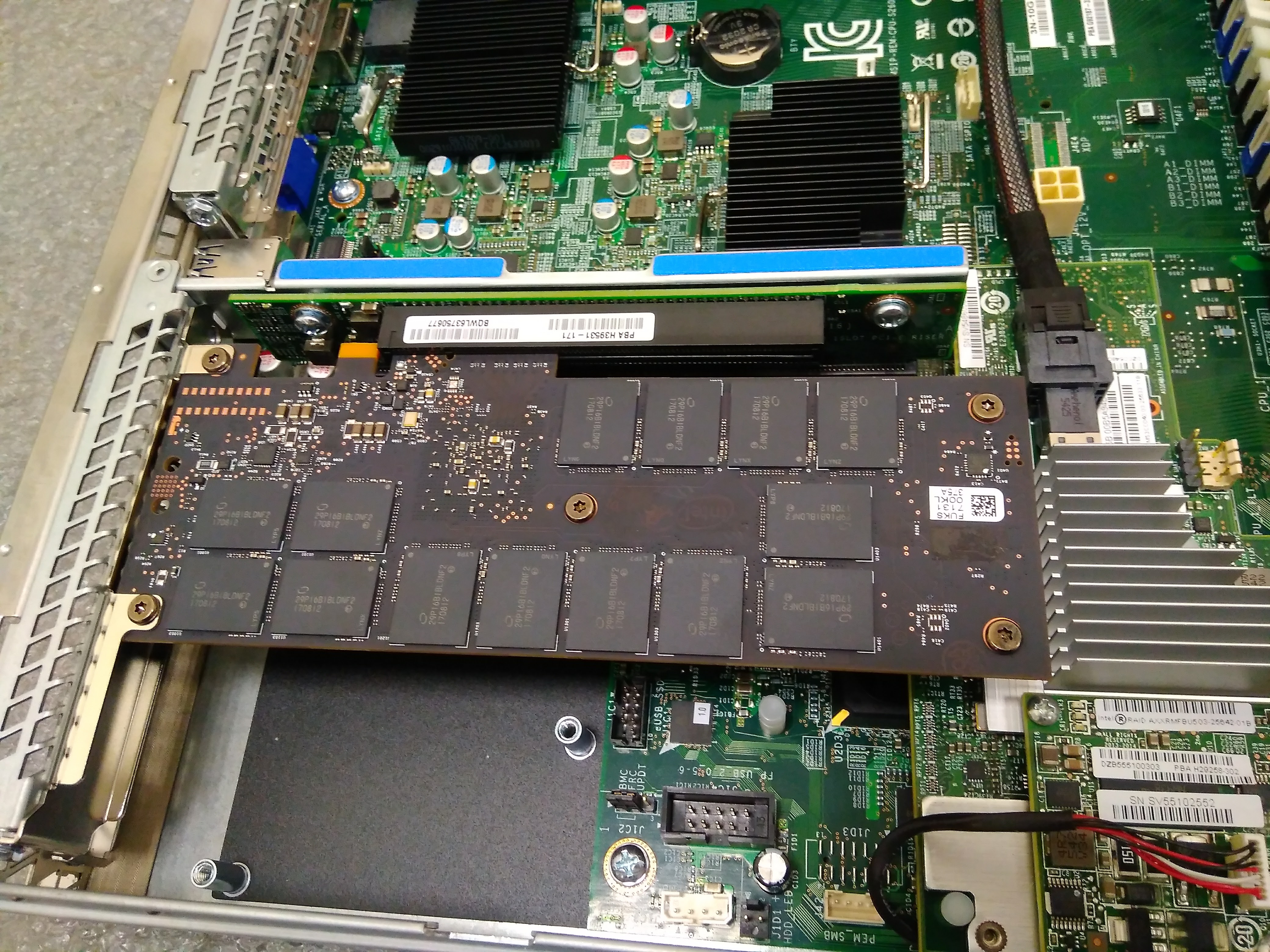

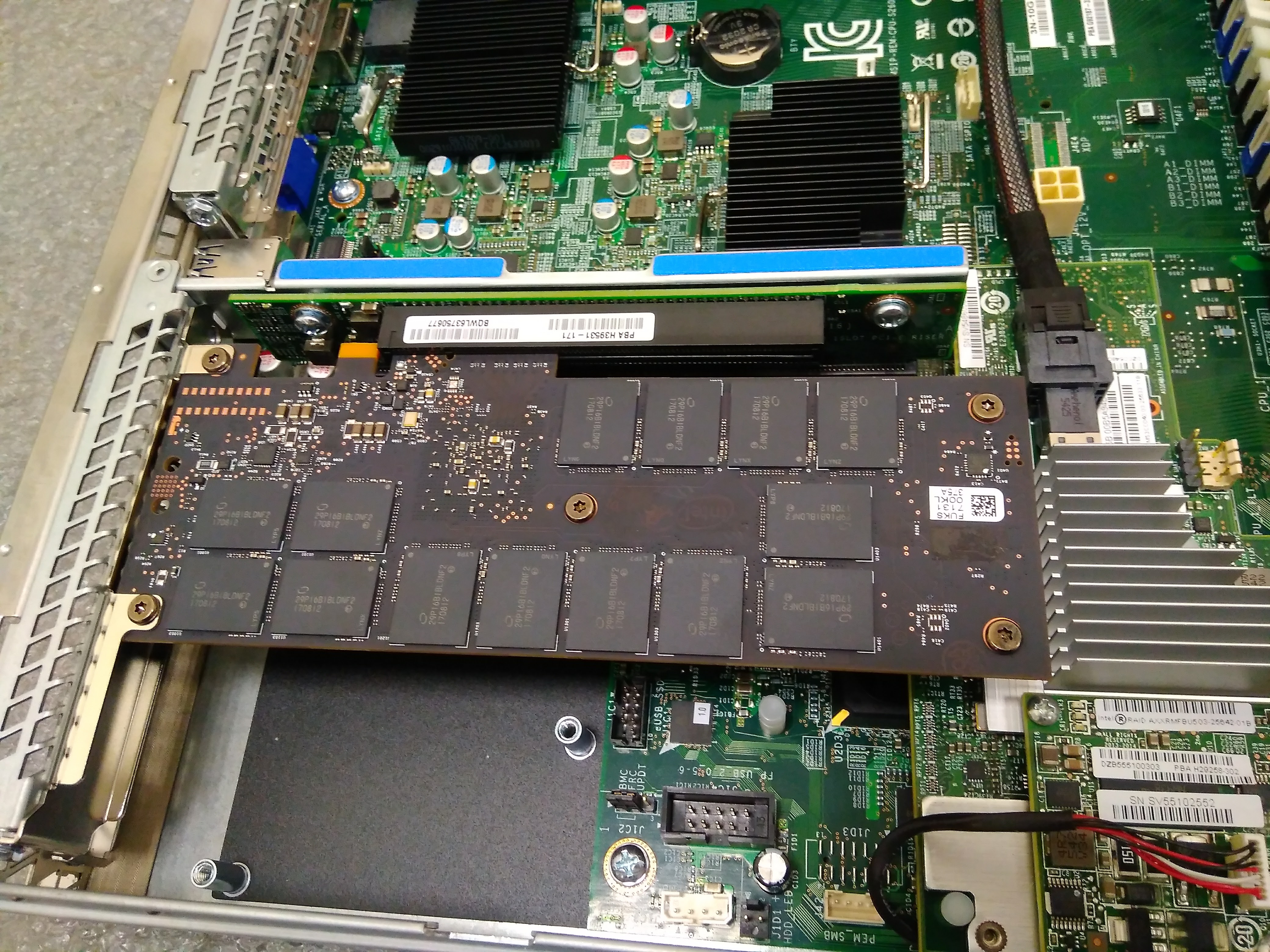

NVMe P4800X installed in a riser

In an SSD, I care about write IOPS, the latency of a single operation, capacity and rewrite endurance.

After reviewing the specifications of thousands of SSDs, I believe that in my case the declared endurance (number of overwrites) matters first and foremost.

Almost all SSDs can be grouped by how many times per day their full capacity can be rewritten over 5 years (DWPD, drive writes per day). Groups: 0.3, 1, 3, 10, 30, 100.

There is almost always a direct correspondence between rewrite endurance and write IOPS. A separate class of SSDs offers incredible read IOPS but relatively weak writes; those are of no interest to me for this server.

Manufacturers: Intel, HGST, Huawei, HP, OCZ. The variety of SSDs is incredible. Honestly, I have little idea how people objectively choose the SSD they need: there are so many, they are so different, and the price range is so huge.

In my view, Intel has organized its product line best: they split their SSDs into groups in a way that let me form an opinion about which device I need and which I don't.

Three lines of NVMe PCIe SSDs: P3500, P3600, P3700. For the 400 GB models:

| Model | Write IOPS | Price | Drive writes per day |
|---|---|---|---|
| P3700 | 75,000 | $1,000 | 10 |
| P3600 | 30,000 | $650 | 3 |
| P3500 | 23,000 | $600 | 0.3 |

Obviously, the P3500 drops out immediately: for $50 more I get a tenfold increase in the parameter that matters to me.

Am I willing to pay $350 more for ~2.5 times the IOPS and over 3 times the rewrite endurance? Of course I am.

So I was going to buy the 400 GB P3700, but a couple of months before the purchase the P4800X went on sale.

It costs almost twice as much as the P3700 and has slightly less capacity (375 GB), but delivers 500,000 write IOPS and an endurance of 30 drive writes per day.

In other words, for about $800 more I get nearly 7 times the write IOPS (500,000 vs 75,000) and 3 times the rewrite endurance.
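The four candidates can be compared on the two metrics that drove the decision: how many gigabytes per day each can absorb (capacity × DWPD) and what each thousand write IOPS costs. The prices, IOPS and DWPD are the article's; the P4800X price of $1,800 is an assumption derived from "P3700 plus about $800", its 375 GB capacity comes from the product link below, and the 2 TB/day threshold is the upper end of the article's estimate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Drive:
    model: str
    capacity_gb: int
    write_iops: int
    price_usd: int
    dwpd: float

    def daily_write_budget_gb(self) -> float:
        """Gigabytes per day the drive is rated to absorb."""
        return self.capacity_gb * self.dwpd

    def usd_per_1k_iops(self) -> float:
        return self.price_usd / (self.write_iops / 1000)


DRIVES = [
    Drive("P3500", 400, 23_000, 600, 0.3),
    Drive("P3600", 400, 30_000, 650, 3),
    Drive("P3700", 400, 75_000, 1000, 10),
    Drive("P4800X", 375, 500_000, 1800, 30),  # price assumed: P3700 + $800
]

REQUIRED_GB_PER_DAY = 2000  # upper end of the article's 1-2 TB/day estimate

for d in DRIVES:
    budget = d.daily_write_budget_gb()
    verdict = "ok" if budget >= REQUIRED_GB_PER_DAY else "too weak"
    print(f"{d.model:7} {budget:8.0f} GB/day  "
          f"${d.usd_per_1k_iops():6.2f} per 1k IOPS  {verdict}")
```

Only the P3700 and P4800X clear the 2 TB/day bar, and the P4800X is by far the cheapest per unit of write IOPS.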

Frankly, in terms of IOPS it is simply perfect, and although finding an extra $800 was not easy, I decided to buy this SSD.

A bonus is faster execution of each individual write command.

Final choice: www.intel.com/content/www/us/en/products/memory-storage/solid-state-drives/data-center-ssds/optane-dc-p4800x-series/p4800x-375gb-aic-20nm.html

Installing the riser with the NVMe P4800X

NVMe P4800X installed

How the NVMe drive appears in the BIOS

Separately, I want to note that SSDs come in SLC, MLC, TLC and QLC types. The difference is simple: several bits of data are stored in one physical cell. SLC stores one bit, MLC two, TLC three and QLC four. From left to right they go from expensive to cheap, at the cost of write speed, read speed and rewrite endurance.
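The trade-off can be quantified: each extra bit per cell cuts the number of cells needed, but doubles the number of charge levels the controller must tell apart, which narrows the margins between levels. That is the general reason denser cells are cheaper yet slower and less durable. A small illustration (the function names are illustrative):

```python
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def charge_levels(bits_per_cell: int) -> int:
    """Distinct charge levels a cell must reliably hold to store n bits."""
    return 2 ** bits_per_cell


def cells_needed(capacity_bits: int, bits_per_cell: int) -> int:
    """Physical cells required for a given capacity (ceiling division)."""
    return -(-capacity_bits // bits_per_cell)


GIGABIT = 10**9
for name, bits in CELL_TYPES.items():
    print(f"{name}: {charge_levels(bits):2d} levels per cell, "
          f"{cells_needed(GIGABIT, bits):,} cells per gigabit")
```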

SLC is the most reliable, the fastest and... monstrously expensive. For example, the Micron RealSSD P320h is a 350 GB beast for "only" $5,000, with a claimed 350,000 write IOPS and practically unlimited rewrite endurance.

MLC varies a lot. The factory makes a batch of memory chips; the ones that turn out well go into server SSDs, and the less successful ones go into consumer SSDs.

TLC drives used to fail frankly quickly; now they seem to make good ones, and manufacturers are trying to create the impression that TLC is good (so that gullible customers buy up the old stock).

QLC: manufacturers have tried, but apparently nobody has yet managed to produce a commercial version.

Intel recently released 3D XPoint, a new type of memory. To me it looks like a layer cake of SSD chips.

For servers, choosing a motherboard is completely different from home computers. For a home PC the size of the case hardly matters, but for the server everything has to fit into 1U (that is 4.3 centimeters!).

This immediately calls for a different approach to choosing a motherboard: what is sold is not motherboards but platforms, i.e. a motherboard plus a chassis. Anyone selling only bare motherboards immediately loses out to the competition.

Platforms for this processor come from various manufacturers. Each comes in minor variants, and on the vendor sites you can only link to a specific variant (more on the variant I picked a bit later):

HP and Dell I will not consider, because of the monstrous markup for no apparent reason.

- Supermicro
- Asus
- Intel
- Intel (a second, separate option)

Each of these four is the cheapest option from its manufacturer that meets all my requirements, and their prices are about the same. The R1304WFTYS drops out as soon as I see its 1100 W power supply (why that matters comes later).

As for ASUS, my thinking was that they entered the server motherboard market only recently, so it is unclear what risks I would be taking.

As for Supermicro, server platforms are practically all they make, and they have been doing it for a very long time. But... the RAID controller. Either I take the Intel platform, or I need a different controller that occupies a PCI slot.

So I chose the Intel R1304WTTGSR platform, though I note it is 10% more expensive than a comparable Supermicro platform.

Variant: with 10 Gbit Ethernet. The price difference is $100, and if I suddenly need a high-bandwidth link, I would not want to open the server to install anything. And I will most likely need a high-bandwidth link for the game's launch.

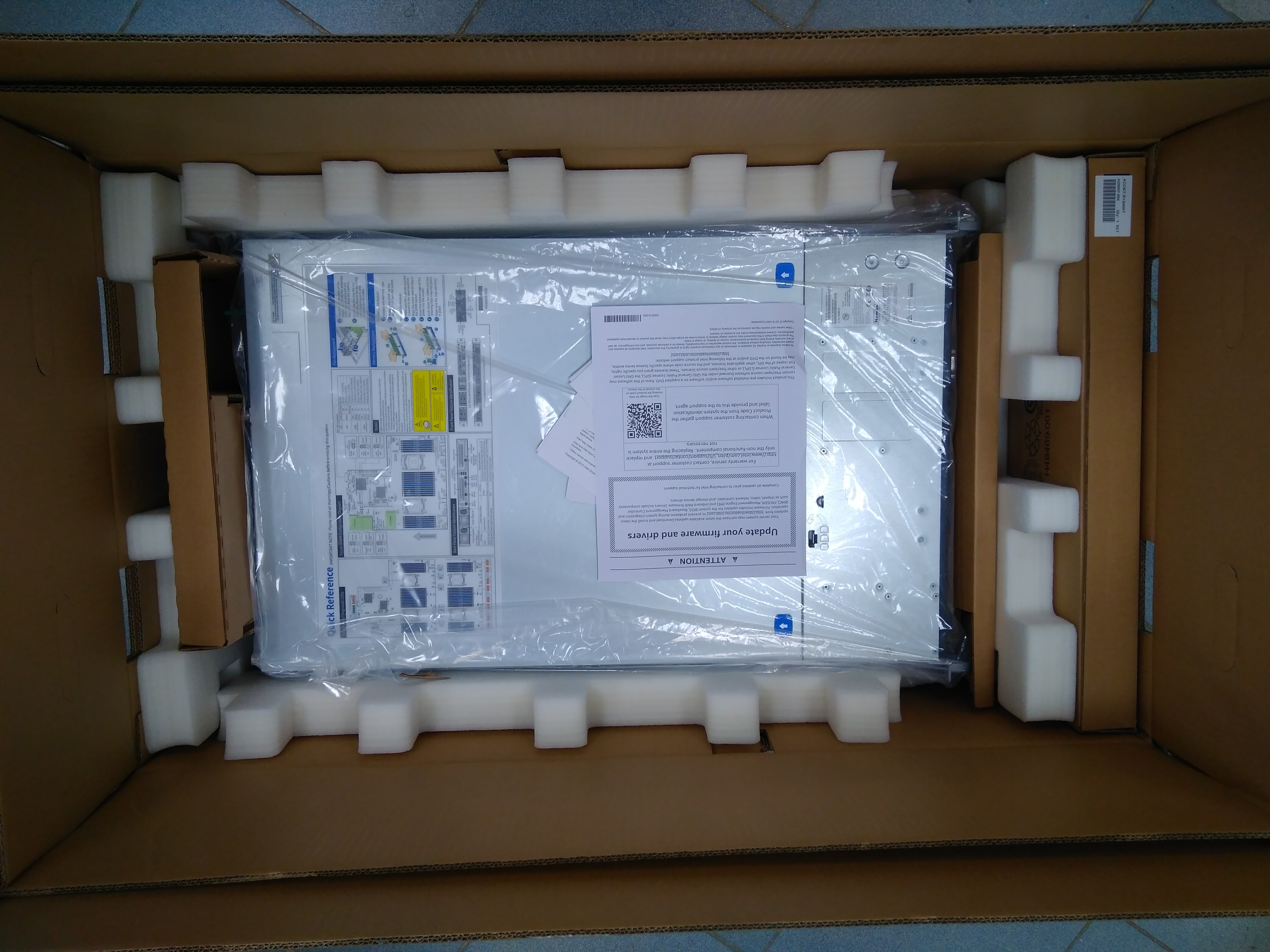

The R1304WTTGSR platform in its box.

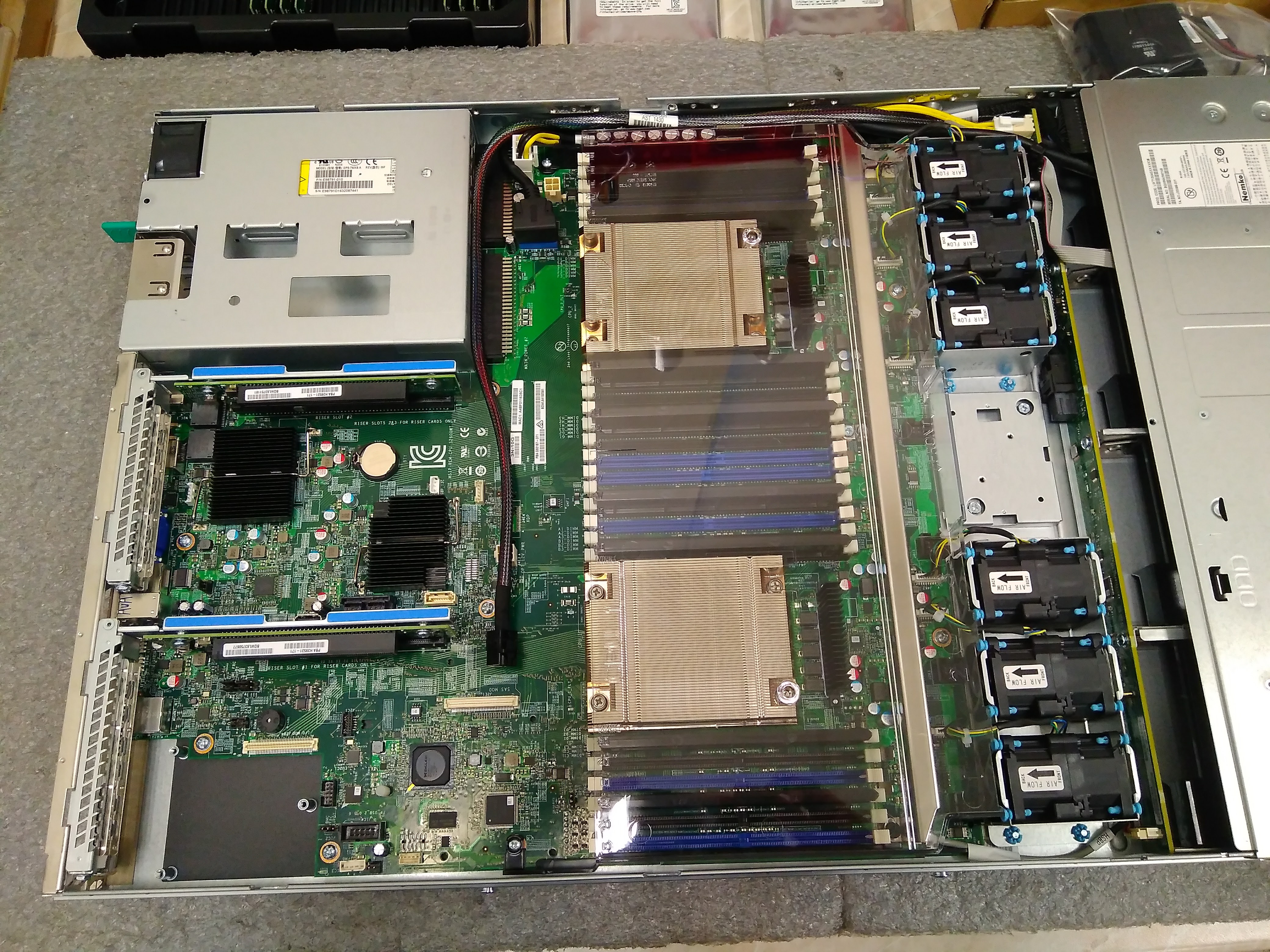

This is what we see when we first open the new R1304WTTGSR platform.

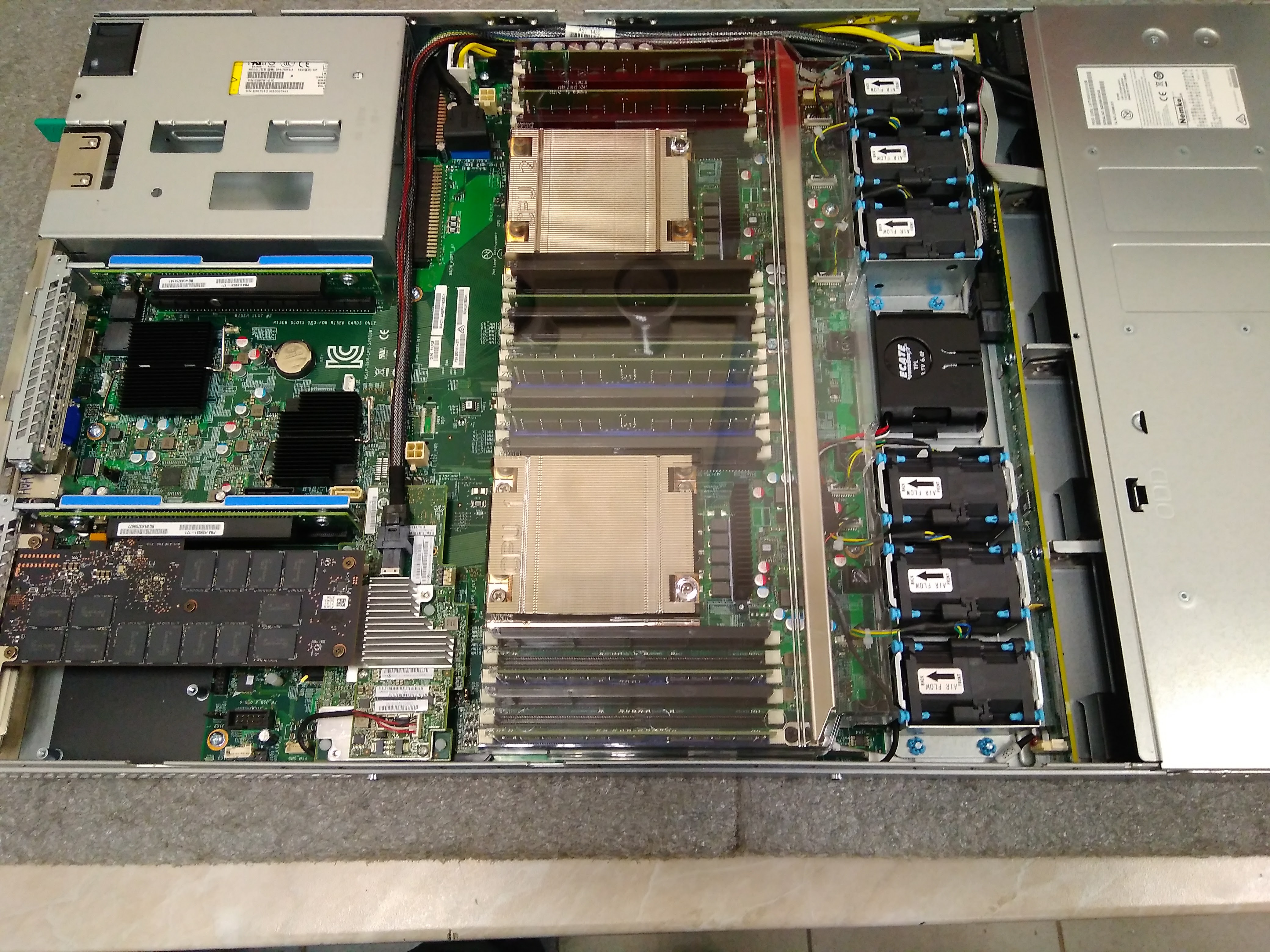

All components are installed; all that remains is to close the lid.

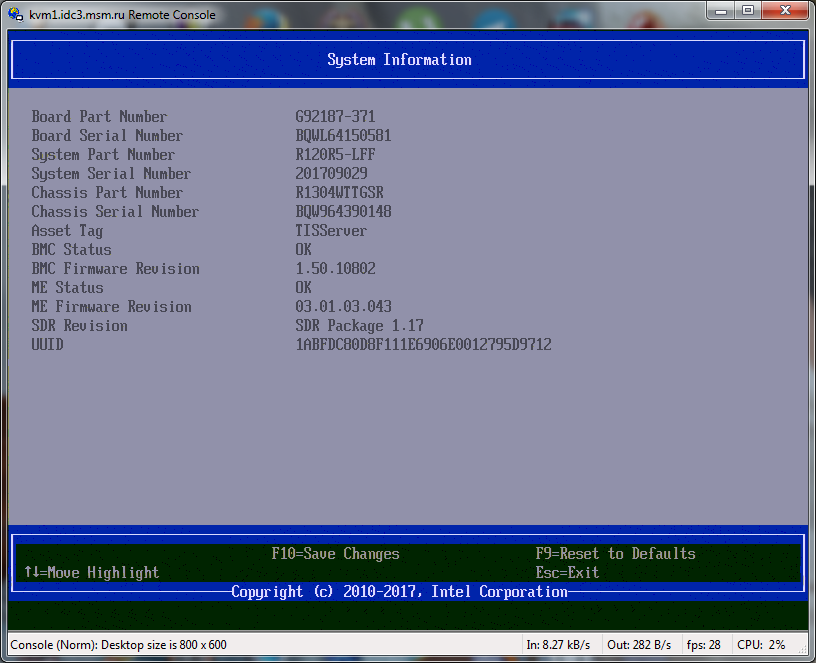

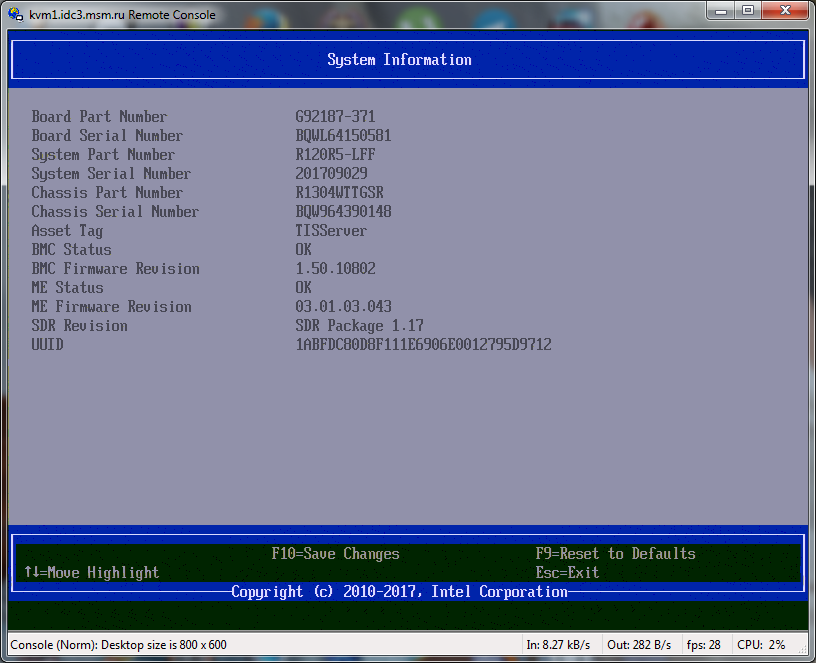

Platform information as shown in the BIOS

Of course, I first turned to hvosting.ua, since I have been working with them for more than 15 years, and the total value of our dealings exceeds the cost of the server in question. But after the well-known events of 2014, hosting a server in Kiev is unacceptable to me for reasons of equipment safety.

hvosting.ua offered me placement in the TTC Teleport data center in Prague. But the discussion stalled on "I want a legally binding document stating that I own the server." Excuse me, but I am no longer a student who trusts everyone completely.

However, if you really need to host something in Ukraine, I recommend hvosting.ua. I had an uptime of ~1,200 days, then a planned move of the server to a neighboring data center, and then another 700 days of uptime. After that I tried to run "apt-get upgrade" without rebooting the server :) It did not work out with Debian: after ~2,000 days without updates there was a dependency I could not resolve myself. Hvosting helped me resolve it.

Overall, in 15 years there was not a single power failure; the network link went down for about 15 minutes every 4 months, and twice it was down for several hours.
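For a sense of scale, that outage history can be turned into an availability figure. A back-of-the-envelope sketch: it assumes 365-day years and, since the text only says "several hours", a hypothetical 3 hours for each of the two long outages.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # ignores leap days


def availability(years: float, downtime_minutes: float) -> float:
    """Fraction of time the link was up over the whole period."""
    return 1 - downtime_minutes / (years * MINUTES_PER_YEAR)


YEARS = 15
short_outages = YEARS * 12 // 4        # one outage every 4 months
downtime = short_outages * 15          # about 15 minutes each
downtime += 2 * 3 * 60                 # two long outages; 3 h each is an assumption

print(short_outages, "short outages,", downtime, "minutes down")
print(f"availability ~ {availability(YEARS, downtime):.3%}")
```

Under these assumptions the link was up roughly 99.99% of the time.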

VK authorization via OAuth breaking was especially "fun" (VK is blocked there).

That left buying and hosting the server in Russia. Why buy and host in Russia? So that I would be legally protected. There was a pleasant moment at the very start of the search for the best supplier: no recommendations, no biases, everyone equal. I remember starting with a banal search for "datacenter in Russia" (later I found out that in Russia a data center is usually called a ЦОД) and "buy a server in Russia".

I think it is best to tell this in chronological order.

12/05/2016

It started with a very constructive conversation and an interesting offer. I was exploring a RAID1 NVMe option and asked: can they set up RAID1 NVMe on the Intel platform?

To this question I unexpectedly received a rude refusal to answer (by the way, a simple "no" would have satisfied me; I genuinely did not know much about the matter). After that I did not want to deal with them.

They were the first place where I saw the P4800X on sale, so I decided to ask them about buying it.

The conversation ended with them saying that the deal was impossible without my personal presence in Moscow.

12/05/2016:

Their website claims 12 years of experience, along with other impressive figures. DNS whois shows that the domain was registered recently (3 months before my review). So I immediately asked them: "why the discrepancy?" It turned out there had been another company, with online reviews such as "paid long ago, no server and no money." One could forgive specialists who set up a new company after working under a bad director. Unfortunately, what they wrote did not match the DNS history. I decided the risks were unacceptable, although they offered an interesting price.

12/08/2016:

These folks sell used equipment. At that point I was still considering such options. Later I dropped the idea, because there is no used DDR4, and used SSDs and HDDs are better avoided anyway. So it turned out they had nothing to offer me.

12/08/2016

A regional equipment supplier. In principle a good option, since they are nearby. At the time I still thought there might be a decent data center in Krasnodar, but no: the decent ones, if any, are packed to the brim. Paradigm quoted inflated prices and did not answer my naive questions.

12/12/2016:

Here is a link that still works: server.trinitygroup.ru/expert/25736

As far as I remember, the price put me off. Honestly, even now I look at it and do not understand where such a high price comes from.

12/12/2016

They were recommended by the tech.ru data center, which apparently works with them itself. Their representative listened to my whole wishlist and picked exactly what matched it at the time, at a great price. Then I started digging into DNS whois and online reviews.

DNS whois matched what they said in our correspondence. The investigation turned up many interesting details.

I found out how and by whom the company was founded and how it changed over time, how they redesigned their site, and where they asked people to leave feedback on it.

I found company employees. There were both positive and negative reviews. All of this indicated that the company is long-established and consistently active.

I also checked their Intel certificate: outdated, but genuine. I checked another random certificate: also genuine. I was very pleased with that.

Their site also uses HTTPS, another point in their favor.

From the very beginning it looked like a very good option.

12/12/2016

The first contact was positive. But the price was unbelievably high. A brutal example of greed is still up on their site to this day: choose a 400 GB NVMe drive with 75k IOPS, which corresponds to the Intel P3700. Everyone else sells this piece of hardware for about 60,000, and they push it for 90,000.

Well, maybe their new site just has a bug?.. But no, it is not a bug. Here it is on their main site with the old design:

stss.ru/products/servers/T-series/TX217.4-004LH.html?config=

There, in the corresponding place, we select the same piece of hardware and also get +90,000 added to the cost.

A 50% markup is certainly very profitable for them, but sorry, I'll go elsewhere.
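The 50% figure is just the quoted price relative to the typical market price. A trivial helper (illustrative name) using the two numbers from the text, both in the same currency:

```python
def markup(price: float, reference_price: float) -> float:
    """Relative markup of `price` over a typical market `reference_price`."""
    if reference_price <= 0:
        raise ValueError("reference price must be positive")
    return price / reference_price - 1


market, quoted = 60_000, 90_000  # figures from the text, same currency
print(f"overpayment {quoted - market:,}, markup {markup(quoted, market):.0%}")
# overpayment 30,000, markup 50%
```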

12/12/2016

Overall, this was a very decent option, and the DNS whois and reviews looked good.

But they never answered the question "can you deliver the server to the data center without me being present?"

Otherwise I would have seriously weighed Vise against Desten. Had their support been more patient, I might eventually have bought the server from them.

12/13/2016

They simply sent me a configuration and a price; I was horrified and did not reply. Looking at it now, the price is reasonable: I was horrified back then because I did not understand what "DWPD" meant. Apparently I also had some phone conversation with them.

12/14/2016

These folks tried to sell me Dell equipment. Again I see a brand premium with no clear justification. Their employee explained that the premium pays for prompt support. But I don't get it: the server sits in a rack in a Tier III data center. Nobody is going to kick it. Short of a factory defect, nothing can happen to it there. Plus, they said that, unlike Vise, they could not install the server without me present, so there was nothing more to discuss.

01/10/2017

Now their site has a basic server configurator; back then it was just a business-card site. It said they would consider installment plans, so I asked about that. They said installments for such small amounts did not interest them. Their servers are DELL/HP, which IMHO means overpaying for no clear reason.

Let me explain my dissatisfaction with these companies.

www.regard.ru/catalog/?query=2620+v4

Can someone explain to me the difference between "Intel Xeon E5-2620 v4 OEM" and "Dell Xeon E5-2620 v4 (338-BJEU)"? All I see is a twofold price difference! The processor dies themselves are made by Intel. I'm willing to believe they use different solder; fine, +$50 I could understand. But explain to me, slow as I am, why DELL charges +$500 in this example?

Apparently some reminder fired for them, and they called me a week before I finalized the configuration with Vise, trying to win me over as a client at the last moment. But... their price was higher than Vise's.

Then there are parcel forwarding, ComputerUniverse, ogg and other sites. Having everything delivered to me in the provinces and then somehow getting it to Moscow looks awful. Not only does delivery eat up all the savings from cutting out middlemen, it also eats an incredible amount of time. And most importantly, there is a risk of damage (something getting knocked in transit, for example).

Delivery to Moscow: I would need a room somewhere to assemble the server, plus my personal presence, plus transport between the pickup point, the assembly site and the data center. Plus the risk of messing up the assembly (if I wire something wrong and have no spare cables, there will be no way to fix it).

I noticed that prices for each component vary between stores, so you could run around Moscow buying each part cheaply in a different store. But again: the risk of a botched build, personal presence, an assembly site and transport around the city. So where is the profit in all this?

In the provinces there are, of course, shops willing to deliver anything at an affordable price, if slowly. However, it turned out they think differently. I explain: this expensive SSD is the one I need. They reply: we don't have it, take another one with similar characteristics. And by "similar" they mean only the capacity.

And again: once they deliver, the server still has to be assembled (with the risk of a botched build), then delivered, with my personal presence, and so on.

A very interesting option: a man who builds computers to order. He says bluntly: we take a 4% commission, and all additional costs are on you. I really liked this stance, but there is no guarantee he won't vanish once he has the money. What I don't understand is what stopped him from offering a contract option, with everything properly executed legally, for a stated extra cost. That would have been a very attractive offer: here is the base price, here is my 4% markup, here are the delivery costs, here are the legal costs.

I have never talked with these guys, but I spent a lot of time on their site.

I must admit they have the most convenient configurator of any site: convenient not in terms of looks, but in terms of "how do I make sense of all these specs?" I didn't contact them because everything they sell costs about 15% more than elsewhere. They have all kinds of certifications; I concluded for myself that they are the best equipment supplier for municipal bodies in Moscow and the Moscow region. But not for me, primarily because of the markup.

Their configurator is so good that it deserves special attention. Consider this example. Perhaps they should sell it as a service to other companies: a company uploads its prices and stock, and users put together what they need themselves. Well... if Tisk had a configurator like this, that would be great.

At first glance it looks bad because of the design. But this is not a beauty salon.

I also note that, despite its apparent simplicity, it hides a great deal of small, useful functionality. Platform: thanks to this site, it finally dawned on me how server motherboards are chosen (as a platform plus its variants). All the variants are visible at once: which are for SSDs and which for HDDs, which have 10GbE and which 1GbE. It immediately becomes clear that if I want to install HDDs, two of the variants don't suit me at all. And the price difference between 10GbE and 1GbE is about 10%: 10GbE = 1GbE + 10%. That's it: no more thinking and comparing, no asking vendors stupid questions. One look and everything is clear.

Next comes the processor block, which clearly shows which processors fit this platform, each with a bunch of characteristics. At the "I don't understand anything, but I want to" stage, laying out all the characteristics in columns is very useful and sensible. This section helped me a lot to understand which processor characteristics matter for the decision and which don't.

Then comes the memory block, which unexpectedly also helped a lot in figuring out which characteristics to look at. It makes it very clear that you need to take the memory frequency and RDIMM / LRDIMM into account. I wrote about this earlier.

Next, I figured out how to build a RAID1 from NVMe drives. Look here: you can choose a drive cage for NVMe SSDs, and then select NVMe SSDs for that cage. After that, any modern RAID controller will do, as long as it provides 4 PCIe lanes for each NVMe device.
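To make the lane arithmetic concrete, here is a minimal Python sketch. It assumes, as the text does, that every NVMe drive gets its own x4 PCIe link; the function names and the controller lane counts in the example are hypothetical illustrations, not taken from any vendor specification.

```python
# Minimal sketch: PCIe lane budget for NVMe drives, assuming a full x4 link
# per drive as described in the text. Names are illustrative only.
LANES_PER_NVME = 4


def nvme_lanes_needed(num_drives: int, lanes_per_drive: int = LANES_PER_NVME) -> int:
    """Total PCIe lanes needed so that every NVMe drive gets a full link."""
    if num_drives < 0:
        raise ValueError("num_drives must be non-negative")
    return num_drives * lanes_per_drive


def controller_fits(controller_lanes: int, num_drives: int) -> bool:
    """True if a controller exposing `controller_lanes` lanes can give
    every drive its full x4 link (hypothetical check)."""
    return controller_lanes >= nvme_lanes_needed(num_drives)


if __name__ == "__main__":
    # A RAID1 mirror of two NVMe drives needs 2 * 4 = 8 lanes.
    print(nvme_lanes_needed(2))   # 8
    print(controller_fits(8, 2))  # True
    print(controller_fits(4, 2))  # False
```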

That is the most important point, put simply. Slow as I am, I probably spent more than 20 hours understanding those three lines.

And yes: a hardware NVMe RAID1 cannot yet be built in a modern 1U Intel server, because suitable drive cages don't exist.

I spent a lot of time here choosing configurations and comparing prices. The prices are good, but "out of stock" keeps popping up, which makes planning quite hard. I didn't contact the store, because what is there to talk about? Here are the goods, here is the price: pay and take them. But since pickup has to be in person, this option was unacceptable.

I tried to put a server together here, but something was always out of stock, and what I could assemble was not at an attractive price.

Of all these options, Vise was clearly the best for me. Before buying, we agreed on the final configuration. We signed the contract remotely, and after the purchase on paper. On the 4th day after payment, as planned, they delivered the server to tech.ru, and that same evening I was already configuring it. In the BIOS I checked that all the equipment was present and matched the agreement.

Everything went flawlessly, there’s nothing to complain about.

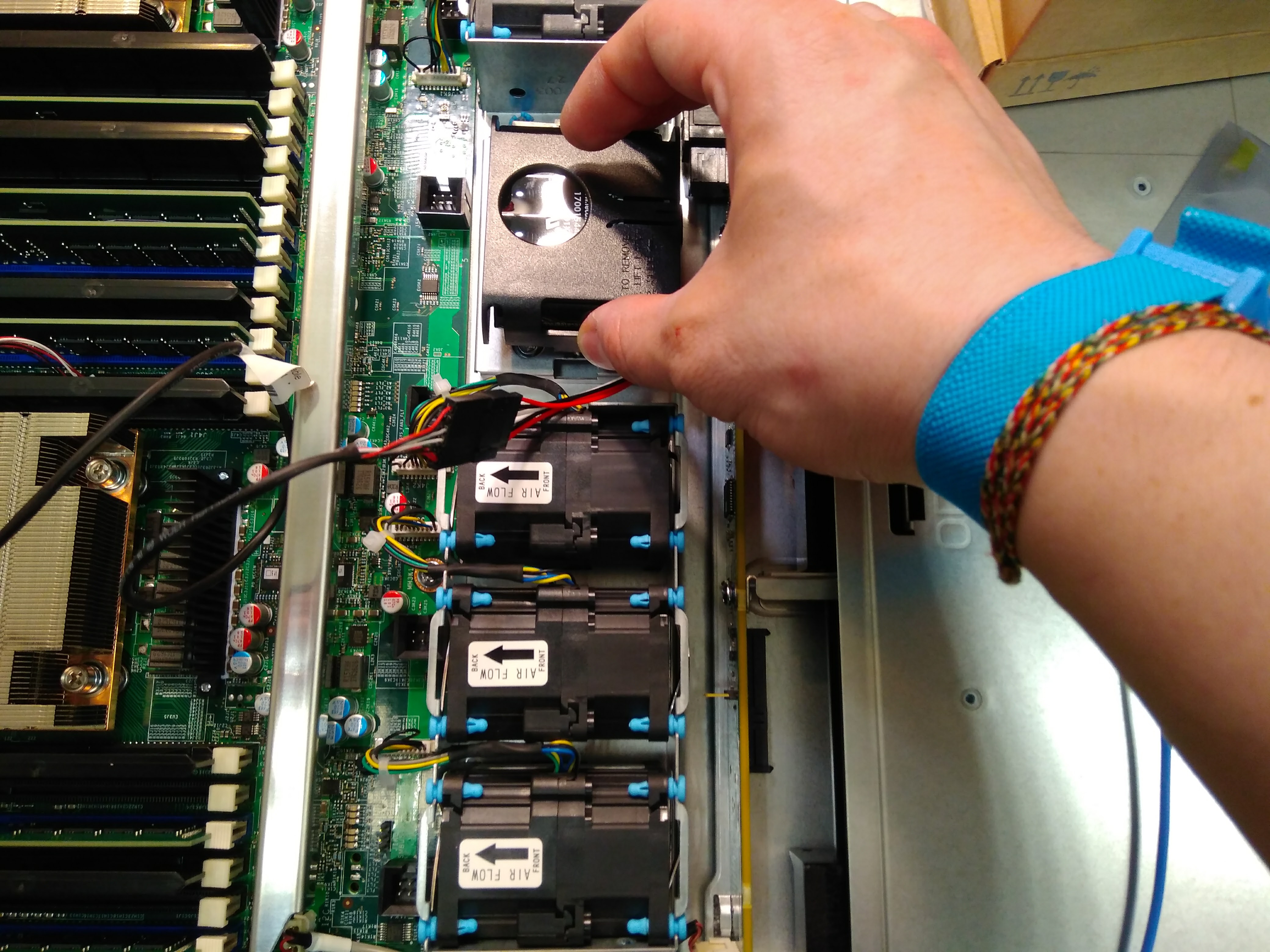

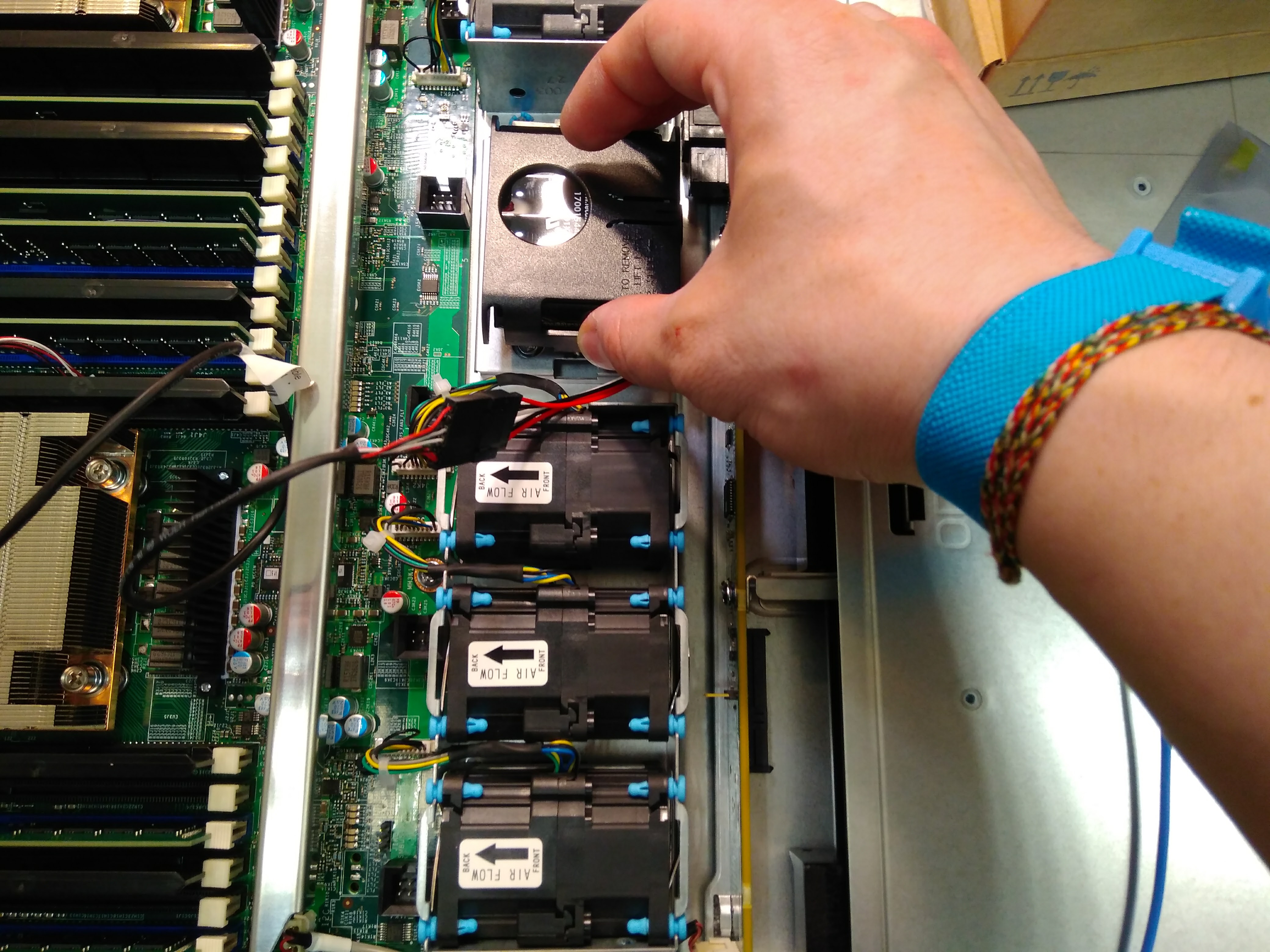

A hand appears in the photo above. On its wrist is a blue strap with a wire: not decoration but an antistatic grounding strap. A definite point in favor of the Vise staff's professionalism.

In my records I found correspondence with only 4 data centers, but I definitely remember there were many more. I remember various phone calls, and I remember reviewing their sites.

You quickly learn that data centers come in levels: tier1, tier2, tier3, tier4, or no certification at all. There is only one tier4 data center in Russia. For some reason I can't find its official site now, but I remember finding it and seeing that there was no way in with a single 1U server. And the price would probably have been too high for me anyway.

Work equipment at the tech.ru data center

There are many tier3 data centers in Russia. I took the list of them and started looking for a match. I'll go through that list now from top to bottom:

Rents only whole racks. They could at least list the intermediaries through whom you can place a 1U server with them.

Obviously just for the needs of the bank itself.

Not for resale.

Rents only whole racks; no intermediaries.

These already offer something for 1U equipment. I remember looking at this place earlier, but I didn't like the prices and so didn't even write to them. The site says nothing about the cost of network connectivity, and even without connectivity the price is unacceptable. I was also put off by the lack of photos. The lack of https still puzzles me. I understand that their site is structured in a way that now requires a wildcard SSL certificate, which is significantly more expensive than a regular one. But either there is no money for a certificate or not enough sense to organize the site properly, and neither is good for them.

This bank also built its own data center.

Somehow I missed this data center. If I were searching now, I would gladly contact them. Nothing is known about prices; everything goes through "send a request", which may be what put me off half a year ago. The lack of https is surprising: they spent several million dollars building a data center and couldn't find $10 for a certificate. So the question is: what else did they skimp on, or what else did they overlook?

This is some kind of state-owned facility.

This is the data center of a mobile operator.

Rents only whole racks; no intermediaries.

Akado, an Internet service provider, has built a data center.

On closer examination it becomes clear that their tier3 certificate will soon expire. That means they designed the data center to tier3 but for some reason still haven't built it to that standard. No https, no photos. The prices are very good; why I didn't write to them, I can't say.

In the end, none of the officially tier3-certified data centers was acceptable to me. What next? I tried various searches on the Internet for the sites of other data centers:

02/14/2017

I probably first came across them in an ad or while searching. I clearly remember what bothered me a lot: the address of each data center wasn't listed (now it is). I also remember the terrible site layout; even now it looks awful in Firefox on Debian. When discussing prices I had "stupid" questions, such as what exactly I would be paying 30,000 rubles a month for. They chose not to answer one of them. Well, what can you do: I'm really not their kind of client. They specialize in payment systems.

I surely found more data centers, but somehow didn't like them. I remember starting to study "what does a proper data center look like?" I watched all kinds of videos about data centers online and got an idea of what I should see in the photos.

I tried to get into a local Rostelecom data center, because my provider is Rostelecom and it's a decent organization. They ran fiber to me for $3000, and the connection quality is very good.

Off-topic: Rostelecom's own DNS is terrible, which is probably why most users have a terrible opinion of this provider. But back to the point: they do not offer colocation of customer equipment at all, anywhere.

Then I simply called their central support and asked, "What would you recommend?" The employee recommended "hc.ru" and "tech.ru".

12/16/2016

They patiently answered all my stupid questions (looking back now, I see how stupid they were). They offered a supplier-plus-data-center bundle; besides them, only a data center in the Baltic states offered something similar. They have a modern website, which, as you saw in the review above, not everyone has. Their equipment photos match my idea of a proper data center (in fact, everyone who posted photos or videos matched it, but not everyone posted them). They offer an unusual "burstable rate" channel. Their prices seemed to me slightly lower than everyone else's. And they made a special offer in the form of a discount on the power supply (PSU) charge.

I need to explain why all the data centers are so fixated on the PSU's maximum power rating. As a customer, I don't understand why billing looks at the label on the PSU. It would be logical to look at actual power consumption or, at most, the maximum possible consumption. In my case, if you add up all the components, the maximum possible consumption is 420W, and the real consumption right now is probably about 100W. But my PSU is rated at 750W, which means I have to pay 1900 rubles a month for exceeding 400W. And they are not the only ones; it turned out everyone does this. Why?

It turns out this is about the case when servers are switched off and then switched on simultaneously: at that moment they draw the maximum possible current.

So in a critical situation the operator can simply press "turn everything on". Otherwise, if there are many customers like me, each server has to be powered on in turn, which means longer downtime for some customers. I found no other reasons, but this one fully explains the oddity.
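The staggered power-on reasoning can be sketched as a simple greedy batching in Python. This is only an illustration under assumptions: each server is taken to draw its full PSU nameplate rating while starting, and the 1600 W feed budget and the PSU mix in the example are hypothetical numbers, not tech.ru's actual limits.

```python
from typing import List


def power_on_batches(psu_ratings_w: List[int], budget_w: int) -> List[List[int]]:
    """Group servers (by index, in rack order) into sequential power-on
    batches so that the summed PSU nameplate rating of each batch stays
    within `budget_w`. Worst case assumed: each server may draw its full
    nameplate rating while starting up."""
    batches: List[List[int]] = []
    current: List[int] = []
    load = 0
    for idx, rating in enumerate(psu_ratings_w):
        if rating > budget_w:
            raise ValueError(f"server {idx} alone exceeds the budget")
        if load + rating > budget_w:
            # This server would overload the feed: close the batch.
            batches.append(current)
            current, load = [], 0
        current.append(idx)
        load += rating
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    # Hypothetical: a 1600 W feed with a mix of 750 W and 400 W PSUs.
    print(power_on_batches([750, 400, 400, 750, 400], 1600))  # [[0, 1, 2], [3, 4]]
    print(power_on_batches([400] * 5, 1600))                  # [[0, 1, 2, 3], [4]]
```

Every extra batch is one more wait before the next group can be switched on, and a larger nameplate rating means fewer servers fit in each batch.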

The next oddity is the port speed: 100Mbit / 1Gbit / 10Gbit. Even if very little data goes through the port, a faster port is, sorry, very expensive. This is not at all obvious to someone unaware of these nuances.

Entrance to the tech.ru data center work area

12/16/2016

Their support wrote to me: "Please write to us when the server is ready for installation in the DC." How am I supposed to take that? As polite refusal to cooperate? Two months later I asked them whether they had a power supply restriction. It suddenly turned out they did. So why on earth did they tell me to buy a server first and only then find out what I should have bought? At that point I decided that dealing with such support was unacceptable.

01/18/2016

I asked whether I could order equipment through them. They only offered to build a dedicated server to order.

As you can guess, the choice fell on tech.ru. First, because they work closely with Tiscom. Second, because of their good support. Third, because of the discount on the power charge.

The fact that there is a burstable rate, a well-designed site, https, photos and other little things was also a plus.

During the first month of use, two network outages were recorded, lasting about 15 and 3 minutes respectively. During connection they mixed up the IP address routing (7 IP addresses on a 10Mbit channel <-> 1 IP address on a 100Mbit channel). Everything else is fine.

I could also nitpick that there is no API for things like finding out how much time the UPS has left before shutdown. Hetzner has something similar.

An example rack in tech.ru with working customer equipment

When they learned I was going to write an article about them, they asked me to leave this phone number here: +79055628383

I have written up separately the problems I ran into while setting up this server.

These are non-standard problems in my view, meaning the solution is not easy to google.

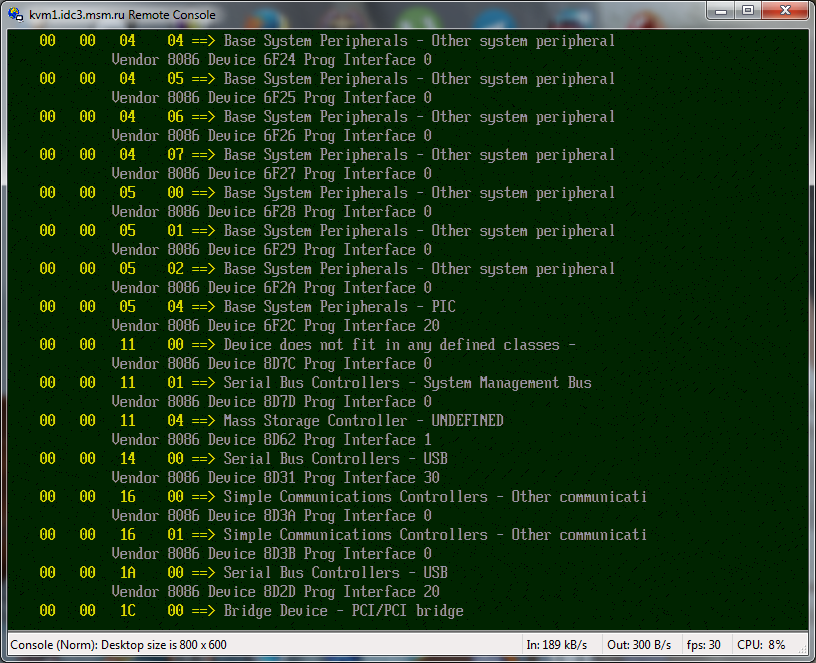

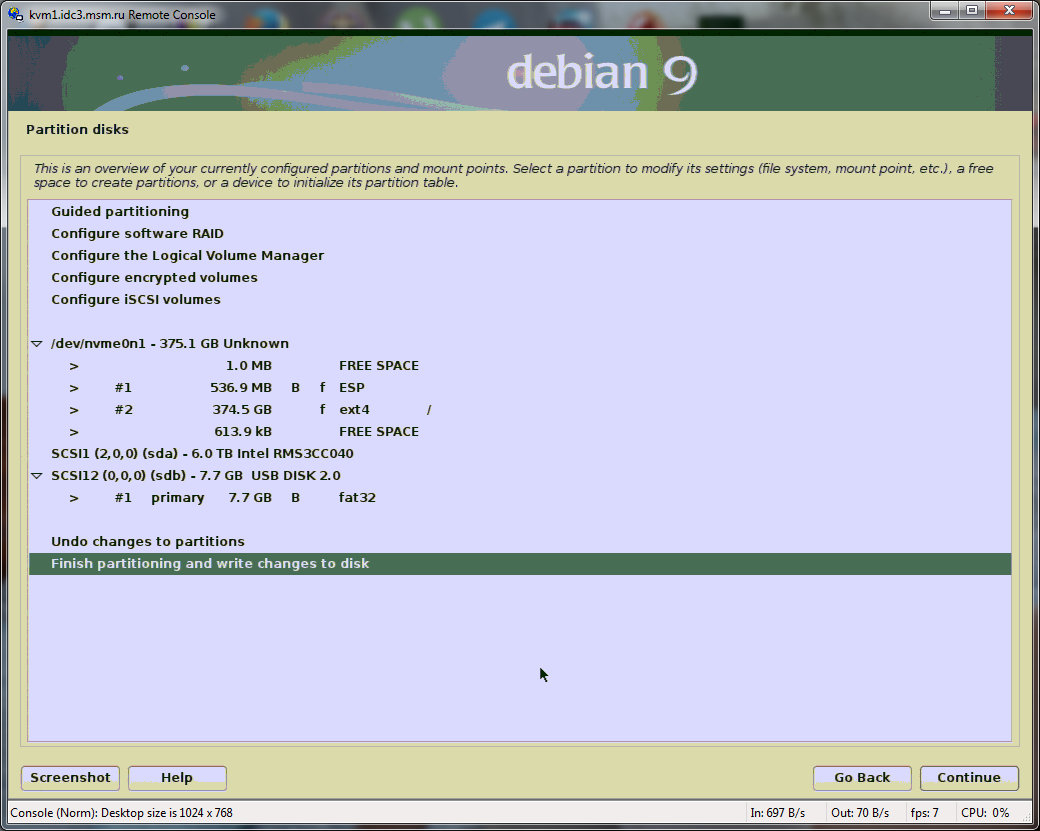

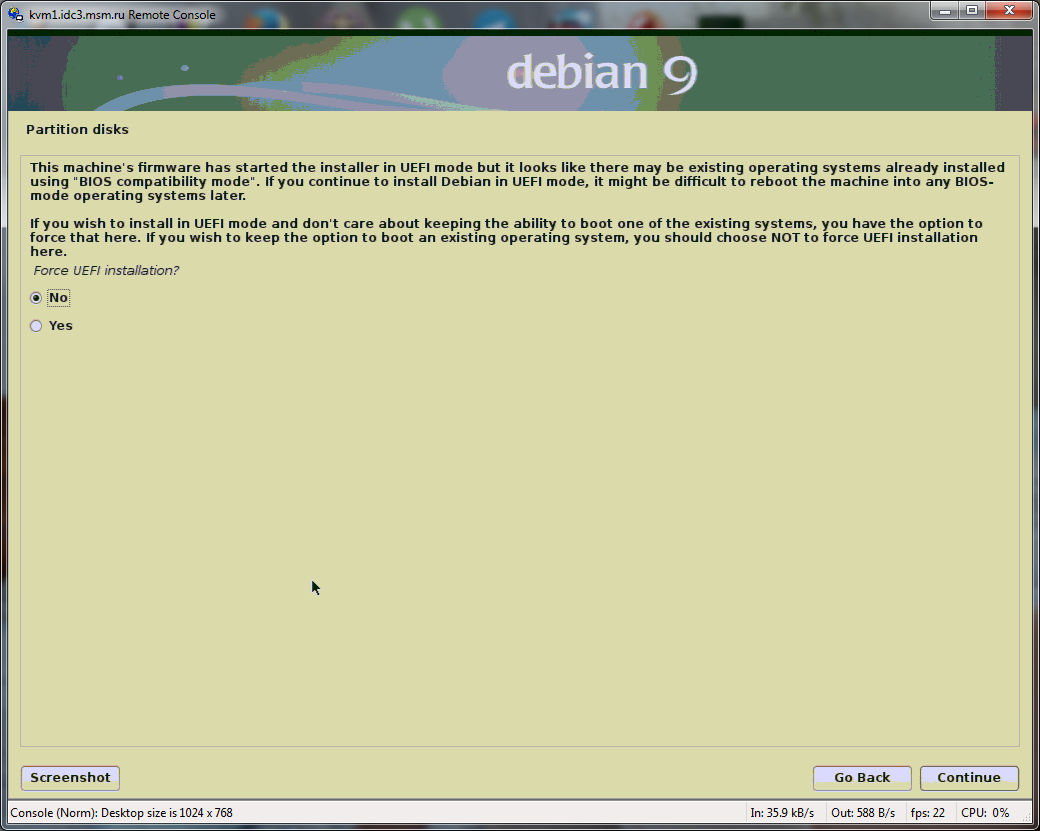

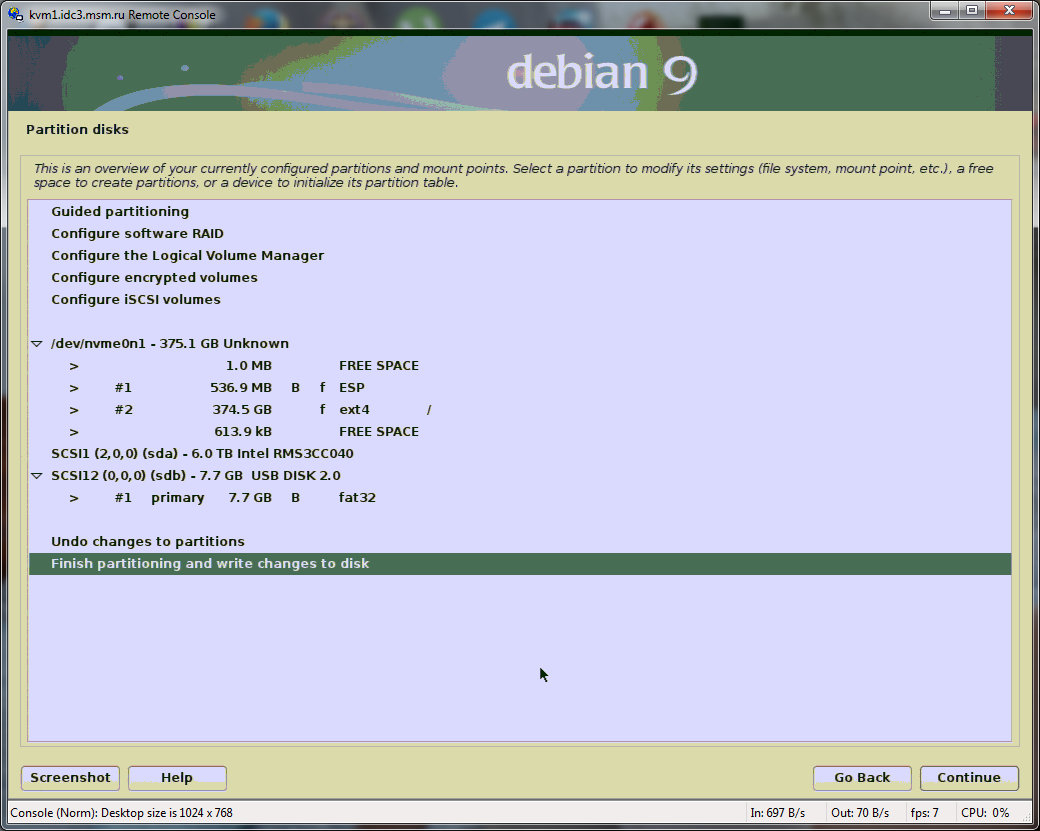

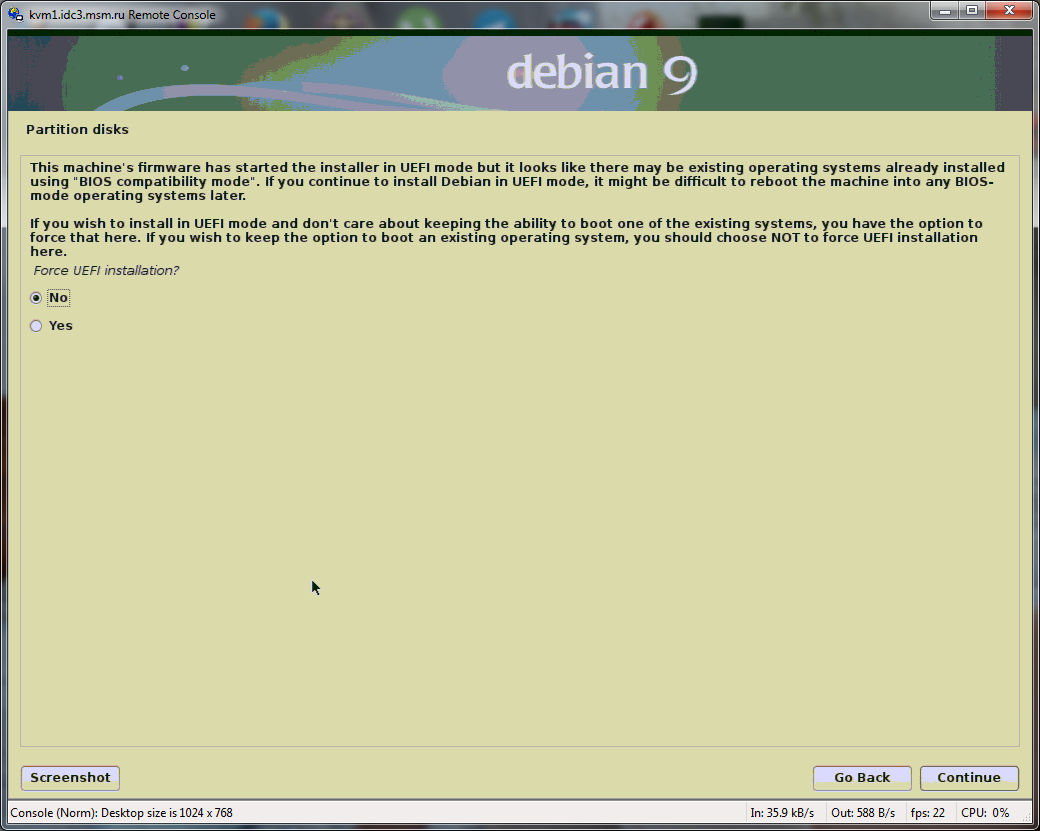

The first problem is how to make the NVMe disk bootable.

I started simple: I tried a head-on install as-is. It didn't work. That is, Debian installed and made the NVMe drive bootable, but the BIOS did not see this boot option at all. I didn't want to touch BIOS settings I didn't understand, but of those I did understand, I tried everything. Among the boot options I found a UEFI option, but it refused to boot from the NVMe drive. After digging around in the UEFI shell, I confirmed that information about the installed NVMe drive really can be retrieved from its command line, which means booting from it must somehow be possible.

It became obvious that something had to be written into the UEFI configuration.

Photo of the UEFI shell. The Mass Storage Controller is the device we're after.

I dug and dug in the UEFI console and realized this was an incredibly difficult route; it had to be easier.

I went to the Debian website, and the answer was right there on the surface. All I had to do was:

To be honest, IMHO this is a serious flaw on Debian's part: it is obvious that Debian is being installed on an NVMe device, so in that case the non-expert mode should offer the choice of boot method, with UEFI as the default.

The Debian installer partitioned the disk and installed the UEFI bootloader on a partition of the NVMe drive (it needed a whole 500 MB for this, fine). Via the same console, it configured UEFI itself and registered booting from that NVMe partition. All that was left for me was to set this new boot entry as the primary one in the BIOS.

Here is the partition layout you need for a bootable NVMe device.

This is the key point when installing Debian on NVMe: this is what the non-expert installation mode should offer when installing on NVMe, with "yes" as the default.
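After rebooting, it is easy to confirm that the system really came up through UEFI. The sketch below relies on a documented Linux behavior: the kernel exposes `/sys/firmware/efi` only when it was started by UEFI firmware. The fstab check is a rough heuristic that assumes the Debian convention of mounting the EFI system partition as vfat at `/boot/efi`; the function names are hypothetical. (The `efibootmgr` tool lists the registered UEFI boot entries, if you also want to see the entry the installer created.)

```python
import os


def booted_via_uefi(sysfs_root: str = "/sys") -> bool:
    """Linux creates /sys/firmware/efi only when the kernel was started by
    UEFI firmware; after a legacy BIOS boot the directory is absent."""
    return os.path.isdir(os.path.join(sysfs_root, "firmware", "efi"))


def has_efi_system_partition(fstab_text: str) -> bool:
    """Heuristic: look for a vfat filesystem mounted at /boot/efi, which is
    where Debian's installer mounts the EFI system partition."""
    for line in fstab_text.splitlines():
        fields = line.split()
        if len(fields) < 3 or fields[0].startswith("#"):
            continue
        if fields[1] == "/boot/efi" and fields[2] == "vfat":
            return True
    return False


if __name__ == "__main__":
    print("booted via UEFI:", booted_via_uefi())
    if os.path.exists("/etc/fstab"):
        with open("/etc/fstab") as f:
            print("EFI system partition in fstab:", has_efi_system_partition(f.read()))
```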

The second unexpected problem was counting IOPS. I naively believed that Zabbix could measure this and draw a graph of how many IOPS my NVMe drive delivers. It turned out it couldn't, which I find incredibly strange.

It turned out that there is a third-party add-on for zabbix that can collect such data.

But this add-on couldn't handle NVMe devices, so I had to modify it a little; fortunately it's all open source. Its data-collection method was also incompatible with the latest Zabbix version and in fact contained a bug in how data was collected. I fixed that too. Pull request here.
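The underlying idea is simple enough to sketch in Python. This is not the add-on mentioned above, just a minimal illustration of where such numbers come from: `/proc/diskstats` keeps cumulative counters per device, and IOPS is the difference between two snapshots divided by the interval. The device name `nvme0n1` and the function names are assumptions for illustration.

```python
import time
from typing import Dict, Tuple


def parse_diskstats(text: str) -> Dict[str, Tuple[int, int]]:
    """Map device name -> (reads completed, writes completed).

    Each /proc/diskstats line starts with major, minor and device name;
    the next fields are reads completed, reads merged, sectors read,
    ms spent reading, writes completed, and so on."""
    stats: Dict[str, Tuple[int, int]] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 14:
            continue  # not a full statistics line
        stats[parts[2]] = (int(parts[3]), int(parts[7]))
    return stats


def iops(before: str, after: str, device: str, interval_s: float) -> Tuple[float, float]:
    """Average (read IOPS, write IOPS) between two snapshots."""
    r0, w0 = parse_diskstats(before)[device]
    r1, w1 = parse_diskstats(after)[device]
    return (r1 - r0) / interval_s, (w1 - w0) / interval_s


def sample(device: str, interval_s: float = 1.0, path: str = "/proc/diskstats") -> Tuple[float, float]:
    """Take two snapshots `interval_s` seconds apart on a live Linux system."""
    with open(path) as f:
        before = f.read()
    time.sleep(interval_s)
    with open(path) as f:
        after = f.read()
    return iops(before, after, device, interval_s)
```

On the server itself, `sample("nvme0n1", 60)` would report the average read and write IOPS over one minute, which matches the one-minute granularity of a typical monitoring item.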

I had a task to unpack an archive with a huge number of small files. This NVMe drive is so fast that it handles a 100 GB archive of 10,000 files in a few seconds. Nevertheless, Zabbix managed to record that minute: the maximum value I saw was 5000 write IOPS at 5% utilization. So, very roughly, this device should deliver at least 100,000 write IOPS.
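The "5000 IOPS at 5% utilization, so roughly 100,000 IOPS" estimate is a linear extrapolation, sketched below. Utilization here is assumed to come from the "time spent doing I/Os" counter in `/proc/diskstats`. Treat the result as a very rough estimate: for devices that serve many requests in parallel, such as NVMe SSDs, this busy-time figure does not reflect how close the device is to its limit (the `iostat` documentation warns about the same for `%util`), and throughput rarely scales linearly.

```python
def utilization_pct(io_ms_before: int, io_ms_after: int, interval_s: float) -> float:
    """Share of the interval during which the device had I/O in flight,
    from the "time spent doing I/Os" counter in /proc/diskstats (ms)."""
    return (io_ms_after - io_ms_before) * 100 / (interval_s * 1000)


def extrapolate_iops(observed_iops: float, util_pct: float) -> float:
    """Naive linear extrapolation of observed IOPS to 100% utilization."""
    if not 0 < util_pct <= 100:
        raise ValueError("util_pct must be in (0, 100]")
    return observed_iops * 100 / util_pct


if __name__ == "__main__":
    print(extrapolate_iops(5000, 5))  # 100000.0
```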

Overall, this much power was bought for the database, and what this hardware is really capable of will only become known after the production launch of the online game I'm making. In the meantime, here is a simple synthetic test.

I also note that the HDDs can deliver 800 write IOPS at 100% utilization for 5 minutes, and then 500 write IOPS for 15 minutes (also at 100% utilization).

There was also a funny moment when I mistakenly downloaded a 90 GB archive onto the NVMe drive, when I should have downloaded it, unpacked it and put it on the HDD. Well, I thought, I'll unpack it onto the HDD now and measure read IOPS on the NVMe; clearly there won't be many, but still. And suddenly I see zero read IOPS. I figured there must be some error in the Zabbix scripts. I double-checked everything carefully and confirmed there was no mistake. So how was this possible? The trick is that this was the first heavy operation on this server, and Debian saw a 90 GB file and 120 GB of free RAM.

So it kept the entire file in RAM (in the page cache), and when the file was accessed, it was read from RAM rather than from the NVMe drive.
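A quick way to anticipate this effect is to compare the file size with `MemAvailable` from `/proc/meminfo`; the sketch below does that as a rough heuristic (the function names are hypothetical). To make reads actually hit the disk in a benchmark, the cached pages can be evicted first with `os.posix_fadvise(..., POSIX_FADV_DONTNEED)`, which is a hint to the kernel and does not evict dirty pages that have not yet been written back.

```python
import os
from typing import Dict


def meminfo_kib(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo content into {field: value in kB}."""
    values: Dict[str, int] = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            values[key.strip()] = int(parts[0])
    return values


def likely_fully_cached(file_bytes: int, meminfo_text: str) -> bool:
    """Rough heuristic: a freshly written or read file can stay entirely in
    the page cache when it is smaller than MemAvailable."""
    return file_bytes <= meminfo_kib(meminfo_text)["MemAvailable"] * 1024


def drop_from_page_cache(path: str) -> None:
    """Ask the kernel to evict a file's clean cached pages before a
    benchmark (Linux/Unix only; dirty pages must be flushed first)."""
    fd = os.open(path, os.O_RDONLY)
    try:
        os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_DONTNEED)
    finally:
        os.close(fd)
```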

There is a saying: "As you name the ship, so shall it sail." The server got the name "nova", short for "hypernova". A hypernova is a very bright flash lasting several hundred years, a blink by cosmic standards. So the server should handle the launch of an online game perfectly well for a "short" period of time.

It will take at least a year until everything is polished, the content is finished, everything is thoroughly tested, the publication materials are ready, the legal issues are resolved, along with many other questions; then the time will come for this hypernova to flare up. Publishing a finished game is such a rarity these days.

In the meantime, I'm ready to run synthetic tests of the NVMe P4800X: for example, a MySQL workload in 20 threads that really hammers this SSD, to find out the real limits of this device. I'll gladly run your load scripts on this server and publish the results.

I'm also open to investment in the game itself, from $100,000, properly formalized and carefully agreed in every detail.

A photo report is included. The article consists of 5 parts:

- Why do I need a server.

- The choice of configuration.

- The choice of who to buy.

- The choice of who to post.

- Problems setting up this server.

Why do I need a server

In addition, I need the server for several non-profit sites and a TeamSpeak server for a large guild in a well-known online game.

An overview of all the components of this server, still in their boxes; unpacking comes next

Configuration selection

I decided that the server should handle 50,000 players online and 1,000,000 registered players.

Once these figures are reached, the startup will have grown into a business and will be able to buy whatever new equipment it needs. As for why exactly 50,000 and not 60,000, we won't go into it; the article is not about that.

Choosing the configuration then means selecting hardware parameters that meet these targets.

At the start of this story, I knew rather little about server hardware and what I really needed.

I believe the train of thought and the decision-making logic will interest everyone involved in this topic, or anyone who makes such decisions.

All components in their boxes, unpacking and installation will now begin

General requirements

To reduce financial risk, monthly expenses must be minimal, so the server should be 1U in size.

The game's server side is designed so that almost everything can run multithreaded; the computation of the game world can even be split across several servers.

This means that when choosing between "a few powerful cores" and "many moderately powerful cores", the second option wins.

After some measurements, I decided that 50,000 people online need 50GB of RAM (again, the article is not about why so much).

Unexpectedly, it turned out that the game writes to disk a lot, so the storage should be an SSD, and a fairly powerful one.

There must also be an HDD for backups. I'm one of those who "already make backups".

CPU choice

Processor manufacturer: Intel, AMD, MCST.

AMD: I tried the official AMD website, but unfortunately it's impossible to figure out from it:

- What processors they actually offer. I only worked that out from unofficial sources (various other sites).

- Which processors are current. At the time, their latest server processors lacked DDR4 support, so I looked no further.

Reasons for rejecting AMD: a very inconvenient site and outdated processors.

MCST: certainly very interesting; the very existence of Russian-made processors that you can actually buy is remarkable.

The inability to buy as a private individual is a very annoying but solvable issue.

The full processor specifications aren't available, and there are no public benchmarks, not even synthetic ones. I also note the lack of DDR4 support, which IMHO is excusable here (for AMD it is not). I rejected MCST once I realized I wouldn't be able to use the "apt-get upgrade" command.

Intel: the only remaining option. Its latest processor line supports DDR4 memory, and there is a special section of the site that lays out processor specifications in a table, where I can find every characteristic that interests me.

Unscrewing the processor heatsinks

Installing an E5-2630 v4 processor

Close-up of an installed E5-2630 v4 processor

Number of processors

Commercially available servers come in 1-, 2-, 4- and 8-socket configurations.

I decided not to consider buying a multi-socket motherboard and populating it gradually. Why? Buying equipment costs a lot of time, nerves and money; this is not like a home computer.

The 4- and 8-socket options turned out to be incredibly expensive; even the most modest configuration was too pricey for me. Single- and dual-socket systems serve different market niches. Here is a list of processors to consider (those starting with "E5-4" are of no interest; they are for 4-socket systems).

Consider the "E5-1" options, processors for single-socket systems.

Their high base and turbo frequencies stand out immediately, but the core count is very low.

For example, the "E5-1660 v4" with 8 cores costs $1113, while the "E5-2620 v4", a processor for dual-socket systems with a lower frequency but also 8 cores, costs $422.

That is roughly 2.6 times cheaper in favor of the dual-socket system! Recall that for my workload many weaker cores are more useful than a few strong ones. So I decided to buy a dual-socket system.
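The comparison above is a price-per-core calculation; a small Python sketch using the two prices quoted in the text (both parts have 8 cores) makes the ratio explicit.

```python
from typing import Tuple

# Prices as quoted in the text (USD); both models have 8 cores.
E5_1660_V4: Tuple[float, int] = (1113, 8)  # single-socket part
E5_2620_V4: Tuple[float, int] = (422, 8)   # dual-socket part


def price_per_core(price_usd: float, cores: int) -> float:
    return price_usd / cores


def per_core_ratio(a: Tuple[float, int], b: Tuple[float, int]) -> float:
    """How many times more expensive per core `a` is than `b`."""
    return price_per_core(*a) / price_per_core(*b)


if __name__ == "__main__":
    print(f"E5-1660 v4: ${price_per_core(*E5_1660_V4):.2f} per core")
    print(f"E5-2620 v4: ${price_per_core(*E5_2620_V4):.2f} per core")
    print(f"ratio: {per_core_ratio(E5_1660_V4, E5_2620_V4):.2f}x")
    # Two E5-2620 v4 give 16 cores for less than one 8-core E5-1660 v4.
    print(f"2 x E5-2620 v4: ${2 * E5_2620_V4[0]} for {2 * E5_2620_V4[1]} cores")
```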

Both E5-2630 v4 processors installed in their sockets.

Applying thermal paste to the processor.

Processor model

Even so, a fairly large selection of processors remains. I pay attention to suffixes like "L", "W" or "A".

"L" stands for low power consumption, which is of no interest to me. If I had my own rack in the data center, it would matter; but since I have a single 1U and no data center is willing to meter my server's consumption, it doesn't.

"W" means higher power consumption, and "A" marks a particularly successful model in the line. You quickly notice that the remaining processors fall into 2 groups: few cores with a high frequency, and many cores with a low frequency.

I drop the first group. What's left (I decided not to consider anything above $1,500 apiece):

E5-2603, E5-2609, E5-2620, E5-2630, E5-2640, E5-2650, E5-2660.

I immediately discarded the processors that were too weak, the E5-2603 and E5-2609, because there was definitely enough money for two E5-2620s.

There are 5 options left: E5-2620, E5-2630, E5-2640, E5-2650, E5-2660.

On closer examination, they split into 2 groups by maximum supported memory frequency:

E5-2620, E5-2630, E5-2640: 2133MHz

E5-2650, E5-2660: 2400MHz

Let's compare processors across these groups:

In terms of clock frequency, the E5-2630 and E5-2650 are no different. The only difference is +2 cores (and a slightly higher memory frequency), while the price is 2 times higher (+$600)! So the second group is out because of price.

Now consider the E5-2630 vs E5-2640:

Only the base frequency differs, by +10%, while the price is one and a half times higher. I drop the E5-2640: an overpayment that makes no sense to me.

That leaves only the E5-2620 vs the E5-2630: the price difference is $250, and for that we get +10% frequency and +2 cores.

I'm willing to pay that price for that gain.

Final choice: Intel Xeon Processor E5-2630 v4

Installing a heatsink on a processor

Screwing the heatsink onto the processor

How the processors appear in the BIOS

Memory selection

As a reminder: in principle, I decided I needed ~50GB of memory. Online I found that building a server with a memory size that is not a power of two is quite acceptable.

But still: so that all memory works the same way, and I don't have to work out which core gets how heavy a task, I decided the amount of memory should be a power of two.

So that means 64GB of memory. The game's server side is written so that the entire game process is computed on the server.
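Rounding the ~50GB estimate up to a power of two is a one-liner; this small Python sketch (the function name is illustrative) shows how 50 becomes 64.

```python
def next_power_of_two(n: int) -> int:
    """Smallest power of two that is >= n (n >= 1)."""
    if n < 1:
        raise ValueError("n must be >= 1")
    return 1 << (n - 1).bit_length()


if __name__ == "__main__":
    print(next_power_of_two(50))  # 64
```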

This makes cheating impossible. However, if there suddenly isn't enough memory to hold all online players, it will be a minor disaster: the game simply won't start, and I'll urgently need a hotfix that can flush intermediate calculations to disk.

It also turned out that installing many memory modules lowers the speed at which the processor communicates with memory.

So buying 64GB now and the same amount again later would be much worse than buying 128GB right away.

The price difference turned out to be affordable. Perhaps I could have stretched to 256GB, but I didn't have a spare $1000; the whole reserve eventually went to the SSD.

Installing the memory modules. A sharp-eyed reader may notice 2400 memory here; in fact, this memory is just for the photos, and the right memory arrived at the very last moment.

Choosing the number of memory modules

I already knew that with too many memory modules, memory works much slower than with fewer. So is the best option a single 128GB module?

It turns out processors have "memory channels", and the best option is one memory module per channel. This rule apparently also applies to gaming and work computers. It's amazing that bloggers of all kinds don't get this simple idea across to the public.

In my case there are 2 processors, each with 4 memory channels, so the best option is 8 memory modules.

Final choice: 8 x 16GB
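The one-module-per-channel rule turns into simple arithmetic. This Python sketch (the function name is illustrative) reproduces the 8 x 16GB result from 2 sockets with 4 channels each.

```python
from typing import Tuple


def dimm_layout(total_gb: int, sockets: int, channels_per_socket: int,
                dimms_per_channel: int = 1) -> Tuple[int, int]:
    """Return (number of DIMMs, size of each DIMM in GB) for a layout that
    fills every memory channel evenly."""
    slots = sockets * channels_per_socket * dimms_per_channel
    if total_gb % slots:
        raise ValueError(f"{total_gb} GB cannot be split evenly over {slots} DIMMs")
    return slots, total_gb // slots


if __name__ == "__main__":
    # 2 x E5-2630 v4, 4 memory channels each, one DIMM per channel.
    print(dimm_layout(128, sockets=2, channels_per_socket=4))  # (8, 16)
```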

Installing the memory modules

Memory model selection

The choice was small. At the moment the options are 2133 and 2400, and the processor supports 2133. Does it make any sense to take 2400?

It turns out it not only makes no sense but is even harmful in my case: a memory access takes 17 clock cycles with 2400 memory and 15 clock cycles with 2133 memory.

And my processor can't run memory at 2400; it would run it at 2133 anyway. However, with an E5-2650 v4 or higher, 2400 memory would, on the contrary, be the better choice.
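Converting cycles to nanoseconds shows what the 15-versus-17-cycle argument amounts to. DDR memory transfers data twice per clock, so one cycle at a data rate of N MT/s lasts 2000 / N ns. The third line is a hypothetical worst case that assumes a 2400 module keeps CL17 when clocked down to 2133; real modules usually carry timing profiles for lower speeds, so the actual penalty may be smaller or absent.

```python
def cas_latency_ns(cl_cycles: int, data_rate_mts: int) -> float:
    """Absolute CAS latency in nanoseconds.

    DDR memory transfers data twice per clock, so the clock runs at
    data_rate / 2 MHz and one cycle lasts 2000 / data_rate ns."""
    return cl_cycles * 2000 / data_rate_mts


if __name__ == "__main__":
    print(f"DDR4-2133 CL15: {cas_latency_ns(15, 2133):.2f} ns")
    print(f"DDR4-2400 CL17: {cas_latency_ns(17, 2400):.2f} ns")
    # Hypothetical: a 2400 module kept at CL17 but clocked at 2133.
    print(f"DDR4-2400 CL17 run at 2133: {cas_latency_ns(17, 2133):.2f} ns")
```

At their rated speeds the two are within about 0.1 ns of each other; the extra latency only appears in the downclocked case.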

The next factor is LRDIMM, a technology that allows more memory to be installed in a server; with all slots populated, such memory works faster than non-LRDIMM memory.

LRDIMM is ordinary server memory plus some extra chips, so the price is roughly 20% higher.

So I don't need LRDIMM, because I'm not populating every slot.

Now the manufacturer. For server memory, it turns out the manufacturer doesn't matter. I don't understand how that's possible, but it's a fact: synthetic tests are the same, and memtest results are about the same. For Kingston memory I found 2 variants with different chip layouts, but even then there is no functional difference.

General view of installed processors and memory

How the memory appears in the BIOS

Disk subsystem selection

When I first drew up my desired server configuration, I wanted one 240GB SSD and one 2TB HDD.

As I clarified what I needed, my understanding of what I really needed changed too.

I learned that SSDs differ very, very much, and that on servers it's better to use RAID1.

That there is a new technology, NVMe, with a bunch of options. And that RAID controllers vary greatly depending on the task.

Let's go in order, starting with "what does the task need?": a 200GB SSD able to withstand a lot of rewriting, and a 2TB HDD for reliable backups. "A lot of rewriting" means, by a very rough estimate, 1-2TB per day. Reliable backups means a RAID1 configuration. Hardware or software RAID is a question of effort, skill and responsibility when repairing the array.
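The 1-2TB-per-day estimate translates into an endurance requirement. SSD endurance is usually rated in drive writes per day (DWPD) or total terabytes written (TBW), so a short Python sketch can compare the workload with a candidate drive's spec sheet. It uses decimal units (1 TB = 1000 GB), as drive vendors do; the 5-year service life is an assumption chosen for illustration.

```python
def drive_writes_per_day(daily_writes_tb: float, capacity_gb: float) -> float:
    """DWPD needed: how many times the full capacity is rewritten per day.
    Uses decimal units (1 TB = 1000 GB), as drive vendors do."""
    return daily_writes_tb * 1000 / capacity_gb


def total_writes_tb(daily_writes_tb: float, years: float) -> float:
    """Total data written over the given service life (TBW)."""
    return daily_writes_tb * 365 * years


if __name__ == "__main__":
    for daily in (1, 2):
        for capacity in (200, 400):
            print(f"{daily} TB/day on {capacity} GB: "
                  f"{drive_writes_per_day(daily, capacity):.1f} DWPD")
    # Assumed 5-year service life at the upper estimate of 2 TB/day.
    print(f"TBW over 5 years: {total_writes_tb(2, 5)} TB")
```

Doubling the capacity halves the required DWPD for the same workload, which is why larger drives tolerate heavy rewriting better.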

From a maintenance point of view, the choice is:

- Software RAID: you need the skills to repair the array, and the slightest mistake means lost data. But it is cheaper.

- Hardware RAID: just replace the failed disk and you're done. But it is expensive.
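To make "just replace the failed disk" concrete, here is a toy in-memory model of RAID 1 mirroring. It is a simplified sketch, not how the RMS3CC040 or any real RAID implementation works internally: every write goes to both disks, reads are served by any healthy disk, and a replacement disk is rebuilt by copying the surviving mirror. The class and method names are hypothetical.

```python
from typing import Dict, List, Optional


class Raid1:
    """Toy RAID 1: two disks, each modeled as a dict of block number -> bytes."""

    def __init__(self) -> None:
        self.disks: List[Optional[Dict[int, bytes]]] = [{}, {}]

    def write(self, block: int, data: bytes) -> None:
        # Mirroring: the same block is written to every healthy disk.
        for disk in self.disks:
            if disk is not None:
                disk[block] = data

    def read(self, block: int) -> bytes:
        # Any healthy disk can serve the read.
        for disk in self.disks:
            if disk is not None:
                return disk[block]
        raise OSError("both disks have failed: data lost")

    def fail(self, index: int) -> None:
        self.disks[index] = None

    def replace_and_rebuild(self, index: int) -> None:
        # The "swap the disk" step: copy everything from the surviving mirror.
        survivor = next(d for d in self.disks if d is not None)
        self.disks[index] = dict(survivor)


array = Raid1()
array.write(0, b"player ratings")
array.fail(1)                    # one disk dies
print(array.read(0))             # data still readable from the other disk
array.replace_and_rebuild(1)     # new disk is synchronized from the survivor
array.fail(0)                    # now the other original disk dies
print(array.read(0))             # served by the rebuilt disk
```

The difference between hardware and software RAID lies in who performs the rebuild step and how much the operator has to do by hand; the mirroring logic itself is the same idea.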

So Vise recommended the cheapest modern hardware RAID controller, one that makes recovering from a possible failure easy.

It is the Intel Integrated RAID Module RMS3CC040: ark.intel.com/en/products/82030/Intel-Integrated-RAID-Module-RMS3CC040

Note that this RAID controller is made for Intel motherboards. If I chose a non-Intel platform, I would have to take a different controller, and it would occupy an entire PCI slot.

I have no idea what this is: either something related to encryption or something related to the RAID card.

Preparing the RAID card for installation

Installing the RAID card

How the RAID controller appears in the BIOS

HDD Model

I was going to buy Western Digital, because I have had very good experience with their HDDs in home computers. But Vise said they could only replace such a drive within a week (under warranty or not). Instead they offered HGST, which they said they could replace within 24 hours.

I searched online for what people write about HGST reliability. The reviews looked decent, so I agreed to this manufacturer.

Next, capacity. I need 2 TB, fine. What does more cost? An extra 1 TB is $70 more, and I would never forgive myself for skimping on capacity if I suddenly needed more space later. Further up the line it is the same story: the capacity grows a little, and the price grows a little. My budget limit fell between 6 TB and 8 TB.

Final choice: two 6 TB HGST HDDs.

Two 6 TB HGST HDDs

Installing part of the RAID controller for the HDD cage

Connecting the HDD cage to the RMS3CC040 RAID card

SSD type selection

The first option: just install one 200 GB SSD. Then I learned that the larger the SSD, the greater its rewrite endurance (all else being equal), and the faster that rewriting can be performed.

For example, say I have 200 GB of data and compare a 240 GB drive with a 1000 GB drive. All else being equal, the larger drive will have about 4 times the rewrite endurance.
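Endurance is usually rated in drive writes per day (DWPD) over the rating period, so at the same DWPD the total data you can write (TBW) grows linearly with capacity. A sketch of that arithmetic, assuming a 5-year rating period and the same DWPD for both drives (the value 1.0 is an arbitrary placeholder, not a spec of any real drive):

```python
def total_tb_written(capacity_gb: float, dwpd: float, years: int = 5) -> float:
    """Rated endurance in TB: full-drive writes per day x capacity x days."""
    return capacity_gb / 1000 * dwpd * 365 * years


small = total_tb_written(240, dwpd=1.0)
large = total_tb_written(1000, dwpd=1.0)
print(f"240 GB:  {small:.0f} TB written over 5 years")
print(f"1000 GB: {large:.0f} TB written over 5 years")
print(f"ratio: {large / small:.2f}x")
```

The ratio is just 1000 / 240, about 4.17, which is where "about 4 times" comes from.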

Well, let the experts forgive me, but for a decision - this is not at all obvious.

Then I realized that I should use RAID 1, which means two of these SSDs. True, if backups are made at least every hour, losing a single SSD is not a disaster. But the SSD holds the OS, all the settings, and the live database, and restoring them would urgently take a lot of time and effort.

An SSD that can withstand 2 TB of rewrites per day is very expensive, and you also want some margin on that figure.

Next, I learned about NVMe. Put simply, it is an SSD connected not in the usual way but via a PCIe slot.

The data path is wider and faster, which matters for my task. The question immediately arose: can such drives be combined into a RAID?

It turns out they can, but no such RAID controllers were on sale, only prototypes at an unknown price.

So at the time of purchase, this option was ruled out.
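To put rough numbers on "wider and faster": a SATA III link runs at 6 Gbit/s with 8b/10b encoding, while a PCIe 3.0 x4 link (the interface of the P4800X add-in card) runs at 8 GT/s per lane with 128b/130b encoding. This back-of-the-envelope sketch computes theoretical one-direction link bandwidth only; real drive throughput is lower and depends on the device.

```python
def link_bandwidth_mb_s(gt_per_s: float, lanes: int,
                        payload_bits: int, encoded_bits: int) -> float:
    """Theoretical one-direction bandwidth in MB/s after line encoding."""
    usable_gbit_s = gt_per_s * lanes * payload_bits / encoded_bits
    return usable_gbit_s * 1000 / 8  # Gbit/s -> Mbit/s -> MB/s


sata3 = link_bandwidth_mb_s(6.0, lanes=1, payload_bits=8, encoded_bits=10)
pcie3_x4 = link_bandwidth_mb_s(8.0, lanes=4, payload_bits=128, encoded_bits=130)
print(f"SATA III:    {sata3:.0f} MB/s")
print(f"PCIe 3.0 x4: {pcie3_x4:.0f} MB/s ({pcie3_x4 / sata3:.1f}x)")
```

That is about 600 MB/s versus about 3940 MB/s, so the PCIe link has roughly 6.5 times the raw headroom.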

Another factor: say I bought two expensive SSDs and combined them into a RAID. Both drives use up their rewrite endurance equally fast. So what do I get? Two drives that fail at the same time?

Later I found out that SSDs most often fail not because their rewrite endurance runs out, but because the SSD's controller fails. Against that, RAID does help.

So the choice came down to a PCIe SSD (NVMe) versus a RAID 1 of ordinary SSDs. Given the considerations above, the PCIe SSD is clearly better for me. Frankly, I simply don't have another $2000 for a backup SSD, and even if I did, I would need a 2U system plus a drive cage for another $400. Or else buy SSDs ten times worse.

Yes, I am prepared to trust a well-known brand (Intel, in this case), in the hope that the device is reliable enough to deliver its stated lifetime and endurance without failure.

NVMe P4800X installed in a riser

Choosing an NVMe Model

In an SSD, I care about write IOPS, the latency of a single operation, capacity, and rewrite endurance.

After reviewing the specifications of thousands of SSDs, I believe that in my case the first thing to look at is the rated number of overwrites.

Almost all SSDs fall into groups by how many times their full capacity can be rewritten per day over 5 years (drive writes per day, DWPD). The groups are: 0.3, 1, 3, 10, 30, 100.
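Given the rough write estimate from the task description (1-2 TB per day), one can compute the DWPD a drive of a given capacity needs and pick the lowest group from the list above that covers it. A minimal sketch; the function names and the optional safety `margin` parameter are illustrative assumptions, not a vendor sizing method:

```python
from typing import Optional

# DWPD groups listed above (full-drive rewrites per day over 5 years)
DWPD_CLASSES = [0.3, 1, 3, 10, 30, 100]


def required_dwpd(daily_writes_tb: float, capacity_gb: float) -> float:
    """How many full-drive writes per day a given daily write volume means."""
    return daily_writes_tb * 1000 / capacity_gb


def pick_class(daily_writes_tb: float, capacity_gb: float,
               margin: float = 1.0) -> Optional[float]:
    """Smallest DWPD group >= required DWPD x margin, or None if none fits."""
    need = required_dwpd(daily_writes_tb, capacity_gb) * margin
    return next((c for c in DWPD_CLASSES if c >= need), None)


for tb_per_day in (1, 2):
    for capacity in (375, 400):
        need = required_dwpd(tb_per_day, capacity)
        print(f"{tb_per_day} TB/day on {capacity} GB: "
              f"{need:.1f} DWPD -> group {pick_class(tb_per_day, capacity)}")
```

With the upper estimate of 2 TB/day, a 400 GB drive needs 5 DWPD, which lands in the 10 group; asking for a 2x margin on a 375 GB drive pushes it into the 30 group.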

Rewrite endurance and write IOPS almost always go hand in hand. A separate class of SSDs reads at an incredible number of IOPS but is relatively weak at writing; for this server, those are of no interest to me.

Manufacturers include Intel, HGST, Huawei, HP, and OCZ, and the variety of SSDs is staggering. Honestly, I have little idea how people objectively choose the SSD they need: there are so many, they differ so much, and the price range is enormous.

In my view, Intel organized its products best. They split their SSDs into groups clearly enough that I could work out which device I need and which I don't.

Intel has three lines of NVMe PCIe SSDs: P3500, P3600, P3700. For the 400 GB models:

- P3700: 75000 write IOPS, $1000, 10 drive writes per day

- P3600: 30000 write IOPS, $650, 3 drive writes per day

- P3500: 23000 write IOPS, $600, 0.3 drive writes per day

Obviously the P3500 drops out immediately: for just $50 more, the P3600 gives a tenfold increase in the parameter that matters to me.

Am I willing to pay $350 more for about 2.5 times the IOPS and over 3 times the rewrite endurance? Of course, yes.

So I was going to buy the 400 GB P3700, but a couple of months before the purchase the P4800X went on sale.

It costs almost twice as much as the P3700 and has slightly less capacity, but its write IOPS is 500,000 and its endurance is 30 drive writes per day.

That is, for an extra $800 I get almost 7 times the write IOPS (500,000 vs 75,000) and 3 times the rewrite endurance.
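The step-by-step comparison above can be reproduced from the 400 GB figures quoted in the text. The P4800X price used here is an assumption derived from the text (the P3700 price plus the extra $800), and the sketch ignores the P4800X's slightly smaller capacity.

```python
# Figures quoted in the text; the P4800X price is assumed to be P3700 + $800.
SSDS = {
    "P3500": {"price_usd": 600, "write_iops": 23_000, "dwpd": 0.3},
    "P3600": {"price_usd": 650, "write_iops": 30_000, "dwpd": 3},
    "P3700": {"price_usd": 1000, "write_iops": 75_000, "dwpd": 10},
    "P4800X": {"price_usd": 1800, "write_iops": 500_000, "dwpd": 30},
}


def upgrade(old: str, new: str) -> tuple:
    """Extra cost, write-IOPS multiple and endurance multiple of old -> new."""
    a, b = SSDS[old], SSDS[new]
    return (b["price_usd"] - a["price_usd"],
            b["write_iops"] / a["write_iops"],
            b["dwpd"] / a["dwpd"])


for old, new in [("P3500", "P3600"), ("P3600", "P3700"), ("P3700", "P4800X")]:
    extra, iops_x, dwpd_x = upgrade(old, new)
    print(f"{old} -> {new}: +${extra}, {iops_x:.1f}x IOPS, {dwpd_x:.1f}x endurance")
```

Each step costs more in absolute terms, but each also multiplies the endurance figure at least threefold, which is the parameter this build prioritizes.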

Frankly, in terms of IOPS it is simply perfect, and although finding an extra $800 was not easy, I decided to buy this SSD.

A bonus is that each individual write command completes faster.

My choice: www.intel.com/content/www/us/en/products/memory-storage/solid-state-drives/data-center-ssds/optane-dc-p4800x-series/p4800x-375gb-aic-20nm.html

Installing the riser with the NVMe P4800X

NVMe P4800X installed

How the NVMe drive appears in the BIOS

IMHO on SSDs

Separately, I want to note that SSDs come in SLC, MLC, TLC, and QLC types. The difference is simple: it is how many bits of data each physical cell stores. SLC stores only one, MLC two, TLC three, QLC four. Going from SLC to QLC, drives get cheaper, at the expense of write speed, read speed, and rewrite endurance.
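One way to see why more bits per cell costs speed and endurance: a cell storing n bits must hold and distinguish 2^n charge levels. The sketch below assumes, as a simplification, the same total voltage window for every cell type; it illustrates the relationship only and says nothing about any specific product.

```python
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def levels(bits_per_cell: int) -> int:
    """Number of distinct charge levels a cell must hold and tell apart."""
    return 2 ** bits_per_cell


def relative_gap(bits_per_cell: int) -> float:
    """Spacing between adjacent levels, assuming the same total voltage window."""
    return 1 / (levels(bits_per_cell) - 1)


for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s)/cell, {levels(bits)} levels, "
          f"relative gap {relative_gap(bits):.3f}")
```

Going from SLC to QLC, the number of levels grows from 2 to 16 while the gap between neighboring levels shrinks to about a fifteenth, so small amounts of charge drift or wear are enough to cause read errors.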

SLC is the most reliable, the fastest, and... monstrously expensive. For example, the Micron RealSSD P320h is a 350 GB beast for a mere $5000. Claimed write IOPS: 350,000. Unlimited rewrite endurance.

MLC drives vary a lot. The factory makes a batch of memory chips; the ones that turn out well go into server SSDs, and the less successful ones go into consumer SSDs.

TLC drives used to fail frankly quickly; now they seem to be making good ones. And manufacturers are trying to create the impression that TLC is good (so that gullible customers buy up the old stock).

QLC: manufacturers have tried to make it, but apparently nobody has managed a commercial product yet.

Intel recently released 3D XPoint, a new type of memory. To me it looks like a layer cake of SSD chips.

Motherboard selection

Choosing a motherboard for a server is completely different from choosing one for a home computer. For a home PC, the size of the case hardly matters, but in a server you need to cram everything into 1U (that's 1.75 inches, about 4.4 centimeters!).

This leads to a different approach to choosing a motherboard: what is sold is not motherboards but platforms, that is, a motherboard plus a chassis. Anyone who sells bare motherboards immediately loses out to the competition.

Platforms for this processor are made by several manufacturers. Each comes in minor variants, and the sites only let you link to a specific variant (I'll explain the variant I chose a bit later):

I won't consider HP and Dell because of their monstrous, unjustified markup.

- Supermicro

- Asus

- Intel

- Intel (a second, separate option)

Each of these four is the cheapest option from its manufacturer that meets all my requirements, and their prices are about the same. The R1304WFTYS drops out as soon as I see its 1100 W power supply (why that matters, more on that later).

As for ASUS, my thinking was that they entered the server motherboard market only recently, so it is unclear what I would be risking.

As for Supermicro, server platforms are essentially all they make, and they have been doing it for a very long time. But... the RAID controller. Either I take the Intel platform, or I need a different controller that occupies a PCI slot.

So I chose the Intel R1304WTTGSR platform. Note, though, that it is 10% more expensive than a comparable Supermicro platform.

Variant: with 10 Gbit Ethernet. The price difference is $100, and if I suddenly need a high-bandwidth link, I would rather not have to open the server and install something. And I will most likely need a high-bandwidth link when the game launches.

The R1304WTTGSR platform in its box.

This is what we see when we first open the new R1304WTTGSR platform.

All components are installed, it remains to close the lid.

Platform information as shown in the BIOS

Who to buy from

Of course, I first turned to hvosting.ua, since I have been working with them for more than 15 years, and my total business with them exceeds the cost of this server. But after the well-known events of 2014, hosting a server in Kiev is unacceptable for reasons of equipment safety.

hvosting.ua offered to host it in the TTC Teleport data center in Prague. But the discussion stalled on my request: "I want a legally binding document stating that I own the server." Sorry, but I am no longer a student who trusts everyone completely.

However, if you really need to host something in Ukraine, I recommend hvosting.ua. I had an uptime of ~1200 days, then a planned move of the server to a neighboring data center, and then another 700 days of uptime. After that I tried running "apt-get upgrade" without rebooting the server :) It didn't work: Debian couldn't upgrade after 2000 days, and I couldn't resolve some of the dependencies myself. Hvosting helped sort it out.

Overall, in 15 years there was not a single power failure; the network link dropped for about 15 minutes every 4 months, and twice it was down for several hours.

A special "treat" was that VK login via OAuth broke, since VK is blocked there.

Market Review

Then came the question of buying in Russia and hosting in Russia as well. Why buy and host in Russia? So that I would be legally protected. One pleasant thing at the very start of the search was choosing the best supplier with no recommendations and no biases: everyone was on an equal footing. I remember starting with the banal searches "datacenter in Russia" (later I found out which term is actually used for this in Russia) and "buy a server in Russia."

I think it is best to tell the story in chronological order.

12/05/2016

Brigo

At first there was a very constructive conversation with an interesting offer. I was exploring the RAID 1 NVMe option and asked whether they could set up RAID 1 NVMe on the Intel platform.

To my surprise, I got a rude refusal to answer (I would have been perfectly satisfied with a simple "no"; I genuinely didn't know much about the subject). After that I didn't want to deal with them.

They were the first place I saw the P4800X on sale, so I decided to ask them about the details of buying it.

The conversation ended with them saying the deal was impossible without my being present in person in Moscow.

12/05/2016:

Unit solution

Their website claims 12 years of experience, along with other impressive figures. But DNS whois shows that the domain was registered recently (3 months before my review). I immediately asked them: "Why the discrepancy?" It turned out there had been another company, with online reviews along the lines of "paid long ago, no server and no money." One could forgive specialists who started a new company after working under a bad director, but unfortunately their account did not fit the DNS history. I decided the risks were unacceptable, even though they offered an attractive price.

12/08/2016:

ItTelo

These folks sell used equipment. At the time I was still considering that option, but eventually I dropped it: there is no used DDR4, and used SSDs and HDDs are better avoided anyway. So it turned out they had nothing to offer me.

12/08/2016

It paradigma

A regional equipment supplier. In principle a good option, since they are nearby. At the time I still thought there might be a decent data center in Krasnodar, but no: the decent ones, if there are any, are packed to capacity. Paradigma inflated its prices and didn't answer my naive questions.

12/12/2016:

Trinity

Here is a link that still works for now: server.trinitygroup.ru/expert/25736

As far as I remember, the price put me off. Honestly, even now I look at it and don't understand where such a high price comes from.

12/12/2016

Grip

They were recommended by the tech.ru data center, which apparently works with them itself. Their representative listened to my whole wish list and picked exactly what matched it at the time, at a great price. Then I started digging into DNS whois records and online reviews.

The DNS whois data matched what they had said in our correspondence. But the investigation turned up many interesting details.

I found out how the company was founded, by whom, and how it changed over time. I also found how they had changed their website and asked people to leave comments about it somewhere.