A makeshift recommender system as a remedy for existential crisis

Invoking an existential crisis may sound too dramatic, but for me personally the problem of searching and choosing (or choosing and searching, the order matters), both on the Internet and in the world of everyday things, sometimes borders on torment. Choosing a movie for the evening, a book by an unfamiliar author, sausage in a store, a new iron: the number of options is overwhelming. It is especially hard when you don’t really know what you want. And knowing what you want but being unable to try it is no picnic either: the world is diverse, and you can’t try everything at once.

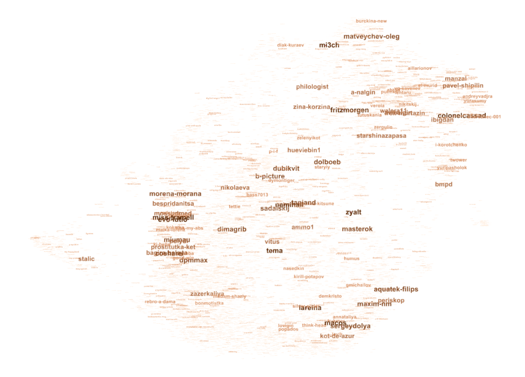

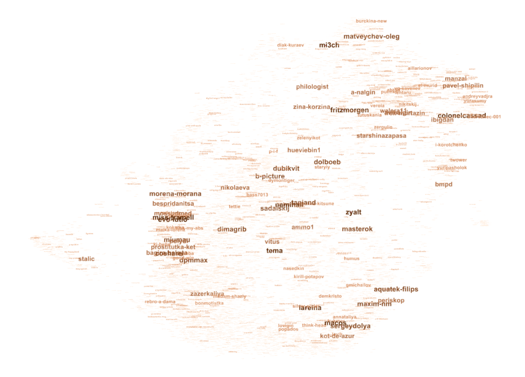

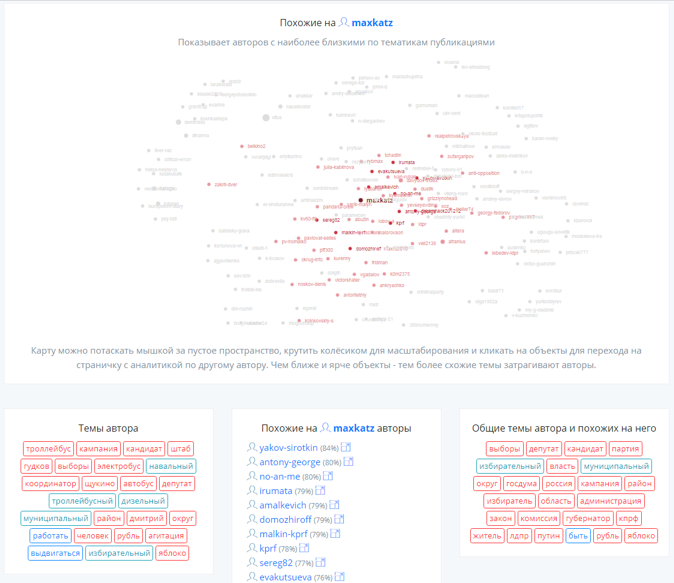

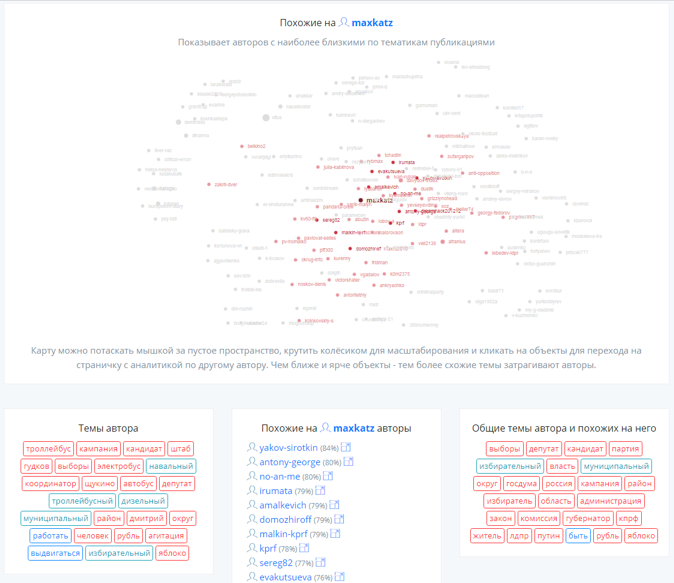

Recommender systems help a great deal with choosing, but not everywhere and not always in the way we would like. They often ignore the semantics of the content. On top of that, the “long tail” problem looms large: recommendations concentrate on the most popular items, while interesting but less popular things go uncovered.

I decided to start my experiment in this direction with a search for interesting texts, taking a fairly small but still-writing community of authors who remain on the LiveJournal blogging platform. Read on to see how to build your own recommender system and, as a side effect, get an assistant for choosing wine for the evening.

Why LiveJournal?

First, it is a blogging platform, and interesting things are often written there. Despite the migration of users to Facebook, by my estimate about 35-40 thousand active blogs still remain on LJ. That is a perfectly manageable amount for experimenting without big data tools. And the crawling itself is very simple, unlike working with Facebook.

In the end, I wanted a tool that recommends interesting authors. As the project went on, the wish list grew and multiplied, and not all of it could be covered right away. This article describes what has worked so far and what the plans are.

Crawling

As mentioned, crawling blogs on the LJ platform is very simple: you can easily get post texts, pictures (if you need them), and even the comments on posts along with their structure. I should say that hierarchical (threaded) comments, like those on Habr, are exactly what keeps some authors on this platform.

So, a simple crawler in Perl (the code is available on my GitHub) spent a couple of weeks of leisurely work downloading texts and comments from about 40 thousand blogs for two periods: summer 2016 and summer 2017. The two periods were chosen to compare LJ activity year over year.

Why Perl?

Because I like the language, I work in it, and I find it well suited to tasks with an unpredictable flow and outcome. That is exactly what you need in experimental information retrieval and data mining, where maximum flexibility matters more than maximum performance. For data processing it is, of course, better to use Python with its many libraries.

The input data for the study ended up looking roughly like this:

- Analysis period: 6 months

- Authors: about 45 thousand

- Publications: about 2.5 million

- Words: about 740 million

Analytical Model and Processing

Since I am not a data scientist (although I watch Kaggle contests from time to time), I chose very simple algorithms: the proximity of two authors is measured as the cosine distance between the vectors that characterize their texts.
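
As a rough illustration of that measure, here is a minimal Python sketch of cosine similarity over sparse vectors. Storing each vector as a `{term: weight}` dictionary and the toy author vectors are assumptions made for the example, not the data structures used in the project.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two sparse vectors stored as {term: weight}."""
    # Iterate over the shorter vector; only shared terms add to the dot product.
    if len(a) > len(b):
        a, b = b, a
    dot = sum(w * b[t] for t, w in a.items() if t in b)
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an empty text is treated as similar to nothing
    return dot / (norm_a * norm_b)

# Hypothetical toy vectors for two authors.
author_a = {"wine": 2.0, "travel": 1.0}
author_b = {"wine": 1.0, "politics": 3.0}

similarity = cosine_similarity(author_a, author_b)
distance = 1.0 - similarity  # cosine distance is one minus the similarity
```

Only the terms the two authors share contribute to the numerator, which is why sparse vectors stay cheap to compare.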

There were several ways to build vectors that characterize an author's texts:

1. The obvious one: build vectors with the TF-IDF metric over the collected text corpus. The disadvantage is that the vector space has very many dimensions (~60 thousand) and is very sparse.

2. The tricky one: cluster the text corpus with word2vec and take as the vector the projection of the texts onto the resulting clusters. The advantage is relatively short vectors, one component per cluster (usually ~1000). The disadvantage is that you need to train word2vec, pick the number of clusters, and think about how to project a text onto the clusters; it is all rather convoluted.

3. Also not bad: build the vectors with gensim doc2vec. This is almost the same as the second option seen from another angle, with every problem except training already solved. But it is in Python, and I didn’t want that.
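
The first option in the list above can be sketched roughly as follows. This is a minimal pure-Python illustration, assuming each author's texts have already been reduced to a list of lemmas. The function name, the plain `tf * log(N / df)` weighting, and the toy corpus are assumptions for the example; the article does not say which TF-IDF variant was used.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """docs: {author: [lemma, ...]} -> {author: {lemma: tf-idf weight}}."""
    n_docs = len(docs)
    # Document frequency: in how many authors' texts each lemma occurs.
    df = Counter()
    for tokens in docs.values():
        df.update(set(tokens))
    vectors = {}
    for author, tokens in docs.items():
        counts = Counter(tokens)
        total = len(tokens)
        vectors[author] = {
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        }
    return vectors

# Hypothetical toy corpus: three authors, already lemmatized.
toy_corpus = {
    "alice": ["wine", "taste", "wine", "day"],
    "bob": ["politics", "country", "day"],
    "carol": ["wine", "country", "day"],
}
vectors = tfidf_vectors(toy_corpus)
```

A lemma that every author uses, such as "day" in the toy corpus, gets a weight of zero, which is the point of the IDF factor: ubiquitous words do not help tell authors apart.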

In the end I chose the first model, supplemented with LSA (latent semantic analysis) in the form of an SVD decomposition of the resulting “document-term” matrix. The method has been described on Habr many times, so I will not dwell on it.

To lemmatize the terms, or rather to reduce Russian words to their base form, I used Yandex's mystem utility with a wrapper around it that combines several documents into a single file to feed to the utility. You could call it on every document, which is what I used to do, but that is not very efficient. From the corpus I selected nouns, adjectives, verbs, and adverbs longer than 3 characters that occur more than 100 times across the corpus.
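
The selection rule in the last sentence can be sketched like this. It is only an illustration: it assumes the lemmatizer output has already been parsed into `(lemma, part_of_speech)` pairs, and the tag names below are placeholders chosen for the example, not mystem's actual output format.

```python
from collections import Counter

# Placeholder part-of-speech labels; a real tagger's labels will differ.
KEPT_POS = {"NOUN", "ADJ", "VERB", "ADV"}

def build_vocabulary(tagged_docs, longer_than=3, more_frequent_than=100):
    """Keep lemmas of the chosen parts of speech that are long and frequent enough."""
    counts = Counter(
        lemma
        for doc in tagged_docs
        for lemma, pos in doc
        if pos in KEPT_POS and len(lemma) > longer_than
    )
    return {lemma for lemma, n in counts.items() if n > more_frequent_than}

# Hypothetical toy input: two documents of (lemma, tag) pairs.
toy_docs = [
    [("country", "NOUN"), ("day", "NOUN"), ("quickly", "ADV"), ("and", "CONJ")],
    [("country", "NOUN"), ("beautiful", "ADJ")],
]
# The frequency threshold is lowered so the toy corpus keeps something.
vocabulary = build_vocabulary(toy_docs, more_frequent_than=1)
```

With the defaults, the function applies the thresholds from the text (longer than 3 characters, more than 100 occurrences).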

For example, the top nouns reduced to the basic form:

1. Person

2. Time

3. Russia

4. Day

5. Country

The SVD decomposition was done with the external utility SVDLIBC, which had to be patched to handle very large matrices: it crashed when trying to create a “dense matrix” with more elements than the maximum value of the int type. The utility is good, though, in that it works with sparse matrices and can compute only the required number of singular vectors, which saves a lot of time. By the way, there is a Perl module, PDL::SVDLIBC, based on this utility's code.

The length of the vector (the number of singular vectors in the decomposition) was chosen by “expert analysis” (read: by eye) on a test set of journals. I settled on n = 500.
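
A quick back-of-the-envelope check shows why a dense allocation of this size overflows. The sketch assumes a 32-bit signed `int` and a matrix of roughly 45 thousand rows (authors) by 60 thousand columns (terms), using the approximate figures quoted earlier; the actual dimensions of the matrix are not stated in the article.

```python
INT_MAX = 2**31 - 1  # largest value of a signed 32-bit int: 2,147,483,647

rows = 45_000  # assumed: roughly the number of authors
cols = 60_000  # assumed: roughly the TF-IDF vocabulary size

dense_elements = rows * cols
overflows_int = dense_elements > INT_MAX

print(dense_elements, overflows_int)  # 2700000000 True
```

Python integers do not overflow, so the product is exact here; in C the same multiplication in `int` arithmetic would not fit.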

The result of the analysis is a matrix of authors' similarity coefficients.

Result visualization

At the initial stage, I wanted to see the structure of the thematic communities with my own eyes in order to understand what to do next.

I tried clustering and visualization with self-organizing maps (SOM) in the Deductor analytics package, feeding it the 500-value vectors from the SVD. The result was a long time coming (multithreading? never heard of it) and was disappointing: three large clusters with authors spread fairly densely over the whole map.

It was obvious that the distance matrix could be turned into a graph by keeping only the edges whose proximity coefficient exceeds a certain threshold. I wrote a converter from the matrix to a graph in GDF format (it seemed to me the most suitable for visualizing various parameters), fed this graph to the Gephi graph visualization package, and, after experimenting with different layouts and display parameters, got the following picture:
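
The conversion described above might look roughly like the following sketch. It assumes a small symmetric similarity matrix held in memory as nested lists; the function name, the node ids, the threshold value, and the choice of columns are illustrative assumptions, not the converter actually used.

```python
def similarity_to_gdf(names, sim, threshold):
    """Render a symmetric similarity matrix as GDF text, keeping strong edges only."""
    lines = ["nodedef>name VARCHAR,label VARCHAR"]
    for i, name in enumerate(names):
        lines.append(f"n{i},{name}")
    lines.append("edgedef>node1 VARCHAR,node2 VARCHAR,weight DOUBLE")
    for i in range(len(names)):
        for j in range(i + 1, len(names)):  # upper triangle: each pair once
            if sim[i][j] > threshold:
                lines.append(f"n{i},n{j},{sim[i][j]:.3f}")
    return "\n".join(lines) + "\n"

# Hypothetical toy data: three authors and their pairwise similarities.
names = ["alice", "bob", "carol"]
sim = [
    [1.0, 0.8, 0.1],
    [0.8, 1.0, 0.4],
    [0.1, 0.4, 1.0],
]
gdf_text = similarity_to_gdf(names, sim, threshold=0.3)
```

The threshold is the main knob: raising it thins the graph, which matters for a map that otherwise comes out too dense.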

The picture is also available at 3000x3000 resolution for closer study. And if you sometimes visit LJ blogs, you may find familiar names there.

This picture agrees quite well with my idea of the thematic structure of the authors, although it is not ideal (too dense). Only a little remained: to give myself and others the ability to get recommendations based on personal preferences, that is, enter an author's name and get recommendations of similar authors.

For this purpose, I wrote a small visualization in d3.js, using a force layout, of the graph regions adjacent to the author of interest. For each author (a little more than 40 thousand of them, as I said), a JSON file was generated with a description of the nearby graph nodes, a “tag annotation” based on a sorted list of the author's most popular words, and a similar annotation for the author's group, which consists of the nearby nodes. The annotations are meant to give some idea of what the author writes about and which topics matter to similar bloggers.
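
To make the shape of such a per-author file concrete, here is a minimal sketch. The field names (`author`, `tags`, `group_tags`, `nodes`) and the way tags are picked (most frequent lemmas) are assumptions for illustration; the real file layout behind the visualization is not described in the article.

```python
import json
from collections import Counter

def author_neighborhood(author, neighbors, words_by_author, top_tags=3):
    """neighbors: [(name, similarity), ...]; words_by_author: {name: [lemma, ...]}."""
    def top_words(tokens):
        return [word for word, _ in Counter(tokens).most_common(top_tags)]

    # The group annotation is built from the words of all nearby authors.
    group_tokens = [
        word for name, _ in neighbors for word in words_by_author.get(name, [])
    ]
    return {
        "author": author,
        "tags": top_words(words_by_author.get(author, [])),
        "group_tags": top_words(group_tokens),
        "nodes": [
            {"name": name, "similarity": score}
            for name, score in sorted(neighbors, key=lambda p: p[1], reverse=True)
        ],
    }

# Hypothetical toy data.
words = {
    "alice": ["wine", "wine", "taste"],
    "bob": ["wine", "travel", "travel"],
    "carol": ["travel", "photo"],
}
record = author_neighborhood("alice", [("carol", 0.4), ("bob", 0.8)], words, top_tags=2)
payload = json.dumps(record, ensure_ascii=False, indent=2)
```

Generating one small static file per author keeps the front end simple: the page only has to fetch the file for the requested name and hand its nodes to the force layout.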

The result was similarity.me, a mini search engine with recommendations and a graphical map:

What can be improved?

- Adequacy: data collection is still under way, so post coverage will grow and the semantic proximity of the authors on the map should become more adequate. I also plan an additional pre-selection of authors to exclude “half-dead” blogs, repost lovers, and automatic publishers, which will thin the garbage out of the graph a little.

- Accuracy: there is an idea to use neural networks to build the document vectors and to experiment with distances other than cosine.

- Visualization: try to overlay the thematic structure on the structure of relations between authors using hexagonal projections and the same self-organizing maps, and achieve a clearer clustering of the overall map.

- Annotation: try annotation algorithms based on collocations.

What about the wine?

The title picture is in fact a map of wines by taste characteristics :)

A side result of the study was the thought: why not use this approach to recommend products based on their descriptions? The tasting notes that wine critic Denis Rudenko published on his blog, Daily Wine Telegraph, were perfect for this. Unfortunately, he stopped publishing them long ago, but the more than 2000 wine descriptions accumulated there are excellent material for such an experiment.

No special text preprocessing was done. After feeding the notes to the system, I got a taste map of the 700 best wines, with recommendations of similar ones:

On it you can highlight wines made from the most popular grape varieties, or by their subjective (from the taster's point of view) flavors.

There are plans to improve the recommendations a little by converting some adjectives into nouns, for example the adjectival form “blueberry” into the noun “blueberry” (in Russian the two are different words), and to place all of the more than 2,000 tasted wines on the map.

One way or another, you can tackle the problem of choice (if it happens to be relevant for you) by extending these approaches to other areas.