How we at Tutu.ru make each of our 9000+ UI tests effective

As any project develops and grows, it keeps gaining new functionality. QA processes must respond to this promptly and adequately, for example by increasing the number of tests of all kinds. In this report we will talk about UI tests, which play an important role in building a quality product. An automated UI testing system not only significantly reduces the time spent on regression testing, but also underpins development tools and processes such as Continuous Integration and release engineering.

The number of tests keeps growing: from 1000 to 3000, from 6000 to 9000+, and so on. To keep this "avalanche" from burying our QA process, you need to think about the effectiveness of the whole system, and of each test in it, from the earliest stage of the automation project.

In this report I will explain how to make the system flexible enough to respond to requests from the business, and how to use each test effectively. We will also talk about evaluation and metrics, not only for the automation processes but for QA as a whole.

Report outline:

- Let's start with the principles of "test building", which make our system as convenient as possible for use;

- We will analyze the ways to integrate the UI testing system into the processes of QA teams;

- Let's look at specific methods for improving the effectiveness of each test;

- Let's talk about the metrics of the UI testing system and how it relates to Continuous Integration and release engineering.

Requirements for the UI-testing system and the principles of "test building"

We set the following requirements for the UI test automation system: it should be easy to use and intuitive, maintaining test coverage should not be time-consuming, the system should be resilient to errors in the test code, and, finally, it should be very efficient.

Based on this, the first and most important principle is that tests must be as easy to read as possible. Each test should be understandable to any employee who can read English.

Use a high level of abstraction and proper naming of functions, variables, and so on, and keep an eye on this during code review.

The next principle: each test project should be as independent of the others as possible. This lets each project set its own goals and objectives, and work on one project does not get in the way of developing the others.

Every change to the code must pass code review. I recommend using the same review tooling that the developers on your team already use.

Use pre-push and post-commit hooks to protect the health of the "core" of your system. At a minimum, run unit tests in them.
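A hook of this kind can be very small. The sketch below is only an illustration written in Python: it runs a list of check commands and returns the first failing exit code. The `CHECKS` list, the `vendor/bin/phpunit` path, and the `tests/unit` directory are all assumptions, not a description of our actual hooks.

```python
import subprocess
import sys

# Hypothetical check list: each entry is one command that must succeed.
# The PHPUnit path and the tests/unit directory are assumptions for illustration.
CHECKS = [
    ["vendor/bin/phpunit", "tests/unit"],
]


def run_checks(commands):
    """Run each command in order; return the first non-zero exit code, else 0."""
    for command in commands:
        try:
            completed = subprocess.run(command)
        except FileNotFoundError:
            print(f"hook: command not found: {command[0]}", file=sys.stderr)
            return 127
        if completed.returncode != 0:
            print(f"hook: {' '.join(command)} failed", file=sys.stderr)
            return completed.returncode
    return 0
```

Saved as an executable `.git/hooks/pre-push` script, the file would end with `sys.exit(run_checks(CHECKS))`; Git aborts the push when a pre-push hook exits with a non-zero status. A post-commit hook cannot undo the commit, so there the result can only be reported.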

Unit tests check the correctness of individual modules of the program's source code. It is important not to confuse unit tests with UI tests: they are not interchangeable, they complement each other.

The entire core of our project is covered by unit tests; at the moment there are more than 500 of them. We run them on every push and commit to the repository.

UI testing in QA team processes

Here is how we integrated the UI testing system into our teams and product processes. The main goal is for every test to be useful from an early stage of development. As soon as a test is written, it goes straight into the Continuous Integration system. Maintaining test coverage should be a standard part of testing any task. That is why Tutu.ru has no testers who do only manual testing: every specialist in our company covers the full range of testing work.

A task must not make it into master if it breaks any tests. We always keep this in mind, even when the customer is in a hurry.

The labor costs of each stage of the QA process should be monitored.

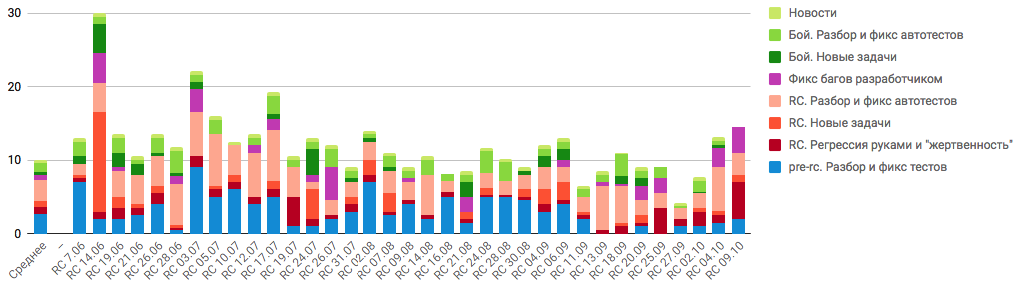

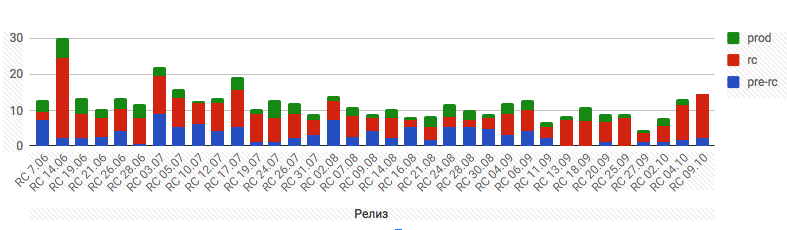

Here are a few of our charts: a breakdown of the labor costs of the release cycle and of its key stages for one of the teams, in man-hours. The teams show different results, but they share one goal: to reduce labor costs while maintaining the stated level of quality.

Detailing the release cycle of one of the teams in man-hours

Labor costs for the main stages of the release cycle of one of the teams in man-hours

UI tests in the release cycle

Most importantly, tests should be run as often as possible, at every stage of a task's development. Each stage should finish with as many “green” tests as possible, and we keep an eye on this. For some stages we use test suites compiled specifically for them. Note that each suite is a selection from the common test pool, so tests from different suites may overlap.

Any development begins with the "story branch" stage. At this stage we run a test suite put together by the testers. Anyone can launch it: a developer, a tester, or an analyst. Test coverage is updated at this stage, and the QA engineer responsible for testing the task is involved.

The next stage is pre-RC: the "nightly builds". A special branch is deployed to the test stand every day, and the entire pool of more than 9000 tests runs against it. Every team uses the results of these runs. Test coverage is finalized at this stage.

The next stage is RC. This is our release process; we release twice a week. A special release test suite is used here, and at this step practically all tests should be "green". If something is wrong, it gets fixed.

Final release (stable). This stage also uses the release test suite.

Project support

A separate role is the project supporter, who promptly resolves problems the QA team runs into. Keeping the tool working at all times is very important for the team. We use a Service Desk so that every employee can get support when using the system.

Improving the effectiveness of each test

In this section I will talk about the specific tools that, step by step, made UI tests more convenient to use and thereby increased the efficiency of the system.

Test Container Control and Management Project

There are a lot of tests. The multi-threaded launch system consists of more than 150 test containers that need to be monitored. We built a tool that lets you control the test threads, shows how loaded the containers are, and integrates closely with the Runner module.

Container Management System Interface for UI Tests

Custom test runner

We wrote our own runner for UI tests. Low resource consumption was paramount for us. Flexibility in development also matters, because we need to respond to business requirements. Runner can balance the load on the system while taking launch priorities into account: release cycle launches and devel environment launches have different priorities, and Runner balances them accordingly. It also integrates better with the other modules of the system.
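One simple way to take launch priorities into account is a priority queue. The sketch below is a Python illustration under stated assumptions: the `LaunchQueue` class, the priority values, and the test names are all hypothetical, and the actual balancing logic of Runner is not described in this report.

```python
import heapq
import itertools

# Hypothetical priorities: a lower number is served first.
RELEASE = 0
DEVEL = 1


class LaunchQueue:
    """Orders test launches by priority, keeping FIFO order within a priority."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker that preserves insertion order

    def submit(self, priority, test_name):
        heapq.heappush(self._heap, (priority, next(self._counter), test_name))

    def next_launch(self):
        """Return the next test to start, or None when the queue is empty."""
        if not self._heap:
            return None
        _, _, test_name = heapq.heappop(self._heap)
        return test_name


launches = LaunchQueue()
launches.submit(DEVEL, "SearchFormTest")
launches.submit(RELEASE, "OrderPaymentTest")
launches.submit(DEVEL, "FooterLinksTest")
order = [launches.next_launch() for _ in range(3)]
# order == ["OrderPaymentTest", "SearchFormTest", "FooterLinksTest"]
```

A strict priority queue like this can starve low-priority launches while release runs keep arriving, so a real balancer would likely add quotas or aging on top of it.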

Internal organization

A special PHP script builds a test queue from the repository. The queue is passed to our multi-threaded launch module, which forks a separate PHP process for each test. Each of these processes has access to a database, from which it receives the user data the test will run under.
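The flow described above (a queue of tests, a pool of workers, and per-test user data) can be sketched roughly as follows. This is a Python illustration, not our PHP code: threads stand in for the separate PHP processes, the hard-coded lists stand in for the repository scan and the database, and leasing each account exclusively to one worker at a time is an assumption.

```python
import queue
from concurrent.futures import ThreadPoolExecutor

# Hypothetical data: in the text the queue is built from the repository and
# the accounts come from a database; both are hard-coded here.
TEST_QUEUE = ["LoginTest", "SearchTest", "OrderTest", "RefundTest", "ProfileTest"]
ACCOUNTS = ["user_a", "user_b"]


def run_all(tests, accounts, workers=2):
    """Run every test on a worker, each holding one account while it runs."""
    free_accounts = queue.Queue()
    for account in accounts:
        free_accounts.put(account)

    def run_one(test_name):
        account = free_accounts.get()      # blocks until an account is free
        try:
            # A real worker would execute the UI test here.
            return (test_name, account)
        finally:
            free_accounts.put(account)     # hand the account back

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_one, tests))


results = run_all(TEST_QUEUE, ACCOUNTS)
```

`results` lists each test together with the account it ran under, in queue order.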

Runner Module Schematic

Test Cases and Test Suite Management

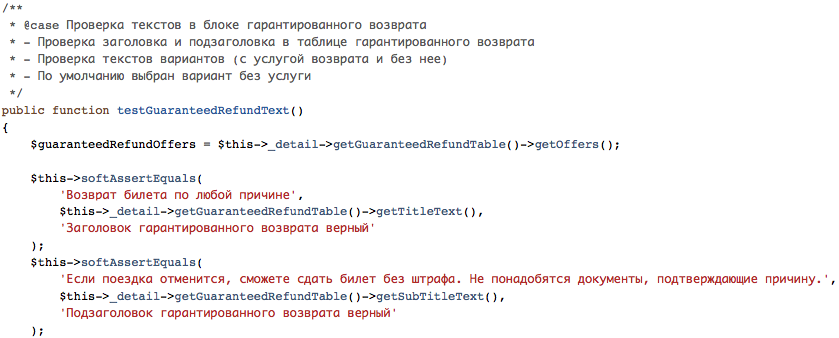

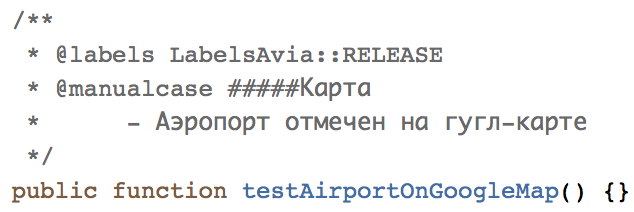

We store test documentation next to the test code, which ties UI tests and test cases into a single whole. This is especially convenient when generating reports: each test has a description that lets you quickly understand exactly which risks it covers. We implemented this functionality using PHPDoc.

For test cases that are already covered, the case tag is used:

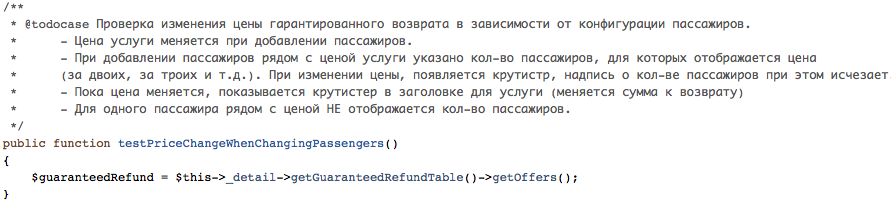

For test cases that are not yet implemented in code, the todocase tag is used:

For test cases that can only be checked manually, the manualcase tag is used:

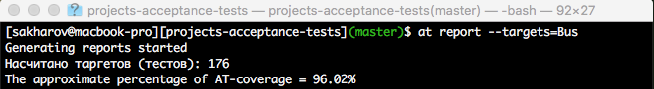
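To give a feel for how such tags can be collected, here is a small Python sketch that scans PHP source text for them. The tag names come from the text above; the `@tag description` syntax, the sample docblock, and the test method are assumptions made for illustration.

```python
import re
from collections import Counter

# Hypothetical PHP test source; the tag names come from the text, the exact
# syntax and the descriptions after each tag are assumed for illustration.
PHP_SOURCE = '''
/**
 * @case User can pay for an order with a bank card
 * @case User sees the success page after payment
 * @todocase User can pay with a saved card
 * @manualcase Ticket PDF prints correctly on paper
 */
public function testPayment() {}
'''

TAG_PATTERN = re.compile(
    r"^\s*\*\s*@(case|todocase|manualcase)\s+(.+?)\s*$", re.MULTILINE
)


def collect_cases(source):
    """Return (tag, description) pairs for every test-case tag in the source."""
    return TAG_PATTERN.findall(source)


cases = collect_cases(PHP_SOURCE)
counts = Counter(tag for tag, _ in cases)
```

Here `counts` holds the number of covered, not yet automated, and manual-only cases found in the file.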

Test coverage calculation

Using the same tag mechanism, we automatically calculate the UI coverage of each project. The calculation uses the following formula:

coverage_percentage = (1 - (number_of_todocase_tests + number_of_manualcase_tests) / total_number_of_tests_in_project) * 100%
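As a worked example of the formula, here is a short Python sketch. The function name and the sample counts are hypothetical and serve only to show the arithmetic.

```python
def ui_coverage_percent(todocase_count, manualcase_count, total_cases):
    """Coverage = (1 - (todocase + manualcase) / total) * 100."""
    if total_cases <= 0:
        raise ValueError("total_cases must be positive")
    uncovered = todocase_count + manualcase_count
    return (1 - uncovered / total_cases) * 100


# Hypothetical numbers: 200 cases, 20 not yet automated, 10 manual-only.
coverage = ui_coverage_percent(20, 10, 200)
print(f"{coverage:.1f}%")  # prints 85.0%
```

With 30 of 200 cases not automated, the uncovered share is 0.15, so the coverage is 85%.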

Console output with percentage of UI-tests coverage for the “Buses” project

Test Suite Management

We use the same mechanism to manage test suites. For example, we have suites for specific functionality and suites for releases and RC cycles; in general, the creation of suites is limited only by the imagination of the QA specialists. Each test can belong to several suites, which we mark with the @labels tag.

The test refers to the release test suite.

Example of launching the test suite for the functionality of a success page
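Selecting a suite by label then comes down to a simple filter. The Python sketch below assumes the labels have already been read from the @labels tags; the test names and the `release` and `success_page` label values are hypothetical.

```python
# Hypothetical test registry: test name -> labels taken from its @labels tag.
TEST_LABELS = {
    "OrderPaymentTest": {"release", "success_page"},
    "SearchFormTest": {"release"},
    "SuccessPageBannerTest": {"success_page"},
    "FooterLinksTest": set(),
}


def select_suite(test_labels, label):
    """Return the sorted names of all tests that carry the given label."""
    return sorted(name for name, labels in test_labels.items() if label in labels)


release_suite = select_suite(TEST_LABELS, "release")
success_page_suite = select_suite(TEST_LABELS, "success_page")
```

`OrderPaymentTest` ends up in both suites, which is the overlap between suites mentioned earlier.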

HTML report

A report is generated separately for each launch. A tester can also start a run manually and get the report as an HTML page, which gives the QA specialist a quick way to assess the quality of the product. HTML reporting reduces the time needed to update tests. Reports are available in the CI tool as well, but it is sometimes useful for a tester to see the report in their own working copy.

"Soft asserts"

PHPUnit, like any other unit testing framework with assertions, works like this: if an assertion encounters a data mismatch, the test stops. We changed this paradigm. Soft assertions, as we call them, do not interrupt the test when they encounter a problem. The test continues through all the remaining assertions and finally fails at the teardown stage. In this way soft assertions report on the quality of a whole block within a single test, even if part of that block has problems. Such assertions are also safer in complex test logic. For example, we have a test that places an order with a bank card for real money, and we do not want it to “fall” somewhere after placing the order and before it has had time to cancel it.
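The idea can be shown in a few lines. The following is a minimal Python sketch of the pattern, not our PHPUnit extension: the `SoftAssertions` class, its method names, and the order scenario are hypothetical.

```python
class SoftAssertions:
    """Collects assertion failures instead of raising on the first one."""

    def __init__(self):
        self.failures = []

    def check_equal(self, expected, actual, message=""):
        if expected != actual:
            self.failures.append(f"{message}: expected {expected!r}, got {actual!r}")

    def assert_all(self):
        """Call at teardown: fail once, reporting every collected mismatch."""
        if self.failures:
            raise AssertionError("\n".join(self.failures))


soft = SoftAssertions()
steps = []

soft.check_equal("Moscow", "Moskow", "departure city")   # mismatch, recorded
steps.append("order placed")
soft.check_equal(2, 2, "passenger count")                 # passes
steps.append("order cancelled")                           # cleanup still runs
soft.check_equal(100, 90, "total price")                  # mismatch, recorded

try:
    soft.assert_all()
    outcome = "passed"
except AssertionError as error:
    outcome = "failed"
    report = str(error)
```

Both mismatches end up in a single failure report, and the cancellation step runs even though the first check had already failed.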

Flexible launcher settings system

We created it to meet the needs of QA specialists, above all to integrate as closely as possible with CI. It is written with Symfony Console and currently has more than 30 parameters.

A few of them:

On-demand. Some of our tests are high-risk and never start automatically. They run only if the tester specifies “on-demand” in the launch parameters.

Bug-skipped tests. Tests that are blocked by a problem in the product can be marked with a special label, and these tests will not run in the CI system. To catch the moment when the product has been fixed and a test can be re-enabled, we have a special plan that runs once a week and executes only the tests that are currently deactivated.

Js-error-seeker. This option is especially useful for front-end developers. Front-end developers and testers use it to check for JS errors while a test is running. With this mechanism we can catch a JS error anywhere along the test's path.

Notify-maintainers. Each test can have a maintainer: the person who is responsible for the test and wants to be notified if it fails. This flag turns that notification on.
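The selection rules for on-demand and bug-skipped tests can be sketched as follows. This is a Python `argparse` illustration rather than our Symfony Console launcher, and the option names `--on-demand` and `--only-bug-skipped`, as well as the test metadata, are hypothetical.

```python
import argparse

# Hypothetical test metadata for illustration.
TESTS = [
    {"name": "SearchFormTest", "on_demand": False, "bug_skipped": False},
    {"name": "RealMoneyOrderTest", "on_demand": True, "bug_skipped": False},
    {"name": "BrokenPromoTest", "on_demand": False, "bug_skipped": True},
]


def build_parser():
    parser = argparse.ArgumentParser(description="UI test launcher sketch")
    parser.add_argument("--on-demand", action="store_true",
                        help="also run high-risk tests that never start automatically")
    parser.add_argument("--only-bug-skipped", action="store_true",
                        help="run only tests currently disabled because of a product bug")
    return parser


def select_tests(tests, options):
    """Apply the on-demand and bug-skipped rules to the test list."""
    if options.only_bug_skipped:
        return [t["name"] for t in tests if t["bug_skipped"]]
    return [
        t["name"] for t in tests
        if not t["bug_skipped"] and (options.on_demand or not t["on_demand"])
    ]


parser = build_parser()
default_run = select_tests(TESTS, parser.parse_args([]))
on_demand_run = select_tests(TESTS, parser.parse_args(["--on-demand"]))
weekly_run = select_tests(TESTS, parser.parse_args(["--only-bug-skipped"]))
```

A default run skips both the high-risk and the bug-skipped test, `--on-demand` adds the high-risk one, and the weekly plan would use something like `--only-bug-skipped` to re-check only the deactivated tests.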

Metrics

The project for monitoring and managing test containers tracks the system load and so shows where the system needs to grow.

We also chart how long test runs take on the different stands. We have set a limit of 60 minutes: if any project exceeds it, that is a reason to look into the project and find out why its test suite takes so long.

Production project test time

Release engineering

Here we will talk about how the UI testing tool should interact with the release engineering project.

Here is what the combination of the UI testing system and Bamboo can do: run builds, generate reports, support the release process, and automatically run builds on a schedule. It can also automatically “roll back” a release if the smoke test suite shows that something went wrong.

The CI system may contain a large number of different plans. This is absolutely normal, so do not be afraid of it.

Conclusions

- Each test should run as often as possible;

- Tight integration between the modules of the UI testing system is very important, and it is worth writing your own implementation to get it;

- The system must be flexible and maintainable; ready-made tools do not always meet these requirements;

- Keep track of the performance of QA processes and improve them as soon as you see that something is going wrong;

- QA should be part of the development process, and our tools must support this.