Experiments with Neural Interfaces in JavaScript


The author of the article whose translation we are publishing today says that over the last couple of years she has developed a steady interest in neurotechnology. In this article, she describes her experiments with various hardware and software systems that connect the brain to a computer.

I don't have a basic computer education (I studied advertising and marketing). I mastered programming on courses in General Assembly.

When I was looking for the first job, I started experimenting with JavaScript and with different devices. In particular, my first project was the organization of control of the Sphero robotic ball through arm movement using Leap Motion .

Sphero Ball, hand-guided with Leap Motion Technology

This was my first experience using JavaScript to manage something outside the browser. I was immediately, as they say, "hooked."

Since then, I have spent a lot of time working on interactive projects. Each time, taking up a new project, I tried to find for myself more and more complex tasks. So I constantly developed and learned something new.

After experimenting with various devices, in search of another interesting task, I came across sensors of brain activity from the NeuroSky company.

When I was interested in experiments with sensors of brain activity, I decided to purchase a NeuroSky neuro headset. She was much cheaper than other similar offers.

NeuroSky NeuroSky

I didn’t know if my qualification was enough to write at least something for such a device (I just finished programming courses at that moment), so I decided to choose something cheaper so that the task will be prohibitively difficult for me, not to waste too much money. Fortunately, a JavaScript framework has already been created for working with the headset, so it was quite easy to begin the experiments. In particular, I used the estimate of the level of my attention to control the Sphero ball and the Parrot AR.Drone quadrocopter.

In the course of the experiments, I quickly realized that this neuro headset is not particularly accurate. She has only three sensors, so she allows to get rather approximate data on brain activity. The device gives access to the raw data from each sensor, which allows, for example, to visualize this data. But the fact that the headset has only three electrodes does not allow drawing any serious conclusions about what is happening in the human brain based on the data obtained from it.

When I decided to look for other devices for reading indicators of brain activity, I found the Emotiv Epoc neuro headset . I had the feeling that this thing has more serious features in comparison with the NeuroSky headset, so I decided to buy it to continue my experiments.

Before I talk about how Emotiv Epoc works, I suggest to briefly talk about how the human brain works.

I can't call myself a great neuroscience expert, so my story about the brain will be rather superficial. Namely, I want to talk about a few basic things that need to be known to those who want to better understand how the neurohearnes work.

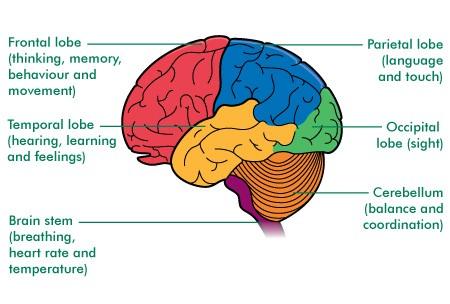

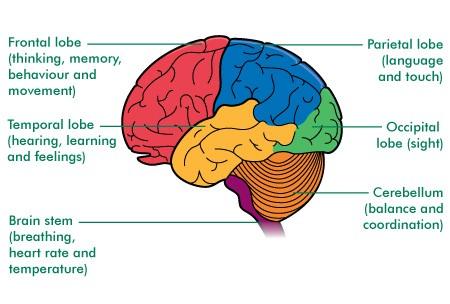

The brain consists of many billions of neurons - specialized cells that are engaged in the processing, storage and transmission of information. Different parts of the brain, consisting of neurons, are responsible for different physiological functions.

Different parts of the brain (source - macmillan.org.uk)

For example, let 's talk about how the brain controls the movements. Parts of the brain, such as the primary motor cortex and the cerebellum, are responsible for movement and coordination. The signals of the corresponding neurons act on the muscles, which leads to movements.

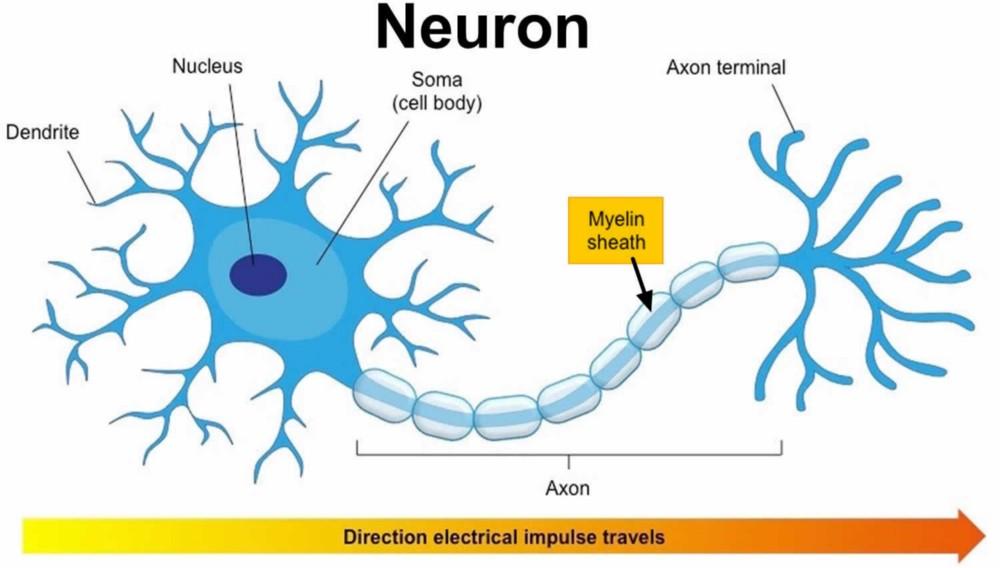

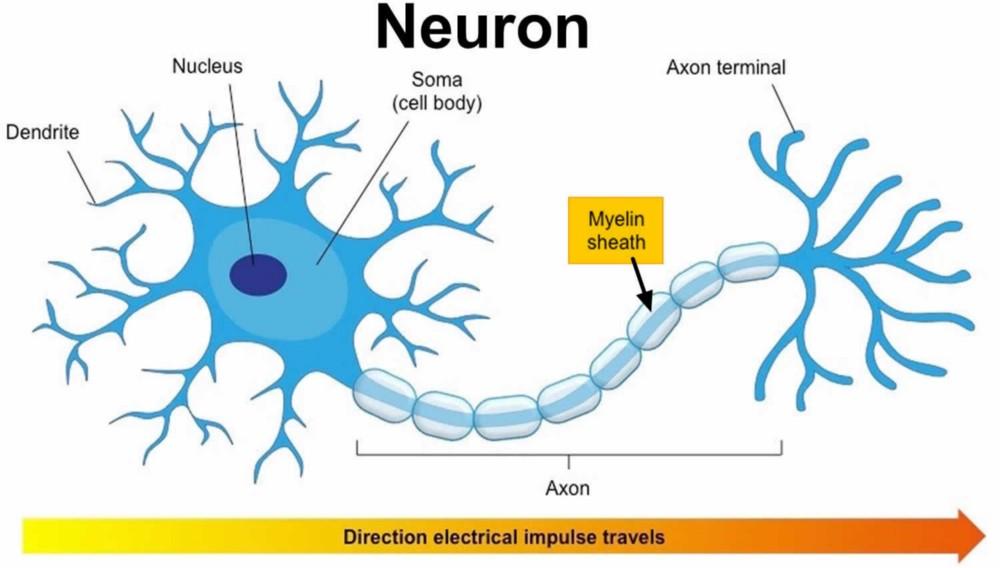

Anatomy of a neuron

, as I said, here represented by an extremely simple description of the brain, but for us the most important thing here is that the activity of the neurons can be monitored by methods of electroencephalography (EEG), by reading the parameters of the electrical activity of the brain with the surface of the scalp.

Other technologies can be used to monitor brain activity, but their use implies surgical intervention. In particular, we are talking about electrocorticography - with this approach, the electrodes are superimposed directly on the cerebral cortex.

Now that we’ve found that the brain generates electrical signals that can be read as we work, let's talk about the Emotiv Epoc headset.

The Emotiv company produces several types of neurogarnitures:

The Epoc headset has 14 sensors (also called “channels”) located in different places of the head.

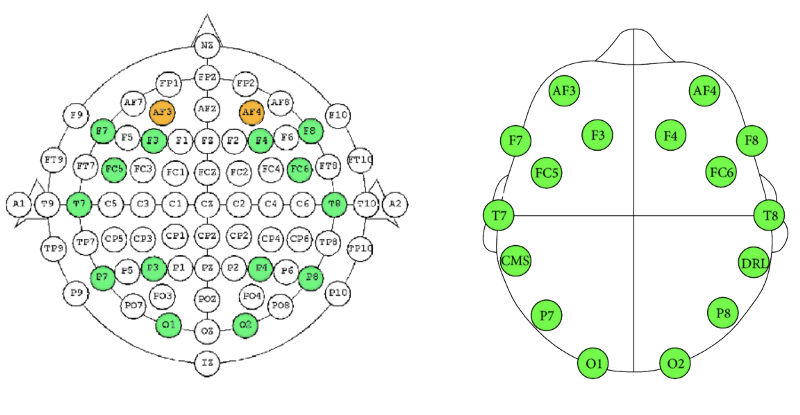

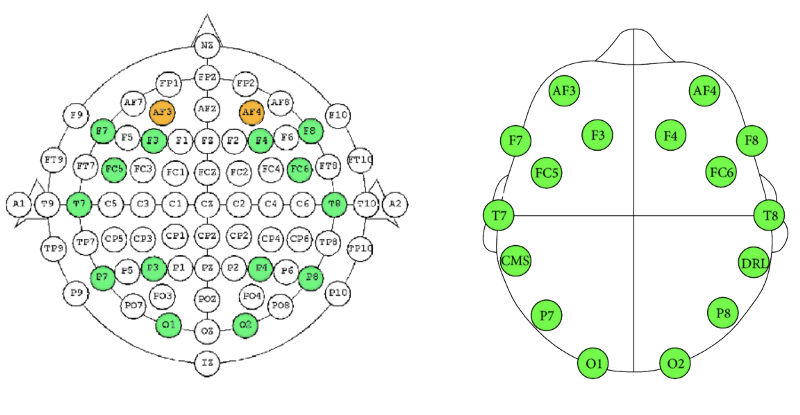

The following figure, on the left, shows the layout of electrodes 10-20 recommended by the International Federation of Electroencephalography and Clinical Neurophysiology. Each electrode corresponds to a specific area of the brain. The use of the 10-20 system allows you to follow a certain standard when creating various devices and when performing scientific studies of the brain.

The figure on the right shows the layout of the electrodes of the Emotiv Epoc headset. To compare it with the 10-20 system, the selection is green and orange.

Comparison of the international placement system of electrodes 10-20 and the Emotiv Epoc headset with

14 Epoc channels is not so much, but the electrodes are placed across the scalp fairly evenly. This gives hope that with Epoc it is possible to obtain fairly accurate information about brain activity.

The headset reads sensors at a frequency of 2048 samples per second (SPS). At the same time, the user has access to the signal sampling rate of 128 or 256 SPS. The device is capable of capturing brain waves with a frequency of 0.16 to 43 Hz. There are various rhythms of the brain, their brief characteristics are shown in the following figure.

Types of brain waves

Why is this important? The fact is that depending on the application that needs to be built on the basis of an electroencephalograph, we may need to pay special attention to brain waves of a certain frequency. For example, if we need to create a program to help meditators, then we will probably be interested only in theta waves, whose frequency is 4-8 Hz.

Having dealt with the principles underlying electroencephalography, let's talk about the capabilities of Emotiv Epoc and related software.

Emotiv software is not open source, a special license is required to access the raw sensor signal. Under normal conditions, the following features are available when working with Emotiv Epoc:

In order to use the recognition of mental commands, first the user needs to train the system . Learning data is saved as a file.

If you want to develop your own programs for Emotiv Epoc, you can use API Cortex and the corresponding SDK (its support is discontinued after the release of version 3.5). If you want to use JavaScript, you can take a look at my development, the Epoc.js library .

Epoc.js is a framework designed to interact with Emotiv Epoc and Insight devices using JavaScript. This framework gives the developer access to the above features of the systems from Emotiv and allows you to interact with the emulator .

Here is the simplest project based on Epoc.js:

In this sample code, we connect the Node.js module

Each such property can be set to either 0 or 1. A unit in the property value means that the system has recorded the corresponding event. A full list of these properties can be found here .

The described library was created using the Emotiv C ++ SDK, Node.js and three modules for Node.js: Node-gyp, Bindings and Nan. Its development used an approach that can now be considered obsolete. Now the use of N-API is relevant .

Having discussed the various possibilities of neuroheternologies and the ways of program work with them, I will tell you about several prototypes that I created that use the neurointerface.

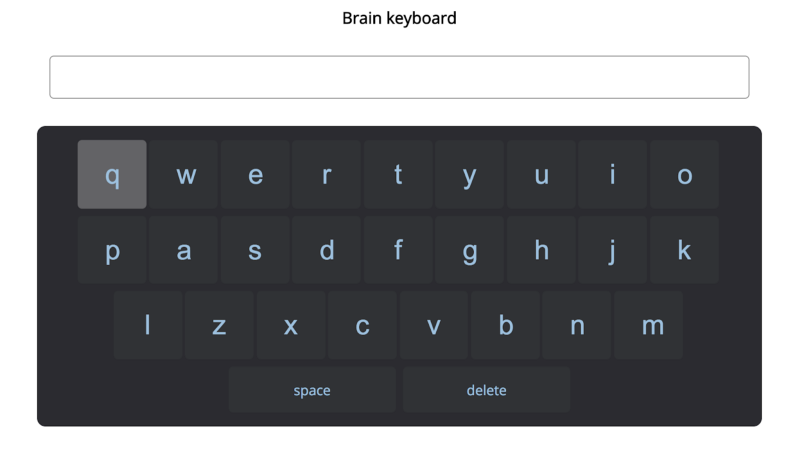

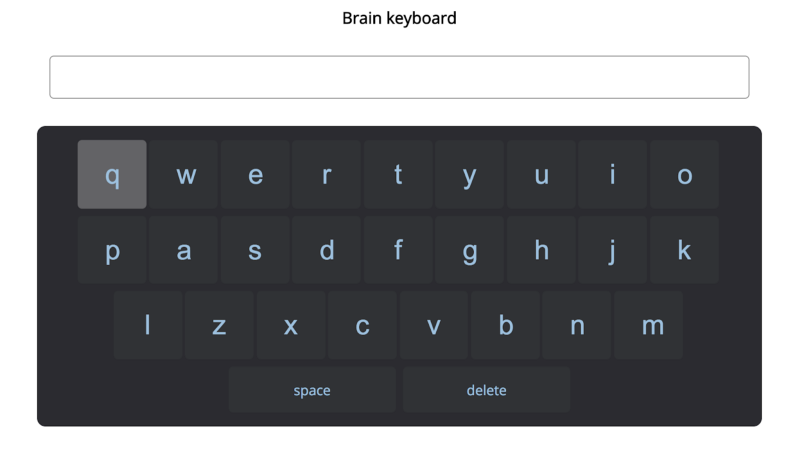

This is how the keyboard, which controls the movement of the eyes.

Eye Movement Keyboard Prototype

This was my first project created using Emotiv Epoc. I wondered if it was possible to create a simple interface using a neuro headset that allows a person to interact with a computer using eye movements. For example, when you translate the view to the right or left on the keyboard, the corresponding keys are highlighted. In order to "press" the highlighted key, you need to blink. The corresponding letter appears in the box above the keyboard.

This project looks very simple, but the most important thing is that it works.

In my second project I used mental commands. Creating it, I wanted to understand whether it is possible to control an object that is in three-dimensional space, just thinking about something.

Web-interface, managed by thoughts

Here, to create a simple three-dimensional environment, I used the Three.js library, the Epoc.js library was used for recognition of mental commands, and web-sockets were used to send data from the server to the client.

Starting the third project, I wanted to explore the possibilities of managing real devices using mental commands. I have been interested in IoT development using JavaScript for quite some time, so it was interesting for me to know what happens if I combine Parrot quadrocopter and neuro headset.

Quadcopter

All the projects described above, all the prototypes created, are pretty simple designs that I created to test some ideas in practice and to evaluate the possibilities and limitations of neural interfaces.

The word “neurointerface” sounds amazing, and when it turns out that a computer can control the power of thought, it may seem like it is the future, but in fact neurocomputer technologies still have quite a few limitations.

It’s quite normal that users have to train the system, during which they record brain waves and compare them with certain teams, but for many, such a step is an obstacle to adopting a new technology. It is hard for me to imagine that someone will spend time on learning neurocomputer systems, except that such a system will be really needed by someone, while the accuracy with which it recognizes mental commands will be at a very high level.

When I developed my prototype based on computer perception of mental commands, I found out that there was some delay between the moment when I started to think and the program when I reacted to this thought.

I think the point here is that the machine learning algorithm used in the prototype receives data from the device in real time. In order to recognize the thought that he had previously learned, he needs indicators collected over a period of time.

This has an impact on which programs can be built on the basis of a neural interface. For example, a program that helps to meditate looks quite realistic, since the delays between a change in brain state and the program’s response do not particularly affect the results of such a program. However, if you ask yourself to create something like a wheelchair that is controlled by thoughts, the problem of delays becomes much more acute, calling into question a similar development.

Electroencephalogram removal devices are great for everyday use in ordinary life situations. It is enough to put on a headset, putting a special gel on the sensors, and everything is ready. However, the fact that signals generated by the brain are read from the scalp, and not, say, from the surface of the brain itself, impairs the accuracy of such signals.

If we talk about the frequency of removal indicators, it is very good in existing devices. The same cannot be said about the spatial characteristics of the data obtained. EEG devices can only read signals originating in those parts of the brain that are close to the surface of the head. About what happens in the deeper structures of the brain, using a similar approach can not be known.

Neuro headset - this is not the cutest and most familiar device. I think that as long as these headsets look like they are now, they are unlikely to be worn in public places. As technology advances, it may be that devices are created that may be hidden in accessories like hats, but even here you may encounter a problem related to the fact that such devices will be inconvenient when worn for a long time.

EEG sensors should fit quite tightly to the scalp to remove the quality indicators of brain activity. And if their pressure immediately after putting on the headset can almost not be felt, over time it begins to cause discomfort. Moreover, if the sensors also need to be applied gel, it turns into an additional barrier to the wide distribution of neurohears.

As you can see, the current state of affairs in the field of neural interfaces suggests that they are unlikely to be widespread. However, if we talk about the future, then we can say that such devices have interesting perspectives.

If you take into account the current state of technology and think about what they may become in the future, you can find several options for their application.

I would like the neuro headsets to help people with disabilities live a fuller life and be more independent.

This is what I thought about when I created my first prototype - a keyboard, controlled by eye movements. This development of mine is far from the level when it could be used in practice, but working on this project, it was interesting for me to understand whether a quite affordable consumer device can really help someone. Not everyone has access to complex medical systems, and I was just amazed that the not very expensive thing, which can be freely purchased from the online store, is able to solve important and necessary tasks.

Mental practices, in particular - meditation - this is the scope of the neurohears, which already today attracts a certain attention (for example, the Muse headset helps to meditate). It is about helping someone who wants to meditate, do everything right.

If the neuro headsets would penetrate into our lives as much as mobile phones, we could probably create applications that can respond to health problems. For example, it would be great if there were applications that, based on an analysis of brain activity, would help fight strokes, panic attacks, epilepsy episodes.

The neuroheck headset can help meditate, which means that with its help you can really figure out what time of day a person is best focused. This information, obtained by regularly wearing a headset, can help to understand when it is best to engage in some kind of activity. You can even imagine that the work schedule will be organized in accordance with the individual characteristics of a person, which will increase his productivity.

I like, on my own initiative, during off-hours, to explore phenomena at the intersection of art and technology. I believe that one should not underestimate the work in this direction related to neural interfaces, since, although they may seem “frivolous,” they help to better understand technologies that will prove useful in more “serious” cases of their use.

Recently, I had an idea that EEG sensors should not be considered as something completely independent. Our brain perceives the world through the senses. He is not able to see without eyes and to hear without ears. Therefore, if we want to extract the maximum benefit from data on the electrical activity of the brain, we may need to monitor other vital signs.

The main problem here is that all this can lead to the fact that people will be literally hung with various sensors.

Are there too many sensors? (source of illustration - cognionics.net)

Perhaps no one will constantly wear the sensors shown in the previous figure.

A few weeks ago, I acquired something new — the OpenBCI complex . My next step is to study the raw data obtained from EEG sensors and apply machine learning methods to this data. OpenBCI is an open source project, so their development seems to me perfectly suited for this purpose. I still didn’t work much with their headset, so far I only had enough time to connect it to a computer and set it up. Here is how it all looks.

Openbci

The author of this material says that he continues to study neural interfaces. Hopefully, her story will help those who are interested in this topic, but do not dare to start practical actions, to take the first steps in the field of the use of neuroheternologies. If all of this is interesting to you - here is another one of our publications on neuroheaders and JavaScript, dedicated to Muse.

Dear readers! Do you plan to do experiments with neurohearnes?

Background

I don't have a formal computer science education (I studied advertising and marketing). I learned to program through courses at General Assembly.

While looking for my first job, I started experimenting with JavaScript and various devices. My first project was controlling a Sphero robotic ball with hand movements tracked by a Leap Motion controller.

Sphero ball controlled by hand movements via Leap Motion

This was my first time using JavaScript to control something outside the browser, and I was immediately hooked.

Since then, I have spent a lot of time on interactive projects. With each new project I looked for a harder challenge, so I kept developing my skills and learning something new.

While searching for my next interesting challenge, I came across NeuroSky's brain activity sensors.

First experiments with a neuro headset

Once I became interested in brain activity sensors, I decided to buy a NeuroSky headset. It was much cheaper than comparable products.

NeuroSky headset

I wasn't sure my skills were good enough to write anything for such a device (I had only just finished my programming courses), so I chose a cheaper option: if the task turned out to be too hard for me, I wouldn't have wasted much money. Fortunately, a JavaScript framework for this headset already existed, so getting started was fairly easy. Among other things, I used the headset's estimate of my attention level to control the Sphero ball and a Parrot AR.Drone quadcopter.
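A minimal sketch of how an attention estimate can drive a device is shown below. It assumes the attention value arrives as a number from 0 to 100 (the scale NeuroSky uses for its attention meter) and that the device accepts a speed from 0 to 255. The function name `attentionToSpeed`, the threshold of 60, and the speed range are illustrative assumptions, not part of the NeuroSky framework or the Sphero API.

```javascript
// Hypothetical helper: turn an attention reading (assumed range 0-100)
// into a motor speed (assumed range 0-maxSpeed). Readings below the
// threshold stop the device, so brief lapses in focus do not move it.
function attentionToSpeed(attention, threshold = 60, maxSpeed = 255) {
  if (!Number.isFinite(attention)) return 0; // ignore missing/bad readings
  const clamped = Math.min(Math.max(attention, 0), 100);
  if (clamped < threshold) return 0;
  if (threshold >= 100) return maxSpeed; // only a reading of 100 gets here
  // Map [threshold, 100] linearly onto [0, maxSpeed].
  const fraction = (clamped - threshold) / (100 - threshold);
  return Math.round(fraction * maxSpeed);
}

// Simulated readings stand in for the live headset stream.
for (const reading of [20, 60, 80, 100]) {
  console.log(`attention ${reading} -> speed ${attentionToSpeed(reading)}`);
}
```

Returning 0 below the threshold, rather than a proportionally small speed, keeps the device still while the user is not deliberately concentrating; the threshold would likely need tuning for each person.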

During these experiments, I quickly realized that this headset is not particularly accurate. It has only three sensors, so it provides only a rough picture of brain activity. The device does give access to the raw data from each sensor, which you can, for example, visualize. But with only three electrodes, you cannot draw any serious conclusions about what is happening in the brain from its data.

When I started looking for other devices for reading brain activity, I found the Emotiv Epoc headset. It seemed considerably more capable than the NeuroSky, so I bought it to continue my experiments.

Before explaining how the Emotiv Epoc works, let's briefly look at how the human brain works.

How the brain works

I am not a neuroscience expert, so my overview of the brain will be fairly superficial. I just want to cover a few basics that help explain how neuro headsets work.

The brain consists of many billions of neurons: specialized cells that process, store, and transmit information. Different regions of the brain are responsible for different functions.

Different parts of the brain (source: macmillan.org.uk)

Take movement as an example. Regions such as the primary motor cortex and the cerebellum are responsible for movement and coordination. Signals from the corresponding neurons ultimately reach the muscles and produce movement.

Anatomy of a neuron

As I said, this is an extremely simplified description of the brain. The key point for us is that neural activity can be monitored with electroencephalography (EEG), which records the brain's electrical activity from the surface of the scalp.

Some other techniques for monitoring brain activity require surgery. One example is electrocorticography (ECoG), in which electrodes are placed directly on the surface of the cerebral cortex.

Now that we know the brain produces electrical signals that can be recorded, let's look at the Emotiv Epoc headset.

How the neuro headset works

Emotiv makes several headsets:

- Emotiv Insight

- Emotiv Epoc Flex Kit

- Emotiv Epoc

The Epoc has 14 sensors (also called “channels”) placed at different locations on the head.

The left side of the following figure shows the international 10-20 electrode placement system recommended by the International Federation of Electroencephalography and Clinical Neurophysiology. Each electrode position corresponds to a specific area of the brain. The 10-20 system provides a common standard for building devices and for conducting scientific studies of the brain.

The right side of the figure shows the electrode layout of the Emotiv Epoc. For comparison with the 10-20 system, the Epoc positions are highlighted in green and orange.

Comparison of the international 10-20 electrode placement system (left) and the Emotiv Epoc electrode layout (right)

With only 14 channels, the Epoc does not cover much, but its electrodes are spread fairly evenly across the scalp. This gives some hope that the Epoc can provide reasonably accurate information about brain activity.
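The 10-20 labels themselves encode position: the letter prefix names the region (F frontal, T temporal, P parietal, O occipital, C central, with compound prefixes such as AF and FC for intermediate rows), odd numbers sit over the left hemisphere, even numbers over the right, and "z" marks the midline. The sketch below applies that convention to the 14 channel names commonly listed for the Epoc; treat that channel list, and the hypothetical `parseElectrode` helper, as assumptions to check against Emotiv's documentation. Counting channels per hemisphere is one quick way to see how evenly the layout is spread.

```javascript
// Channel names commonly listed for the 14-channel Emotiv Epoc.
// Assumption: check Emotiv's documentation for your exact model.
const EPOC_CHANNELS = ['AF3', 'F7', 'F3', 'FC5', 'T7', 'P7', 'O1',
  'O2', 'P8', 'T8', 'FC6', 'F4', 'F8', 'AF4'];

// Some 10-20 letter prefixes and the regions they refer to.
const REGIONS = {
  Fp: 'frontal pole',
  AF: 'anterior frontal',
  F: 'frontal',
  FC: 'fronto-central',
  C: 'central',
  T: 'temporal',
  P: 'parietal',
  O: 'occipital',
};

// Split a 10-20 label into region and hemisphere:
// odd number = left, even number = right, "z" = midline.
function parseElectrode(label) {
  const match = /^([A-Za-z]+?)(\d+|z)$/.exec(label);
  if (!match || !Object.prototype.hasOwnProperty.call(REGIONS, match[1])) {
    throw new Error(`Unrecognized electrode label: ${label}`);
  }
  const [, prefix, position] = match;
  let hemisphere = 'midline';
  if (position !== 'z') {
    hemisphere = Number(position) % 2 === 1 ? 'left' : 'right';
  }
  return { region: REGIONS[prefix], hemisphere };
}

// Count Epoc channels per hemisphere to check how symmetric the layout is.
const hemisphereCounts = {};
for (const label of EPOC_CHANNELS) {
  const { hemisphere } = parseElectrode(label);
  hemisphereCounts[hemisphere] = (hemisphereCounts[hemisphere] || 0) + 1;
}
console.log(hemisphereCounts); // { left: 7, right: 7 }
```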

Internally, the headset samples its sensors at 2048 samples per second (SPS), but the data available to the user is delivered at 128 or 256 SPS. The device captures brain activity in the 0.16-43 Hz range. The brain produces several distinct rhythms; their main characteristics are shown in the following figure.

Types of brain waves

Why does this matter? Depending on the EEG application we want to build, we may need to focus on brain waves in a particular frequency range. For example, a program that helps people meditate would probably focus on theta waves, whose frequency is 4-8 Hz.
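To make "focusing on a frequency band" concrete, here is a minimal sketch that estimates what share of a signal's power falls into each band. It uses a naive discrete Fourier transform, in which bin k corresponds to k * fs / N Hz for N samples at sampling rate fs, so a 2-second window at 128 SPS gives 0.5 Hz resolution. The band limits are common conventions (sources differ slightly), the synthetic signal stands in for real EEG, and none of this reflects how Emotiv computes its own metrics; a real application would use an FFT library instead of the O(N²) loop.

```javascript
// Conventional EEG bands in Hz; exact boundaries vary between sources.
const BANDS = {
  delta: [0.5, 4],
  theta: [4, 8],
  alpha: [8, 13],
  beta: [13, 30],
  gamma: [30, 45],
};

// Sum of squared DFT magnitudes for bins with frequency in [lowHz, highHz).
// Naive O(N^2) DFT: acceptable for a few hundred samples.
function bandPower(samples, sampleRate, lowHz, highHz) {
  const n = samples.length;
  let power = 0;
  for (let k = 1; k < n / 2; k++) { // skip DC, stop below Nyquist
    const freq = (k * sampleRate) / n;
    if (freq < lowHz || freq >= highHz) continue;
    let re = 0;
    let im = 0;
    for (let t = 0; t < n; t++) {
      const angle = (2 * Math.PI * k * t) / n;
      re += samples[t] * Math.cos(angle);
      im -= samples[t] * Math.sin(angle);
    }
    power += re * re + im * im;
  }
  return power;
}

// Share of the total in-band power per band (values sum to 1).
function relativeBandPowers(samples, sampleRate) {
  const powers = {};
  let total = 0;
  for (const [name, [lo, hi]] of Object.entries(BANDS)) {
    powers[name] = bandPower(samples, sampleRate, lo, hi);
    total += powers[name];
  }
  for (const name of Object.keys(powers)) {
    powers[name] = total > 0 ? powers[name] / total : 0;
  }
  return powers;
}

// Synthetic 2-second signal at 128 SPS: strong 6 Hz (theta) + weak 20 Hz (beta).
const sampleRateHz = 128;
const signal = Array.from({ length: 2 * sampleRateHz }, (_, t) =>
  Math.sin((2 * Math.PI * 6 * t) / sampleRateHz) +
  0.3 * Math.sin((2 * Math.PI * 20 * t) / sampleRateHz));
const rel = relativeBandPowers(signal, sampleRateHz);
console.log(rel.theta > rel.beta); // true
```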

With the basics of EEG covered, let's look at what the Emotiv Epoc and its software can do.

Emotiv Epoc features

Emotiv's software is not open source, and accessing the raw sensor signal requires a special license. Without it, the following features are available when working with the Emotiv Epoc:

- Tracking the position and orientation of the user's head with an accelerometer and gyroscope.

- Estimating levels of excitement, engagement, relaxation, interest, stress, and focus.

- Recognizing facial muscle movements that reveal the user's facial expression, such as blinking or smiling.

- Recognizing mental commands (movement and rotation).

To use mental command recognition, the user first has to train the system. The training data is saved to a file.

To build your own programs for the Emotiv Epoc, you can use the Cortex API and the corresponding SDK (support for the SDK was discontinued after version 3.5). If you prefer JavaScript, take a look at Epoc.js, a library I developed.

Epoc.js library

Epoc.js is a framework for working with Emotiv Epoc and Insight devices from JavaScript. It gives developers access to the features listed above and can also work with the Emotiv emulator.

Here is the simplest project based on Epoc.js:

```javascript
// Load Epoc.js and create an instance.
const epoc = require('epocjs')();

// Stream live data from the headset using the trained user profile.
epoc.connectToLiveData('path/to/profile/file', function (event) {
  // event.blink is 1 when a blink is detected, 0 otherwise.
  const action = event.blink === 1 ? 'blinking' : 'not blinking';
  console.log(action);
});
```

In this example, we load the `epocjs` Node.js module and create an instance of it. We then call its `connectToLiveData` method, passing the path to the user profile file produced by training the system, plus a callback. The callback receives an event object whose properties describe what the headset has detected. For example, to react to a blink, check `event.blink`. Each such property is either 0 or 1, where 1 means the system has detected the corresponding event. A full list of these properties is available in the Epoc.js documentation.
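Because a property such as `event.blink` is a 0/1 flag reported with each event, a single physical blink may show up as several consecutive events with `blink === 1`. A common way to count each blink once is to react only to the transition from 0 to 1 (a rising edge). The sketch below relies only on the `blink` property described above; `createEdgeDetector` is a hypothetical helper, and the simulated event array stands in for the live callback so the logic runs without a headset.

```javascript
// Returns a function that reports true only when a 0/1 flag changes
// from 0 to 1, so a blink lasting several events is counted once.
function createEdgeDetector() {
  let previous = 0;
  return function isRisingEdge(value) {
    const rising = previous === 0 && value === 1;
    previous = value;
    return rising;
  };
}

// Simulated events shaped like the ones passed to the callback.
const simulatedEvents = [0, 1, 1, 1, 0, 0, 1, 0, 1, 1].map((blink) => ({ blink }));

const onBlinkEdge = createEdgeDetector();
let blinkCount = 0;
for (const event of simulatedEvents) {
  if (onBlinkEdge(event.blink)) blinkCount += 1;
}
console.log(blinkCount); // 3

// With a headset, the same detector would sit inside the live callback:
// epoc.connectToLiveData('path/to/profile/file', (event) => {
//   if (onBlinkEdge(event.blink)) console.log('blink');
// });
```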

The library is built on the Emotiv C++ SDK, Node.js, and three Node.js modules: node-gyp, bindings, and nan. This approach can now be considered outdated; today N-API is the recommended way to build native Node.js addons.

Now that we have covered what neurotechnology can do and how to program it, I will describe several prototypes I built with a neural interface.

Prototypes

▍1. Keyboard

Here is what the eye-movement keyboard looks like.

Eye Movement Keyboard Prototype

This was my first Emotiv Epoc project. I wondered whether a neuro headset could power a simple interface that lets a person interact with a computer using eye movements. When you look to the right or left, the highlight moves to the corresponding key on the keyboard. To "press" the highlighted key, you blink, and the corresponding letter appears in the text box above the keyboard.

The project is very simple, but the important thing is that it works.
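The interaction described above boils down to a small state machine: a highlighted index that moves left or right, and a blink that commits the current key. The sketch below models only that logic. The `lookLeft`, `lookRight`, and `blink` field names and the single-row key layout are hypothetical; you would map them to whatever your headset library actually reports.

```javascript
// Hypothetical single-row layout; "_" stands for a space.
const KEYS = 'ABCDEFGHIJKLMNOPQRSTUVWXYZ_'.split('');

// Pure state update: returns a new state instead of mutating the old one.
// state = { index: highlighted key, text: letters typed so far }
function keyboardReducer(state, event) {
  if (event.lookLeft) {
    return { ...state, index: Math.max(0, state.index - 1) };
  }
  if (event.lookRight) {
    return { ...state, index: Math.min(KEYS.length - 1, state.index + 1) };
  }
  if (event.blink) {
    const key = KEYS[state.index];
    return { ...state, text: state.text + (key === '_' ? ' ' : key) };
  }
  return state; // no recognised action: keep the current state
}

// Simulated input: move right twice (to "C"), blink, move left, blink.
const sequence = [
  { lookRight: true }, { lookRight: true }, { blink: true },
  { lookLeft: true }, { blink: true },
];
const finalState = sequence.reduce(keyboardReducer, { index: 0, text: '' });
console.log(finalState.text); // CB
```

Keeping the update a pure function makes it easy to test with recorded or simulated events before connecting a real headset.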

▍2. WebVR

My second project used mental commands. I wanted to find out whether it is possible to control an object in three-dimensional space just by thinking.

Web interface controlled by thought

To build a simple 3D environment, I used the Three.js library; Epoc.js handled mental command recognition, and WebSockets sent the data from the server to the client.
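The data flow between the server (which receives mental commands from the headset) and the browser (which renders the 3D scene) can be reduced to a small message format. The sketch below is a hypothetical protocol, not the one used in the original prototype: the server serialises a command name and its strength as JSON, and the client turns that message into a new object position. The command names and the step size are assumptions, and the WebSocket plumbing is omitted so the logic runs on its own.

```javascript
// Hypothetical command names mapped to unit directions (x, y, z).
const DIRECTIONS = {
  push: [0, 0, -1], // away from the viewer
  pull: [0, 0, 1],  // towards the viewer
  left: [-1, 0, 0],
  right: [1, 0, 0],
};

// Server side: serialise a recognised command before socket.send().
function encodeCommand(name, strength) {
  return JSON.stringify({ type: 'command', name, strength });
}

// Client side: parse a message and return the object's new position.
// Malformed or unknown messages leave the position unchanged.
function applyMessage(position, message, step = 0.1) {
  let data;
  try {
    data = JSON.parse(message);
  } catch {
    return position;
  }
  if (!data || data.type !== 'command') return position;
  if (!Object.prototype.hasOwnProperty.call(DIRECTIONS, data.name)) return position;
  const strength = Number(data.strength);
  if (!Number.isFinite(strength)) return position;
  // Scale the step by the command strength, clamped to [0, 1].
  const amount = step * Math.min(Math.max(strength, 0), 1);
  const direction = DIRECTIONS[data.name];
  return position.map((coord, i) => coord + direction[i] * amount);
}

// Simulated messages instead of a live WebSocket connection.
let position = [0, 0, 0];
position = applyMessage(position, encodeCommand('push', 1));
position = applyMessage(position, encodeCommand('right', 0.5));
console.log(position); // [ 0.05, 0, -0.1 ]
```

In the browser, the returned array could then be applied to a Three.js mesh with `mesh.position.set(...position)`.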

▍3. IoT

For my third project, I wanted to explore controlling real devices with mental commands. I have been interested in IoT development with JavaScript for quite some time, so I was curious to see what would happen if I combined a Parrot quadcopter with the neuro headset.

Quadcopter
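One design question in a setup like this is what the drone should do when a recognised command is weak or missing. The sketch below is a hypothetical mapping layer, independent of any particular drone library: it turns a mental command and its strength into a plain action description and falls back to hovering below a confidence threshold. The command names, action names, the 0.5 threshold, and the 0-1 speed scale are all assumptions; a real controller would translate these descriptions into calls on its own drone client.

```javascript
// Hypothetical mapping from mental commands to drone movements.
const COMMAND_TO_ACTION = {
  push: 'forward',
  pull: 'backward',
  lift: 'up',
  drop: 'down',
};

// Decide what the drone should do for one recognised command.
// Weak, unknown, or missing commands make it hover in place, which is
// safer than continuing whatever it was doing before.
function decideAction(command, strength, threshold = 0.5, maxSpeed = 0.3) {
  const known = Object.prototype.hasOwnProperty.call(COMMAND_TO_ACTION, command);
  if (!known || !(strength >= threshold)) {
    return { action: 'hover', speed: 0 };
  }
  // Speed grows with the command's strength, capped at maxSpeed.
  return { action: COMMAND_TO_ACTION[command], speed: Math.min(strength, 1) * maxSpeed };
}

// Simulated recognition results: [command, strength].
const results = [['push', 0.8], ['push', 0.3], ['lift', 1], ['unknown', 0.9]];
for (const [command, strength] of results) {
  console.log(command, strength, '->', decideAction(command, strength).action);
}
```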

All of these prototypes are fairly simple; I built them to test ideas in practice and to assess the possibilities and limitations of neural interfaces.

Neural Interface Limitations

The term “neural interface” sounds amazing, and the idea that a computer can be controlled by the power of thought may feel like the future. In practice, however, these technologies still have quite a few limitations.

▍The need for training

It is reasonable that users have to train the system by recording their brain activity and associating it with specific commands, but for many people this step is an obstacle to adopting a new technology. I find it hard to imagine anyone spending time training a neural interface unless they really need it, and unless it then recognizes mental commands with very high accuracy.
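One way to see why training is needed: even the simplest recogniser has to learn what each command "looks like" for a particular user. The nearest-centroid sketch below is a deliberately basic technique chosen for illustration, not Emotiv's actual algorithm. It averages the feature vectors recorded for each command during training and later assigns new data to the closest average. The two-number feature vectors and the command names are invented; in practice the features might be band powers per channel.

```javascript
// Training: average the recorded feature vectors for each command.
function trainCentroids(samplesByCommand) {
  const centroids = {};
  for (const [command, samples] of Object.entries(samplesByCommand)) {
    if (samples.length === 0) continue; // nothing recorded for this command
    const sum = new Array(samples[0].length).fill(0);
    for (const vector of samples) {
      vector.forEach((value, i) => { sum[i] += value; });
    }
    centroids[command] = sum.map((total) => total / samples.length);
  }
  return centroids;
}

// Euclidean distance between two equal-length vectors.
function distance(a, b) {
  return Math.sqrt(a.reduce((acc, value, i) => acc + (value - b[i]) ** 2, 0));
}

// Recognition: return the command whose centroid is nearest (or null).
function classify(centroids, vector) {
  let best = null;
  let bestDistance = Infinity;
  for (const [command, centroid] of Object.entries(centroids)) {
    const d = distance(vector, centroid);
    if (d < bestDistance) {
      best = command;
      bestDistance = d;
    }
  }
  return best;
}

// Invented training recordings: two features per recording.
const centroids = trainCentroids({
  neutral: [[1.0, 1.0], [1.2, 0.8]],
  push: [[3.0, 0.5], [2.8, 0.7]],
});
console.log(classify(centroids, [2.9, 0.6])); // push
```

The more a user's brain activity varies between sessions, the more recordings such a model needs, which is part of why the training step feels like a barrier.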

▍Delays

While building the prototype based on mental command recognition, I noticed a delay between the moment I started thinking a command and the moment the program reacted to it.

I think the reason is that the machine learning algorithm in the prototype receives data from the device in real time, and to recognize a thought it was previously trained on, it needs readings collected over a period of time.

This affects what kinds of programs can be built on a neural interface. A meditation aid, for example, is quite realistic, because delays between a change in brain state and the program's response barely affect how well it works. But for something like a thought-controlled wheelchair, delays become a much more serious problem and call the whole idea into question.
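The explanation of where the delay comes from can be put into numbers. If a recogniser decides based on a window of the last N samples recorded at fs samples per second, a new brain state only fills the window completely after N / fs seconds. The sketch below shows a hypothetical sliding-window buffer and that worst-case delay; the 2-second window is an assumption, and real systems add processing and transmission time on top.

```javascript
// Fixed-size sliding window that keeps only the most recent samples.
class SlidingWindow {
  constructor(size) {
    this.size = size;
    this.samples = [];
  }

  // Add a sample (dropping the oldest if needed); true once the window is full.
  push(sample) {
    this.samples.push(sample);
    if (this.samples.length > this.size) this.samples.shift();
    return this.samples.length === this.size;
  }
}

// Worst-case time, in seconds, until a window of `windowSamples` samples
// contains only data recorded after a change in brain state.
function windowDelaySeconds(windowSamples, sampleRate) {
  return windowSamples / sampleRate;
}

// Example: a hypothetical 2-second window at 128 SPS.
const sampleRate = 128;
const eegWindow = new SlidingWindow(2 * sampleRate);
let pushed = 0;
let full = false;
while (!full) {
  full = eegWindow.push(0); // placeholder sample value
  pushed += 1;
}
console.log(pushed); // 256
console.log(windowDelaySeconds(eegWindow.size, sampleRate)); // 2
```

Shorter windows reduce the delay but give the recogniser less data per decision, so the choice is a trade-off between responsiveness and accuracy.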

▍Non-invasive technology and signal accuracy

EEG devices are well suited to everyday use: you put on the headset, apply a special gel to the sensors, and you are ready. However, because the signals are read from the scalp rather than from the surface of the brain itself, their accuracy suffers.

The temporal resolution of existing devices is very good. The same cannot be said of their spatial resolution: EEG devices can only pick up signals from regions of the brain close to the surface of the head, and this approach tells us nothing about what happens in deeper brain structures.

▍Social acceptance

A neuro headset is neither the most attractive nor the most familiar of devices. As long as these headsets look the way they do now, I think people are unlikely to wear them in public. As the technology advances, devices might be hidden in accessories such as hats, but even then they may be uncomfortable to wear for long periods.

EEG sensors must press fairly firmly against the scalp to record good-quality signals. That pressure is barely noticeable right after you put the headset on, but over time it becomes uncomfortable. And if the sensors also need gel, that is one more barrier to the widespread adoption of neuro headsets.

As you can see, in their current state neural interfaces are unlikely to become widespread. Looking to the future, however, these devices have interesting prospects.

Neural Interface Opportunities

Taking into account where the technology is today and what it may become, several possible applications come to mind.

▍Helping people with disabilities

I would like neuro headsets to help people with disabilities live fuller, more independent lives.

That is what I had in mind when I built my first prototype, the keyboard controlled by eye movements. It is far from ready for practical use, but while working on it I wanted to find out whether a fairly affordable consumer device could really help someone. Not everyone has access to sophisticated medical systems, and I was amazed that an inexpensive device anyone can buy from an online store is able to tackle such important tasks.

▍Mental practices

Mental practices, meditation in particular, are an area of neuro headset use that already attracts some attention (for example, the Muse headset is designed to help with meditation). The idea is to help people who want to meditate do it correctly.

▍Helping with health problems

If neuro headsets became as much a part of our lives as mobile phones, we could probably build applications that respond to health problems. For example, it would be great to have applications that analyze brain activity to help deal with strokes, panic attacks, or epileptic seizures.

▍Improving productivity

If a neuro headset can help with meditation, it could also help determine the time of day when a person is most focused. Collected by wearing a headset regularly, this information could show when it is best to take on particular kinds of work. You could even imagine work schedules organized around each person's individual patterns to increase their productivity.
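Working out when someone is most focused comes down to grouping timestamped focus readings by hour of day and comparing the averages. The sketch below assumes readings shaped like `{ time, focus }`, with `time` a `Date` and `focus` a score from 0 to 1; the shape, the scale, and the `bestFocusHour` name are hypothetical and not an Emotiv data format. The sample readings are invented.

```javascript
// Group focus readings by hour of day and return the hour with the
// highest average focus (or null if there are no readings).
// Readings: [{ time: Date, focus: number }]
function bestFocusHour(readings) {
  const totals = new Map(); // hour -> { sum, count }
  for (const { time, focus } of readings) {
    const hour = time.getHours(); // local time
    const entry = totals.get(hour) || { sum: 0, count: 0 };
    entry.sum += focus;
    entry.count += 1;
    totals.set(hour, entry);
  }
  let bestHour = null;
  let bestMean = -Infinity;
  for (const [hour, { sum, count }] of totals) {
    const mean = sum / count;
    if (mean > bestMean) {
      bestHour = hour;
      bestMean = mean;
    }
  }
  return bestHour;
}

// Invented readings from one day (local time).
const readings = [
  { time: new Date(2024, 0, 15, 9, 10), focus: 0.7 },
  { time: new Date(2024, 0, 15, 9, 40), focus: 0.8 },
  { time: new Date(2024, 0, 15, 14, 5), focus: 0.4 },
  { time: new Date(2024, 0, 15, 14, 50), focus: 0.5 },
];
console.log(bestFocusHour(readings)); // 9
```

Over weeks of data, the same grouping could be refined by weekday or activity, but averaging per hour is enough to show the idea.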

▍Art

In my free time, I like to explore the intersection of art and technology. I think artistic work with neural interfaces should not be underestimated: although such projects may seem “frivolous,” they help us better understand technologies that will prove useful in more “serious” applications.

Combining EEG sensors with other sensors

Recently, I have been thinking that EEG sensors should not be treated as something completely standalone. The brain perceives the world through the senses: it cannot see without eyes or hear without ears. So to get the most out of data on the brain's electrical activity, we may also need to monitor other vital signs.

The main problem is that people could end up literally covered in sensors.

Too many sensors? (image source: cognionics.net)

Realistically, nobody is going to wear all the sensors shown in the previous figure all the time.

OpenBCI

A few weeks ago, I got something new: an OpenBCI kit. My next step is to study raw data from EEG sensors and apply machine learning methods to it. OpenBCI is an open-source project, so its hardware seems perfectly suited to this. I haven't worked much with their headset yet; so far I have only had time to connect it to a computer and set it up. Here is what it all looks like.

OpenBCI

Summary

The author says she continues to study neural interfaces. We hope her story will help readers who are interested in this topic but have not yet dared to try it in practice take their first steps with neurotechnology. If this interests you, here is another of our publications on neuro headsets and JavaScript, this one about Muse.

Dear readers! Do you plan to experiment with neuro headsets?