The history of a single request

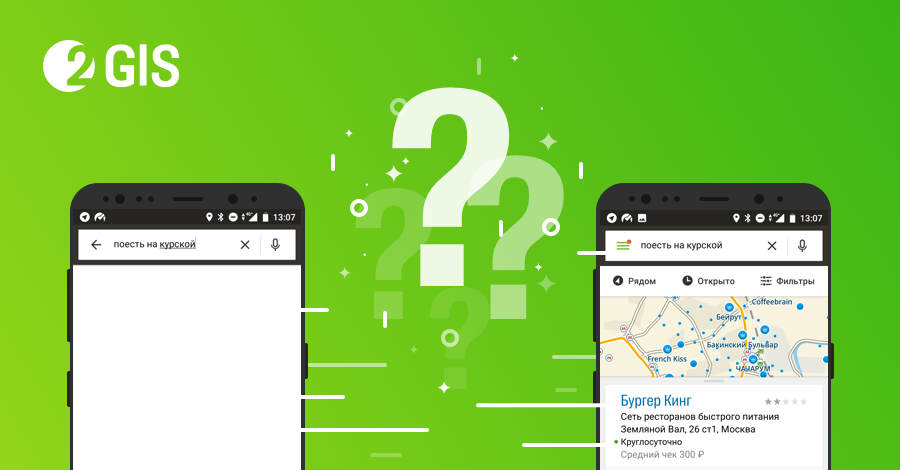

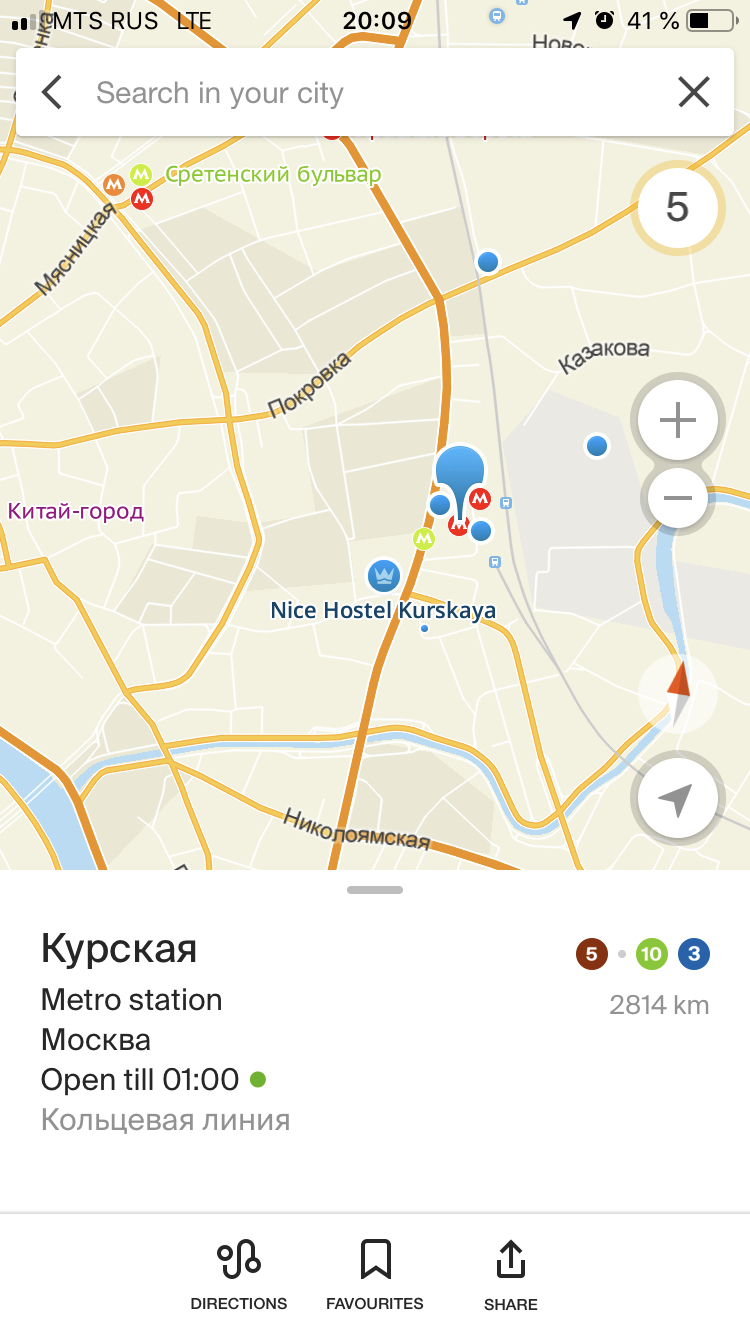

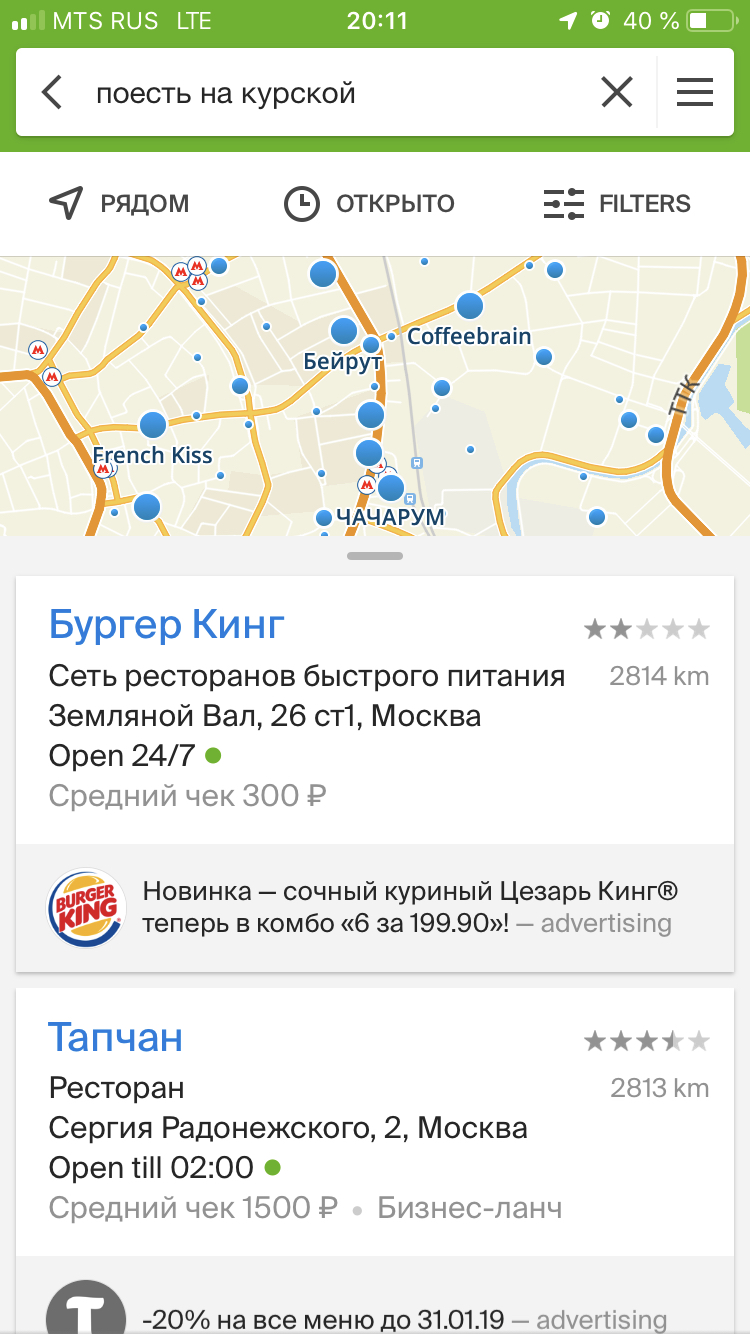

Submit your first day at the new job. The office is located in the area completely unknown to you Kurskaya metro station. Dinner time is coming. You open a search application, write “eat at Kurskaya” and get a selection of options where you can dine.

What is behind the request to "eat at Kursk" and how is it processed to find exactly what you need? In the article I will tell you how the 2GIS Search team is doing everything possible to make life in cities more convenient and comfortable for users.

It is important to understand that the text of the search query is only the tip of the iceberg, a small part of what the user interacts with. The search query itself, in addition to the text, contains a lot of other data. They include personalized information about a user's location, a map section that he sees, a set of records from his favorites and information about search modes. For example, search on the map or in the building, and maybe the search for travel. Data - the key to success of good search functionality.

We pay great attention to our data and their structure. Moreover, we call the search algorithm in 2GIS itself a structural search, because it is designed for efficient and fast search in our structured data. We specifically prepare the search index and the data from which it is built. I will not go into details, I’ll just say that the data is organized in such a way as to be fairly simple to process, well compressed, and most importantly allow us to quickly process it even on mobile devices.

Moreover, the search can work offline, and therefore places special demands on the speed and volume of the search index. We pay great attention to this feature - constantly compressing the search index, assess performance on various platforms and speed up functionality where specific search cases exceed the set time limit. By the way, we can boast that an ordinary search query in 2GIS on a mobile device is faster than the application draws a drop-down list of the results.

Below I will open the veil of secrecy covering the magic of our search. As an example, take the above-mentioned request “to eat at Kursk” . Consider the stages of its processing and what happens at each of them. So let's go!

Stage 1. Parsing

Input Parameters: a request to “eat at Kursk”

First of all, we need to parse the text of the request. This is important, because after parsing we will be able to work not with the query text, but with the set of tokens of which it consists. Tokens are single query words. In our case, we get a set of three tokens: “eat” , “on” , “Kursk” . It would seem that everything is simple, but the devil is in the details. And sometimes everything is not so obvious: for example, in requests for “wi-fi” or “2nd” we should understand that such combinations should be processed entirely.

The tokens themselves contain information about the text of the word, about the position in the request, about the presence of a separator following the word and some characteristic of the word - the register of its characters, whether the word itself is a number, serial number, telephone number, address or other data.

Stage 2. Vocabulary search

Input parameters: tokens "eat" , "on" , "Kursk"

With the ready list of tokens of the request, we proceed to the stage of dictionary search, that is, to the stage at which for each token we find the list of word forms from our data. The word form is encoded information about the root of the word and its ending.

We represent dictionary search as an algorithm for analyzing a dictionary represented as a graph. The nodes in it are letters, or rather, symbols. A graph consists of symbol nodes and transitions between these nodes. The result of the traversal of our dictionary dictionary is a set of word forms that we can get based on the transferred tokens from the previous stage. So we try to find in our dictionary a sequence of characters that matches the next token from the query. In this case, as we all know, users make typos, skip letters, or even make mistakes in the keyboard layout. Therefore, when traversing the dictionary, we apply some manipulations in order to take into account the possible human factor or try to guess what a person is typing right now. Various character chain transformations are in progress: inserts, substitutions,

As a result, for each request token, we extract a set of word forms with information about the root and the end of a word, information about the number of characters in a word form, and an estimate of matching. Assessment of finding - assessment, characterizing the dictionary distance of the found sequence of characters to the desired sequence. The score describes how much the found string of characters differs from the request token.

So for tokens we find the word forms:

- 13 forms for "eat" : "eat", "eat", "paese", "payot", ...

- 3 forms for "on" : "na", "nu", "on"

- 48 forms for "Kursk" : "Kursk", "Kursk", "Kursk", "Kursk", "Kurako", ...

Next, the found word forms are filtered depending on their assessment. The best of them, that is, as close as possible to the words from the query, fall into the list of terms. The term will be understood as a word form and assessment of finding. Plus, in addition to the found word forms, the terms added using the rules of morphology are added to the list. An important stage of morphology processing is the addition of a morphological assessment. The fact is that in our search we use a strong morphology processing mechanism, which allows us not only to find similar words from the dictionary, but also, according to the rules of the natural language, to more correctly find out what interests the user in meaning, and not only in word similarity.

So for tokens the following terms will be created:

- “Eat” : “eat”, “eat”, “eat”, “eat”, “eat”

- "On" : "on", "na", "nu"

- "Kursk" : "Kursk", "Kursk", "Kursk", "Kursk", "Kursk"

At this stage of vocabulary search ends. We have processed the request and have for each token a list of terms that go to further processing. These terms contain all the information about the word they represent, and have an estimate of how each of them was found.

Stage 3. Search for entries in the data

Login: term set for each part of the request

- “Eat” : “eat”, “eat”, “eat”, “eat”, “eat”

- "On" : "on", "na", "nu"

- "Kursk" : "Kursk", "Kursk", "Kursk", "Kursk", "Kursk"

Having obtained from the previous stage a set of terms for each of the parts of the query, we proceed to searching them by our index. Each document in the data has many headers and is written in the reverse index so that we can easily find all references to the desired term in specific documents representing organizations, addresses, or any other.

For each of the parts of the query and for each of the terms of these parts, we are looking for documents containing words encoded in terms. So, for parts of the query for all terms, the occurrences will be found:

- "Eat" : 18 occurrences

- "On" : 4304 occurrences

- "Kursk" : 79 occurrences

It is obvious that the preposition "at" found a lot of time and therefore falls into the many titles of documents - "Coffee on Tap", "play on set-top box", "production on account of the car." "Eat" and "Kursk" are also used repeatedly. The second word with its terms is found in our data much more often than the first.

A hit is a match for a word from a search query to a word from a specific document. These hits are saved to the list, which will be analyzed in the next step. When adding a hit, we not only copy the word data from the term in the document, but also calculate the best estimate of how the word could be found. In other words, we choose the morphological assessment of the term, or an assessment of how the term was found in the dictionary, depending on which of the estimates is closer to the request token.

This stage is a prelude to the start of the search itself. We have prepared a set of hits in specific documents, on the basis of which the next algorithm will select and evaluate what needs to be given to the user as a result.

Stage 4. The heart of the search

Login: hit list

- "Eat" : 18 occurrences

- "On" : 4304 occurrences

- "Kursk" : 79 occurrences

In fact, the hit list in our implementation is a tricky container. It is important to understand that when adding hits to it, special nodes are created where the hits themselves are written, and a link to the document to which these hits fell.

Therefore, it would be more correct to display the input data as follows:

Input: container of document nodes

- document1: {hits, ...}

- document2: {hits, ...}

- Document3: {hits, ...}

- Document4: {hits, ...}

- ...

First of all, the search begins to traverse the document tree and each node returns to the analyzer, which evaluates whether the document from this node is suitable to be the result for getting into the output. To understand what volumes the analyzer has to work with, I will say that at the start the container contains more than 3000 nodes! But the nodes can be added during the traversal process, so it is actually processed even more. It is no exaggeration to say that analysis is the most expensive part of the search and at the same time one of the most complex and large project functions. Nevertheless, it runs extremely quickly, even on mobile devices.

Stage 5. Analysis

Input: Document node : {hits, ...}

The analysis starts with a list of titles from the site. The title is a title and a list of hits that fell into this document title. These titles will be evaluated at the first stage. It is important for us to learn the usefulness of each title. Utility can be good, weak and junk.

For each of the titles selected the best of the chain of hits - the best in length and vocabulary score, made up of similarity of hits. Based on the best chain and will assess the usefulness of the title. To determine the utility of the chain, we also use a mechanism based on the frequency of words in the documents. Roughly speaking, the more often a word occurs in a document, the more important it is ( TF-IDF). So, if the chain contains a hit in an important word from the title of the document without strong morphological differences, for example, an excellent number or gender, we consider the title useful. A title can also be useful if its hits completely cover the words in the title of the document.

With the utility, all titles form a utility mask for the node. This mask gives us an idea of the request tokens covered by the node being analyzed. And with its help, we largely determine whether to analyze the node further.

As a result, we have not just one document from the index, but a set of structural data representing a potential result with information on how it can be found.

Stage 6. Evaluation

Input: Document node : {hits, ...}

Depending on the utility mask, we will either process the node, or go straight to the next one. From the set of processed nodes, we accumulate various information about their totality. For example, a set of node titles, the relationship of nodes among themselves, and some other data.

Next comes the analysis of node titles interconnected with each other. In fact, many nodes are combined into a graph of nodes, which we estimate.

From the titles of the nodes of the graph we get a list of ranked titles. Simply put, from the set of nodes, we compile a single list of titles, in which for each element we also add an estimate and a combination of factors from the hit titles of all the participating nodes.

Evaluation is a structure with information about the number of words involved in a title from a query and many other factors about how a word was found and processed - starting from the stage of a dictionary search. It is important that these ratings from the ranked title will participate in selecting the best ratings. Some of them will be marked as selected and will contribute to the final assessment of the result that the user will see.

The overall score provides the result with information that will be extremely important when sorting the entire issue. It contains factors such as, for example, missing words from a query. This measure characterizes the number of words that were not covered by the node with its structural information.

Based on information about the usefulness of titles, the clarity of the result is determined. Clarity can be good, weak and bad. And it is calculated with the participation of all selected titles for the node being processed. All these data have a dramatic impact on the further fate of the results and the order of their issuance.

Gradually, we are approaching the end of the node analysis. Before the node finally leaves the analyzer and becomes a potential result, we carry out several more refinement manipulations. For example, on the compatibility of selected document headers.

The node that has passed all the circles of the analyzer forms a result containing information about the selected headers and a document. The result, which gives a good coverage of the search query, is sent to the post-processing.

Stage 7. Post-processing

Input: result constructed from a node

The analyzer filters out many records from the index before they become results. However, the analysis can be evaluated and sent to the processing of many potential results. To show the user only the most useful ones in order of relevance, we need to cut off the worst options found by the analyzer.

At the previous step, a general assessment of the result was mentioned. Overall assessment allows us to cut off the worst results at the post-processing stage. Graduation is as follows. The results that did not cover any request tokens are obviously worse than those results that completely cover the user's request. Results with worse clarity are obviously less desirable than results with good clarity. The postprocessor accumulates information about incoming results and eliminates those that are obviously worse. The rest adds to the list.

Before we give the cafe information to the hungry user, we carry out the final processing - we sort them by relevance. In the sorting involved many factors, calculated and aggregated at different stages of the search. And ultimately, the search results for the query "eat at Kursk"Makes over 500 results. Many of them were found in the same way, and therefore have the same rating. They will be sorted by user popularity.

Conclusion

We received the issue with a lot of cafes and restaurants and, joyful, we go for lunch. All the results we got in a fraction of seconds. Nor do we even need an internet connection.

The application stores search indexes on the device. This scheme provides us with non-trivial tasks to optimize data compression and processing speed - because the search should work quickly on a variety of mobile devices! However, this is a completely different story.

Today, I tried to open the hood of our search engine and show how we help users find what they need in the city, and do it quickly and conveniently. I hope it was informative.