Sharding theory

It seems that we have plunged so deeply into the jungle of highload development that we simply do not think about basic problems. Take sharding, for example. What to understand in it, if you can write conditionally shards = n in the database settings, and everything will be done by itself. So, he is like that, but if, more correctly, when something goes wrong, the resources start to really be missed, I would like to understand what the reason is and how to fix everything.

In short, if you are contributing your alternative implementation of hashing in Cassandra, then there are hardly any revelations for you here. But if the load on your services is already arriving, and the system knowledge does not keep up with it, then you are welcome. The great and terrible Andrei Aksyonov ( shodan ), in his characteristic manner, will tell you thatSharding is bad, not sharding is also bad , and how it is arranged inside. And quite by accident, one of the parts of the story about sharding is not at all entirely about sharding, but the devil knows what - like objects on shards mapit.

A photo of cats (although they happened to be puppies) already answers the question why this is all, but we will begin sequentially.

What is "sharding"

If you persistently google, it turns out that there is a fairly blurred border between the so-called partitioning and the so-called sharding. Everyone calls everything he wants, what he wants. Some people distinguish horizontal partitioning and sharding. Others say that sharding is a certain kind of horizontal partitioning.

I did not find a single terminological standard that would be approved by the founding fathers and is ISO certified. Personal inner conviction is approximately like this: Partitioning on average is “cutting the base into pieces” in an arbitrary manner.

The awkward moment here is in the subtle difference between horizontal partitioning and sharding. I can cut into pieces, but I surely will not tell you what it is. There is a feeling that sharding and horizontal partitioning are about the same thing.

Sharding is generally when a large table in terms of databases or a collection of documents, objects, if you do not have a database, and the document store, is cut by objects. That is, out of 2 billion objects, pieces are selected no matter what size. The objects themselves inside each object are not cut into pieces, we do not lay them out into separate columns, but we lay them out in different places in bundles.

Link to the presentation to complete the picture.

Next came the subtle terminological differences. For example, relatively speaking, the developers at Postgres can say that horizontal partitioning is when all the tables into which the main table is divided lie in the same schema, and when on different machines it is sharding.

In a general sense, without being tied to the terminology of a specific database and a specific data management system, there is a feeling that sharding is just cutting by lines and documents and so on — and that’s all:

I emphasize typically. In the sense that we are doing all this not just to cut 2 billion documents into 20 tables, each of which would be more manageable, but in order to distribute it into many cores, many disks or many different physical or virtual servers .

The implication is that we do this so that every shard — every bit of data — replicates many times. But really, no.

In fact, if you do this data cutting, you will generate 16 small tablets from one giant SQL table on MySQL on your glorious laptop, without going beyond a single laptop, a single schema, a single database, etc. etc. - everything, you have sharding.

Remembering the illustration with the puppies, this leads to the following:

Why bandwidth? We sometimes may have such amounts of data that do not interpose - it is not clear where, but not intermeddle - on 1 {core | disk | server | ...}. Just not enough resources and everything. In order to work with this big dataset, you need to cut it.

Why latency? On one core, scanning a table of 2 billion rows is 20 times slower than scanning 20 tables on 20 cores, making it parallel. Data is too slowly processed on one resource.

Why high availability? Or we cut the data in order to do both one and the other at the same time, and at the same time several copies of each shard - replication ensures high availability.

A simple example of "how to make hands"

The conditional sharding can be cut using the test table test.documents for 32 documents, and by generating 16 test tables from this table for approximately 2 documents test.docs00, 01, 02, ..., 15.

Why about? Because a priori we do not know how id is distributed, if from 1 to 32 inclusively, then there will be exactly 2 documents each, otherwise - no.

We do this for what it is. After we have made 16 tables, we can “grab” 16 of what we need. Regardless of where we stand, we can parallelize these resources. For example, if there is not enough disk space, it would make sense to decompose these tables into separate disks.

All this, unfortunately, is not free. I suspect that in the case of the canonical SQL standard (I haven’t reread the SQL standard for a long time, maybe it hasn’t been updated for a long time), there is no official standardized syntax for any SQL server to say: “Dear SQL server, make me 32 shards and decompose them into 4 disks. ” But in individual implementations, there is often a specific syntax in order to do the same in principle. PostgreSQL has mechanisms for partitioning, MySQL has MariaDB, Oracle probably did it all a long time ago.

However, if we do it by hand, without database support and within the standard, then we conditionally pay for data access complexity.. Where there was a simple SELECT * FROM documents WHERE id = 123, now 16 x SELECT * FROM docsXX. And well, if we tried to get the record by key. Much more interesting if we tried to get an early range of records. Now (if we, I emphasize, like fools, and remain within the standard) the results of these 16 SELECT * FROM will have to be combined in the application.

What performance changes to expect?

In fact, the correct answer is unknown. With dexterous application of the sharding technique, you can achieve a significant superlinear degradation of your application, and DBA will come running with a hot poker.

Let's see how this can be achieved. It is clear that simply putting the setting in PostgreSQL shards = 16, and then it itself took off - it is not interesting. Let's think about how we can ensure that we can slow down 32 times from sharding - this is interesting from the point of view how not to do this.

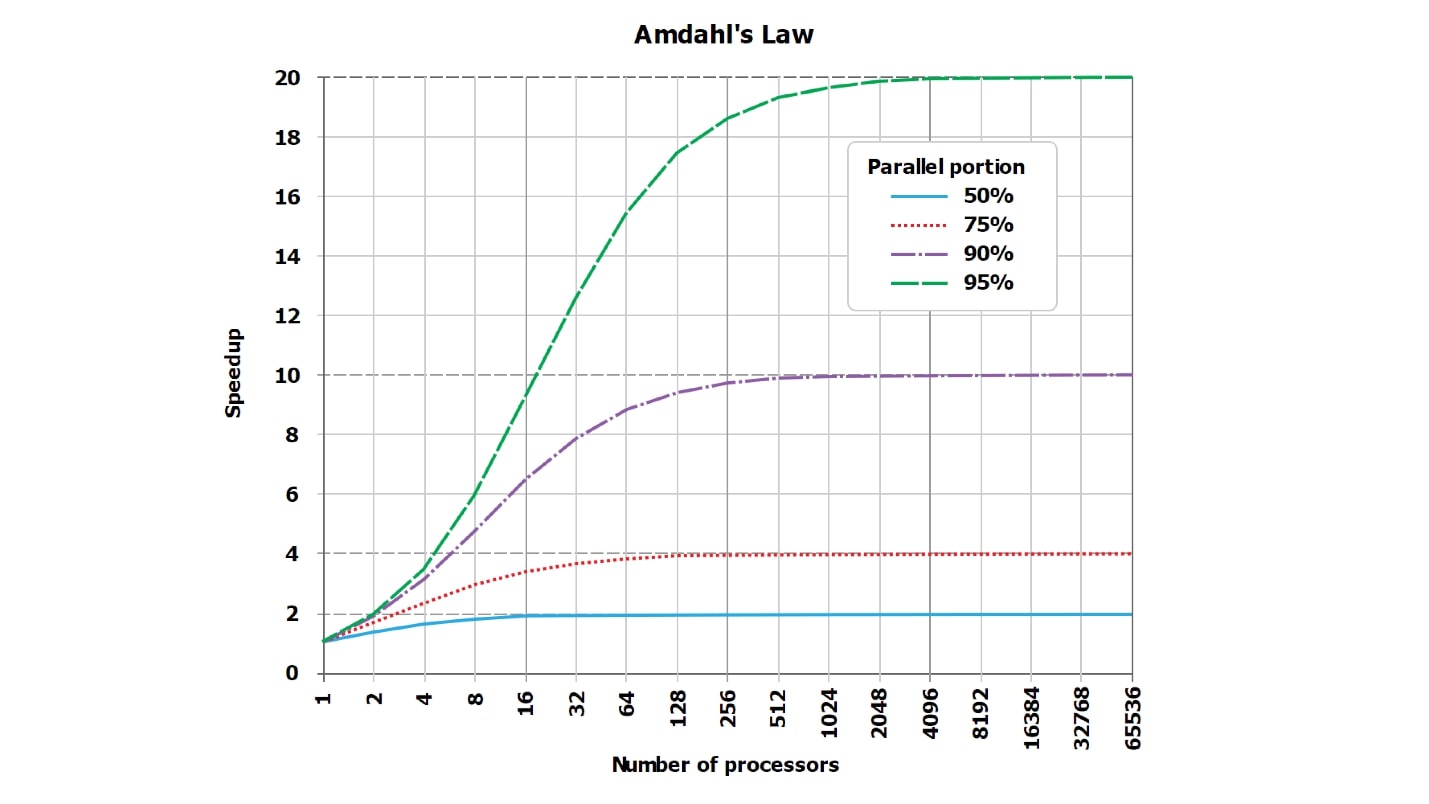

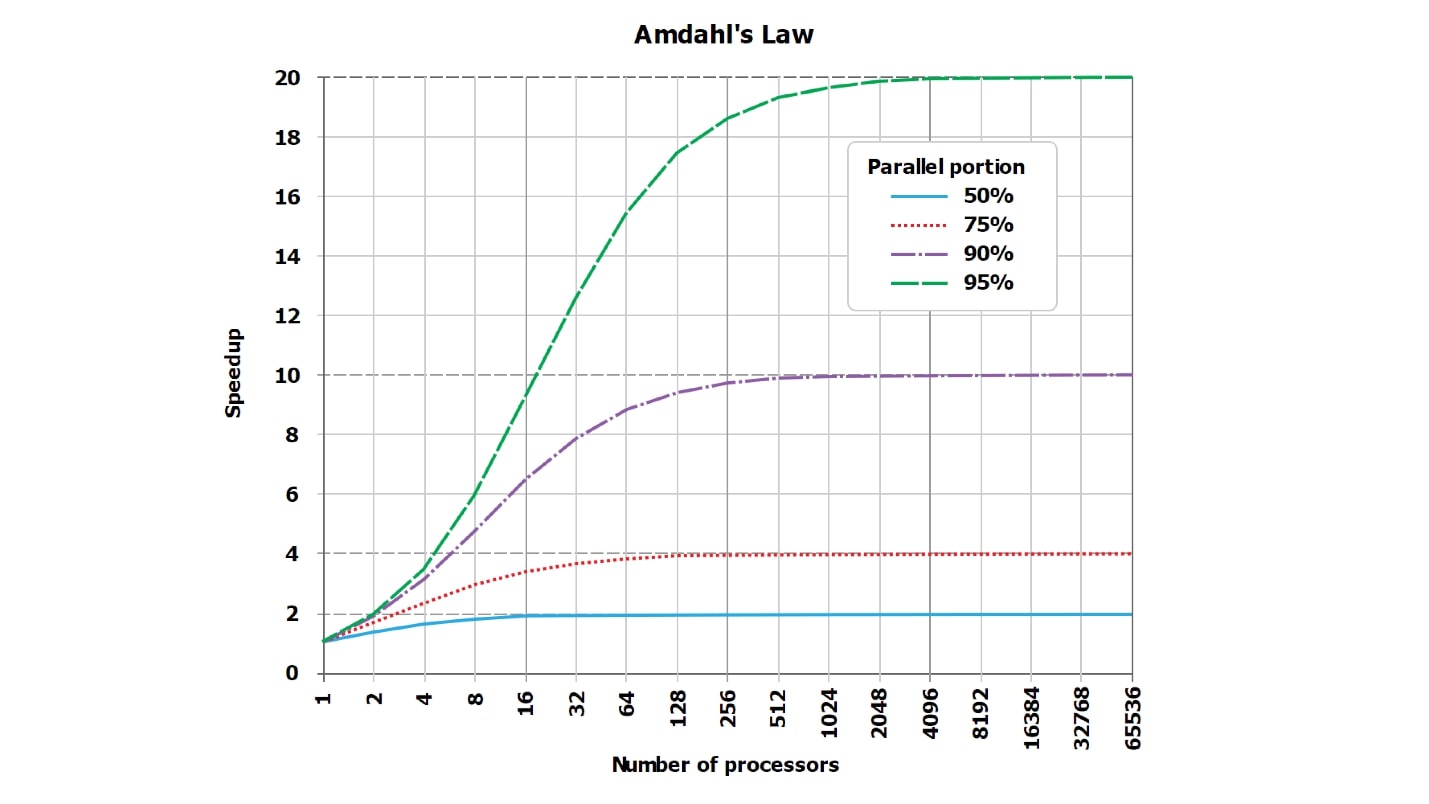

Our attempts to accelerate or slow down will always rest against the classics — against the good old Amdahl law, which says that there is no perfect parallelization of any query, there is always some consistent part.

Amdahl law

There is always a part of the execution of the request that is parallel, and there is always a part that does not parallel. Even if it seems to you that there is a perfectly parallel query, at least the collection of the result string that you are going to send to the client is always from the strings received from each shard, and it is always consistent.

There is always some consistent part. It can be tiny, completely imperceptible on the general background, it can be gigantic and, accordingly, strongly affecting parallelization, but it always is.

In addition, its influence is changing.and can grow significantly, for example, if we cut our table - let's raise the rates - from 64 records to 16 tables with 4 records, this part will change. Of course, judging by such huge amounts of data, we work on a mobile phone and an 86 2 MHz processor, we don’t have enough files that can be kept open at the same time. Apparently, with such introductory, we open one file at a time.

With this simple example, I'm trying to show that some kind of Xserial appears. In addition to the fact that there is always a serialized part, and the fact that we are trying to work with data in parallel, an additional part appears to support this data slicing. Roughly speaking, we may need:

Any out-of-sync effects will still appear. They may be insignificant and take one billion dollars from the total time, but they are always non-zero and always are. With their help, we can dramatically lose in performance after sharding.

This is a standard picture about the law of Amdal. It is not very readable, but it is important that the lines, which should ideally be straight and grow linearly, abut on the asymptote. But since the schedule from the Internet is unreadable, I have made, in my opinion, more vivid tables with numbers.

Suppose that we have a certain serialized part of the request processing, which takes only 5%: serial = 0.05 = 1/20.

Intuitively, it would seem that with the serialized part, which takes only 1/20 of the request processing, if we parallelize the processing of the request for 20 cores, it will become approximately 20, at worst 18, times faster.

In fact, mathematics is heartless :

It turns out that if you carefully count, with the serialized part of 5%, the acceleration will be 10 times (10.3), which is 51% compared to the theoretical ideal.

Using 20 cores (20 disks, if you like) for the task on which one worked earlier, we even theoretically will never get acceleration more than 20 times, but practically - much less. Moreover, with an increase in the number of parallels, inefficiency is greatly increasing.

When only 1% of the serialized work remains, and 99% is parallelized, the acceleration values are somewhat improved:

For a completely thermonuclear query that is naturally executed for hours, and the preparatory work and the assembly of the result take very little time (serial = 0.001), we will see already good efficiency:

Please note 100% we will never see . In particularly good cases, you can see, for example, 99.999%, but not exactly 100%.

How to chuff and repeat N times?

It is possible to fool and repeat exactly N times:

In this case, the serialized part (serial) occupies not 1% and not 5%, but approximately 20% in modern databases. You can get 50% of the serialized part if you access the database using a wildly efficient binary protocol or link it as a dynamic library to a Python script.

The rest of the processing time of a simple request will be occupied by non-parallelized operations of parsing the request, preparing a plan, etc. That is, it does not read the record.

If we split the data into 16 tables and start sequentially, as is customary in the PHP programming language, for example, (he is not very good at starting asynchronous processes), then we’ll get a slowdown 16 times. And maybe even more, because network round-trips will also be added.

Remember about the choice of programming language, because if you send queries to the database (or search server) sequentially, then where does the acceleration come from? Rather, there will be a slowdown.

Bike from life

If you choose C ++, write to POSIX Threads , not Boost I / O. I saw an excellent library from experienced developers from Oracle and MySQL, who wrote a chat with the MySQL server on Boost. Apparently, at work they were forced to write on pure C, and then they managed to turn around, take Boost with asynchronous I / O, etc. One problem is that this asynchronous I / O, which theoretically should have driven 10 requests in parallel, for some reason had an imperceptible synchronization point inside. When you run 10 queries in parallel, they were executed exactly 20 times slower than one, because 10 times for the queries themselves and again for the synchronization point.

Conclusion:write in languages that implement parallel execution and waiting for different requests well. I do not know, to be honest, what exactly is there to advise, besides Go. Not only because I love Go, but because I don’t know anything more suitable.

Do not write in useless languages in which you will not be able to run 20 parallel queries to the database. Or at every opportunity do not do it all by hand - understand how it works, but do not do it manually.

A / B dough

Still sometimes you can slow down, because you are used to, that everything works, and did not notice that the serialized part, firstly, is, secondly, large.

This is a bike from the production, when the search queries were changed a little and they started to choose a lot more documents from the 60 shards of the search index. Everything worked quickly and according to the principle: “It works — don't touch it”, they all forgotten that there are actually 60 shards inside. Increased the sampling limit for each shard from a thousand to 50 thousand documents. Suddenly it began to slow down and the parallel ceased. The requests themselves, which were executed by shards, flew quite well, and the stage slowed down, when 50 thousand documents were collected from 60 shards. These 3 million final documents on one core merged together, sorted, the top of 3 million was selected and given to the client. That same serial part slowed down, that same merciless Amdal law worked.

So maybe you should not do sharding with your hands, but just like a human

say database: "Do it!"

Disclaimer: I do not really know how to do something right. I type from the wrong floor !!!

I have been promoting a religion called “algorithmic fundamentalism” throughout my adult life. It is briefly stated very simply:

Let's consider the options:

It is difficult to tell something about an automaton, except for sending the corresponding database to the documentation (MongoDB, Elastic, Cassandra, ... in general, the so-called NoSQL). If you are lucky, then you just pull the switch “make me 16 shards” and everything will work. At the moment when it does not work itself, the rest of the article may be necessary.

Pro semiautomatic

In some places, the delights of information technology inspire chthonic horror. For example, MySQL out of the box did not have a sharding to certain versions exactly, nevertheless, the size of the bases used in battle grow to indecent values.

Suffering humanity in the face of individual DBA suffers for years and writes a few bad sharding solutions, built incomprehensibly on what. After that, one more or less decent sharding solution is written under the name ProxySQL (MariaDB / Spider, PG / pg_shard / Citus, ...). This is a well-known example of this same snip.

ProxySQL as a whole, of course, is a complete enterprise-class solution for open source, for routing and so on. But one of the tasks to be solved is the sharding for the database, which in itself cannot be human shard. You see, there is no “shards = 16” switch, you have to either rewrite each request in the application, and there are many of them in places, or put some intermediate layer between the application and the database: “Hmm ... SELECT * FROM documents? Yes, it should be broken into 16 small SELECT * FROM server1.document1, SELECT * FROM server2.document2 - to this server with the same login / password, to this with another. If one did not answer, then ... "etc.

Intermediate hooks can do this exactly. They are a little less than for all databases. For PostgreSQL, as I understand it, at the same time there are some built-in solutions (PostgresForeign Data Wrappers, in my opinion, built into PostgreSQL itself), there are external patches.

Configuring each specific patch is a separate giant topic that does not fit in one report, so we will discuss only the basic concepts.

Let's talk the best about the theory of buzz.

Absolute perfect automatics?

The whole theory of buzz in the case of sharding in this letter F (), the basic principle is always the same roughly:

Sharding is generally about what? We have 2 billion records (or 64). We want to split them into several pieces. There is an unexpected question - how? According to what principle should I scatter my 2 billion records (or 64) on the 16 servers available to me?

The latent mathematician in us must suggest that in the end there is always some magic function that, for each document (object, line, etc.), determines in which piece to put it.

If you go deeper into mathematics, this function always depends not only on the object itself (the line itself), but also on the external settings such as the total number of shards. The function, which for each object should tell where to put it, cannot return a value greater than there are servers in the system. And the functions are slightly different:

But further we will not dig in these jungle of separate functions, we will just talk what magic functions F () are.

What are F ()?

They can come up with many different and many different implementation mechanisms. Sample summary:

An interesting fact - you can naturally scatter all the data randomly - throw the next entry on an arbitrary server, on an arbitrary kernel, in an arbitrary table. There will be no happiness in this, but it will work.

There are slightly more intelligent methods of sharding by reproducible or even consistent hash functions, or sharding by some attribute. Let's go through each method.

F = rand ()

Scattering radomom - not a very correct method. One problem: we scattered our 2 billion records per thousand servers randomly, and we don’t know where the record is. We need to pull out user_1, and we don’t know where it is. We go to a thousand servers and go through everything - somehow it is inefficient.

F = somehash ()

Let's scatter users in an adult way: read the reproducible hash function from user_id, take the remainder of the division by the number of servers, and contact the right server immediately.

And why are we doing this? And then, that we have highload and we don’t have anything else in one server. If it was, life would be so simple.

Great, the situation has already improved, in order to get one record, we are going to one previously known server. But if we have a range of keys, then in all of this range we have to go through all the key values and, in the limit, go either to as many shards as we have keys in the range, or to each server in general. The situation, of course, improved, but not for all requests. Some requests suffered.

Natural sharding (F = object.date% num_shards)

Sometimes, that is often, 95% of the traffic and 95% of the load are requests that have some kind of natural sharding. For example, 95% of conditionally socio-analytical queries affect data only for the last 1 day, 3 days, 7 days, and the remaining 5% refer to the last few years. But 95% of requests, thus, are naturally shaded by date, the interest of system users is focused on the last few days.

In this case, you can divide the data by date, for example, by one day, and follow the response to a request for a report for a certain day or an object from that day on this shard and go.

Life is improving - we now not only know the location of a particular object, but also know about the range. If they ask us not the date range, but the range of other columns, then, of course, you will have to go through all the shards. But under the terms of the game, we have only 5% of such requests.

It seems that we came up with the perfect solution to everything, but there are two problems:

Not to say that this is a bad sharding scheme - we have cut off the hot data, nevertheless we have to do something with the hottest shard.

The problem is solved by grimaces, jumps and poultices, that is, by increasing the number of replicas for the burning current day, then by gradually reducing the number of replicas when this day becomes the past and goes into the archive. There is no perfect solution called “you just need to blur the data on the cluster just like a magic hash function”.

Formally, we know now we know "everything." True, we do not know one giant headache and two smaller headaches.

1. Simple pain: bad smeared

This is an example from a textbook that almost never occurs in combat, but suddenly.

We chose the sharding mechanism, and / or the data changed, and, of course, PM did not communicate the requirements (we do not have errors in the code, always PM does not convey the requirements), and the distribution has become monstrously uneven. That is, missed with the criterion.

To catch, you need to look at the size of shards. We will definitely see the problem at the moment when one of our shards either overheat or becomes 100 times bigger than the others. You can fix it by simply replacing the key or sharding function.

This is a simple problem, to be honest, I do not think that at least one person in a hundred will run into this in life, but suddenly at least it will help someone.

2. “Invincible” pain: aggregation, join

How to make a selection that billions of records from one table per billion records from another table?

The short answer is: don’t suffer!

If you have distributed a billion records per thousand servers in the first table in order for them to work faster, in the second table they did the same, then naturally one thousand per thousand servers should speak in pairs. A million connections will not work well. If we make requests to the database (search, storage, document store or distributed file system), which are badly placed on the sharding, these requests will slow down wildly.

The important moment - some requests will always be unsuccessfully smeared and will slow down. It is important to try to minimize their percentage. As a result, do not make giant joins with a billion to a billion records. If there is a possibility of a small table, relative to which is joining in a giant, shared table, to replicate to all nodes, you need to do this. If joins are actually local in some way, for example, there is a possibility for the user and his posts to be placed side by side, to have them in the same way, and to do all the joins within the same machine - this should be done.

This is a separate course of lectures for three days, so we turn to the last hellish pain and to different algorithms for dealing with it.

3. Difficult / long pain: Resarding

Get ready: if you zashardili your data for the first time in your life, then on average five times more you will surely give it to them.

If you are very smart and lucky, then pereshardite, at least once. But once you have to, because at that moment when you think that 10 units are enough for a user, someone right at that moment writes a request for 30, and the plans have a request for 100 units of unknown resources. Shardov is never enough. With the first sharding scheme, you miss anyway - you always have to either increase the number of servers, or do something else - in general, somehow rebuild the data.

Well, if we have nice degrees of two: there were 16 shard servers, it became 32. More fun, if it was 17, it became 23 - two positively simple numbers. How do the databases do that, maybe they have some magic inside?

The correct answer is no, there is no magic inside, they have hell inside.

Next, we will consider what can be done “by hand”, maybe we will understand it “as an automaton”.

In the forehead # 1. Reset everything

Such a hell, how to shift all 2 billion records from old shards to new ones, I hope, is nowhere to be found. The naive approach is clear: there were 17 machines, 6 machines were added to the cluster, 2 billion records were sorted out, transferred them from 17 machines to 23 machines. Once in 10 years, you can probably even do it. But overall this is a bad move.

In the forehead # 2. Relocate half

The next naive improvement - let's abandon such a stupid scheme - let's ban 17 cars in 23, and we will always decide 16 cars in 32 cars! Then, according to the theory, we will have to transfer exactly half of the data, and in practice we will be able to do it too.

In principle, everything is fine, except for binding to the power of two on the number of machines. This naive approach, oddly enough, may work.

Please note that the additional fragmentation of the cluster in powers of two in this case is also optimal. In any case, adding 16 machines to a cluster of 16, we are obliged to transfer half of the data - exactly half and shift.

Well, but really mankind has not invented anything else - the question arises for an inquiring mind.

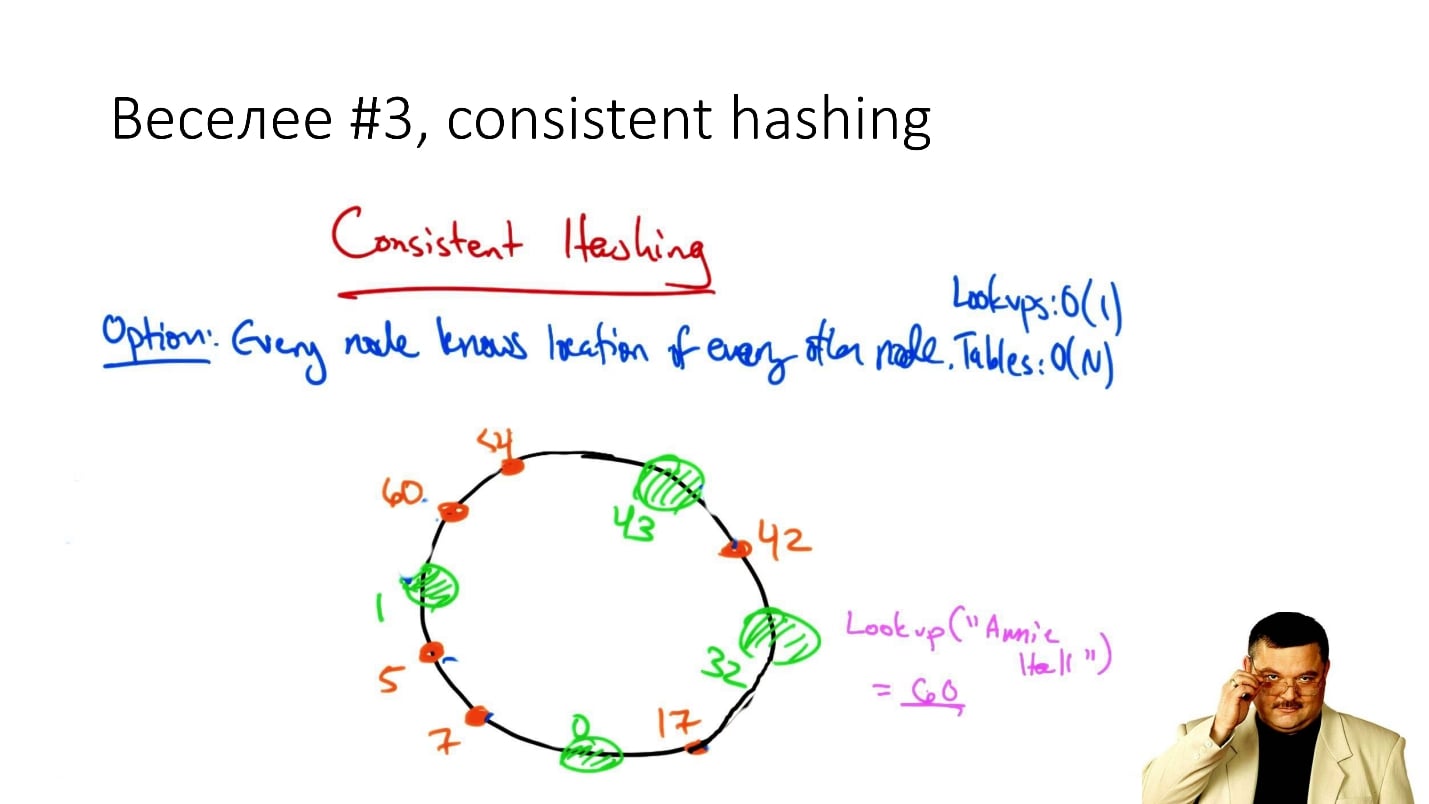

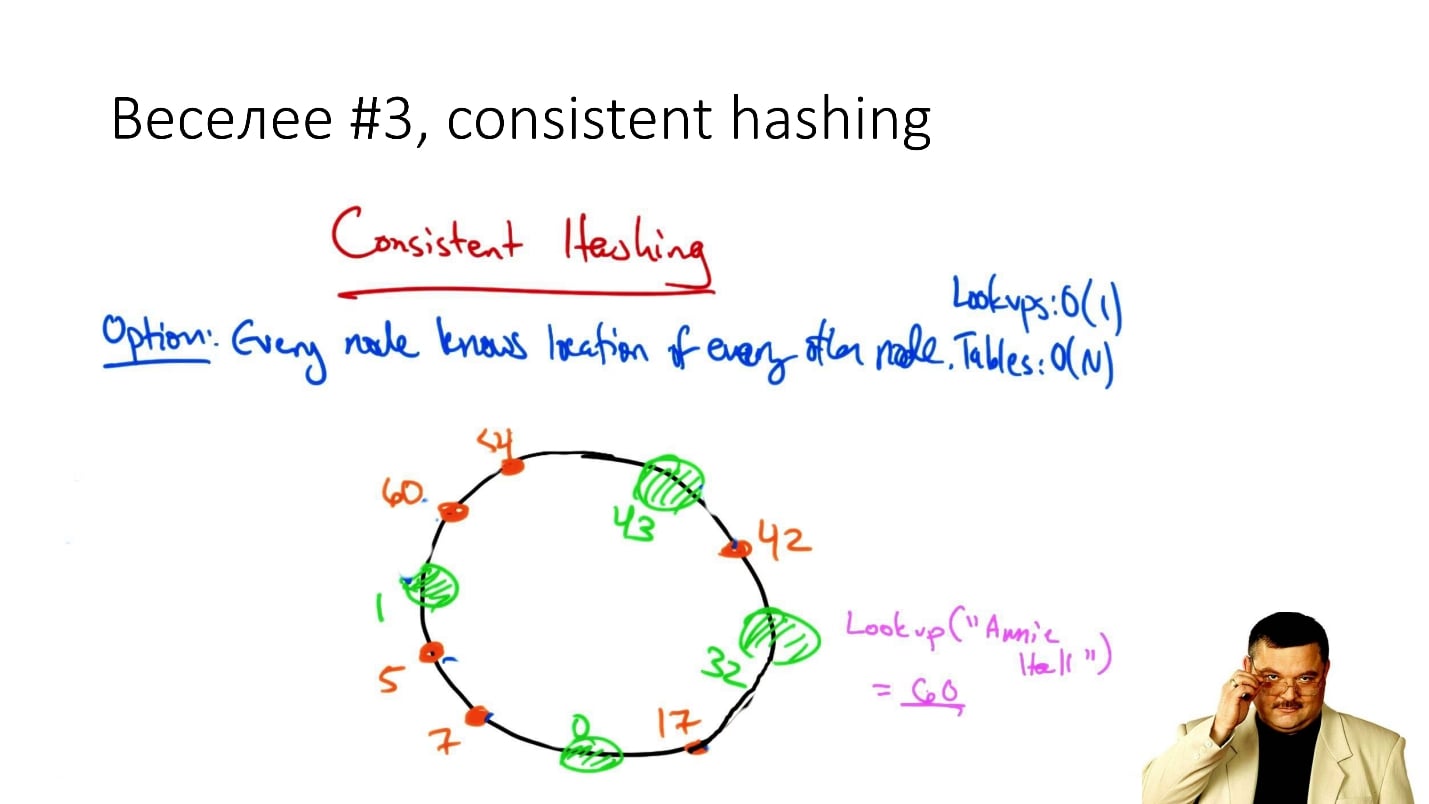

More fun # 3. Consistent hashing

Of course, a picture with a circle about consistent hashing is obligatory here.

If you google “consistent hashing”, then a circle will definitely come out, the whole issue is settled in circles.

The idea: let's draw the identifiers of the shards (hashes) on the circle, and on top we will mark the hashed identifiers of the servers. When we need to add a server, we put a new point on the circle, and we resettled what was close to it (and only what was close to it).

Very effective in terms of minimizing traffic when adding a shard, and completely disgusting in terms of normal balancing data. Because when we hash all these objects, which we distribute to a large number of machines, we do it relatively unevenly: the points in a circle are unevenly distributed, and the load on each particular node can be very different from the rest.

This problem is solved by the last line of the virtual node. Each node, each server on the circle is indicated by more than one point. By adding a server, shard, etc., we add a few points. Every time when we delete something, respectively, we delete several points and shift a small part of the data.

I am talking about this cosmos with circles, because, for example, inside Cassandra is such a scheme. That is, when you start recording between the chords between you, know that the circle is looking at you and, probably, does not approve.

However, in comparison with the first ways, life has improved - we are already looking at adding / removing a shard not all the records, but only a part, and we shift only a part.

Attention, the question: is it possible to improve more? And even improve the uniformity of loading shards? - They say that you can!

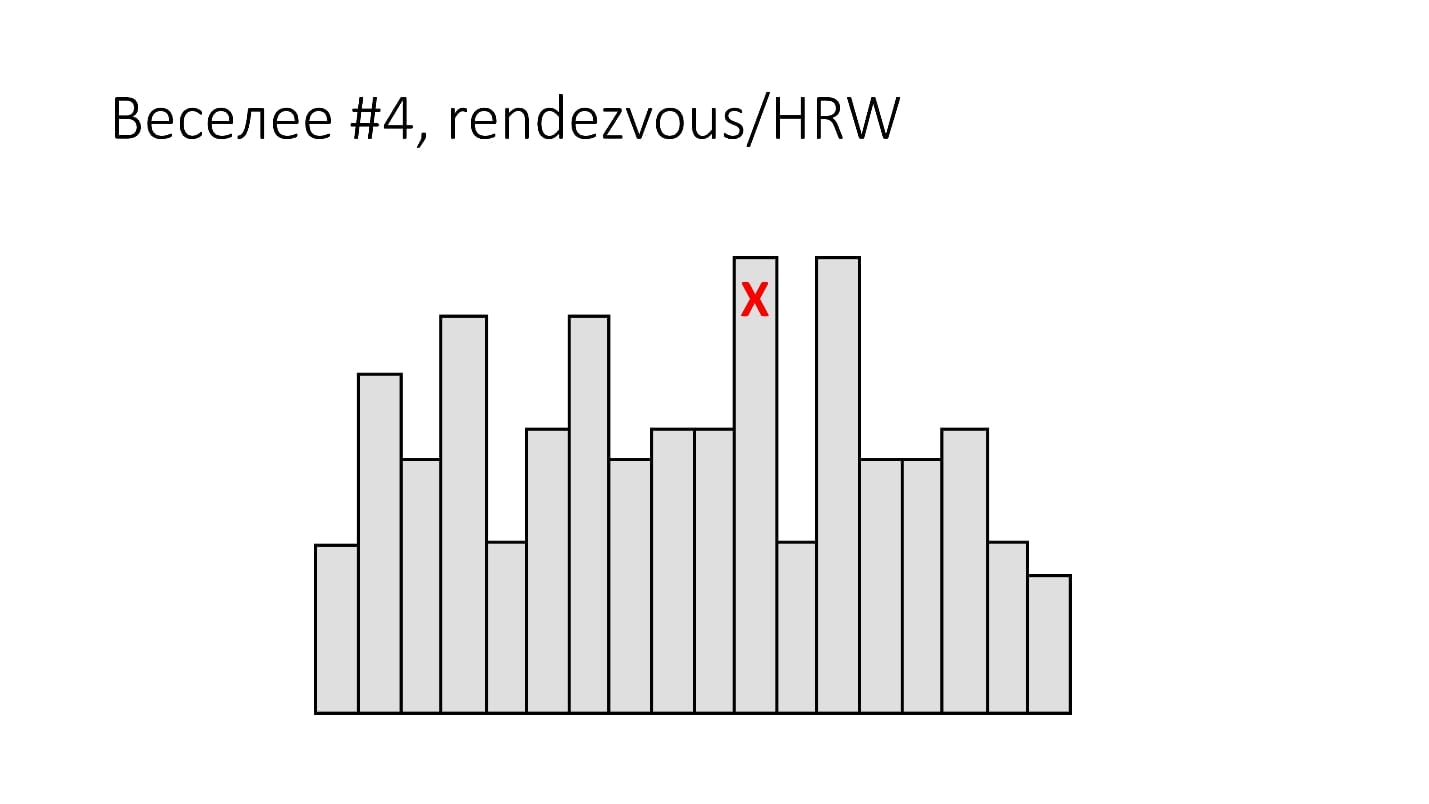

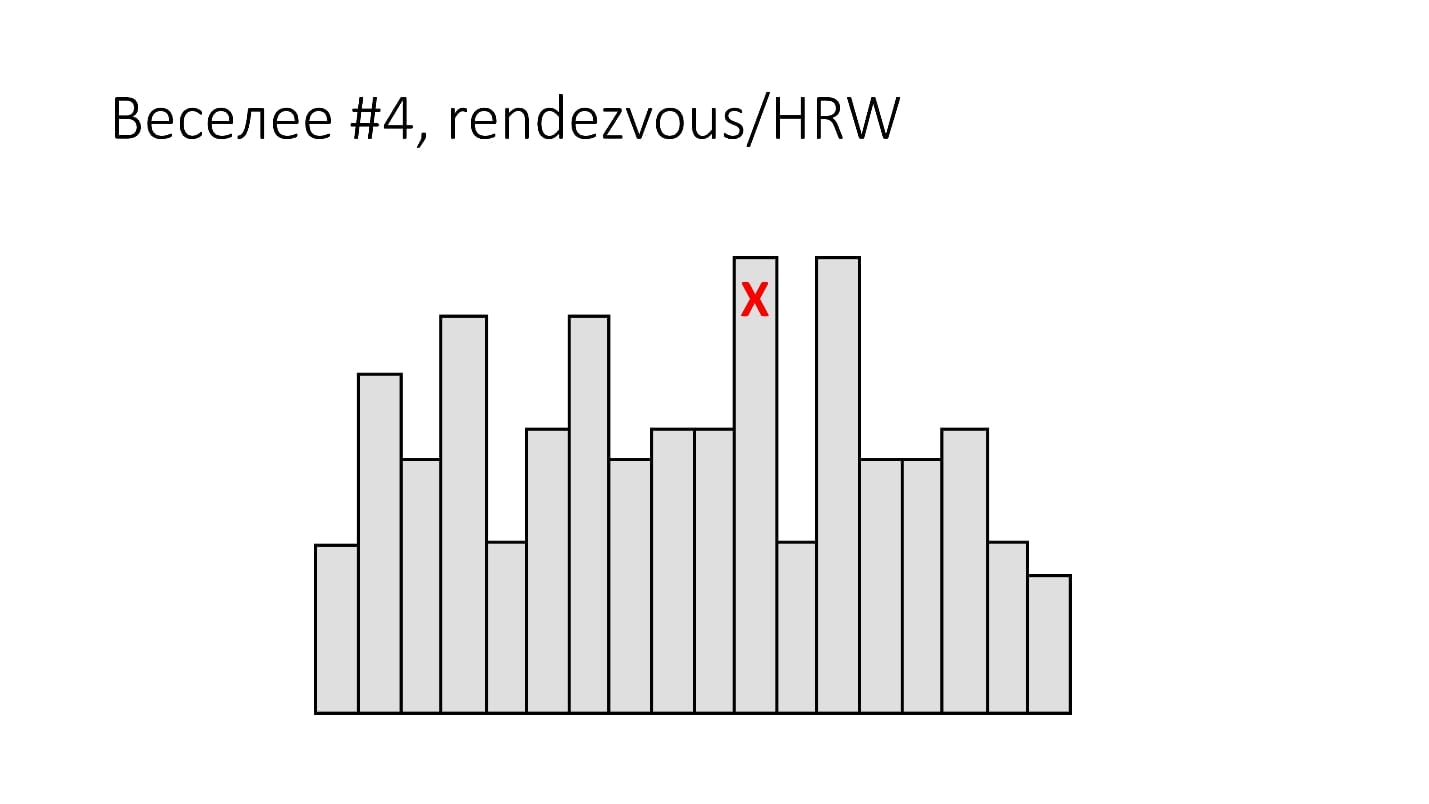

More fun # 4. Rendezvous / HRW

The following simple idea (the material is a tutorial, therefore nothing complicated): shard_id = arg max hash (object_id, shard_id).

Why it is called Rendezvous hashing, I do not know, but I know why it is called Highest Random Weight. It is very easy to visualize it as follows:

We have, for example, 16 shards. For each object (line) that needs to be put somewhere, we calculate 16 hashes, depending on the object from the shard number. Who has the highest value of the hash function, he won.

This is the so-called HRW-hashing, also known as Rendezvous hashing. Dull as a stick scheme for calculating the number of the shard, firstly, by the eye, it is easier to circle and gives a uniform load, on the other hand.

The only negative is that the addition of a new shard has worsened. There is a risk that when adding a new shard, we still have some hashes that will change and it may be necessary to review everything. The shard removal technology hasn't changed much.

Another problem is computationally difficult with a large number of shards.

More fun # 5. More technology

Interestingly, research does not stand still and Google publishes some new space technology every year:

If you are interested in topics, you can read a lot of theses. I cite this data in order to make it clear that the problem is not solved, there is no super-solution that can be implemented in all databases. Until now, people defend dissertations.

More fun # 6. Lists

For an appetizer, the easiest option is stupid lists. Why do we need all these mega-technicians? We do not want, in order to manage 2 billion records, to keep in memory of the cluster on each node a giant object_id list with 2 billion identifiers that would display the location of the object.

And what if you take and thin out this list? Or not even much?

Let's at least just count. I am sure that in some of the databases it is used, but I do not know which one. Mathematics says that it can work quite well, and, to be honest, you can even manage to do it with pens.

Let's estimate:

Compare this with the sharding of 2 billion records using hash functions for at least 16 nodes - that's more than 100 million with something for a shard. And here we have granularity in the block: the records that we put as a single package on a particular shard are very small - 1 Kb each. You can make optimal any operation, and add, and remove the shard.

I repeat, the option is simple as a stick, complex space technologies are not needed, and for sure it works somewhere, but I did not find where.

findings

There is an important basic technique called sharding named after Gaul Julius Caesar: "Divide and conquer, conquer and divide!" If the data does not fit into one server, it is necessary to split them into 20 servers.

Having learned this all, there should be an impression that it would be better not to shard. If you decide that it would be better not to be shardit - this is the right feeling. If you can add $ 100 of memory to the server and do not shard anything, then you have to do it. When sharding, a complex distributed system will appear with data transferring to and fro, stacking data is not known where. If you can avoid it - you need to avoid it.

It’s better not to do it by hand, it is better that the “base” (search, DFS, ...) know how to shard itself. In any case, sooner or later, highload will come and somehow the data will have to be split. Not the fact that even if the base is able to do it itself, you will not run into any problems. Remember about algorithmic fundamentalism - you need to understand how everything is arranged inside .

When setting up sharding for the first time, carefully select F () , think about requests, network, etc. But get ready, you will probably have to choose 2 times and at least once you have to redo everything .

About speaker

Usually, we talk about the speaker on the beach, but this time there is a reason for an exception. Andrei Aksyonov became one of the laureates of the HighLoad Award ++ , and everyone for whom the Aksyonov associative chain — Sphinx — highload is not obvious is worth watching the video.

About the training mitap

This was the material on the performance on the training session of the Highload User Group. I'll tell you a little what it is and why.

We recently realized that reports on a large HighLoad ++ go deeper and deeper. At the conference, we cannot repeat and again discuss, for example, questions of architecture. And there are no places where one could get acquainted with basic concepts and systematize fragmentary knowledge. Therefore, we launched a series of training mitapov on the development of highload-applications, sites and services.

We will talk about scaling services and databases, load testing, machine learning and neural networks, project architectures. Everything connected with the development of non-standard solutions with increased requirements for performance, sustainability, safety, and so on will gradually appear on the agenda.

On the third mitap on January 24 in St. Petersburg we will discuss the design patterns of high-loaded systems “Queue”, “Pipelining”. The event is free, but you need to register by the link in the description of the mitap. Speakers and topics will be published a little later, or we can send in the mailing list .

In short, if you are contributing your alternative implementation of hashing in Cassandra, then there are hardly any revelations for you here. But if the load on your services is already arriving, and the system knowledge does not keep up with it, then you are welcome. The great and terrible Andrei Aksyonov ( shodan ), in his characteristic manner, will tell you thatSharding is bad, not sharding is also bad , and how it is arranged inside. And quite by accident, one of the parts of the story about sharding is not at all entirely about sharding, but the devil knows what - like objects on shards mapit.

A photo of cats (although they happened to be puppies) already answers the question why this is all, but we will begin sequentially.

What is "sharding"

If you persistently google, it turns out that there is a fairly blurred border between the so-called partitioning and the so-called sharding. Everyone calls everything he wants, what he wants. Some people distinguish horizontal partitioning and sharding. Others say that sharding is a certain kind of horizontal partitioning.

I did not find a single terminological standard that would be approved by the founding fathers and is ISO certified. Personal inner conviction is approximately like this: Partitioning on average is “cutting the base into pieces” in an arbitrary manner.

- Vertical partitioning - pokolonochno. For example, there is a giant table for a couple of billion entries in 60 columns. Instead of keeping one such gigantic table, we hold 60 not less giant tables of 2 billion records - and this is not a column basis, but vertical partitioning (as an example of terminology).

- Horizontal partitioning - we cut line by line, maybe inside the server.

The awkward moment here is in the subtle difference between horizontal partitioning and sharding. I can cut into pieces, but I surely will not tell you what it is. There is a feeling that sharding and horizontal partitioning are about the same thing.

Sharding is generally when a large table in terms of databases or a collection of documents, objects, if you do not have a database, and the document store, is cut by objects. That is, out of 2 billion objects, pieces are selected no matter what size. The objects themselves inside each object are not cut into pieces, we do not lay them out into separate columns, but we lay them out in different places in bundles.

Link to the presentation to complete the picture.

Next came the subtle terminological differences. For example, relatively speaking, the developers at Postgres can say that horizontal partitioning is when all the tables into which the main table is divided lie in the same schema, and when on different machines it is sharding.

In a general sense, without being tied to the terminology of a specific database and a specific data management system, there is a feeling that sharding is just cutting by lines and documents and so on — and that’s all:

Sharding (~ =, \ in ...) Horizontal Partitioning == is typical.

I emphasize typically. In the sense that we are doing all this not just to cut 2 billion documents into 20 tables, each of which would be more manageable, but in order to distribute it into many cores, many disks or many different physical or virtual servers .

The implication is that we do this so that every shard — every bit of data — replicates many times. But really, no.

INSERTINTO docs00

SELECT * FROM documents WHERE (id%16)=0

...

INSERTINTO docs15

SELECT * FROM documents WHERE (id%16)=15In fact, if you do this data cutting, you will generate 16 small tablets from one giant SQL table on MySQL on your glorious laptop, without going beyond a single laptop, a single schema, a single database, etc. etc. - everything, you have sharding.

Remembering the illustration with the puppies, this leads to the following:

- Increases bandwidth - bandwidth.

- Latency does not change, that is, everyone, so to speak, a worker or consumer in this case, gets his own. It is not known what puppies get in the picture, but requests are served approximately in one time, as if the puppy were alone.

- Either that, and another, and more high availability (replication).

Why bandwidth? We sometimes may have such amounts of data that do not interpose - it is not clear where, but not intermeddle - on 1 {core | disk | server | ...}. Just not enough resources and everything. In order to work with this big dataset, you need to cut it.

Why latency? On one core, scanning a table of 2 billion rows is 20 times slower than scanning 20 tables on 20 cores, making it parallel. Data is too slowly processed on one resource.

Why high availability? Or we cut the data in order to do both one and the other at the same time, and at the same time several copies of each shard - replication ensures high availability.

A simple example of "how to make hands"

The conditional sharding can be cut using the test table test.documents for 32 documents, and by generating 16 test tables from this table for approximately 2 documents test.docs00, 01, 02, ..., 15.

INSERTINTO docs00

SELECT * FROM documents WHERE (id%16)=0

...

INSERTINTO docs15

SELECT * FROM documents WHERE (id%16)=15Why about? Because a priori we do not know how id is distributed, if from 1 to 32 inclusively, then there will be exactly 2 documents each, otherwise - no.

We do this for what it is. After we have made 16 tables, we can “grab” 16 of what we need. Regardless of where we stand, we can parallelize these resources. For example, if there is not enough disk space, it would make sense to decompose these tables into separate disks.

All this, unfortunately, is not free. I suspect that in the case of the canonical SQL standard (I haven’t reread the SQL standard for a long time, maybe it hasn’t been updated for a long time), there is no official standardized syntax for any SQL server to say: “Dear SQL server, make me 32 shards and decompose them into 4 disks. ” But in individual implementations, there is often a specific syntax in order to do the same in principle. PostgreSQL has mechanisms for partitioning, MySQL has MariaDB, Oracle probably did it all a long time ago.

However, if we do it by hand, without database support and within the standard, then we conditionally pay for data access complexity.. Where there was a simple SELECT * FROM documents WHERE id = 123, now 16 x SELECT * FROM docsXX. And well, if we tried to get the record by key. Much more interesting if we tried to get an early range of records. Now (if we, I emphasize, like fools, and remain within the standard) the results of these 16 SELECT * FROM will have to be combined in the application.

What performance changes to expect?

- Intuitively linear.

- Theoretically sublinear because Amdahl law .

- Practically - maybe almost linearly, maybe not.

In fact, the correct answer is unknown. With dexterous application of the sharding technique, you can achieve a significant superlinear degradation of your application, and DBA will come running with a hot poker.

Let's see how this can be achieved. It is clear that simply putting the setting in PostgreSQL shards = 16, and then it itself took off - it is not interesting. Let's think about how we can ensure that we can slow down 32 times from sharding - this is interesting from the point of view how not to do this.

Our attempts to accelerate or slow down will always rest against the classics — against the good old Amdahl law, which says that there is no perfect parallelization of any query, there is always some consistent part.

Amdahl law

There is always a serialized part.

There is always a part of the execution of the request that is parallel, and there is always a part that does not parallel. Even if it seems to you that there is a perfectly parallel query, at least the collection of the result string that you are going to send to the client is always from the strings received from each shard, and it is always consistent.

There is always some consistent part. It can be tiny, completely imperceptible on the general background, it can be gigantic and, accordingly, strongly affecting parallelization, but it always is.

In addition, its influence is changing.and can grow significantly, for example, if we cut our table - let's raise the rates - from 64 records to 16 tables with 4 records, this part will change. Of course, judging by such huge amounts of data, we work on a mobile phone and an 86 2 MHz processor, we don’t have enough files that can be kept open at the same time. Apparently, with such introductory, we open one file at a time.

- It was Total = Serial + Parallel . Where, for example, parallel is all the work inside the DB, and serial is sending the result to the client.

- It became Total2 = Serial + Parallel / N + Xserial. For example, when a generic ORDER BY, Xserial> 0.

With this simple example, I'm trying to show that some kind of Xserial appears. In addition to the fact that there is always a serialized part, and the fact that we are trying to work with data in parallel, an additional part appears to support this data slicing. Roughly speaking, we may need:

- find these 16 tables in the internal database dictionary;

- open files;

- allocate memory;

- unallocate memory;

- results;

- synchronize between the cores;

Any out-of-sync effects will still appear. They may be insignificant and take one billion dollars from the total time, but they are always non-zero and always are. With their help, we can dramatically lose in performance after sharding.

This is a standard picture about the law of Amdal. It is not very readable, but it is important that the lines, which should ideally be straight and grow linearly, abut on the asymptote. But since the schedule from the Internet is unreadable, I have made, in my opinion, more vivid tables with numbers.

Suppose that we have a certain serialized part of the request processing, which takes only 5%: serial = 0.05 = 1/20.

Intuitively, it would seem that with the serialized part, which takes only 1/20 of the request processing, if we parallelize the processing of the request for 20 cores, it will become approximately 20, at worst 18, times faster.

In fact, mathematics is heartless :

wall = 0.05 + 0.95/num_cores, speedup = 1 / (0.05 + 0.95/num_cores)It turns out that if you carefully count, with the serialized part of 5%, the acceleration will be 10 times (10.3), which is 51% compared to the theoretical ideal.

| 8 cores | = 5.9 | = 74% |

| 10 cores | = 6.9 | = 69% |

| 20 cores | = 10.3 | = 51% |

| 40 cores | = 13.6 | = 34% |

| 128 cores | = 17.4 | = 14% |

Using 20 cores (20 disks, if you like) for the task on which one worked earlier, we even theoretically will never get acceleration more than 20 times, but practically - much less. Moreover, with an increase in the number of parallels, inefficiency is greatly increasing.

When only 1% of the serialized work remains, and 99% is parallelized, the acceleration values are somewhat improved:

| 8 cores | = 7.5 | = 93% |

| 16 cores | = 13.9 | = 87% |

| 32 cores | = 24.4 | = 76% |

| 64 cores | = 39.3 | = 61% |

For a completely thermonuclear query that is naturally executed for hours, and the preparatory work and the assembly of the result take very little time (serial = 0.001), we will see already good efficiency:

| 8 cores | = 7.94 | = 99% |

| 16 cores | = 15.76 | = 99% |

| 32 cores | = 31.04 | = 97% |

| 64 cores | = 60.20 | = 94% |

Please note 100% we will never see . In particularly good cases, you can see, for example, 99.999%, but not exactly 100%.

How to chuff and repeat N times?

It is possible to fool and repeat exactly N times:

- Send requests docs00 ... docs15 sequentially , not in parallel.

- The simple request to make the sample is not keyed , WHERE something = 234.

In this case, the serialized part (serial) occupies not 1% and not 5%, but approximately 20% in modern databases. You can get 50% of the serialized part if you access the database using a wildly efficient binary protocol or link it as a dynamic library to a Python script.

The rest of the processing time of a simple request will be occupied by non-parallelized operations of parsing the request, preparing a plan, etc. That is, it does not read the record.

If we split the data into 16 tables and start sequentially, as is customary in the PHP programming language, for example, (he is not very good at starting asynchronous processes), then we’ll get a slowdown 16 times. And maybe even more, because network round-trips will also be added.

Suddenly, when sharding, the choice of programming language is important.

Remember about the choice of programming language, because if you send queries to the database (or search server) sequentially, then where does the acceleration come from? Rather, there will be a slowdown.

Bike from life

If you choose C ++, write to POSIX Threads , not Boost I / O. I saw an excellent library from experienced developers from Oracle and MySQL, who wrote a chat with the MySQL server on Boost. Apparently, at work they were forced to write on pure C, and then they managed to turn around, take Boost with asynchronous I / O, etc. One problem is that this asynchronous I / O, which theoretically should have driven 10 requests in parallel, for some reason had an imperceptible synchronization point inside. When you run 10 queries in parallel, they were executed exactly 20 times slower than one, because 10 times for the queries themselves and again for the synchronization point.

Conclusion:write in languages that implement parallel execution and waiting for different requests well. I do not know, to be honest, what exactly is there to advise, besides Go. Not only because I love Go, but because I don’t know anything more suitable.

Do not write in useless languages in which you will not be able to run 20 parallel queries to the database. Or at every opportunity do not do it all by hand - understand how it works, but do not do it manually.

A / B dough

Still sometimes you can slow down, because you are used to, that everything works, and did not notice that the serialized part, firstly, is, secondly, large.

- Immediately ~ 60 search index shards, categories

- These are correct and true shards, under the subject area.

- There were up to 1000 documents, and there were 50,000 documents.

This is a bike from the production, when the search queries were changed a little and they started to choose a lot more documents from the 60 shards of the search index. Everything worked quickly and according to the principle: “It works — don't touch it”, they all forgotten that there are actually 60 shards inside. Increased the sampling limit for each shard from a thousand to 50 thousand documents. Suddenly it began to slow down and the parallel ceased. The requests themselves, which were executed by shards, flew quite well, and the stage slowed down, when 50 thousand documents were collected from 60 shards. These 3 million final documents on one core merged together, sorted, the top of 3 million was selected and given to the client. That same serial part slowed down, that same merciless Amdal law worked.

So maybe you should not do sharding with your hands, but just like a human

say database: "Do it!"

Disclaimer: I do not really know how to do something right. I type from the wrong floor !!!

I have been promoting a religion called “algorithmic fundamentalism” throughout my adult life. It is briefly stated very simply:

You do not want to do anything really with your hands, but it is extremely useful to know how it is arranged inside. So that at the moment when something goes wrong in the database, you at least understand what went wrong there, how it is arranged inside and around, how to fix it.

Let's consider the options:

- "Hands" . Previously, we manually divided the data into 16 virtual tables, we rewrote all the requests with our hands - this is extremely uncomfortable to do. If you can not shard hands - do not shuffle hands! But sometimes this is not possible, for example, you have MySQL 3.23, and then you have to.

- "Automatic". It happens that you can shard with an automaton or almost with an automaton, when the database is able to distribute the data itself, you just have to write roughly a specific setting somewhere. There are a lot of bases, and they have a lot of different settings. I am sure that in every database, in which there is an opportunity to write shards = 16 (whatever the syntax), a lot of other settings are glued to this case by a steam locomotive.

- "Semi-automatic" - a completely space, in my opinion, and brutal mode. That is, the base itself doesn’t seem to know how, but there are external additional patches.

It is difficult to tell something about an automaton, except for sending the corresponding database to the documentation (MongoDB, Elastic, Cassandra, ... in general, the so-called NoSQL). If you are lucky, then you just pull the switch “make me 16 shards” and everything will work. At the moment when it does not work itself, the rest of the article may be necessary.

Pro semiautomatic

In some places, the delights of information technology inspire chthonic horror. For example, MySQL out of the box did not have a sharding to certain versions exactly, nevertheless, the size of the bases used in battle grow to indecent values.

Suffering humanity in the face of individual DBA suffers for years and writes a few bad sharding solutions, built incomprehensibly on what. After that, one more or less decent sharding solution is written under the name ProxySQL (MariaDB / Spider, PG / pg_shard / Citus, ...). This is a well-known example of this same snip.

ProxySQL as a whole, of course, is a complete enterprise-class solution for open source, for routing and so on. But one of the tasks to be solved is the sharding for the database, which in itself cannot be human shard. You see, there is no “shards = 16” switch, you have to either rewrite each request in the application, and there are many of them in places, or put some intermediate layer between the application and the database: “Hmm ... SELECT * FROM documents? Yes, it should be broken into 16 small SELECT * FROM server1.document1, SELECT * FROM server2.document2 - to this server with the same login / password, to this with another. If one did not answer, then ... "etc.

Intermediate hooks can do this exactly. They are a little less than for all databases. For PostgreSQL, as I understand it, at the same time there are some built-in solutions (PostgresForeign Data Wrappers, in my opinion, built into PostgreSQL itself), there are external patches.

Configuring each specific patch is a separate giant topic that does not fit in one report, so we will discuss only the basic concepts.

Let's talk the best about the theory of buzz.

Absolute perfect automatics?

The whole theory of buzz in the case of sharding in this letter F (), the basic principle is always the same roughly:

shard_id = F(object).Sharding is generally about what? We have 2 billion records (or 64). We want to split them into several pieces. There is an unexpected question - how? According to what principle should I scatter my 2 billion records (or 64) on the 16 servers available to me?

The latent mathematician in us must suggest that in the end there is always some magic function that, for each document (object, line, etc.), determines in which piece to put it.

If you go deeper into mathematics, this function always depends not only on the object itself (the line itself), but also on the external settings such as the total number of shards. The function, which for each object should tell where to put it, cannot return a value greater than there are servers in the system. And the functions are slightly different:

- shard_func = F1 (object);

- shard_id = F2 (shard_func, ...);

- shard_id = F2 ( F1 (object), current_num_shards, ...).

But further we will not dig in these jungle of separate functions, we will just talk what magic functions F () are.

What are F ()?

They can come up with many different and many different implementation mechanisms. Sample summary:

- F = rand ()% nums_shards

- F = somehash (object.id)% num_shards

- F = object.date% num_shards

- F = object.user_id% num_shards

- ...

- F = shard_table [somehash () | ... object.date | ...]

An interesting fact - you can naturally scatter all the data randomly - throw the next entry on an arbitrary server, on an arbitrary kernel, in an arbitrary table. There will be no happiness in this, but it will work.

There are slightly more intelligent methods of sharding by reproducible or even consistent hash functions, or sharding by some attribute. Let's go through each method.

F = rand ()

Scattering radomom - not a very correct method. One problem: we scattered our 2 billion records per thousand servers randomly, and we don’t know where the record is. We need to pull out user_1, and we don’t know where it is. We go to a thousand servers and go through everything - somehow it is inefficient.

F = somehash ()

Let's scatter users in an adult way: read the reproducible hash function from user_id, take the remainder of the division by the number of servers, and contact the right server immediately.

And why are we doing this? And then, that we have highload and we don’t have anything else in one server. If it was, life would be so simple.

Great, the situation has already improved, in order to get one record, we are going to one previously known server. But if we have a range of keys, then in all of this range we have to go through all the key values and, in the limit, go either to as many shards as we have keys in the range, or to each server in general. The situation, of course, improved, but not for all requests. Some requests suffered.

Natural sharding (F = object.date% num_shards)

Sometimes, that is often, 95% of the traffic and 95% of the load are requests that have some kind of natural sharding. For example, 95% of conditionally socio-analytical queries affect data only for the last 1 day, 3 days, 7 days, and the remaining 5% refer to the last few years. But 95% of requests, thus, are naturally shaded by date, the interest of system users is focused on the last few days.

In this case, you can divide the data by date, for example, by one day, and follow the response to a request for a report for a certain day or an object from that day on this shard and go.

Life is improving - we now not only know the location of a particular object, but also know about the range. If they ask us not the date range, but the range of other columns, then, of course, you will have to go through all the shards. But under the terms of the game, we have only 5% of such requests.

It seems that we came up with the perfect solution to everything, but there are two problems:

- This solution is designed for a specific case, when 95% of requests involve only the last week.

- Since 95% of requests touch the last week, they will all fall on one shard that serves this last week. This shard will melt, while everyone else will stand idle at this time. In this case, they can not be thrown out, the archived data should also be stored.

Not to say that this is a bad sharding scheme - we have cut off the hot data, nevertheless we have to do something with the hottest shard.

The problem is solved by grimaces, jumps and poultices, that is, by increasing the number of replicas for the burning current day, then by gradually reducing the number of replicas when this day becomes the past and goes into the archive. There is no perfect solution called “you just need to blur the data on the cluster just like a magic hash function”.

Formally, we know now we know "everything." True, we do not know one giant headache and two smaller headaches.

1. Simple pain: bad smeared

This is an example from a textbook that almost never occurs in combat, but suddenly.

- As an example with a date, only without a date!

- Non-intentional uneven (perceptible) distribution.

We chose the sharding mechanism, and / or the data changed, and, of course, PM did not communicate the requirements (we do not have errors in the code, always PM does not convey the requirements), and the distribution has become monstrously uneven. That is, missed with the criterion.

To catch, you need to look at the size of shards. We will definitely see the problem at the moment when one of our shards either overheat or becomes 100 times bigger than the others. You can fix it by simply replacing the key or sharding function.

This is a simple problem, to be honest, I do not think that at least one person in a hundred will run into this in life, but suddenly at least it will help someone.

2. “Invincible” pain: aggregation, join

How to make a selection that billions of records from one table per billion records from another table?

- How to "quickly" count ... WHERE randcol BETWEEN aaa AND bbb?

- How “cleverly” to do ... users_32shards JOIN posts_1024 shards?

The short answer is: don’t suffer!

If you have distributed a billion records per thousand servers in the first table in order for them to work faster, in the second table they did the same, then naturally one thousand per thousand servers should speak in pairs. A million connections will not work well. If we make requests to the database (search, storage, document store or distributed file system), which are badly placed on the sharding, these requests will slow down wildly.

The important moment - some requests will always be unsuccessfully smeared and will slow down. It is important to try to minimize their percentage. As a result, do not make giant joins with a billion to a billion records. If there is a possibility of a small table, relative to which is joining in a giant, shared table, to replicate to all nodes, you need to do this. If joins are actually local in some way, for example, there is a possibility for the user and his posts to be placed side by side, to have them in the same way, and to do all the joins within the same machine - this should be done.

This is a separate course of lectures for three days, so we turn to the last hellish pain and to different algorithms for dealing with it.

3. Difficult / long pain: Resarding

Get ready: if you zashardili your data for the first time in your life, then on average five times more you will surely give it to them.

How many clusters do not configure, all the same decide.

If you are very smart and lucky, then pereshardite, at least once. But once you have to, because at that moment when you think that 10 units are enough for a user, someone right at that moment writes a request for 30, and the plans have a request for 100 units of unknown resources. Shardov is never enough. With the first sharding scheme, you miss anyway - you always have to either increase the number of servers, or do something else - in general, somehow rebuild the data.

Well, if we have nice degrees of two: there were 16 shard servers, it became 32. More fun, if it was 17, it became 23 - two positively simple numbers. How do the databases do that, maybe they have some magic inside?

The correct answer is no, there is no magic inside, they have hell inside.

Next, we will consider what can be done “by hand”, maybe we will understand it “as an automaton”.

In the forehead # 1. Reset everything

- For all objects we count NewF (object), we shift it to a new shard.

- The probability of coincidence NewF () = OldF () is small.

- We shift almost everything at all.

- Oh.

Such a hell, how to shift all 2 billion records from old shards to new ones, I hope, is nowhere to be found. The naive approach is clear: there were 17 machines, 6 machines were added to the cluster, 2 billion records were sorted out, transferred them from 17 machines to 23 machines. Once in 10 years, you can probably even do it. But overall this is a bad move.

In the forehead # 2. Relocate half

The next naive improvement - let's abandon such a stupid scheme - let's ban 17 cars in 23, and we will always decide 16 cars in 32 cars! Then, according to the theory, we will have to transfer exactly half of the data, and in practice we will be able to do it too.

- For all objects we count NewF (object), we shift it to a new shard.

- It was strictly 2 ^ N, it became strictly 2 ^ (N + 1) shards.

- The probability of coincidence NewF () = OldF () is 0.5.

- We transfer about 50% of the data.

- Optimally, but only works for powers of two.

In principle, everything is fine, except for binding to the power of two on the number of machines. This naive approach, oddly enough, may work.

Please note that the additional fragmentation of the cluster in powers of two in this case is also optimal. In any case, adding 16 machines to a cluster of 16, we are obliged to transfer half of the data - exactly half and shift.

Well, but really mankind has not invented anything else - the question arises for an inquiring mind.

More fun # 3. Consistent hashing

Of course, a picture with a circle about consistent hashing is obligatory here.

If you google “consistent hashing”, then a circle will definitely come out, the whole issue is settled in circles.

The idea: let's draw the identifiers of the shards (hashes) on the circle, and on top we will mark the hashed identifiers of the servers. When we need to add a server, we put a new point on the circle, and we resettled what was close to it (and only what was close to it).

- When adding a shard: we view not everything , but only 2 “neighbors”, we shift an average of 1 / n.

- When deleting a shard: we look through only the deleted shard, we shift only it. Type optimum.

Very effective in terms of minimizing traffic when adding a shard, and completely disgusting in terms of normal balancing data. Because when we hash all these objects, which we distribute to a large number of machines, we do it relatively unevenly: the points in a circle are unevenly distributed, and the load on each particular node can be very different from the rest.

This problem is solved by the last line of the virtual node. Each node, each server on the circle is indicated by more than one point. By adding a server, shard, etc., we add a few points. Every time when we delete something, respectively, we delete several points and shift a small part of the data.

I am talking about this cosmos with circles, because, for example, inside Cassandra is such a scheme. That is, when you start recording between the chords between you, know that the circle is looking at you and, probably, does not approve.

However, in comparison with the first ways, life has improved - we are already looking at adding / removing a shard not all the records, but only a part, and we shift only a part.

Attention, the question: is it possible to improve more? And even improve the uniformity of loading shards? - They say that you can!

More fun # 4. Rendezvous / HRW

The following simple idea (the material is a tutorial, therefore nothing complicated): shard_id = arg max hash (object_id, shard_id).

Why it is called Rendezvous hashing, I do not know, but I know why it is called Highest Random Weight. It is very easy to visualize it as follows:

We have, for example, 16 shards. For each object (line) that needs to be put somewhere, we calculate 16 hashes, depending on the object from the shard number. Who has the highest value of the hash function, he won.

This is the so-called HRW-hashing, also known as Rendezvous hashing. Dull as a stick scheme for calculating the number of the shard, firstly, by the eye, it is easier to circle and gives a uniform load, on the other hand.

The only negative is that the addition of a new shard has worsened. There is a risk that when adding a new shard, we still have some hashes that will change and it may be necessary to review everything. The shard removal technology hasn't changed much.

Another problem is computationally difficult with a large number of shards.

More fun # 5. More technology

Interestingly, research does not stand still and Google publishes some new space technology every year:

- Jump Hash - Google 2014.

- Multi Probe —Google '2015.

- Maglev - Google '2016.

If you are interested in topics, you can read a lot of theses. I cite this data in order to make it clear that the problem is not solved, there is no super-solution that can be implemented in all databases. Until now, people defend dissertations.

More fun # 6. Lists

For an appetizer, the easiest option is stupid lists. Why do we need all these mega-technicians? We do not want, in order to manage 2 billion records, to keep in memory of the cluster on each node a giant object_id list with 2 billion identifiers that would display the location of the object.

And what if you take and thin out this list? Or not even much?

Let's at least just count. I am sure that in some of the databases it is used, but I do not know which one. Mathematics says that it can work quite well, and, to be honest, you can even manage to do it with pens.

Let's estimate:

- There are 1 billion objects.

- We take objects and by identifiers / hashes / dates / anything else we split into a million intervals: min / max_id => shard_id.

- A million intervals with 8 byte hashes and 4 byte numbers of the shard (4 billion shards should be enough for everyone!) - this is 20 bytes for one interval.

- In order to place a billion objects somewhere in a cluster, you need to globally maintain 20 MB in the memory of the entire cluster — not such a large amount of data.

- 20 MB is a fairly granular data map in a cluster for a very small range of a thousand records.

Compare this with the sharding of 2 billion records using hash functions for at least 16 nodes - that's more than 100 million with something for a shard. And here we have granularity in the block: the records that we put as a single package on a particular shard are very small - 1 Kb each. You can make optimal any operation, and add, and remove the shard.

I repeat, the option is simple as a stick, complex space technologies are not needed, and for sure it works somewhere, but I did not find where.

findings

There is an important basic technique called sharding named after Gaul Julius Caesar: "Divide and conquer, conquer and divide!" If the data does not fit into one server, it is necessary to split them into 20 servers.

Having learned this all, there should be an impression that it would be better not to shard. If you decide that it would be better not to be shardit - this is the right feeling. If you can add $ 100 of memory to the server and do not shard anything, then you have to do it. When sharding, a complex distributed system will appear with data transferring to and fro, stacking data is not known where. If you can avoid it - you need to avoid it.

It’s better not to do it by hand, it is better that the “base” (search, DFS, ...) know how to shard itself. In any case, sooner or later, highload will come and somehow the data will have to be split. Not the fact that even if the base is able to do it itself, you will not run into any problems. Remember about algorithmic fundamentalism - you need to understand how everything is arranged inside .

When setting up sharding for the first time, carefully select F () , think about requests, network, etc. But get ready, you will probably have to choose 2 times and at least once you have to redo everything .

About speaker

Usually, we talk about the speaker on the beach, but this time there is a reason for an exception. Andrei Aksyonov became one of the laureates of the HighLoad Award ++ , and everyone for whom the Aksyonov associative chain — Sphinx — highload is not obvious is worth watching the video.

About the training mitap

This was the material on the performance on the training session of the Highload User Group. I'll tell you a little what it is and why.

We recently realized that reports on a large HighLoad ++ go deeper and deeper. At the conference, we cannot repeat and again discuss, for example, questions of architecture. And there are no places where one could get acquainted with basic concepts and systematize fragmentary knowledge. Therefore, we launched a series of training mitapov on the development of highload-applications, sites and services.

We will talk about scaling services and databases, load testing, machine learning and neural networks, project architectures. Everything connected with the development of non-standard solutions with increased requirements for performance, sustainability, safety, and so on will gradually appear on the agenda.

On the third mitap on January 24 in St. Petersburg we will discuss the design patterns of high-loaded systems “Queue”, “Pipelining”. The event is free, but you need to register by the link in the description of the mitap. Speakers and topics will be published a little later, or we can send in the mailing list .

Mailing is also the easiest way to find out about news, for example, that on April 8 and 9, St. Petersburg will host your HighLoad ++ and you can already apply or book an early bird ticket.