NetApp HCI: A Next-Generation Hyperconverged Data System

Data storage systems and the way they are managed have come a long way, and they remain critical to any corporate IT solution. Today the most advanced are hyperconverged systems, which offer a number of advantages over traditional and converged systems: they are cheaper, easier to manage and scale, and allow resources to match the needs of the enterprise precisely.

This article compares traditional, converged and hyperconverged systems for data storage and processing. It considers scaling options for such enterprise systems and the scale-out architecture, and describes NetApp HCI, a new-generation hyperconverged system, along with its characteristics.

Converged and traditional systems

Converged systems are the result of natural progress away from traditional IT infrastructure, which has always meant building separate, unrelated “silos” for data storage and processing.

In legacy IT environments, separate administrative groups (teams of specialists) were typically created for storage, servers and networking. For example, the storage group handled purchasing, provisioning and maintaining the storage infrastructure, and it also managed relationships with storage vendors. The same was true for the server and network groups.

A converged system combines two or more of these IT infrastructure components into a pre-engineered solution. The best solutions in this class combine all three components, tightly integrated by the corresponding software.

A clear advantage of this approach is a relatively simple design for a complex IT infrastructure. The idea is to have a single support team, just as a single vendor supports all the necessary components.

Converged and hyperconverged systems

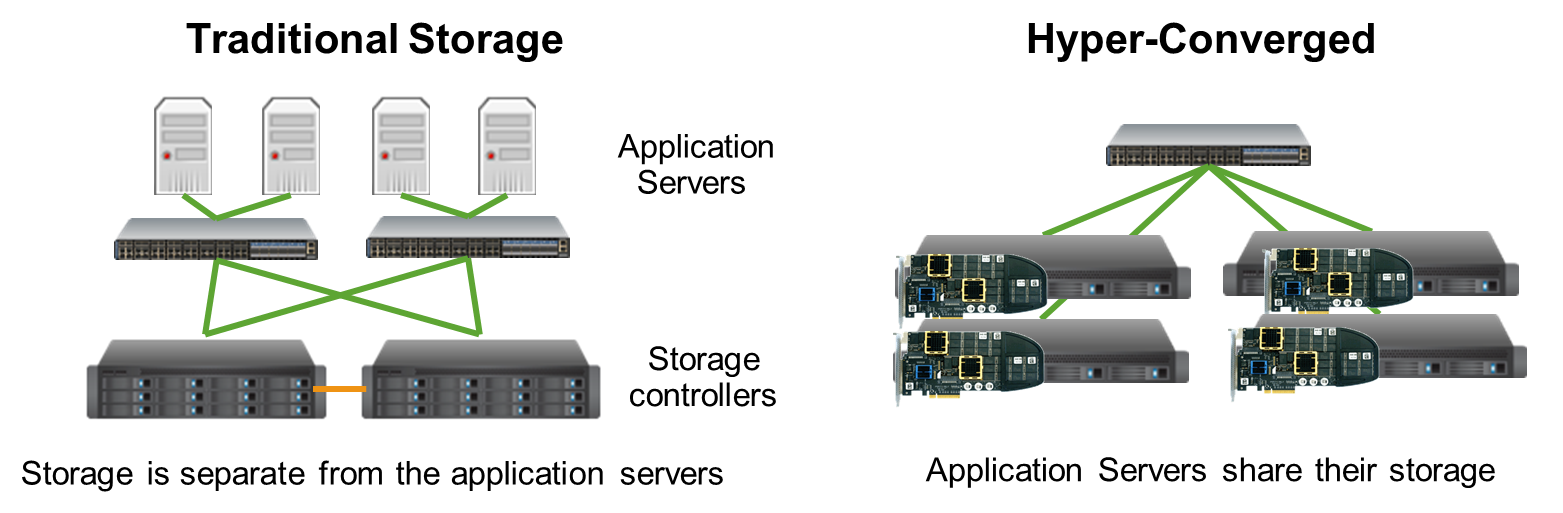

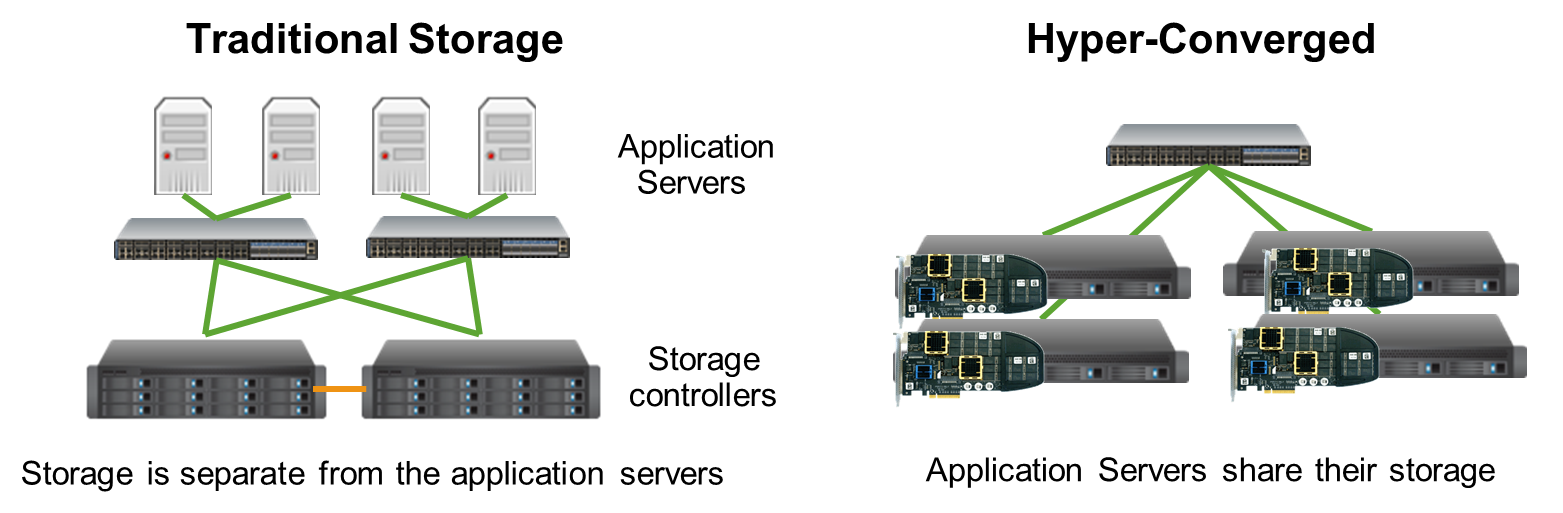

Hyperconverged systems (HCS) take the concept of “convergence” to a new level. Converged systems typically consist of separate components designed to work well together. HCS, by contrast, are typically modular solutions that scale by adding modules to the system. In effect, they achieve the “scaling” of a large storage system through the controller software layer.

Typical architectures for traditional and hyperconverged storage systems and their management

The more modules are added, the greater the overall capacity and performance. Instead of expanding existing hardware with more disks, memory or processors, you simply add new stand-alone modules that contain all the necessary resources. Besides the simpler architecture, HCS also use a simpler administration model, since they are managed through a single interface.

Vertical and horizontal scalability

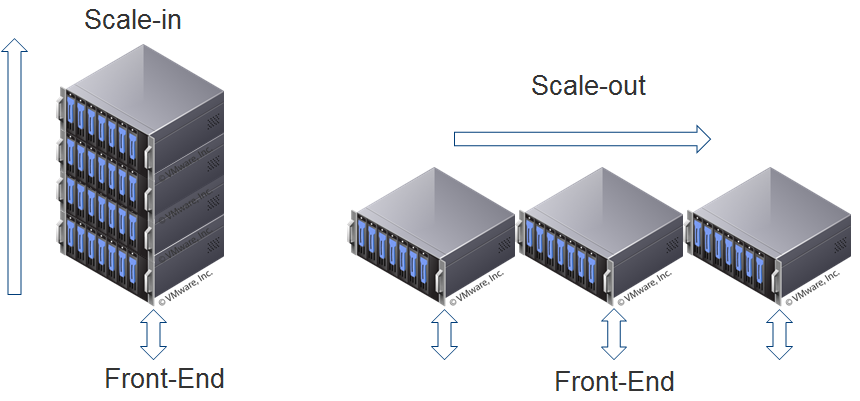

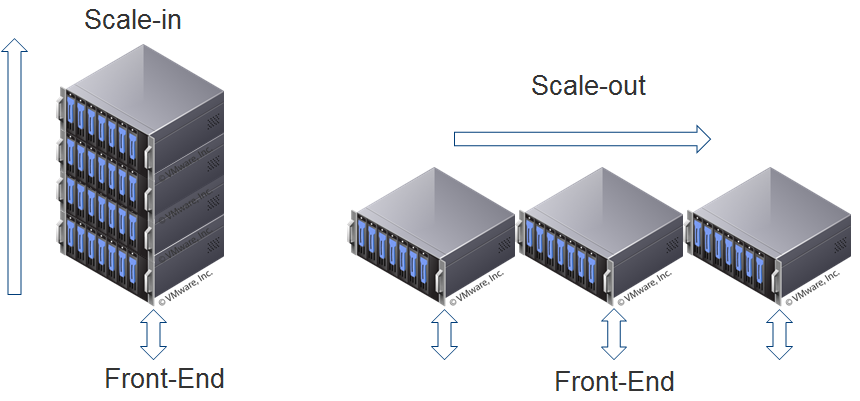

Scalability is the ability of a system to keep functioning properly when its size (or volume) changes to meet user needs. In some contexts, it means the ability to handle larger or smaller user demands; in the context of storage, it usually refers to meeting demand for more capacity.

Schematic representation of vertical and horizontal scalability

Vertical scaling (scale-up) increases the capabilities of existing hardware or software by adding resources to a single physical system, for example adding computing power to a server to make it faster. For storage systems, this means adding new controllers, disks and I/O modules to the existing system as needed.

Horizontal scaling (scale-out) means connecting many autonomous units so that they work as a single logical unit. With horizontal scaling, the nodes can even be geographically distributed.
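To make the contrast concrete, here is a minimal Python sketch. The slot limit, disk size and node size are hypothetical numbers chosen for illustration, not specifications of any real product: a scale-up system can only grow until its chassis runs out of slots, while a scale-out cluster grows by adding whole nodes.

```python
from dataclasses import dataclass, field


@dataclass
class ScaleUpArray:
    """A single system that grows only by adding disks to its own chassis."""
    max_disk_slots: int   # hypothetical hardware ceiling of the chassis
    disk_tb: float        # capacity of each disk, in TB
    disks: int = 0

    def add_disks(self, count: int) -> None:
        # Vertical scaling stops at a hard limit once the chassis is full.
        if self.disks + count > self.max_disk_slots:
            raise ValueError("chassis full: cannot scale up any further")
        self.disks += count

    @property
    def capacity_tb(self) -> float:
        return self.disks * self.disk_tb


@dataclass
class ScaleOutCluster:
    """Many autonomous nodes presented as one logical system."""
    node_capacities_tb: list[float] = field(default_factory=list)

    def add_node(self, capacity_tb: float) -> None:
        # Horizontal scaling: each new node simply joins the cluster.
        self.node_capacities_tb.append(capacity_tb)

    @property
    def capacity_tb(self) -> float:
        return sum(self.node_capacities_tb)


array = ScaleUpArray(max_disk_slots=24, disk_tb=4.0)
array.add_disks(24)              # the chassis is now full at 96 TB
try:
    array.add_disks(1)
except ValueError as err:
    print("scale-up:", err)

cluster = ScaleOutCluster()
for _ in range(30):
    cluster.add_node(4.0)        # this model imposes no slot limit
print("scale-out capacity (TB):", cluster.capacity_tb)
```

In practice a scale-out cluster also has limits (network fabric, management software), but they are far looser than the physical slot count of a single chassis.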

Scale-out storage architecture

In a scale-out architecture, new groups of devices can be added to the system as needed, almost without limit. Each device (or node) has some storage capacity, which can be built up with disk drives, as well as its own computing power and input/output (I/O) bandwidth.

Because each node brings these resources with it, adding nodes increases not only capacity but also data-processing performance. The system grows as nodes are added to the cluster. Such clusters are often built from x86 servers running a specialized OS and storage software, connected through an external network.
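The claim that capacity and performance grow together can be illustrated with simple arithmetic. In this sketch each node contributes its own capacity, IOPS and bandwidth; the per-node figures are made-up values, and the sketch assumes ideal linear scaling, ignoring the replication and data-protection overhead that real clusters incur.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    capacity_tb: float
    iops: int
    bandwidth_gbps: float


def cluster_totals(nodes: list[Node]) -> dict[str, float]:
    """Sum per-node resources; assumes ideal linear scaling (no overhead)."""
    return {
        "capacity_tb": sum(n.capacity_tb for n in nodes),
        "iops": sum(n.iops for n in nodes),
        "bandwidth_gbps": sum(n.bandwidth_gbps for n in nodes),
    }


# Hypothetical node specification, for illustration only.
node = Node(capacity_tb=10.0, iops=50_000, bandwidth_gbps=2.5)

for count in (4, 8, 16):
    print(count, "nodes:", cluster_totals([node] * count))
```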

Users administer the cluster as a single system and manage data in a global namespace or distributed file system, so they do not have to worry about where the data physically resides.
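One common way a scale-out system hides physical placement is to compute each item's location from its name, for example with consistent hashing. The sketch below is a generic illustration of that technique, not a description of how SolidFire or ONTAP place data; the node names and file paths are hypothetical. Clients resolve a logical name through the ring, and adding a node moves only the names that the new node takes over.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # Stable 64-bit position on the ring derived from SHA-256.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Maps logical names in a global namespace to physical nodes."""

    def __init__(self, nodes: list[str], vnodes: int = 100) -> None:
        self.vnodes = vnodes
        self._ring: list[tuple[int, str]] = []
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each node owns many virtual points, which evens out the load.
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def locate(self, name: str) -> str:
        # The first ring point at or after the name's hash owns the object;
        # the modulo wraps around the end of the ring.
        idx = bisect.bisect_left(self._ring, (_hash(name), ""))
        return self._ring[idx % len(self._ring)][1]


ring = HashRing(["node-a", "node-b", "node-c"])
names = [f"/projects/file-{i}" for i in range(1000)]
before = {n: ring.locate(n) for n in names}

ring.add_node("node-d")
moved = [n for n in names if ring.locate(n) != before[n]]
print(f"{len(moved)} of {len(names)} objects moved when node-d joined")
```

Every name that changed location now resolves to the new node, and all others still resolve to the same node as before, which is why users can keep working with logical names only.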

NetApp Enterprise-Scale HCI: A New Generation of Hyper-Converged Systems

Of course, in some cases specialized, custom-built solutions (storage systems, networks, servers) remain the best choice. However, other options, such as “as-a-service” offerings, converged infrastructure (CI) and software-defined systems (SDS), are quickly capturing the IT infrastructure market, and this trend is expected to dominate in the next few years.

The CI market is growing very fast as organizations seek less operational complexity and faster IT deployment. Hyperconverged infrastructure (HCI) platforms emerged as its natural evolution, as organizations move toward building next-generation data centers.

It is also expected that by 2020, 70% of storage management functions will be automated and built into the infrastructure platform. NetApp HCI represents the next generation of hyperconverged infrastructure and is the first HCI platform designed for enterprise applications.

The first generation of HCI solutions was better suited to relatively small projects: customers found that it had quite a few architectural limitations affecting performance, automation, mixed workloads, scaling, configuration flexibility and more.

This clearly conflicted with the strategy of building a next-generation data center, where “agility”, scaling, automation and predictability are mandatory requirements.

Introduction to NetApp HCI

NetApp HCI is the first enterprise-scale hyperconverged infrastructure solution. It provides cloud-like infrastructure (storage, compute and network resources) in an “agile”, scalable, easy-to-manage standard four-node unit.

The solution is built on the SolidFire flash storage system. Simple centralized management through the VMware vCenter Plug-in gives you complete control over your entire infrastructure through an intuitive user interface.

Integration with NetApp ONTAP Select opens up a new range of deployment options, both for existing NetApp customers and for those who want to modernize their data center. NetApp HCI addresses the limitations of the current generation of HCI offerings in four key ways:

Guaranteed performance. Dedicated platforms and heavy overprovisioning are no longer acceptable choices. NetApp HCI provides granular, per-application performance control that eliminates “noisy neighbors”, so all applications can be deployed on a common platform. According to the company, this eliminates more than 90% of traditional performance problems.
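Per-application performance control can be sketched as an allocation policy. The example is loosely modelled on per-volume minimum and maximum IOPS settings, but the algorithm is our own simplified illustration, not NetApp's implementation, and the volume names and numbers are hypothetical. Every volume first receives its guaranteed minimum (or its demand, if lower); the remaining cluster IOPS are then shared out in equal rounds, and no volume ever receives more than its demand or its maximum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VolumeQoS:
    name: str
    min_iops: int   # guaranteed floor
    max_iops: int   # ceiling the volume may not exceed
    demand: int     # IOPS the workload is currently requesting


def allocate_iops(volumes: list[VolumeQoS], cluster_iops: int) -> dict[str, int]:
    """Simplified QoS policy: honour minimums first, then share the rest."""
    # Step 1: each volume gets its minimum, or its demand if that is lower.
    alloc = {v.name: min(v.demand, v.min_iops) for v in volumes}
    spare = cluster_iops - sum(alloc.values())
    if spare < 0:
        raise ValueError("guaranteed minimums exceed cluster capacity")

    # Step 2: hand out spare IOPS in equal rounds to volumes that still want
    # more, never giving a volume more than its demand or its max_iops.
    hungry = [v for v in volumes if alloc[v.name] < min(v.demand, v.max_iops)]
    while spare > 0 and hungry:
        share = max(spare // len(hungry), 1)
        for v in list(hungry):
            ceiling = min(v.demand, v.max_iops)
            grant = min(share, ceiling - alloc[v.name], spare)
            alloc[v.name] += grant
            spare -= grant
            if alloc[v.name] >= ceiling:
                hungry.remove(v)
            if spare == 0:
                break
    return alloc


volumes = [
    VolumeQoS("vdi", min_iops=5_000, max_iops=15_000, demand=12_000),
    VolumeQoS("database", min_iops=10_000, max_iops=30_000, demand=20_000),
    VolumeQoS("noisy-batch", min_iops=1_000, max_iops=8_000, demand=100_000),
]
print(allocate_iops(volumes, cluster_iops=40_000))
```

With 40,000 IOPS available, this sketch gives vdi 12,000 and database 20,000, and caps noisy-batch at 8,000 even though it asks for 100,000, so the noisy workload cannot crowd out its neighbors.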

Flexibility and scalability. Previous generations of HCI had fixed resource ratios, limited to a few node configurations. NetApp HCI scales storage and compute resources independently, so it suits configurations of any scale.
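Why independent scaling matters can be shown with back-of-the-envelope sizing. The sketch compares a hypothetical fixed-ratio design, in which every node bundles a set amount of storage and compute, with a design in which storage and compute nodes are counted separately. All per-node figures and the workload are illustrative assumptions, not NetApp HCI specifications.

```python
import math


def fixed_ratio_nodes(need_tb: float, need_cores: int,
                      node_tb: float, node_cores: int) -> int:
    # Each combined node brings both resources, so the scarcer one decides.
    return max(math.ceil(need_tb / node_tb), math.ceil(need_cores / node_cores))


def independent_nodes(need_tb: float, need_cores: int,
                      storage_node_tb: float, compute_node_cores: int) -> tuple[int, int]:
    # Storage and compute nodes are sized separately.
    return (math.ceil(need_tb / storage_node_tb),
            math.ceil(need_cores / compute_node_cores))


# A storage-heavy workload: 200 TB but only 64 cores (hypothetical).
need_tb, need_cores = 200.0, 64

combined = fixed_ratio_nodes(need_tb, need_cores, node_tb=20.0, node_cores=32)
storage, compute = independent_nodes(need_tb, need_cores,
                                     storage_node_tb=20.0, compute_node_cores=32)

print("fixed-ratio nodes:", combined, "idle cores:", combined * 32 - need_cores)
print("storage nodes:", storage, "compute nodes:", compute)
```

Here the fixed-ratio design needs 10 combined nodes to reach 200 TB and leaves 256 cores idle, whereas the independent design buys 10 storage nodes and only 2 compute nodes.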

Automated infrastructure. The new NetApp Deployment Engine (NDE) eliminates most of the manual steps involved in deploying the infrastructure, and the VMware vCenter Plug-in makes management simple and intuitive. The API enables integration with higher-level management systems and supports backup and disaster recovery. Recovery time after a failure does not exceed 30 minutes.

The NetApp Data Fabric. Early generations of HCI platforms required introducing new, separate resource silos into the IT infrastructure, which is clearly an inefficient approach. NetApp HCI integrates into the NetApp Data Fabric, which increases data mobility, visibility and protection and lets you use the full potential of your data on-premises or in a public or hybrid cloud.

NetApp HCI Data Fabric deployment model

NetApp HCI is an out-of-the-box solution that is ready to run in a Data Fabric environment immediately, giving users access to all of their data, including data in a public or hybrid cloud.

NetApp Data Fabric is a software-defined approach to data management that lets enterprises use otherwise incompatible storage resources and provides continuous, seamless data management across on-premises and cloud storage.

The products and services that make up NetApp Data Fabric are designed to give customers freedom: to move data to and from the cloud quickly and efficiently, to restore cloud data when needed, and to move it from one provider's cloud to another's.

The foundation of NetApp Data Fabric is the Clustered Data ONTAP storage operating system. As part of the Data Fabric, NetApp has developed a dedicated cloud version, ONTAP for Cloud, which creates a virtual NetApp storage system for the enterprise inside the public cloud.

This platform lets you store and manage data the same way as on on-premises NetApp systems. That consistency allows administrators to move data wherever and whenever it is needed without any intermediate conversion, effectively extending the enterprise data center into the provider's public cloud.

NetApp first introduced the Data Fabric concept in 2014 at its annual Insight conference. According to NetApp, this was a response to customers' need for a unified view of enterprise data stored across many internal and external data centers. In particular, Data Fabric gave enterprises easy access to their corporate data in Google Cloud Platform, Amazon Simple Storage Service (S3), Microsoft Azure and IBM SoftLayer.

Meeting enterprise requirements

One of the biggest challenges in any data center is predictably delivering the performance needed at any given moment. This is especially true for “sprawling” applications whose workloads can at times be very intense.

Every enterprise runs many applications on the same IT infrastructure, so there is always a risk that one application will interfere with another.

For example, important applications such as virtual desktop infrastructure (VDI) and databases have very different I/O patterns and tend to affect each other. NetApp HCI eliminates this unpredictability by delivering the performance each application needs at every moment.
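Interference between workloads such as VDI and databases is commonly contained with per-workload rate limiting. Below is a generic token-bucket limiter, a standard technique shown only to illustrate the principle; it is not NetApp's implementation, and the rates and burst sizes are hypothetical. Because each workload has its own bucket, a VDI boot storm can spend its own accumulated burst allowance but cannot consume the database's I/O budget.

```python
class TokenBucket:
    """Generic token bucket: steady rate plus a bounded burst allowance."""

    def __init__(self, rate_per_s: float, burst: float) -> None:
        self.rate = rate_per_s      # tokens (I/O operations) added per second
        self.burst = burst          # maximum tokens that can accumulate
        self.tokens = burst         # start full, so an initial burst is allowed
        self.last = 0.0             # time of the last refill, in seconds

    def allow(self, now: float, ops: int = 1) -> bool:
        # Refill according to elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= ops:
            self.tokens -= ops
            return True
        return False                # the caller must queue or retry this I/O


# One bucket per workload isolates them from each other.
buckets = {
    "vdi": TokenBucket(rate_per_s=1_000, burst=3_000),
    "database": TokenBucket(rate_per_s=2_000, burst=2_000),
}

# A VDI boot storm at t=0 asks for 5,000 operations in batches of 100.
vdi_ok = sum(buckets["vdi"].allow(0.0, 100) for _ in range(50))
db_ok = buckets["database"].allow(0.0, 500)
print(f"VDI batches admitted: {vdi_ok} of 50; database request admitted: {db_ok}")
```

The VDI bucket admits 30 batches and throttles the rest, while the database request is still served from its own bucket.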

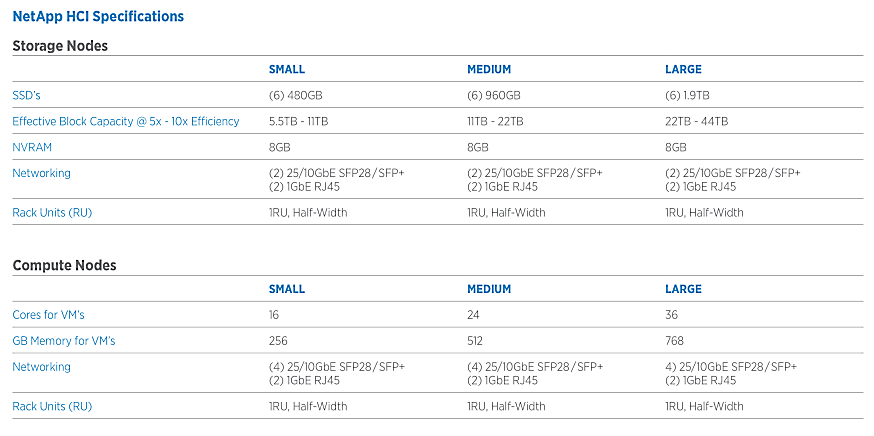

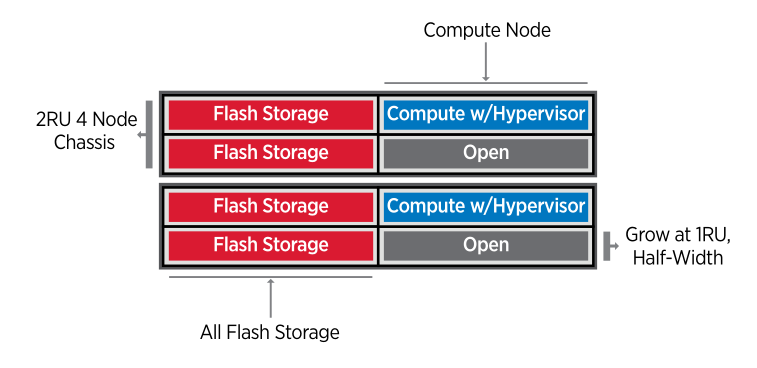

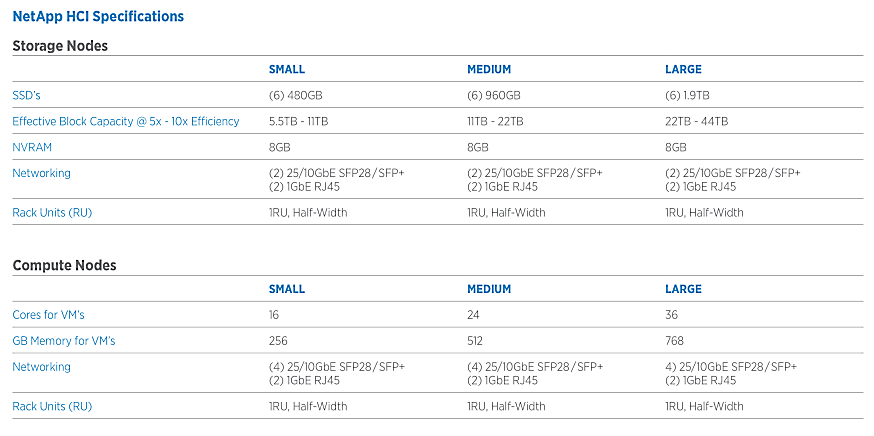

NetApp HCI is available in small, medium and large storage and compute configurations and can be expanded in 1RU increments. As a result, enterprises can size the resources they need precisely and avoid idle, overprovisioned hardware.

A key goal for every IT department is to automate routine tasks, eliminating the risk of human error in manual operations and freeing up staff for higher-priority, more complex business tasks. The NetApp Deployment Engine (NDE) eliminates most manual infrastructure deployment operations, while the vCenter plug-in makes managing the VMware virtual environment simple and intuitive.

Finally, the API set allows seamless integration of the storage and compute subsystems into higher-level management systems, and it also supports backup and disaster recovery.
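As an illustration of this kind of integration, the sketch below builds a JSON-RPC request body of the shape used by the SolidFire Element API, the storage API underlying NetApp HCI. The method name `ModifyVolume`, the `qos` fields (`minIOPS`, `maxIOPS`, `burstIOPS`) and the `/json-rpc/<version>` endpoint path reflect our understanding of that API and should be checked against the Element API reference for your software version; the helper functions, volume ID and endpoint placeholders are hypothetical. The code only builds and prints the request; it does not send anything.

```python
import json


def build_element_request(method: str, params: dict, request_id: int = 1) -> str:
    """Serialise a JSON-RPC style request body (method, params, id)."""
    return json.dumps({"method": method, "params": params, "id": request_id})


def set_volume_qos(volume_id: int, min_iops: int, max_iops: int, burst_iops: int) -> str:
    # Sanity check for the min <= max <= burst relationship (our assumption
    # of the constraint; consult the API reference for exact limits).
    if not (0 < min_iops <= max_iops <= burst_iops):
        raise ValueError("expected 0 < min_iops <= max_iops <= burst_iops")
    qos = {"minIOPS": min_iops, "maxIOPS": max_iops, "burstIOPS": burst_iops}
    return build_element_request("ModifyVolume", {"volumeID": volume_id, "qos": qos})


# Placeholder endpoint: a real client would POST the body here over HTTPS
# using cluster administrator credentials.
ENDPOINT = "https://<mvip>/json-rpc/<version>"

body = set_volume_qos(volume_id=42, min_iops=1_000, max_iops=5_000, burst_iops=8_000)
print(ENDPOINT)
print(body)
```

Keeping request construction separate from transport makes it easy to plug the same payloads into whatever orchestration or backup tooling sits above the platform.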

NetApp HCI integrates and supports the following technologies :

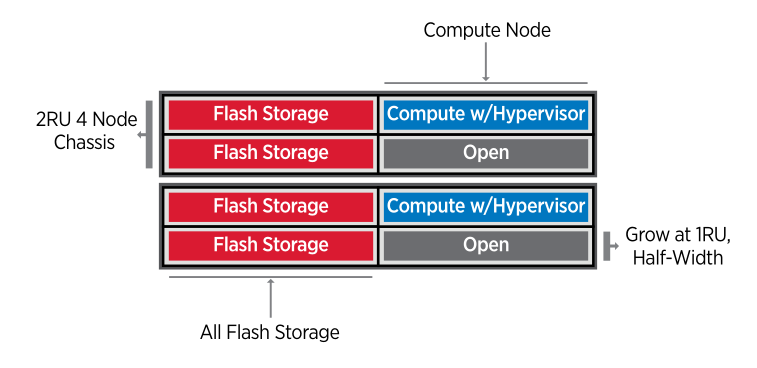

Minimum configuration NetApp HCI: two linings (chassis), in which the aggregate has four module for the flash memory, two computing modules and two empty compartments for additional modules

Appearance one module NetApp HCI reverse side

Specifications NetApp HCI

Effective Block Capacity ─ one of deduplication parameters depends on the data type. See here for more details .

The system will be available for order no earlier than this fall. For detailed information, please contact netapp@muk.ua .

This article gives a comparative description of traditional, convergent and hyperconverged systems related to data storage and processing. Scaling options for similar enterprise systems and Scale-Out architecture are considered. The description and characteristics of a new generation hyperconverged system for working with NetApp HCI data are given.

Converged and traditional systems

Converged systems are the result of natural progress, a departure from the traditional IT infrastructure, which has always been associated with the creation of separate and unrelated “bunkers” for data storage and processing.

For the inherited IT environment, as a rule, separate administrative groups (teams of specialists) were created for storage systems, for servers, and for network support. For example, a storage group was involved in the purchase, provision, and maintenance of a storage infrastructure. She also maintained relationships with storage vendors. The same was true for server groups and networks.

A converged system combines two or more of these components of an IT infrastructure as a pre-engineered solution. The best solutions of this class combine all three components that are closely related to each other by the corresponding software.

A clear advantage of such a solution is a relatively simple design for complex IT infrastructure. The idea is to create a single team for support, ─ the same way as focusing on a single seller to support all the necessary components.

Converged and Hyper Converged Systems

Hyperconverged systems (HCS) take the concept of “convergence” to a new level. Converged systems typically consist of separate components designed to work well together. HCS are typically modular solutions designed to scale by incorporating additional modules into the system. In fact, they perform the “scaling” of a large data storage system due to the controller software layer.

Typical architectures for traditional and hyperconverged storage systems and their management

The more storage devices are added, the greater their overall capacity and performance. Instead of expanding, adding more disks, memory or processors, simply add new stand-alone modules containing all the necessary resources. In addition to the simplified architecture, a simplified administration model is used since HCSs are managed through a single interface.

Vertical and horizontal scalability

Scalability is understood as the ability of certain types of systems to continue to function properly when their size (or volume) is changed to meet user needs. In some contexts, scalability is understood as the ability to satisfy larger or smaller user requests. In the context of storage, they often talk about satisfying the demand for a larger volume.

Schematic representation of vertical and horizontal scalability

Vertical scale-in ─ increasing the capabilities of existing hardware or software by adding new resources to a physical system ─ for example, computing power to a server to make it faster. In the case of storage systems, this means adding new controllers, disks, and input / output modules to the existing system as needed.

Horizontal scale out means connecting many autonomous units so that they work as a single and common logical unit. With horizontal scaling, for example, there can be many nodes that are geographically distant.

Scale-out storage architecture

According to the concept of scale out architecture, new groups of devices can be added to the system almost without limits, as required. Each device (or node) has some data storage capacity. It, in turn, can be dialed by disk devices and have its own computing power, ─ like the I / O bandwidth (input / output, I / O).

The inclusion of these resources means that, not only the capacity increases, but also the productivity of working with data. The scale of the system grows as nodes are added that are clustered. For this, x86 servers with special OS and storage systems connected through an external network are often used.

Users administer the cluster as a single system and manage data in the global namespace or in a distributed file system. Thus, they do not have to worry about the actual physical location of the data.

NetApp Enterprise-Scale HCI: A New Generation of Hyper-Converged Systems

Of course, in some cases, special and unique solutions (custom-made storage systems, network, servers) remain the best choice. However, other options ─ “as-a-service”, converged infrastructure (CI) and software-defined systems (SDS) quickly capture the IT infrastructure market, and this movement will dominate in the next few years.

The CI market is growing very fast as organizations tend to have less operational complexity and faster IT adoption. Hyper Converged Infrastructure (HCI) platforms emerged as a result of their natural development as organizations are already moving towards building next-generation data centers.

It is also expected that by 2020, 70% of the storage management functions will be automated and they will be included in the infrastructure platform. NetApp HCI represents the next generation of hyper-converged infrastructure and is the first HCI platform designed for enterprise applications.

The first generation of HCI solutions was more suitable for projects of a relatively small scale, ─ customers found that they had quite a few architectural limitations. They dealt with many aspects ─ performance, automation, mixed workloads, scaling, configuration flexibility, etc.

This, of course, contradicted the strategy of building the next generation information center, where “agility”, scaling, automation and predictability are mandatory requirements.

Introduction to NetApp HCI

NetApp HCI is the first enterprise-wide hyperconverged infrastructure solution. The solution provides a cloud-like infrastructure (storage resources, as well as computing and network) in an “agile”, scalable, easy-to-manage standard four-node unit.

The solution was developed based on the SolidFire flash storage system . Simple centralized management through the VMware vCenter Plug-in gives you complete control over your entire infrastructure through an intuitive user interface.

Integration with NetApp ONTAP Selectopens up a new range of deployment options ─ for both existing NetApp customers and those who want to upgrade their data center. NetApp HCI addresses the limitations of the current generation of HCI offerings in four key ways:

Guaranteed performance . Specialized platforms and great redundancy today are not an acceptable choice. NetApp HCI is a solution that provides "granular" control of each application, which "eliminates noisy neighbors." All applications are deployed on a common platform. At the same time, according to the company, eliminated more than 90% of traditional performance problems.

Flexibility and scalability. Previous generations of HCI had fixed resources, limited to a few host configurations. NetApp HCI now has independent storage and computing resources. As a result, NetApp HCI is well suited for configurations of any scale.

Automated infrastructure . The new NetApp Deployment Engine (NDE) utility eliminates most of the manual steps involved in deploying the infrastructure. VMware vCenter Plug-in makes management simple and intuitive. The corresponding API allows integration into top-level management systems, provides backup and disaster recovery. The system recovery time after failures does not exceed 30 minutes.

The NetApp Data Fabric. In the early generations of HCI platforms, there was a need to introduce new resource groups into IT infrastructure. Obviously, this is an ─ inefficient approach. NetApp HCI integrates into the data fabric NetApp Data Fabric. This increases data mobility, visibility and protection, allowing you to use the full potential of data ─ in a local (on-premise), public or hybrid cloud.

NetApp Data Fabric NetApp HCI Data Fabric

Deployment Model

is an out-of-the-box solution that is immediately ready to run in a Data Fabric environment. Thus, the user gets access to all his data that is in a public or hybrid cloud.

NetApp Data Fabric─ software-defined data management approach that allows enterprises to use incompatible data storage resources and provide continuous streaming data management between on-premises and cloud storage.

The products and services that make up NetApp Data Fabric are designed to give customers freedom. They must quickly and efficiently transfer data to / from the cloud, if necessary, restore cloud data and move it from the cloud of one provider to the cloud of another.

The foundation of NetApp Data Fabric is the Clustered Data ONTAP storage operating system. As part of the Data Fabric, NetApp has developed a dedicated cloud version of ONTAP for Cloud. It creates a virtual NetApp storage within the enterprise-wide public cloud.

This platform allows you to save data in the same way that it is implemented on internal NetApp systems. Continuity allows administrators to move data to where and when it is needed, without requiring any intermediate transformations. In turn, this actually allows you to expand the data center of the enterprise. due to the public cloud of the provider.

NetApp first introduced the Data Fabric concept in 2014 at its annual Insight conference. According to NetApp, this was in response to the need of its customers to get a unified view of enterprise data stored in many internal and external data centers. In particular, with Data Fabric, enterprises got easy access to their corporate data located in the public clouds of the Google Cloud Platform, Amazon Simple Storage Service (S3), Microsoft Azure and IBM SoftLayer.

Compliance with the requirements of the enterprise

One of the biggest problems in any data center is the predictability of the currently needed performance. This is especially true for “sprawling” applications and their workloads, which can sometimes be very intense.

Each enterprise uses a large number of enterprise applications that use the same IT infrastructure. Thus, there is always a potential danger that some application will interfere with the work of another.

In particular, for important applications, such as, for example, the virtual desktop infrastructure (Virtual Desktop Infrastructure, VDI) and database applications, the input / output mechanisms are quite different and tend to affect each other. HCI NetApp eliminates unpredictability by delivering the performance you need at every moment.

NetApp HCI is available for small, medium and large storage and computing configurations. The system can be expanded in increments of 1RU. As a result, enterprises can very accurately determine the resources they require and not have unused redundant hardware.

The main task of each IT department is to automate all common tasks, eliminating the risk of user errors associated with manual operations and freeing up resources to solve more priority and complex business tasks. NetApp Deployment Engine (NDE) eliminates most manual infrastructure deployment operations. At the same time, the vCenter plug-in makes VMware virtual management simple and intuitive.

Finally, the API set allows for seamless integration of data storage and processing subsystems into higher-level control systems, and also provides backup and disaster recovery.

NetApp HCI integrates and supports the following technologies :

- NetApp SolidFire Element OS ─ SolidFire Storage Operating System, allows you to quickly scale the storage node.

- Intuitive Deployment Engine ─ Deploys and builds software components for managing storage and computing resources.

- Robust Monitoring Agent ─ monitors HCI resources and storage devices, and sends information to vCenter and Active IQ.

- NetApp SolidFire vCenter Plugin ─ provides a comprehensive set of functions for managing data storage.

- NetApp SolidFire Management Node ─ VM for monitoring and upgrading HCI with support for remote management.

- VMware ESXi and vCenter v6 ─ host for virtualization and software management.

- NetApp Data Fabric ─ provides integration using SolidFire Element OS tools, including SnapMirror, SnapCenter, ONTAP Select file services, and AltaVault and StorageGRID backup tools.
