Fuzzy Name Search

Good afternoon. The problem with a search, service or product arises on the vast majority of sites. And for the most part, the implementation of such an opportunity is limited to searching by the exact word that was entered in the search line.

If there is time, and the customer wants a little more, then google the implementation of the most popular algorithm (which is the "Levenshtein distance") and enter it.

In this article, I will describe a highly modified algorithm based, however, on Levenshtein distances, and give examples of C # code for fuzzy search by names, for example: cafes, restaurants or certain services ... In general, everything that can be listed and has from one to a few words in its composition:

“Yandex”, “Mail”, “ProjectArmata”, “world of tanks”, “world of warships”, “world of warplanes”, etc.

For those who are unfamiliar with the algorithm, I recommend reading first the articles " Implementing Fuzzy Search ", " Fuzzy Search in Text and Dictionary " or its description in my presentation under the slider.

And it would seem - here it is! Everything was invented for us and there’s no need to do anything at all, but there is a problem ...

1) Taking a weight factor of 1 is always not quite right, because the probability of a typo depends on several specific factors: the distance of the keys on the keyboard, phonetic groups, and even the commonplace typing speed characters.

2) The idea of Levenshtein is intended to “find differences between words”, and not part of words, which is critical for dynamically generated results as you type letters.

3) The names of services have in their composition more than one word, then a person may simply not remember in which order they go.

We also take into account several more factors, such as:

• keyboard layout in another language

• transliteration of characters

These are the problems we will try to solve in this article.

To begin with, let's agree that all the words we will lead to one single register. In my version of the code, I chose lower case, this will be reflected in the references that we need (these references will be given in the course of the description). In the article itself, I will resort to various writing styles, for example, to CamelCase - ProjectArmata, but this is done solely for the convenience of human perception, from the point of view of analysis, the register is one (lower). And yet, we will take as a basis not the classic, but the optimized version of the code for finding the Levenshtein distance from here :

We will slightly correct it by removing the word order changes. For Levenshtein's algorithm, the word order does not matter, but for us it will be important for other reasons. As a result, we received the following:

We begin to improve the search algorithm by changing the weights. To begin with, the insertion and deletion coefficients are equal to 2.

That is, the lines will change:

And here is the line for calculating the symbol replacement coefficient, we have turned into the CostDistanceSymbol function:

And we will take into account two factors:

1) Keyboard distance

2) Phonetic groups

In this regard, for more convenient work with source and target objects, we will turn them into an object:

To do this, the following auxiliary directories will be required:

The ratio of the key codes of the Russian-language keyboard:

Key Code Ratio for English Keyboard

Thanks to these two directories, speaking in mathematical language, we can convert two different symbol spaces into one single universal.

And the following relationships will be valid on it:

private static SortedDictionary DistanceCodeKey = new SortedDictionary

Those. we take the keys standing around another key. You can verify this by the example of the figure:

Yes, I know that in addition to the QWERTY keyboard, there are other layouts, and then the keyboard distance table will not match, but we take the most common option.

If someone knows a better way, please write.

Now we are ready for the first stage - calculating the weight of the erroneous symbol in the CostDistanceSymbol function:

As it was before, if the symbols are the same, then the distance is 0:

If the key codes are the same, then the distance is also 0:

If you have a question why we are comparing key codes after comparing the characters, the answer is simple - we want the words “Water” and “Djlf” to be perceived the same way. And the characters that can be typed in different layouts, for example, ";" or "," regardless of layout, were also perceived the same way.

Further, we already look only at the codes how close the key codes are from each other. If close, then the distance is 1, and if not, then 2 (exactly the same weight as when inserting or removing):

In fact, such a small refinement covers a huge number of random errors from the wrong layout, ending with a miss by the key.

But aft of typing errors, a person simply may not know how words are spelled. For example: pike, vulgar. test.

Such words are not only in Russian, but also in English. We also need to consider such cases. For English words, we take as a basis the work of Zobel and Darth on phonetic groups .

“Aeiouy”, “bp”, “ckq”, “dt”, “lr”, “mn”, “gj”, “fpv”, “sxz”, “csz”

And for the Russian, I’ll compose on my own intuition:

“yy ”,“ Eee ”,“ aya ”,“ oye ”,“ uy ”,“ shch ”,“ oa ”,“ yo ”We

transform these phonetic groups into an object of the type:

This can be done by hand or how I write code, but the result will be the same. And now, after checking the key code, you can check the letters for entering the phonetic group with the same logic for finding errors as in the previous step:

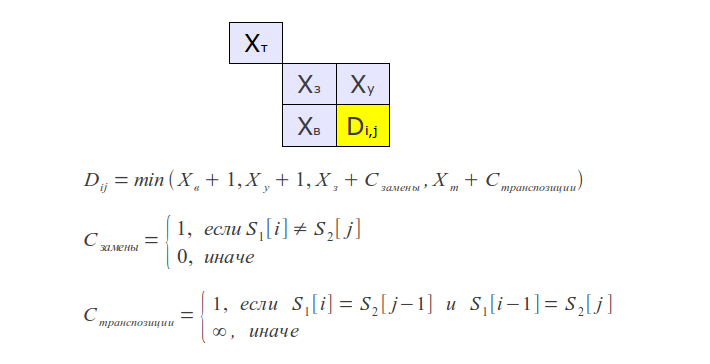

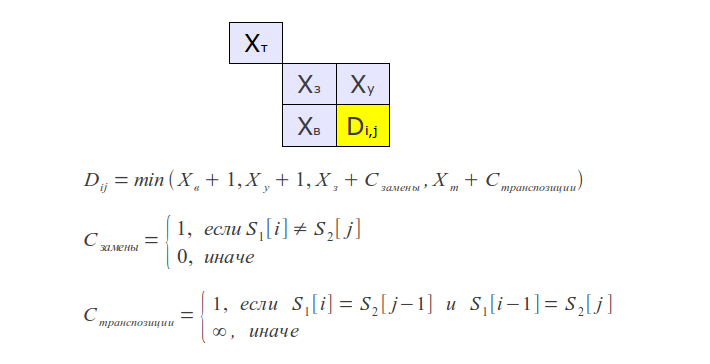

In addition to the above typos, there are typos of the "typing speed" of the text. This is when two consecutive letters of people, when typing, are confused. Moreover, this is such a common mistake that a certain mathematician Frederic Damerau finalized the Levenshtein algorithm by adding the operation of transposition (permutation) of characters.

From a software point of view, we add the following to the LevenshteinDistance function:

Thus, we have completed the first step on finalizing the code. We pass to the second point. Recall that Levenshtein’s idea is intended to “distinguish between words,” and not part of the words, which is critical for dynamic output as you type letters.

For example, in the directory there are two words:

• “ProjectArmata”

• “Yak”

Entering the query “Pro” in the search bar, we will get “Yak” at the output as a higher priority, due to the fact that replacing two characters and deleting one will be only 3 parrots (taking into account unit coefficients and the classical Levenshtein algorithm, without our modifications), and adding a part of the word "jectArmata" 10 parrots.

The most logical in this situation is to compare not the whole word, but only parts of the searched word with the string entered.

Because the search query consists of three characters “Pro”, we take the first three characters from the word “ProjectArmata” being compared, i.e. “Pro” and get a 100% match. For this particular case - perfect. But let's look at a few more options. Suppose we have the following set of words in our database:

• “Kommunalka”

• “Conveyor”

• “Colony”

• “Cosmetics” The

search query will look like this “Kom”. As a result, the words will have the following coincidence coefficients:

Kommunalka - 0

Conveyor - 1

Colony - 1

Cosmetics - 1

If everything is fine with the word “Kommunalka”, then the other three words look somehow uniform ... Although it is clear that perhaps the person described in one letter and he is looking for “Cosmetics”, not the Conveyor or Colony. And our task is to give him the most suitable result, and not just everything in a row. Moreover, as Damerau said, most mistakes are permutations of letters.

To eliminate such a mistake, we make a small revision:

Take not the first “n” letters, but “n + 1” letters, where n is the number of letters in the request. And then the coefficients at the request of “Com” will be as follows:

Kommunalka - 1

Cosmetics - 1

Conveyor - 2

Colony - 2

“Cosmetics” was corrected, but “Kommunalka” left ... But I like this option more for the following reasons. Usually, if a search is required, then initially the information is shown to the user in the form of a drop-down list, under the search line, as you type letters. The length of this drop-down list is limited by the size of 3-7 entries. As a result, if there are only three entries, then in the second version they will appear: “Kommunalka”, “Cosmetics” and “Conveyor” [as it is stupid first in issuance because of guid or simply because of creation date]. And in the first case there will be “Kommunalka”, “Conveyor”, “Colony” and no “Cosmetics”, because she was unlucky for other reasons ...

Of course, this problem has other solutions. For example, sort first by “n” letters, and then, take those groups of words whose indices match and additionally re-sort “n + 1” letters and you can again, and again ... Here it is already decided individually depending on the data in the database problem being solved, computing power.

Let’s not focus on solving the problem described above ... We are only in the middle of the road and I still have something to tell.

The next nuance in the correct search results comes to us from the times of the USSR. Then they liked to make names consisting of several words combined into one. Yes, and now it is relevant:

Consumer

Union GazPromBank

Russian Agricultural

Bank

ProjectArmata Bank URALSIB

, etc.

[ps I know that some of the names are written with a space, but I needed to pick up vivid examples]

But, if we follow our algorithm, then we always take the first "n + 1" letters from the word. And if a person types the word “Bank”, he will not receive an adequate extradition. Because we will compare the lines:

“BankU”

“Potre”

“GazPr”

“Rosset”

“Proje”

To find the word “bank” regardless of position, we will need to make a floating window by phrase and return the lowest coefficient:

As you can see, to the error obtained after calculating the Levenshtein distance, we add another value (i * 2 / 10.0). If with “i * 2” - everything is clear, this is an error inserting letters at the beginning of a word, like the classical algorithm for finding the Levenshtein distance, but why do we divide it by 10? The bottom line is that if we just leave “i * 2”, then the words that are dumb are shorter in length will fall into the search result and we will again leave the name of the banks. And therefore, the coefficient has to be divided by 10, which reduces this bias. Why exactly 10? For our base, it suited more or less normally, but I do not exclude that it can be divided into more. Everything depends on the length of the words and on how similar the words are. I will give the calculation of the most suitable coefficient a bit later.

With words, like a search unit, sort of sorted out, now we turn to phrases. And to begin with, again, a few examples:

• warface

• world of tanks

• world of warplanes

• world of ships

Let's understand what we basically need from a phrase search. We need to add missing words, remove unnecessary phrases, and also, so that they change places. Somewhere I already said this ... And, for sure, I remembered ... At the beginning of the article, when I talked about the words and the distance function of Levenshtein. In order not to waste your time, I’ll say right away that I couldn’t use it in its pure form, but, inspired by it, I managed to write a code applicable to phrases.

As with the implementation of the Levenshtein distance function, if one of the phrases is empty, then we return the error value equal to the insertion or deletion [depending on which side the empty phrase came from] of all letters.

Those. we summarize the number of letters in a phrase, multiply by 2 (this coefficient was chosen at the beginning of the article for letters) and multiply by 100. These 100 are the most contradictory coefficient in the whole algorithm. Why it will be needed will be presented more clearly below, and after that I will explain that in theory it needs to be calculated, and not just taken from the ceiling.

In this code, we compare each word from the record in the database with each of the search queries and take the lowest coefficients for each word. The total coefficient for the phrase is the following:

result + = minRangeWord * 100 + (Math.Abs (i - minIndex) / 10.0);

As you can see, the whole logic is the same as described above, we multiply the minimum coefficient for the word minRangeWord by our 100 and add it with a coefficient showing how close the word is to its most suitable position (Math.Abs (i - minIndex) / 10.0).

Multiplication by 100 is used to compensate for the additional coefficient that may arise when searching for the most suitable position of the search word in the previous step. As a result, this coefficient can be calculated as the largest between the phrase in the search line and all the words in the database. Not in phrases, but in words. But for this it is necessary to measure the Levenshtein distance with our modifications into the "empty", which is very wasteful in resources.

Those. run the GetRangeWord function and take the maximum value of the deviation of the phrase “i * 2” from the most suitable place. And after taking the highest value, bring it to the nearest larger tenfold number (10, 100, 1000, 10000, 100000, etc.). Thus we get two values:

The first is the value by which you want to divide the mixed word in the GetRangeWord function. Second, the value to be multiplied by minRangeWord to compensate for the previous offset. This way we get accurate similarity phrases. But in practice, you can neglect large deviations, and roughly estimate the average ... What I actually did.

In principle, everything. The main issues I have sorted out are only a small refinement of the "transliteration of characters." The difference between the search described above and the search in transliteration is that in the CostDistanceSymbol function we will not adjust the response values according to the key distances, because issuance in this case will not be correct.

It can also be noted that an adequate search result starts with 3 characters in the search string, if the character is less, it is better to use a more clumsy method with exact string matching.

Next, I will give the most complete code of everything described above, but first:

1) This code was written by me personally in my free time.

2) The algorithm was thought up by me personally in my free time.

Separately, I want to say thank you for the links and inspiration: Dmitry Panyushkin, Pavel Grigorenko.

The names that are given in the article are taken from open sources and belong to their owners. Not an advertisement.

Thanks to everyone who read. Criticism and advice are welcome.

Vitaly Alferov, 2017

If there is time, and the customer wants a little more, then google the implementation of the most popular algorithm (which is the "Levenshtein distance") and enter it.

In this article, I will describe a highly modified algorithm based, however, on Levenshtein distances, and give examples of C # code for fuzzy search by names, for example: cafes, restaurants or certain services ... In general, everything that can be listed and has from one to a few words in its composition:

“Yandex”, “Mail”, “ProjectArmata”, “world of tanks”, “world of warships”, “world of warplanes”, etc.

For those who are unfamiliar with the algorithm, I recommend reading first the articles " Implementing Fuzzy Search ", " Fuzzy Search in Text and Dictionary " or its description in my presentation under the slider.

Levenshtein distance algorithm

Levenshtein distance is the most popular algorithm in the network to find the degree of difference between two words. Namely, what is the minimum number of actions that must be performed to get the second from the first line.

There are only three such actions:

• Deletion

• Insert

• Replacement

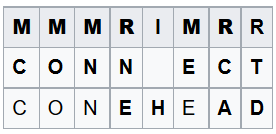

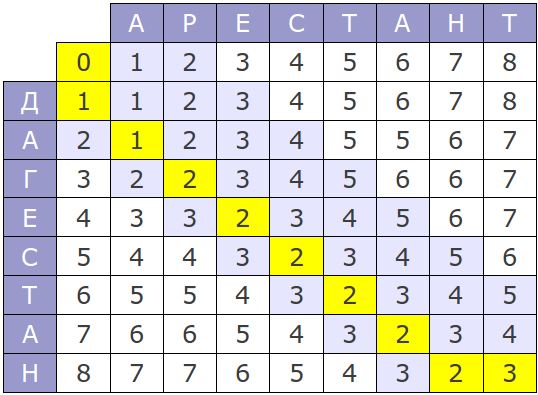

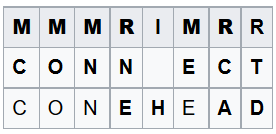

For example, for 2 rows “CONNECT” and “CONEHEAD”, you can build the following conversion table:

In theory, the prices of operations may depend on the type of operation (insert, delete, replace) and / or from the characters involved in it. But in the general case:

w (a, ε) is the price of deleting the character “a”, equal to 1

w (ε, b) is the price of inserting the character “b”, is 1

w (a, b) is the price of replacing the character “a” on the symbol "b", equal to 1

Everywhere they put units.

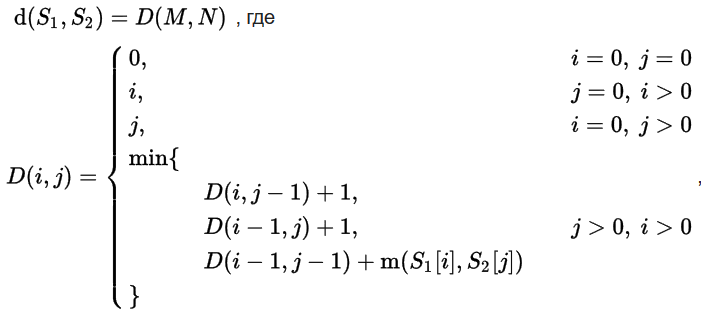

And from the point of view of mathematics, the calculation looks like this.

Let S1 and S2 be two lines (of length M and N, respectively) over some alphabet, then the editorial distance (Levenshtein distance) d (S1, S2) can be calculated using the following recurrence formula:

Two mathematicians Wanger and Fisher made the default implementation of the Levenshtein algorithm [not to be confused with a chess player].

And it looks like this in C #:

Taken from here .

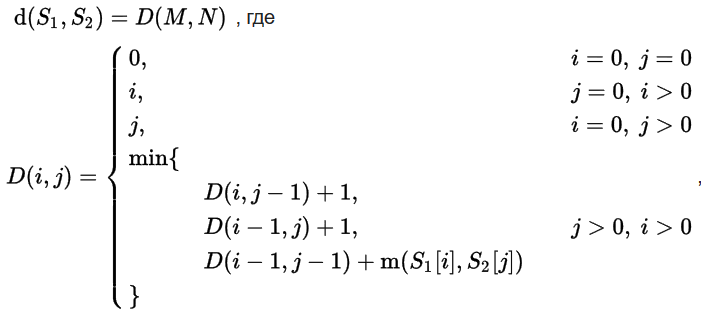

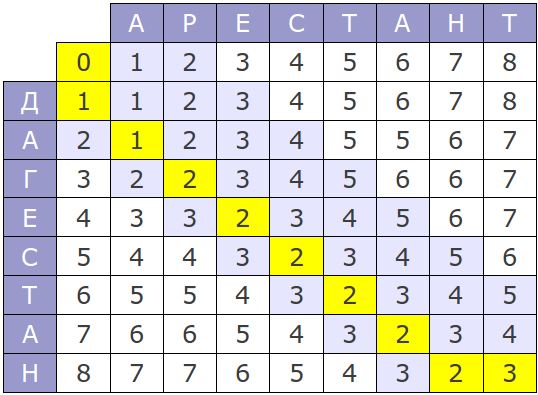

And visually the operation of the algorithm for the words “Arestant” and “Dagestan” is represented as follows:

The extreme right-bottom corner of the matrix shows how much the words differ. From the matrix, that in this particular case, the difference between the words is 3 conditional parrots.

If it’s not clear, then we’ll look at these words in a different way:

_ A R E S T A N T

D A G E S T A N _

Thus, it turns out that in order to turn the words “Arestant” and “Dagestan”, one letter “ D ”add, replace one letter“ P ”with“ G ”and delete the letter“ T ”. Because all actions have a weight of 1, it turns out the difference in words is 3 parrots.

If the words coincide completely, then the distance will be 0. That's the whole theory, everything ingenious is simple.

There are only three such actions:

• Deletion

• Insert

• Replacement

For example, for 2 rows “CONNECT” and “CONEHEAD”, you can build the following conversion table:

In theory, the prices of operations may depend on the type of operation (insert, delete, replace) and / or from the characters involved in it. But in the general case:

w (a, ε) is the price of deleting the character “a”, equal to 1

w (ε, b) is the price of inserting the character “b”, is 1

w (a, b) is the price of replacing the character “a” on the symbol "b", equal to 1

Everywhere they put units.

And from the point of view of mathematics, the calculation looks like this.

Let S1 and S2 be two lines (of length M and N, respectively) over some alphabet, then the editorial distance (Levenshtein distance) d (S1, S2) can be calculated using the following recurrence formula:

Two mathematicians Wanger and Fisher made the default implementation of the Levenshtein algorithm [not to be confused with a chess player].

And it looks like this in C #:

private Int32 levenshtein(String a, String b) {

if (string.IsNullOrEmpty(a))

{

if (!string.IsNullOrEmpty(b))

{

return b.Length;

}

return 0;

}

if (string.IsNullOrEmpty(b))

{

if (!string.IsNullOrEmpty(a))

{

return a.Length;

}

return 0;

}

Int32 cost;

Int32[,] d = new int[a.Length + 1, b.Length + 1];

Int32 min1;

Int32 min2;

Int32 min3;

for (Int32 i = 0; i <= d.GetUpperBound(0); i += 1)

{

d[i, 0] = i;

}

for (Int32 i = 0; i <= d.GetUpperBound(1); i += 1)

{

d[0, i] = i;

}

for (Int32 i = 1; i <= d.GetUpperBound(0); i += 1)

{

for (Int32 j = 1; j <= d.GetUpperBound(1); j += 1)

{

cost = Convert.ToInt32(!(a[i-1] == b[j - 1]));

min1 = d[i - 1, j] + 1;

min2 = d[i, j - 1] + 1;

min3 = d[i - 1, j - 1] + cost;

d[i, j] = Math.Min(Math.Min(min1, min2), min3);

}

}

return d[d.GetUpperBound(0), d.GetUpperBound(1)];

}Taken from here .

And visually the operation of the algorithm for the words “Arestant” and “Dagestan” is represented as follows:

The extreme right-bottom corner of the matrix shows how much the words differ. From the matrix, that in this particular case, the difference between the words is 3 conditional parrots.

If it’s not clear, then we’ll look at these words in a different way:

_ A R E S T A N T

D A G E S T A N _

Thus, it turns out that in order to turn the words “Arestant” and “Dagestan”, one letter “ D ”add, replace one letter“ P ”with“ G ”and delete the letter“ T ”. Because all actions have a weight of 1, it turns out the difference in words is 3 parrots.

If the words coincide completely, then the distance will be 0. That's the whole theory, everything ingenious is simple.

And it would seem - here it is! Everything was invented for us and there’s no need to do anything at all, but there is a problem ...

1) Taking a weight factor of 1 is always not quite right, because the probability of a typo depends on several specific factors: the distance of the keys on the keyboard, phonetic groups, and even the commonplace typing speed characters.

2) The idea of Levenshtein is intended to “find differences between words”, and not part of words, which is critical for dynamically generated results as you type letters.

3) The names of services have in their composition more than one word, then a person may simply not remember in which order they go.

We also take into account several more factors, such as:

• keyboard layout in another language

• transliteration of characters

These are the problems we will try to solve in this article.

To begin with, let's agree that all the words we will lead to one single register. In my version of the code, I chose lower case, this will be reflected in the references that we need (these references will be given in the course of the description). In the article itself, I will resort to various writing styles, for example, to CamelCase - ProjectArmata, but this is done solely for the convenience of human perception, from the point of view of analysis, the register is one (lower). And yet, we will take as a basis not the classic, but the optimized version of the code for finding the Levenshtein distance from here :

We will slightly correct it by removing the word order changes. For Levenshtein's algorithm, the word order does not matter, but for us it will be important for other reasons. As a result, we received the following:

public int LevenshteinDistance(string source, string target){

if(String.IsNullOrEmpty(source)){

if(String.IsNullOrEmpty(target)) return 0;

return target.Length;

}

if(String.IsNullOrEmpty(target)) return source.Length;

var m = target.Length;

var n = source.Length;

var distance = new int[2, m + 1];

// Initialize the distance 'matrix'

for(var j = 1; j <= m; j++) distance[0, j] = j;

var currentRow = 0;

for(var i = 1; i <= n; ++i){

currentRow = i & 1;

distance[currentRow, 0] = i;

var previousRow = currentRow ^ 1;

for(var j = 1; j <= m; j++){

var cost = (target[j - 1] == source[i - 1] ? 0 : 1);

distance[currentRow, j] = Math.Min(Math.Min(

distance[previousRow, j] + 1,

distance[currentRow, j - 1] + 1),

distance[previousRow, j - 1] + cost);

}

}

return distance[currentRow, m];

}We begin to improve the search algorithm by changing the weights. To begin with, the insertion and deletion coefficients are equal to 2.

That is, the lines will change:

for(var j = 1; j <= m; j++) distance[0, j] = j * 2;

...

distance[currentRow, 0] = i * 2;

...

distance[previousRow, j] + 2

...

distance[currentRow, j - 1] + 2And here is the line for calculating the symbol replacement coefficient, we have turned into the CostDistanceSymbol function:

var cost = (target[j - 1] == source[i - 1] ? 0 : 1);And we will take into account two factors:

1) Keyboard distance

2) Phonetic groups

In this regard, for more convenient work with source and target objects, we will turn them into an object:

class Word {

//Исходная поисковая строка

public string Text { get; set; }

//Коды клавиш клавиатуры

public List Codes { get; set; } = new List();

}

To do this, the following auxiliary directories will be required:

The ratio of the key codes of the Russian-language keyboard:

private static SortedDictionary CodeKeysRus = new SortedDictionary

{

{ 'ё' , 192 },

{ '1' , 49 },

{ '2' , 50 },

...

{ '-' , 189 },

{ '=' , 187 },

{ 'й' , 81 },

{ 'ц' , 87 },

{ 'у' , 69 },

...

{ '_' , 189 },

{ '+' , 187 },

{ ',' , 191 },

} Key Code Ratio for English Keyboard

private static SortedDictionary CodeKeysEng = new SortedDictionary

{

{ '`', 192 },

{ '1', 49 },

{ '2', 50 },

...

{ '-', 189 },

{ '=', 187 },

{ 'q', 81 },

{ 'w', 87 },

{ 'e', 69 },

{ 'r', 82 },

...

{ '<', 188 },

{ '>', 190 },

{ '?', 191 },

}; Thanks to these two directories, speaking in mathematical language, we can convert two different symbol spaces into one single universal.

And the following relationships will be valid on it:

private static SortedDictionary

List>

{

/* '`' */ { 192, new List(){ 49 }},

/* '1' */ { 49 , new List(){ 50, 87, 81 }},

/* '2' */ { 50 , new List(){ 49, 81, 87, 69, 51 }},

...

/* '-' */ { 189, new List(){ 48, 80, 219, 221, 187 }},

/* '+' */ { 187, new List(){ 189, 219, 221 }},

/* 'q' */ { 81 , new List(){ 49, 50, 87, 83, 65 }},

/* 'w' */ { 87 , new List(){ 49, 81, 65, 83, 68, 69, 51, 50 }},

...

/* '>' */ { 188, new List(){ 77, 74, 75, 76, 190 }},

/* '<' */ { 190, new List(){ 188, 75, 76, 186, 191 }},

/* '?' */ { 191, new List(){ 190, 76, 186, 222 }},

}; Those. we take the keys standing around another key. You can verify this by the example of the figure:

Yes, I know that in addition to the QWERTY keyboard, there are other layouts, and then the keyboard distance table will not match, but we take the most common option.

If someone knows a better way, please write.

Now we are ready for the first stage - calculating the weight of the erroneous symbol in the CostDistanceSymbol function:

As it was before, if the symbols are the same, then the distance is 0:

if (source.Text[sourcePosition] == target.Text[targetPosition])

return 0;If the key codes are the same, then the distance is also 0:

if (source.Codes[sourcePosition] != 0

&& target.Codes[targetPosition] == target.Codes[targetPosition])

return 0;If you have a question why we are comparing key codes after comparing the characters, the answer is simple - we want the words “Water” and “Djlf” to be perceived the same way. And the characters that can be typed in different layouts, for example, ";" or "," regardless of layout, were also perceived the same way.

Further, we already look only at the codes how close the key codes are from each other. If close, then the distance is 1, and if not, then 2 (exactly the same weight as when inserting or removing):

int resultWeight = 0;

List nearKeys;

if (!DistanceCodeKey.TryGetValue(source.Codes[sourcePosition], out nearKeys))

resultWeight = 2;

else

resultWeight = nearKeys.Contains(target.Codes[searchPosition]) ? 1 : 2; In fact, such a small refinement covers a huge number of random errors from the wrong layout, ending with a miss by the key.

But aft of typing errors, a person simply may not know how words are spelled. For example: pike, vulgar. test.

Such words are not only in Russian, but also in English. We also need to consider such cases. For English words, we take as a basis the work of Zobel and Darth on phonetic groups .

“Aeiouy”, “bp”, “ckq”, “dt”, “lr”, “mn”, “gj”, “fpv”, “sxz”, “csz”

And for the Russian, I’ll compose on my own intuition:

“yy ”,“ Eee ”,“ aya ”,“ oye ”,“ uy ”,“ shch ”,“ oa ”,“ yo ”We

transform these phonetic groups into an object of the type:

PhoneticGroupsEng = {

{ ‘a’, { ‘e’, ‘i’, ‘o’, ‘u’, ‘y’} },

{ ‘e’, { ‘a’, ‘i’, ‘o’, ‘u’, ‘y’} }

...

}

This can be done by hand or how I write code, but the result will be the same. And now, after checking the key code, you can check the letters for entering the phonetic group with the same logic for finding errors as in the previous step:

List phoneticGroups;

if (PhoneticGroupsRus.TryGetValue(target.Text[targetPosition], out phoneticGroups))

resultWeight = Math.Min(resultWeight, phoneticGroups.Contains(source.Text[sourcePosition]) ? 1 : 2);

if (PhoneticGroupsEng.TryGetValue(target.Text[targetPosition], out phoneticGroups))

resultWeight = Math.Min(resultWeight, phoneticGroups.Contains(source.Text[sourcePosition]) ? 1 : 2); In addition to the above typos, there are typos of the "typing speed" of the text. This is when two consecutive letters of people, when typing, are confused. Moreover, this is such a common mistake that a certain mathematician Frederic Damerau finalized the Levenshtein algorithm by adding the operation of transposition (permutation) of characters.

From a software point of view, we add the following to the LevenshteinDistance function:

if (i > 1 && j > 1

&& source.Text[i - 1] == target.Text[j - 2]

&& source.Text[i - 2] == target.Text[j - 1]) {

distance[currentRow, j] = Math.Min(distance[currentRow, j], distance[(i - 2) % 3, j - 2] + 2);

}

Remark

The optimized code that we took as a basis has the following form of initializing the distance matrix: var distance = new int [2, m + 1];

Therefore, this section of the code “distance [(i - 2)% 3, ...” will not work in its current form, I will give the correct version of the function at the end of the article.

Therefore, this section of the code “distance [(i - 2)% 3, ...” will not work in its current form, I will give the correct version of the function at the end of the article.

Thus, we have completed the first step on finalizing the code. We pass to the second point. Recall that Levenshtein’s idea is intended to “distinguish between words,” and not part of the words, which is critical for dynamic output as you type letters.

For example, in the directory there are two words:

• “ProjectArmata”

• “Yak”

Entering the query “Pro” in the search bar, we will get “Yak” at the output as a higher priority, due to the fact that replacing two characters and deleting one will be only 3 parrots (taking into account unit coefficients and the classical Levenshtein algorithm, without our modifications), and adding a part of the word "jectArmata" 10 parrots.

The most logical in this situation is to compare not the whole word, but only parts of the searched word with the string entered.

Because the search query consists of three characters “Pro”, we take the first three characters from the word “ProjectArmata” being compared, i.e. “Pro” and get a 100% match. For this particular case - perfect. But let's look at a few more options. Suppose we have the following set of words in our database:

• “Kommunalka”

• “Conveyor”

• “Colony”

• “Cosmetics” The

search query will look like this “Kom”. As a result, the words will have the following coincidence coefficients:

Kommunalka - 0

Conveyor - 1

Colony - 1

Cosmetics - 1

If everything is fine with the word “Kommunalka”, then the other three words look somehow uniform ... Although it is clear that perhaps the person described in one letter and he is looking for “Cosmetics”, not the Conveyor or Colony. And our task is to give him the most suitable result, and not just everything in a row. Moreover, as Damerau said, most mistakes are permutations of letters.

To eliminate such a mistake, we make a small revision:

Take not the first “n” letters, but “n + 1” letters, where n is the number of letters in the request. And then the coefficients at the request of “Com” will be as follows:

Kommunalka - 1

Cosmetics - 1

Conveyor - 2

Colony - 2

“Cosmetics” was corrected, but “Kommunalka” left ... But I like this option more for the following reasons. Usually, if a search is required, then initially the information is shown to the user in the form of a drop-down list, under the search line, as you type letters. The length of this drop-down list is limited by the size of 3-7 entries. As a result, if there are only three entries, then in the second version they will appear: “Kommunalka”, “Cosmetics” and “Conveyor” [as it is stupid first in issuance because of guid or simply because of creation date]. And in the first case there will be “Kommunalka”, “Conveyor”, “Colony” and no “Cosmetics”, because she was unlucky for other reasons ...

Of course, this problem has other solutions. For example, sort first by “n” letters, and then, take those groups of words whose indices match and additionally re-sort “n + 1” letters and you can again, and again ... Here it is already decided individually depending on the data in the database problem being solved, computing power.

Let’s not focus on solving the problem described above ... We are only in the middle of the road and I still have something to tell.

The next nuance in the correct search results comes to us from the times of the USSR. Then they liked to make names consisting of several words combined into one. Yes, and now it is relevant:

Consumer

Union GazPromBank

Russian Agricultural

Bank

ProjectArmata Bank URALSIB

, etc.

[ps I know that some of the names are written with a space, but I needed to pick up vivid examples]

But, if we follow our algorithm, then we always take the first "n + 1" letters from the word. And if a person types the word “Bank”, he will not receive an adequate extradition. Because we will compare the lines:

“BankU”

“Potre”

“GazPr”

“Rosset”

“Proje”

To find the word “bank” regardless of position, we will need to make a floating window by phrase and return the lowest coefficient:

double GetRangeWord(Word source, Word target) {

double rangeWord = double.MaxValue;

Word croppedSource = new Word();

int length = Math.Min(source.Text.Length, target.Text.Length + 1);

for (int i = 0; i <= source.Text.Length - length; i++) {

croppedSource.Text = target.Text.Substring(i, length);

croppedSource.Codes = target.Codes.Skip(i).Take(length).ToList();

rangeWord = Math.Min(LevenshteinDistance(croppedSource, target) + (i * 2 / 10.0), rangeWord);

}

return rangeWord;

}As you can see, to the error obtained after calculating the Levenshtein distance, we add another value (i * 2 / 10.0). If with “i * 2” - everything is clear, this is an error inserting letters at the beginning of a word, like the classical algorithm for finding the Levenshtein distance, but why do we divide it by 10? The bottom line is that if we just leave “i * 2”, then the words that are dumb are shorter in length will fall into the search result and we will again leave the name of the banks. And therefore, the coefficient has to be divided by 10, which reduces this bias. Why exactly 10? For our base, it suited more or less normally, but I do not exclude that it can be divided into more. Everything depends on the length of the words and on how similar the words are. I will give the calculation of the most suitable coefficient a bit later.

With words, like a search unit, sort of sorted out, now we turn to phrases. And to begin with, again, a few examples:

• warface

• world of tanks

• world of warplanes

• world of ships

Let's understand what we basically need from a phrase search. We need to add missing words, remove unnecessary phrases, and also, so that they change places. Somewhere I already said this ... And, for sure, I remembered ... At the beginning of the article, when I talked about the words and the distance function of Levenshtein. In order not to waste your time, I’ll say right away that I couldn’t use it in its pure form, but, inspired by it, I managed to write a code applicable to phrases.

As with the implementation of the Levenshtein distance function, if one of the phrases is empty, then we return the error value equal to the insertion or deletion [depending on which side the empty phrase came from] of all letters.

if (!source.Words.Any()) {

if (!search.Words.Any())

return 0;

return search.Words.Sum(w => w.Text.Length) * 2 * 100;

}

if (!search.Words.Any()) {

return source.Words.Sum(w => w.Text.Length) * 2 * 100;

}Those. we summarize the number of letters in a phrase, multiply by 2 (this coefficient was chosen at the beginning of the article for letters) and multiply by 100. These 100 are the most contradictory coefficient in the whole algorithm. Why it will be needed will be presented more clearly below, and after that I will explain that in theory it needs to be calculated, and not just taken from the ceiling.

double result = 0;

for (int i = 0; i < search.Words.Count; i++) {

double minRangeWord = double.MaxValue;

int minIndex = 0;

for (int j = 0; j < source.Words.Count; j++) {

double currentRangeWord = GetRangeWord(source.Words[j], search.Words[i], translation);

if (currentRangeWord < minRangeWord) {

minRangeWord = currentRangeWord;

minIndex = j;

}

}

result += minRangeWord * 100 + (Math.Abs(i - minIndex) / 10.0);

}

return result;

}In this code, we compare each word from the record in the database with each of the search queries and take the lowest coefficients for each word. The total coefficient for the phrase is the following:

result + = minRangeWord * 100 + (Math.Abs (i - minIndex) / 10.0);

As you can see, the whole logic is the same as described above, we multiply the minimum coefficient for the word minRangeWord by our 100 and add it with a coefficient showing how close the word is to its most suitable position (Math.Abs (i - minIndex) / 10.0).

Multiplication by 100 is used to compensate for the additional coefficient that may arise when searching for the most suitable position of the search word in the previous step. As a result, this coefficient can be calculated as the largest between the phrase in the search line and all the words in the database. Not in phrases, but in words. But for this it is necessary to measure the Levenshtein distance with our modifications into the "empty", which is very wasteful in resources.

Those. run the GetRangeWord function and take the maximum value of the deviation of the phrase “i * 2” from the most suitable place. And after taking the highest value, bring it to the nearest larger tenfold number (10, 100, 1000, 10000, 100000, etc.). Thus we get two values:

The first is the value by which you want to divide the mixed word in the GetRangeWord function. Second, the value to be multiplied by minRangeWord to compensate for the previous offset. This way we get accurate similarity phrases. But in practice, you can neglect large deviations, and roughly estimate the average ... What I actually did.

In principle, everything. The main issues I have sorted out are only a small refinement of the "transliteration of characters." The difference between the search described above and the search in transliteration is that in the CostDistanceSymbol function we will not adjust the response values according to the key distances, because issuance in this case will not be correct.

It can also be noted that an adequate search result starts with 3 characters in the search string, if the character is less, it is better to use a more clumsy method with exact string matching.

Next, I will give the most complete code of everything described above, but first:

1) This code was written by me personally in my free time.

2) The algorithm was thought up by me personally in my free time.

Separately, I want to say thank you for the links and inspiration: Dmitry Panyushkin, Pavel Grigorenko.

The names that are given in the article are taken from open sources and belong to their owners. Not an advertisement.

Thanks to everyone who read. Criticism and advice are welcome.

Full code

public class DistanceAlferov {

class Word {

public string Text { get; set; }

public List Codes { get; set; } = new List();

}

class AnalizeObject {

public string Origianl { get; set; }

public List Words { get; set; } = new List();

}

class LanguageSet {

public AnalizeObject Rus { get; set; } = new AnalizeObject();

public AnalizeObject Eng { get; set; } = new AnalizeObject();

}

List Samples { get; set; } = new List();

public void SetData(List> datas) {

List> codeKeys = CodeKeysRus.Concat(CodeKeysEng).ToList();

foreach (var data in datas) {

LanguageSet languageSet = new LanguageSet();

languageSet.Rus.Origianl = data.Item1;

if (data.Item1.Length > 0) {

languageSet.Rus.Words = data.Item1.Split(' ').Select(w => new Word() {

Text = w.ToLower(),

Codes = GetKeyCodes(codeKeys, w)

}).ToList();

}

languageSet.Eng.Origianl = data.Item2;

if (data.Item2.Length > 0) {

languageSet.Eng.Words = data.Item2.Split(' ').Select(w => new Word() {

Text = w.ToLower(),

Codes = GetKeyCodes(codeKeys, w)

}).ToList();

}

this.Samples.Add(languageSet);

}

}

public List> Search(string targetStr) {

List> codeKeys = CodeKeysRus.Concat(CodeKeysEng).ToList();

AnalizeObject originalSearchObj = new AnalizeObject();

if (targetStr.Length > 0) {

originalSearchObj.Words = targetStr.Split(' ').Select(w => new Word() {

Text = w.ToLower(),

Codes = GetKeyCodes(codeKeys, w)

}).ToList();

}

AnalizeObject translationSearchObj = new AnalizeObject();

if (targetStr.Length > 0) {

translationSearchObj.Words = targetStr.Split(' ').Select(w => {

string translateStr = Transliterate(w.ToLower(), Translit_Ru_En);

return new Word() {

Text = translateStr,

Codes = GetKeyCodes(codeKeys, translateStr)

};

}).ToList();

}

var result = new List>();

foreach (LanguageSet sampl in Samples) {

int languageType = 1;

double cost = GetRangePhrase(sampl.Rus, originalSearchObj, false);

double tempCost = GetRangePhrase(sampl.Eng, originalSearchObj, false);

if (cost > tempCost) {

cost = tempCost;

languageType = 3;

}

// Проверка транслетерационной строки

tempCost = GetRangePhrase(sampl.Rus, translationSearchObj, true);

if (cost > tempCost) {

cost = tempCost;

languageType = 2;

}

tempCost = GetRangePhrase(sampl.Eng, translationSearchObj, true);

if (cost > tempCost) {

cost = tempCost;

languageType = 3;

}

result.Add(new Tuple(sampl.Rus.Origianl, sampl.Eng.Origianl, cost, languageType));

}

return result;

}

private double GetRangePhrase(AnalizeObject source, AnalizeObject search, bool translation) {

if (!source.Words.Any()) {

if (!search.Words.Any())

return 0;

return search.Words.Sum(w => w.Text.Length) * 2 * 100;

}

if (!search.Words.Any()) {

return source.Words.Sum(w => w.Text.Length) * 2 * 100;

}

double result = 0;

for (int i = 0; i < search.Words.Count; i++) {

double minRangeWord = double.MaxValue;

int minIndex = 0;

for (int j = 0; j < source.Words.Count; j++) {

double currentRangeWord = GetRangeWord(source.Words[j], search.Words[i], translation);

if (currentRangeWord < minRangeWord) {

minRangeWord = currentRangeWord;

minIndex = j;

}

}

result += minRangeWord * 100 + (Math.Abs(i - minIndex) / 10.0);

}

return result;

}

private double GetRangeWord(Word source, Word target, bool translation) {

double minDistance = double.MaxValue;

Word croppedSource = new Word();

int length = Math.Min(source.Text.Length, target.Text.Length + 1);

for (int i = 0; i <= source.Text.Length - length; i++) {

croppedSource.Text = source.Text.Substring(i, length);

croppedSource.Codes = source.Codes.Skip(i).Take(length).ToList();

minDistance = Math.Min(minDistance, LevenshteinDistance(croppedSource, target, croppedSource.Text.Length == source.Text.Length, translation) + (i * 2 / 10.0));

}

return minDistance;

}

private int LevenshteinDistance(Word source, Word target, bool fullWord, bool translation) {

if (String.IsNullOrEmpty(source.Text)) {

if (String.IsNullOrEmpty(target.Text))

return 0;

return target.Text.Length * 2;

}

if (String.IsNullOrEmpty(target.Text))

return source.Text.Length * 2;

int n = source.Text.Length;

int m = target.Text.Length;

//TODO Убрать в параметры (для оптимизации)

int[,] distance = new int[3, m + 1];

// Initialize the distance 'matrix'

for (var j = 1; j <= m; j++)

distance[0, j] = j * 2;

var currentRow = 0;

for (var i = 1; i <= n; ++i) {

currentRow = i % 3;

var previousRow = (i - 1) % 3;

distance[currentRow, 0] = i * 2;

for (var j = 1; j <= m; j++) {

distance[currentRow, j] = Math.Min(Math.Min(

distance[previousRow, j] + ((!fullWord && i == n) ? 2 - 1 : 2),

distance[currentRow, j - 1] + ((!fullWord && i == n) ? 2 - 1 : 2)),

distance[previousRow, j - 1] + CostDistanceSymbol(source, i - 1, target, j - 1, translation));

if (i > 1 && j > 1 && source.Text[i - 1] == target.Text[j - 2]

&& source.Text[i - 2] == target.Text[j - 1]) {

distance[currentRow, j] = Math.Min(distance[currentRow, j], distance[(i - 2) % 3, j - 2] + 2);

}

}

}

return distance[currentRow, m];

}

private int CostDistanceSymbol(Word source, int sourcePosition, Word search, int searchPosition, bool translation) {

if (source.Text[sourcePosition] == search.Text[searchPosition])

return 0;

if (translation)

return 2;

if (source.Codes[sourcePosition] != 0 && source.Codes[sourcePosition] == search.Codes[searchPosition])

return 0;

int resultWeight = 0;

List nearKeys;

if (!DistanceCodeKey.TryGetValue(source.Codes[sourcePosition], out nearKeys))

resultWeight = 2;

else

resultWeight = nearKeys.Contains(search.Codes[searchPosition]) ? 1 : 2;

List phoneticGroups;

if (PhoneticGroupsRus.TryGetValue(search.Text[searchPosition], out phoneticGroups))

resultWeight = Math.Min(resultWeight, phoneticGroups.Contains(source.Text[sourcePosition]) ? 1 : 2);

if (PhoneticGroupsEng.TryGetValue(search.Text[searchPosition], out phoneticGroups))

resultWeight = Math.Min(resultWeight, phoneticGroups.Contains(source.Text[sourcePosition]) ? 1 : 2);

return resultWeight;

}

private List GetKeyCodes(List> codeKeys, string word) {

return word.ToLower().Select(ch => codeKeys.FirstOrDefault(ck => ck.Key == ch).Value).ToList();

}

private string Transliterate(string text, Dictionary cultureFrom) {

IEnumerable translateText = text.SelectMany(t => {

string translateChar;

if (cultureFrom.TryGetValue(t, out translateChar))

return translateChar;

return t.ToString();

});

return string.Concat(translateText);

}

#region Блок Фонетических групп

static Dictionary> PhoneticGroupsRus = new Dictionary>();

static Dictionary> PhoneticGroupsEng = new Dictionary>();

#endregion

static DistanceAlferov() {

SetPhoneticGroups(PhoneticGroupsRus, new List() { "ыий", "эе", "ая", "оёе", "ую", "шщ", "оа" });

SetPhoneticGroups(PhoneticGroupsEng, new List() { "aeiouy", "bp", "ckq", "dt", "lr", "mn", "gj", "fpv", "sxz", "csz" });

}

private static void SetPhoneticGroups(Dictionary> resultPhoneticGroups, List phoneticGroups) {

foreach (string group in phoneticGroups)

foreach (char symbol in group)

if (!resultPhoneticGroups.ContainsKey(symbol))

resultPhoneticGroups.Add(symbol, phoneticGroups.Where(pg => pg.Contains(symbol)).SelectMany(pg => pg).Distinct().Where(ch => ch != symbol).ToList());

}

#region Блок для сопоставления клавиатуры

///

/// Близость кнопок клавиатуры

///

private static Dictionary> DistanceCodeKey = new Dictionary>

{

/* '`' */ { 192 , new List(){ 49 }},

/* '1' */ { 49 , new List(){ 50, 87, 81 }},

/* '2' */ { 50 , new List(){ 49, 81, 87, 69, 51 }},

/* '3' */ { 51 , new List(){ 50, 87, 69, 82, 52 }},

/* '4' */ { 52 , new List(){ 51, 69, 82, 84, 53 }},

/* '5' */ { 53 , new List(){ 52, 82, 84, 89, 54 }},

/* '6' */ { 54 , new List(){ 53, 84, 89, 85, 55 }},

/* '7' */ { 55 , new List(){ 54, 89, 85, 73, 56 }},

/* '8' */ { 56 , new List(){ 55, 85, 73, 79, 57 }},

/* '9' */ { 57 , new List(){ 56, 73, 79, 80, 48 }},

/* '0' */ { 48 , new List(){ 57, 79, 80, 219, 189 }},

/* '-' */ { 189 , new List(){ 48, 80, 219, 221, 187 }},

/* '+' */ { 187 , new List(){ 189, 219, 221 }},

/* 'q' */ { 81 , new List(){ 49, 50, 87, 83, 65 }},

/* 'w' */ { 87 , new List(){ 49, 81, 65, 83, 68, 69, 51, 50 }},

/* 'e' */ { 69 , new List(){ 50, 87, 83, 68, 70, 82, 52, 51 }},

/* 'r' */ { 82 , new List(){ 51, 69, 68, 70, 71, 84, 53, 52 }},

/* 't' */ { 84 , new List(){ 52, 82, 70, 71, 72, 89, 54, 53 }},

/* 'y' */ { 89 , new List(){ 53, 84, 71, 72, 74, 85, 55, 54 }},

/* 'u' */ { 85 , new List(){ 54, 89, 72, 74, 75, 73, 56, 55 }},

/* 'i' */ { 73 , new List(){ 55, 85, 74, 75, 76, 79, 57, 56 }},

/* 'o' */ { 79 , new List(){ 56, 73, 75, 76, 186, 80, 48, 57 }},

/* 'p' */ { 80 , new List(){ 57, 79, 76, 186, 222, 219, 189, 48 }},

/* '[' */ { 219 , new List(){ 48, 186, 222, 221, 187, 189 }},

/* ']' */ { 221 , new List(){ 189, 219, 187 }},

/* 'a' */ { 65 , new List(){ 81, 87, 83, 88, 90 }},

/* 's' */ { 83 , new List(){ 81, 65, 90, 88, 67, 68, 69, 87, 81 }},

/* 'd' */ { 68 , new List(){ 87, 83, 88, 67, 86, 70, 82, 69 }},

/* 'f' */ { 70 , new List(){ 69, 68, 67, 86, 66, 71, 84, 82 }},

/* 'g' */ { 71 , new List(){ 82, 70, 86, 66, 78, 72, 89, 84 }},

/* 'h' */ { 72 , new List(){ 84, 71, 66, 78, 77, 74, 85, 89 }},

/* 'j' */ { 74 , new List(){ 89, 72, 78, 77, 188, 75, 73, 85 }},

/* 'k' */ { 75 , new List(){ 85, 74, 77, 188, 190, 76, 79, 73 }},

/* 'l' */ { 76 , new List(){ 73, 75, 188, 190, 191, 186, 80, 79 }},

/* ';' */ { 186 , new List(){ 79, 76, 190, 191, 222, 219, 80 }},

/* '\''*/ { 222 , new List(){ 80, 186, 191, 221, 219 }},

/* 'z' */ { 90 , new List(){ 65, 83, 88 }},

/* 'x' */ { 88 , new List(){ 90, 65, 83, 68, 67 }},

/* 'c' */ { 67 , new List(){ 88, 83, 68, 70, 86 }},

/* 'v' */ { 86 , new List(){ 67, 68, 70, 71, 66 }},

/* 'b' */ { 66 , new List(){ 86, 70, 71, 72, 78 }},

/* 'n' */ { 78 , new List(){ 66, 71, 72, 74, 77 }},

/* 'm' */ { 77 , new List(){ 78, 72, 74, 75, 188 }},

/* '<' */ { 188 , new List(){ 77, 74, 75, 76, 190 }},

/* '>' */ { 190 , new List(){ 188, 75, 76, 186, 191 }},

/* '?' */ { 191 , new List(){ 190, 76, 186, 222 }},

};

///

/// Коды клавиш русскоязычной клавиатуры

///

private static Dictionary CodeKeysRus = new Dictionary

{

{ 'ё' , 192 },

{ '1' , 49 },

{ '2' , 50 },

{ '3' , 51 },

{ '4' , 52 },

{ '5' , 53 },

{ '6' , 54 },

{ '7' , 55 },

{ '8' , 56 },

{ '9' , 57 },

{ '0' , 48 },

{ '-' , 189 },

{ '=' , 187 },

{ 'й' , 81 },

{ 'ц' , 87 },

{ 'у' , 69 },

{ 'к' , 82 },

{ 'е' , 84 },

{ 'н' , 89 },

{ 'г' , 85 },

{ 'ш' , 73 },

{ 'щ' , 79 },

{ 'з' , 80 },

{ 'х' , 219 },

{ 'ъ' , 221 },

{ 'ф' , 65 },

{ 'ы' , 83 },

{ 'в' , 68 },

{ 'а' , 70 },

{ 'п' , 71 },

{ 'р' , 72 },

{ 'о' , 74 },

{ 'л' , 75 },

{ 'д' , 76 },

{ 'ж' , 186 },

{ 'э' , 222 },

{ 'я' , 90 },

{ 'ч' , 88 },

{ 'с' , 67 },

{ 'м' , 86 },

{ 'и' , 66 },

{ 'т' , 78 },

{ 'ь' , 77 },

{ 'б' , 188 },

{ 'ю' , 190 },

{ '.' , 191 },

{ '!' , 49 },

{ '"' , 50 },

{ '№' , 51 },

{ ';' , 52 },

{ '%' , 53 },

{ ':' , 54 },

{ '?' , 55 },

{ '*' , 56 },

{ '(' , 57 },

{ ')' , 48 },

{ '_' , 189 },

{ '+' , 187 },

{ ',' , 191 },

};

///

/// Коды клавиш англиской клавиатуры

///

private static Dictionary CodeKeysEng = new Dictionary

{

{ '`', 192 },

{ '1', 49 },

{ '2', 50 },

{ '3', 51 },

{ '4', 52 },

{ '5', 53 },

{ '6', 54 },

{ '7', 55 },

{ '8', 56 },

{ '9', 57 },

{ '0', 48 },

{ '-', 189 },

{ '=', 187 },

{ 'q', 81 },

{ 'w', 87 },

{ 'e', 69 },

{ 'r', 82 },

{ 't', 84 },

{ 'y', 89 },

{ 'u', 85 },

{ 'i', 73 },

{ 'o', 79 },

{ 'p', 80 },

{ '[', 219 },

{ ']', 221 },

{ 'a', 65 },

{ 's', 83 },

{ 'd', 68 },

{ 'f', 70 },

{ 'g', 71 },

{ 'h', 72 },

{ 'j', 74 },

{ 'k', 75 },

{ 'l', 76 },

{ ';', 186 },

{ '\'', 222 },

{ 'z', 90 },

{ 'x', 88 },

{ 'c', 67 },

{ 'v', 86 },

{ 'b', 66 },

{ 'n', 78 },

{ 'm', 77 },

{ ',', 188 },

{ '.', 190 },

{ '/', 191 },

{ '~' , 192 },

{ '!' , 49 },

{ '@' , 50 },

{ '#' , 51 },

{ '$' , 52 },

{ '%' , 53 },

{ '^' , 54 },

{ '&' , 55 },

{ '*' , 56 },

{ '(' , 57 },

{ ')' , 48 },

{ '_' , 189 },

{ '+' , 187 },

{ '{', 219 },

{ '}', 221 },

{ ':', 186 },

{ '"', 222 },

{ '<', 188 },

{ '>', 190 },

{ '?', 191 },

};

#endregion

#region Блок транслитерации

///

/// Транслитерация Русский => ASCII (ISO 9-95)

///

private static Dictionary Translit_Ru_En = new Dictionary

{

{ 'а', "a" },

{ 'б', "b" },

{ 'в', "v" },

{ 'г', "g" },

{ 'д', "d" },

{ 'е', "e" },

{ 'ё', "yo" },

{ 'ж', "zh" },

{ 'з', "z" },

{ 'и', "i" },

{ 'й', "i" },

{ 'к', "k" },

{ 'л', "l" },

{ 'м', "m" },

{ 'н', "n" },

{ 'о', "o" },

{ 'п', "p" },

{ 'р', "r" },

{ 'с', "s" },

{ 'т', "t" },

{ 'у', "u" },

{ 'ф', "f" },

{ 'х', "x" },

{ 'ц', "c" },

{ 'ч', "ch" },

{ 'ш', "sh" },

{ 'щ', "shh" },

{ 'ъ', "" },

{ 'ы', "y" },

{ 'ь', "'" },

{ 'э', "e" },

{ 'ю', "yu" },

{ 'я', "ya" },

};

#endregion

} Vitaly Alferov, 2017