Load optimization in the “Warehouse Balance” task using partitioning in SQL Server

This article provides a solution for optimizing Transact SQL for calculating stock balances. Applied: partitioning tables and materialized views.

The task must be solved on SQL Server 2014 Enterprise Edition (x64). The company has many warehouses. Each warehouse has several thousand shipments and goods received daily. There is a table of movements of goods in the warehouse income / expense. Necessary to implement:

Calculation of the balance sheet for the selected date and time (up to an hour) for all / any warehouses for each product. For analytics, it is necessary to create an object (function, table, view) with the help of which for the selected date range for all warehouses and products to display the data of the source table and an additional calculation column - the remainder of the position’s warehouse.

These calculations are supposed to be performed on a schedule with different date ranges and should work at an acceptable time. Those. if it is necessary to display a table with balances for the last hour or day, then the execution time should be as fast as possible, as well as if it is necessary to display the same data for the last 3 years, for subsequent upload to the analytical database.

Technical Details The table itself:

Create a completed table. In order for you to test and watch the results with me, I suggest creating and filling out the dbo.Turnover table with the script:

I had this script on a PC with an SSD drive running about 22 minutes, and the size of the table took about 8GB on the hard drive. You can reduce the number of years, and the number of warehouses, in order to reduce the time of creating and filling the table. But I recommend leaving some good amount for evaluating query plans, at least 1-2 gigabytes.

Group the data up to an hour.

Next, we need to group the amounts of products in the warehouse for the studied period of time, in our problem statement it is one hour (up to a minute, up to 15 minutes, a day. But obviously up to milliseconds hardly anyone needs reporting). For comparisons in the session (window) where we execute our requests, execute the command - set statistics time on ;. Next, we execute the queries themselves and look at the query plans:

If we make a final request with a balance that is considered a cumulative total of our quantity, then the request and the request plan will be as follows:

Grouping optimization

Everything is quite simple here. The query itself without a cumulative total can be optimized by a materialized view (index view). To build a materialized view, what should be summed must not be NULL, sum (Operation * Quantity), or make each field NOT NULL or add isnull / coalesce to the expression. I propose to create a materialized view.

And build a clustered index on it. In the index, we indicate the field order as in the grouping (for grouping, so much order is not important, it is important that all the grouping fields are in the index) and progressively (the order is important here - first what is in partition by, then what is in partition order by):

Now, after building the clustered index, we can re-execute the queries by changing the aggregation of the sum as in the view:

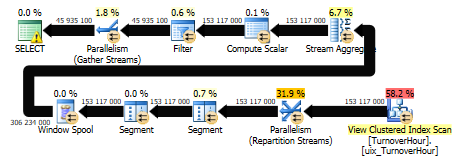

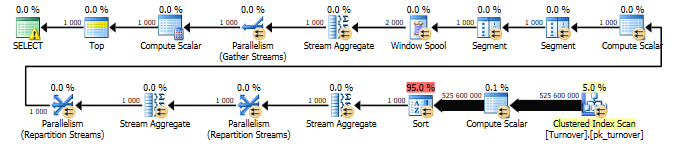

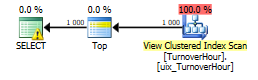

Steel query plans:

Cost 0.008

Cost 0.008

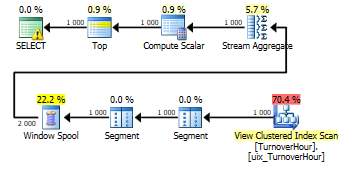

Cost 0.01

Cost 0.01

In total, we see that with an indexed query, the query does not scan the table by grouping the data, but the cluster index, in which everything is already grouped. And accordingly, the execution time was reduced from 321797 milliseconds to 116 ms., I.e. 2774 times.

This would be the end of our optimization, if not for the fact that we often need not the whole table (view) but part of it for the selected range.

Intermediate balances

As a result, we need to quickly execute the following request:

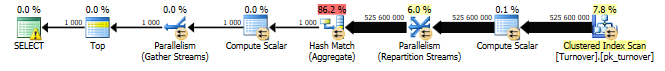

The cost of the plan = 3103. And imagine what would happen if it weren’t for the materialized idea that I went but for the table itself.

The output of the materialized presentation and balance sheet for each product in stock on a date with a time rounded up to an hour. In order to calculate the balance - it is necessary from the very beginning (from zero balance) to sum all the quantities to the last date indicated (@finish), and after that, in the summed result set, cut off the data later than the start parameter .

Here, obviously, intermediate calculated balances will help. For example, on the 1st day of every month or on every Sunday. Having such balances, the task boils down to the need to summarize previously calculated balances and calculate the balance not from the beginning, but from the last calculated date. For experiments and comparisons, we construct an additional non-clustered index by date:

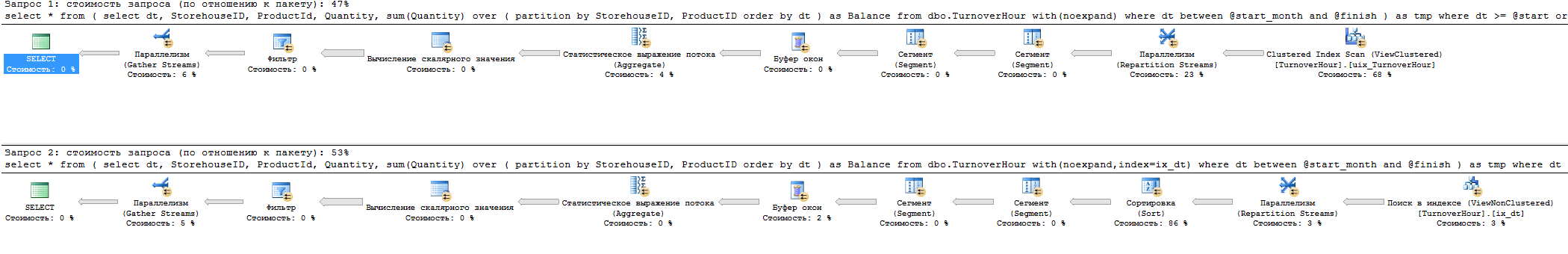

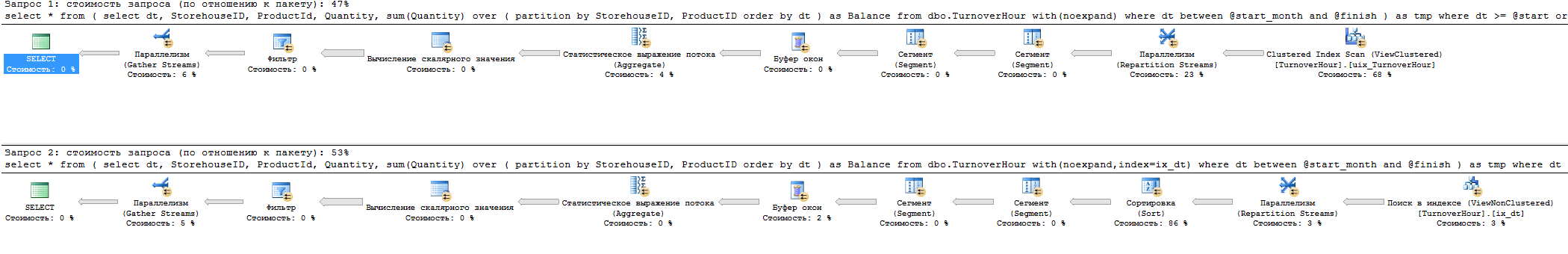

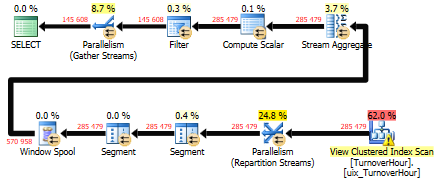

In general, this query, even having an index by date that completely covers all the fields affected in the query, will select our clustered index and scan. Instead of searching by date, followed by sorting. I propose to fulfill the following 2 queries and compare what happened, then we will analyze what is still better:

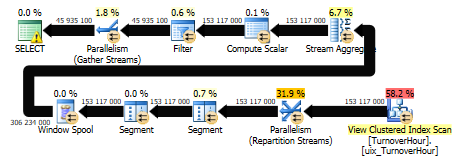

From time it is seen that the index by date is much faster. But the query plans in comparison are as follows:

The cost of the 1st request with the automatically selected cluster index = 2752, but the cost with the index by the date of the request = 3119.

Be that as it may, here we need two tasks from the index: sorting and selecting a range. One index available to us does not solve this problem. In this example, the data range is only for 1 day, but if there is a longer period, but not all, for example, for 2 months, then unambiguously searching by index will not be effective due to the cost of sorting.

Here, from the visible optimal solutions, I see:

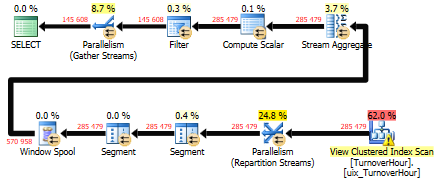

In the project, as a result, I made the 3rd option. Partitioning the cluster index of a materialized view. And if the sample goes over a period of one month, then in fact the optimizer affects only one section, making it scan without sorting. And clipping of unused data occurs at the level of clipping of unused sections. Here, if you search from 10 to 20, we don’t have an exact search for these dates, but search for data from the 1st to the last day of the month, then scan this range in a sorted index with filtering during scanning by the set dates.

We partition the cluster index of views. First of all, remove all indexes from the view:

And create a function and partition scheme:

In fact, data in one section is selected and scanned by the cluster index quickly enough. It should be added here that when the request is parameterized, the unpleasant effect of parameter sniffing occurs, option (recompile) is treated.

Formulation of the problem

The task must be solved on SQL Server 2014 Enterprise Edition (x64). The company has many warehouses. Each warehouse has several thousand shipments and goods received daily. There is a table of movements of goods in the warehouse income / expense. Necessary to implement:

Calculation of the balance sheet for the selected date and time (up to an hour) for all / any warehouses for each product. For analytics, it is necessary to create an object (function, table, view) with the help of which for the selected date range for all warehouses and products to display the data of the source table and an additional calculation column - the remainder of the position’s warehouse.

These calculations are supposed to be performed on a schedule with different date ranges and should work at an acceptable time. Those. if it is necessary to display a table with balances for the last hour or day, then the execution time should be as fast as possible, as well as if it is necessary to display the same data for the last 3 years, for subsequent upload to the analytical database.

Technical Details The table itself:

create table dbo.Turnover

(

id int identity primary key,

dt datetime not null,

ProductID int not null,

StorehouseID int not null,

Operation smallint not null check (Operation in (-1,1)), -- +1 приход на склад, -1 расход со склада

Quantity numeric(20,2) not null,

Cost money not null

)Dt - Date the time of receipt / write-off to / from the warehouse.

ProductID - Product

StorehouseID - warehouse

Operation - 2 values income or consumption

Quantity - the amount of product in stock. It can be material if the product is not in pieces, but, for example, in kilograms.

Cost - the cost of the batch of the product.

Task research

Create a completed table. In order for you to test and watch the results with me, I suggest creating and filling out the dbo.Turnover table with the script:

if object_id('dbo.Turnover','U') is not null drop table dbo.Turnover;

go

with times as

(

select 1 id

union all

select id+1

from times

where id < 10*365*24*60 -- 10 лет * 365 дней * 24 часа * 60 минут = столько минут в 10 лет

)

, storehouse as

(

select 1 id

union all

select id+1

from storehouse

where id < 100 -- количество складов

)

select

identity(int,1,1) id,

dateadd(minute, t.id, convert(datetime,'20060101',120)) dt,

1+abs(convert(int,convert(binary(4),newid()))%1000) ProductID, -- 1000 - количество разных продуктов

s.id StorehouseID,

case when abs(convert(int,convert(binary(4),newid()))%3) in (0,1) then 1 else -1 end Operation, -- какой то приход и расход, из случайных сделаем из 3х вариантов 2 приход 1 расход

1+abs(convert(int,convert(binary(4),newid()))%100) Quantity

into dbo.Turnover

from times t cross join storehouse s

option(maxrecursion 0);

go

--- 15 min

alter table dbo.Turnover alter column id int not null

go

alter table dbo.Turnover add constraint pk_turnover primary key (id) with(data_compression=page)

go

-- 6 minI had this script on a PC with an SSD drive running about 22 minutes, and the size of the table took about 8GB on the hard drive. You can reduce the number of years, and the number of warehouses, in order to reduce the time of creating and filling the table. But I recommend leaving some good amount for evaluating query plans, at least 1-2 gigabytes.

Group the data up to an hour.

Next, we need to group the amounts of products in the warehouse for the studied period of time, in our problem statement it is one hour (up to a minute, up to 15 minutes, a day. But obviously up to milliseconds hardly anyone needs reporting). For comparisons in the session (window) where we execute our requests, execute the command - set statistics time on ;. Next, we execute the queries themselves and look at the query plans:

select top(1000)

convert(datetime,convert(varchar(13),dt,120)+':00',120) as dt, -- округляем до часа

ProductID,

StorehouseID,

sum(Operation*Quantity) as Quantity

from dbo.Turnover

group by

convert(datetime,convert(varchar(13),dt,120)+':00',120),

ProductID,

StorehouseID

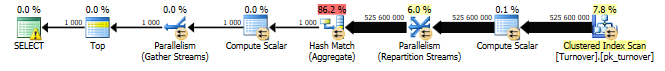

The request cost is 12406

(rows processed: 1000)

SQL Server uptime:

CPU time = 2096594 ms, elapsed time = 321797 ms.

If we make a final request with a balance that is considered a cumulative total of our quantity, then the request and the request plan will be as follows:

select top(1000)

convert(datetime,convert(varchar(13),dt,120)+':00',120) as dt, -- округляем до часа

ProductID,

StorehouseID,

sum(Operation*Quantity) as Quantity,

sum(sum(Operation*Quantity)) over

(

partition by StorehouseID, ProductID

order by convert(datetime,convert(varchar(13),dt,120)+':00',120)

) as Balance

from dbo.Turnover

group by

convert(datetime,convert(varchar(13),dt,120)+':00',120),

ProductID,

StorehouseID

Request cost - 19329

(rows processed: 1000)

SQL Server uptime:

CPU time = 2413155 ms, elapsed time = 344631 ms.

Grouping optimization

Everything is quite simple here. The query itself without a cumulative total can be optimized by a materialized view (index view). To build a materialized view, what should be summed must not be NULL, sum (Operation * Quantity), or make each field NOT NULL or add isnull / coalesce to the expression. I propose to create a materialized view.

create view dbo.TurnoverHour

with schemabinding as

select

convert(datetime,convert(varchar(13),dt,120)+':00',120) as dt, -- округляем до часа

ProductID,

StorehouseID,

sum(isnull(Operation*Quantity,0)) as Quantity,

count_big(*) qty

from dbo.Turnover

group by

convert(datetime,convert(varchar(13),dt,120)+':00',120),

ProductID,

StorehouseID

goAnd build a clustered index on it. In the index, we indicate the field order as in the grouping (for grouping, so much order is not important, it is important that all the grouping fields are in the index) and progressively (the order is important here - first what is in partition by, then what is in partition order by):

create unique clustered index uix_TurnoverHour on dbo.TurnoverHour (StorehouseID, ProductID, dt)Now, after building the clustered index, we can re-execute the queries by changing the aggregation of the sum as in the view:

select top(1000)

convert(datetime,convert(varchar(13),dt,120)+':00',120) as dt, -- округляем до часа

ProductID,

StorehouseID,

sum(isnull(Operation*Quantity,0)) as Quantity

from dbo.Turnover

group by

convert(datetime,convert(varchar(13),dt,120)+':00',120),

ProductID,

StorehouseID

select top(1000)

convert(datetime,convert(varchar(13),dt,120)+':00',120) as dt, -- округляем до часа

ProductID,

StorehouseID,

sum(isnull(Operation*Quantity,0)) as Quantity,

sum(sum(isnull(Operation*Quantity,0))) over

(

partition by StorehouseID, ProductID

order by convert(datetime,convert(varchar(13),dt,120)+':00',120)

) as Balance

from dbo.Turnover

group by

convert(datetime,convert(varchar(13),dt,120)+':00',120),

ProductID,

StorehouseIDSteel query plans:

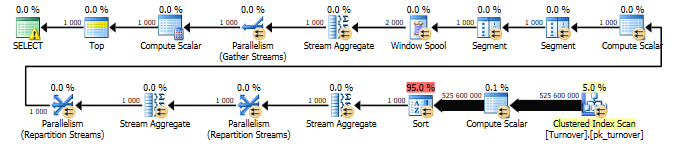

Cost 0.008

Cost 0.008  Cost 0.01

Cost 0.01SQL Server uptime:

CPU time = 31 ms, elapsed time = 116 ms.

(rows processed: 1000)

SQL Server uptime:

CPU time = 0 ms, elapsed time = 151 ms.

In total, we see that with an indexed query, the query does not scan the table by grouping the data, but the cluster index, in which everything is already grouped. And accordingly, the execution time was reduced from 321797 milliseconds to 116 ms., I.e. 2774 times.

This would be the end of our optimization, if not for the fact that we often need not the whole table (view) but part of it for the selected range.

Intermediate balances

As a result, we need to quickly execute the following request:

set dateformat ymd;

declare

@start datetime = '2015-01-02',

@finish datetime = '2015-01-03'

select *

from

(

select

dt,

StorehouseID,

ProductId,

Quantity,

sum(Quantity) over

(

partition by StorehouseID, ProductID

order by dt

) as Balance

from dbo.TurnoverHour with(noexpand)

where dt <= @finish

) as tmp

where dt >= @start

The cost of the plan = 3103. And imagine what would happen if it weren’t for the materialized idea that I went but for the table itself.

The output of the materialized presentation and balance sheet for each product in stock on a date with a time rounded up to an hour. In order to calculate the balance - it is necessary from the very beginning (from zero balance) to sum all the quantities to the last date indicated (@finish), and after that, in the summed result set, cut off the data later than the start parameter .

Here, obviously, intermediate calculated balances will help. For example, on the 1st day of every month or on every Sunday. Having such balances, the task boils down to the need to summarize previously calculated balances and calculate the balance not from the beginning, but from the last calculated date. For experiments and comparisons, we construct an additional non-clustered index by date:

create index ix_dt on dbo.TurnoverHour (dt) include (Quantity) with(data_compression=page); --7 min

И наш запрос будет вида:

set dateformat ymd;

declare

@start datetime = '2015-01-02',

@finish datetime = '2015-01-03'

declare

@start_month datetime = convert(datetime,convert(varchar(9),@start,120)+'1',120)

select *

from

(

select

dt,

StorehouseID,

ProductId,

Quantity,

sum(Quantity) over

(

partition by StorehouseID, ProductID

order by dt

) as Balance

from dbo.TurnoverHour with(noexpand)

where dt between @start_month and @finish

) as tmp

where dt >= @start

order by StorehouseID, ProductID, dtIn general, this query, even having an index by date that completely covers all the fields affected in the query, will select our clustered index and scan. Instead of searching by date, followed by sorting. I propose to fulfill the following 2 queries and compare what happened, then we will analyze what is still better:

set dateformat ymd;

declare

@start datetime = '2015-01-02',

@finish datetime = '2015-01-03'

declare

@start_month datetime = convert(datetime,convert(varchar(9),@start,120)+'1',120)

select *

from

(

select

dt,

StorehouseID,

ProductId,

Quantity,

sum(Quantity) over

(

partition by StorehouseID, ProductID

order by dt

) as Balance

from dbo.TurnoverHour with(noexpand)

where dt between @start_month and @finish

) as tmp

where dt >= @start

order by StorehouseID, ProductID, dt

select *

from

(

select

dt,

StorehouseID,

ProductId,

Quantity,

sum(Quantity) over

(

partition by StorehouseID, ProductID

order by dt

) as Balance

from dbo.TurnoverHour with(noexpand,index=ix_dt)

where dt between @start_month and @finish

) as tmp

where dt >= @start

order by StorehouseID, ProductID, dtSQL Server uptime:

CPU time = 33860 ms, elapsed time = 24247 ms.

(rows processed: 145608)

(rows processed: 1)

SQL Server uptime:

CPU time = 6374 ms, elapsed time = 1718 ms.

SQL Server Parsing and Compilation Time:

CPU Time = 0 ms, Elapsed Time = 0 ms.

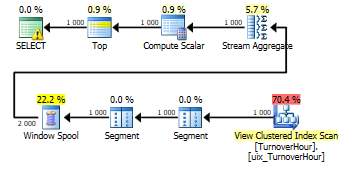

From time it is seen that the index by date is much faster. But the query plans in comparison are as follows:

The cost of the 1st request with the automatically selected cluster index = 2752, but the cost with the index by the date of the request = 3119.

Be that as it may, here we need two tasks from the index: sorting and selecting a range. One index available to us does not solve this problem. In this example, the data range is only for 1 day, but if there is a longer period, but not all, for example, for 2 months, then unambiguously searching by index will not be effective due to the cost of sorting.

Here, from the visible optimal solutions, I see:

- Create a computed field Year-Month and create an index (Year-Month, other fields of the cluster index). In the condition where dt between @start_month and finish, replace with Year-Month = @ month, and after that, already apply the filter to the desired dates.

- Filtered indexes - the index itself is clustered, but the filter is by date, for the desired month. And there are as many indexes as we have in total months. The idea is close to a solution, but here if the range of conditions is from 2 filtered indexes, a connection is required and in the future sorting is inevitable anyway.

- We partition the cluster index so that in each section there is data for only one month.

In the project, as a result, I made the 3rd option. Partitioning the cluster index of a materialized view. And if the sample goes over a period of one month, then in fact the optimizer affects only one section, making it scan without sorting. And clipping of unused data occurs at the level of clipping of unused sections. Here, if you search from 10 to 20, we don’t have an exact search for these dates, but search for data from the 1st to the last day of the month, then scan this range in a sorted index with filtering during scanning by the set dates.

We partition the cluster index of views. First of all, remove all indexes from the view:

drop index ix_dt on dbo.TurnoverHour;

drop index uix_TurnoverHour on dbo.TurnoverHour;And create a function and partition scheme:

set dateformat ymd;

create partition function pf_TurnoverHour(datetime) as range right for values (

'2006-01-01', '2006-02-01', '2006-03-01', '2006-04-01', '2006-05-01', '2006-06-01', '2006-07-01', '2006-08-01', '2006-09-01', '2006-10-01', '2006-11-01', '2006-12-01',

'2007-01-01', '2007-02-01', '2007-03-01', '2007-04-01', '2007-05-01', '2007-06-01', '2007-07-01', '2007-08-01', '2007-09-01', '2007-10-01', '2007-11-01', '2007-12-01',

'2008-01-01', '2008-02-01', '2008-03-01', '2008-04-01', '2008-05-01', '2008-06-01', '2008-07-01', '2008-08-01', '2008-09-01', '2008-10-01', '2008-11-01', '2008-12-01',

'2009-01-01', '2009-02-01', '2009-03-01', '2009-04-01', '2009-05-01', '2009-06-01', '2009-07-01', '2009-08-01', '2009-09-01', '2009-10-01', '2009-11-01', '2009-12-01',

'2010-01-01', '2010-02-01', '2010-03-01', '2010-04-01', '2010-05-01', '2010-06-01', '2010-07-01', '2010-08-01', '2010-09-01', '2010-10-01', '2010-11-01', '2010-12-01',

'2011-01-01', '2011-02-01', '2011-03-01', '2011-04-01', '2011-05-01', '2011-06-01', '2011-07-01', '2011-08-01', '2011-09-01', '2011-10-01', '2011-11-01', '2011-12-01',

'2012-01-01', '2012-02-01', '2012-03-01', '2012-04-01', '2012-05-01', '2012-06-01', '2012-07-01', '2012-08-01', '2012-09-01', '2012-10-01', '2012-11-01', '2012-12-01',

'2013-01-01', '2013-02-01', '2013-03-01', '2013-04-01', '2013-05-01', '2013-06-01', '2013-07-01', '2013-08-01', '2013-09-01', '2013-10-01', '2013-11-01', '2013-12-01',

'2014-01-01', '2014-02-01', '2014-03-01', '2014-04-01', '2014-05-01', '2014-06-01', '2014-07-01', '2014-08-01', '2014-09-01', '2014-10-01', '2014-11-01', '2014-12-01',

'2015-01-01', '2015-02-01', '2015-03-01', '2015-04-01', '2015-05-01', '2015-06-01', '2015-07-01', '2015-08-01', '2015-09-01', '2015-10-01', '2015-11-01', '2015-12-01',

'2016-01-01', '2016-02-01', '2016-03-01', '2016-04-01', '2016-05-01', '2016-06-01', '2016-07-01', '2016-08-01', '2016-09-01', '2016-10-01', '2016-11-01', '2016-12-01',

'2017-01-01', '2017-02-01', '2017-03-01', '2017-04-01', '2017-05-01', '2017-06-01', '2017-07-01', '2017-08-01', '2017-09-01', '2017-10-01', '2017-11-01', '2017-12-01',

'2018-01-01', '2018-02-01', '2018-03-01', '2018-04-01', '2018-05-01', '2018-06-01', '2018-07-01', '2018-08-01', '2018-09-01', '2018-10-01', '2018-11-01', '2018-12-01',

'2019-01-01', '2019-02-01', '2019-03-01', '2019-04-01', '2019-05-01', '2019-06-01', '2019-07-01', '2019-08-01', '2019-09-01', '2019-10-01', '2019-11-01', '2019-12-01');

go

create partition scheme ps_TurnoverHour as partition pf_TurnoverHour all to ([primary]);

go

Ну и уже известный нам кластерный индекс только в созданной схеме секционирования:

create unique clustered index uix_TurnoverHour on dbo.TurnoverHour (StorehouseID, ProductID, dt) with (data_compression=page) on ps_TurnoverHour(dt); --- 19 min

И теперь посмотрим, что у нас получилось. Сам запрос:

set dateformat ymd;

declare

@start datetime = '2015-01-02',

@finish datetime = '2015-01-03'

declare

@start_month datetime = convert(datetime,convert(varchar(9),@start,120)+'1',120)

select *

from

(

select

dt,

StorehouseID,

ProductId,

Quantity,

sum(Quantity) over

(

partition by StorehouseID, ProductID

order by dt

) as Balance

from dbo.TurnoverHour with(noexpand)

where dt between @start_month and @finish

) as tmp

where dt >= @start

order by StorehouseID, ProductID, dt

option(recompile);

SQL Server uptime:

CPU time = 7860 ms, elapsed time = 1725 ms.

SQL Server Parsing and Compilation Time:

CPU Time = 0 ms, Elapsed Time = 0 ms.

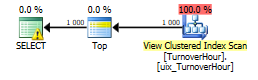

Request Plan Cost = 9.4

In fact, data in one section is selected and scanned by the cluster index quickly enough. It should be added here that when the request is parameterized, the unpleasant effect of parameter sniffing occurs, option (recompile) is treated.