Analysis of changes in the game

One hallmark of a successful mobile game is continuous live operation: reworking existing content and adding new content. The flip side is that you have to keep assessing the risks of the changes in each new version of the app. You need to know in advance how an update will affect the project's metrics. Otherwise, you may find that a scheduled update has suddenly broken the game balance and the whole development team has to be mobilized to ship a hotfix.

Even before assembling a new production build, we need to understand which metrics a change will affect. A new version of the game may contain many balance changes at once. Without planning ahead, questions like this inevitably come up: “What raised ARPU in Canada: local events for a national holiday, a general increase in the difficulty of some group of levels, or did the stars just happen to align?” Of course, a comprehensive analysis of the results is carried out after the update is released, but the nature of the changes has to be understood in advance.

Change Design

Before introducing new features into the game, we try to answer a number of questions:

- Where will we look? Which metrics (both generally accepted KPIs and internal quality criteria) the change is expected to affect, and what effect will count as a success for the project.

- Who will we look at? Whom the change is intended for (whales, new players, players from China, players stuck on a particular group of levels, etc.).

- Why are we watching? What risks are involved (harder levels → more monetization and more churn; easier levels → less monetization and less churn).

- Is there anything to watch? Is all the necessary information being tracked in the game?

Once the most important questions are answered, a couple more remain. For example, how will we roll the changes out to the game? There are two options:

- AB test. We run AB tests for new features or major changes to the current balance and gameplay. Whenever possible, we prefer to run an AB test first.

- Straight into the game. We add new content without an AB test when we either technically cannot run one or do not consider the content fundamentally new (a new set of levels, new decorations, etc.).

A lot of good and varied material has already been written about AB tests, but we will add a little more.

AB Test Planning

If you decide to verify changes with an AB test, the key thing is to pin down the essentials up front; otherwise the test will be useless, either because it is inconclusive or because its results are misinterpreted. We certainly do not want a useless test. So this is what we look at when planning an AB test:

- The choice of metrics to evaluate.

Example: the changes are supposed to “tighten the screws” and encourage players to pay to complete a level. What we need to evaluate: conversion, ARPU, retention, churn, level difficulty, and boost usage. We need to be sure that the extra revenue from higher conversion is worth the potential loss of users. Moreover, some of the users who churn might later have started watching ads and generating revenue. We also need to be sure that the level is well made: that it is not too random and that there is no "silver bullet" in the form of a single boost that guarantees completion. Are these control metrics enough, or should we add more?

- Calculating the sample size needed for a meaningful result.

Example: the model predicts that about 2,000 players in the group who reach the selected level on the selected day will certainly not convert. For how many days do we need to keep running these predictions for the test to give significant results when we show more ads to this group?

- Formalizing the test launch conditions.

Example: to run a large-scale test, we need to find a country with a good conversion rate and, at the same time, recruit only experienced players who do not speak English. How do we formalize this for dynamically assigning players to the test?

- Understanding what mechanisms are available for collecting data and analyzing the results.

Example: can we run several tests at once for different groups of levels and use a convenient tool to separate the influence of each test on players who take part in both?

- Formalizing the criteria for deciding when to stop a test.

Example: should we stop the test early if the metrics drop sharply? Or is this a temporary effect typical of a cohort, and is it worth waiting?

- Formalizing the criteria for deciding whether to roll the change out to the game. We will discuss this in the next section of the article.
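The sample-size question from the planning checklist can be answered roughly with the standard normal-approximation formula for comparing two proportions. The sketch below is a hypothetical illustration, not our internal tool: it assumes the tested metric is a simple rate (here, an invented share of players who watch an ad), a two-sided test, and that roughly 2,000 eligible players arrive per day and are split evenly between two groups.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_test: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Players needed in each group to detect p_control -> p_test with a
    two-sided two-proportion z-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_power = NormalDist().inv_cdf(power)          # quantile for 1 - beta
    variance = p_control * (1 - p_control) + p_test * (1 - p_test)
    effect = p_test - p_control
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


def days_needed(n_per_group: int, daily_inflow: int, groups: int = 2) -> int:
    """Recruitment days if `daily_inflow` eligible players arrive per day
    and are split evenly across `groups` groups."""
    return ceil(n_per_group * groups / daily_inflow)


if __name__ == "__main__":
    # Hypothetical rates: 10% in control, a hoped-for 11% in the test group.
    n = sample_size_per_group(0.10, 0.11)
    print(n, "players per group,", days_needed(n, 2000), "days")
```

With these made-up rates the formula asks for roughly 15,000 players per group, i.e. a bit over two weeks of recruitment at 2,000 players a day. Metrics such as ARPU are far noisier than a rate, so they usually need much larger samples.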

Making the right decisions based on test results

Even if you split players into groups in equal proportions, the players inside each group can end up in different conditions. For example, players who joined the game and entered the test at the beginning of the month may have a higher ARPU than those who joined at the end of the test. The choice of method (a cohort approach or daily ARPU, ARPDAU) depends on the specific goals set at the planning stage and on the fact that players are assigned to the test on an ongoing basis.
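To make the difference between the two methods concrete, here is a minimal sketch. It assumes activity data arrive as hypothetical `(player_id, day, revenue)` rows, one per active player-day, plus the day each player entered the test; these names and the data layout are illustrative, not our actual schema. ARPDAU divides each day's revenue by that day's active users, while the cohort view groups players by entry day and accumulates their revenue over a fixed horizon.

```python
from collections import defaultdict
from datetime import date


def arpdau_by_day(activity):
    """ARPDAU per calendar day: that day's revenue / that day's active users.

    activity: iterable of (player_id, day, revenue) rows, one row per active
    player-day (revenue may be 0.0).
    """
    revenue = defaultdict(float)
    active = defaultdict(set)
    for player_id, day, amount in activity:
        revenue[day] += amount
        active[day].add(player_id)
    return {day: revenue[day] / len(players) for day, players in active.items()}


def cohort_arpu(activity, entry_day, horizon_days):
    """Cumulative ARPU per entry-day cohort over its first `horizon_days`.

    entry_day: {player_id: date the player entered the test}; every player
    in `activity` must appear here.
    """
    cohort_size = defaultdict(int)
    for start in entry_day.values():
        cohort_size[start] += 1
    cohort_revenue = defaultdict(float)
    for player_id, day, amount in activity:
        start = entry_day[player_id]
        if 0 <= (day - start).days < horizon_days:
            cohort_revenue[start] += amount
    return {start: cohort_revenue[start] / size
            for start, size in cohort_size.items()}


if __name__ == "__main__":
    d1, d2 = date(2024, 1, 1), date(2024, 1, 2)
    rows = [("a", d1, 1.0), ("b", d1, 0.0), ("a", d2, 3.0)]
    print(arpdau_by_day(rows))                       # day 1: 0.5, day 2: 3.0
    print(cohort_arpu(rows, {"a": d1, "b": d1}, 2))  # cohort d1: 2.0
```

ARPDAU mixes players of all "ages" on each day, so it is sensitive to when players were assigned; the cohort view compares like with like but needs every cohort to be observed for the full horizon.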

In a cohort-based evaluation, keep in mind that players assigned close to the end of the test may not have reached the “conversion points” yet. You either need to exclude some of the most recent cohorts from the analysis or wait long enough after you stop assigning players to the test.
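Both options can be expressed as simple date arithmetic. In this hypothetical sketch, `horizon_days` is however many days a player needs to reach the relevant conversion point, and `data_complete_until` is the first day for which data are not yet available; both are assumptions chosen for illustration.

```python
from datetime import date, timedelta


def mature_cohorts(cohort_days, data_complete_until, horizon_days):
    """Option 1: keep only cohorts whose players all had `horizon_days`
    full days in the test.

    data_complete_until: first day for which data are NOT yet available
    (data cover every day strictly before it).
    """
    cutoff = data_complete_until - timedelta(days=horizon_days)
    return [day for day in cohort_days if day <= cutoff]


def earliest_analysis_day(last_assignment_day, horizon_days):
    """Option 2: the data_complete_until value at which even the cohort
    assigned on the last day has reached the horizon."""
    return last_assignment_day + timedelta(days=horizon_days)


if __name__ == "__main__":
    cohorts = [date(2024, 3, 1) + timedelta(days=i) for i in range(10)]
    print(mature_cohorts(cohorts, date(2024, 3, 11), horizon_days=7))
    print(earliest_analysis_day(cohorts[-1], horizon_days=7))
```

The first option gives an answer sooner but discards data; the second keeps every cohort at the cost of waiting.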

The final data for the groups can also be analyzed in different ways. We use two main approaches:

- The frequentist approach is the classic way to measure results. We formulate a null hypothesis that there is no difference between the groups and an alternative hypothesis that negates the null. The outcome of the study is a decision about which of the two hypotheses holds for the entire population. We choose the significance level alpha (1 - alpha is the confidence level) and run a test using a statistical criterion (z-test, t-test). Next, we build confidence intervals for the control and test groups. If these intervals do not overlap, we treat the difference as significant at the 1 - alpha confidence level. The chosen alpha is the probability of a type I error, that is, the probability of accepting the alternative hypothesis based on the experiment even though the null hypothesis is true for the population.

Pros: with a sufficiently large sample, a real difference is reliably detected as significant.

Cons: sample size. The higher the chosen confidence level, the larger the groups of participants that have to be recruited. And the more critical the change being tested, the higher the confidence level we set as the threshold.

- The Bayesian approach is a less common method based on computing conditional probabilities. It lets you estimate the probability of an event given that the population follows a certain distribution. In practice, the Bayesian approach makes it possible to reduce the required sample size (the number of AB test participants) for a number of metrics. This works because, past a certain point in optimizing the distribution parameters, the fitted density stops getting any closer to the actual test results, so adding more participants gains little.

If, at the time of analysis, we know what type of distribution the population follows, we can reconstruct its probability density by optimizing the parameters of that distribution.

If the random variable does not follow a single standard distribution, the desired distribution can be obtained by combining several standard distributions using the joint probability of events. For example, for ARPPU we combine the distribution of revenue per user with the distribution of paying users (optimizing the parameters of their product). We describe a model of the resulting distribution for the random variable and compare the two samples within this model. Then, using a statistical test, we verify that both samples fit the distribution described by the model and have different parameters.

Pros: with a well-chosen model and successful parameter optimization, the accuracy and reliability of the estimates can be improved, and in some cases decisions can be made with small test groups.

Cons: a suitable model may not exist, or it may be too complex for practical use.
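The two approaches can be compared on the same conversion data. The sketch below is illustrative only, with invented counts: the frequentist side is a pooled two-proportion z-test plus normal-approximation (Wald) confidence intervals, as described above; the Bayesian side is a Beta-Binomial model with an assumed uniform Beta(1, 1) prior, which we chose for the example because its posterior can be sampled directly with `random.betavariate`.

```python
import random
from math import sqrt
from statistics import NormalDist


def wald_interval(conversions, n, alpha=0.05):
    """Normal-approximation (Wald) 1 - alpha confidence interval for a rate."""
    p = conversions / n
    z = NormalDist().inv_cdf(1 - alpha / 2)
    half_width = z * sqrt(p * (1 - p) / n)
    return p - half_width, p + half_width


def two_proportion_z_test(c_ctrl, n_ctrl, c_test, n_test):
    """Two-sided z-test for a difference in rates (pooled variance under H0)."""
    p_ctrl, p_test = c_ctrl / n_ctrl, c_test / n_test
    pooled = (c_ctrl + c_test) / (n_ctrl + n_test)
    se = sqrt(pooled * (1 - pooled) * (1 / n_ctrl + 1 / n_test))
    z = (p_test - p_ctrl) / se
    return z, 2 * (1 - NormalDist().cdf(abs(z)))


def prob_test_better(c_ctrl, n_ctrl, c_test, n_test, draws=50_000, seed=0):
    """Beta-Binomial model with a uniform Beta(1, 1) prior on each rate:
    Monte Carlo estimate of P(rate_test > rate_ctrl | data)."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        r_ctrl = rng.betavariate(1 + c_ctrl, 1 + n_ctrl - c_ctrl)
        r_test = rng.betavariate(1 + c_test, 1 + n_test - c_test)
        wins += r_test > r_ctrl
    return wins / draws


if __name__ == "__main__":
    ctrl, test = (500, 10_000), (560, 10_000)  # made-up conversion counts
    print("control CI:", wald_interval(*ctrl))
    print("test CI:   ", wald_interval(*test))
    print("z, p-value:", two_proportion_z_test(*ctrl, *test))
    print("P(test > control):", prob_test_better(*ctrl, *test))
```

With these invented numbers the two 95% intervals overlap and the p-value is about 0.06, while the posterior probability that the test group converts better is about 0.97. The two outputs answer different questions: the p-value is computed assuming there is no difference, whereas the posterior probability is conditional on the observed data and the chosen prior.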

Here are a few tests from the Township project as examples. There, using the Bayesian approach instead of the frequentist one allowed us to improve accuracy.

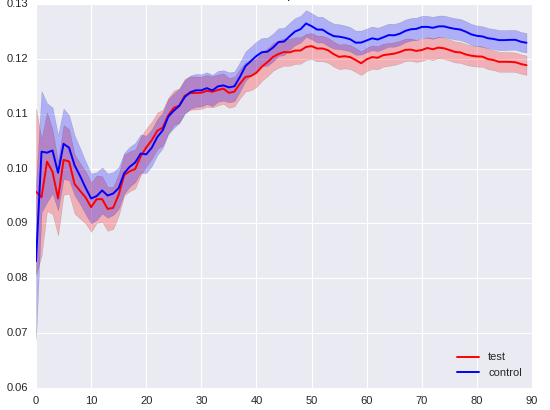

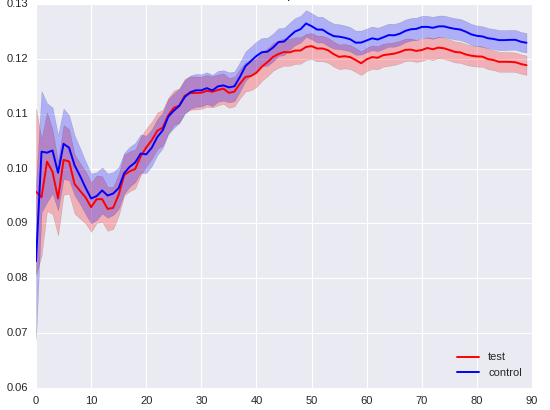

In the first test, we changed the amount of paid currency, the so-called cash, that a player can get from one specific source. We expected this to slightly improve monetization. As a result, we found the following differences:

- DPU (Daily Paying Users): the test group is 1% larger than the control group with a probability of 87% under the Bayesian approach, while under the frequentist approach the same difference holds with a probability of 50%.

- ARPU Daily (ARPDAU): the test group is 2.55% higher than the control group; Bayesian approach 94%, frequentist 74%.

- ARPPU Daily: the test group is 1.8% higher; Bayesian 89%, frequentist 61%.

Thus, we were able to raise the accuracy to an acceptable level without changing the size of the participant groups.
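Revenue metrics like these are where the compound models mentioned earlier come in: the probability that a user pays is combined with the revenue from a paying user, and their product gives revenue per user (ARPU). The specific model below is our own hypothetical illustration, not necessarily what was used in these tests: paying is Bernoulli with a Beta(1, 1) prior, a payer's revenue is assumed to be exponential with a Gamma(1, rate 1) prior on its rate, and both posteriors are sampled directly thanks to conjugacy. Real payment data are often heavier-tailed than exponential, so the model choice matters.

```python
import random


def arpu_posterior(n_users, payer_revenues, draws, rng):
    """Posterior draws of ARPU = P(pay) * E[revenue | pay] (hypothetical model).

    Assumptions made for this sketch only:
      * each user pays with probability p, prior p ~ Beta(1, 1);
      * a payer's revenue ~ Exponential(rate lam), prior lam ~ Gamma(1, rate=1).
    Both priors are conjugate, so posteriors are sampled directly.
    """
    n_payers, total = len(payer_revenues), sum(payer_revenues)
    samples = []
    for _ in range(draws):
        p = rng.betavariate(1 + n_payers, 1 + n_users - n_payers)
        # random.gammavariate takes (shape, scale); scale = 1 / posterior rate.
        lam = rng.gammavariate(1 + n_payers, 1 / (1 + total))
        samples.append(p / lam)  # E[revenue | pay] = 1 / lam
    return samples


def prob_arpu_lift(control, test, min_lift=0.0, draws=20_000, seed=0):
    """P(ARPU_test > (1 + min_lift) * ARPU_control | data).

    control, test: (n_users, list of revenues of paying users).
    """
    rng = random.Random(seed)
    a = arpu_posterior(*control, draws, rng)
    b = arpu_posterior(*test, draws, rng)
    return sum(tb > (1 + min_lift) * ta for ta, tb in zip(a, b)) / draws
```

The `min_lift` parameter turns the output into statements of the form "the test group is at least X% higher with probability P", which is the shape of the results reported above.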

In another test, we changed the ad display settings for a certain group of players in a region with poor monetization. By then, we had already found that for some well-chosen groups of players, ads not only do no harm but can even improve conversion or ARPU, and we wanted to test this approach on a wider group.

- Conversion in the control group was higher than in the test group: with the Bayesian approach this was evident with 97% probability at 50,000 players, and with the frequentist approach with 95% probability at 75,000 players.

- Using the Bayesian approach, we established the difference in ARPU in favor of the control group with 97% probability based on the monthly total, while with the frequentist approach the confidence intervals did not separate.

Whichever approach we choose for evaluating the results, we get information about the difference between the control and test groups. If we consider the result positive for the project, we roll the change out to all users. So even before the change is fully introduced into the game, we have an idea of how it may affect our players.

Metric monitoring

Of course, in some cases we may skip the AB test for new content if the effect of the changes is predictable, for example, when adding new match-3 levels to the game. For such changes, we use post-release monitoring of key metrics. As with AB tests, the data for this are collected and processed automatically from the events sent by users.

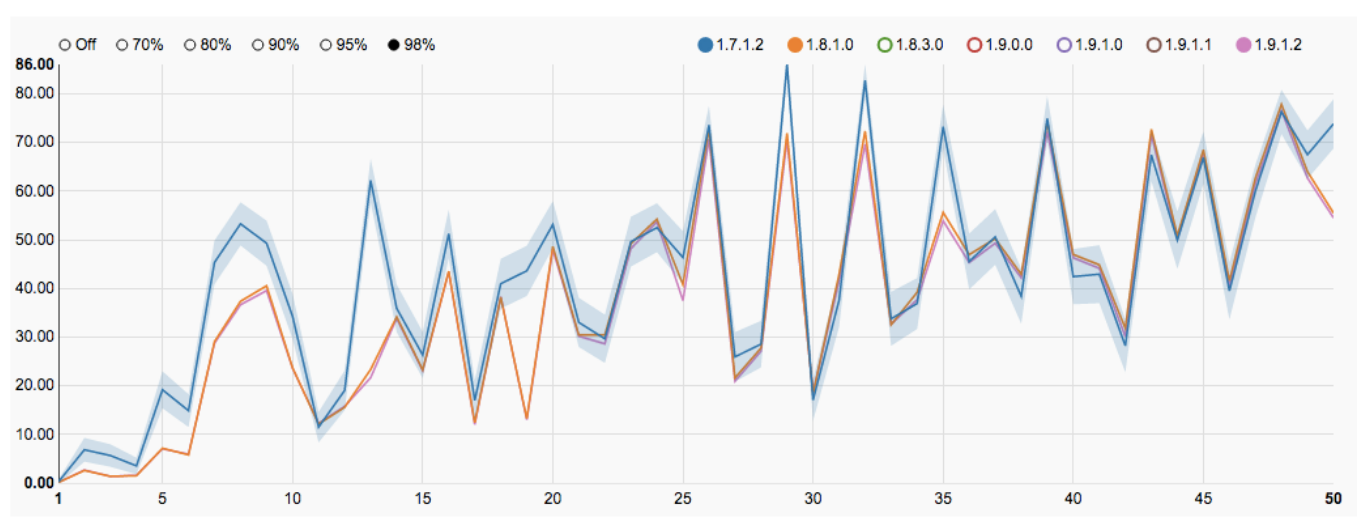

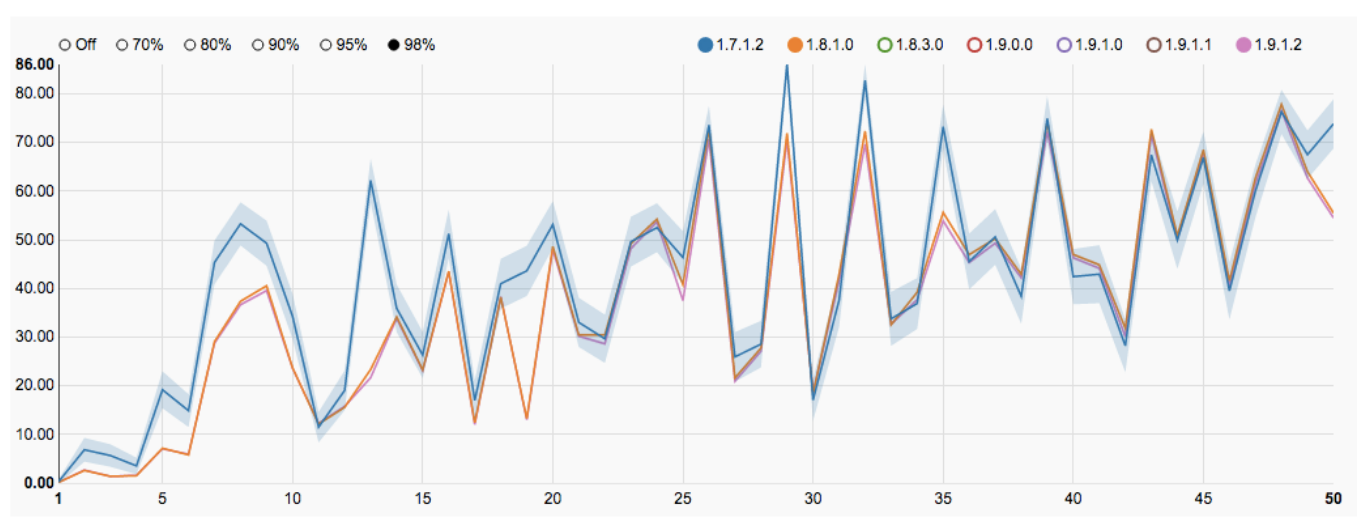

We have our own dashboard, an in-house tool built on the open-source Cubes framework. It is used not only by analysts but also by game designers, managers, producers, and other members of our friendly development team. This dashboard (more precisely, a set of dashboards for each project) tracks both key indicators and their underlying metrics, such as the difficulty of a match-3 level. Dashboard data are prepared as OLAP cubes, which in turn contain data aggregated from the raw-events database. Because the models behind each chart are chosen to be additive, it is possible to drill down (break a metric into its components) into the required categories. Optionally, users can be grouped or ungrouped to apply filters when rendering metrics. For example, you can drill down by app version, level config version, region, and payer status.
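Why additivity matters for drilldown can be shown without any OLAP engine. The sketch below deliberately does not use the Cubes API; it is plain Python with hypothetical dimension names and a hypothetical difficulty metric (attempts per win). The cube stores only additive counts, so any coarser view or filtered slice is a plain sum, and non-additive ratios are computed from the summed counts at render time.

```python
from collections import defaultdict

# Hypothetical dimension names; the real schema is not described in the text.
DIMENSIONS = ("app_version", "levels_version", "region", "payer_status", "level")


def _empty():
    return {"attempts": 0, "wins": 0}


def build_cube(events):
    """Aggregate raw level-attempt events into cells keyed by every dimension.
    Measures are additive counts, so any coarser view is a plain sum."""
    cube = defaultdict(_empty)
    for event in events:
        cell = cube[tuple(event[d] for d in DIMENSIONS)]
        cell["attempts"] += 1
        cell["wins"] += int(event["won"])
    return dict(cube)


def drilldown(cube, by, filters=None):
    """Roll cells up to the dimensions in `by`, keeping only cells that match
    every equality filter in `filters`."""
    filters = filters or {}
    pos = {d: i for i, d in enumerate(DIMENSIONS)}
    result = defaultdict(_empty)
    for key, cell in cube.items():
        if any(key[pos[d]] != value for d, value in filters.items()):
            continue
        out = result[tuple(key[pos[d]] for d in by)]
        out["attempts"] += cell["attempts"]
        out["wins"] += cell["wins"]
    return dict(result)


def attempts_per_win(cell):
    """Difficulty ratio computed from summed measures (not itself additive)."""
    return cell["attempts"] / cell["wins"] if cell["wins"] else float("inf")
```

Averaging per-cell ratios instead of summing counts first would weight small and large cells equally and give a different, misleading difficulty value.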

Naturally, the most interesting default slice is the latest game version together with the latest version of the levels, compared with the previous version. This lets level designers continuously monitor the current state of the project's difficulty curve, separately from the overall update analysis, and respond quickly to deviations from the plan.
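A check of this kind could look like the following sketch. It is hypothetical: it assumes difficulty is measured per level as attempts per win (for example, from the drilldown above), that level designers keep target values per level, and that a relative tolerance of 15% is acceptable; all three are illustrative choices, not our actual thresholds.

```python
def compare_versions(prev, latest, planned, tolerance=0.15):
    """Flag levels whose difficulty in the latest version deviates from plan.

    prev, latest: {level: attempts_per_win} for the previous and latest
    level-config versions; planned: {level: target attempts_per_win}.
    Returns {level: (prev_value, latest_value, relative_deviation)} for
    levels whose latest value is more than `tolerance` away from the plan.
    """
    flagged = {}
    for level, target in planned.items():
        if level not in latest:
            continue  # no data for this level in the latest version yet
        deviation = (latest[level] - target) / target
        if abs(deviation) > tolerance:
            # Keep the previous value (None if absent) for side-by-side review.
            flagged[level] = (prev.get(level), latest[level], deviation)
    return flagged


if __name__ == "__main__":
    prev = {1: 2.0, 2: 3.0}
    latest = {1: 2.1, 2: 4.0}
    planned = {1: 2.0, 2: 3.0, 3: 5.0}
    print(compare_versions(prev, latest, planned))
```

Keeping the previous version's value next to the flagged one helps tell a regression introduced by the new level config apart from a level that was already off-plan.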