New processor architecture solves combinatorial optimization

The speed of computers is constantly increasing, transforming the world around us. Information technology helps humanity cope with many difficulties in various fields. Part of the real problems can be solved using combinatorial computer systems. However, as the number of analyzed factors increases, the number of combinations grows, and the “problem of combinatorial optimization” arises. In order to solve it, it is necessary to significantly increase the speed of the computers, which to date has become extremely difficult to carry out without a radical overhaul of the basics of constructing the appropriate equipment. This is what Fujitsu did.

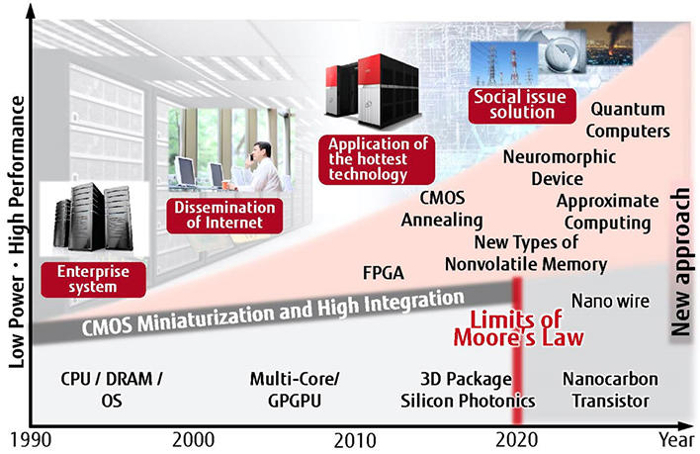

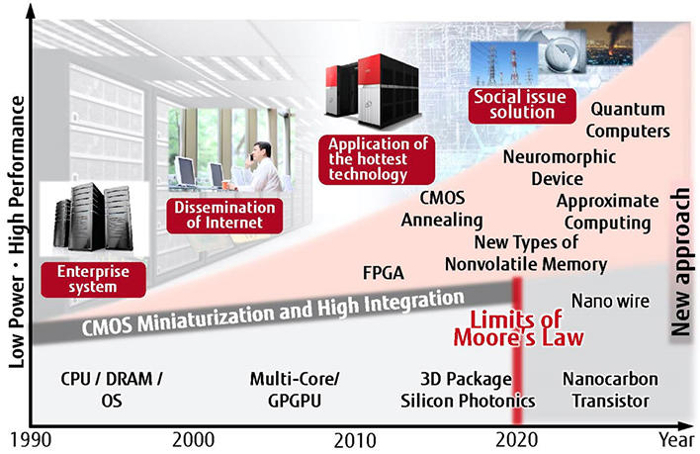

Gordon Moore's law, which involved doubling the number of transistors in semiconductor circuits every two years, has been the main rule in the design and manufacture of microelectronics for the past 50 years. The uncontrolled increase in the performance of computing systems has provided certain benefits to mankind, but today experts do not see the conditions for the continuation of this law.

If we want computers to significantly improve our quality of life in the future, the IT industry must take a number of measures, including the development of fundamentally new devices, such as quantum computers. Such systems, based on the method called “quantum annealing,” were developed specifically to solve the problem of combinatorial optimization.

Although today's quantum computers are generally able to solve this problem, there are limitations on their capabilities associated with connecting adjacent elements. Therefore, manufacturers of computing equipment have a need to create a new computer architecture that can quickly and efficiently process a large number of combinations composed of existing factors of the real world.

The University of Toronto and Fujitsu have jointly developed a new computer architecture to find the best solutions to practical problems by analyzing a huge number of combinations. The architecture uses CMOS technology, expanding its scope.

The new development has two distinctive features.

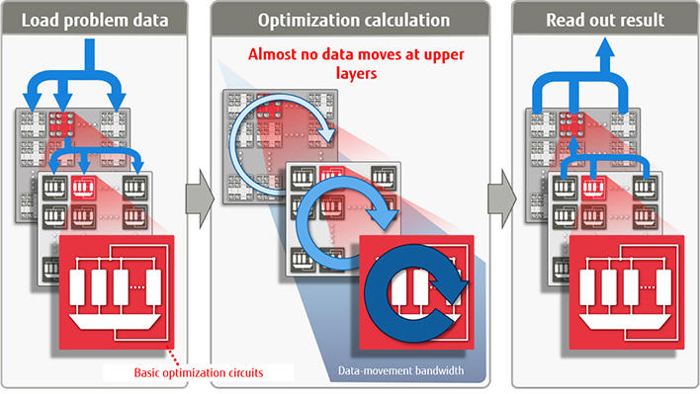

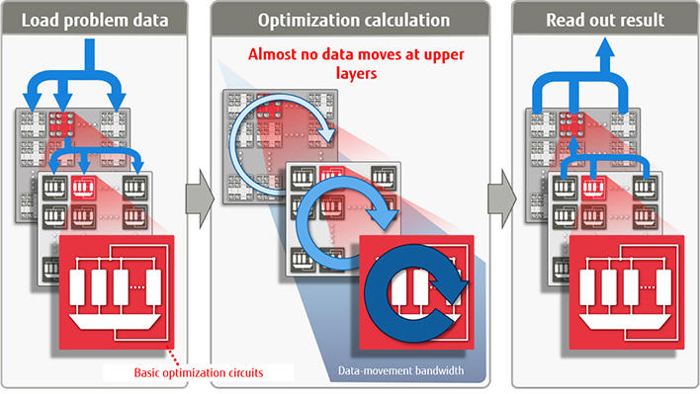

1. First of all, it is a "non-von Neumann" architecture, therefore, it minimizes the amount of data movement. Almost all modern computers use the "von Neumann processing." This means that program data is stored in memory, and then operations with them are sequentially performed. In recent years, computer performance has increased significantly, and the low speed of reading instructions from memory has become a bottleneck. As a result, the possibility of switching to "non-von Neumann" data processing looks more and more attractive. Similar architectures are used in neurocomputers (created by the type of neural circuits), quantum computers that use the principle of behavior of elementary particles of quantum mechanics, and DNA computers.

A technology for minimizing data movement using non-von Neumann operations

By using “non-von Neumann” data processing (program execution) and updating a variable optimization (bit), the cost of solving the problem is reduced. In this case, the data is first loaded from memory, then, as necessary, their optimization is performed, after which a finished result is issued. Because during operation, data is not read or written to memory, time and energy costs are reduced. In addition, by minimizing the movement of data between the basic schemes, it is necessary to move their minimum volume to the upper levels.

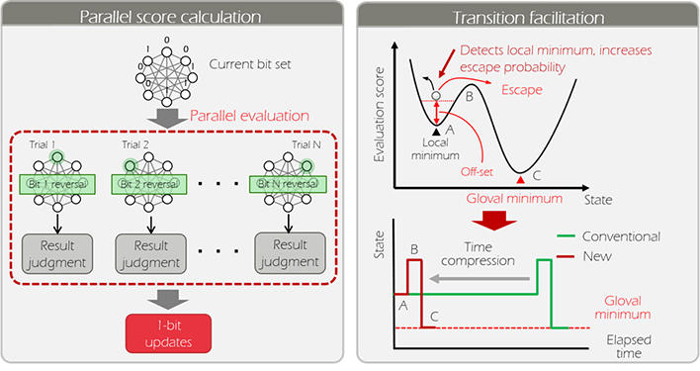

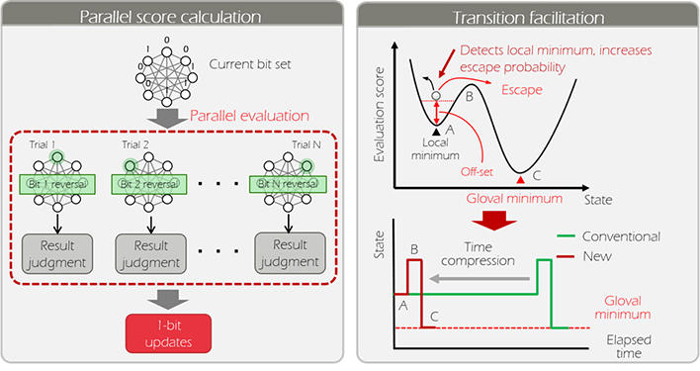

2. The second distinctive feature is the use of "high-speed technology in the basic optimization scheme." In this scheme, probability theory is used to search for the most optimal state. The probability of determining the optimal state of the object is increased by parallel calculation of the value of each operation for several options. If the search stops in the middle, this method cyclically adds a constant value to the total energy consumption in order to increase the probability of an exception for the next state. This approach allows you to quickly get the best solution.

Acceleration technology for basic optimization schemes

Fujitsu experts tested the new architecture and created a basic optimization scheme based on a programmable logic integrated circuit such as FPGA (i.e., a circuit whose configuration can be configured directly by the customer), capable of processing 1024 bits. The new system is about 10 thousand times faster than a similar system running on processors of standard architecture (x86).

The use of several basic schemes that perform optimization in parallel allows one to solve a wider range of problems in comparison with current quantum computers. The scale of the problems being solved and the speed of their processing are also increasing. This allows, for example, to optimize several thousand physically distributed databases. Fujitsu will continue to work on improving the new architecture and plans to create an experimental system with scaling from 100 thousand to 1 million bits by 2018.

Gordon Moore's law, which involved doubling the number of transistors in semiconductor circuits every two years, has been the main rule in the design and manufacture of microelectronics for the past 50 years. The uncontrolled increase in the performance of computing systems has provided certain benefits to mankind, but today experts do not see the conditions for the continuation of this law.

If we want computers to significantly improve our quality of life in the future, the IT industry must take a number of measures, including the development of fundamentally new devices, such as quantum computers. Such systems, based on the method called “quantum annealing,” were developed specifically to solve the problem of combinatorial optimization.

Although today's quantum computers are generally able to solve this problem, there are limitations on their capabilities associated with connecting adjacent elements. Therefore, manufacturers of computing equipment have a need to create a new computer architecture that can quickly and efficiently process a large number of combinations composed of existing factors of the real world.

New processor architecture

The University of Toronto and Fujitsu have jointly developed a new computer architecture to find the best solutions to practical problems by analyzing a huge number of combinations. The architecture uses CMOS technology, expanding its scope.

The new development has two distinctive features.

1. First of all, it is a "non-von Neumann" architecture, therefore, it minimizes the amount of data movement. Almost all modern computers use the "von Neumann processing." This means that program data is stored in memory, and then operations with them are sequentially performed. In recent years, computer performance has increased significantly, and the low speed of reading instructions from memory has become a bottleneck. As a result, the possibility of switching to "non-von Neumann" data processing looks more and more attractive. Similar architectures are used in neurocomputers (created by the type of neural circuits), quantum computers that use the principle of behavior of elementary particles of quantum mechanics, and DNA computers.

A technology for minimizing data movement using non-von Neumann operations

By using “non-von Neumann” data processing (program execution) and updating a variable optimization (bit), the cost of solving the problem is reduced. In this case, the data is first loaded from memory, then, as necessary, their optimization is performed, after which a finished result is issued. Because during operation, data is not read or written to memory, time and energy costs are reduced. In addition, by minimizing the movement of data between the basic schemes, it is necessary to move their minimum volume to the upper levels.

2. The second distinctive feature is the use of "high-speed technology in the basic optimization scheme." In this scheme, probability theory is used to search for the most optimal state. The probability of determining the optimal state of the object is increased by parallel calculation of the value of each operation for several options. If the search stops in the middle, this method cyclically adds a constant value to the total energy consumption in order to increase the probability of an exception for the next state. This approach allows you to quickly get the best solution.

Acceleration technology for basic optimization schemes

Fujitsu experts tested the new architecture and created a basic optimization scheme based on a programmable logic integrated circuit such as FPGA (i.e., a circuit whose configuration can be configured directly by the customer), capable of processing 1024 bits. The new system is about 10 thousand times faster than a similar system running on processors of standard architecture (x86).

The use of several basic schemes that perform optimization in parallel allows one to solve a wider range of problems in comparison with current quantum computers. The scale of the problems being solved and the speed of their processing are also increasing. This allows, for example, to optimize several thousand physically distributed databases. Fujitsu will continue to work on improving the new architecture and plans to create an experimental system with scaling from 100 thousand to 1 million bits by 2018.