DevOps, JUnit 5 and microservice testing: a subjective view of Moscow Heisenbug

On December 6-7, the fifth Heisenbug conference took place in Moscow.

Its slogan is “Testing. Not only for testers!” Over two years of regularly attending Heisenbug, I (formerly a Java developer, now the technical lead of a small company, and someone who has never worked in QA) have learned a lot about testing and brought much of it into our team's practice. I want to share a subjective review of the talks that stuck with me this time.

Disclaimer. Of course, this is only a small fraction (8 out of 30) of the talks, selected according to my personal preferences. Almost all of them are related to Java in one way or another, and not a single one is about front-end or mobile development. In some places I allow myself to argue with the speaker. If you are interested in a more complete and neutral review, one should, by tradition, appear on the organizers' blog. But perhaps some readers will be interested to learn about the talks they did not attend.

The photos in this article are from the conference's official Twitter account.

Baruch Sadogursky. We have DevOps. Let's fire all the testers

(In the photo: the rush for copies of Baruch's book Liquid Software.)

For anyone who works with Java and attends JUG Ru Group conferences, Baruch Sadogursky needs no introduction. At Heisenbug, however, he spoke for the first time.

In a nutshell, it was an overview talk about the main ideas of DevOps. There is still demand for such talks: when the audience is asked to define DevOps, the first answer is still “it's a kind of person who...”

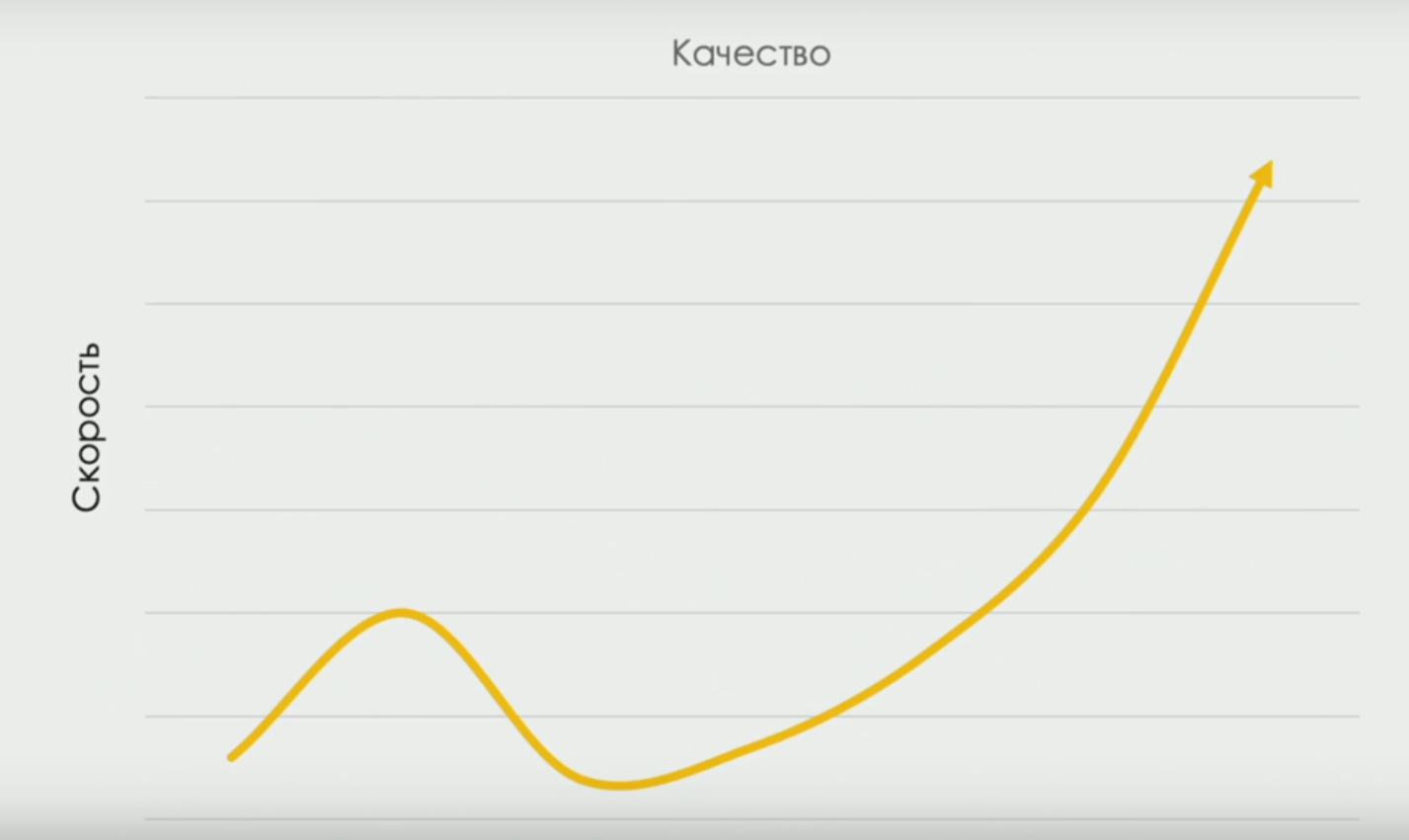

But even those who already know something about the topic will be very interested to learn about the research by DORA (devops-research.com), which measured the share of various kinds of manual work in teams with different levels of performance. The same goes for the curve relating delivery speed to quality: at some point speed drops, because time is needed to “test better”, but as the team matures, the relationship becomes direct, and higher speed goes hand in hand with higher quality.

Although the title of the talk was provocative and the program marked it with the “will burn” category, its content was, in my opinion, quite mainstream. Of course, it was not about firing testers as part of a DevOps transformation, but about how the nature of testers' work changes. Alan Page and Nikolai Alimenkov talked a lot about these things a year ago. Changing roles and the “horizontal” development of “T-shaped skills” were also discussed a year ago at the round table “What a tester should know in 2018”.

“Of course, if you don't want to change, there will still be work for you, even if it is less interesting. After all, there is still work for those who want to maintain systems written in COBOL back in the 1970s,” Baruch quipped.

Artyom Eroshenko. Need to refactor a project? Have an IDEA!

Heisenbug attendees know Artyom from his talks on the Allure reporting system (for example, his talk on Allure's capabilities in 2018 at the previous Heisenbug in St. Petersburg). Allure itself grew out of projects with thousands, tens of thousands and even more than a hundred thousand tests, and is designed to simplify interaction between developers and testers. It can link tests to external resources such as issue trackers and commits in the version control system. In our micro-team, while we had only a few dozen tests, standard tools served us well. But when the number of tests in one of our products reached 700, and we also faced the challenge of producing high-quality reports for customers, I began looking at Allure.

However, this talk was not about Allure, although it touched on it too.

Artyom convinced the audience that writing plugins for IntelliJ IDEA is simple and exciting. Why would you need this? To automate mass code modifications: for example, to migrate a large amount of source code from JUnit 4 to JUnit 5, or from Allure 1 to Allure 2, or to automate tagging tests with links to the issue tracker.
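To make the JUnit 4 to JUnit 5 case concrete, here is a deliberately naive, text-based sketch of the kind of mapping such a migration performs. It is not the IntelliJ Platform API and not Artyom's plugin: a real IDEA plugin works on the PSI syntax tree, which is exactly what lets it handle cases a regex cannot, such as JUnit 4's `assertEquals(message, expected, actual)` becoming JUnit 5's `assertEquals(expected, actual, message)`. The class name `Junit4To5Migrator` is hypothetical.

```java
// Hypothetical helper: a naive, regex-based JUnit 4 -> JUnit 5 rewrite.
// A real IDEA plugin would transform the PSI tree instead of raw text.
class Junit4To5Migrator {
    // {regex, replacement} pairs. Word boundaries (\\b) and the trailing ';'
    // keep "@Before" from matching "@BeforeClass" or "@BeforeEach".
    private static final String[][] RULES = {
        {"import org\\.junit\\.Test;", "import org.junit.jupiter.api.Test;"},
        {"import org\\.junit\\.BeforeClass;", "import org.junit.jupiter.api.BeforeAll;"},
        {"import org\\.junit\\.AfterClass;", "import org.junit.jupiter.api.AfterAll;"},
        {"import org\\.junit\\.Before;", "import org.junit.jupiter.api.BeforeEach;"},
        {"import org\\.junit\\.After;", "import org.junit.jupiter.api.AfterEach;"},
        {"import org\\.junit\\.Ignore;", "import org.junit.jupiter.api.Disabled;"},
        {"import static org\\.junit\\.Assert\\.", "import static org.junit.jupiter.api.Assertions."},
        {"@BeforeClass\\b", "@BeforeAll"},
        {"@AfterClass\\b", "@AfterAll"},
        {"@Before\\b", "@BeforeEach"},
        {"@After\\b", "@AfterEach"},
        {"@Ignore\\b", "@Disabled"},
    };

    static String migrate(String source) {
        // Not handled (and hard to do safely with regexes): @Test(expected = ...),
        // @RunWith, wildcard imports and the message-argument order of assertions.
        String result = source;
        for (String[] rule : RULES) {
            result = result.replaceAll(rule[0], rule[1]);
        }
        return result;
    }
}
```

The list of unhandled cases in the comment is the practical argument for a PSI-based plugin: it knows which `assertEquals` overload is being called, while a text rewrite does not.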

Those who work with IDEA know what tricks it can do with code (for example, automatically converting for-loops into Java Streams and back, or instantly translating Java into Kotlin). All the more interesting it was to see the veil of secrecy lifted from IDEA's code transformations, and to be invited to take part and create our own plugins for our unique needs. Next time I need to do something with a large code base, I will remember this talk and consider automating it with a custom IDEA plugin.
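For readers who have not seen the loop-to-stream conversion, here is the kind of equivalence IDEA works with: an imperative loop and a stream pipeline that return the same result. The method names are hypothetical, and the exact code IDEA generates may differ.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

class LoopVsStream {
    // Imperative version: keep short names and upper-case them in a for-each loop.
    static List<String> shortNamesLoop(List<String> names) {
        List<String> result = new ArrayList<>();
        for (String name : names) {
            if (name.length() <= 4) {
                result.add(name.toUpperCase(Locale.ROOT));
            }
        }
        return result;
    }

    // Equivalent stream pipeline: the same filter and mapping expressed declaratively.
    static List<String> shortNamesStream(List<String> names) {
        return names.stream()
                .filter(name -> name.length() <= 4)
                .map(name -> name.toUpperCase(Locale.ROOT))
                .collect(Collectors.toList());
    }
}
```

`Locale.ROOT` is used in both versions so the result does not depend on the machine's default locale.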

Kirill Merkushev. Java and Project Reactor: what about tests?

This talk, it seems to me, could just as well have been given at the Joker or JPoint Java conferences. Kirill talked about how he uses the Project Reactor framework (projectreactor.io) in a microservice architecture with a single event log (Kafka), a little about the essence of programming with “reactive streams”, and about how applications built on this framework can be debugged and tested.
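Reactor's `Flux` and `Mono` implement the Reactive Streams interfaces, and since Java 9 the JDK ships the same contract as `java.util.concurrent.Flow`. The JDK-only sketch below is not Reactor code (in Reactor you would typically test with `StepVerifier` from reactor-test); it only shows the core ideas under that assumption: a subscriber pulls elements one at a time (backpressure), and a test waits for the terminal signal with a timeout instead of sleeping. `RecordingSubscriber` and `ReactiveDemo` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

// Subscriber that applies a mapping step and records results so a test can inspect them.
class RecordingSubscriber<T, R> implements Flow.Subscriber<T> {
    private final Function<T, R> mapper;
    private final List<R> received = new ArrayList<>();
    private final CountDownLatch terminated = new CountDownLatch(1);
    private volatile Throwable error;
    private Flow.Subscription subscription;

    RecordingSubscriber(Function<T, R> mapper) { this.mapper = mapper; }

    @Override public void onSubscribe(Flow.Subscription s) {
        subscription = s;
        s.request(1); // backpressure: pull one element at a time
    }

    @Override public void onNext(T item) {
        received.add(mapper.apply(item));
        subscription.request(1); // ask for the next element only after handling this one
    }

    @Override public void onError(Throwable t) { error = t; terminated.countDown(); }

    @Override public void onComplete() { terminated.countDown(); }

    /** Blocks until the stream terminates; a timeout means the stream hung instead of finishing. */
    List<R> awaitResults(long timeout, TimeUnit unit) {
        try {
            if (!terminated.await(timeout, unit)) {
                throw new IllegalStateException("stream did not terminate in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        if (error != null) throw new IllegalStateException("stream failed", error);
        return new ArrayList<>(received);
    }
}

class ReactiveDemo {
    /** Publishes the events, maps each to its length, and returns what the subscriber saw. */
    static List<Integer> lengthsOf(List<String> events) {
        RecordingSubscriber<String, Integer> subscriber = new RecordingSubscriber<>(String::length);
        try (SubmissionPublisher<String> publisher = new SubmissionPublisher<>()) {
            publisher.subscribe(subscriber);
            events.forEach(publisher::submit); // submit() blocks if the subscriber's buffer is full
        } // close() signals onComplete once the buffered items have been delivered
        return subscriber.awaitResults(5, TimeUnit.SECONDS);
    }
}
```

The latch-plus-timeout pattern is the main testing point: asynchronous code is asserted on only after a terminal signal, and a stream that never completes fails fast instead of hanging the build.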

Circumstances are also pushing our team towards an architecture with a single event log, and we are also looking at Kafka. However, for stream processing of events we are experimenting with the Kafka Streams API rather than Reactor (in Kafka Streams, I think, more things, such as stateful processing, are implemented out of the box and transparently for the developer). As always with new technologies, though, the pitfalls are not known in advance, so it was important to hear from a specialist who already works with this technology.

Leonid Rudenko. Selenoid Cluster Management with Terraform

If the previous talk was reminiscent of JPoint, this one definitely belongs at DevOops. Leonid talked about how to bring up and configure a Selenoid cluster using Terraform specifications. Selenoid itself, which was the subject of a talk at last year's Heisenbug, is a feature-rich distributed system that works as an elastic service and lets you run a large number of Selenium tests in various browsers. Like any system that has to be deployed across multiple machines, Selenoid is hard to install manually. This is where modern Configuration-as-Code tools come to the rescue.

Leonid gave a fairly detailed overview of Terraform's capabilities: a tool that was probably unfamiliar to most of the audience but is well known to DevOps automation engineers (see, for example, Anton Babenko's excellent talk on best practices for creating and maintaining Terraform code). He then showed how to use Terraform scripts to describe both the parameters of the Docker containers with Selenoid on each machine of the cluster and the parameters of the cluster's virtual machines themselves.

Although the specific solution Leonid presented can certainly make deploying Selenoid easier, I don't agree with the speaker on everything. In effect, he uses Terraform for two different tasks: creating resources and configuring them. As a result, Leonid has to run Terraform once to create the virtual machines and then once more for each virtual machine to bring up the Docker containers on it. In my opinion, Terraform handles resource creation well but is not very good at configuration. Duplicating Terraform projects and running them multiple times could have been avoided by using dedicated configuration management systems such as Ansible, or other solutions.

But overall, as an introductory course for testers in Infrastructure as Code, this talk is very useful.

Andrei Markelov. Elegant integration testing of a microservice zoo with TestContainers and JUnit 5, using a global SMS platform as an example

Microservices again! This time the topic was how to run tests that require several services to be started and interacting at the same time. JUnit 5 with its extension model and the well-known (and excellent) TestContainers framework were proposed as the basis of the solution (see, for example, last year's talk by Sergey Egorov).

If you write anything in Java and still don't know what TestContainers is, I strongly recommend learning about it. Using Docker, TestContainers lets you start real databases and other services directly from test code, connect them over the network and, as a result, run integration tests in an environment that is created when the tests start and destroyed immediately after they finish. Everything is driven directly from Java code, is added as a Maven dependency, and requires nothing but Docker to be installed on the developer's machine or CI server. We ourselves have been using TestContainers for over a year.

Andrei showed a rather impressive example of how to set up a test environment for end-to-end tests using JUnit 5 extensions, custom annotations and TestContainers. For example, by placing the annotations `@Billing` and `@Messaging` above your test (the code is schematic), we can, roughly speaking, write

`@Test void systemIsDoingRightThings(BillingService b, MessagingService m) {...}`

The method parameters receive Java interfaces through which you can communicate with real services that are started in containers, invisibly to the test author.

These examples look very elegant. For me, as an active user of TestContainers and JUnit 5, they are understandable and relatively easy to implement.
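In JUnit 5, injection of this kind is normally done with the `ParameterResolver` extension interface (its `supportsParameter` and `resolveParameter` methods), registered via `@ExtendWith` or a composed annotation. Since the talk's code is not reproduced here, the following JDK-only sketch imitates the idea with plain reflection: marker annotations on the test class declare which services are needed, and each test-method parameter is resolved by its type. `@Billing`, `@Messaging`, `BillingService` and `MessagingService` follow the names above, but their contents, like `MiniInjector` and `SmsFlowTest`, are hypothetical; in Andrei's setup the factories would return clients for services started in TestContainers, while here they are in-memory lambdas.

```java
import java.lang.annotation.Annotation;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Parameter;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical service interfaces, named after the example in the talk.
interface BillingService { long balanceOf(String account); }
interface MessagingService { boolean send(String phone, String text); }

// Hypothetical marker annotations: "this test class needs that service".
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.TYPE) @interface Billing {}
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.TYPE) @interface Messaging {}

// Hypothetical test class written in the style shown above.
@Billing @Messaging
class SmsFlowTest {
    boolean systemIsDoingRightThings(BillingService b, MessagingService m) {
        return b.balanceOf("acc-1") > 0 && m.send("+10000000000", "Your balance is positive");
    }
}

// Imitates a JUnit 5 ParameterResolver: resolves each test-method parameter by its type.
class MiniInjector {
    private final Map<Class<?>, Supplier<?>> factories = new HashMap<>();
    private final Map<Class<?>, Class<? extends Annotation>> markers = new HashMap<>();

    <T> MiniInjector register(Class<T> type, Class<? extends Annotation> marker, Supplier<? extends T> factory) {
        factories.put(type, factory);
        markers.put(type, marker);
        return this;
    }

    Object run(Object testInstance, String methodName) {
        Class<?> testClass = testInstance.getClass();
        for (Method method : testClass.getDeclaredMethods()) {
            if (!method.getName().equals(methodName)) continue;
            Parameter[] params = method.getParameters();
            Object[] args = new Object[params.length];
            for (int i = 0; i < params.length; i++) {
                Class<?> type = params[i].getType();
                Class<? extends Annotation> marker = markers.get(type);
                // Only inject a service the test class explicitly asked for via its annotation.
                if (marker == null || !testClass.isAnnotationPresent(marker)) {
                    throw new IllegalStateException("No environment declared for " + type.getSimpleName());
                }
                args[i] = factories.get(type).get();
            }
            try {
                method.setAccessible(true);
                return method.invoke(testInstance, args);
            } catch (IllegalAccessException | InvocationTargetException e) {
                throw new IllegalStateException("Test method failed: " + methodName, e);
            }
        }
        throw new IllegalArgumentException("No such test method: " + methodName);
    }
}
```

The design point is the same as in the talk: the test declares what it needs, and the wiring (starting services, creating clients) lives in one reusable place instead of in every test.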

But overall, this approach leaves a big open question: the way the test system is configured is radically different from the way the production system is configured.

Releasing to production quickly without fear of breaking everything is possible only if end-to-end testing has verified not only the whole system but also the way it is configured. If we had run the system's deployment script many times during development and testing, we would have no doubt that the script will also work in production. In Andrei's example, the role of the code that configures the test environment is played by annotations. But in production we deploy the system with completely different code (Ansible, Kubernetes, anything else) that takes no part in this kind of testing. This limits these tests: they are not entirely end-to-end.

Andrey Glazkov. Testing systems with external dependencies: problems, solutions, Mountebank

If this topic is relevant to you, I also highly recommend Andrei Solntsev's vivid talk on the fundamental approach to testing systems that depend on external services. Solntsev argues very convincingly for mocking external systems for comprehensive testing. Andrey Glazkov, in his talk, describes one of the tools for such mocking: Mountebank, written in Node.js.

Mountebank can be run as a server and “taught” to answer requests over the network, much as we “teach” mock interfaces when writing unit tests, with the only difference that here we get a mock network service. An interesting use of Mountebank is as a proxy that forwards some of the requests to the real external system.

I would also recommend that Java developers (and Andrey agreed with this in the discussion area) look at the WireMock library, which is written in Java and can run in embedded mode, i.e., directly from tests, without installing any services on the developer's machine or CI server (although it can also run as a standalone server). Like Mountebank, WireMock supports a proxying mode. We have had some positive experience with WireMock.
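The core idea shared by WireMock's embedded mode and Mountebank's imposters, a network stub started from the test itself, can be sketched with nothing but the JDK's built-in `com.sun.net.httpserver.HttpServer` and `java.net.http.HttpClient` (Java 11+). This is not the API of either tool; `StubHttpServer`, its methods and the `/rates/USD` path are hypothetical. Binding to port 0 lets the OS pick a free port, which avoids clashes when tests run in parallel on a CI server.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical minimal stub server: canned responses keyed by request path.
class StubHttpServer implements AutoCloseable {
    private final HttpServer server;
    private final Map<String, String> stubs = new ConcurrentHashMap<>();
    private final HttpClient client = HttpClient.newBuilder()
            .version(HttpClient.Version.HTTP_1_1)
            .build();

    private StubHttpServer(HttpServer server) {
        this.server = server;
        server.createContext("/", exchange -> {
            String body = stubs.get(exchange.getRequestURI().getPath());
            int status = body == null ? 404 : 200;
            byte[] bytes = (body == null ? "no stub" : body).getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(status, bytes.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(bytes);
            }
        });
        server.start();
    }

    /** Starts the stub on 127.0.0.1; port 0 asks the OS for any free port. */
    static StubHttpServer start() {
        try {
            return new StubHttpServer(HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** "Teaches" the stub: any request to this path gets this body with status 200. */
    StubHttpServer stub(String path, String body) {
        stubs.put(path, body);
        return this;
    }

    URI baseUri() {
        return URI.create("http://127.0.0.1:" + server.getAddress().getPort());
    }

    /** Convenience client for tests: sends a GET and returns "status body". */
    String get(String path) {
        HttpRequest request = HttpRequest.newBuilder(baseUri().resolve(path)).GET().build();
        try {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            return response.statusCode() + " " + response.body();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    @Override
    public void close() {
        server.stop(0); // stop immediately; tests should not wait for idle connections
    }
}
```

In a real test, the system under test would be configured with `baseUri()` instead of the real external service's address; WireMock and Mountebank add request matching, recording, verification and proxying on top of this basic idea.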

The advantages of Mountebank, however, are its support for lower-level protocols (WireMock works only with HTTP) and its suitability for a “zoo” of different technologies (there are Mountebank client libraries for different languages).

Kirill Tolkachev. Test and cry with Spring Boot Test

And again Java, microservices and JUnit 5. Kirill is another speaker well known in the Java community from the Joker and JPoint conferences who spoke at Heisenbug for the first time.

This talk is a revised version of last year's talk “The Curse of Spring Test”, with examples updated for JUnit 5 and Spring Boot 2. It examines in depth various practical problems of configuring Spring Boot tests for component and microservice testing. For example, I was impressed by the trick of placing an empty `@SpringBootConfiguration StopConfiguration` class in the right place in the source tree to stop configuration scanning, as well as the use of `@MockBean` and `@SpyBean` instead of plain mocks. Like the other talks by Kirill and Yevgeny Borisov, this is material worth coming back to when using the Spring Framework in practice.

Andrey Karpov. What static analyzers can do that programmers and testers can't

Static code analysis is useful. According to the canons of Continuous Delivery, it should be the very first stage of the delivery pipeline, filtering out code with problems that can be detected just by “reading” the code. Static analysis is good because it is fast (much faster than running tests) and cheap (it requires no extra effort from the team in the form of writing tests: all the checks have already been written by the analyzer's authors).

Andrey Karpov, one of the founders of the PVS-Studio project (familiar to Habr readers from his blog), built his talk around examples of bugs that PVS-Studio found in the code of well-known products. PVS-Studio itself is a polyglot product: it supports C, C++, C# and, most recently, Java.
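To illustrate what “reading” the code means, here is a toy check for one classic kind of bug that analyzers catch: comparing an expression with itself, such as `x == x`, which is almost always a typo. It is a regex over source lines, not how PVS-Studio or any real analyzer works (real tools parse the code into a syntax tree and track types and data flow), and the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical toy check: flags "expr == expr" / "expr != expr" on a single source line.
class SelfComparisonCheck {
    // Same dotted identifier on both sides of == or !=. The lookbehind and lookahead stop
    // partial matches such as "obj.x == x" or "foo == foo.bar" from being reported.
    private static final Pattern SELF_COMPARISON = Pattern.compile(
            "(?<![\\w.])([A-Za-z_][\\w.]*)\\s*(?:==|!=)\\s*\\1(?![\\w.(])");

    /** Returns 1-based numbers of suspicious lines. Ignores strings, comments and real syntax. */
    static List<Integer> check(List<String> sourceLines) {
        List<Integer> hits = new ArrayList<>();
        for (int i = 0; i < sourceLines.size(); i++) {
            if (SELF_COMPARISON.matcher(sourceLines.get(i)).find()) {
                hits.add(i + 1);
            }
        }
        return hits;
    }
}
```

The check never runs the program, which is why this kind of analysis is fast; the price is that a toy like this one has both false positives (matches inside string literals) and false negatives (method calls such as `a() == a()`).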

Although these examples were interesting and clearly showed the benefits of static analysis, in my opinion Andrey's talk had some shortcomings.

First, the talk was built solely around the PVS-Studio product (whose “average price tag is $10,000”, according to the speaker). But it would have been worth mentioning that many languages have mature open-source static analysis tools. For Java alone there are the free Checkstyle and SpotBugs (the successor of the frozen FindBugs project), and the IntelliJ IDEA analyzer, which can be run separately from the IDE to produce a report, has made tremendous progress.

Second, when talking about static analysis, I think it is always worth mentioning the fundamental limitations of the method. Not everyone studied the theory of algorithms at university or is familiar with the halting problem, for example.
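A compact illustration of this limitation: whether the loop below terminates for every positive `n` is the Collatz conjecture, which is still unproven, so no analyzer can currently prove that the `return` statement is reached for all inputs. More generally, the halting problem shows that no analyzer can decide termination for arbitrary programs, so every real tool has to trade off: it either misses some bugs or reports some false alarms. `BigInteger` is used so that integer overflow does not change the question; the class name is hypothetical.

```java
import java.math.BigInteger;

// Hypothetical example: counts Collatz steps from n down to 1.
class CollatzExample {
    private static final BigInteger THREE = BigInteger.valueOf(3);

    static long collatzSteps(BigInteger n) {
        if (n.signum() <= 0) {
            throw new IllegalArgumentException("n must be positive");
        }
        long steps = 0;
        // Nobody has proven that this loop ends for every positive n, so an analyzer
        // cannot prove the return below is always reached.
        while (!n.equals(BigInteger.ONE)) {
            n = n.testBit(0) ? n.multiply(THREE).add(BigInteger.ONE) : n.shiftRight(1);
            steps++;
        }
        return steps;
    }
}
```

For example, 6 reaches 1 in eight steps (6, 3, 10, 5, 16, 8, 4, 2, 1), but no finite number of such examples settles the question for all inputs.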

Finally, the talk did not touch at all on the problems of introducing static analysis into an existing code base, which still keep many people from using analyzers regularly on their projects. For example, we ran an analyzer on a large legacy project and got 100,500 warnings. There is neither time nor effort to fix them on the spot, and massive changes to the code carry risks. What should we do with them, and how can static analysis work as a quality gate? We discussed this problem with Andrey in the discussion area, but the talk itself did not address it.
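One common answer to this question, not specific to any particular tool, is a baseline: record the warnings that already exist and fail the build only on warnings that are not in that record, so the legacy debt stays frozen while new code is held to the standard. A minimal sketch follows; the fingerprint format and class name are assumptions. Line numbers are deliberately left out of the fingerprint, because they shift whenever unrelated code above a warning is edited.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical baseline ("known warnings") quality gate.
class BaselineGate {
    /** Identity of a warning; the line number is left out on purpose because it shifts with edits. */
    static String fingerprint(String file, String ruleId, String message) {
        return file + "|" + ruleId + "|" + message;
    }

    /** Warnings from the current run that are not in the baseline, in report order. */
    static Set<String> newWarnings(Set<String> baseline, List<String> current) {
        Set<String> fresh = new LinkedHashSet<>(current);
        fresh.removeAll(baseline);
        return fresh;
    }

    /** The gate: pass only if the current run introduced no new warnings. */
    static boolean passes(Set<String> baseline, List<String> current) {
        return newWarnings(baseline, current).isEmpty();
    }
}
```

The baseline itself would be committed to the repository and shrink over time as old warnings are fixed; a production version would also count duplicate warnings per file rather than collapsing them into one fingerprint.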

In general, I wish Andrey and his team success. Their product is interesting, and the idea of claiming a niche in this area is very bold.

***

I will not say anything about the closing keynotes of the first and second days: both were one-person shows that you simply have to watch. Describing them would be like retelling a rock band's performance in words.

In my review a year ago, I tried to convey the general atmosphere of the conference and described what happened in the discussion areas, at lunch and at the party, so I will not repeat myself.

In conclusion, I would like to thank the organizers for another great conference. As far as I understood, interest in the conference somewhat exceeded expectations: there was some overbooking, and there weren't even enough souvenirs for everyone. But everyone definitely got the more important things: interesting talks, space for discussion, food and drinks. I look forward to the next meetings!