Scaling TLS

Hi, Habr! This is a talk from one of the secondary halls of Highload++ 2016. Artyom Gavrichenkov (ximaera), Technical Director of Qrator Labs, talks about encryption in applications, including high-load projects. The video and slides are at the end of the post, thanks to Oleg Bunin.

Hello! We are continuing the session on HTTPS, TLS, SSL and all that.

What I am going to talk about is not a tutorial. As my university databases lecturer, Sergey Dmitrievich Kuznetsov, used to say: "I will not teach you how to configure Microsoft SQL Server, let Microsoft do that; I will not teach you how to configure Oracle, let Oracle do that; I will not teach you how to configure MySQL, do it yourself."

In the same way, I will not teach you how to configure NGINX: all of that is on Igor Sysoev's website. What we will discuss is a general view of the problems that arise when deploying encryption on public services, and of the ways to solve them.

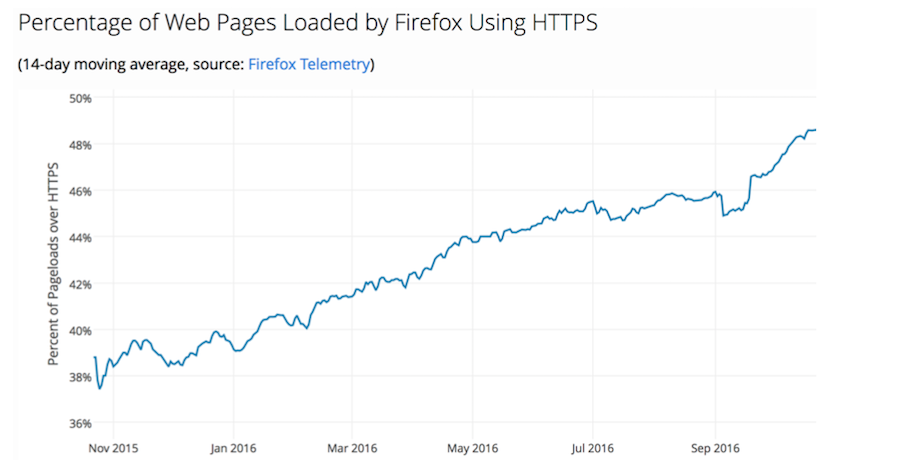

A short excursion into very recent history: as you know, encryption of public services has lately become a much bigger topic, and the slide above shows roughly how that happened.

In my opinion, it all began in 2010 with Google's SPDY protocol. De jure, SPDY could work without encryption, but de facto its implementations depended on the NPN (Next Protocol Negotiation) extension, which existed in TLS and had no counterpart in plain TCP. So in practice SPDY did not work without encryption.

For a long time the industry argued: "Does SPDY help?", "Does it make things faster?", "Does it reduce load or not?" Meanwhile, many managed to deploy it, and many people ended up with encryption deployed one way or another.

Four years later, in 2014, Google Search staff announced on their blog that serving a site over HTTPS would from then on improve its ranking in search results. This was a serious push: not everyone thinks about user safety, but almost everyone thinks about their place in search results.

From that moment on, HTTPS was adopted even by those who had never thought about it before and had not seen it as their problem.

A year later, the HTTP/2 standard was finalized and published, and it again did not require running only on top of TLS. De facto, however, no modern browser supports HTTP/2 without TLS. And finally, a number of companies, with the support of the Electronic Frontier Foundation, launched the non-profit project Let's Encrypt, which by 2016 was issuing certificates to everyone automatically, quickly and free of charge.

This is roughly what the graph of active Let's Encrypt certificates looks like (the scale is in millions). Adoption is very active; the next statistic comes from Firefox telemetry.

From November 2015 to November 2016, the share of pages that Firefox users loaded over HTTPS rather than HTTP grew by a quarter to a third. Many thanks to Let's Encrypt for this; by the way, they launched (and have already completed) a crowdfunding campaign, so do not pass it by.

This is only one side of the story; one cannot talk about it without mentioning the second thread.

It concerns the revelations of a well-known Booz Allen Hamilton employee. Who knows who I am talking about? Edward Snowden, yes.

At the time, this served as a kind of marketing for HTTPS: "They are watching us, let's protect our users." After revelations were published that the NSA had apparently intercepted internal traffic in Google's data centers, the company's engineers wrote some obscene words about the US government on their blog and encrypted all internal communications as well.

About a month later, the post about taking HTTPS into account in ranking was published; conspiracy theorists may see a connection between these events.

Since then, that is, since 2013-2014, two factors have been driving TLS adoption, and it has become genuinely popular. So popular, in fact, that the infrastructure and the encryption libraries turned out not to be ready for it.

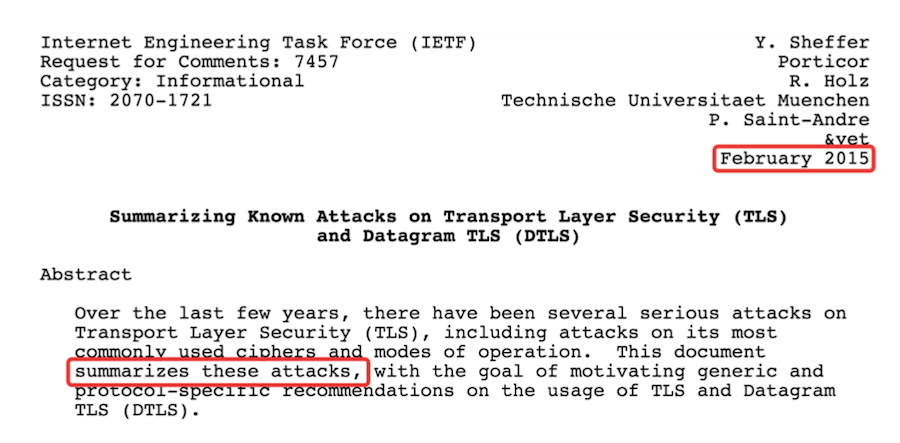

In 2014, two critical vulnerabilities were discovered in what was then the most popular SSL library. Some people even tried switching to another popular alternative library, which turned out to be even worse. In February 2015, the IETF released a separate RFC describing the known attacks on TLS and Datagram TLS (TLS over UDP). This is documentary evidence of the IETF working group's optimism: in February 2015 they announced that "we will now summarize all the attacks", and since then there have been three more.

Moreover, the first of them was found just 20 days after the RFC was published. What this story is really about is a term from project management: "technical debt".

Roughly speaking: suppose you are solving a problem and realize that solving it properly will take six months, but you only have three, and with a hack here and there you can more or less make it in three. At that moment you are, in effect, borrowing time from the universe. The hack you put in will sooner or later fall apart, and you will have to fix it. If there are many such hacks, a "technical default" follows: you simply cannot do anything new until you have sorted out everything old.

In the late 2000s, encryption was largely built by enthusiasts. But when people started using it for real, it turned out there were a lot of flaws that needed fixing. And the main flaw is the lack of awareness in the target audience, that is, you, of how it all works and how to set it up.

Let's go over the main points, with an eye on large setups.

Let's start with the basics. To bring up a service you need to configure it; to configure it you need a certificate; to have a certificate you need to buy one. From whom?

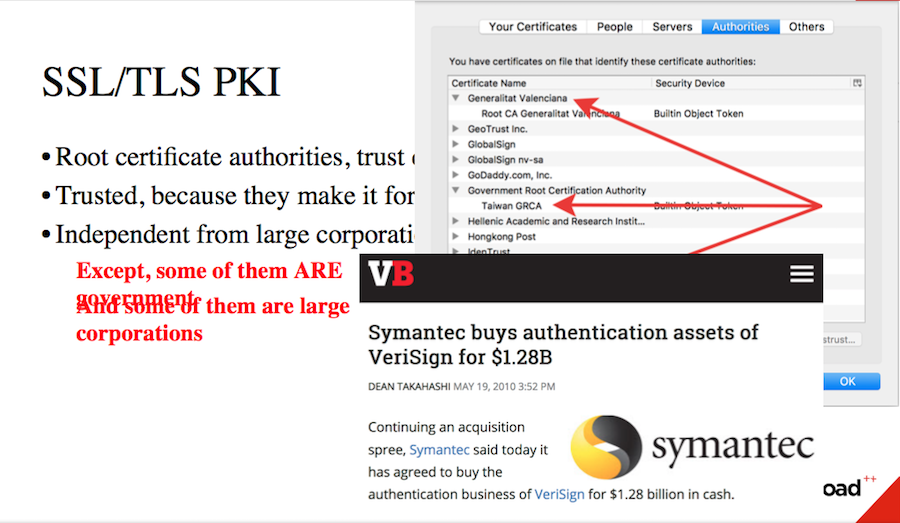

The SSL and TLS protocols include, of course, a public key infrastructure. There is a set of certification authorities, and if you run a service, it must have a certificate signed either by a certification authority or by someone whose certificate a certification authority has signed. That is, there must be an unbroken chain from you to someone the browser and the user's device trust. Whom do they trust? For example:

I downloaded vanilla Firefox, and it ships about 100 certificates of trusted certificate authorities. So you have a wide choice when buying a certificate.

What are these hundred or so companies?

In theory, these companies can be trusted because signing certificates and maintaining security is their core business. Cutting corners here is hard, since the company would simply go bankrupt: a simple economic model. They were supposed to be relatively small companies, independent of large corporations and of governments. Well, except that some of them are governments, in particular the Japanese, the Taiwanese and the Valencian.

And others were bought by large corporations for enormous sums.

So today we cannot say with confidence how a given certification authority will behave. It still has to protect its interests as a market player, as a certification authority. But if you are a government body, or belong to a large organization, and a large client comes to that organization wanting something done for big money, you will be ordered to do it. And the question is what you can do about it.

How did the original model end up working so strangely? Why do companies belonging, for example, to China's Ministry of Communications (which your browser trusts) still remain certification authorities?

The point here is that it is quite difficult to stop being a certification authority.

An illustrative story: a certification authority called WoSign. If I am not mistaken, since 2009 it had been a trusted certification authority in all popular browsers and operating systems. It became a CA in a fairly proper way: it passed an Ernst & Young (now EY) audit, ran an aggressive marketing campaign and handed out certificates left and right, and everything was fine.

In 2016, Mozilla Foundation staff wrote a whole page on their wiki listing the various violations WoSign had committed at different times. Most of these violations date from 2015-2016.

What were they? As you know, you cannot get a certificate for someone else's domain. You must somehow prove that you own the domain: as a rule, you either receive something by physical mail or place a certain token on the website at a strictly defined address. WoSign issued at least one certificate for AliCDN that AliExpress itself had not requested (they use Symantec's services); nevertheless, the certificate was issued and published.

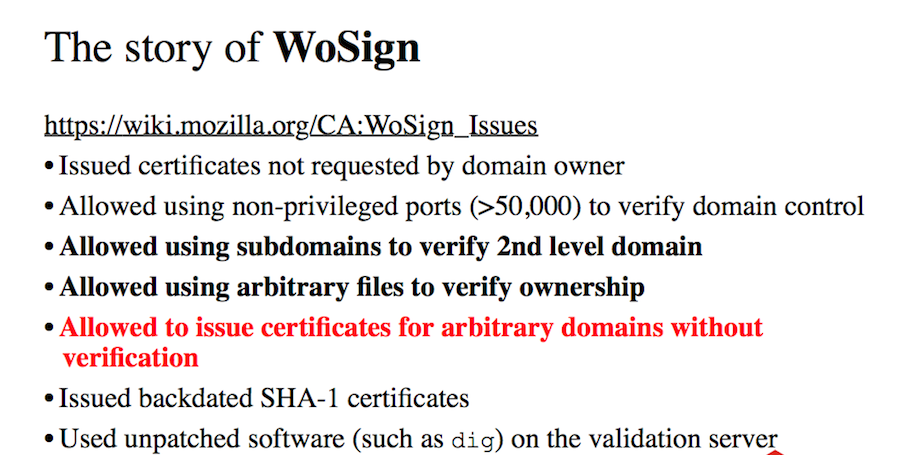

WoSign allowed verification over unprivileged ports, so any user of a server (not just its administrator) could obtain a certificate for any domain hosted on that server. WoSign also accepted third-, fourth- and deeper-level domains as proof of control over a second-level domain. That is how researchers testing WoSign's robustness obtained a valid certificate for GitHub.

WoSign also let you use any file, that is, specify an arbitrary path on the site where the token would be placed; this is how researchers obtained signed certificates for Google and Facebook.
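For contrast, here is a minimal Python sketch of the checks WoSign skipped, assuming an HTTP-01-style validation like the one the ACME protocol (RFC 8555, used by Let's Encrypt) standardizes: the token lives under a fixed `/.well-known/acme-challenge/` path, is fetched over port 80, from exactly the requested host. The function name and policy are hypothetical and cover only the URL; a real CA checks far more (token contents, DNS, CAA and so on).

```python
from urllib.parse import urlsplit

# Hypothetical policy loosely modeled on ACME HTTP-01 (RFC 8555).
CHALLENGE_PREFIX = "/.well-known/acme-challenge/"


def is_acceptable_validation_url(url: str, requested_domain: str) -> bool:
    """Return True only if `url` is a place where a token proves control of
    `requested_domain` itself."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return False
    # Only the default port: ports 1024 and above can be bound by any
    # unprivileged user on a shared server.
    if parts.port not in (None, 80):
        return False
    # Exact host match: control of sub.example.com says nothing about
    # control of example.com.
    if (parts.hostname or "") != requested_domain.lower().rstrip("."):
        return False
    # Fixed location: an arbitrary path may be writable by ordinary users
    # (uploads, user pages and so on).
    if not parts.path.startswith(CHALLENGE_PREFIX):
        return False
    token = parts.path[len(CHALLENGE_PREFIX):]
    return token != "" and "/" not in token
```

Each rejected case corresponds to one of the WoSign loopholes above: a non-standard port, a subdomain standing in for its parent, and a freely chosen path.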

Well, in principle all of this is trivia, because under certain conditions WoSign let you obtain any certificate for any domain with no verification at all (laughter in the hall).

After that, the smaller issues fade into the background, but in total Mozilla listed about 13 complaints. WoSign backdated certificates and ran software on its validation servers that had gone unpatched for years, including the security-critical parts.

WoSign bought StartCom and did not disclose the acquisition, which violates the Mozilla Foundation's policies, but compared to everything else that is not so painful.

What is the outcome?

In September of this year, Chrome and Firefox stopped trusting WoSign for a year, but Microsoft and the Windows operating system still trust it.

You can’t just stop being a certification authority.

And WoSign is, in fact, a small player. Take Verisign (now Symantec): you cannot simply withdraw the Symantec root certificate, because it has signed AliExpress, Google and a great many others, and users would have trouble reaching those resources. So revoking such a certificate at the snap of a finger, as was once the case, is impossible.

As the expression goes, large certification authorities are "too big to fail".

There is an alternative to TLS's PKI (Public Key Infrastructure): it is called DANE and is built around DNSSEC, but it has so many infrastructure problems, including latency and deployment, that there is no point discussing it now. We have to live with what we have somehow.

So how do we live with this?

I promised to give advice on how to choose Certificate Authority. Based on the foregoing, my advice is as follows:

Get it for free; there will not be much difference.

If there is an API that allows you to automate the issuance and deployment of certificates - great.

The second useful piece of advice (we are talking about a distributed setup with many load balancers): get not one certificate but two or three. Buy one from Verisign, one from the Polish Unizeto, and get one free from Let's Encrypt. This will not noticeably increase OPEX (operating expenses), but it will help a lot in unforeseen situations, which we will talk about later.

The obvious question: "Let's Encrypt does not issue Extended Validation (EV) certificates, the ones with stricter verification and a nice green bar in the browser. What should I do?" In fact, this is security theater: few people look at these green indicators, and the Windows Phone mobile browser cannot even display one. Users do not check whether the indicator is there at all, let alone its color.

So if you are not a bank and your security audit does not force you to buy an EV certificate, you will get no real benefit from it.

It is better to buy certificates with a short validity period, because if something happens to your certificate authority, you will have room to maneuver. Diversify.

Let's talk more about certificate lifetime. Long-lived certificates (one, two or three years) have a number of advantages. For example, you are spared the pain of renewing the certificate every 2-3 months: you install it once and forget about it for three years. In addition, certification authorities usually give significant discounts on certificates bought for one, three or five years.

That is where the pros end and the cons begin.

Suppose something went wrong with your certificates: for example, you lost the private key and it ended up with someone else. TLS has mechanisms for revoking a certificate; there are two of them, CRL and OCSP. The problem is that both currently work in soft-fail mode: if the browser cannot reach the CRL server and check the certificate's status, then "to hell with it", the certificate is assumed to be fine.

Adam Langley from Google compared soft-fail CRL and OCSP to a car seat belt that is constantly in the way and snaps when you crash. It is pointless, because the same attacker who can mount a man-in-the-middle attack with a stolen key can also block access to CRL and OCSP, so these mechanisms simply will not work.

Hard-fail CRL and OCSP checking, which shows an error when the CRL or OCSP server is unavailable, is practically unused today.
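The difference between the two modes fits in one function. In this hypothetical Python sketch, `None` stands for "the CRL/OCSP server could not be reached", which is exactly the situation a man-in-the-middle can create by blocking it.

```python
from enum import Enum
from typing import Optional


class RevocationStatus(Enum):
    GOOD = "good"
    REVOKED = "revoked"


def accept_certificate(status: Optional[RevocationStatus], hard_fail: bool) -> bool:
    """Decide whether to accept a certificate given its revocation status.

    `status` is None when the revocation server did not answer.
    """
    if status is RevocationStatus.REVOKED:
        return False
    if status is None:
        # Soft fail: no answer is treated as "probably fine".
        # Hard fail: no answer means no connection.
        return not hard_fail
    return True
```

Under soft fail, an attacker holding a stolen, revoked key only has to block the responder to get `True`: the seat belt that snaps exactly when it is needed.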

Back to the pain of frequent renewals: yes, it is inevitable. But to be honest, you need to automate certificate deployment anyway, because replacing certificates by hand is the same kind of technical debt.
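With short-lived certificates, the first piece of automation is noticing when one is about to expire. A minimal Python sketch, assuming the expiry date is the `notAfter` string as returned by `ssl.SSLSocket.getpeercert()` (format like `Jun 15 12:00:00 2030 GMT`); the 30-day threshold and function names are arbitrary choices for illustration.

```python
import ssl
import time
from typing import Optional

SECONDS_PER_DAY = 86400


def days_left(not_after: str, now: Optional[float] = None) -> float:
    """Days until the certificate's notAfter moment (negative if expired)."""
    now = time.time() if now is None else now
    # cert_time_to_seconds parses the "%b %d %H:%M:%S %Y GMT" format.
    return (ssl.cert_time_to_seconds(not_after) - now) / SECONDS_PER_DAY


def needs_renewal(not_after: str, threshold_days: float = 30,
                  now: Optional[float] = None) -> bool:
    """True when fewer than `threshold_days` remain before expiry."""
    return days_left(not_after, now) < threshold_days
```

A scheduled job would run this against every balancer's certificate and trigger reissue and redeployment instead of paging a human.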

It will not bite you right away, but sooner or later problems will start.

What does automated certificate management give you? You can add, remove and change certificates in one move. You can issue them with a short validity period, use many keys, and set up client authentication with certificates. You can handle situations like "Oops, our certification authority has revoked an intermediate certificate" easily and quickly. This is not a theoretical story: it happened in October 2016.

One of the largest CAs, GlobalSign, with a long history and a reputation for reliability, carried out maintenance in October 2016 to reorganize its root and intermediate certificates... and accidentally revoked all its intermediate certificates. The engineers found the problem quickly, but keep in mind that every browser of every user queries CRL and OCSP, so this is a heavily loaded service. Like everyone else, GlobalSign serves it through a CDN, in its case Cloudflare, and for some reason Cloudflare could not purge its cache quickly, so incorrect revocation lists kept spreading across the Internet for several hours.

And they got cached in browsers. Browsers and operating systems cache CRL and OCSP data for about four days. So for four days users trying to reach Wikipedia, Dropbox, Spotify or the Financial Times saw an error; all these companies were affected. There are faster ways out, of course, and they usually come down to switching to another certification authority and replacing the certificate; then everything is fine. But you still have to figure that out first.

Note that when everything depends on a CDN, the damage depends on traffic, that is, on whether the wrong answer got cached on a given CDN node. High-traffic sites suffer more, because for them that probability is much higher.

We said that "the fix is simple: replace the certificate", but first you need to understand what is happening. What does a situation with revoked CA certificates look like from the resource administrator's point of view?

It is prime time, everything looks fine, load is below average, there are no problems, monitoring shows nothing... and yet traffic has dropped by 30%. The problem is distributed and very hard to pin down: you depend on a vendor and control neither the CRL nor OCSP, so something unknown is going on and you do not know what to do.

What helps to find such problems?

First, if you have many load balancers with certificates from different vendors (as we discussed), you will see a correlation: the balancers with GlobalSign certificates have problems, while all the others are fine. That is the connection.
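The correlation idea can be sketched in a few lines of Python. Assume, hypothetically, that each balancer reports how many TLS handshakes it started and completed, plus which CA issued its certificate; grouping by CA then makes a GlobalSign-style incident stand out. The names, the threshold factor and the floor are illustrative choices, not a tested alerting rule.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# (balancer, issuing_ca, handshakes_started, handshakes_completed)
Sample = Tuple[str, str, int, int]


def failure_rate_by_ca(samples: Iterable[Sample]) -> Dict[str, float]:
    """Share of TLS handshakes that did not complete, aggregated per CA."""
    started: Dict[str, int] = defaultdict(int)
    completed: Dict[str, int] = defaultdict(int)
    for _balancer, ca, s, c in samples:
        started[ca] += s
        completed[ca] += c
    return {ca: 1 - completed[ca] / started[ca] for ca in started if started[ca]}


def suspicious_cas(rates: Dict[str, float], factor: float = 3.0,
                   floor: float = 0.01) -> List[str]:
    """CAs whose failure rate is far above the best-behaved CA's rate."""
    baseline = min(rates.values())
    limit = max(factor * baseline, floor)
    return sorted(ca for ca, rate in rates.items() if rate > limit)
```

With a single CA on every balancer there is nothing to compare against, which is one more argument for the two or three certificates from different vendors suggested earlier.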

Keep in mind that as of November 2016 there were no distributed services for checking CRL and OCSP, that is, no service both distributed enough to monitor Cloudflare's nodes and able to query CRL and OCSP for an arbitrary domain.

But we still have tcpdump, which also shows the problem clearly enough: sessions break off around the TLS ServerHello, so the problem is obviously somewhere in TLS, and at least it is clear where to look.

An extra benefit of having different keys on different machines: if one of them leaks, you will at least know where it leaked from, so you can do a root cause analysis.

One deployment philosophy holds that a private key should never leave the machine it was generated on. This is "technological nihilism", but the approach does exist.

Returning to the GlobalSign story: TLS is still at the cutting edge of technology. We still lack adequate tools and a good enough understanding of why such problems arise.

You can spend hours looking for the source of such a problem, and once you find it, it has to be fixed very quickly. Hence: automate certificate management.

This requires either a certification authority that provides an API, such as Let's Encrypt, which is a good choice if you do not need a wildcard certificate. In 80% of cases you do not: a wildcard is primarily a way to save money by buying one certificate instead of 20, and since Let's Encrypt issues certificates for free, you probably do not need one.

There are also tools for automating certificate management with other certificate authorities, such as SSLmate and similar services. And if none of this suits you, you will have to write your own plugin for Ansible.

So, 25 minutes into the talk, we are on slide 50, and we have finally managed to buy a certificate (laughter in the hall).

How do we configure it? Which points deserve attention?

First, HTTP defines a header called Strict-Transport-Security, which tells clients that have once reached the site over HTTPS to always use HTTPS for it in the future and never HTTP. This header should be present on every server that supports HTTPS, simply because opportunistic encryption of the "if it happens to be encrypted, fine; if not, also fine" kind does not work. Tools like SSLstrip are already far too popular. Users will not check whether the padlock is there; they will just use the page, so their browser has to remember in advance that the site must be reached over HTTPS. HTTPS only makes sense when it is enforced.
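For reference, here is a small Python sketch of building and reading a Strict-Transport-Security value as defined in RFC 6797, where `max-age` (in seconds) is required and `includeSubDomains` is optional. The helper names and the one-year default are illustrative assumptions. Per the RFC, browsers ignore the header when it arrives over plain HTTP, so it only takes effect on HTTPS responses.

```python
def hsts_header(max_age: int = 31536000, include_subdomains: bool = True) -> str:
    """Build a Strict-Transport-Security value (one year by default)."""
    value = f"max-age={max_age}"
    if include_subdomains:
        value += "; includeSubDomains"
    return value


def parse_hsts(value: str) -> dict:
    """Parse the directives this sketch cares about; max-age is mandatory."""
    result = {"max_age": None, "include_subdomains": False}
    for directive in value.split(";"):
        name, _, arg = directive.strip().partition("=")
        name = name.strip().lower()  # directive names are case-insensitive
        if name == "max-age":
            result["max_age"] = int(arg.strip().strip('"'))
        elif name == "includesubdomains":
            result["include_subdomains"] = True
    if result["max_age"] is None:
        raise ValueError("Strict-Transport-Security requires max-age")
    return result
```

A check like `parse_hsts` is handy in monitoring: fetch each public endpoint over HTTPS and alert when the header is missing or `max-age` has shrunk.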

Another useful header is Public-Key-Pins (HTTP Public Key Pinning). We said earlier that it is hard to trust certification authorities, so when a user visits the site, we can give them a list of public key hashes that may be used to identify this site. Certificates with any other keys cannot belong to this site; if the browser sees one, it is either theft or some kind of fraud.

Accordingly, Public Key Pinning must list every key that will be valid during the pin's lifetime: if you set it for 30 or 60 days, it must include the hashes of all keys that will be used on the site within those 30 or 60 days, including future certificates that you only issue now and will start using a month later.
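As RFC 7469 defines it, each `pin-sha256` value is the base64 of the SHA-256 hash of the key's DER-encoded SubjectPublicKeyInfo, and the header must also carry at least one backup pin that is not in the currently served chain. A minimal Python sketch, assuming you already have the SPKI bytes for the current and future keys (extracting them needs OpenSSL or a library such as `cryptography`, not shown); the function names are hypothetical.

```python
import base64
import hashlib
from typing import Iterable


def spki_pin(spki_der: bytes) -> str:
    """pin-sha256 value: base64(SHA-256(DER-encoded SubjectPublicKeyInfo))."""
    return base64.b64encode(hashlib.sha256(spki_der).digest()).decode("ascii")


def hpkp_header(spki_ders: Iterable[bytes], max_age: int) -> str:
    """Build a Public-Key-Pins value covering every key valid during max_age."""
    pins = []
    for der in spki_ders:
        pin = spki_pin(der)
        if pin not in pins:  # keep order, drop duplicates
            pins.append(pin)
    # RFC 7469 requires a backup pin, i.e. at least two distinct keys.
    if len(pins) < 2:
        raise ValueError("pin the current key and at least one future/backup key")
    parts = [f'pin-sha256="{p}"' for p in pins]
    parts.append(f"max-age={max_age}")
    return "; ".join(parts)
```

Because the list must include keys that are not deployed yet, generating it from the same inventory that drives certificate issuance is much safer than maintaining it by hand.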

Again, doing this by hand is very difficult and a big pain, so automation is needed.

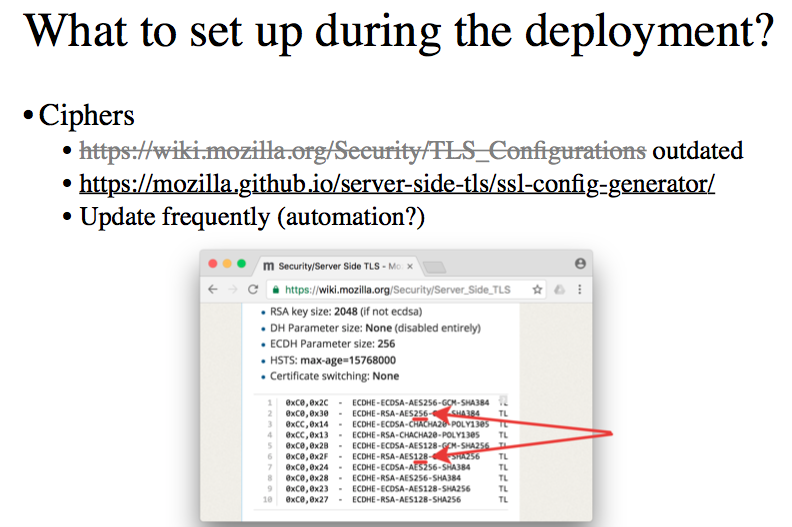

Another problem is ciphers. On the Mozilla wiki there is a link to a configuration generator for almost all popular web servers: NGINX, Apache, HAProxy and lighttpd (if anyone still uses it). Check it periodically, check it often; perhaps this process should be automated somehow. If you have a cryptographer on staff, then everything is fine, although it is not clear what you are doing here. If you do not, the Mozilla Foundation does. All the cipher changes that come out of cryptographers' work on how ciphers can be vulnerable end up on this page.

There is a golden rule of cryptography: "Do not invent your own cryptography." It applies not only to development but also to administration.

There are many non-obvious things. For example, this is the cipher list Mozilla recommends by default. Note the underlined part: two otherwise identical ciphers, both of which the server should support and offer to the client. The only difference is that one uses AES with a 256-bit key and the other with a 128-bit key.

If a 128-bit key is reliable enough, why do we need 256? And if we have 256, why do we need 128? Who knows why?

Let me tell you a story about Rijndael; the name sounds like something out of Tolkien.

The AES encryption standard was commissioned by the US federal government in 1998: a competition was organized, and it was won by the Belgian cipher Rijndael (the word is so strange because it is made up of the names of its two inventors, both Belgian). In 2001 it was finally approved as the standard and came into use, among others, at the Department of Defense and in the US military. And here the cipher's developers ran into the US military's requirements, which are, to put it mildly, peculiar.

What were they?

The military demanded that the cipher have three security levels. Three different levels of security. The weakest would be used for the least important data, and the strongest for the most important (Top Secret). Faced with this, the cryptographers read the tender requirements carefully and found that nothing required the weakest level to actually be weak. So they delivered a cipher with three key lengths, where even the weakest (128-bit) cannot be broken in the foreseeable future.

So AES-256 is completely redundant; it exists because the military wanted it so badly. AES-128 is quite sufficient today, but this matters very little, because most modern processors support AES in hardware out of the box, so we do not worry about it. I tell this story to illustrate that the cipher list published by the Mozilla Foundation contains things that may not be obvious to you.
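As an illustration, here is how a pair of AES-128/AES-256 GCM suites could be applied with Python's standard `ssl` module, assuming OpenSSL cipher names. The four-suite string below is an illustrative excerpt, not Mozilla's actual recommended list; take the real one from the generator. Note that `set_ciphers()` only affects TLS 1.2 and below; OpenSSL configures TLS 1.3 suites separately.

```python
import ssl

# Illustrative excerpt: forward-secret AEAD suites, AES-128 and AES-256
# side by side. Not a copy of Mozilla's list.
CIPHERS = ":".join([
    "ECDHE-ECDSA-AES128-GCM-SHA256",
    "ECDHE-RSA-AES128-GCM-SHA256",
    "ECDHE-ECDSA-AES256-GCM-SHA384",
    "ECDHE-RSA-AES256-GCM-SHA384",
])


def make_server_context() -> ssl.SSLContext:
    """Server context restricted to the suites above (for TLS <= 1.2)."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.set_ciphers(CIPHERS)  # raises ssl.SSLError if nothing matches
    return ctx
```

Listing the enabled suites, for example via `{c["name"] for c in make_server_context().get_ciphers()}`, is an easy way to diff your actual configuration against a freshly generated recommendation.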

Or here is another example.

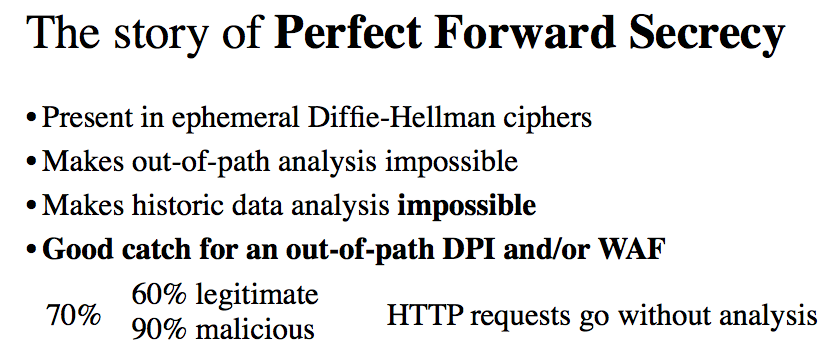

This shows how non-obvious modern encryption can be to a layperson. Session key exchange algorithms can have a property called Perfect Forward Secrecy (it has no good Russian translation, so everyone uses the English term). Many ciphers have this property, including ephemeral versions of the Diffie-Hellman protocol, which are very common.

It means that once a session key has been generated, it cannot be recovered or cracked using the server's long-term private key, the one that matches the public key recorded in the certificate.
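To make the idea concrete, here is a toy ephemeral Diffie-Hellman exchange in Python. It is deliberately insecure: the modulus 2**127 - 1 is prime but far too small, and the generator 3 is an arbitrary choice; real TLS uses standardized groups (RFC 7919) or elliptic curves such as X25519. What it shows is the structure: both sides derive the same session key from fresh per-session secrets, and the certificate's long-term key (which in real TLS only signs the server's ephemeral share) never enters the computation.

```python
import secrets
from typing import Tuple

# Toy parameters for illustration only; never use these in practice.
P = 2**127 - 1  # a Mersenne prime, much too small for real security
G = 3


def ephemeral_keypair() -> Tuple[int, int]:
    """Fresh secret for one session, plus the public share sent to the peer."""
    private = secrets.randbelow(P - 3) + 2  # uniform in [2, P - 2]
    return private, pow(G, private, P)


def session_key(my_private: int, peer_public: int) -> int:
    """Both sides compute G^(a*b) mod P from their own secret."""
    return pow(peer_public, my_private, P)
```

Once a session ends and both sides discard their `private` values, nothing stored on the server, including the certificate's private key, lets anyone recompute that session's key from recorded traffic.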

Perfect Forward Secrecy makes passive traffic analysis impossible, as well as so-called out-of-path analysis, that is, analysis of recorded traffic history. Many DPI and WAF solutions stumble on this, because about 70% of HTTPS requests use some algorithm that supports PFS and pass through these DPI solutions like a hot knife through butter, without any analysis. Moreover, of that 70% of traffic, only about 10% is legitimate; the rest is bots and attackers who know how to get past DPI. Perhaps by now someone has already guessed where I am going with this.

If the state demands that you disclose your service's private keys so that it can decrypt traffic collected and stored three months earlier, then, thanks to PFS and Diffie-Hellman, all we can do is wish it good luck.

Protocol Summary

1. There is no doubt that SSLv2 (version two) is dead. If anyone still has it, stop using it right now; it is a very sloppily written protocol.

2. SSLv3 and TLSv1.0 are just as dead and should not be used, except that IE6 does not support TLS at all; thank God few people use it today, but in principle such users still exist.

More importantly, a whole lot of TVs do not support TLS at all; they only support old versions of SSL. This has caused some turmoil around certification of payment card systems, PCI DSS. A little over a year ago, the PCI DSS Council decided that starting in June 2016, SSLv3 and early TLS (i.e. version 1.0) may not be used on services that process cardholder data.

The requirement is reasonable in principle, except that a bunch of vendors then grabbed their pitchforks and went to the PCI DSS Council saying: "In that case we cannot work with TVs or old smartphones; that is a huge amount of traffic, and there is nothing we can do about it ourselves."

In February 2016, the PCI DSS Council postponed this requirement until 2018 or 2019. Here we pause and sigh, because every version of SSL is vulnerable, and no version of TLS is supported by a whole lot of TVs, Korean ones in particular. It is a shame.

However, the TLS protocol continues to live and evolve (with the exception of version 1.0).
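As a sketch of this summary in Python's standard `ssl` module (assuming Python 3.7+): `SSLContext` already disables SSLv2 and SSLv3 by default, and raising `minimum_version` drops TLS 1.0. Choosing TLS 1.2 as the floor, which also drops TLS 1.1, is an assumption in line with "the current version is 1.2", not a PCI DSS requirement; recent Python versions already default to it, but setting it explicitly documents the policy.

```python
import ssl


def make_modern_context() -> ssl.SSLContext:
    """Server context that refuses SSLv2/SSLv3 and TLS 1.0/1.1."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # SSLv2 and SSLv3 are off by default in Python's SSLContext; this line
    # also rules out TLS 1.0 and TLS 1.1.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

For the TV and old-smartphone audience described above, the realistic option is a separate legacy endpoint with its own, clearly isolated policy rather than lowering the floor for everyone.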

The current version is 1.2, and 1.3 is under development. TLS may even be evolving too fast: TLS 1.2 makes it possible, under certain conditions, to make the server sign a specific token supplied by the client and to obtain that signature. That lets you claim: "We connected to this server over an encrypted connection this many times over such-and-such a period." That is exactly the primitive you need to build a blockchain, and indeed there is DDoSCoin, a proof-of-concept cryptocurrency; GitHub has a fairly detailed paper, and you can look at the code.

Finally, a few quick points.

OCSP stapling is a very useful thing. We already said that OCSP revocation checking is soft fail. With OCSP stapling, however, the server itself queries the CA's validation server, obtains the signed response, and then hands it to the client.

For high-load sites, certification authorities may even require this, since they have no interest in carrying that load themselves: carry it yourselves.

The problem is that in some cases OCSP stapling turns into a real hard fail: for example, if the certification authority's OCSP server is unavailable for a while and you cannot get a valid signed response from it, you get downtime, and you are out of luck. So before enabling stapling, gather data and understand who your current certification authority is and how its infrastructure is built.
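One way to soften that risk is to refresh the stapled response well before it expires and keep serving the last valid one while the CA's responder is down. The Python sketch below assumes a hypothetical `fetch` callable that queries the responder and returns the raw response plus its `nextUpdate` time as epoch seconds; parsing OCSP is out of scope, and this is not the logic of any particular web server.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Staple:
    der: bytes          # raw OCSP response to staple into the handshake
    next_update: float  # epoch seconds, from the response's nextUpdate


class StapleCache:
    """Hypothetical helper: refresh early, tolerate responder outages,
    never serve an expired response."""

    def __init__(self, fetch: Callable[[], Staple], refresh_margin: float = 3600):
        self._fetch = fetch
        self._margin = refresh_margin
        self._current: Optional[Staple] = None

    def get(self, now: Optional[float] = None) -> Optional[Staple]:
        now = time.time() if now is None else now
        cur = self._current
        if cur is None or now >= cur.next_update - self._margin:
            try:
                self._current = self._fetch()
            except OSError:
                pass  # responder unreachable: keep what we already have
        cur = self._current
        if cur is not None and now < cur.next_update:
            return cur
        return None  # no valid staple; the handshake goes without one
```

The refresh margin is the buffer the CA's outage has to exceed before clients notice anything, so its size is worth choosing based on the responder's track record.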

Next, when deploying TLS you need to understand that a TLS handshake is expensive and slow: the packet rate and the load go up, and pages open slowly. So even if for some reason you did not use keep-alive connections with a reasonable lifetime over plain HTTP, with TLS Adam Langley himself commands it.
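A back-of-the-envelope model of why keep-alive matters, under simplifying assumptions: one round trip for the TCP handshake and two for a full TLS 1.2 handshake, no session resumption, and CPU cost ignored. The helper is hypothetical and only counts connection-setup latency.

```python
def setup_latency_ms(requests: int, rtt_ms: float, keep_alive: bool,
                     tcp_rtts: int = 1, tls_rtts: int = 2) -> float:
    """Total connection-setup latency for `requests` sequential requests.

    Without keep-alive every request opens a new TCP+TLS connection;
    with keep-alive the setup cost is paid once.
    """
    per_connection = (tcp_rtts + tls_rtts) * rtt_ms
    connections = 1 if keep_alive else requests
    return connections * per_connection
```

With a 50 ms round trip, ten requests over fresh connections spend 1500 ms on setup alone, versus 150 ms over a single kept-alive connection, and the server performs ten handshakes instead of one.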

Another important thing, which I will not dwell on in detail: if you have any unencrypted content, you need to do something about it. If you are a large company with large infrastructure, you can go to your ad network and say: "Serve this to me encrypted." If you are a small service, you will not hear anything good in return.

Three final points to remember

- Use short-lived certificates

- Automate everything

- Trust the Mozilla Foundation: it will not steer you wrong