Tuning SQL Server 2012 for SharePoint 2013/2016. Part 1

Hello. My name is Lyubov Volkova, and I am a system architect in the business solutions development department. From time to time I write practical posts about Microsoft server products (for example, about monitoring SharePoint servers and about maintaining the content, service, and component databases of this platform).

This post is the first of two about an important aspect of administering SharePoint portals: tuning SQL Server for high performance. Careful planning, correct installation, and subsequent configuration of the SQL Server instance that stores the corporate portal's data are extremely important.

This post covers planning the SQL Server installation. The second part, to be published a little later, covers installing SQL Server and its subsequent configuration.

SharePoint uses a SQL server to store its data, which has its own data storage features. In this regard, the preparation of the disk subsystem at both the physical and logical levels, taking into account these features, will have a huge impact on the final performance of the corporate portal.

Microsoft SQL Server stores data in 8 KB pages, which are grouped into 64 KB extents (eight pages each). Learn more about this here. Accordingly, the disk subsystem should be configured so that extents are placed consistently at every physical and logical level of the disk subsystem.

The first step is RAID initialization. The array that will hold the databases should have a stripe size that is a multiple of 64 KB, preferably exactly 64 KB.

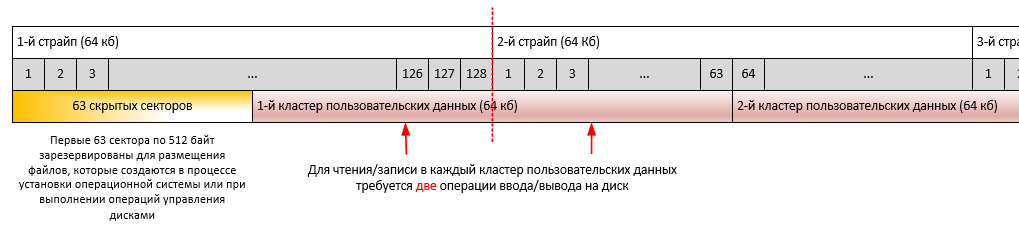

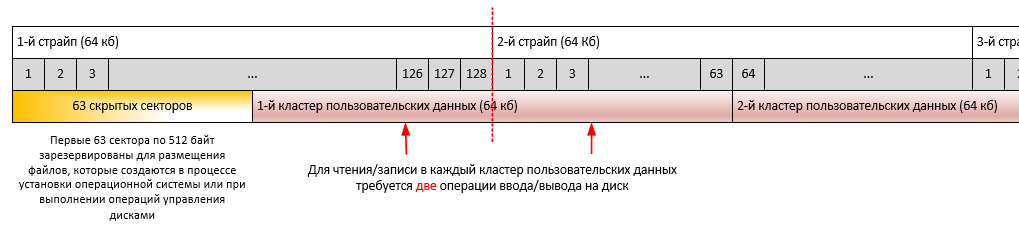

Aligning the file system with its physical placement on the disk array is very important for SQL Server performance. The procedure is described in detail here. Traditionally, the first 63 sectors of 512 bytes each (31.5 KB) on a hard disk or SSD are reserved for data written during operating system installation, by disk management operations, or by partition manager programs. The essence of alignment is to choose a starting partition offset such that a whole number of file system clusters fits in one stripe unit of the disk array. Otherwise, reading a single file system cluster may require two physical reads from the disk array, which significantly degrades disk subsystem performance (the loss can reach 30%).
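The effect of the starting offset can be illustrated with a small calculation. The sketch below is only a model, not a measurement tool: the function name `straddling_clusters` and the sample of 1,024 clusters are assumptions made for illustration. It counts how many file system clusters cross a stripe unit boundary for a given partition offset.

```python
KB = 1024

def straddling_clusters(partition_offset, stripe_unit, cluster_size, clusters=1024):
    """Count clusters whose bytes fall into more than one stripe unit."""
    count = 0
    for i in range(clusters):
        start = partition_offset + i * cluster_size
        end = start + cluster_size - 1  # last byte of this cluster
        if start // stripe_unit != end // stripe_unit:
            count += 1
    return count

# Legacy offset of 63 sectors x 512 bytes (31.5 KB), 64 KB stripe unit and clusters.
unaligned = straddling_clusters(63 * 512, 64 * KB, 64 * KB)
# 1024 KB offset with the same stripe unit and cluster size.
aligned = straddling_clusters(1024 * KB, 64 * KB, 64 * KB)

print(unaligned, aligned)  # 1024 0
```

With the 31.5 KB offset every 64 KB cluster in the sample spans two stripe units, so each cluster read costs two physical reads; with the 1024 KB offset none do.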

The illustration below shows read/write operations on unaligned partitions, using a 64 KB stripe as an example.

The illustration below shows read/write operations on aligned partitions, using a 64 KB stripe as an example.

By default, Windows Server 2008/2012 uses a partition offset of 1024 KB, which aligns with stripe unit sizes of 64 KB, 128 KB, 256 KB, 512 KB, and 1024 KB.

When operating systems are deployed from images or disk partitions are managed with third-party software, partition alignment can be broken. As additional insurance, check the correlations described in the next section before installing SQL Server.

Partition Offset

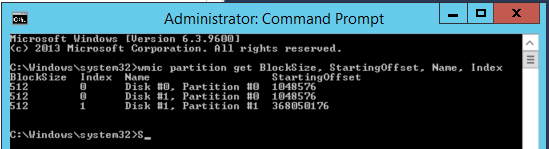

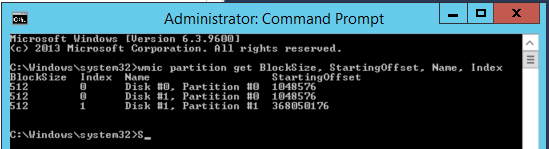

The most reliable way to check partition offsets for basic disks is the wmic.exe command-line utility:

wmic partition get BlockSize, StartingOffset, Name, Index

To check partition alignment for dynamic disks, use dmdiag.exe -v (for a description of how to work with it, see the section "Dynamic Disk Partition Offsets").

Stripe unit size

Windows has no standard tool for determining the stripe unit size (the minimum block of data written to a disk in the array). Obtain this value from the storage vendor's documentation or from the SAN administrator. The most common stripe unit sizes are 64 KB, 128 KB, 256 KB, 512 KB, and 1024 KB. The examples discussed earlier used a value of 64 KB.

File Allocation Unit Size

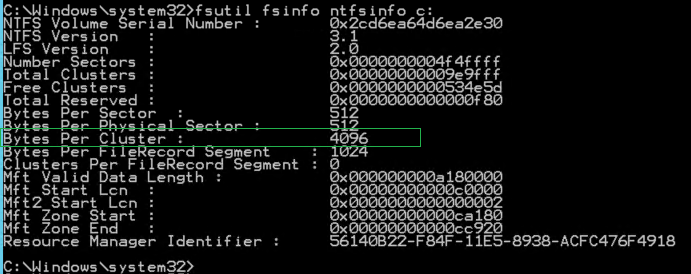

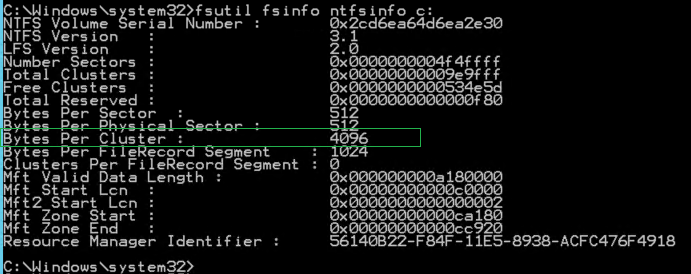

To determine this value, run the following command for each disk separately and check the Bytes Per Cluster property:

fsutil fsinfo ntfsinfo c:

For partitions that store SQL Server data files and transaction log files, this value should be 65,536 bytes (64 KB). See "Optimizing file storage at the file system level".

Optimal disk I/O performance rests on two important correlations. The result of each of the following expressions must be an integer:

- Partition_Offset / Stripe_Unit_Size

- Stripe_Unit_Size / File_Allocation_Unit_Size

Most important is the first correlation.

An example of unaligned values: the partition offset is 32,256 bytes (31.5 KB) and the stripe unit size is 65,536 bytes (64 KB). 32,256 bytes / 65,536 bytes = 0.4921875. The result is not an integer, so Partition_Offset and Stripe_Unit_Size are not aligned.

If the partition offset is 1,048,576 bytes (1 MB) and the stripe unit size is 65,536 bytes (64 KB), the result of the division is 16, which is an integer.
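The same check is easy to script. The sketch below assumes the two ratios are Partition_Offset / Stripe_Unit_Size and Stripe_Unit_Size / File_Allocation_Unit_Size; the function name `is_aligned` is hypothetical, and the inputs would come from wmic, the storage vendor, and fsutil respectively.

```python
def is_aligned(partition_offset, stripe_unit_size, file_allocation_unit_size):
    """Return (offset_ok, stripe_ok): whether each ratio is a whole number."""
    offset_ok = partition_offset % stripe_unit_size == 0
    stripe_ok = stripe_unit_size % file_allocation_unit_size == 0
    return offset_ok, stripe_ok

# Values from the two examples above, with 64 KB NTFS clusters.
print(32256 / 65536)                      # 0.4921875
print(1048576 // 65536)                   # 16
print(is_aligned(32256, 65536, 65536))    # (False, True)
print(is_aligned(1048576, 65536, 65536))  # (True, True)
```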

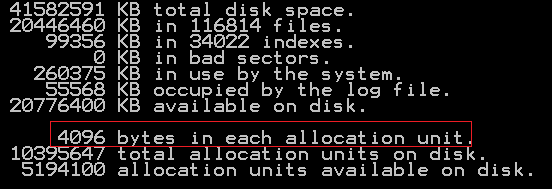

The next level at which to organize optimal storage of extents is the file system. The optimal setting here is NTFS with a cluster size of 65,536 bytes (64 KB). Before installing SQL Server, it is strongly recommended that you format the disks with a cluster size of 65,536 bytes (64 KB) instead of the default 4,096 bytes (4 KB).

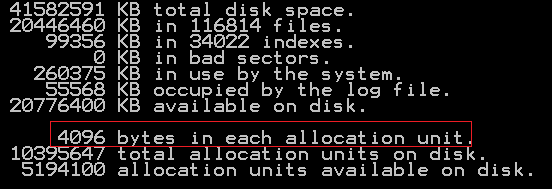

You can check the current values for the disk using the following command:

chkdsk c:

Example output:

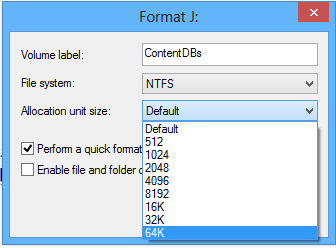

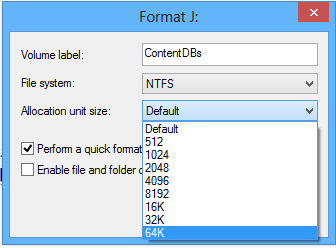

In this example, the cluster size is 4,096 bytes / 1,024 = 4 KB, which does not meet the recommendation. To change it, you have to reformat the disk. The cluster size can be set in the Windows disk formatting options:

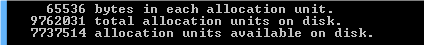

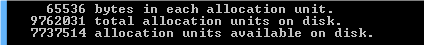

After that, make sure that the cluster size now meets the recommendation (65,536 bytes / 1,024 = 64 KB):

When planning where to place the SQL Server system database files and the SharePoint database files, it is important to choose disks according to their rank by read/write speed.

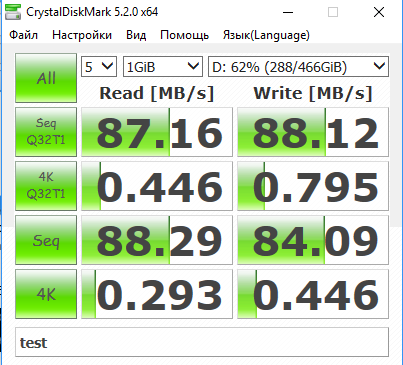

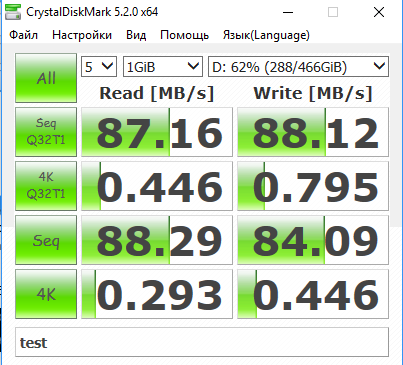

You can use CrystalDiskMark or similar software (for example, SQLIO) to measure random and sequential read/write speeds accurately. Typically, such an application lets you set the basic test parameters, at least:

Below is an example of the test results obtained:

The following is a description of the contents of the tests performed:

Note that the tests use a 4 KB block size, which does not fully match how SQL Server actually stores data in 8 KB pages and 64 KB extents. The tests are not meant to reproduce exactly the figures for the same operations performed by SQL Server. The ultimate goal is to rank the disks by read/write speed and produce the final table.
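Ranking takes only a few lines once the numbers are collected. In the sketch below the drive letters, test names, and throughput figures are invented placeholders, not measurements; substitute the values reported by your benchmark tool.

```python
# Hypothetical benchmark results in MB/s (placeholders, not real measurements).
results_mb_s = {
    "E:": {"seq_read": 510.0, "seq_write": 470.0, "rand_read": 38.0, "rand_write": 32.0},
    "F:": {"seq_read": 180.0, "seq_write": 160.0, "rand_read": 1.2, "rand_write": 1.5},
    "G:": {"seq_read": 240.0, "seq_write": 210.0, "rand_read": 2.1, "rand_write": 1.9},
}

def rank_disks(results, test):
    """Return drive letters ordered from fastest to slowest for one test."""
    return sorted(results, key=lambda disk: results[disk][test], reverse=True)

# One ranking per test type, mirroring one table per test.
for test in ("seq_read", "seq_write", "rand_read", "rand_write"):
    print(test, rank_disks(results_mb_s, test))
```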

To make quick decisions about database file placement while installing SQL Server, it is useful to record the test results in a separate table for each type of test, using the template shown below:

For magnetic disks (single or in a RAID array), sequential results (the Seq Q32 test) often exceed the results of the other tests by 10 to 100 times. These figures often depend on how the storage is connected. Keep in mind that the MB/s figures claimed by vendors are a theoretical limit; in practice, throughput is usually 5-20% lower.

For solid-state drives, sequential and random read/write speeds should not differ much, usually by no more than 2-3 times. The connection type, such as 3 Gb SATA, 1 Gb iSCSI, or 2/4 Gb FC, also affects speed.

If the server boots from a local disk and stores SQL Server data on another disk, include both disks in the test plan. Comparing the CrystalDiskMark results for the data disk with those for the operating system disk may show that the operating system disk is faster. In that case, the system administrator should check that the disk or SAN storage is configured correctly and for optimal performance.

Information about the purpose of the portal is used to correctly prioritize the selection of disks for storing SharePoint databases.

If users mainly read content from the corporate portal and content does not grow actively every day (for example, a company's external website), allocate the fastest disks to data files and the slower ones to transaction log files:

If the corporate portal is used for collaboration between users who actively upload dozens of documents daily, the priorities are different:

Slow read/write operations on the tempdb system database seriously affect the overall performance of the SharePoint farm and, as a result, of the corporate portal. The best practice is to store the files of this database on RAID 10.

For collaboration sites or sites with a large volume of update operations, pay special attention to the choice of disks for SharePoint content database files. Consider the following recommendations:

The general formula for calculating the expected size of the content database is:

((D * V) * S) + (10 KB * (L + (V * D))), where:
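A rough sketch of this formula in code, assuming the variable meanings used in Microsoft's SharePoint capacity planning guidance: D is the expected number of documents, V is the average number of versions per document, S is the average document size, L is the number of list items, and 10 KB is a constant approximating per-item metadata. The function name and the input figures are illustrative only.

```python
def content_db_size_kb(documents, versions, avg_doc_size_kb, list_items):
    """Estimate content database size in KB: ((D * V) * S) + (10 KB * (L + (V * D)))."""
    document_data = (documents * versions) * avg_doc_size_kb
    metadata = 10 * (list_items + versions * documents)  # 10 KB per item or version
    return document_data + metadata

# Illustrative inputs: 200,000 documents, 2 versions each, 250 KB average size,
# 600,000 list items.
size_kb = content_db_size_kb(200_000, 2, 250, 600_000)
print(size_kb)                        # 110000000
print(round(size_kb / 1024 ** 2, 1))  # 104.9 (GB)
```

The estimate covers only the content itself, so it should be treated as a lower bound for disk planning.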

Additional storage costs must be considered:

Although RAID 5 offers the best performance-to-cost ratio, it is strongly recommended that you use RAID 10 for SharePoint databases, especially if the corporate portal is actively used for collaboration. RAID 5 has relatively low write speeds.

You must ensure that a high-speed connection is configured between the SQL and SharePoint servers.

As a first step toward better network performance, it is recommended that you install two network adapters on each server in the SharePoint farm: one dedicated to traffic between SQL Server and SharePoint, and the other to client requests.

Installing the SQL Server and SharePoint servers on Windows Server 2012 and using NIC Teaming gives additional advantages and can be the second major step in optimizing the configuration for SharePoint:

You can read more about setting up NIC Teaming here.

According to the software requirements, the SharePoint farm can be deployed on the basis of the following operating systems:

Back in 2007, Windows Server 2003 SP2 introduced a set of network performance features commonly known as the Scalable Networking Pack (SNP). The pack used hardware acceleration when processing network packets to provide higher throughput. SNP includes Receive Side Scaling (RSS), TCP/IP Chimney Offload (sometimes called TOE), and Network Direct Memory Access (NetDMA). These operating system features move the work of processing TCP/IP packets from the processor to the network adapter.

Because of problems with SNP in Server 2003 SP2, the IT community quickly made it a rule to disable these features. For Server 2003, this approach made sense. On Server 2008, Server 2008 R2, and later operating systems, however, disabling these features often reduces network performance and server throughput. The features are very stable on Server 2008 R2 (with or without SP1). Unfortunately, disabling them as one of the first steps in network troubleshooting is still a very common practice, although many problems cannot be solved this way.

RSS technology provides the network adapter with the ability to distribute network processing load across multiple cores in multi-core processors.

If you disable the use of the RSS mechanism, the system may suffer significant performance losses, which will reduce the overall load and the number of network operations that each server can process. Such a situation may lead to an increase in costs associated with the purchase of additional equipment, which is actually not required, and with additional infrastructure costs that accompany the purchase of additional equipment.

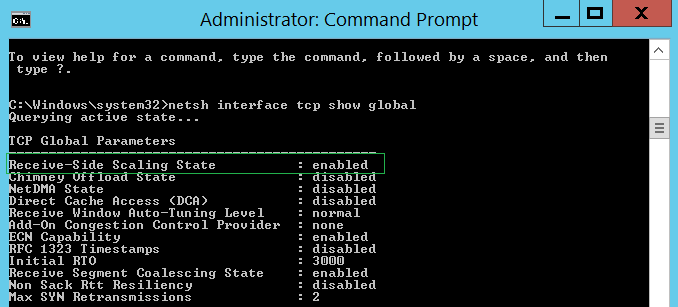

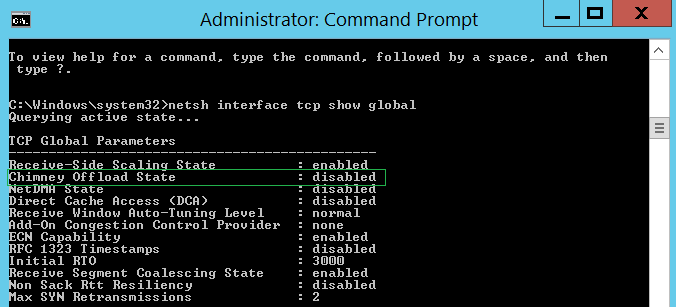

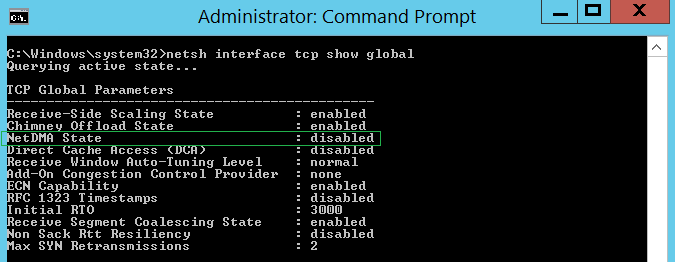

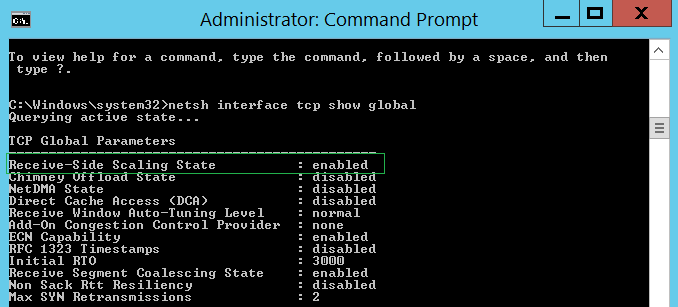

By default, Server 2008 R2 and later have RSS enabled. You can find out whether the technology is enabled globally or not by analyzing the result of the following command:

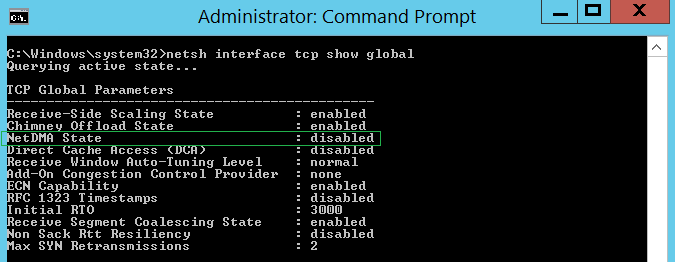

netsh interface tcp show global

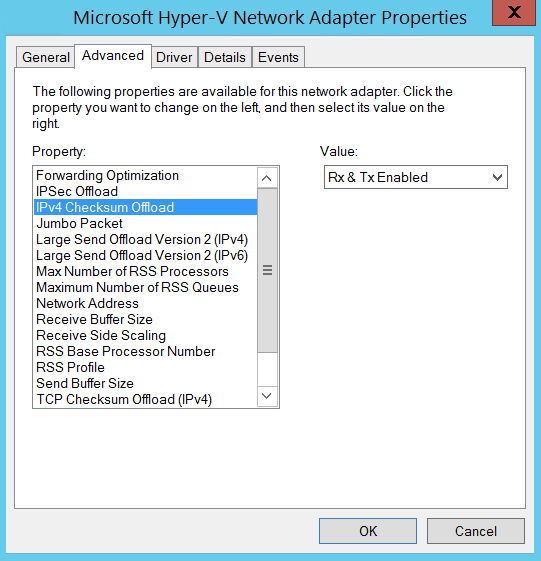

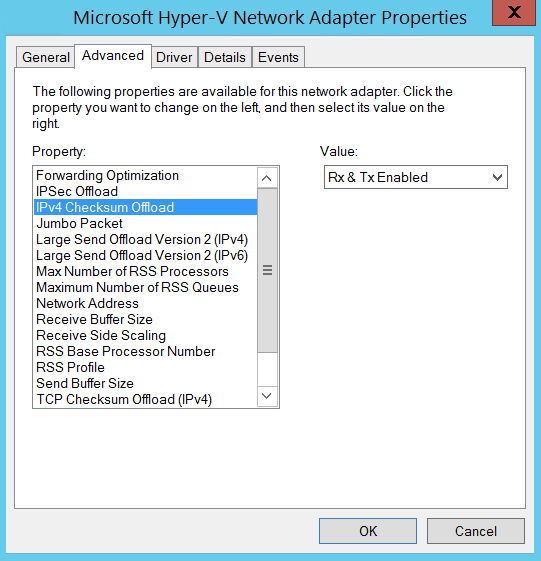
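When many servers have to be checked, the command output can be parsed rather than read by eye. The sketch below is a minimal parser; the sample text is an assumption modeled on typical Windows Server 2008 R2 output, and the exact setting names and their number differ between Windows versions.

```python
# Assumed sample of `netsh interface tcp show global` output (labels may vary).
SAMPLE_OUTPUT = """\
TCP Global Parameters
----------------------------------------------
Receive-Side Scaling State          : enabled
Chimney Offload State               : automatic
NetDMA State                        : enabled
"""

def parse_netsh_global(text):
    """Turn 'Name : value' lines into a dictionary, skipping other lines."""
    settings = {}
    for line in text.splitlines():
        name, separator, value = line.partition(" : ")
        if separator:
            settings[name.strip()] = value.strip()
    return settings

settings = parse_netsh_global(SAMPLE_OUTPUT)
print(settings["Receive-Side Scaling State"])  # enabled
```

On a real server the text could be captured with `subprocess.run(["netsh", "interface", "tcp", "show", "global"], capture_output=True, text=True).stdout` and passed to the same function.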

It is necessary to make sure that the use of RSS technology is also enabled on individual network adapters through their additional properties or configuration settings:

RSS also depends on network adapter offloads, known as TCP Checksum Offload, IP Checksum Offload, Large Send Offload and UDP Checksum Offload (for IPv4 and IPv6 protocols). So if they were disabled on the network adapter, RSS technology will not be used for it.

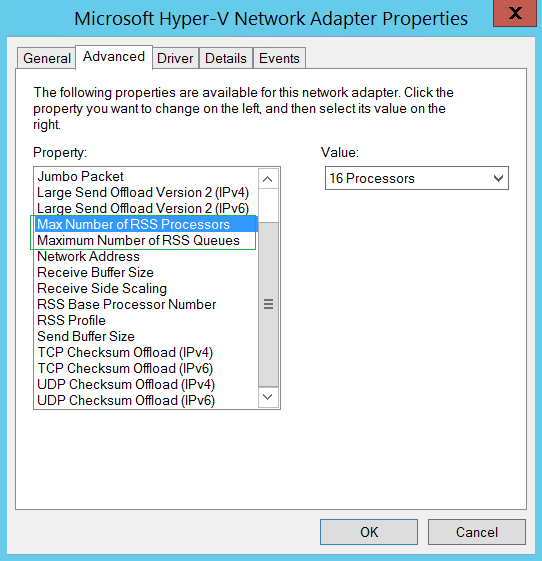

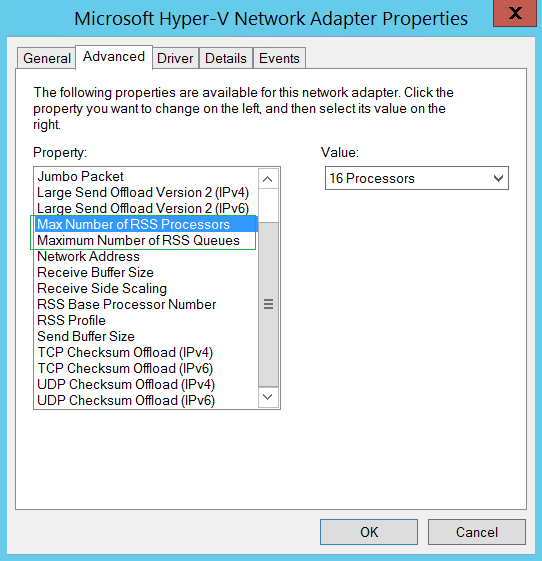

In addition, some network adapters have additional parameters that control the number of processors used in RSS mechanisms, as well as the number of RSS queues.

A common mistake is to configure too few RSS processors relative to the number of processors on the server. Each adapter and manufacturer has its own tuning recommendations, so consult the manufacturer's documentation to determine the optimal settings for a specific environment and workload.

TCP Chimney Offload (often referred to as TCP/IP offloading or, more briefly, TOE) transfers TCP traffic processing from the computer's processor to a network adapter that supports TOE. Moving TCP processing from the CPU to the network adapter frees the processor for application work. TOE can offload processing for both TCP/IPv4 and TCP/IPv6 connections if the network adapter supports it.

Because of the delays involved in handing TCP/IP processing to the network adapter (more details can be found here), TOE is most effective for applications that establish long-lived connections and transfer large amounts of data. Servers that hold long-lived connections, for example for database replication, file serving, or backups, can benefit from TOE.

Servers that handle short-lived connections, such as SharePoint web servers, gain virtually nothing from this technology. For this reason, it is recommended that you disable TCP Chimney Offload.

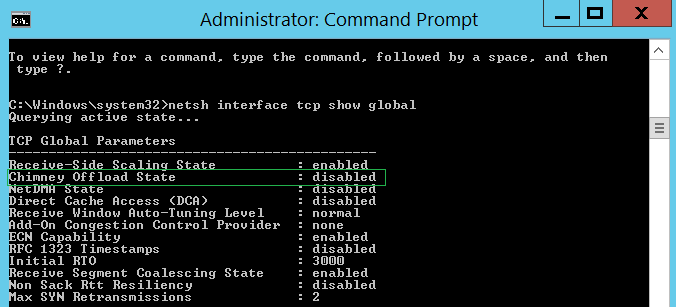

You can check the global TCP settings with the following command:

netsh interface tcp show global

You can disable TOE for TCP using the command:

netsh int tcp set global chimney=disabled

Before deciding whether to disable TOE, it is recommended that you first look at the TOE usage statistics for TCP using the command:

netsh interface tcp show chimneystats

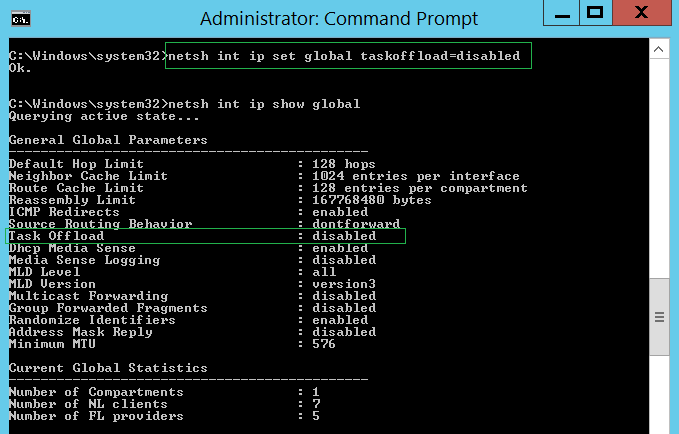

You can disable task offload for IP using the following command:

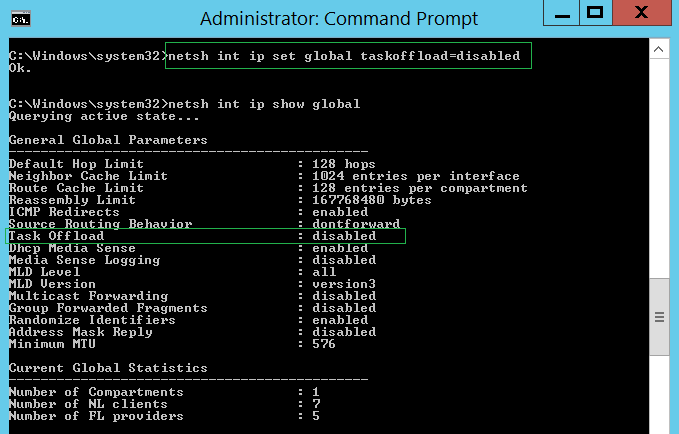

netsh int ip set global taskoffload=disabled

Check the global IP settings with another command:

netsh int ip show global

NetDMA is quite an interesting feature. It is useful when TOE is not supported and you need to speed up the processing of network connections. NetDMA copies data from the network stack's receive buffers directly to application buffers without CPU involvement, which takes this task off the CPU.

To use NetDMA, you need hardware that supports it; for Windows, this means a processor that supports the Intel I/O Acceleration Technology (I/OAT) family. Enabling NetDMA on AMD hardware, alas, has no effect.

Note that TOE and NetDMA are mutually exclusive; these functions cannot be simultaneously enabled in the system.

To enable NetDMA in Windows Server 2008 R2, you can use the following command:

netsh interface tcp set global netdma=enabled

You can check the status of the feature using another command:

netsh interface tcp show global

Windows Server 2008 R2

"Automatic" for TCP Chimney Offload means that the feature is enabled if the "TCP Chimney Offload" setting is enabled on the network adapter and the adapter has a high-speed connection such as 10 Gb Ethernet.

Windows Server 2012, Windows Server 2012 R2

Planning for SQL Server Installation

SQL Server Virtualization

Why server virtualization?

There are seven main reasons to consider server virtualization:

- Increased hardware utilization. According to statistics, most servers run at 15-20 percent load when performing everyday tasks. Running multiple virtual servers on one physical server can raise utilization to 80 percent while saving significantly on hardware purchases.

- Reduced hardware replacement costs. Because virtual servers are decoupled from specific hardware, refreshing the physical server fleet does not require reinstalling and reconfiguring software. A virtual machine can simply be copied to another server.

- Increased flexibility in using virtual servers. If several servers are needed (for example, for testing and for production) under a changing load, virtual servers are the best solution, since they can be moved seamlessly to other platforms when the physical server is under increased load.

- Increased manageability of the server infrastructure. Many virtual infrastructure management products let you manage virtual servers centrally and provide load balancing and live migration.

- High availability. Backing up and restoring virtual machines takes much less time and is a simpler procedure. In addition, if hardware fails, the backup of a virtual server can be started immediately on another physical server.

- Savings on staff. Simpler virtual server management leads, in the long run, to savings on the specialists who maintain the company's infrastructure. If two people with virtual server management tools can do what four did before, why keep two extra specialists who each earn at least $15,000 a year? Keep in mind, however, that training qualified virtualization personnel also requires considerable investment.

- Savings on electricity. For small companies this factor matters little, but for large data centers, where the cost of maintaining a large server fleet includes electricity (power and cooling), it is significant. Concentrating multiple virtual servers on one physical server reduces these costs.

SQL Server Virtualization Support

When considering virtualization of the servers in a SharePoint farm, or of network infrastructure servers that provide user authentication, name resolution, PKI management, and other functions, keep in mind that the following can be virtualized:

- Active Directory Domain Services;

- SharePoint web servers and application servers (Front-End Web Server and Application Server);

- SQL Server services;

- Any component of SQL Server, AD DS, or SharePoint 2013.

A summary of guest operating systems, their supported versions of SQL servers and clustering of virtual machines is presented in the table below:

| SQL Server Version | Supported Windows Server Guest Operating Systems | Hyper-V Support | Guest Clustering Support |
|---|---|---|---|
| SQL Server 2008 SP3 | 2003 SP2, 2003 R2 SP2, 2008 SP2, 2008 R2 SP1, 2012, 2012 R2 | Yes | Yes |
| SQL Server 2008 R2 SP2 | 2003 SP2, 2008 SP2, 2008 R2 SP1, 2012, 2012 R2 | Yes | Yes |
| SQL Server 2012 SP1 | 2008 SP2, 2008 R2 SP1, 2012, 2012 R2 | Yes | Yes |
| SQL Server 2014 | 2008 SP2, 2008 R2 SP1, 2012, 2012 R2 | Yes | Yes |

Note that guest clustering is supported in versions of the Windows Server 2008 SP2 operating system and later.

A more detailed description of the purpose and architecture of a failover guest cluster can be found here. Hardware requirements and details of virtual environment support for specific SQL Server versions are available through the links in the following table:

| SQL Server Version | Documentation Link |
|---|---|
| SQL Server 2008 SP3 | Hardware & Software Requirements, Hardware Virtualization & Guest Clustering Support |
| SQL Server 2008 R2 SP2 | Hardware & Software Requirements, Hardware Virtualization & Guest Clustering Support |
| SQL Server 2012 SP1 | Hardware & Software Requirements, Hardware Virtualization & Guest Clustering Support |
| SQL Server 2014 | Hardware & Software Requirements, Hardware Virtualization & Guest Clustering Support |

Virtualized SQL Server Performance

ESG Lab studies have confirmed that SQL Server 2012 OLTP performance on virtual servers is only 6.3% lower than on the physical platform.

Hyper-V supports up to 64 virtual processors per virtual machine; tests showed a 6-fold increase in performance and a 5-fold improvement in transaction execution time. The illustration below shows the main results of the tests. A detailed report can be found here.

Hyper-V can be used to virtualize large SQL Server databases and supports additional features such as SR-IOV, Virtual Fibre Channel, and Virtual NUMA.

Disadvantages of SQL Server Virtualization

Virtualization also has drawbacks. The main ones, which are the usual reasons for deploying SQL Server for SharePoint on a physical server, are:

- The need to rethink the approach to system reliability. Because several virtual machines run on the same physical server, a host failure brings down all the VMs and the applications running on them at once.

- Load balancing. In a virtual machine, SQL Server usually consumes a lot of processor (or memory) resources, which affects other virtual machines and host applications that also need processor time (or memory). Even if the SQL Server virtual machine is the only one on the host, reaching the optimal level of performance requires a whole range of actions. For more information, see Michael Otey's article, available here. Administrators have to distribute the load by setting rules under which running virtual machines automatically move to less loaded servers or "unload" heavily loaded ones.

RAM & CPU

SQL Server needs a sufficient amount of RAM to function normally. When a single SQL Server instance is deployed and dedicated exclusively to SharePoint databases, the minimum requirements are:

| | Small SharePoint farm (up to 500 GB of content) | Medium SharePoint farm (501 GB to 1 TB of content) | Large SharePoint farm (1-2 TB of content) | Very large SharePoint farm (2-5 TB of content) | Special cases |
|---|---|---|---|---|---|
| RAM | 8 GB | 16 GB | 32 GB | 64 GB | 64 GB |
| CPU | 4 | 4 | 8 | 8 | 8 |
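For scripted capacity checks, the table can be expressed as a simple lookup. This is a sketch only: the function name is hypothetical, 1 TB is taken as 1,024 GB, and anything above 5 TB is mapped to the "Special cases" column, which is an assumption about how that column should be read.

```python
# (upper bound of content in GB, minimum RAM in GB, minimum CPU count)
SIZING_TABLE = [
    (500, 8, 4),    # small farm
    (1024, 16, 4),  # medium farm
    (2048, 32, 8),  # large farm
    (5120, 64, 8),  # very large farm
]

def minimum_sql_resources(content_gb):
    """Return (ram_gb, cpu_count) for a dedicated SharePoint SQL Server instance."""
    for upper_bound_gb, ram_gb, cpu_count in SIZING_TABLE:
        if content_gb <= upper_bound_gb:
            return ram_gb, cpu_count
    return 64, 8  # "Special cases" column

print(minimum_sql_resources(800))   # (16, 4)
print(minimum_sql_resources(3000))  # (64, 8)
```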

Plan for size, placement, and general database requirements for SharePoint

SharePoint is a powerful platform for building portal solutions with a modular architecture. The set of services and components behind the corporate portal determines the list of databases whose storage must be planned. There are four groups of databases, which differ in when their storage should be planned:

- Before installing the SQL server. This group includes all the system databases of the SQL server, the SharePoint content database (one by default).

- Before installing the SharePoint server. This group includes the SharePoint configuration database, the SharePoint Central Administration content database.

- Before deploying a service application storing data in a database / databases. Examples: Managed Metadata Service, User Profile Service, Search, etc.

- Before creating additional SharePoint content databases.

This Microsoft article details the key features of all the databases listed in the groups. The table below recommends a recovery model for each of them, based on many years of experience in technical support, migration, and restoration of SharePoint databases:

| Database | Default recovery model | Recommended recovery model |
|---|---|---|
| master | Simple | Simple |
| model | Full | Simple. Tuning is typically performed once or very rarely; it is enough to back up after making changes. |
| msdb | Simple | Simple |
| tempdb | Simple | Simple |
| Central Administration content database | Full | Simple. |
| Configuration database | Full | Changes are usually made actively during the initial or one-off configuration of SharePoint farm services and components, which has clear, short timelines. It is more efficient to back up after each completed block of changes. |
| App Management Service database | Full | Simple. Typically, a SharePoint farm has from zero to a small number (1-5) of SharePoint apps installed, and app installations are usually well spaced in time. It is more rational to perform a full database backup after installing each app. |
| Subscription Settings Service database | Full | Simple if SharePoint apps are installed on a small number of sites (1-5) and installations are well spaced in time; it is more rational to perform a full database backup after installing each app. Full if you intend to install apps on an unlimited number of sites and installations are performed often. |
| Business Data Connectivity Service database | Full | Simple. Configuring and changing this service usually takes little time and is done once or a small number of times. It is more rational to perform a full database backup after each completed block of creating or editing data models, external content types, and external lists. |
| Managed Metadata Service application database | Full | Simple when term sets are rarely modified. Full when term sets change regularly (active creation and editing of hierarchical term sets, tagging of list items and documents). |
| SharePoint Translation Services application database | Full | Simple. |
| PowerPivot database | Full | Full |
| PerformancePoint Services database | Full | Simple when working with a set of dashboards whose settings rarely change. Full when dashboards are created or modified intensively. |
| Search Administration database | Simple | Simple. Configuring and changing this service usually takes little time and is done once or a small number of times. It is more rational to perform a full database backup after each completed block of settings or search schema changes. |
| Analytics Reporting database | Simple | Simple if search analytics and the adaptive tuning of search results and query suggestions are not critical. Full if portal search and its continuous personalization are business critical. |
| Crawl database | Simple | Simple. Restoring this database from a backup or through the recovery wizard usually takes much longer than resetting the index and performing a full crawl of the content. |
| Link database | Simple | Simple if search analytics and the adaptive tuning of search results and query suggestions are not critical. Full if portal search and its continuous personalization are business critical. |
| Secure Store database | Full | Simple. Configuring and changing this service usually takes little time and is done once or a small number of times. It is more rational to perform a full database backup after each completed block of configuration. |
| State Service application database | Full | Simple. This database stores temporary state for InfoPath forms and Visio web parts and is not intended for long-term storage of this information. The data only needs to be correct while the user is viewing a specific InfoPath form or a Visio diagram through the web part. |
| Usage and Health Data Collection database | Simple | Simple or Full, depending on the organization's requirements for the acceptable level of data loss. |
| Profile database | Simple | Simple if no business-critical functionality depends on the most current user profile data. Full otherwise. |
| Profile Synchronization database | Simple | Simple if recovery takes less time than reconfiguring connections and performing a full profile synchronization. Full otherwise. |
| Social Tagging database | Simple | Simple if working with social content is not business-critical functionality. Full otherwise. |
| Word Automation Services database | Full | Full |

Number of disks

Ideally, the SQL server should have six disks, one for each of the following:

- Tempdb database data files;

- Tempdb database transaction log file;

- SharePoint database data files;

- SharePoint database transaction log files;

- Operating system;

- Files of other applications.

Disk Subsystem Preparation

SharePoint stores its data in SQL Server, which has its own storage characteristics. Preparing the disk subsystem at both the physical and logical levels with these characteristics in mind has a major impact on the final performance of the corporate portal.

Microsoft SQL Server stores data in 8 KB pages, which are grouped into 64 KB extents (eight pages each). Learn more about this here. The goal of configuring the disk subsystem is therefore to keep each extent contiguous and aligned at every physical and logical level of the disk subsystem.

The first step is RAID initialization. The array that will hold the databases should have a stripe size that is a multiple of 64 KB, preferably exactly 64 KB.

Aligning partitions on a hard drive or SSD

Aligning the file system with its physical placement on the disk array is very important for SQL Server performance. It is described in detail here. Traditionally, the first 63 sectors of 512 bytes on a hard disk or SSD are reserved for data written during operating system installation, by disk management operations, and by partition manager programs. The essence of the alignment procedure is to choose a starting partition offset such that a whole number of file system clusters fits in one stripe of the disk array. Otherwise, reading a single file system cluster may require two physical reads from the disk array, which significantly degrades the performance of the disk subsystem (the loss can be up to 30%).

The illustration below shows how read/write operations are performed on unaligned partitions, using a 64 KB stripe as an example.

The illustration below shows how read/write operations are performed on aligned partitions, using a 64 KB stripe as an example.
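The effect of misalignment can also be reproduced with a few lines of arithmetic. The following Python sketch is only an illustration: the function names are hypothetical, and it models the array as a simple sequence of equally sized stripe units, ignoring caching and controller behavior.

```python
KB = 1024


def stripe_units_touched(partition_offset, cluster_size, stripe_size, cluster_index):
    """Return how many stripe units a single file system cluster spans."""
    start = partition_offset + cluster_index * cluster_size
    end = start + cluster_size - 1  # last byte of the cluster
    return end // stripe_size - start // stripe_size + 1


def straddling_fraction(partition_offset, cluster_size, stripe_size, clusters=1024):
    """Fraction of the first `clusters` clusters that cross a stripe boundary."""
    crossing = sum(
        1
        for i in range(clusters)
        if stripe_units_touched(partition_offset, cluster_size, stripe_size, i) > 1
    )
    return crossing / clusters


# Offset of 63 sectors * 512 bytes: every 64 KB cluster needs two physical reads.
print(straddling_fraction(63 * 512, 64 * KB, 64 * KB))
# Offset of 1024 KB: no 64 KB cluster crosses a stripe boundary.
print(straddling_fraction(1024 * KB, 64 * KB, 64 * KB))
```

With 4 KB clusters and the same 63-sector offset, only some of the clusters straddle a boundary, which is why the penalty depends on both the offset and the cluster size.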

By default, Windows Server 2008/2012 uses a partition offset of 1024 KB, which aligns with stripe unit sizes of 64 KB, 128 KB, 256 KB, 512 KB, and 1024 KB.

When operating systems are deployed from images, or disk partitions are managed with third-party software, partition alignment can be broken. As extra insurance, check the correlations described in the next section before installing the SQL server.

Important correlations: Partition Offset, File Allocation Unit Size, Stripe Unit Size

Partition Offset

The most reliable way to check partition offsets for basic disks is to use the wmic.exe command-line utility:

wmic partition get BlockSize, StartingOffset, Name, Index

To check the alignment of partitions on dynamic disks, use dmdiag.exe (for a description of how to work with it, see the section "Dynamic Disk Partition Offsets").

Stripe unit size

Windows has no standard tool for determining the stripe unit size (the minimum block of data written to disk). Obtain the value from the vendor's documentation for the storage or from the SAN administrator. The most common stripe unit sizes are 64 KB, 128 KB, 256 KB, 512 KB, and 1024 KB. The examples discussed earlier use 64 KB.

File Allocation Unit Size

To determine the value of this indicator, you must use the following command for each disk separately (Bytes Per Cluster property value):

fsutil fsinfo ntfsinfo c:

For partitions that store SQL Server data files and transaction log files, the value should be 65,536 bytes (64 KB). See "File system-level storage optimization".
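When several drives have to be checked, the value can be extracted from the command output by a script. The sketch below is in Python and makes some assumptions: the function names are hypothetical, and the sample text is an abbreviated, illustrative excerpt in the shape of the fsutil output, not captured output.

```python
import re

RECOMMENDED_CLUSTER_BYTES = 65536  # 64 KB, as recommended for SQL Server volumes


def bytes_per_cluster(ntfsinfo_text):
    """Extract the 'Bytes Per Cluster' value from fsutil ntfsinfo output, or None."""
    match = re.search(r"Bytes Per Cluster\s*:\s*(\d+)", ntfsinfo_text)
    return int(match.group(1)) if match else None


def cluster_size_ok(ntfsinfo_text):
    """True when the volume uses the recommended 64 KB cluster size."""
    return bytes_per_cluster(ntfsinfo_text) == RECOMMENDED_CLUSTER_BYTES


# Illustrative excerpt only. On a Windows server the text could be obtained with:
#   subprocess.run(["fsutil", "fsinfo", "ntfsinfo", "c:"],
#                  capture_output=True, text=True).stdout
SAMPLE = """\
Bytes Per Sector  :               512
Bytes Per Cluster :               4096
"""

print(bytes_per_cluster(SAMPLE), cluster_size_ok(SAMPLE))
```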

Two correlations form the basis for optimal disk I/O performance. The result of each of the following expressions must be an integer:

- Partition_Offset ÷ Stripe_Unit_Size

- Stripe_Unit_Size ÷ File_Allocation_Unit_Size

The first correlation is the most important.

An example of unaligned values: the partition offset is 32,256 bytes (31.5 KB) and the stripe unit size is 65,536 bytes (64 KB). 32,256 bytes / 65,536 bytes = 0.4921875. The result is not an integer, so Partition_Offset and Stripe_Unit_Size are not aligned.

If the partition offset is 1,048,576 bytes (1 MB) and the stripe unit size is 65,536 bytes (64 KB), the result of the division is 16, which is an integer.
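Both correlations are easy to check programmatically once the three values are known. A minimal Python sketch follows; the function name is hypothetical, and all values are in bytes.

```python
def check_alignment(partition_offset, stripe_unit_size, file_allocation_unit_size):
    """Return (offset_ok, cluster_ok) for the two correlations.

    offset_ok:  Partition_Offset / Stripe_Unit_Size is an integer
    cluster_ok: Stripe_Unit_Size / File_Allocation_Unit_Size is an integer
    """
    offset_ok = partition_offset % stripe_unit_size == 0
    cluster_ok = stripe_unit_size % file_allocation_unit_size == 0
    return offset_ok, cluster_ok


# The unaligned example from the text: 32,256-byte offset, 64 KB stripe unit.
print(check_alignment(32_256, 65_536, 65_536))     # (False, True)
# The aligned example: 1,048,576-byte offset, 64 KB stripe unit.
print(check_alignment(1_048_576, 65_536, 65_536))  # (True, True)
```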

File system-level storage optimization

The next level at which extent storage should be optimized is the file system. The optimal setting here is NTFS with a cluster size of 65,536 bytes (64 KB). Before installing the SQL server, it is therefore strongly recommended that you format the disks with a cluster size of 65,536 bytes (64 KB) instead of the default 4,096 bytes (4 KB).

You can check the current values for the disk using the following command:

chkdsk c:

Example output:

In this example, the cluster size is 4,096 bytes / 1,024 = 4 KB, which does not match the recommendation. To change it, you have to reformat the disk. The cluster size can be set in the Windows disk formatting options:

After that, make sure that the cluster size now matches the recommendation (65,536 bytes / 1,024 = 64 KB):

Read/write speed ranking of disks

When planning where to place SQL Server system database files and SharePoint database files, it is important to choose disks according to their rank by read/write speed.

You can use CrystalDiskMark or similar software (for example, SQLIO) to measure the read/write speed of random and sequential access accurately. Typically, such an application lets you enter the basic test parameters, at a minimum:

- The number of test runs. Several runs are needed to get a more accurate average value;

- The size of the file used during the tests. It is recommended that you set a value that exceeds the amount of server RAM, so that the test does not run from the cache alone. The resulting figures also provide statistics on the processing of large files, which is relevant for many corporate portals with intensive user collaboration on content;

- The name of the drive on which read/write operations will be performed;

- The list of tests to be performed.

Below is an example of the test results obtained:

The following is a description of the contents of the tests performed:

- Seq Q32 - sequential read/write speed. For SQL Server, this corresponds to operations such as backups, table scans, index defragmentation, and reading/writing transaction log files.

- 4K QD32 - the speed of a large number of simultaneous random read/write operations on small (4 KB) blocks. The results indicate how the disk performs when processing transactions on a heavily loaded OLTP server.

- 512K - the speed of a large number of simultaneous read/write operations on large (512 KB) blocks. The results of this test can be ignored, because they are not relevant to how SQL Server itself works.

- 4K - the speed of a small number of simultaneous random read/write operations on small (4 KB) blocks. The results indicate how the disk performs when processing transactions on a lightly loaded OLTP server.

Note that the tests use a 4 KB block size, which does not fully match how SQL Server actually stores data, with 8 KB pages grouped into 64 KB extents. The tests are not intended to reproduce exactly the figures for the same operations performed by SQL Server. The main goal is to rank the disks by read/write speed and produce a final table.

To make quick decisions about database file placement during SQL Server installation, it is useful to record the results in a separate table for each type of test, using the template shown below:

| Disks sorted by read/write speed, from fastest to slowest: <Test name> | | | |

|---|---|---|---|

| 0 | I: | 20GB | Fixed-size VHD |

| 1 | H: | 20GB | Fixed-size VHD |

| 2 | G: | 50GB | |

| 3 | F: | 200GB | |

| 4 | C: | 80GB | Operating system |

| 5 | E: | 2TB | |

| 6 | D: | 100GB | |
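Filling in such a table is a matter of sorting the measured throughput per drive. The Python sketch below shows one way to do it; the function names are hypothetical and the throughput figures are made-up placeholders, not measurements.

```python
from collections import namedtuple

DiskResult = namedtuple("DiskResult", "drive size note mb_per_s")


def rank_disks(results):
    """Sort benchmark results from the fastest disk to the slowest."""
    return sorted(results, key=lambda r: r.mb_per_s, reverse=True)


def to_table(test_name, results):
    """Render the ranking in the same layout as the template table."""
    lines = [
        f"| Disks sorted by read/write speed, from fastest to slowest: {test_name} | | | |",
        "|---|---|---|---|",
    ]
    for rank, r in enumerate(rank_disks(results)):
        lines.append(f"| {rank} | {r.drive} | {r.size} | {r.note} |")
    return "\n".join(lines)


# Placeholder numbers for illustration only.
measured = [
    DiskResult("C:", "80GB", "Operating system", 140.0),
    DiskResult("G:", "50GB", "", 310.0),
    DiskResult("I:", "20GB", "Fixed-size VHD", 520.0),
    DiskResult("H:", "20GB", "Fixed-size VHD", 495.0),
]

print(to_table("Seq Q32", measured))
```

One table per test type keeps the sequential ranking (relevant to transaction logs and backups) separate from the random-access ranking (relevant to OLTP data files).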

How to interpret CrystalDiskMark test results?

For magnetic disks (single or in a RAID array), sequential results (the Seq Q32 test) often exceed the results of the other tests by a factor of 10 to 100. These figures often depend on how the storage is connected. Keep in mind that the MB/s figures claimed by vendors are a theoretical limit; in practice, throughput is usually 5-20% lower than claimed.

For solid-state drives, sequential and random read/write speeds should not differ much, usually by no more than a factor of 2-3. The connection method, such as 3 Gb SATA, 1 Gb iSCSI, or 2/4 Gb FC, will affect speed.

If the server boots from a local disk and stores SQL Server data on another disk, include both disks in the test plan. Comparing the CrystalDiskMark results for the disk that stores SQL Server data with those for the operating system disk may show that the operating system disk is faster. In that case, the system administrator needs to check that the disk or SAN storage is configured correctly and tuned for optimal performance.

Priority selection of drives

Information about the purpose of the portal is used to correctly prioritize the selection of disks for storing SharePoint databases.

If the corporate portal is used mainly for reading content and content does not grow actively from day to day (for example, a company's external website), allocate the fastest disks to data files and the slower ones to transaction log files:

| Speed / Usage Scenario | Viewing content significantly predominates over editing it (external website) |

|---|---|

| Highest performance | Tempdb data files and transaction log files |

| ... | Content database data files |

| ... | Search service data files, excluding the administration database |

| Lowest performance | SharePoint content database transaction log files |

If the corporate portal is used for collaboration between users who actively upload dozens of documents every day, the priorities are different:

| Speed / Usage Scenario | Editing content predominates over reading it (collaboration portal) |

|---|---|

| Highest performance | Tempdb data files and transaction log files |

| ... | SharePoint content database transaction log files |

| ... | Search service data files, excluding the administration database |

| Lowest performance | Content database data files |
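The two priority tables can be combined with the disk ranking to produce a placement plan. The Python sketch below is one possible way to express this; the scenario keys and the function name are hypothetical, and it assumes that the second row of the read-heavy table refers to content database data files.

```python
# File classes ordered from the fastest disk to the slowest, per scenario.
PRIORITIES = {
    "read_heavy": [
        "tempdb data and transaction log files",
        "content database data files",
        "search service data files (except the administration database)",
        "content database transaction log files",
    ],
    "write_heavy": [
        "tempdb data and transaction log files",
        "content database transaction log files",
        "search service data files (except the administration database)",
        "content database data files",
    ],
}


def place_files(scenario, drives_fastest_first):
    """Map each file class to a drive, giving faster drives to higher priorities."""
    classes = PRIORITIES[scenario]
    if len(drives_fastest_first) < len(classes):
        raise ValueError("not enough ranked drives for every file class")
    return dict(zip(classes, drives_fastest_first))


# Drive letters taken from a ranking table, fastest first.
plan = place_files("write_heavy", ["I:", "H:", "G:", "F:"])
for file_class, drive in plan.items():
    print(f"{drive} <- {file_class}")
```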

Database drive type considerations for a SharePoint farm

Disk Recommendations for tempdb

Slow read/write operations on the tempdb SQL Server system database seriously affect the overall performance of the SharePoint farm and, as a result, the performance of the corporate portal. The best recommendation is to use RAID 10 to store the files of this database.

Drive recommendations for heavily used content databases

For sites designed for collaboration or for a large volume of update operations, pay special attention to the choice of disks for storing SharePoint content database files. Consider the following recommendations:

- Place data files and transaction logs for content databases on different physical disks.

- Plan for content growth when designing portal solutions. Ideally, keep each individual content database at or below 200 GB.

- For heavily used SharePoint content databases, use multiple data files and place them on separate disks. To avoid sudden database failures, it is strongly recommended that you do not set a maximum database size limit.

Calculate the expected size of the content database

The general formula for calculating the expected size of the content database is:

((D * V) * S) + (10 KB * (L + (V * D))), where:

- D - the number of documents, including personal sites, documents and pages in libraries, and the forecast growth in their number over the coming year;

- V - the number of versions of each document;

- S - the average document size (if it can be determined);

- 10 KB - a constant, the amount of metadata that SharePoint 2013 is expected to store per document;

- L - the expected number of list items, including the forecast growth in their number over the coming year.
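As a worked example, the formula can be evaluated in a few lines of Python. The input values below are hypothetical and chosen only to show the arithmetic; the helper that estimates the number of content databases simply applies the 200 GB per-database guideline mentioned above.

```python
KB = 1024
GB = 1024 ** 3


def content_db_size_bytes(documents, versions, avg_doc_size_bytes, list_items,
                          metadata_bytes=10 * KB):
    """((D * V) * S) + (10 KB * (L + (V * D))), with all sizes in bytes."""
    return ((documents * versions) * avg_doc_size_bytes
            + metadata_bytes * (list_items + versions * documents))


def databases_needed(total_bytes, per_db_limit_bytes=200 * GB):
    """Number of content databases if each stays within the size guideline."""
    return -(-total_bytes // per_db_limit_bytes)  # ceiling division


# Hypothetical inputs: 200,000 documents, 2 versions each, 250 KB on average,
# and 600,000 list items.
size = content_db_size_bytes(200_000, 2, 250 * KB, 600_000)
print(f"{size / GB:.1f} GB in {databases_needed(size)} content database(s)")
```

The result does not include the additional storage costs listed next, so treat it as a lower bound.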

Additional storage costs must be considered:

- Auditing: the retention period for audit data plus the forecast growth of audit data;

- Recycle bin: the retention period for data in the site recycle bin and the site collection recycle bin.

RAID Recommendations

Although RAID 5 offers the best performance-to-cost ratio, RAID 10 is strongly recommended for SharePoint databases, especially if the corporate portal is used heavily for user collaboration. RAID 5 has relatively low write speeds.
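The difference in write speed can be estimated with the commonly quoted rule-of-thumb write penalties: two physical writes per logical write for RAID 10, and four I/O operations (read data, read parity, write data, write parity) for RAID 5. These penalty values and the per-disk IOPS figure are assumptions used for illustration, not numbers from this article, and real controllers with write caches will behave differently.

```python
# Rule-of-thumb number of physical I/Os per logical write (assumption).
WRITE_PENALTY = {"RAID 10": 2, "RAID 5": 4}


def effective_write_iops(raid_level, disks, iops_per_disk):
    """Rough estimate of random-write IOPS an array can sustain."""
    return disks * iops_per_disk / WRITE_PENALTY[raid_level]


# Hypothetical array: 8 disks, 150 IOPS each.
for level in ("RAID 10", "RAID 5"):
    print(level, effective_write_iops(level, disks=8, iops_per_disk=150))
```

Under these assumptions the same set of disks sustains roughly half as many random writes in RAID 5 as in RAID 10, which matters most for write-heavy collaboration portals.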

Network data optimization

You must ensure that a high-speed connection is configured between the SQL and SharePoint servers.

Dedicated network adapters and adapter teaming

As a first step toward better network performance, install two network adapters on each server in the SharePoint farm: one dedicated to traffic between SQL Server and SharePoint, and the other to serving client requests.

Installing the SQL and SharePoint servers on Windows Server 2012 and using NIC teaming (combining adapters into a team) provides additional advantages and can be a second serious step in optimizing the configuration for SharePoint:

- Fault tolerance at the network adapter level and, accordingly, for network traffic. Failure of one adapter in a team does not cause loss of network connectivity; the server switches traffic to the remaining working adapters in the team.

- Bandwidth aggregation across the adapters in a team. During network operations such as copying files, the system can potentially use all adapters in the team, increasing network throughput.

You can read more about setting up adapter teaming here.

According to the software requirements, the SharePoint farm can be deployed on the basis of the following operating systems:

- Windows Server 2008 R2 Service Pack 1 (SP1) Standard, Enterprise, or Datacenter 64-bit Edition

- Windows Server 2012 Standard or Datacenter 64-bit Edition

- Windows Server 2012 R2 64-bit Edition (installing SharePoint 2013 servers on this operating system became possible with the release of Service Pack 1 for Microsoft SharePoint Server 2013).

Scalable Networking Pack

Back in 2007, Windows Server 2003 SP2 introduced a set of features that control network performance, commonly known as the Scalable Networking Pack (SNP). This package used hardware acceleration when processing network packets to provide higher bandwidth. SNP includes Receive Side Scaling (RSS), TCP/IP Chimney Offload (sometimes called TOE), and Network Direct Memory Access (NetDMA). These operating system features move the work of processing TCP/IP packets from the processor to the network card.

Because of issues with SNP in Server 2003 SP2, the IT community quickly made it a rule to disable these features. For Server 2003, this approach made sense. But on Server 2008, Server 2008 R2, and later operating systems, disabling these features can often reduce network performance and server throughput. These features are very stable on Server 2008 R2 (with or without SP1). Unfortunately, disabling them as one of the first steps in solving network problems is still a very common troubleshooting practice, although many problems cannot be solved this way.

Receive Side Scaling

RSS technology provides the network adapter with the ability to distribute network processing load across multiple cores in multi-core processors.

If you disable RSS, the system may suffer a significant performance loss, which reduces the overall load and the number of network operations that each server can handle. This can lead to the purchase of additional hardware that is not actually required, along with the infrastructure costs that accompany it.

By default, Server 2008 R2 and later have RSS enabled. You can find out whether the technology is enabled globally or not by analyzing the result of the following command:

netsh interface tcp show global

It is necessary to make sure that the use of RSS technology is also enabled on individual network adapters through their additional properties or configuration settings:

RSS also depends on network adapter offloads, known as TCP Checksum Offload, IP Checksum Offload, Large Send Offload and UDP Checksum Offload (for IPv4 and IPv6 protocols). So if they were disabled on the network adapter, RSS technology will not be used for it.

In addition, some network adapters have additional parameters that control the number of processors used in RSS mechanisms, as well as the number of RSS queues.

A common mistake is to configure too few RSS processors relative to the number of processors on the server. Each adapter and manufacturer has its own tuning recommendations, so consult the manufacturer's documentation to determine the optimal settings for a specific environment and workload.

TCP / IP Chimney Offload

TCP Chimney Offload (often referred to as TCP/IP offloading or, more briefly, TOE) transfers the processing of TCP traffic from the computer's processor to a network adapter that supports TOE. Moving TCP processing from the central processor to the network adapter can free the processor for work more directly related to running applications. TOE can offload processing for both TCP/IPv4 and TCP/IPv6 connections, if the network adapter supports it.

Because of the delays associated with handing TCP/IP processing over to the network adapter (more details can be found here), TOE is most effective for applications that establish long-lived connections and transfer large amounts of data. Servers that maintain long-lived connections, for example for database replication, file serving, or backups, are examples of computers that can benefit from TOE.

Servers with short-lived connections, such as SharePoint web servers, gain virtually nothing from this technology. For this reason, it is recommended that you disable TCP Chimney Offload.

You can check the global TCP settings with the following command:

netsh interface tcp show global

You can disable TOE for TCP using the command:

netsh int tcp set global chimney=disabled

Before deciding whether to disable TOE, it is recommended that you first look at the TOE usage statistics for TCP using the command:

netsh interface tcp show chimneystats

You can disable task offloading for IP using the following command:

netsh int ip set global taskoffload=disabled

You can check the global IP settings with another command:

netsh int ip show global

Network Direct Memory Access

NetDMA is quite an interesting feature. It is useful when TOE is not supported and you need to speed up the processing of network connections. NetDMA copies data from the receive buffers of the network stack directly into application buffers without involving the CPU, which takes this task off the processor.

To use NetDMA, you need hardware that supports it; in the case of Windows, this is a processor that supports the Intel I/O Acceleration Technology (I/OAT) family of technologies. Enabling NetDMA on AMD hardware, unfortunately, has no effect.

Note that TOE and NetDMA are mutually exclusive; these features cannot be enabled in the system at the same time.

To enable NetDMA in Windows Server 2008 R2, you can use the following command:

netsh interface tcp set global netdma=enabled

You can check the status of the feature using another command:

netsh interface tcp show global

Default SNP Settings

Windows Server 2008 R2

| Parameter | Default value |

|---|---|

| TCP Chimney Offload | automatic |

| Receive Side Scaling (RSS) | enabled |

| NetDMA | enabled |

"Automatic" for TCP Chimney Offload means that the feature is enabled if the "TCP Chimney Offload" setting is enabled on the network adapter and the adapter uses a high-speed connection such as 10 Gb Ethernet.

Windows Server 2012, Windows Server 2012 R2

| Parameter | Default value |

|---|---|

| TCP Chimney Offload | disabled |

| Receive Side Scaling (RSS) | enabled |

| NetDMA | not supported |
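To see quickly whether a server still has its default SNP settings, the output of netsh interface tcp show global can be compared with the tables above. The Python sketch below is a rough illustration under several assumptions: the function names are hypothetical, the setting labels are those typically shown on English-language systems and may differ on yours, and the sample text is an illustrative excerpt rather than captured output.

```python
# Defaults taken from the tables above; None marks a setting that is not supported.
DEFAULTS = {
    "Windows Server 2008 R2": {"chimney": "automatic", "rss": "enabled", "netdma": "enabled"},
    "Windows Server 2012": {"chimney": "disabled", "rss": "enabled", "netdma": None},
}

# Assumed labels in the English netsh output.
LABELS = {
    "Receive-Side Scaling State": "rss",
    "Chimney Offload State": "chimney",
    "NetDMA State": "netdma",
}


def parse_tcp_global(text):
    """Collect the SNP-related settings from 'label : value' lines."""
    settings = {}
    for line in text.splitlines():
        label, sep, value = line.partition(":")
        key = LABELS.get(label.strip())
        if sep and key:
            settings[key] = value.strip().lower()
    return settings


def deviations(settings, os_name):
    """Return {setting: (current, default)} for values that differ from the default."""
    return {
        key: (settings.get(key), default)
        for key, default in DEFAULTS[os_name].items()
        if default is not None and settings.get(key) != default
    }


SAMPLE = """\
Receive-Side Scaling State          : enabled
Chimney Offload State               : disabled
NetDMA State                        : enabled
"""

print(deviations(parse_tcp_global(SAMPLE), "Windows Server 2008 R2"))
```

A deviation is not necessarily a problem; for SharePoint web servers, for example, a disabled TCP Chimney Offload matches the recommendation given earlier.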