Everything you wanted to know about stack traces and hip dumps. Part 1

Practice has shown that the hardcore transcripts from our reports go well, so we decided to continue. Today we have on our menu a mixture of approaches to searching and analyzing errors and caches, seasoned with a pinch of useful tools, prepared on the basis of a report by Andrey Pangin aka apangin from Odnoklassniki on one of the JUGs (this was a dopped version of his report from JPoint 2016). In a seven-minute two-hour report, Andrei talks in detail about stack traces and hip dumps.

The post was just huge, so we split it into two parts. Now you are reading the first part, the second part is here .

Today I will talk about stack traces and hip dumps - a topic, on the one hand, known to everyone, on the other - allowing me to constantly open something new (I even found a bug in the JVM while I was preparing this topic).

When I did a training run of this report in our office, one of my colleagues asked: “Is all this very interesting, but in practice, is this useful to anyone at all?” After this conversation, the first slide in my presentation, I added a page with questions about the topic on StackOverflow. So this is relevant.

I myself work as a leading programmer at Odnoklassniki. And it so happened that often I have to work with the internals of Java - to tune it, look for bugs, pull something through system classes (sometimes not entirely legal ways). From there, I got most of the information that I wanted to present to you today. Of course, my previous experience helped me a lot in this: for 6 years I worked at Sun Microsystems, I was directly involved in the development of a virtual Java machine. So now I know this topic from within the JVM as well as from the development user.

When a novice developer writes his “Hello world!”, The execution pops up and he is shown a stack trace where this error occurred. So the majority have some ideas about stack traces.

Let us go straight to the examples.

I wrote a small program that performs the following experiment in a cycle of 100 million times: it creates an array of 10 random elements of type long and checks if it has been sorted or not.

In fact, he considers the probability of getting a sorted array, which is approximately equal

What will happen? Execution, going out of the array.

Let's figure out what's the matter. Our console displays:

but there are no stack traces. Where to go?

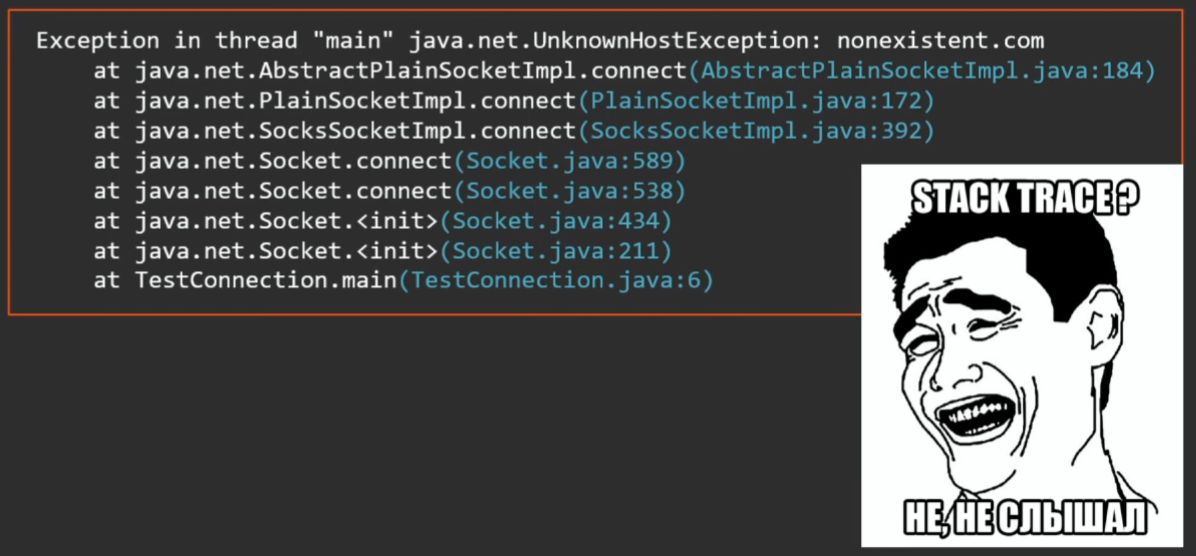

In HotSpot JVM there is such an optimization: the executions that the JVM itself throws from the hot code, and in this case the code is hot — it twitches 100 million times, stack traces are not generated.

This can be fixed using a special key:

Now try to run the example. We get all the same, only all the stack traces in place.

Such optimization works for all implicit executions that the JVM throws: going beyond the boundaries of the array, dereferencing the null pointer, etc.

Since they came up with optimization, does it need it for some reason? It is clear that it is more convenient for the programmer when there are stack traces.

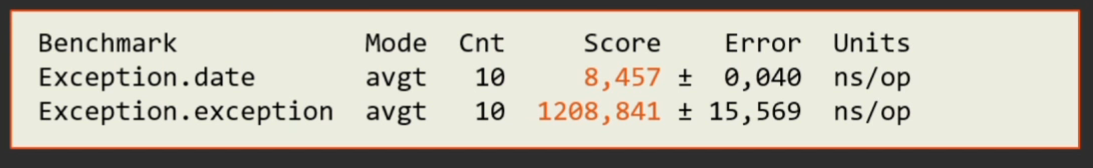

Let's measure how much it costs to create an execution (let's compare it with some simple Java object like Date).

Using JMH, we will write a simple benchmark and measure how many nanoseconds both operations take.

It turns out that creating an execution is 150 times more expensive than a regular object.

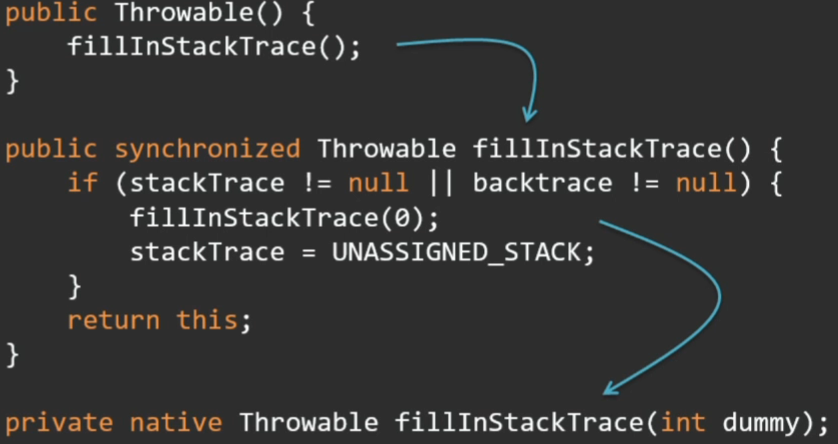

And here it is not so simple. For a virtual machine, the execution is not different from any other object, but the solution lies in the fact that almost all the construction designers in one way or another are reduced to calling the fillInStackTrace method, which fills the stack trace of this execution. It is filling the stack trace that takes time.

This method, in turn, is native, falls into the VM runtime and walks along the stack there, collects all frames.

The fillInStackTrace method is public, not final. Let's just redefine it:

Now creating a regular object and execution without a stack trace takes the same amount of time.

There is another way to create an exit without a stack trace. Starting with Java 7, Throwable and Exception have a protected constructor with an additional parameter writableStackTrace:

If false is passed there, then the stack trace will not be generated, and creation of the execution will be very fast.

Why do you need executions without stack traces? For example, if execution is used in code as a way to quickly get out of a loop. Of course, it’s better not to do this, but there are times when it really gives a performance boost.

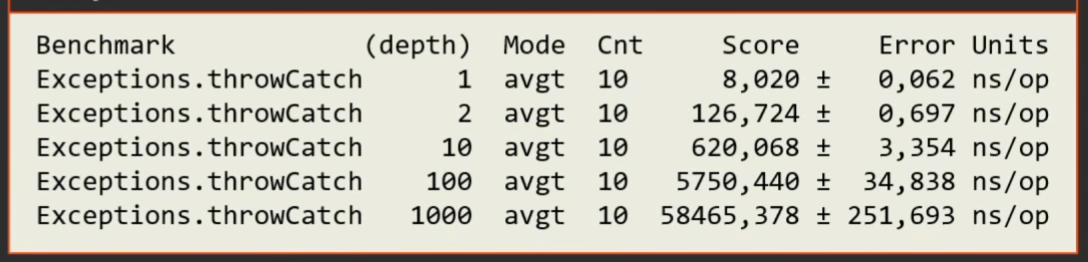

And how much does it cost to quit execution?

Consider different cases: when he throws himself and is caught in one method, as well as situations with different stack depths.

Here is what the measurements give:

i.e. if we have a shallow depth (the execution is caught in the same frame or a frame higher - depth 0 or 1), the execution is worth nothing. But as soon as the depth of the stack becomes large, the costs are of a completely different order. At the same time, a clear linear relationship is observed: the “cost” of exclusion almost linearly depends on the depth of the stack.

Not only is it worth getting the stack trace, but also further manipulations - printing, sending over the network, recording, are all that the getStackTrace method is used for, which translates the saved stack trace into Java objects.

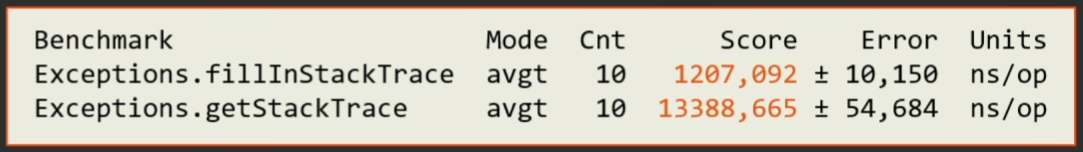

It can be seen that converting the stack trace is 10 times more expensive than receiving it:

Why is this happening?

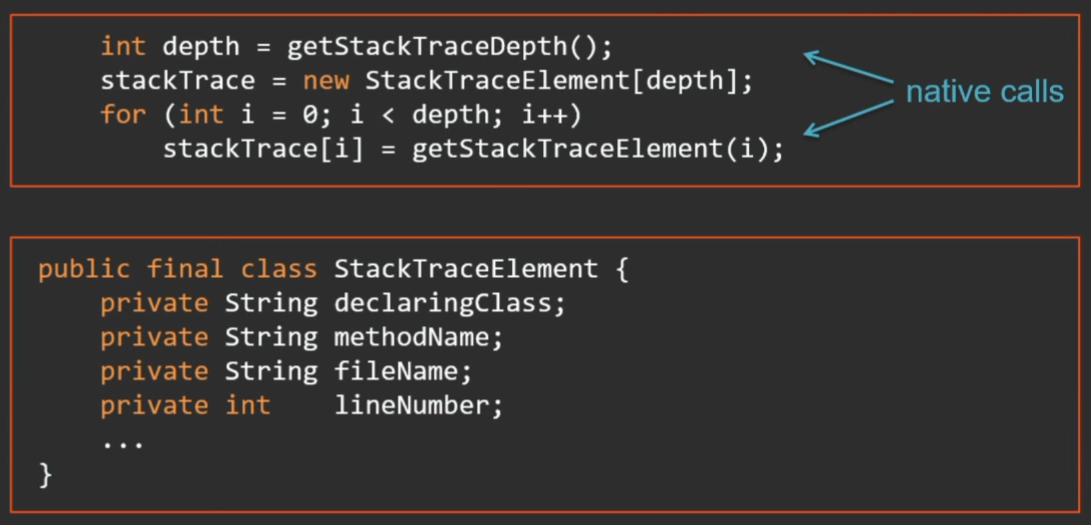

Here is the getStackTrace method in the JDK source:

First, through the call to the native method, we find out the stack depth, then in the loop to this depth we call the native method to get the next frame and convert it to a StackTraceElement object (this is a normal Java object with a bunch of fields). Not only is it a long time, the procedure consumes a lot of memory.

Moreover, in Java 9 this object is supplemented with new fields (in connection with the well-known modularization project) - now each frame is assigned a note about which module it is from.

Hi to those who parse expression using regular expressions. Get ready for surprises in Java 9 - modules will also appear.

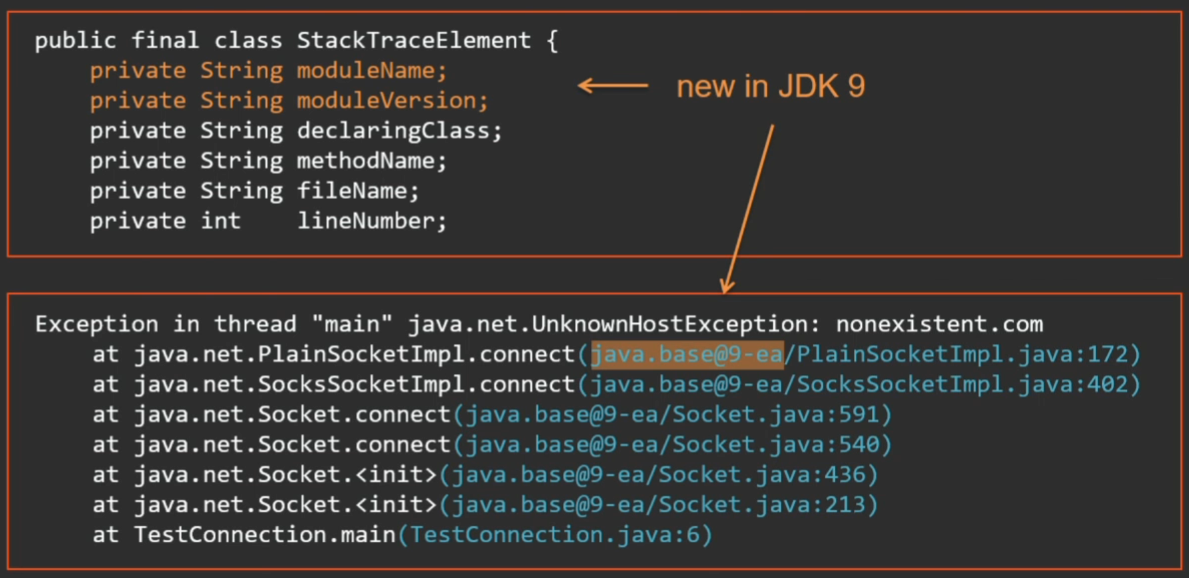

To find out what the program does, the easiest way is to take a thread dump, for example, with jstack utility.

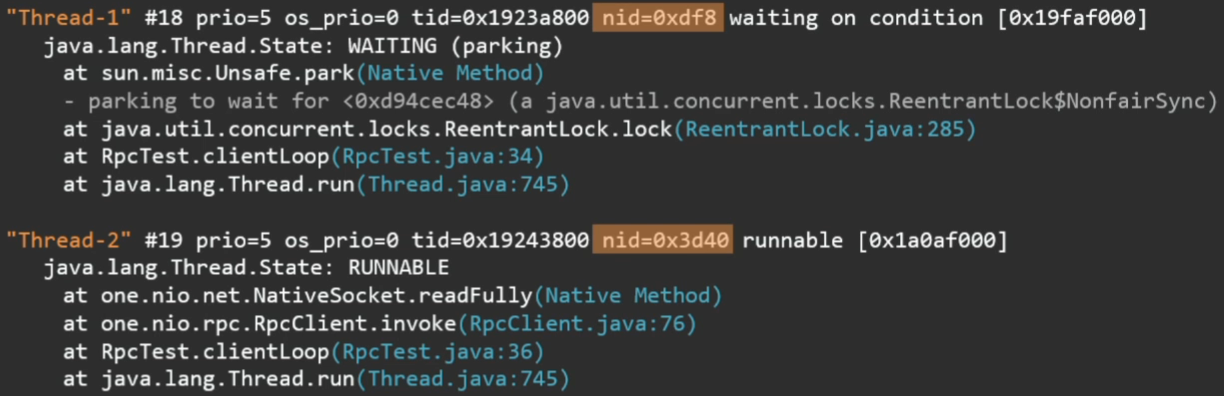

Fragments of the output of this utility:

What is visible here? What are the threads, in what state are they and their current stack.

Moreover, if streams have captured some locks, are waiting to enter the synchronized section or to take ReentrantLock, this will also be reflected in the stack trace.

Sometimes a little-known identifier is useful:

It is directly related to the thread ID in the operating system. For example, if you look at the top program on Linux, which threads do the CPU eat the most, the pid of the stream is the very nid that is shown in the thread dump. You can immediately find which Java thread it matches.

In the case of monitors (with synchronized objects), it will be written right in the thread dump which thread and which monitors it holds, who is trying to capture them.

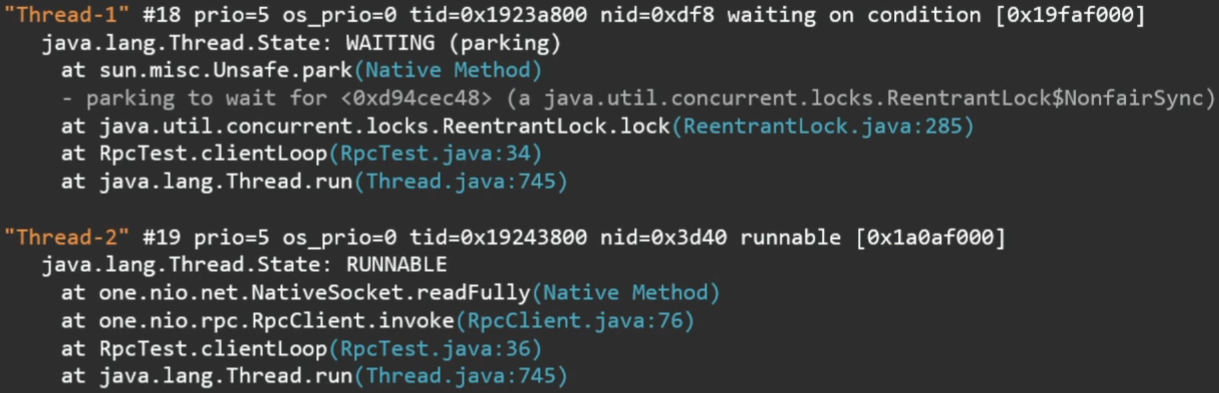

In the case of ReentrantLock, this, unfortunately, is not so. Here you can see how Thread 1 is trying to capture a certain ReentrantLock, but it is not visible who is holding this lock. For this case, the VM has an option:

If we run the same with PrintConcurrentLocks, we will see ReentrantLock in the thread dump.

This is the exact id of the lock. It can be seen that Thread 2 captured it.

If the option is so good, why not make it "default"?

She's also worth something. To print information about which thread which ReentrantLocks holds, the JVM runs through the entire Java heap, looks for all ReentrantLocks there, compares them with threads and only then displays this information (the thread does not have information about which locks it captured; information is only in the opposite direction - which lock is associated with which thread).

In this example, the names of the threads (Thread 1 / Thread 2) do not understand what they refer to. My advice from practice: if you have some kind of long operation, for example, the server processes client requests or, conversely, the client goes to several servers, set the thread with a friendly name (as in the case below, directly the IP of the server to which the client is now coming). And then in the dump of the stream it will immediately be visible what kind of server it is waiting for a response from.

Enough theory. Let's get back to practice. I have cited this example more than once.

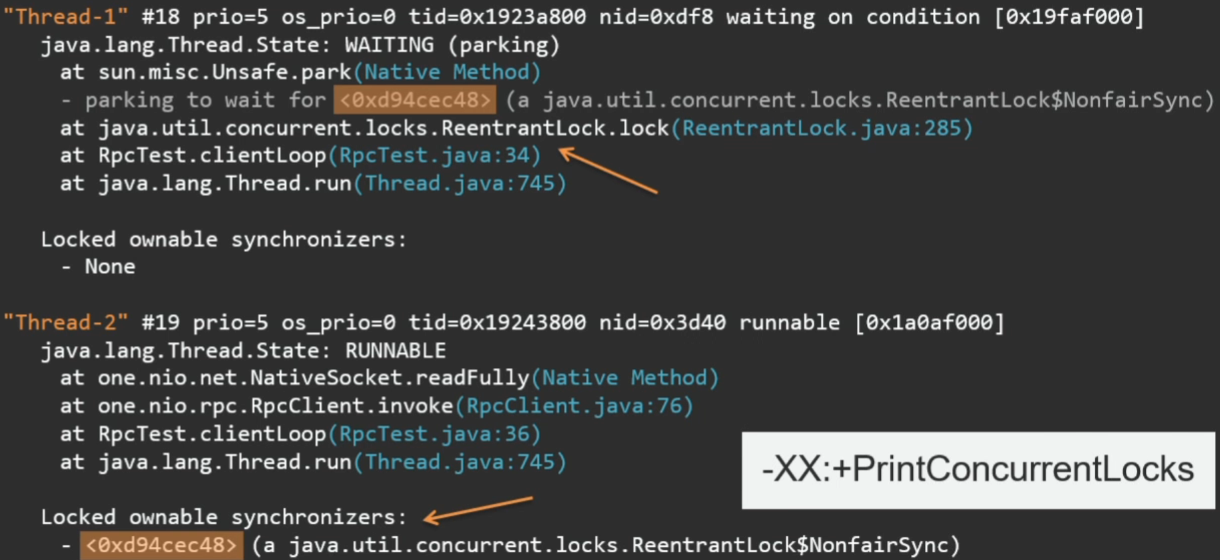

Run the program 3 times in a row. 2 times it displays the sum of numbers from 0 to 100 (not including 100), the third does not want to. Let's watch the dump thread:

The first thread turns out to be RUNNABLE, and our reduce is executed. But look, what an interesting point: Thread.State is kind of like RUNNABLE, but it is written that the stream is in Object.wait ().

This, too, was not clear to me. I even wanted to report a bug, but it turns out that such a bug was instituted many years ago and is closed with the wording: “not an issue, will not fix”.

This program really has a deadlock. Its reason is class initialization .

The expression is executed in the static initializer of the ParallelSum class:

But since the stream is parallel, execution occurs in separate ForkJoinPool threads, from which the lambda body is called:

The lambda code is written by the Java compiler directly in the ParallelSum class as a private method. It turns out that from ForkJoinPool we are trying to access the ParallelSum class, which is currently at the initialization stage. Therefore, the threads begin to wait when the initialization of the class ends, and it cannot end, because it expects the calculation of this convolution. Deadlock.

Why was the amount considered at first? It was just luck. We have a small number of elements summed up, and sometimes everything is executed in one thread (the other thread just does not have time).

But why, then, the thread in the RUNNABLE stack trace? If you read the documentation for Thread.State, it becomes clear that there can be no other state here. There can be no BLOCKED state, since the thread is not blocked on the Java monitor, there is no synchronized section, and there can be no WAITING state, because there are no calls to Object.wait () here. Synchronization occurs on the internal object of the virtual machine, which, generally speaking, does not even have to be a Java object.

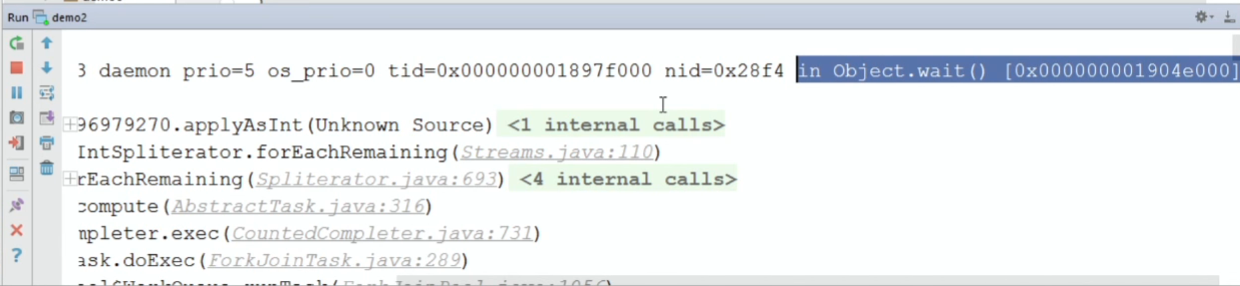

Imagine a situation: in a heap of places in our application something is logged. It would be useful to know from which place this or that line appeared.

There is no preprocessor in Java, so there is no way to use the __FILE__, __LINE__ macros, as in C (these macros are converted to the current file name and string at the compilation stage). Therefore, there are no other ways to supplement the output with the file name and line number of the code from where it was printed, except through stack traces.

We generate the execution, we get the stack trace from it, take in this case the second frame (zero is the getLocation method, and the first calls the warning method).

As we know, getting a stack trace and, especially, converting it into a stack trace element is very expensive. And we need one frame. Is it somehow easier to do (without execution)?

In addition to getStackTrace, the exception has a getStackTrace method on the Thread object.

Will it be faster?

Not. The JVM does no magic, everything will work here through the same reception with exactly the same stack trace.

But there is still a tricky way:

I love all sorts of private things: Unsafe, SharedSecrets, etc.

There is an accessor that allows you to get the StackTraceElement of a particular frame (without having to convert the entire stack trace to Java objects). This will work faster. But there is bad news: in Java 9 this will not work. There a lot of work has been done to refactor everything related to stack traces, and now there are simply no such methods there.

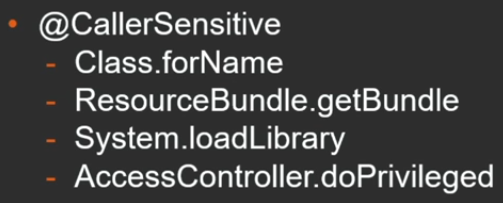

A design that allows you to get a single frame can be useful in the so-called Caller-sensitive methods - methods whose results may depend on who calls them. In applications, you often encounter such methods, but there are many similar examples in the JDK itself:

Depending on who calls Class.forName, the class will be searched in the corresponding loader class (the class that called this method); similarly, with getting a ResourceBundle and loading the System.loadLibrary library. Also, information about who is calling is useful when using various methods that check permissions (and does this code have the right to call this method). For this case, the "secret" API provides the getCallerClass method, which is actually a JVM intrinsic and generally costs almost nothing.

As has been said many times, a private API is an evil that is highly discouraged to use (you run the risk of running into problems like those that Unsafe previously called). Therefore, the JDK developers thought about the fact that once they use this, they need a legal alternative - a new API to bypass threads. Basic requirements for this API:

It is known that in the public release of Java 9 there will be java.lang.StackWalker.

Obtaining an instance of it is very simple - using the getInstance method. It has several options - the default StackWalker or slightly configurable options:

Also, for optimization, you can set the approximate depth that is necessary (so that the JVM can optimize the receipt of stack frames in batch).

The simplest example of how to use this:

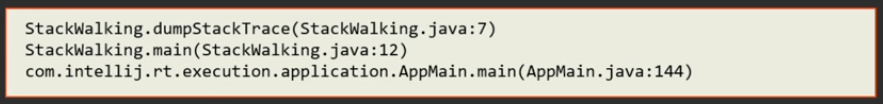

We take StackWalker and we call the forEach method so that it bypasses all frames. As a result, we get such a simple stack trace:

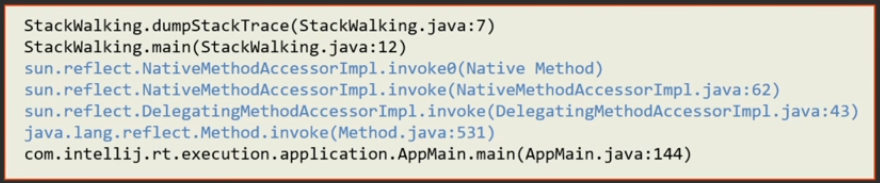

The same with the SHOW_REFLECT_FRAMES option:

In this case, methods related to calling through reflection will be added:

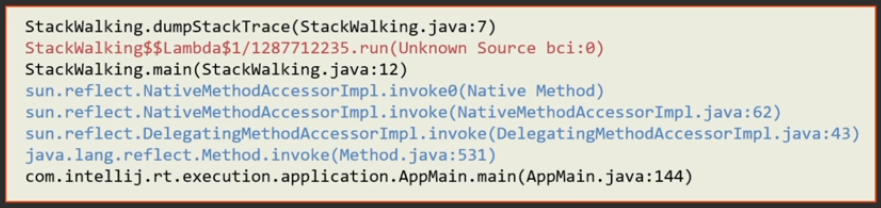

If you add the SHOW_HIDDEN_FRAMES option (by the way, it includes SHOW_REFLECT_FRAMES, i.e. reflex frames will also be shown):

Methods of dynamically generated lambda classes will appear in the stack trace:

And now the most important method that the StackWalker API has is a walk method with such a cunning, obscure signature with a bunch of generics:

The walk method takes a function from the stack frame.

His work is easier to show with an example.

Despite the fact that it all looks scary, how to use it is obvious. The stream is transferred to the function, and already on the stream you can carry out all the usual operations. For example, the getCallerFrame method would look like this, which gets only the second frame: the first 2 are skipped, then findFirst is called:

The walk method returns the result that this stream function returns. Everything is simple.

For this particular case (when you just need to get a Caller class) there is a special shortcut method:

Another example is more complicated.

We go around all the frames, leave only those that belong to the org.apache package, and display the first 10 in the list.

An interesting question: why such a long signature with a bunch of generics? Why not just make StackWalker a method that returns a stream?

If you give an API that returns a stream, the JDK loses control over what is being done on this stream. You can further put this stream somewhere, give it to another thread, try to use it 2 hours after receiving (the stack that we tried to bypass is long lost, and the thread can be killed for a long time). Thus, it will not be possible to provide the “laziness” of the Stack Walker API.

The main point of the Stack Walker API: while you are inside the walk, you have a fixed state of the stack, so all operations on this stack can be done lazy.

For dessert, a little more interesting.

As always, JDK developers are hiding a bunch of treasures from us. And in addition to the usual stack frames, they made live stack frames for some of their needs, which differ from the usual ones in that they have additional methods that allow not only to obtain information about the method and class, but also about local variables captured by monitors and values of the express stack of this stack frame.

The protection here is not so hot: the class was simply made non-public. But who is stopping us from taking reflection and trying it out? (Note: in current JDK 9 builds, access to the non-public API through reflexion is prohibited. To enable it, you need to add the JVM option

We try using this example. There is a program that recursively searches for a way out of the maze. We have a square box size x size. There is a visit method with current coordinates. We are trying to go left / right / up / down from the current cell (if they are not busy). If you get from the right-lower cell to the left-upper one, we think that we have found a way out and print the stack.

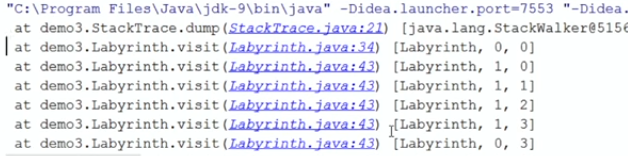

Run:

If I do a regular dumpStack, which was already in Java 8, we get a regular stack trace, from which nothing is clear. Obviously, the recursive method calls itself, but it is interesting at what step (and with what coordinate values) each method is called.

Replace the standard dumpStack with our StackTrace.dump, which uses live stack frames through reflection:

First of all, you need to get the appropriate StackWalker by calling the getStackWalker method. All the frames that will be transferred to getStackWalker will in fact be instances of the live stack frame, which has additional methods, in particular, getLocals for obtaining local variables.

We start. We get the same thing, but we see the whole path from the maze in the form of values of local variables:

На этом мы заканчиваем первую часть поста. Вторая часть здесь.

Лично встретиться с Андреем в Москве можно будет уже совсем скоро — 7-8 апреля на JPoint 2017. В этот раз он выступит с докладом «JVM-профайлер с чувством такта», в котором расскажет, как можно получить честные замеры производительности приложения, комбинируя несколько подходов к профилированию. Доклад будет «всего» часовой, зато в дискуссионной зоне никто не будет ограничивать вас от вопросов и горячих споров!

Кроме этого, на JPoint есть целая куча крутых докладов практически обо всем из мира Java — обзор планируемых докладов мы давали в другом посте, а просто программу конференции вы найдете на сайте мероприятия.

The post was just huge, so we split it into two parts. Now you are reading the first part, the second part is here .

Today I will talk about stack traces and hip dumps - a topic, on the one hand, known to everyone, on the other - allowing me to constantly open something new (I even found a bug in the JVM while I was preparing this topic).

When I did a training run of this report in our office, one of my colleagues asked: “Is all this very interesting, but in practice, is this useful to anyone at all?” After this conversation, the first slide in my presentation, I added a page with questions about the topic on StackOverflow. So this is relevant.

I myself work as a leading programmer at Odnoklassniki. And it so happened that often I have to work with the internals of Java - to tune it, look for bugs, pull something through system classes (sometimes not entirely legal ways). From there, I got most of the information that I wanted to present to you today. Of course, my previous experience helped me a lot in this: for 6 years I worked at Sun Microsystems, I was directly involved in the development of a virtual Java machine. So now I know this topic from within the JVM as well as from the development user.

Stack traces

Stack traces exception

When a novice developer writes his “Hello world!”, The execution pops up and he is shown a stack trace where this error occurred. So the majority have some ideas about stack traces.

Let us go straight to the examples.

I wrote a small program that performs the following experiment in a cycle of 100 million times: it creates an array of 10 random elements of type long and checks if it has been sorted or not.

package demo1;

import java.util.concurrent.ThreadLocalRandom;

public class ProbabilityExperiment {

private static boolean isSorted(long[] array) {

for (int i = 0; i < array.length; i++) {

if (array[i] > array[i + 1]) {

return false;

}

}

return true;

}

public void run(int experiments, int length) {

int sorted = 0;

for (int i = 0; i < experiments; i++) {

try {

long[] array = ThreadLocalRandom.current().longs(length).toArray();

if (isSorted(array)) {

sorted++;

}

} catch (Exception e) {

e.printStackTrace();

}

}

System.out.printf("%d of %d arrays are sorted\n", sorted, experiments);

}

public static void main(String[] args) {

new ProbabilityExperiment().run(100_000_000, 10);

}

}In fact, he considers the probability of getting a sorted array, which is approximately equal

1/n!. As often happens, the programmer made a mistake by one:for (int i = 0; i < array.length; i++)What will happen? Execution, going out of the array.

Let's figure out what's the matter. Our console displays:

java.lang.ArrayIndexOutOfBoundsExceptionbut there are no stack traces. Where to go?

In HotSpot JVM there is such an optimization: the executions that the JVM itself throws from the hot code, and in this case the code is hot — it twitches 100 million times, stack traces are not generated.

This can be fixed using a special key:

-XX:-OmitStackTraceInFastThrowNow try to run the example. We get all the same, only all the stack traces in place.

Such optimization works for all implicit executions that the JVM throws: going beyond the boundaries of the array, dereferencing the null pointer, etc.

Since they came up with optimization, does it need it for some reason? It is clear that it is more convenient for the programmer when there are stack traces.

Let's measure how much it costs to create an execution (let's compare it with some simple Java object like Date).

@Benchmark

public Object date() {

return new Date();

}

@Benchmark

public Object exception() {

return new Exception();

}

Using JMH, we will write a simple benchmark and measure how many nanoseconds both operations take.

It turns out that creating an execution is 150 times more expensive than a regular object.

And here it is not so simple. For a virtual machine, the execution is not different from any other object, but the solution lies in the fact that almost all the construction designers in one way or another are reduced to calling the fillInStackTrace method, which fills the stack trace of this execution. It is filling the stack trace that takes time.

This method, in turn, is native, falls into the VM runtime and walks along the stack there, collects all frames.

The fillInStackTrace method is public, not final. Let's just redefine it:

@Benchmark

public Object exceptionNoStackTrace() {

return new Exception() {

@Override

public Throwable fillInStackTrace() {

return this;

}

};

}Now creating a regular object and execution without a stack trace takes the same amount of time.

There is another way to create an exit without a stack trace. Starting with Java 7, Throwable and Exception have a protected constructor with an additional parameter writableStackTrace:

protected Exception(String message, Throwable cause,

boolean enableSuppression,

boolean writableStackTrace);If false is passed there, then the stack trace will not be generated, and creation of the execution will be very fast.

Why do you need executions without stack traces? For example, if execution is used in code as a way to quickly get out of a loop. Of course, it’s better not to do this, but there are times when it really gives a performance boost.

And how much does it cost to quit execution?

Consider different cases: when he throws himself and is caught in one method, as well as situations with different stack depths.

@Param("1", "2", "10", "100", "1000"})

int depth;

@Benchmark

public Object throwCatch() {

try {

return recursive(depth);

} catch (Exception e) {

return e;

}

}

Here is what the measurements give:

i.e. if we have a shallow depth (the execution is caught in the same frame or a frame higher - depth 0 or 1), the execution is worth nothing. But as soon as the depth of the stack becomes large, the costs are of a completely different order. At the same time, a clear linear relationship is observed: the “cost” of exclusion almost linearly depends on the depth of the stack.

Not only is it worth getting the stack trace, but also further manipulations - printing, sending over the network, recording, are all that the getStackTrace method is used for, which translates the saved stack trace into Java objects.

@Benchmark

public Object fillInStackTrace() {

return new Exception();

}

@Benchmark

public Object getStackTrace() {

return new Exception().getStackTrace();

}

It can be seen that converting the stack trace is 10 times more expensive than receiving it:

Why is this happening?

Here is the getStackTrace method in the JDK source:

First, through the call to the native method, we find out the stack depth, then in the loop to this depth we call the native method to get the next frame and convert it to a StackTraceElement object (this is a normal Java object with a bunch of fields). Not only is it a long time, the procedure consumes a lot of memory.

Moreover, in Java 9 this object is supplemented with new fields (in connection with the well-known modularization project) - now each frame is assigned a note about which module it is from.

Hi to those who parse expression using regular expressions. Get ready for surprises in Java 9 - modules will also appear.

Let's summarize

- creation of the object itself is cheap;

- it takes time to get his stack trace;

- even more expensive is converting this internal stack trace to a Java object in a StackTraceElement. The complexity of this case is directly proportional to the depth of the stack.

- throwing the execution is fast, it costs almost nothing (almost like an unconditional transition),

- but only if the execution is caught in the same frame. Here it is also necessary to add that the JIT is able to inline methods with us, so a single compiled frame can include several Java methods that are inline into each other. But if the execution is caught somewhere deeper on the stack, its high cost is proportional to the depth of the stack.

A couple of tips:

- turn off optimization on production, perhaps this will save a lot of time on debugging:

-XX:-OmitStackTraceInFastThrow

- Do not use executions to control program flow; this is not considered good practice;

- but if you still resort to this method, make sure that the executions are fast and do not create stack traces once again.

Stack trades in thread dumps

To find out what the program does, the easiest way is to take a thread dump, for example, with jstack utility.

Fragments of the output of this utility:

What is visible here? What are the threads, in what state are they and their current stack.

Moreover, if streams have captured some locks, are waiting to enter the synchronized section or to take ReentrantLock, this will also be reflected in the stack trace.

Sometimes a little-known identifier is useful:

It is directly related to the thread ID in the operating system. For example, if you look at the top program on Linux, which threads do the CPU eat the most, the pid of the stream is the very nid that is shown in the thread dump. You can immediately find which Java thread it matches.

In the case of monitors (with synchronized objects), it will be written right in the thread dump which thread and which monitors it holds, who is trying to capture them.

In the case of ReentrantLock, this, unfortunately, is not so. Here you can see how Thread 1 is trying to capture a certain ReentrantLock, but it is not visible who is holding this lock. For this case, the VM has an option:

-XX:+PrintConcurrentLocksIf we run the same with PrintConcurrentLocks, we will see ReentrantLock in the thread dump.

This is the exact id of the lock. It can be seen that Thread 2 captured it.

If the option is so good, why not make it "default"?

She's also worth something. To print information about which thread which ReentrantLocks holds, the JVM runs through the entire Java heap, looks for all ReentrantLocks there, compares them with threads and only then displays this information (the thread does not have information about which locks it captured; information is only in the opposite direction - which lock is associated with which thread).

In this example, the names of the threads (Thread 1 / Thread 2) do not understand what they refer to. My advice from practice: if you have some kind of long operation, for example, the server processes client requests or, conversely, the client goes to several servers, set the thread with a friendly name (as in the case below, directly the IP of the server to which the client is now coming). And then in the dump of the stream it will immediately be visible what kind of server it is waiting for a response from.

Enough theory. Let's get back to practice. I have cited this example more than once.

package demo2;

import java.util.stream.IntStream;

public class ParallelSum {

static int SUM = IntStream.range(0, 100).parallel().reduce(0, (x, y) -> x + y);

public static void main(String[] args) {

System.out.println(SUM);

}

}Run the program 3 times in a row. 2 times it displays the sum of numbers from 0 to 100 (not including 100), the third does not want to. Let's watch the dump thread:

The first thread turns out to be RUNNABLE, and our reduce is executed. But look, what an interesting point: Thread.State is kind of like RUNNABLE, but it is written that the stream is in Object.wait ().

This, too, was not clear to me. I even wanted to report a bug, but it turns out that such a bug was instituted many years ago and is closed with the wording: “not an issue, will not fix”.

This program really has a deadlock. Its reason is class initialization .

The expression is executed in the static initializer of the ParallelSum class:

static int SUM = IntStream.range(0, 100).parallel().reduce(0, (x, y) -> x + y);But since the stream is parallel, execution occurs in separate ForkJoinPool threads, from which the lambda body is called:

(x, y) -> x + yThe lambda code is written by the Java compiler directly in the ParallelSum class as a private method. It turns out that from ForkJoinPool we are trying to access the ParallelSum class, which is currently at the initialization stage. Therefore, the threads begin to wait when the initialization of the class ends, and it cannot end, because it expects the calculation of this convolution. Deadlock.

Why was the amount considered at first? It was just luck. We have a small number of elements summed up, and sometimes everything is executed in one thread (the other thread just does not have time).

But why, then, the thread in the RUNNABLE stack trace? If you read the documentation for Thread.State, it becomes clear that there can be no other state here. There can be no BLOCKED state, since the thread is not blocked on the Java monitor, there is no synchronized section, and there can be no WAITING state, because there are no calls to Object.wait () here. Synchronization occurs on the internal object of the virtual machine, which, generally speaking, does not even have to be a Java object.

Logging Stack Trace

Imagine a situation: in a heap of places in our application something is logged. It would be useful to know from which place this or that line appeared.

There is no preprocessor in Java, so there is no way to use the __FILE__, __LINE__ macros, as in C (these macros are converted to the current file name and string at the compilation stage). Therefore, there are no other ways to supplement the output with the file name and line number of the code from where it was printed, except through stack traces.

public static String getLocation() {

StackTraceElement s = new Exception().getStackTrace()[2];

return s.getFileName() + ':' + s.getLineNumber();

}

We generate the execution, we get the stack trace from it, take in this case the second frame (zero is the getLocation method, and the first calls the warning method).

As we know, getting a stack trace and, especially, converting it into a stack trace element is very expensive. And we need one frame. Is it somehow easier to do (without execution)?

In addition to getStackTrace, the exception has a getStackTrace method on the Thread object.

Thread.current().getStackTrace()Will it be faster?

Not. The JVM does no magic, everything will work here through the same reception with exactly the same stack trace.

But there is still a tricky way:

public static String getLocation() {

StackTraceElement s = sun.misc.SharedSecrets.getJavaLangAccess()

.getStackTraceElement(new Exception(), 2);

return s.getFileName() + ':' + s.getLineNumber();

}

I love all sorts of private things: Unsafe, SharedSecrets, etc.

There is an accessor that allows you to get the StackTraceElement of a particular frame (without having to convert the entire stack trace to Java objects). This will work faster. But there is bad news: in Java 9 this will not work. There a lot of work has been done to refactor everything related to stack traces, and now there are simply no such methods there.

A design that allows you to get a single frame can be useful in the so-called Caller-sensitive methods - methods whose results may depend on who calls them. In applications, you often encounter such methods, but there are many similar examples in the JDK itself:

Depending on who calls Class.forName, the class will be searched in the corresponding loader class (the class that called this method); similarly, with getting a ResourceBundle and loading the System.loadLibrary library. Also, information about who is calling is useful when using various methods that check permissions (and does this code have the right to call this method). For this case, the "secret" API provides the getCallerClass method, which is actually a JVM intrinsic and generally costs almost nothing.

sun.reflect.Reflection.getCallerClassAs has been said many times, a private API is an evil that is highly discouraged to use (you run the risk of running into problems like those that Unsafe previously called). Therefore, the JDK developers thought about the fact that once they use this, they need a legal alternative - a new API to bypass threads. Basic requirements for this API:

- so that you can bypass only part of the frames (if we need just a few top frames);

- the ability to filter frames (do not show unnecessary frames related to the framework or system classes);

- so that these frames are constructed in a lazy way (lazy) - if we do not need to get information about which file it is associated with, this information is not prematurely retrieved;

- as in the case of getCallerClass - we need not the class name, but the java.lang.Class instance itself.

It is known that in the public release of Java 9 there will be java.lang.StackWalker.

Obtaining an instance of it is very simple - using the getInstance method. It has several options - the default StackWalker or slightly configurable options:

- the RETAIN_CLASS_REFERENCE option means that you need not class names, but instances;

- other options allow you to show frames related to system classes and reflection classes in the stack trace (by default they will not be shown in the stack trace).

Also, for optimization, you can set the approximate depth that is necessary (so that the JVM can optimize the receipt of stack frames in batch).

The simplest example of how to use this:

StackWalker sw = StackWalker.getInstance();

sw.forEach(System.out::println);We take StackWalker and we call the forEach method so that it bypasses all frames. As a result, we get such a simple stack trace:

The same with the SHOW_REFLECT_FRAMES option:

StackWalker sw = StackWalker.getInstance(StackWalker.Option.SHOW_REFLECT_FRAMES);

sw.forEach(System.out::println);In this case, methods related to calling through reflection will be added:

If you add the SHOW_HIDDEN_FRAMES option (by the way, it includes SHOW_REFLECT_FRAMES, i.e. reflex frames will also be shown):

StackWalker sw = StackWalker.getInstance(StackWalker.Option.SHOW_HIDDEN_FRAMES);

sw.forEach(System.out::println);Methods of dynamically generated lambda classes will appear in the stack trace:

And now the most important method that the StackWalker API has is a walk method with such a cunning, obscure signature with a bunch of generics:

public T walk(Function, ? extends T> function) The walk method takes a function from the stack frame.

His work is easier to show with an example.

Despite the fact that it all looks scary, how to use it is obvious. The stream is transferred to the function, and already on the stream you can carry out all the usual operations. For example, the getCallerFrame method would look like this, which gets only the second frame: the first 2 are skipped, then findFirst is called:

public static StackFrame getCallerFrame() {

return StackWalker.getInstance()

.walk(stream -> stream.skip(2).findFirst())

.orElseThrow(NoSuchElementException::new);

}

The walk method returns the result that this stream function returns. Everything is simple.

For this particular case (when you just need to get a Caller class) there is a special shortcut method:

return StackWalker.getInstance(RETAIN_CLASS_REFERENCE).getCallerClass();Another example is more complicated.

We go around all the frames, leave only those that belong to the org.apache package, and display the first 10 in the list.

StackWalker sw = StackWalker.getInstance();

List frames = sw.walk(stream ->

stream.filter(sf -> sf.getClassName().startsWith("org.apache."))

.limit(10)

.collect(Collectors.toList()));

An interesting question: why such a long signature with a bunch of generics? Why not just make StackWalker a method that returns a stream?

public Stream stream(); If you give an API that returns a stream, the JDK loses control over what is being done on this stream. You can further put this stream somewhere, give it to another thread, try to use it 2 hours after receiving (the stack that we tried to bypass is long lost, and the thread can be killed for a long time). Thus, it will not be possible to provide the “laziness” of the Stack Walker API.

The main point of the Stack Walker API: while you are inside the walk, you have a fixed state of the stack, so all operations on this stack can be done lazy.

For dessert, a little more interesting.

As always, JDK developers are hiding a bunch of treasures from us. And in addition to the usual stack frames, they made live stack frames for some of their needs, which differ from the usual ones in that they have additional methods that allow not only to obtain information about the method and class, but also about local variables captured by monitors and values of the express stack of this stack frame.

/* package-private */

interface LiveStackFrame extends StackFrame {

public Object[] getMonitors();

public Object[] getLocals();

public Object[] getStack();

public static StackWalker getStackWalker();

}

The protection here is not so hot: the class was simply made non-public. But who is stopping us from taking reflection and trying it out? (Note: in current JDK 9 builds, access to the non-public API through reflexion is prohibited. To enable it, you need to add the JVM option

--add-opens=java.base/java.lang=ALL-UNNAMED) We try using this example. There is a program that recursively searches for a way out of the maze. We have a square box size x size. There is a visit method with current coordinates. We are trying to go left / right / up / down from the current cell (if they are not busy). If you get from the right-lower cell to the left-upper one, we think that we have found a way out and print the stack.

package demo3;

import java.util.Random;

public class Labyrinth {

static final byte FREE = 0;

static final byte OCCUPIED = 1;

static final byte VISITED = 2;

private final byte[][] field;

public Labyrinth(int size) {

Random random = new Random(0);

field = new byte[size][size];

for (int x = 0; x < size; x++) {

for (int y = 0; y < size; y++) {

if (random.nextInt(10) > 7) {

field[x][y] = OCCUPIED;

}

}

}

field[0][0] = field[size - 1][size - 1] = FREE;

}

public int size() {

return field.length;

}

public boolean visit(int x, int y) {

if (x == 0 && y == 0) {

StackTrace.dump();

return true;

}

if (x < 0 || x >= size() || y < 0 || y >= size() || field[x][y] != FREE) {

return false;

}

field[x][y] = VISITED;

return visit(x - 1, y) || visit(x, y - 1) || visit(x + 1, y) || visit(x, y + 1);

}

public String toString() {

return "Labyrinth";

}

public static void main(String[] args) {

Labyrinth lab = new Labyrinth(10);

boolean exitFound = lab.visit(9, 9);

System.out.println(exitFound);

}

}Run:

If I do a regular dumpStack, which was already in Java 8, we get a regular stack trace, from which nothing is clear. Obviously, the recursive method calls itself, but it is interesting at what step (and with what coordinate values) each method is called.

Replace the standard dumpStack with our StackTrace.dump, which uses live stack frames through reflection:

package demo3;

import java.lang.reflect.Method;

import java.util.Arrays;

public class StackTrace {

private static Object invoke(String methodName, Object instance) {

try {

Class liveStackFrame = Class.forName("java.lang.LiveStackFrame");

Method m = liveStackFrame.getMethod(methodName);

m.setAccessible(true);

return m.invoke(instance);

} catch (ReflectiveOperationException e) {

throw new AssertionError("Should not happen", e);

}

}

public static void dump() {

StackWalker sw = (StackWalker) invoke("getStackWalker", null);

sw.forEach(frame -> {

Object[] locals = (Object[]) invoke("getLocals", frame);

System.out.println(" at " + frame + " " + Arrays.toString(locals));

});

}

}First of all, you need to get the appropriate StackWalker by calling the getStackWalker method. All the frames that will be transferred to getStackWalker will in fact be instances of the live stack frame, which has additional methods, in particular, getLocals for obtaining local variables.

We start. We get the same thing, but we see the whole path from the maze in the form of values of local variables:

На этом мы заканчиваем первую часть поста. Вторая часть здесь.

Лично встретиться с Андреем в Москве можно будет уже совсем скоро — 7-8 апреля на JPoint 2017. В этот раз он выступит с докладом «JVM-профайлер с чувством такта», в котором расскажет, как можно получить честные замеры производительности приложения, комбинируя несколько подходов к профилированию. Доклад будет «всего» часовой, зато в дискуссионной зоне никто не будет ограничивать вас от вопросов и горячих споров!

Кроме этого, на JPoint есть целая куча крутых докладов практически обо всем из мира Java — обзор планируемых докладов мы давали в другом посте, а просто программу конференции вы найдете на сайте мероприятия.