ClickHouse: very fast and very convenient

Victor Tarnavsky shows that it works. This is a transcript of his talk at Highload++ 2016.

Hello. My name is Victor Tarnavsky. I work at Yandex. I’ll tell you about a very fast, very fault-tolerant and super-scalable ClickHouse database for analytical tasks that we have developed.

A few words about me. I am Victor, I work at Yandex and head the department that develops analytical products such as Yandex.Metrica and Yandex.AppMetrica. I think many of you have used these products and know them. I used to write a lot of code and still do, and before that I worked on hardware development.

What will happen today

I’ll start with a little history: why we decided to build our own system, and how it happened that in the modern world, where there seems to be a solution for every problem, we still needed to create our own database. Then I’ll tell you what features ClickHouse has today. Then I want to dive a little deeper and tell you what decisions we made inside ClickHouse and why it works so fast. And at the end I want to show how ClickHouse can help you personally or the company you work for: what tasks it is worth using for and what cases you can build around it.

A bit of history

It all started in 2009. Back then we were building Yandex.Metrica, a web analytics tool, that is, a tool that website owners or developers put on their site. It is a piece of JavaScript that sends data to Yandex.Metrica. Then in Metrica you can see statistics: how many people visited the site, what they did, whether they bought a refrigerator, and so on.

From a development point of view, a web analytics system is quite a challenge. When you develop a single service or product, you design for the load of that one service: some RPS and other parameters. But when you develop a web analytics tool, you need to withstand the load of all the sites on which your tool is installed. In the case of Metrica this is a very large scale: tens of billions of events that we receive every day.

Metrica is installed on millions of websites. Hundreds of thousands of analysts sit every day and look at the Metrica interface, request reports, select filters and try to understand what is happening on their website, whether this person bought this refrigerator or not. According to external data, Metrica is one of the three largest players in this market. That is, by the number of sites and the number of people that Metrica sees, it is a product from the very top; there are practically no larger-scale products.

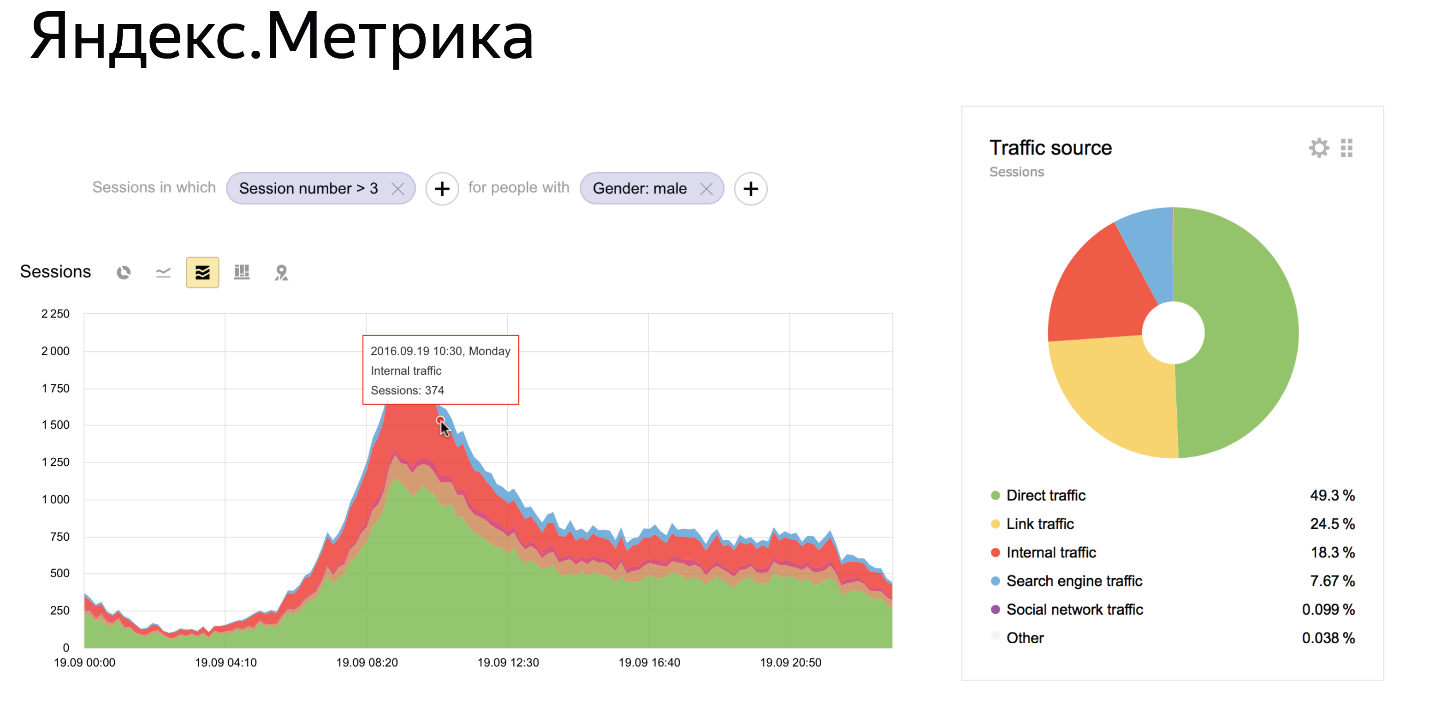

In 2009, Metrica did not look like this:

This is its current state. You can see that there are a lot of things in Metrica. There are dashboards on which you can build your reports. There are graphs that let you build something in real time. There is a sophisticated filter system that lets you view the data in any slice, for example, only for girls or only for people who came from Zimbabwe.

The question arises: how do you store the data in order to implement all these features?

And in 2009, we lived, as we call it, in the world of the “classical” approach of aggregated data. What does it look like?

Suppose you want to build a report by gender. As you know, there are three genders in the world: male, female and “not defined”, when we could not work out a person's gender. You take your favorite database, make a gender column with an enum of these three values, make a date column, because you need to build a graph, and make columns with the metrics you compute: the number of people, the number of events (for example, visits to the site), the number of refrigerators purchased, and so on. You recompute such a table, say, from cron once a day, write new rows to MySQL, and everything works fine.

In 2009, we lived in this paradigm. For each report we made, we created, in effect, a new table like this. In the case of Metrica there were more than 50 such tables with different pre-aggregated keys. Our system was significantly more complicated than this example: we could do this in real time and withstand any load, but the idea remained the same.

The problem with this approach is that you cannot implement such an interface on top of this data structure. If your table says that for the male gender on such and such a date 4 refrigerators were bought, you cannot filter this data any further: the number 4 is written there, and it is not known how many of these people are of what age, for example. So over time we came to the non-aggregated approach.

It works as follows. We keep a small number of very wide tables of source events. In the case of Metrica, these are page views. One row means one page view, and one page view has a lot of different attributes: gender, age, whether a refrigerator was bought or not, and other columns. In our case, we record more than 500 different attributes for each page view.

What does this approach allow you to do? It allows you to build any report on top of such a data model. The data can be filtered and grouped as you like, because you have all the parameters and can calculate anything. You end up with a small number of tables, in the case of Metrica they can be counted on the fingers of one hand, but they have a very large number of columns.
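To make the contrast concrete, here is a minimal Python sketch of the two approaches. The event fields and the helper names `pre_aggregate` and `report` are invented for illustration; they are not part of Metrica or ClickHouse.

```python
from collections import Counter

# Hypothetical raw page-view events: one dict per event, one key per attribute.
events = [
    {"date": "2016-11-07", "gender": "male", "age": 25, "bought_fridge": True},
    {"date": "2016-11-07", "gender": "male", "age": 41, "bought_fridge": True},
    {"date": "2016-11-07", "gender": "female", "age": 33, "bought_fridge": False},
    {"date": "2016-11-07", "gender": "male", "age": 19, "bought_fridge": False},
]


def pre_aggregate(rows):
    """Classical approach: keep only (date, gender) -> refrigerators bought."""
    totals = Counter()
    for row in rows:
        totals[(row["date"], row["gender"])] += int(row["bought_fridge"])
    return dict(totals)


def report(rows, group_by, where=lambda row: True):
    """Non-aggregated approach: filter and group the raw events at query time."""
    totals = Counter()
    for row in rows:
        if where(row):
            totals[row[group_by]] += int(row["bought_fridge"])
    return dict(totals)


# The aggregated table only knows the total per (date, gender) ...
aggregated = pre_aggregate(events)

# ... while the raw events can still answer a question nobody planned for.
by_gender_over_30 = report(events, "gender", where=lambda r: r["age"] > 30)
```

The aggregated table has lost the age attribute, so the "over 30" filter can only be answered from the raw events.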

The problem with this approach

We need a database that can quickly run arbitrary queries over such a wide and long table, and it will obviously be longer than the aggregated data.

DBMS selection

This was the main question we had in 2009. By then we already had our own experiments. We had a system that we called All Up. It already stored non-aggregated data, and if anyone remembers the old Metrica, it had a report builder in which you could select filters and dimensions and build an arbitrary report. It ran on top of this All Up system. The system was fairly simple and had many shortcomings: it was not flexible enough and not fast enough. But it showed us that this approach is applicable in principle.

We began to choose a database and formulated requirements for ourselves. We ended up with approximately the following list.

Of course, we need to execute queries as quickly as possible, because our main product advantage is executing queries over large volumes of data as quickly as possible. The larger the site for which we can compute the data quickly, the better. So that you understand the pattern: a person looks at the Metrica interface, changes some parameters, adds filters. They want to get the result quickly; they do not want to wait half an hour or until tomorrow. These queries need to run in seconds.

We need real-time data processing, both at the level of the query, when a person sits and wants to see the result quickly, and at the level of the time that passes between a refrigerator being bought on a site and the moment the site owner sees that purchase. This is also a very important advantage, for example, for news sites that publish a story and want to see quickly how fast it is growing. We need a system that lets you insert data into the database in real time and at the same time get fresh results and aggregated data out of it.

We need the ability to store petabytes of data, because we have them. Metrica is very large: its volume is measured in petabytes. Not every database can scale like that. This is a very important parameter for us.

Fault tolerance in terms of data centers. Well what does that mean?

We live in Russia and have seen a lot of things. From time to time, a tractor arrives and digs up the cable that leads to your data center, and then, quite unexpectedly, on the same day an excavator arrives and digs up the backup cable to your other data center, which is located 100 kilometers away. I wish I were joking, but this really happened. Sometimes a cat crawls into a transformer and it explodes. Sometimes a meteorite crashes and destroys a data center. In general, anything can happen, and data centers go down.

At Yandex, we design all of our services so that they survive and do not degrade in their product characteristics when a data center goes down. Any of the data centers may go offline and the services must survive. For Metrica, this means we need a database that can survive a data center failure, which is especially difficult given the previous points: storing petabytes of data and processing it in real time.

A flexible query language is also needed, because Metrica is a complex product with many different combinations of reports, filters and everything else. If the language looks like the MongoDB aggregation API, if anyone has tried to use it, then it does not suit us. We need a language that is convenient to use, so this was one of the key criteria for us.

What was the situation back then? There was nothing on the market. We managed to find databases that implemented at most three of these five requirements, and even then with some stretch; all five were out of the question. We had already built the All Up system and realized that we seemed to be able to build such a system ourselves. We started prototyping, creating a system from scratch.

These are the main ideas we pursued when creating the new system. It was clear from the start that it should be SQL, because its flexibility is enough for our tasks.

SQL is clearly an extensible language: you can add functions to it and even build all sorts of bells and whistles. It is a language with a low entry threshold: all analysts and most developers are familiar with it.

Linear scalability. What does linear mean? It means that if you have a cluster and you add servers to it, performance should increase in proportion, because the number of servers has increased. But if you take a more typical system that does not scale well enough, it can easily turn out that you add a server, then run a query across the entire cluster, and performance does not grow.

You are lucky if it stays the same; in most cases it even falls over time. This does not suit us.

From the start, we designed the system with a focus on fast query execution, because, as I said, this is our main feature. From the point of view of system design, it was clear from the very beginning that this should be a column-oriented solution. Only a column-oriented solution can deliver everything we need.

And we started to build and prototype. In 2009, we had a prototype that did some simple things.

In 2012, we started moving parts of our production to ClickHouse. Production components appeared that worked on top of ClickHouse.

In 2014, we realized that ClickHouse had grown to the point where we could build a new-generation product on it, Metrica 2.0, and we started copying the data.

This is a very non-trivial process. If anyone has tried to copy two petabytes from one place to another, you know it is not simple: you can’t do it on a flash drive.

In December 2014, we launched the new Metrica on top of ClickHouse. It was a real breakthrough: segmentation, a bunch of features, and all of it worked on top of this database.

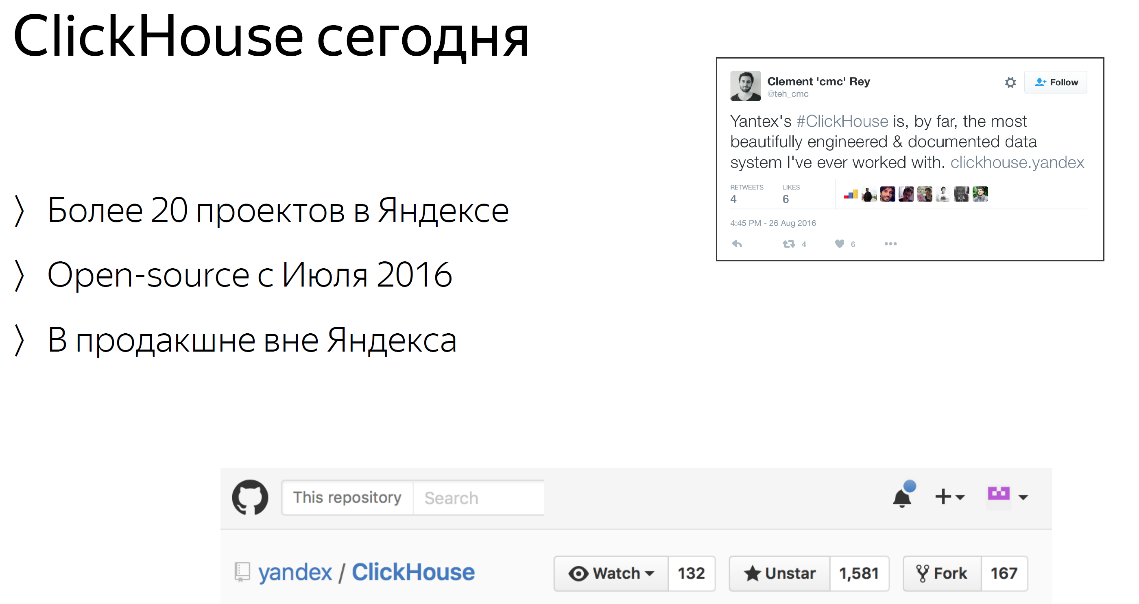

Several months ago, in June, we released ClickHouse as open source. We realized that there is a niche and that there is still nothing on the market. I had a slide saying “nothing on the market”, and we are still close to that situation; little has changed. There are not many good solutions on the market for this task. We realized that the timing was right: we could release it as open source and bring a lot of benefit to people. Many tasks are now solved very poorly, and ClickHouse solves them very well.

It took off explosively. Of course, we expected some effect from the open source release, but what happened exceeded all our expectations. Before that, we had many projects inside Yandex built on ClickHouse, but as soon as we released it, people started writing about us everywhere.

Where didn't they write about us: on Hacker News and in all the specialized publications. A bunch of large companies and a bunch of smaller companies began asking us about new solutions. The current status is that ClickHouse is used by, I can say, more than a hundred different companies outside of Yandex, either at the stage of working prototypes or already in production. There are companies that use ClickHouse directly in production and build parts of their services on top of it.

We got 1,500 stars on GitHub, but this is an outdated slide; now there are 1,800. It is a so-so metric, but for comparison, Hadoop has 2,500 stars. So you can see the level. We will soon overtake Hadoop, and I think then there will be something to talk about.

Indeed, there is a lot of activity right now. We have started organizing all kinds of meetups and will keep organizing them, so come and ask questions.

What are the opportunities

What am I talking about?

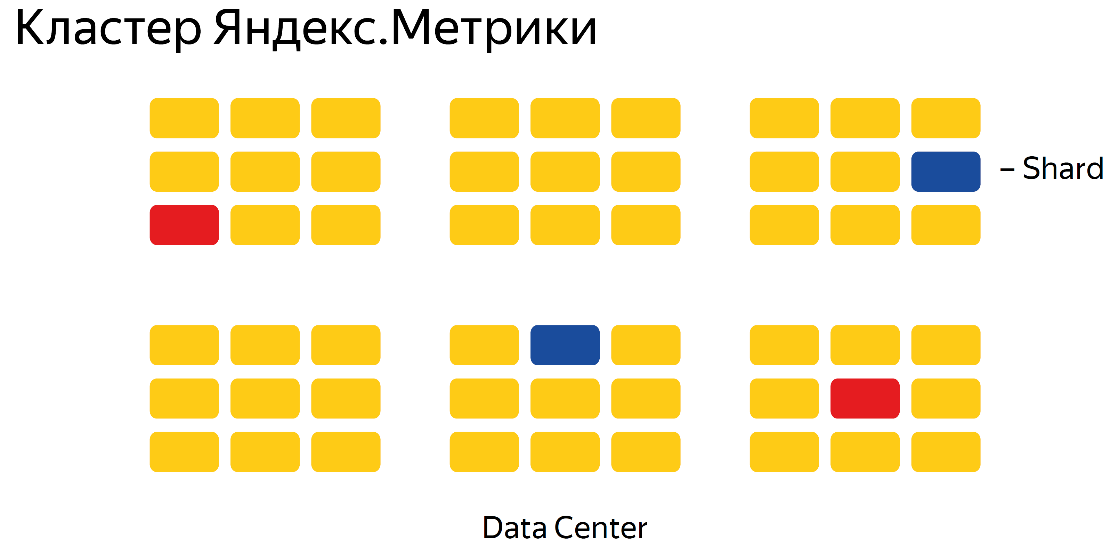

Linear scalability is a very important advantage of ClickHouse compared to similar solutions. Out of the box, ClickHouse scales linearly: you can build a very large cluster and everything will work well. We know because it works very well for us. Petabytes of data: building a petabyte cluster on ClickHouse is not a problem. Cross-datacenter operation works out of the box; you don’t have to do anything for it, because ClickHouse was conceived as a cross-datacenter solution from the start. At Yandex we mainly use such clusters.

High availability means that your data, and the cluster in general, will always be available for reading and writing. In this sense, ClickHouse is a construction kit: you can build a cluster with any guarantees you need. If you want to survive the loss of a data center, you typically spread the cluster over three data centers with a replication factor of x2. If you want a cluster that can survive the loss of a data center plus one node, this usually means you need a replication factor of x3 across at least three data centers. It is a fairly flexible system; you can build any guarantee if you know how.
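The reasoning about replication factors can be checked with a small sketch. It assumes one replica per data center per shard and a simple round-robin placement; the function names and the placement rule are hypothetical and say nothing about how ClickHouse itself is configured.

```python
def place_replicas(shards, datacenters, replication_factor):
    """Assign each shard's replicas to distinct data centers, round-robin."""
    if replication_factor > len(datacenters):
        raise ValueError("need at least one data center per replica")
    return {
        shard: [
            datacenters[(shard + i) % len(datacenters)]
            for i in range(replication_factor)
        ]
        for shard in range(shards)
    }


def survives(placement, failed_dcs, failed_nodes=()):
    """True if every shard still has at least one live replica.

    failed_nodes holds (shard, datacenter) pairs for individual dead servers.
    """
    for shard, dcs in placement.items():
        alive = [
            dc for dc in dcs
            if dc not in failed_dcs and (shard, dc) not in failed_nodes
        ]
        if not alive:
            return False
    return True


dcs = ["dc1", "dc2", "dc3"]
x2 = place_replicas(shards=6, datacenters=dcs, replication_factor=2)
x3 = place_replicas(shards=6, datacenters=dcs, replication_factor=3)

# x2 survives the loss of any single data center ...
any_dc_down_x2 = all(survives(x2, {dc}) for dc in dcs)
# ... but not a data center plus the one remaining replica of some shard.
dc_and_node_down_x2 = survives(x2, {"dc1"}, {(0, "dc2")})
dc_and_node_down_x3 = survives(x3, {"dc1"}, {(0, "dc2")})
```

With x2, every shard has its two copies in different data centers, so one data center can go down; surviving one more node failure on top of that needs the third copy.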

Data compression. ClickHouse is a column-oriented system, which in itself means that data compression works very well. The point of a column-oriented solution is that the data of one column is stored, in effect, in one file on disk, and if the values there are similar, it compresses very efficiently. If you store data row by row, everything is interleaved and compresses rather badly.

ClickHouse applies a fairly large number of optimizations here, so the data compresses very well. If you compare it with a regular database, with Hadoop, or with something else, the difference easily ranges from ten to hundreds of times. We usually have a problem with this: people try ClickHouse, load data into it, look at how much space it takes and say “I think I haven’t loaded everything.” Then we look too, and everything did load. The compression really is very effective, and people do not expect it.
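The layout effect can be seen even on toy data. The sketch below packs the same rows row by row and column by column and compresses both with `zlib`. Everything here is an assumption made for illustration: the three columns, the made-up data, and `zlib` itself, which is used only because it ships with Python and is not a statement about the codecs ClickHouse uses. The gain on this toy data is modest; the real ratio depends entirely on the data.

```python
import random
import struct
import zlib


def make_rows(n, seed=0):
    """Toy page views sorted by (day, region): two repetitive columns, one random."""
    rng = random.Random(seed)
    day = 17112  # an arbitrary day number, the same for every row
    return [(day, i // 1000, rng.getrandbits(32)) for i in range(n)]


def row_layout_size(rows):
    """Compressed size when each row's fields are stored next to each other."""
    blob = b"".join(struct.pack("<HHI", *row) for row in rows)
    return len(zlib.compress(blob))


def column_layout_size(rows):
    """Compressed size when each column is stored and compressed separately."""
    n = len(rows)
    columns = [
        struct.pack(f"<{n}H", *(row[0] for row in rows)),  # day
        struct.pack(f"<{n}H", *(row[1] for row in rows)),  # region id
        struct.pack(f"<{n}I", *(row[2] for row in rows)),  # random user hash
    ]
    return sum(len(zlib.compress(column)) for column in columns)


rows = make_rows(10_000)
row_size = row_layout_size(rows)
column_size = column_layout_size(rows)
print(f"row layout: {row_size} bytes, column layout: {column_size} bytes")
```

In the column layout the two repetitive columns shrink to almost nothing, while in the row layout their bytes are interleaved with random data and every row pays for them again.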

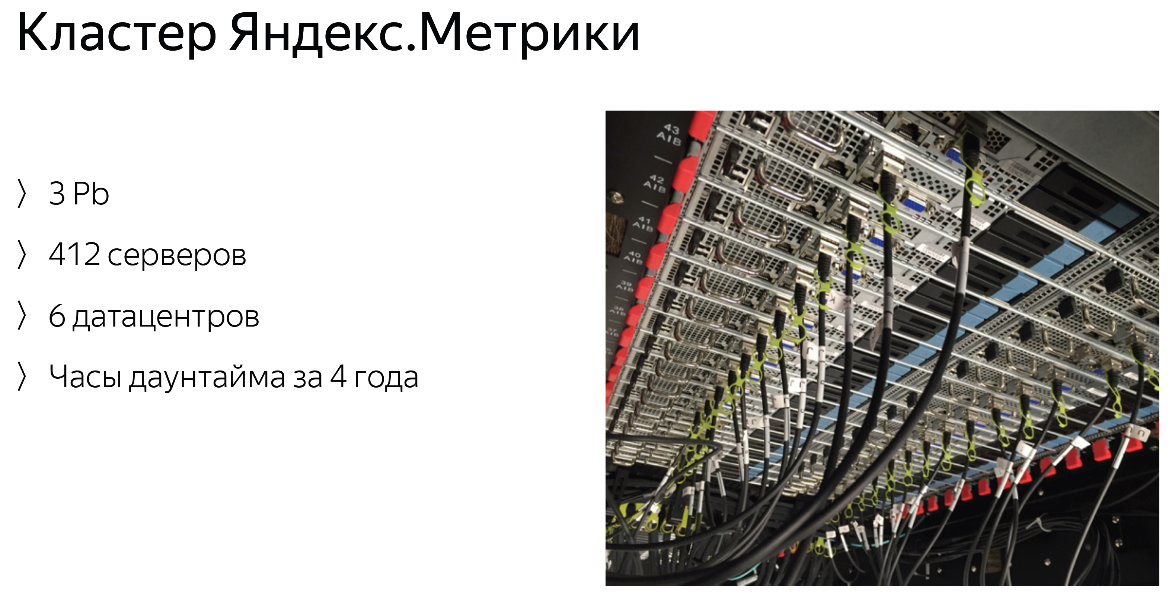

On the example of the Yandex.Metrica cluster:

We have several clusters, and this is the largest of them. We have 3 petabytes right now, 3.4 or so. The slide is outdated: it says 412 servers, and now there are 420. We are adding them gradually. The cluster is spread over six data centers in different countries. Despite the complex configuration, the cluster's total downtime over its entire existence is in the single digits. That is very little downtime and an incredible number of nines. And this is despite the fact that we always deploy the latest version of ClickHouse, apparently the one with the most bugs, but this has not stopped us from providing the required guarantees.

This shows that the solution can work 24/7 without problems. Yes, Metrica works 24/7; we have no maintenance windows.

Queries

Yes, SQL is supported. SQL is essentially the only way to ask ClickHouse anything. Strictly speaking, it is a dialect of SQL, because there are some differences from the standard, but in most cases an ordinary SQL query will most likely work fine. There are additional functions for approximate calculations, where you sacrifice accuracy so that the query runs faster or fits into memory. There are many functions for various data types. For example, there are obviously a lot of URLs in Yandex.Metrica, so there is a large set of functions for working with URLs: you can extract the domain, split out parts of the path, and parse parameters. For each data type there is a large set of functions. Metrica has a wide variety of data, so most likely there is already a set of functions for just about every type of data.

Arrays and tuples are supported out of the box. This means you can create a table in which one of the columns holds not a single value but an array. It can be a plain array, for example, of numbers, or it can hold tuples, giving an array with a complex structure of several fields. Array support works from top to bottom, from the query level to the database schema level, and there are many functions that let you work with arrays effectively. You can, for example, multiply the rest of the row by the array, or extract any information from the array. There is even a special lambda syntax that lets you apply map or filter to an array.
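The three array operations mentioned here can be modeled in plain Python. ClickHouse exposes them in SQL as `ARRAY JOIN`, `arrayMap` and `arrayFilter`; the Python helpers below are stand-ins with invented names and data, meant only to show what each operation does to a row.

```python
# Hypothetical table: one row per user, with an array column of goal IDs.
rows = [
    {"user": "alice", "goals": [3, 7, 12]},
    {"user": "bob", "goals": []},
    {"user": "carol", "goals": [7]},
]


def array_join(rows, column):
    """'Multiply' each row by its array: one output row per array element."""
    out = []
    for row in rows:
        for element in row[column]:
            flat = dict(row)        # copy the other columns
            flat[column] = element  # replace the array with a single element
            out.append(flat)
    return out


def array_map(func, values):
    """Apply a lambda to every element of an array."""
    return [func(v) for v in values]


def array_filter(pred, values):
    """Keep only the elements for which the lambda returns true."""
    return [v for v in values if pred(v)]


flat_rows = array_join(rows, "goals")
doubled = array_map(lambda x: x * 2, rows[0]["goals"])
large = array_filter(lambda x: x > 5, rows[0]["goals"])
```

Note that a row with an empty array disappears from the joined result, which is the behavior this sketch assumes for a plain array join.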

Out of the box, all queries work in a distributed manner. You do not need to change anything in the query; you only need to point it at a different table, a distributed one, and it will run across the entire cluster.

There is also a feature called external dictionaries, which I would like to talk about separately. When we developed it, it solved probably 80% of the joins in Metrica: they simply ceased to exist. What is the idea? Suppose you have a main data table with some identifiers in it, say, a client identifier. There is a separate reference table that translates this identifier into the client's name. Quite a classic situation. What does it look like in plain SQL?

You do a join: you join one table to the other and translate the ID into the name if you want the name in the result. In ClickHouse, you can connect this table as an external dictionary using a very simple syntax in the config. After that, in a query you can simply call a function that translates the identifier into the name, or into any other field you have in that external table.

What does this mean? It means that if you select, say, 5 different identifiers from the main table, translating each of them into a readable value is a matter of one function call. If you did this through joins, you would have 5-6 joins, which looks awful. In addition, external dictionaries can be connected from any source: from MySQL, from a file, or from any database that supports ODBC, for example, PostgreSQL. The names can be updated in the source, and the changes are picked up right away at the query level. An incredibly convenient feature.

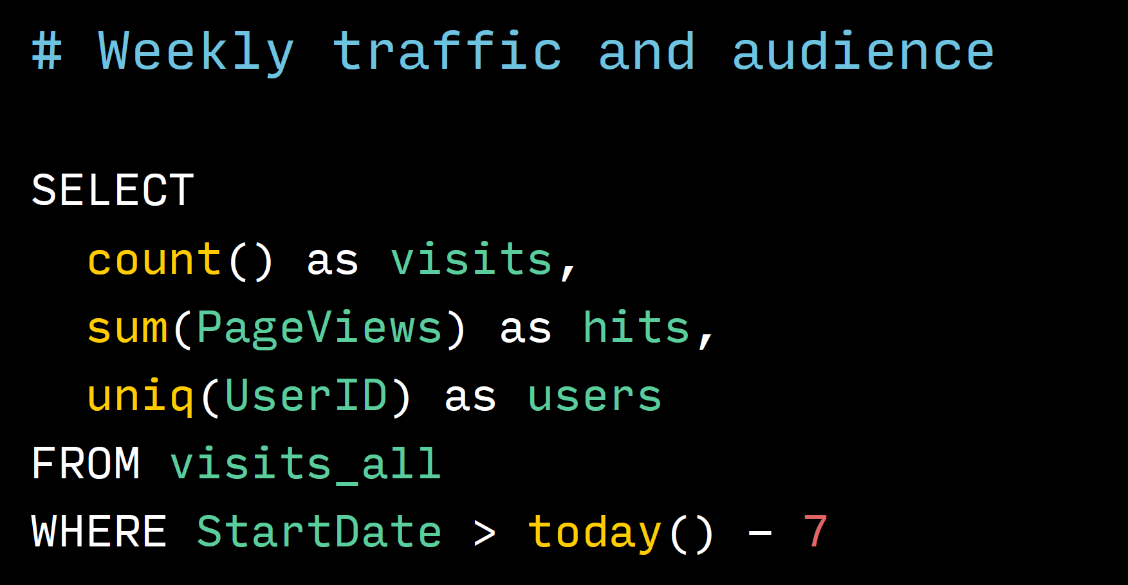

A couple of example queries:

This is a query that computes the traffic and the audience size, that is, the number of users, from the Metrica cluster for a week. You can see that the query is quite simple, plain SQL. There is nothing special here, except that count is written without an asterisk. Writing it with an asterisk is also possible, but we are used to writing it this way.

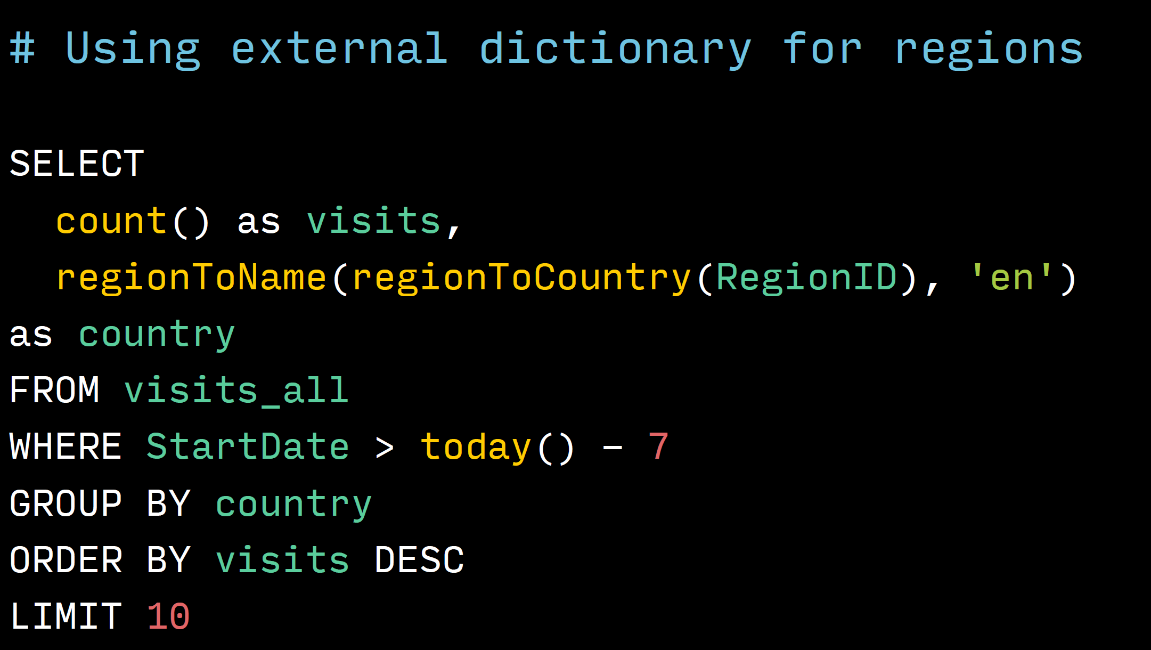

This is an example of using external dictionaries. Look at the line with regionToName: there we translate RegionID, the identifier of the region where the person was, first into the identifier of the country, using our region-to-country dictionary, and then into the name of the country in English. As you can see, this is a call of two functions; it is very clear and looks very simple. In classical SQL this would be two JOINs, and the query would not fit on this slide; we would have to split it across three slides. This greatly simplifies the work.
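The idea of replacing joins with lookup functions can be sketched in Python. The dictionaries, IDs and the helper names `region_to_country` and `region_to_name` below are hypothetical; they imitate the shape of the two-function call described here, not the actual ClickHouse functions or Yandex reference data.

```python
from collections import Counter

# Hypothetical reference data, loaded once and kept in memory like a dictionary.
REGION_TO_COUNTRY = {1001: 1, 1002: 1, 2001: 2}
REGION_NAMES = {"en": {1: "Russia", 2: "United Kingdom"}}


def region_to_country(region_id):
    """Translate a region ID into the ID of its country (0 if unknown)."""
    return REGION_TO_COUNTRY.get(region_id, 0)


def region_to_name(region_id, lang="en"):
    """Translate a region or country ID into its name in the given language."""
    return REGION_NAMES.get(lang, {}).get(region_id, "")


# Hypothetical main table: only identifiers are stored with the events.
visits = [{"RegionID": 1001}, {"RegionID": 1002}, {"RegionID": 2001}, {"RegionID": 1001}]

# Two nested function calls instead of two joins.
visits_by_country = Counter(
    region_to_name(region_to_country(v["RegionID"]), "en") for v in visits
)
```

Each lookup is a plain in-memory dictionary access, which is why chaining five of them stays readable where five joins would not.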

Speed

The most important feature of ClickHouse is speed. The speed is incredible. I think everyone has some expectations of how fast ClickHouse can work. People come to us with their expectations, and you have some too. Most likely ClickHouse will exceed your expectations; this is the case with everyone who comes to us.

Typical queries run in under a second even at the scale of the Metrica cluster, that is, over petabytes, despite the fact that the data is stored on ordinary (non-solid-state) hard drives.

Compared with conventional systems such as Hadoop, MySQL or PostgreSQL, the gain is hundreds to thousands of times. This is reality: we have benchmarks, and I'll show them later.

Some cool numbers: ClickHouse can process up to 1 billion rows per second on a single node, which is quite a lot. At the scale of the Metrica cluster, a single query can process up to 2 terabytes per second. To picture 2 terabytes: a typical hard drive today holds about 2 terabytes; 4-terabyte drives exist but are less common. And the Metrica cluster processes that amount of information every second.

And what's the point? Why is this speed so important?

Roughly, this is why:

It completely changes the approach to work, especially for analysts and people who do data science, which is fashionable now.

How it usually works

They send a query to a classical system, to Hadoop, for example, or to some kind of MapReduce. They submit the query, click OK, take a mug and go to the kitchen. In the kitchen they flirt with someone, chat with someone, come back in half an hour, and the query is still running, or, with luck, has just finished.

When they switch to ClickHouse, it looks like this: they type the query, press Enter, pick up the mug, start to walk away and notice that the query has already finished. They go “okay, wait”, put the mug down and run another query, because they have already seen that something is off in the result. That one also finishes right away, so they run more and more queries and enter a loop.

This completely changes the approach to working with data: you get the result instantly, literally in seconds. It lets you test a huge number of hypotheses very quickly and look at your data this way and that, from one angle and from another. You can do research work incredibly fast, or very quickly investigate any incidents that have occurred.

We even have a problem with this inside the company. Analysts try ClickHouse, for example, on the Metrica cluster or some other cluster. Then they catch the bug: they go back to Hadoop or some kind of MapReduce and can no longer use it, because it is too slow for them. They already think differently. They walk around and tell everyone: “We want our data in ClickHouse. We want everything in ClickHouse.”

In fact, ClickHouse, strictly speaking, does not solve all the problems that MapReduce solves. MapReduce is a slightly different system, but for typical tasks ClickHouse works much, much faster, which really changes the work with data.

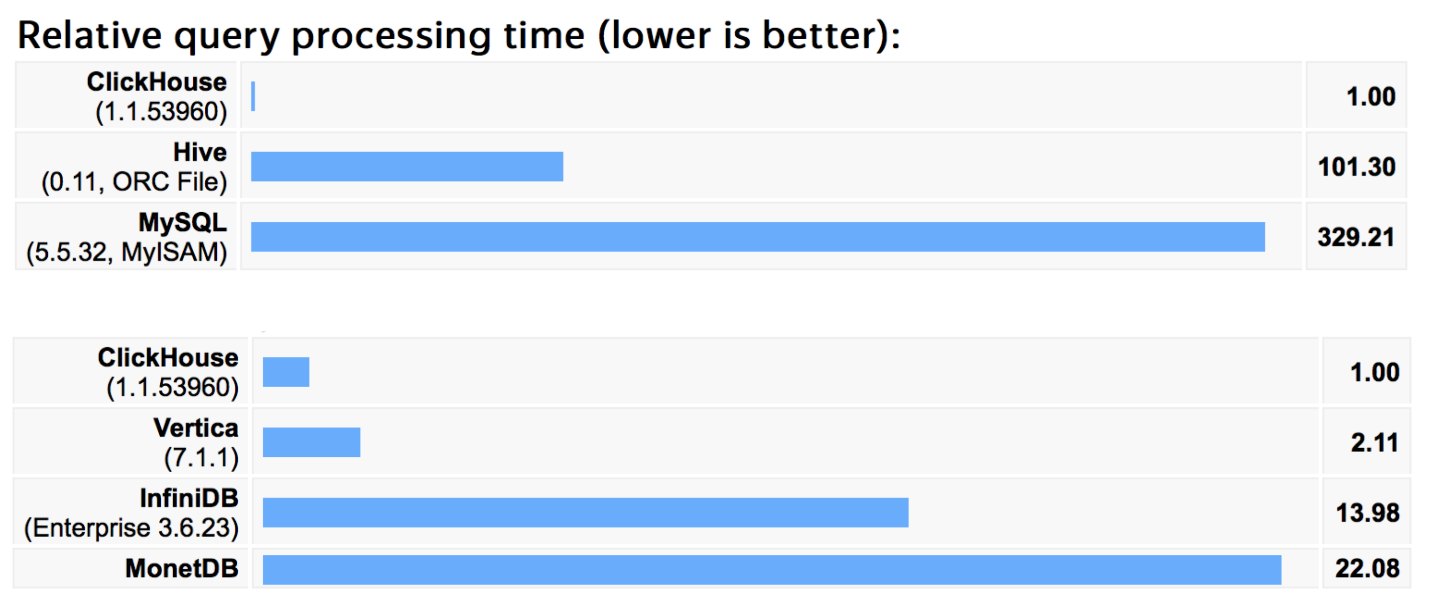

A few benchmarks, so as not to be unfounded. We ran these benchmarks a year ago on a billion rows of our own data from Yandex.Metrica. The queries in this benchmark vary. Basically, these are the queries that we ourselves use in Metrica, normal analytical queries: SELECT with GROUP BY, some fairly complex, some fairly simple, a set of about a hundred queries.

On our own data the results look approximately like this. Compared with Hive or MySQL, the classic solutions, the difference is hundreds of times, as I said. The comparison is not entirely fair, because they are not designed or tuned for this kind of task.

Below are the more real competitors, databases that solve the same problems. The best of those we tried is Vertica. Vertica is really a very good database: well designed, well written, and very, very expensive.

According to our measurements, ClickHouse was twice as fast in these benchmarks, 2 years ago. Since then, we have optimized a lot of things.

More information at the link: https://clickhouse.yandex/benchmark.html

This link is not an error: it really is clickhouse.yandex, with nothing after it. Yandex bought the .yandex domain. It looks very cool and costs a lot of money, but apparently it is worth it. So you will not go wrong.

Interfaces

You can work with ClickHouse in different ways. There is an excellent console client, very convenient both for quick interactive work and for automation, because it can run in non-interactive mode, which is handy for scripts. The default protocol is HTTP, through which you can do everything: query data, load data, anything.

On top of HTTP, we have a JDBC driver, which is also open source. You can use it from Java, Scala, or any similar environment. People have also already written connectors for various languages, and you can use those too. If there is no connector for your favorite language, then of course write one, and people will be glad.
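Because the interface is plain HTTP, a client needs nothing beyond a standard library. The sketch below assumes a server on `localhost` listening on port 8123 (the default HTTP port) and a hypothetical table named `hits`; it sends the query text as the body of a POST request. `build_request` and `run_query` are illustrative helper names, not part of any official client.

```python
import urllib.request


def build_request(query, host="localhost", port=8123):
    """Build an HTTP POST request whose body is the SQL text."""
    url = f"http://{host}:{port}/"
    return urllib.request.Request(url, data=query.encode("utf-8"), method="POST")


def run_query(query, **kwargs):
    """Send the query and return the raw response body as text.

    Requires a reachable ClickHouse server; raises urllib.error.URLError otherwise.
    """
    with urllib.request.urlopen(build_request(query, **kwargs)) as response:
        return response.read().decode("utf-8")


# Building the request does not touch the network, so it is safe to inspect.
request = build_request("SELECT count() FROM hits FORMAT TabSeparated")
print(request.get_method(), request.full_url)
```

With a server running, `run_query("SELECT 1")` would return the response body as a string; the same request shape works from any language that can speak HTTP.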

A bit inside

First of all, I want to answer the most important question: “Why is ClickHouse so fast? What is the magic?” There are several answers. First, from the point of view of the code: as I already said, we designed the solution for maximum performance from the start, so all the code is optimized as much as possible.

When a feature is written, performance tests are always run, and we check whether the feature can be optimized further. As a result, ClickHouse has no slow functions: everything works as fast as possible, within reason. Vectorized data processing is also used. This means that data is never processed row by row, only column by column. If you have a column of numbers and you ask for its sum, ClickHouse takes a large array of numbers, applies some cool SSE instruction, and it all adds up very quickly. This really speeds up processing.

From the point of view of data, a column-oriented solution is of course very efficient, because you read only the columns you need, not all of them. ClickHouse also uses Merge Tree to store data. If you are not familiar with it, read about it on Wikipedia; it is an excellent structure for storing data. It means that your data is guaranteed to lie in a small, bounded number of files, so the number of seeks on the hard disk is minimal. In general, ClickHouse is optimized for hard disks, because in Metrica we use hard disks: that much data will not fit on SSDs.
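The "bounded number of files" property can be illustrated with a toy model: every insert becomes a new sorted part, and parts are merged whenever there are too many. This is a simplified sketch under assumptions of my own (a fixed part limit, merging the two smallest parts, merging synchronously on insert); the class name `MergeTreeSketch` is invented and the real engine's merge policy is not described in the talk.

```python
import heapq


class MergeTreeSketch:
    """Toy store: sorted parts that are merged to keep their number bounded."""

    def __init__(self, max_parts=3):
        self.max_parts = max_parts
        self.parts = []  # each part is a sorted list of (key, value) rows

    def insert(self, rows):
        """Every insert writes one new sorted part, then merges if needed."""
        self.parts.append(sorted(rows))
        while len(self.parts) > self.max_parts:
            self._merge_two_smallest()

    def _merge_two_smallest(self):
        """Merge the two smallest parts into one; both inputs are already sorted."""
        self.parts.sort(key=len)
        first, second = self.parts[0], self.parts[1]
        self.parts = self.parts[2:]
        self.parts.append(list(heapq.merge(first, second)))

    def scan(self):
        """Read everything in key order by merging the few remaining parts."""
        return list(heapq.merge(*self.parts))


tree = MergeTreeSketch(max_parts=3)
for start in range(10):
    tree.insert([(key, f"row-{key}") for key in range(start, 100, 10)])
```

After ten inserts the store still holds at most three parts, so a read touches only a handful of sorted runs, which is what keeps disk seeks low.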

Data is processed as close to where it is stored as possible. If you run a distributed query, ClickHouse tries to do as much of the work as possible next to the data, so that only aggregates and a small amount of information are sent over the network. This, in particular, is one of the reasons ClickHouse works well in a cross-datacenter environment: not much data is transmitted over the network.
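A minimal sketch of this idea: each shard reduces its own rows to a small partial state, and only those states travel to the node that merges them. The data, the `(sum, count)` state and the function names are assumptions chosen to illustrate an average; they do not describe ClickHouse's internal aggregate states.

```python
def local_aggregate(rows):
    """Runs next to the data: reduce a shard's raw rows to key -> (sum, count)."""
    partial = {}
    for key, value in rows:
        total, count = partial.get(key, (0, 0))
        partial[key] = (total + value, count + 1)
    return partial


def merge_partials(partials):
    """Runs on the node that received the query: combine the small partial states."""
    merged = {}
    for partial in partials:
        for key, (total, count) in partial.items():
            merged_total, merged_count = merged.get(key, (0, 0))
            merged[key] = (merged_total + total, merged_count + count)
    return {key: total / count for key, (total, count) in merged.items()}


# Hypothetical shards holding (country, visit duration) rows.
shard_a = [("ru", 10), ("ru", 20), ("uk", 5)]
shard_b = [("ru", 30), ("uk", 15)]

# Only two tiny dictionaries cross the network, not the five raw rows.
average_duration = merge_partials([local_aggregate(shard_a), local_aggregate(shard_b)])
```

Sending `(sum, count)` rather than a per-shard average is what keeps the merged result exact.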

There are also features that ClickHouse provides out of the box to speed up queries when necessary.

There is sampling.

It works something like this: if a query is not fast enough, you can compute it not over all the data but over, say, 10% or 1%. This is useful for research work: if you want to explore your data, you can do it on 1%, and it will run approximately 100 times faster. Very convenient.
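Here is a rough Python sketch of the trade-off. It assumes sampling by a hash of the user ID, so that a given user is either entirely in the sample or entirely out of it; the hash function, the data and the helper names are illustrative choices, not a description of how ClickHouse implements sampling.

```python
import hashlib
import random


def in_sample(user_id, fraction):
    """Deterministically map a user to [0, 1) and keep those below the fraction."""
    digest = hashlib.sha256(str(user_id).encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return bucket < fraction


def estimate_total(events, fraction):
    """Count events of sampled users only, then scale up by 1 / fraction."""
    sampled = sum(1 for user_id in events if in_sample(user_id, fraction))
    return sampled / fraction


# Hypothetical event stream: 200,000 events from up to 50,000 users.
rng = random.Random(42)
events = [rng.randrange(50_000) for _ in range(200_000)]

exact = len(events)
estimate = estimate_total(events, fraction=0.1)
relative_error = abs(estimate - exact) / exact
print(f"exact={exact}, estimate={estimate:.0f}, error={relative_error:.2%}")
```

The estimate reads only about a tenth of the events and lands close to the exact count, which is usually good enough for exploratory work.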

There are functions based on probabilistic algorithms, so you can choose a trade-off between speed and accuracy. You can also set parameters at the query level, for example, the number of threads that will process this particular query, which adds flexibility.

Scalability and resiliency

Here I must say that ClickHouse is a completely decentralized system: there is no single point that accepts queries and no single point that controls what is happening.

Asynchronous replication is the default. This means that you write data to one replica, and it is guaranteed to be copied to the other replicas eventually. In ClickHouse terms, “eventually” means seconds. There is also a mode that lets you do everything synchronously if you need it, but usually this is not necessary.

ZooKeeper is used very actively for synchronizing actions, for leader election and other operations. It is not used while executing queries, because ZooKeeper would not withstand that RPS. Well, it could, but it would introduce additional latency. Internally, though, it is used very actively.

On the example of the Yandex.Metrica cluster:

Six data centers are shown here. Blue marks one shard: two copies, an x2 replication factor. The data is stored equally in two data centers; everything is quite simple. If the replication factor were x3, there would be 3 squares each of blue and red.

The most interesting part: how can you use ClickHouse?

I would like to start with how ClickHouse should not be used. Do not hammer nails with a microscope. ClickHouse is not an OLTP solution, which means: if you want transactions, if you want to build business logic on top of the database, do not use ClickHouse for this. Use the classic solutions: PostgreSQL, MySQL, whatever you like. ClickHouse is a database for analytics, for research and for real-time reports. ClickHouse is not a key-value store. Do not use it as file storage or anything like that. Do not put your favorite films there; put them somewhere else.

ClickHouse is not a document-oriented system, which means that it has a strict schema. You need to define it at the CREATE TABLE level and describe the structure. The more precisely and correctly you describe this structure, the more you will gain from ClickHouse in terms of performance and usability.

ClickHouse cannot modify data. This may come as a surprise to many, but in fact you do not need to modify data; that is an illusion. Well, strictly speaking, you can modify data in ClickHouse to some extent: you can delete whole large chunks. There is also a way of working in the spirit of CRDT, where you change data without changing it, that is, by inserting new records. You can work this way in ClickHouse, but the main idea is that if you need to change data often, then most likely you should not use ClickHouse; it is hardly suitable for your tasks.
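One way to read "changing data by inserting new records" is an append-only log with a sign column: an update writes a row that cancels the old value and a row with the new one, and queries sum `sign * value`. This is an assumption about what the speaker has in mind, sketched with invented names; it is not presented as ClickHouse's mechanism.

```python
from collections import defaultdict

# Append-only log of (session_id, duration, sign); rows are never edited in place.
log = []


def record(session_id, duration):
    """Insert a new fact."""
    log.append((session_id, duration, +1))


def update(session_id, old_duration, new_duration):
    """'Change' a fact by cancelling the old row and inserting the new one."""
    log.append((session_id, old_duration, -1))
    log.append((session_id, new_duration, +1))


def total_duration_by_session():
    """Aggregations multiply by the sign, so cancelled rows drop out."""
    totals = defaultdict(int)
    for session_id, duration, sign in log:
        totals[session_id] += sign * duration
    return dict(totals)


record("a", 10)
record("b", 5)
update("a", old_duration=10, new_duration=25)
totals = total_duration_by_session()
```

The log only ever grows, which suits a store built for inserts, but every update costs two extra rows, so this pattern fits rare corrections rather than frequent changes.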

When should I use ClickHouse?

You need to use ClickHouse when you have wide plates with a lot of columns. This works great in ClickHouse, unlike a large number of other databases, because it is a multi-solution solution. If you think analytics is a great solution.

If your pattern is that there are not very many queries, but each query touches a lot of data, then ClickHouse works well. "Not very many" in this case means single digits, tens or hundreds of RPS.

The same goes if you have a large stream of incoming data that constantly flows into the database. In the case of Metrica it is about 20 billion events a day, and they are written in real time. Very few databases can work in such conditions, when data is constantly being written and constantly being queried. ClickHouse can; it was built for exactly this.

If you have petabytes of data and need to compute analytics on them, then generally speaking not many solutions can do that. You can count them on the fingers of one hand, well, maybe two. And ClickHouse copes with this perfectly (with the analytics, not with counting fingers).

I have a couple of small cases. What people usually start with is trying to analyze what is going on in their current production.

How it usually looks

People take their access logs, or simply the logs of their daemons, split them into the columns that are of interest, and write them into ClickHouse with a really simple script. That's it. This is done very easily: it realistically takes a few hours to write such a script in Python or Bash, whichever you prefer.
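A minimal sketch of such a script might look like this. It assumes access logs in the common web server format and turns each line into a tab-separated row; the regular expression, the chosen columns and the sample line are illustrative assumptions, not part of the talk.

```python
import re
from datetime import datetime

# Assumed log layout: ip, two ignored fields, [timestamp], "request", status, size.
LINE_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_line(line):
    """Split one access log line into typed columns, or return None."""
    m = LINE_RE.match(line)
    if m is None:
        return None  # skip lines that do not follow the assumed layout
    ts = datetime.strptime(m["ts"], "%d/%b/%Y:%H:%M:%S %z")
    size = 0 if m["size"] == "-" else int(m["size"])
    return (
        ts.strftime("%Y-%m-%d"),           # date column
        ts.strftime("%Y-%m-%d %H:%M:%S"),  # date and time column
        m["ip"],
        m["method"],
        m["path"],
        int(m["status"]),
        size,
    )


def to_tsv(row):
    """Join the columns with tabs, one row per line."""
    return "\t".join(str(value) for value in row)


sample = '203.0.113.7 - - [10/Oct/2016:13:55:36 +0300] "GET /index.html HTTP/1.1" 200 2326'
row = parse_line(sample)
print(to_tsv(row))
```

Rows in this shape can then be loaded with an INSERT that uses the TabSeparated format, provided a table with matching columns already exists.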

And what comes out? You can very easily analyze any strange situation at any moment. Some client did something strange, and you need to see whether this client is DDoSing you or not. You can select all of this and get the result instantly. You can build any checks on top and compute any metrics, and this also works instantly. We started with this case ourselves: ClickHouse spread beyond Metrica precisely through this case, when other departments began to upload their logs and build incident reporting.

The second, larger and more general case is a database for analytics within a data warehouse.

What is there

The company has some database that is used in production: Oracle, PostgreSQL, something like that. It is not intended for analytics at all, which is understandable, since it is designed to implement business logic. Computing analytics on it is very inconvenient. Or there is Hadoop, into which everything is also dumped, and the analysts sit there too, trying to understand something in this Hadoop, which works very slowly.

What can be done?

You can bring up a ClickHouse cluster, a small one, literally a single server, or calculate how many servers you need, keeping in mind that data in ClickHouse will take up much less space than in your current database. You can simply copy the data there, for example periodically, once a day. As a result, you get incredible speed in working with your business or development data. Your analysts will immediately have silky hair. They can quickly investigate incidents, build business metrics very quickly, and even build dashboards on top of ClickHouse very quickly. The difference in speed is a factor of hundreds, as you have seen yourself. Setting it all up is very simple, and so is getting started. In general, the entry threshold for ClickHouse is much lower than for a typical system such as Hadoop.

Where to begin?

We have a wonderful tutorial: with it you can get in, play around and install ClickHouse, and the whole tutorial takes a few hours. There is a ready data set that you can download and load to see how simple it all is. It really is very simple. I set up ClickHouse the day before yesterday on a completely new server and it took me literally 2 minutes.

Send your questions to the mailing list, we always answer.

Go to GitHub and look at the code. It is super-optimized and all that, and all of the code is open.

And go to the Google group and other sources. More information is available at the link ClickHouse.yandex. It has everything you might need, including excellent documentation.

Summary

An open-source database, open to everyone under the Apache 2.0 license. A great license.

You can use it for tasks that need linear scalability and for which you need incredible query speed. In general, if you have a problem with the speed of analytical queries, try ClickHouse.

SQL is supported with a bunch of bells and whistles.

Thanks to everyone.

Report: ClickHouse: very fast and very convenient.