As I did the fastest image resizing. Part 0

Hello, my name is Sasha, I wrote the fastest image resize for modern x86 processors. I say so, since all the other libraries that I managed to find and test were slower. I took up this task when I was working on optimizing image resizing on the fly at Uploadcare . We decided to open the code, and as a result, the Pillow-SIMD project appeared . Anyone can easily use it in a Python application.

Any code is executed on a specific hardware and good optimization can be achieved only by understanding its architecture. In total, I plan to release 4 or 5 articles in which I will tell you how to apply knowledge of the architecture of iron to optimize a real task. By my example, I want to encourage you to optimize other applications. The first two articles will be published within a week, the rest - as they become available.

About the task

By “image resizing” I mean resizing an image using convolution resampling. Resampling is performed over an array of 8-bit RGB pixels into memory, without taking into account decoding and encoding of images, however, taking into account the allocation of memory for the final image and taking into account the preparation of coefficients necessary for a particular operation.

That's so strict. No tricks (like decoding a smaller image from a jeep) and no combination of algorithms, only an honest measurement of the operation of a particular algorithm. Tricks and optimization of special cases can be applied later, they are not the subject of consideration in this series of articles.

... using convolution resampling. What?

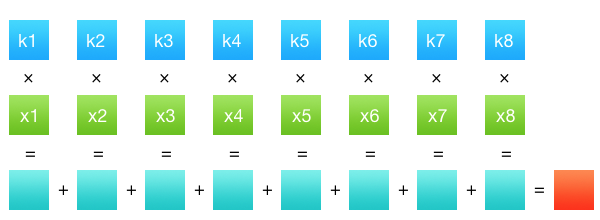

To make it clear what specifically needed optimization, I’ll tell you what convolution resampling is. Convolution (correctly speaking, convolution of discrete values , because image pixels are discrete) is a very simple mathematical operation. We have some series of values No. 1 (coefficients) and a series of values No. 2 (data, in our case, the intensity of pixel channels). The result of the convolution of these two rows will be the sum of the products of all members in pairs. So simple - the sum of the pieces. Matan ended before he could start.

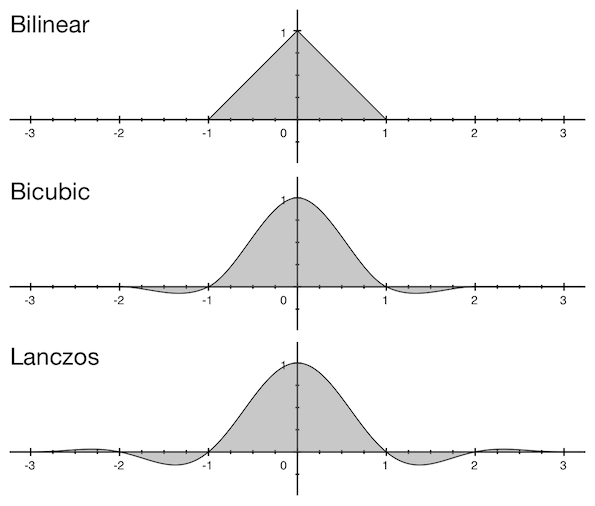

It remains to understand exactly how this operation is associated with resize. A number of values No. 2 is a series of pixels of the original image. A number of values No. 1 are coefficients obtained from the filter. A filter is a function that determines how exactly we will collapse values. Maybe you noticed a drop-down menu with filters in the resize window in Photoshop or another graphics editor - bilinear, bicubic, sometimes Lanczos. This is this filter. But the value resulting from the convolution is the intensity of one channel of one pixel of the final image. Those. to get an image of M × N pixels, we need to do M × N × C convolution operations, where C is the number of color channels. Yes, counting the entire pixel with one operation will not work, the values of different channels are independent and must be considered separately.

The functions of the filters are not infinite, their values are not equal to zero only in the central part: for a bilinear filter it is a range of values from –1 to 1; for bicubic from –2 to 2, for Lanczos from –3 to 3 (although there are other varieties of Lanczos).

These numbers are called the filter window, because the filter is applied only in this range, and outside it is equal to zero. Accordingly, the number of source pixels needed for convolution is taken in a radius the size of the filter window times the reduction factor (or one if the increase occurs). I think this is best explained by example. We need to reduce the image with a width of 2560 pixels to a width of 2048 using a bicubic filter. Suppose we want to find the value of the 33rd pixel of the final image. The bicubic filter has a window size of two, and a reduction ratio of 2560/2048 = 1.25, so we will need to take a row of pixels from the source image from floor((33 - 2) × 1,25)to ceil((33 + 2) × 1,25). Those. from the 38th to the 44th pixel. For the same pixels, coefficient values are calculated.

Until that moment, I talked about a number of coefficients and a number of pixels, overlooking the fact that the image is actually a two-dimensional structure. And it seems, logically, you need to collapse not a line, but some area of the original image. But one of the properties of the convolution is that the operation can be carried out separately vertically and horizontally, making two passes. Roughly speaking, this reduces the complexity of one convolution from O (n²) to O (2n) (actually less, but still significant).

Why all the same convolution

In general, the phrase "image resize" carries a minimum of information about what needs to be done. She says that we should get an image of finite size, using the original, while preserving the geometry of the depicted objects. But you can use the original image in different ways. For example, for each final pixel, you can map one pixel from the original one and take it unchanged. This is called the nearest neighbor method. The picture is rough, torn, unpleasant:

This is because a very small fraction of the original pixels were used in the final image (in the example above, less than one percent). We lost the information from those pixels that did not fall into the final image.

Here's what resampling with convolution looks like:

The convolution resampling correctly takes into account the contribution of each source pixel to the final image. It is universal because gives an equally good and predictable result for a wide range of scaling factors, does not contain geometry distortions at the local level (with the caveat that a filter is used that does not give such distortions, because some filters still give). And in general, it is all so correct and good from all sides, except for one thing: performance.

Pillow

Pillow is a Python image library developed by a community led by Alex Clark and Eric Soroos. Uploadcare used Pillow even before I joined the team. Then it seemed strange to me - working with images was one of the main tasks, why was it necessary to take a library tied to the language for it. Is it not better to take the same ImageMagick, which has a ton of functions used by a million developers, certainly everything should be fine with performance in it. After several years, I can say that it was a success for me and for Pillow. As it turned out, the performance of both libraries at the start was about the same, but I doubt very much that I would have the strength to do something for ImageMagick that I did for Pillow.

Pillow is a fork of the very old PIL library. Historically, no convolution has been used for resizing in PIL. The first convolution resize implementation in PIL appeared in version 1.1.3 and was available using the ANTIALIAS filter, the name of which emphasized the fact that other filters used lower-quality algorithms. On the network, you can still often find now no longer relevant recommendations to use only the ANTIALIAS filter when resizing in PIL (and in Pillow, as a receiver).

Unfortunately, ANTIALIAS had a rather low performance. I got into the source code to see what can be done, and it turned out that the implementation of resize for ANTIALIAS (that is, convolution) can be used with the rest of the filters. And the ANTIALIAS constant itself corresponds to the Lanczos filter, which has a large window (± 3), and therefore it is quite slow. The very first optimization I wanted to do was to enable convolution for bilinear and bicubic filters. So it would be possible to use a cheaper bicubic filter in your application (with a window of ± 2) and not lose too much in quality.

Further I was interested to look at the code of the resize itself. I easily found it in this module . And although I write mainly in python, I immediately noticed a few dubious places in terms of performance. After several optimization, I got a 2.5 times increase (this will be described in the next article ). Then I began to experiment with SIMD, translate all calculations into integers, aggressively deploy cycles and group calculations. The task was extremely interesting, there were always a couple more ideas in my head on how to improve productivity. I plunged deeper and deeper into the rabbit hole, periodically testing another hypothesis.

Gradually, the code became larger and faster. Part of the work was given back to Pillow. But the SIMD code was hard to port because it was written for a specific architecture, and Pillow is a cross-platform library. Therefore, it was decided to make a non-cross-fork fork of Pillow-SIMD . The Pillow-SIMD versions are fully consistent with the versions of the original Pillow and add speed to some operations.

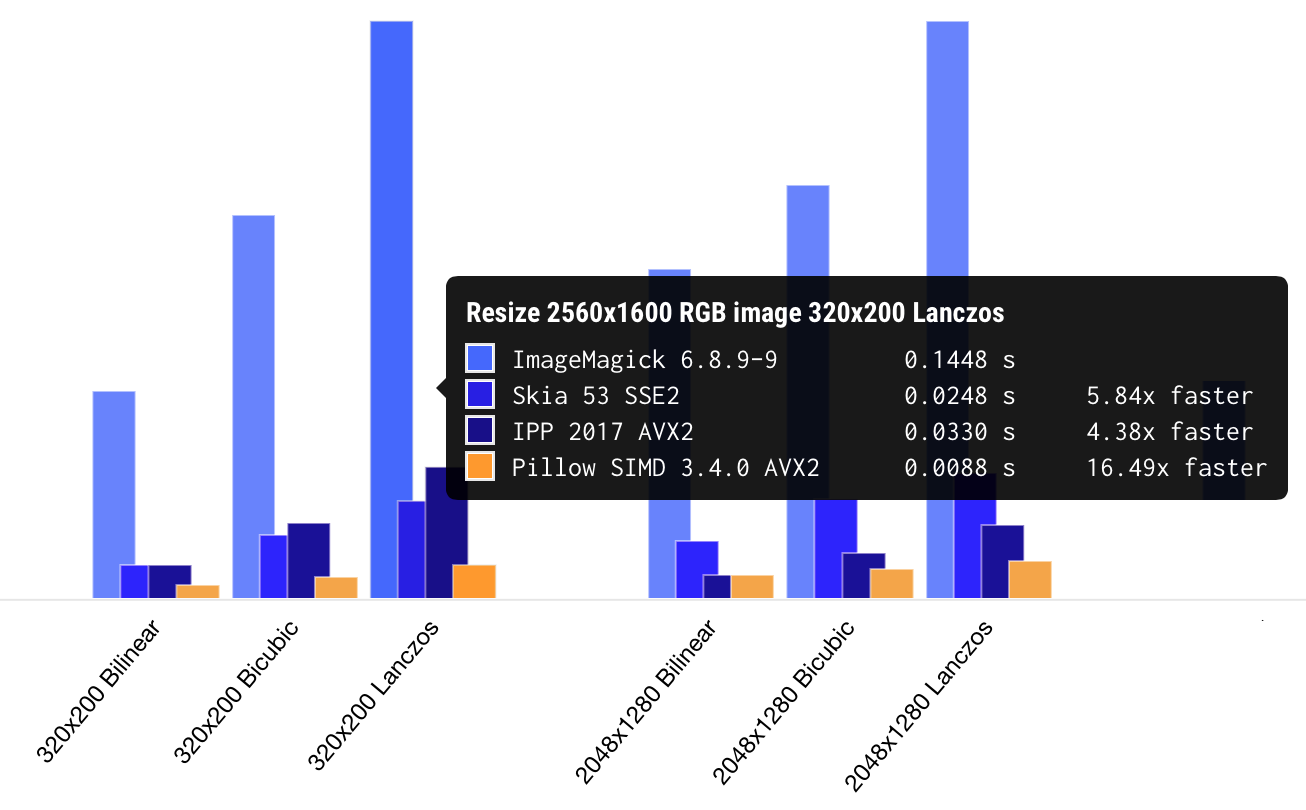

In the latest versions of Pillow-SIMD with AVX2, resize runs 15 to 30 times faster than in the original PIL. As I said at the very beginning, this is the fastest implementation of high-quality resize that I managed to test. You can see the page on which the results of benchmarks of different libraries are collected.

What makes me happy with Pillow and Pillow-SIMD is that these are real libraries that even a novice developer can really use. This is not a piece of code published on Stack Overflow, which is unclear where to stick. And not the "primitive blocks" from which each operation needs to be assembled as a constructor. And not even a complicated C ++ library with a confusing interface that compiles for half an hour. This is one installation line, one library import line, one image loading line and, “hey, mum, look, I use the fastest resize in my application”.

Performance measurements

In the articles, I will upload the performance measurements in the form of a table in which the original image with a resolution of 2560 × 1600 pixels is resized to resolutions of 320x200, 2048x1280 and 5478x3424 using the bilinear, bicubic and Lanczos filter (i.e., only 9 operations). The original image is taken large enough not to fit completely in the cache of the third-level processor. In this case, the actual content of the image does not matter in terms of performance, you can resize at least an empty white sheet, at least black, at least a photo of your cat. Here is an example of the results from the Pillow version 2.6 library, before any optimizations.

Scale 2560×1600 RGB image

to 320x200 bil 0.08927 s 45.88 Mpx/s

to 320x200 bic 0.13073 s 31.33 Mpx/s

to 320x200 lzs 0.16436 s 24.92 Mpx/s

to 2048x1280 bil 0.40833 s 10.03 Mpx/s

to 2048x1280 bic 0.45507 s 9.00 Mpx/s

to 2048x1280 lzs 0.52855 s 7.75 Mpx/s

to 5478x3424 bil 1.49024 s 2.75 Mpx/s

to 5478x3424 bic 1.84503 s 2.22 Mpx/s

to 5478x3424 lzs 2.04901 s 2.00 Mpx/sThe second column here is the time in seconds, and the third is the bandwidth of the original image for this operation. That is, if the operation took 0.2 s, then the throughput would be 2560 × 1600 / 0.2 = 20.48 megapixels per second.

The original image resizes to a resolution of 320 × 200 in 164 milliseconds. Well, sort of not bad. Maybe you don’t need to optimize at all, leave it as it is? Well, if you recall that the resolution of photos from mobile phones now has an average size of 12 megapixels, then everything turns out not so rosy. The photo from the iPhone will be reduced half a second without taking into account unpacking. Considering other operations, you can process ≈80 images per minute, and about 5000 per hour. The current load on our service is about 130 thousand requests per hour. We would need 26 AWS c4.large servers running at the limit to handle this load. In reality, we only have 4 servers involved, the load on which in hot hours is about 40%.

If it were possible to extrapolate such an effect to the scale of the planet and replace all the code that resizes images with more efficient one, the benefit would be enormous. Tens of thousands of servers saved, hundreds of kilowatts of electricity. And this is one millionth of world consumption. Yes, you could save the planet!