Building an autonomous drone on Intel Edison

Translation

We continue our discussion of how to build an autonomous flying device yourself. Last time the topic was the hardware components, mechanics, and control; we also equipped the device for

In this second article of the series, our Intel colleague Paul Guermonprez proposes switching platforms to see what a drone built around an Intel Edison computer can achieve and, most importantly, how to build one. At the very end of the post there is an offer for those who are fired up to put all this into practice: under certain conditions, you can get a free hardware platform for experiments from Intel!

Maps and measurements

Civilian drones already do well at high-definition video recording and flight stabilization. You can even plan automatic flight routes on your tablet. But a serious problem remains: how do you keep small drones from colliding with people and buildings? Large drones are equipped with radars and transceivers, fly in controlled airspace away from obstacles, and are remotely piloted using cameras. Small civilian drones, however, have to fly close to the ground, where obstacles abound, and their pilots cannot always get a video feed or may be distracted from flight control. That is why collision avoidance systems need to be developed.

- You can use a single-beam sonar. Simple drones are often equipped with a downward-facing sonar to hold a stable altitude a short distance above the ground. Sonar data is extremely easy to interpret: you get the distance in millimeters from the sensor to the obstacle. Similar systems exist on large aircraft, but pointed horizontally, to detect obstacles ahead. This information alone is not enough to fly, though: knowing whether or not something is directly in front of you tells you very little. It is like stretching out your hand in front of you in the dark.

- You can use a more advanced sensor. A sonar beam gives you a single distance, like a single pixel. A horizontally scanning radar gives you more: one distance for every direction around you. The next step beyond such one-dimensional sensors is a two-dimensional sensor. In this family you probably know Microsoft Kinect; Intel has Intel RealSense. Such a sensor gives you a two-dimensional matrix of distances in which each pixel is a distance. It looks like a grayscale bitmap where black pixels are nearby objects and white pixels are distant ones. That makes the data very easy to work with: if you see a cluster of dark pixels in some direction, there is an object there. The range of these systems is limited: webcam-sized sensors reach 2-3 meters, larger ones up to 5 meters. Such sensors are therefore useful on drones for detecting obstacles at short distance (and at low speed), but they cannot detect obstacles 100 m away. Drones using such sensors were shown at CES.
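The "cluster of dark pixels" rule is simple enough to sketch in a few lines. This is a minimal illustration, not the project's code: it assumes the depth image arrives as a list of rows of distances in millimeters with 0 meaning "no reading", and the function name `obstacle_directions`, the 1.5 m threshold, and the 20% fraction are all hypothetical choices.

```python
from typing import List


def obstacle_directions(depth_mm: List[List[int]], near_mm: int = 1500,
                        min_fraction: float = 0.2) -> List[str]:
    """Report which thirds of a depth image (left/center/right) look blocked.

    A region counts as blocked when at least min_fraction of its valid
    pixels are closer than near_mm. Zero is treated as "no reading".
    """
    width = len(depth_mm[0])
    regions = {
        "left": (0, width // 3),
        "center": (width // 3, 2 * width // 3),
        "right": (2 * width // 3, width),
    }
    blocked = []
    for name, (x0, x1) in regions.items():
        valid = near = 0
        for row in depth_mm:
            for d in row[x0:x1]:
                if d <= 0:
                    continue  # hypothetical convention: 0 = no depth reading
                valid += 1
                if d < near_mm:
                    near += 1  # a "dark pixel": something is close
        if valid and near / valid >= min_fraction:
            blocked.append(name)
    return blocked


# Usage: a 4 x 9 frame, 3 m everywhere except a 0.8 m object in the middle column.
frame = [[3000] * 9 for _ in range(4)]
for row in frame:
    row[4] = 800
print(obstacle_directions(frame))  # ['center']
```

Splitting the image into coarse sectors keeps the decision cheap and robust to a few noisy pixels; a real system would likely use finer sectors matched to the drone's width.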

So a single-beam sonar is ideal for measuring the distance to the ground at low altitude, and sensors like RealSense are good at short range. But what if you need to see and analyze three-dimensional objects further ahead? That takes computer vision and artificial intelligence!
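Why sonar data is so easy to use for altitude: the sensor times an ultrasonic echo, and distance follows directly from the speed of sound (about 343 m/s in air at 20 °C), halved because the pulse goes out and back. A minimal sketch, assuming the driver reports the round-trip time in seconds (many sonar modules do this conversion on-board); `climb_command` and its gain are hypothetical, a bare proportional controller for illustration only.

```python
SPEED_OF_SOUND_M_S = 343.0  # in dry air at roughly 20 °C


def echo_to_distance_mm(round_trip_s: float) -> float:
    """Convert a sonar echo's round-trip time into a one-way distance in mm."""
    # The pulse travels to the obstacle and back, so only half the path counts.
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0 * 1000.0


def climb_command(measured_mm: float, target_mm: float, gain: float = 0.001) -> float:
    """Hypothetical proportional altitude hold: positive = climb, negative = descend."""
    return gain * (target_mm - measured_mm)


altitude = echo_to_distance_mm(0.005)                 # a 5 ms echo
print(round(altitude, 1))                             # 857.5
print(climb_command(altitude, target_mm=1000.0) > 0)  # True: too low, so climb
```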

To detect three-dimensional objects, you can either reconstruct a 3D image from two images taken by two side-by-side webcams (stereoscopic vision), or use a sequence of images captured while moving.
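For the stereoscopic option, depth comes from how far a point shifts between the two rectified images (its disparity d). The standard pinhole relation is Z = f·B/d, with focal length f in pixels and baseline B between the cameras. The sketch below assumes an already rectified pair; the 500 px focal length and 6 cm baseline in the usage lines are hypothetical webcam-like values.

```python
import math


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d.

    focal_px: focal length in pixels; baseline_m: distance between the two
    cameras; disparity_px: horizontal shift of the point between the images.
    """
    if disparity_px <= 0:
        return math.inf  # no measurable shift: the point is effectively at infinity
    return focal_px * baseline_m / disparity_px


# Hypothetical webcam-like pair: 500 px focal length, cameras 6 cm apart.
for d in (30.0, 3.0, 1.0):
    print(f"disparity {d:4.1f} px -> depth {depth_from_disparity(500.0, 0.06, d):.1f} m")
```

With these numbers, a 30 px shift means about 1 m, 3 px about 10 m, and 1 px about 30 m: a one-pixel matching error barely matters up close but is huge at long range, so a short stereo baseline resolves distant objects only coarsely.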

Our autonomous drone project

In our project, we use the Intel-Creative Senz3D camera. This device includes a two-dimensional depth sensor (320 x 240 resolution), a conventional webcam, two microphones, and accelerometers. We use the depth sensor to detect objects at short range and the webcam for long range.

In this article, we focus mainly on long-range computer vision rather than on short-range 2D depth data. The same code could therefore run on Linux with a cheap five-dollar webcam instead of the powerful multi-purpose Senz3D. But if you need both computer vision and depth data, the Senz3D is just perfect.

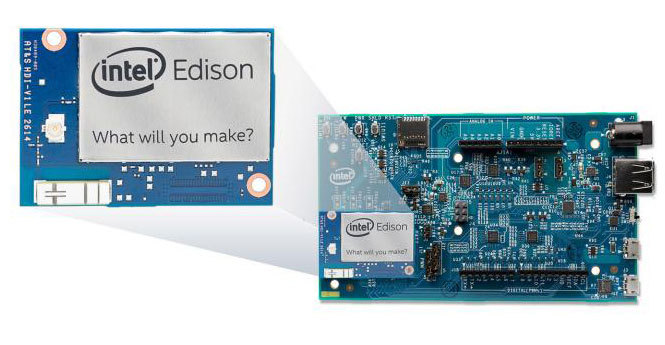

As the onboard drone platform, we chose Intel Edison. In a previous project we used a fully functional Android smartphone as the onboard computer, but Edison is more compact and cheaper.

Edison is not so much a processor as a full-featured Linux computer: a dual-core Intel Atom processor, RAM, a Wi-Fi module, Bluetooth, and much more. All you need to do is pick an expansion board that matches your I/O requirements and connect it. In our case we need a USB port for the Senz3D camera, so we use the large expansion board. But there are tiny boards with only the components you need, and you can easily design the right board yourself: it is just an expansion board, not a motherboard.

Installation

OS. We unpacked Edison, updated the firmware, and kept the Yocto Linux OS. Other Linux distributions with many precompiled packages are available, but we only need to install simple software with as few dependencies as possible, so Yocto suits us fine.

Software. We configure Wi-Fi and access the board via ssh. We edit the source files and compile them directly on the board over ssh. You can also compile on a Linux PC and transfer the binaries: code compiled for the 32-bit i686 architecture will run on Edison. All you need is the gcc toolchain and your favorite source code editor.

Camera. The first difficulty is getting the PerC camera to work with Linux, since it was designed for Windows. Fortunately, the company that makes the sensor provides a Linux driver: SoftKinetic DepthSense 325.

The driver is binary-only, but a version compiled for Intel processors is available. On Intel Edison you can compile in place with gcc or the Intel compiler, but you can also take binaries built for Intel and deploy them unchanged. In situations like this, Intel compatibility is a significant advantage. After resolving a few dependency and linking problems, we had the driver up and running, and we could receive images from the camera.

Sensor data. Depth data is very simple: easy to get from the sensor and easy to analyze. It is a 320 x 240 matrix of depth values, encoded as shades of gray: black pixels are close, white pixels are far. The video side of the sensor is a conventional webcam. From the developer's point of view, the sensor looks like two webcams: a grayscale one that returns depth data and a color one that delivers a normal video image.
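The gray-level encoding can be pictured as a simple linear mapping from distance to an 8-bit value. This is a sketch under stated assumptions, not the DepthSense driver's actual format: the linear scale, the 3 m display range (`max_mm`), and both function names are hypothetical.

```python
def depth_to_gray(depth_mm: float, max_mm: float = 3000.0) -> int:
    """Map depth to an 8-bit gray level: 0 (black) = close, 255 (white) = far.

    Depths beyond max_mm (a hypothetical display range) saturate to white.
    """
    clamped = min(max(depth_mm, 0.0), max_mm)
    return round(255 * clamped / max_mm)


def gray_to_depth(gray: int, max_mm: float = 3000.0) -> float:
    """Inverse mapping; each gray step covers max_mm / 255 (about 11.8 mm here)."""
    return gray * max_mm / 255


print(depth_to_gray(0), depth_to_gray(1500), depth_to_gray(3000), depth_to_gray(4500))
# 0 128 255 255
```

Under these assumptions an 8-bit image resolves only about 12 mm per level over 3 m, coarser than the 1-3 mm accuracy quoted for this sensor, so computations are better done on raw distances, with the gray image kept for display.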

We use the video image to detect distant obstacles: it only needs light, and its range is unlimited. The depth data is used to detect obstacles very close to the drone, no more than 2-3 m away.

Safety notice. As the photographs show, we are working in the lab and simulating the drone's flight. The proposed algorithm is still far from ready, so you should not actually fly a drone with it, especially with people nearby. First, in some countries this is, quite rightly, prohibited. More importantly, it is simply dangerous! Many of the drone videos you see on the Internet, shot both indoors and outdoors, were in fact filmed under very dangerous conditions. We do fly drones outdoors too, but under completely different conditions, in compliance with French law and so as to avoid accidents.

The code

So, we have two sensors. One returns depth data, and you do not need our help to process it: black is close, white is far. The sensor is very accurate (errors on the order of 1-3 mm) and has extremely low latency. As noted above, this is all fine, but the range is short.

The second sensor is a regular webcam, and here we face a new difficulty: how do you get 3D information from a single webcam? In our case, the drone flies along a relatively straight path, so we can compare two consecutive images and look for differences between them.

We extract the key points in each image and match them between frames; the shift in each point's position gives a vector. The photo shows the result with the drone sitting on a chair that is slowly moved through the lab.
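One way to obtain such a vector is sketched below, using a plainly swapped-in technique: block matching with a sum-of-absolute-differences (SAD) cost, which tracks one point between two grayscale frames stored as plain Python lists. It is an illustration, not the project's matcher; the names, the patch radius, and the search radius are hypothetical. Production code would more likely track corners from OpenCV's `cv2.goodFeaturesToTrack` with pyramidal Lucas-Kanade (`cv2.calcOpticalFlowPyrLK`).

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale frame, indexed as image[y][x]


def sad(a: Image, b: Image, ax: int, ay: int, bx: int, by: int, r: int) -> int:
    """Sum of absolute differences between the (2r+1)x(2r+1) patches at (ax, ay) and (bx, by)."""
    return sum(abs(a[ay + dy][ax + dx] - b[by + dy][bx + dx])
               for dy in range(-r, r + 1) for dx in range(-r, r + 1))


def track_point(prev: Image, curr: Image, x: int, y: int,
                patch_r: int = 1, search_r: int = 3) -> Tuple[int, int]:
    """Return the displacement (dx, dy) of the patch around (x, y) from prev to curr."""
    h, w = len(prev), len(prev[0])
    if not (patch_r <= x < w - patch_r and patch_r <= y < h - patch_r):
        raise ValueError("point too close to the image border")
    best_cost, best_vec = None, (0, 0)
    for dy in range(-search_r, search_r + 1):
        for dx in range(-search_r, search_r + 1):
            cx, cy = x + dx, y + dy
            # Skip candidate positions whose patch would leave the image.
            if not (patch_r <= cx < w - patch_r and patch_r <= cy < h - patch_r):
                continue
            cost = sad(prev, curr, x, y, cx, cy, patch_r)
            if best_cost is None or cost < best_cost:
                best_cost, best_vec = cost, (dx, dy)
    return best_vec


def blank(size: int = 12) -> Image:
    return [[0] * size for _ in range(size)]


# Usage: a distinctive 3x3 texture at (5, 5) reappears at (7, 6) in the next frame.
prev, curr = blank(), blank()
for j in range(3):
    for i in range(3):
        prev[4 + j][4 + i] = curr[5 + j][6 + i] = 10 * (3 * j + i + 1)
print(track_point(prev, curr, 5, 5))  # (2, 1)
```

Matching only works on textured points, which is why trackers first pick "important" (high-contrast) points rather than arbitrary pixels.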

Short vectors are green, long vectors are red: if a point's position changes a lot between two consecutive frames, its vector is red; if it changes little, it is green. Some errors obviously remain, but the result is already quite acceptable.
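A green-to-red coding like the one in the photo can be produced by blending on vector length. A minimal sketch: the linear blend, the 10 px saturation length, and the name `flow_color` are hypothetical choices, and the tuple is in RGB order (OpenCV's drawing functions expect BGR, so swap the channels if you draw with it).

```python
import math
from typing import Tuple


def flow_color(dx: float, dy: float, max_len_px: float = 10.0) -> Tuple[int, int, int]:
    """RGB color for a motion vector: pure green when still, pure red at max_len_px or more.

    Lengths in between blend linearly, so a longer shift (faster apparent
    motion) looks redder.
    """
    t = min(math.hypot(dx, dy) / max_len_px, 1.0)
    return (round(255 * t), round(255 * (1 - t)), 0)


print(flow_color(0, 0), flow_color(3, 4), flow_color(30, 40))
# (0, 255, 0) (128, 128, 0) (255, 0, 0)
```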

It's like in Star Trek. Remember how the starry sky “stretches” when the ship jumps to faster-than-light speed? Nearby stars turn into long white vectors, while more distant stars form short ones.
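The analogy can be made precise with a pinhole camera model (an assumption; lens distortion is ignored). A static point at lateral offset X and depth Z projects at x = f·X/Z; after the camera advances by s, it projects at f·X/(Z - s), further from the image center. The sketch computes that shift; the function name and the numbers in the usage lines are hypothetical.

```python
def forward_flow_px(focal_px: float, lateral_m: float, depth_m: float, step_m: float) -> float:
    """Outward image shift of a static point when the camera moves straight ahead.

    Pinhole model: the point projects at x = f * X / Z. After the camera
    advances by step_m, its depth becomes Z - step_m and it projects at
    f * X / (Z - step_m), i.e. further from the image center.
    """
    if step_m >= depth_m:
        raise ValueError("the camera has reached the point")
    before = focal_px * lateral_m / depth_m
    after = focal_px * lateral_m / (depth_m - step_m)
    return after - before


# Hypothetical numbers: 500 px focal length, points 1 m off-axis, 0.5 m flown per frame.
near = forward_flow_px(500.0, 1.0, 2.0, 0.5)   # about 83 px: a long "red" vector
far = forward_flow_px(500.0, 1.0, 20.0, 0.5)   # about 0.64 px: a short "green" one
print(f"{near:.1f} px vs {far:.2f} px")
```

The same formula also shows a subtlety: the shift is zero at the image center (the focus of expansion) and grows toward the edges, so vector length alone mixes up an object's distance with its position in the image.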

Next, we filter the vectors. Vectors off to the side, whether long or short, pose no risk of collision. In the test photo, there are two black suitcases at the sides, but we fly straight between them: no risk.
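The side-versus-ahead filter can be sketched as a window test on each vector's origin. This assumes the camera points along the direction of flight, so the path ahead projects around the image center; the name `in_flight_path` and the 40% corridor size are hypothetical tuning choices, not values from the project.

```python
from typing import List, Tuple

Vector = Tuple[float, float, float, float]  # x, y (origin) and dx, dy, in pixels


def in_flight_path(vectors: List[Vector], width: int, height: int,
                   corridor_frac: float = 0.4) -> List[Vector]:
    """Keep only vectors whose origin lies in a central window of the image.

    corridor_frac is the window's share of the image width and height.
    """
    cx, cy = width / 2, height / 2
    half_w, half_h = width * corridor_frac / 2, height * corridor_frac / 2
    return [v for v in vectors
            if abs(v[0] - cx) <= half_w and abs(v[1] - cy) <= half_h]


# Two long vectors on the sides (like the suitcases) and one short vector ahead.
flow = [(20, 120, 12, 0), (300, 110, -12, 0), (165, 118, 1, 1)]
print(in_flight_path(flow, 320, 240))  # [(165, 118, 1, 1)]
```

In practice the corridor should shrink with distance to match the drone's physical width, but a fixed window is enough to show the idea.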

If a vector is right in front of you, a collision is possible. A short vector means you still have time; a long vector means you are out of time.
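The "short vector means time, long vector means none" rule has a classic quantitative form, the time-to-contact estimated from image expansion. With x = f·X/Z and closing speed V, the image position changes at dx/dt = x·V/Z, so x/(dx/dt) = Z/V: the time until the camera reaches the point's depth, without knowing Z or V separately. This is a swapped-in technique, not necessarily what the project code does. The sketch assumes straight forward flight with the focus of expansion at the image center, coordinates measured from that center, and a known frame rate; all names are hypothetical.

```python
import math


def time_to_contact_s(x: float, y: float, dx: float, dy: float, fps: float) -> float:
    """Estimate seconds until the camera reaches the depth of a tracked point.

    (x, y): the point in the earlier frame, in pixels measured from the focus
    of expansion, assumed here to be the image center (straight flight).
    (dx, dy): the point's displacement between two consecutive frames.
    Returns math.inf when the point is not moving outward (no approach seen).
    """
    r = math.hypot(x, y)
    if r == 0:
        return math.inf  # at the focus of expansion the flow carries no timing information
    radial_px_per_frame = (dx * x + dy * y) / r  # outward component of the flow
    if radial_px_per_frame <= 0:
        return math.inf
    return r / (radial_px_per_frame * fps)


# Same point, 50 px from the center, tracked at a hypothetical 15 frames per second.
print(round(time_to_contact_s(40, 30, 0.4, 0.3, 15), 2))  # short vector: 6.67
print(round(time_to_contact_s(40, 30, 4.0, 3.0, 15), 2))  # long vector: 0.67
```

Dividing by r normalizes away the point's position in the image. Near the center both r and the flow are tiny, so the estimate becomes noisy there; averaging over many points on the same object helps.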

Example. In the previous photo, the suitcases were close but off to the side, which is safe, and the objects in the background were straight ahead but far away, also safe. In the second picture, the objects are right in front of us and have long vectors: this is dangerous.

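The project materials (description and source code) can be downloaded from the Intel Software Academic Program page listed in the references below.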

Results

With the hardware described above, we demonstrated four points:

- The Intel Edison platform is powerful enough to process data from sophisticated 2D sensors and USB webcams. You can even run computer vision on the board itself, and it is convenient to work with because it is a full-fledged Linux computer. Yes, you can get better performance per watt with a dedicated image processor, but developing even simple software for one takes weeks or even months. Prototyping on Intel Edison is easy.

- Analyzing images from a single webcam gives basic detection of 3D objects at 10-20 times per second on Intel Edison, without any optimization. That is enough to detect 3D objects at a great distance and adjust the flight path accordingly.

- The same hardware and software combination can also drive a 2D depth sensor with ultra-low latency to obtain an accurate three-dimensional map. With Intel Edison and Senz3D, you can tackle collision avoidance both at low speed and short distance and at high speed and long distance.

- The proposed solution is low-cost, lightweight, and low-power, which makes it practical for both small consumer drones and professional drones.
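To see why 10-20 detections per second is workable, it helps to turn the rate into distance flown between results. A back-of-the-envelope sketch: only the 10-20 Hz range comes from the measurements above; the cruise speeds, the 20 m obstacle distance, and both function names are hypothetical.

```python
def metres_per_update(speed_m_s: float, rate_hz: float) -> float:
    """Distance flown between two consecutive obstacle-detection results."""
    return speed_m_s / rate_hz


def updates_before_reaching(distance_m: float, speed_m_s: float, rate_hz: float) -> int:
    """Whole detection cycles completed before the drone covers distance_m."""
    # distance / (speed / rate), rearranged to avoid dividing by an inexact float step
    return int(distance_m * rate_hz / speed_m_s)


for speed in (2.0, 5.0, 10.0):   # hypothetical cruise speeds, m/s
    for rate in (10.0, 20.0):    # the reported 10-20 detections per second
        print(f"{speed:4.1f} m/s, {rate:4.1f} Hz: "
              f"{metres_per_update(speed, rate):.2f} m per update, "
              f"{updates_before_reaching(20.0, speed, rate)} updates before a 20 m obstacle")
```

Even at 10 m/s and 10 Hz the drone moves 1 m between results, so an obstacle first seen 20 m out is checked about 20 times before it is reached, leaving room to confirm a detection over several frames and then change course.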

It's time to act!

Now, about how to get a free experiment kit from Intel. The company is continuing its Intel Do-It-Yourself Challenge academic program, and we now have 10 kits that we will be happy to share with you, subject to certain conditions. Namely:

- You are a student or an employee of a Russian university;

- Together with your colleagues, you have sufficient knowledge to start a project;

- Your research team is led by a professor or university teacher;

- You understand the complexity of the task and are ready to devote enough time to working on it;

- Your project is not related to military issues.

If so, describe your idea, briefly introduce your team, and send the text in English or French to Paul Guermonprez.

References and Resources

- Intel Software Academic Program (project description and source code)

- Intel RealSense

- SoftKinetic DS325