Worst practices for working with backup products using Veeam Backup & Replication as an example

Many have heard of “best practices”: recommendations to follow when deploying a system so that it runs optimally. Today, however, I want to talk about “worst practices”, the common mistakes administrators make with backups. Product users do not always have time to read every article on best practices, or the resources to implement them all, so I decided to summarize what exactly you should avoid when working with backup products. If you want the “bad advice”, read on.

Lack of backup planning and evaluation of backup storage characteristics is the most common “worst practice”. Many users install a backup product with standard settings by simply clicking the “Next” button in the product wizards sequentially. Moreover, many leave the default settings for backup jobs, not taking into account the specifics of the infrastructure, policies for deleting old copies, and other important aspects. As a result, there are errors when launching and completing tasks, prematurely filling up the repository, and, as a result, pleasant but time-consuming communication with the technical support service.

How will be correct?Using Veeam products as an example: to avoid such scenarios, before installing Veeam Backup & Replication, it is recommended to use, for example, Veeam ONE , a tool for monitoring and reporting on the operation of the infrastructure. So, by opening the VM Configuration Assessment report , you will see if your virtual machines are ready for backup, and if not, for what reason. The VM Change Rate Estimation report (estimation of VM change frequency) is based on statistics on changes in virtual machine blocks and allows you to correctly calculate the required repository size.

You can read more about useful reports for planning, for example, here .

Another tool from Veeam, the online recovery point size calculator, will also help you plan your backup environment.

Want to flood all backup jobs due to an error in one of them? Then rather combine them into chains of dependent tasks so that when one task is completed, another begins immediately. In this case, you are guaranteed to receive an increase in backup windows, delayed start of tasks and other unpleasant consequences.

How will be correct?In fact, tasks should be combined only in certain cases. For example, if you do not want to backup two applications at the same time, then you can configure the start of backup of one of them only after the backup of the other application is completed. In the case of Veeam, you better entrust the product to plan resources for you. The Veeam Backup & Replication scheduler can run several tasks at the same time to optimize the backup windows, using “smart algorithms” to distribute the load on the infrastructure components, choosing the optimal data transfer mode and working within the set bandwidth limits of the channel to the repository.

Life experience shows that often companies “save” on testing backups. This may be due to lack of awareness regarding possible problems in the recovery phase, as well as to economic factors, since a full-fledged process of testing system restore from a backup, if done manually, is a very time-consuming operation. Such a situation is fraught with negative consequences, because in the event of a failure critical data may not be restored at a given time or, even worse, may be partially or completely lost.

What happened in 1998 in the real case of the Pixar studio, when all the working materials of the cartoon “Toy Story 2” were almost lost, you can read in our post “ The Case in Pixar or once again about the importance of testing backups"

How will be correct? Testing recovery from backups must be done regularly. You should distinguish between integrity testing of the backup itself and testing recovery from the backup . In the first case, you only check the integrity of the copy against the checksums of the data blocks. In the second, you conduct testing that reflects a particular simulated scenario of a single failure or a full-blown “catastrophe” of a productive network. It is extremely important to do not only the first, but also the second, because ultimately you are interested in the real data recovery in the event of a failure, and even if the integrity of the backup itself is not compromised, the restoration may fail.

There can be many likely reasons for the failure of recovery; I will cite only a few of them:

How can these problems be solved? For example, Veeam SureBackup technology allows you to automatically test virtual machines from backups and make sure that they can be restored. The SureBackup task (see the post “SureBackup - automatically check the possibility of restoring data from a backup” ) automatically starts all the machines, taking into account their dependencies (for example, first a domain controller and only then an Exchange server), in an isolated virtual laboratory, checks their operability ( including using scripts written by the user) and sends the relevant reports by e-mail.

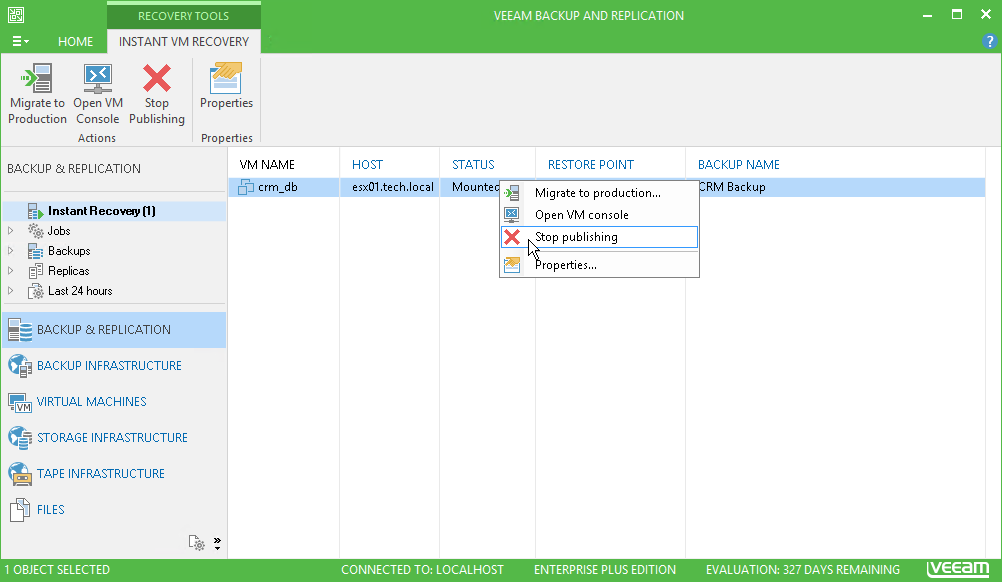

And finally, the worst advice on today's topic specifically on Veeam: start the virtual machine using Instant VM recovery and forget about it. Forget it completely. In this case, you are guaranteed a ton of problems. Running the machine directly from the repository will block the recovery point, so other tasks (for example, SureBackup or BackupCopy) simply will not start. This happens because the machine starts directly from the backup file in read mode, that is, without writing any changes to the file itself. This creates a separate file on the C: drive of the Veeam backup server, which will grow to incredible sizes if you leave the machine running for at least a few days.

How will be correct?To avoid this, immediately after the completion of Instant VM recovery, be sure to transfer the machine to the production environment. See the Veeam user guide for more information on this process .

I hope this article will save time, money and relieve headaches from the problems described. Anything to add to the list of “worst practices”? Then share it in the comments!

And in conclusion, I give links to our best posts about the best backup practices, whatever product you use:

Starting backup jobs with settings that ignore your specific configuration

Lack of backup planning and of any assessment of the backup storage characteristics is the most common “worst practice”. Many users install a backup product with the default settings, simply clicking “Next” through every wizard. Many also keep the default settings for backup jobs, without taking into account the specifics of their infrastructure, the retention policy for old restore points, and other important aspects. The result is errors when jobs start and finish, a repository that fills up prematurely and, in the end, pleasant but time-consuming conversations with technical support.

How to do it right? Taking Veeam products as an example: to avoid such scenarios, it is recommended to use a tool such as Veeam ONE, which monitors and reports on your infrastructure, before installing Veeam Backup & Replication. Its VM Configuration Assessment report shows whether your virtual machines are ready for backup and, if not, why. The VM Change Rate Estimation report is based on statistics of changed virtual machine blocks and helps you calculate the required repository size correctly.

You can read more about reports that are useful for planning, for example, here.

Another tool from Veeam, the online recovery point size calculator, will also help you plan your backup environment.
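For a rough sense of what such sizing involves, here is a deliberately simplified back-of-the-envelope estimate. It assumes a forward-incremental chain (one full backup plus one incremental per additional restore point), a single average daily change rate, and a flat data reduction ratio. The function name and all parameter values are hypothetical, and this is not the formula used by Veeam ONE or the calculator.

```python
def estimate_repository_gb(source_gb: float,
                           daily_change_rate: float,
                           retention_points: int,
                           reduction_ratio: float = 0.5,
                           headroom: float = 0.2) -> float:
    """Rough repository size: one full backup plus (retention_points - 1) incrementals.

    All inputs are illustrative assumptions:
    - source_gb: used space of the protected VMs, in GB
    - daily_change_rate: fraction of source data changed per day (0.05 means 5%)
    - retention_points: number of restore points kept
    - reduction_ratio: stored size / original size after compression and dedup
    - headroom: extra fraction of free space reserved for growth and merges
    """
    if not 0 <= daily_change_rate <= 1:
        raise ValueError("daily_change_rate must be between 0 and 1")
    if retention_points < 1:
        raise ValueError("retention_points must be at least 1")
    full_gb = source_gb * reduction_ratio
    incremental_gb = source_gb * daily_change_rate * reduction_ratio
    raw_gb = full_gb + incremental_gb * (retention_points - 1)
    return raw_gb * (1 + headroom)

# Hypothetical example: 2 TB of VMs, 5% daily change, 14 restore points
print(round(estimate_repository_gb(2000, 0.05, 14), 1))
```

With these numbers the estimate comes to about 1,980 GB. The value of a report such as VM Change Rate Estimation is that it replaces the guessed change rate with one measured on your own machines.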

Chain all jobs

Want an error in one backup job to bring down all the others? Then chain them into sequences of dependent jobs, so that each one starts as soon as the previous one finishes. You are then guaranteed longer backup windows, delayed job starts, and other unpleasant consequences.

How to do it right? In fact, jobs should be chained only in specific cases. For example, if you do not want two applications to be backed up at the same time, you can configure the backup of one to start only after the backup of the other has completed. With Veeam, you are better off letting the product plan resources for you. The Veeam Backup & Replication scheduler can run several jobs concurrently to optimize backup windows: its “smart algorithms” distribute the load across infrastructure components, choose the optimal data transfer mode, and stay within the bandwidth limits set for the link to the repository.
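A toy model shows why chaining hurts. The sketch below is not how the Veeam scheduler works internally; it compares a chain in which jobs run strictly one after another with concurrent execution on a fixed number of parallel slots. It assumes that a failed job stops the rest of its chain; whether a real product behaves exactly this way depends on its settings. Job names, durations, and the slot count are hypothetical.

```python
import heapq
from dataclasses import dataclass

@dataclass
class Job:
    name: str
    minutes: int
    fails: bool = False

def chained_run(jobs):
    """Run jobs strictly one after another; a failed job stops the rest of the chain.

    Returns (window_in_minutes, names_of_jobs_that_never_started).
    """
    elapsed = 0
    for i, job in enumerate(jobs):
        elapsed += job.minutes
        if job.fails:
            return elapsed, [j.name for j in jobs[i + 1:]]
    return elapsed, []

def concurrent_run(jobs, slots):
    """Greedy scheduling: each job starts on whichever slot frees up first.

    A failure does not block other jobs. Returns the total window in minutes.
    """
    free_at = [0] * slots  # a list of equal values is already a valid min-heap
    for job in jobs:
        start = heapq.heappop(free_at)
        heapq.heappush(free_at, start + job.minutes)
    return max(free_at)

jobs = [Job("sql", 90), Job("exchange", 120, fails=True),
        Job("fileserver", 60), Job("web", 30)]
print(chained_run(jobs))        # (210, ['fileserver', 'web'])
print(concurrent_run(jobs, 2))  # 150
```

With these numbers, the chain would need 300 minutes even if nothing failed, while two concurrent slots finish all four jobs in 150 minutes, and the Exchange failure no longer prevents the file server and web backups from running.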

Never verify backups

Experience shows that companies often “save” on testing backups. This may be due to a lack of awareness of the problems that can arise at the recovery stage, or to economics: a full test of restoring a system from backup, if done manually, is very time-consuming. This situation can have serious consequences: after a failure, critical data may not be restored within the required time or, even worse, may be partially or completely lost.

You can read about the real case at Pixar in 1998, when all the working materials for the animated film “Toy Story 2” were nearly lost, in our post “The Case in Pixar or once again about the importance of testing backups”.

How to do it right? Recovery from backups must be tested regularly. Distinguish between testing the integrity of the backup itself and testing recovery from the backup. In the first case, you only verify the copy against the checksums of its data blocks. In the second, you run a test that simulates a specific scenario, from the failure of a single system to a full-blown “disaster” in the production network. It is extremely important to do both, because what you ultimately care about is actually recovering data after a failure, and recovery can fail even when the backup itself is intact.
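The first kind of check boils down to recomputing block checksums and comparing them with the ones recorded when the backup was made. The minimal sketch below does this with SHA-256 over fixed-size blocks; the block size, the function names, and the idea of keeping a list of digests next to the backup are assumptions for illustration, not Veeam's backup file format. It also shows the limit of such a check: matching checksums say nothing about whether the restored system will boot and run its applications.

```python
import hashlib
import io

BLOCK_SIZE = 1024 * 1024  # hypothetical 1 MiB block size

def block_checksums(stream, block_size=BLOCK_SIZE):
    """Return the SHA-256 hex digest of every fixed-size block in a binary stream."""
    digests = []
    while True:
        block = stream.read(block_size)
        if not block:
            break
        digests.append(hashlib.sha256(block).hexdigest())
    return digests

def find_corrupted_blocks(stream, expected, block_size=BLOCK_SIZE):
    """Compare current block digests with those recorded at backup time.

    Returns the indices of blocks that differ, are missing, or were appended.
    """
    actual = block_checksums(stream, block_size)
    bad = [i for i, (a, e) in enumerate(zip(actual, expected)) if a != e]
    # A length mismatch means the file was truncated or extended
    bad.extend(range(min(len(actual), len(expected)), max(len(actual), len(expected))))
    return bad

# Usage with in-memory bytes standing in for a backup file
original = b"A" * 10 + b"B" * 10
recorded = block_checksums(io.BytesIO(original), block_size=8)
damaged = original[:12] + b"X" + original[13:]
print(find_corrupted_blocks(io.BytesIO(damaged), recorded, block_size=8))  # [1]
```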

There are many possible reasons why recovery may fail; here are just a few:

- recovering a system may require additional steps that the backup product does not perform automatically (say, reconfiguring remote services that interact with the system being restored),

- access to the backup repository from the new administrator’s laptop may be denied (simply because this is the first time anyone has tried such access, and sorting it out costs precious time),

- low link bandwidth stalls off-site recovery (when the backup has to be pulled from another office),

- it suddenly turns out that the daily backup has been growing steadily for the past six months (almost all source systems keep growing), at some point stopped fitting on the tape, and the error went unnoticed (the Pixar example above),

- an old backup may fail to restore onto new hardware,

- during recovery it may turn out that not all files or machines were included in the backup job scope.
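Two of these failure modes, backups silently outgrowing their media and machines missing from the job scope, can be caught by a simple post-job check. The sketch below is hypothetical and not a Veeam feature: it assumes you can export each run's backup size and the list of machines the job processed (for example, from job reports), and it only flags problems. The function name, parameters, and thresholds are illustrative.

```python
def check_backup_run(size_history_gb, media_capacity_gb,
                     expected_machines, backed_up_machines,
                     warn_fraction=0.9, horizon_runs=30):
    """Return human-readable warnings for one backup run.

    size_history_gb: sizes of past runs in GB, oldest first; the last is the latest run
    media_capacity_gb: capacity of the target media, e.g. one tape
    expected_machines: names that should be in the backup
    backed_up_machines: names the job actually processed
    """
    warnings = []
    latest = size_history_gb[-1]
    if latest > media_capacity_gb:
        warnings.append(f"latest backup ({latest} GB) exceeds media capacity ({media_capacity_gb} GB)")
    elif latest > warn_fraction * media_capacity_gb:
        warnings.append(f"latest backup uses {latest / media_capacity_gb:.0%} of media capacity")

    # Average growth per run across the history catches slow, steady growth
    if len(size_history_gb) >= 2 and latest <= media_capacity_gb:
        growth = (latest - size_history_gb[0]) / (len(size_history_gb) - 1)
        if growth > 0:
            runs_left = int((media_capacity_gb - latest) // growth)
            if runs_left < horizon_runs:
                warnings.append(f"at the current growth rate, backups outgrow the media in about {runs_left} runs")

    missing = sorted(set(expected_machines) - set(backed_up_machines))
    if missing:
        warnings.append("not included in the backup: " + ", ".join(missing))
    return warnings

history = [700, 720, 740, 760]  # hypothetical nightly sizes in GB
for warning in check_backup_run(history, 800, ["dc01", "exch01", "sql01"], ["dc01", "exch01"]):
    print(warning)
```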

How can these problems be solved? Veeam SureBackup technology, for example, automatically tests virtual machines from backups and confirms that they can be restored. A SureBackup job (see the post “SureBackup - automatically check the possibility of restoring data from a backup”) automatically starts all the machines in an isolated virtual lab, respecting their dependencies (for example, first a domain controller and only then an Exchange server), checks that they work (including with user-written scripts), and sends the corresponding reports by email.
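At its core, dependency-aware startup of the kind described above is a topological sort. The sketch below uses Python's standard graphlib module (Python 3.9+) to derive a start order for a hypothetical set of machines and runs a placeholder TCP port check on each. The machine names, dependencies, port checks, and function names are all assumptions for illustration, not how SureBackup is implemented or which tests it runs.

```python
from graphlib import CycleError, TopologicalSorter
import socket

# Hypothetical lab: each machine maps to the machines that must be running before it starts
DEPENDENCIES = {
    "dc01": set(),
    "sql01": {"dc01"},
    "exch01": {"dc01"},
    "app01": {"sql01", "exch01"},
}

def boot_order(dependencies):
    """Return a start order in which every machine comes after all of its dependencies.

    Raises CycleError if the dependencies are circular, since no valid order exists.
    """
    return list(TopologicalSorter(dependencies).static_order())

def port_is_open(host, port, timeout=3.0):
    """Placeholder health check: True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

def verify_lab(dependencies, checks):
    """Walk machines in boot order and run the (host, port) check registered for each.

    checks maps machine name -> (host, port); machines without a check get None.
    """
    results = {}
    for machine in boot_order(dependencies):
        # In a real lab the machine would be powered on here before being checked
        target = checks.get(machine)
        results[machine] = port_is_open(*target) if target else None
    return results

print(boot_order(DEPENDENCIES))  # dc01 first, app01 last
```

A circular dependency (for example, two machines that each wait for the other) makes `boot_order` raise `CycleError`, which is exactly the kind of misconfiguration you would rather discover in a test lab than during a real recovery.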

Do not finish the Instant VM Recovery process

And finally, the worst advice on today's topic, specific to Veeam: start a virtual machine with Instant VM Recovery and forget about it. Forget it completely. You are then guaranteed a ton of problems. A machine running directly from the repository locks its restore point, so other jobs (for example, SureBackup or Backup Copy) simply will not start. This happens because the machine runs directly from the backup file. The file is opened read-only, so no changes are written to it; instead, they go to a separate file on the C: drive of the Veeam backup server, and that file grows to enormous sizes if you leave the machine running for even a few days.
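How quickly “a few days” turns into a full disk is simple arithmetic. The sketch below estimates how many days the free space on the volume holding the change file will last at a given average write rate. The free space, write rate, and function name are hypothetical, and it assumes every write the VM makes grows the file; real growth depends on the workload.

```python
def days_until_full(free_gb: float, avg_write_gb_per_hour: float) -> float:
    """Days until the volume holding the change file fills up.

    Simplifying assumption: every GB the VM writes adds a GB to the change file.
    """
    if avg_write_gb_per_hour <= 0:
        return float("inf")
    return free_gb / (avg_write_gb_per_hour * 24)

# Hypothetical example: 200 GB free on C: and a VM writing 2 GB per hour on average
print(f"{days_until_full(200, 2):.1f} days")  # 4.2 days
```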

How to do it right? To avoid this, as soon as Instant VM Recovery completes, be sure to migrate the machine to the production environment. See the Veeam user guide for more details on this process.

Conclusion

I hope this article saves you time and money and spares you the headaches described above. Have something to add to the list of “worst practices”? Share it in the comments!

Finally, here are links to our best posts on backup best practices, whichever product you use: