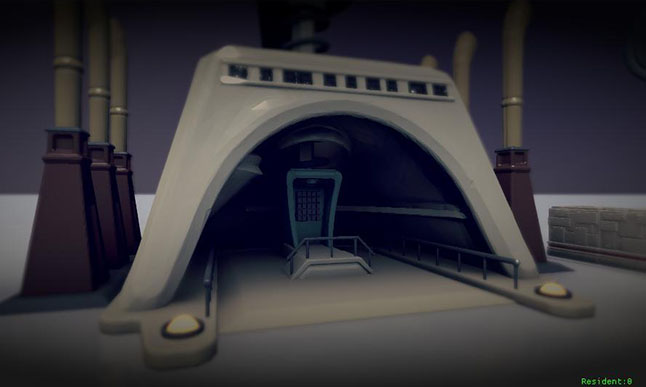

Cascaded voxel cone tracing in The Tomorrow Children

What: cascaded voxel cone tracing

For The Tomorrow Children, we implemented an innovative lighting system based on voxel cone tracing. Instead of using a traditional forward or deferred lighting system, we built one that lights everything in the world by tracing cones through voxels.

Both direct and indirect lighting are handled this way. On PlayStation 4 it lets us compute three bounces of global illumination in semi-dynamic scenes. We trace cones in 16 fixed directions through six cascades of 3D textures, and occlude the light using Screen Space Directional Occlusion and spherical occluders for dynamic objects to get the final result. The engine also supports a lighting model based on spherical harmonics, which lets us light particles and implement special effects such as approximate subsurface scattering and refractive materials.

Who: James McLaren, Director of Engine Technology at Q-Games

I started programming at the age of 10, when I was given a good old ZX for my birthday, and I have never regretted it since. As a teenager I worked on an 8086 PC and a Commodore Amiga, and then went to the University of Manchester, where I earned a degree in Computer Science.

After university, I spent several years at Virtek / GSI working on PC flight simulators (F16 Aggressor and Wings of Destiny), then moved on to racing games (F1 World Grand Prix 1 and 2 for Dreamcast) at the Manchester office of the Japanese company Video System. That job gave me the chance to fly to Kyoto several times. I fell in love with the city and eventually moved there, joining Q-Games in early 2002.

At Q-Games, I worked on Star Fox Command for DS and on the base engine used in the PixelJunk series of games. I was also lucky enough to work directly with Sony on graphics and music visualizers for the PS3 OS. In 2008, I moved to Canada for three years to work at Slant Six Games on Resident Evil Raccoon City, and in 2012 I returned to Q-Games to take part in the development of The Tomorrow Children.

Why: a fully dynamic world

Early in the development of The Tomorrow Children, we already knew that we wanted a fully dynamic world that players could alter and reshape. Our artists began rendering concept images with the Octane GPU renderer. They lit objects with very soft, graduated sky lighting and were delighted with the beautiful bounced light. So we started to wonder how we could achieve this global illumination look in real time, without any prior baking.

A screenshot of an early concept rendered with Octane. It shows the style the artists were aiming for.

At first, we tried many different approaches, using VPLs (virtual point lights) and some crazy attempts at real-time ray tracing. But we realized almost immediately that the most interesting direction was the one proposed by Cyril Crassin in his 2011 work on voxel cone tracing using sparse voxel octrees. I was particularly drawn to this technique because I liked its ability to filter the scene geometry. We were also encouraged by the fact that other developers, such as Epic, were exploring the technique too. Epic used it in the Unreal Elemental demo (unfortunately, they later abandoned it).

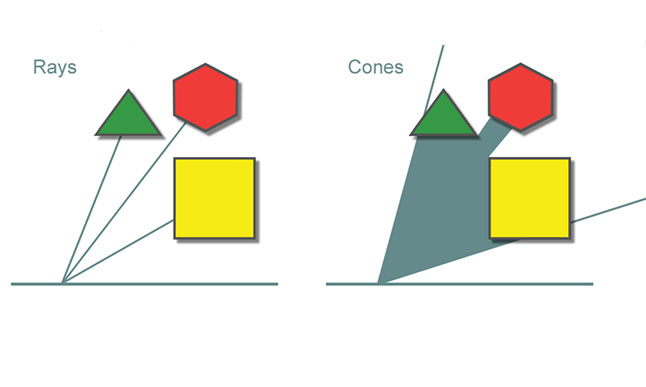

What is a "cone trace"?

Cone tracing is somewhat similar to ray tracing. In both techniques, we try to obtain many samples of the incident radiance at a point by shooting primitives and intersecting them with the scene.

If we take enough samples with a good distribution, we can combine them to estimate the incident light at the point of interest. We can then pass this through a BRDF, which represents the properties of the material at that point, and compute the outgoing light in the view direction. Obviously, I am omitting many details here, especially regarding ray tracing, but they are not important for this comparison.

Intersecting a ray with the scene gives us a point, while intersecting a cone gives us an area or a volume, depending on how you look at it. The important thing is that this is no longer an infinitely small point, and that changes the properties of our lighting estimate. First, since we need to evaluate the scene over an area, the scene must be filterable. Second, because of the filtering we get an average rather than an exact value, so the accuracy of the estimate is reduced. On the other hand, since we are evaluating an average, the noise that ray tracing usually produces is practically absent.

It was this property of cone tracing that caught my attention when I saw Cyril's presentation at SIGGRAPH. Suddenly we had a technique that could produce an acceptable estimate of the lighting at a point from a small number of samples. And since the scene geometry is filtered, there is no noise, and the computation is fast.

The obvious problem is: how do we obtain the cone samples? The violet surface region in the figure above, which defines the intersection, is not easy to compute. So instead, we take several volume samples along the cone. Each sample returns an estimate of the amount of light reflected toward the apex of the cone, together with an estimate of the occlusion in that direction. It turns out that we can combine these samples using the same simple rules used when ray marching through a volume.
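
To make the combination rule concrete, here is a minimal sketch of front-to-back compositing, the usual rule for marching through a volume. It is an illustration rather than the game's shader: the function name, the scalar radiance, and the early-out threshold are all assumptions, and each sample is assumed to already be filtered to the cone's width at that distance.

```python
def composite_front_to_back(samples, min_transmittance=1e-3):
    """Combine volume samples taken along a cone, ordered from the apex outward.

    Each sample is (radiance, occlusion): the light it reflects toward the
    apex and the fraction of light from behind it that it blocks (0..1).
    Returns (accumulated_light, accumulated_occlusion).
    """
    light = 0.0
    transmittance = 1.0  # fraction of light still able to reach the apex
    for radiance, occlusion in samples:
        # A sample only contributes as much as the samples in front of it let through.
        light += transmittance * radiance
        transmittance *= 1.0 - occlusion
        if transmittance <= min_transmittance:
            break  # fully occluded, nothing further away can contribute
    return light, 1.0 - transmittance


# The third sample is hidden behind the fully opaque second one.
light, occlusion = composite_front_to_back([(0.5, 0.5), (1.0, 1.0), (9.0, 0.0)])
```

In this example the bright third sample contributes nothing, because the second sample blocks everything behind it.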

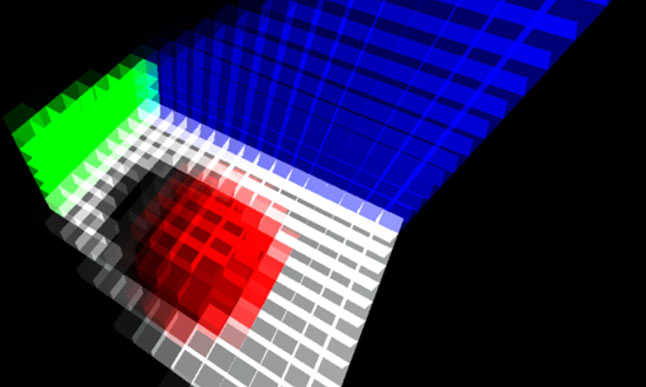

Cyril's original work required voxelizing into a sparse voxel octree, so in early 2012, before returning to Q-Games, I started experimenting with exactly that in DX11 on a PC. Here are screenshots of a very early (and very simple) first version. The first shows the voxelization of a simple object, and the second shows lighting injected into the voxels from a shadow-mapped light:

The project went through many twists and turns, and I ended up putting my research on hold for a while to help get the first prototype working. I then ported it to PS4 development hardware, but ran into very serious frame rate problems. My initial tests did not perform well, so I decided I needed a new approach. I had always had some doubts about whether voxel octrees make sense on the GPU, so I tried to make everything a lot simpler.

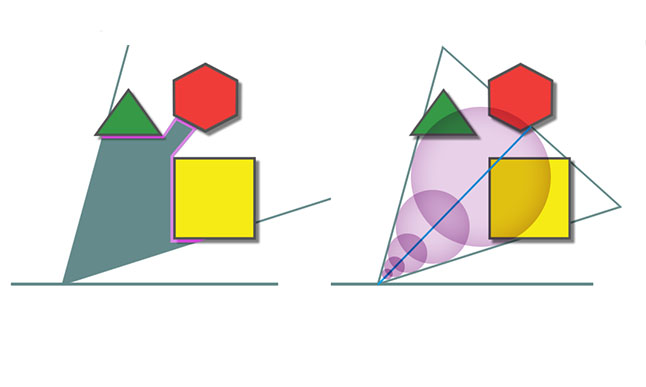

Enter the voxel cascade:

With a voxel cascade, we can simply store the voxels in a 3D texture instead of an octree, and create several levels of stored voxels (six in our case), each at the same texture resolution but covering a volume twice the size of the previous one. This means we have high-resolution volume data close to us, and still have a rough representation of objects that are far away. This kind of data layout should be familiar to anyone who has implemented clipmaps or light propagation volumes.
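
As a rough illustration of this layout, the sketch below picks the finest cascade level that contains a point. Only the six levels and the doubling of the covered volume come from the text. The base extent, the per-level texel count (assumed identical for every level, as in a clipmap) and the function names are hypothetical.

```python
NUM_LEVELS = 6          # from the article
BASE_HALF_EXTENT = 4.0  # metres covered by level 0 in each direction (assumed)
RESOLUTION = 32         # voxels per axis in every level (assumed)


def level_half_extent(level):
    """Each level covers a cube twice as wide as the previous one."""
    return BASE_HALF_EXTENT * (2 ** level)


def voxel_size(level):
    """World-space size of one voxel, which doubles with every level."""
    return 2.0 * level_half_extent(level) / RESOLUTION


def select_level(offset):
    """Return the finest level containing `offset` (position relative to the
    cascade centre), or None if the point lies outside the whole cascade."""
    distance = max(abs(component) for component in offset)
    for level in range(NUM_LEVELS):
        if distance <= level_half_extent(level):
            return level
    return None
```

With these assumed numbers, a point 5 m from the centre falls into level 1, where a voxel is 0.5 m wide, while a point 200 m away is outside the cascade altogether.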

For each voxel, we need to store basic information about its material properties, such as albedo, normal and emission, for each of its six directions (+x, -x, +y, -y, +z, -z). We can then inject lighting into the volume for any voxel on a surface, and trace cones several times to get bounced lighting, again storing this information for each of the six directions in another voxel cascade texture.

The six directions are important because they make the voxels anisotropic. Without them, light reflected from one side of a thin wall, for example, could leak through to the other side, which is not what we want.

Once the lighting has been computed in the voxel texture, we need to get it onto the screen. We do this by tracing cones from the world-space positions of the pixels and combining the result with the material properties, which we also render into a more traditional 2D G-buffer.

Another subtle difference from Cyril's original method is in how we render: all lighting, even direct lighting, comes from cone tracing. This means we do not have to do anything complicated to inject lighting. We simply trace cones to get direct lighting, and if a cone leaves the bounds of the cascade, we accumulate sky lighting, attenuated by whatever partial occlusion the cone has picked up along the way. Dynamic point lights are handled with another voxel cascade, in which we use a geometry shader to fill in the radiance values. This cascade can then be sampled and accumulated while tracing cones.

Here are a couple of screenshots of the technique running in the early prototype.

First, here is our scene without bounced lighting from the cascades, with sky lighting only:

And now with bounced lighting:

Even with cascades instead of octrees, cone tracing is rather slow. Our first tests looked great, but we were nowhere near our performance budget: tracing the cones alone took about 30 milliseconds. To use the technique in real time, we had to change it further.

The first big change we made was to fix the set of cone tracing directions. In Cyril Crassin's original technique, the number of cones sampled per pixel depended on the surface normal. This means that each pixel potentially traverses the texture cascades independently of its neighbors. The technique also has a hidden cost: to determine the light arriving at a pixel or voxel from a given direction, every sample of the lit voxel cascade had to read three of the six faces of the anisotropic voxel and take a weighted average based on the traversal direction. A cone touches several voxels along its path, so this repeatedly leads to redundant sampling and wasted bandwidth and ALU resources.
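
The three-face lookup can be sketched as follows. This is an assumed formulation, not the engine's code: the voxel is a plain dictionary of scalar values keyed by face, and the squared direction components are used as weights because they sum to one for a unit vector.

```python
def sample_anisotropic(voxel, to_viewer):
    """Blend the three faces of an anisotropic voxel that are visible from
    the direction `to_viewer` (a unit vector pointing from the voxel toward
    the point that is gathering light).
    """
    x, y, z = to_viewer
    # Per axis, only the face turned toward the viewer is visible.
    visible_faces = (
        "+x" if x >= 0 else "-x",
        "+y" if y >= 0 else "-y",
        "+z" if z >= 0 else "-z",
    )
    weights = (x * x, y * y, z * z)
    blended = sum(w * voxel[face] for w, face in zip(weights, visible_faces))
    return blended / sum(weights)


# A thin wall lit only on its +x side.
wall = {"+x": 1.0, "-x": 0.0, "+y": 0.0, "-y": 0.0, "+z": 0.0, "-z": 0.0}
lit_side = sample_anisotropic(wall, (1.0, 0.0, 0.0))
dark_side = sample_anisotropic(wall, (-1.0, 0.0, 0.0))
```

Seen from the +x side the wall returns its full lighting, and seen from the -x side it returns none, which is the leak protection the six directions provide. The cost is three reads per voxel touched by every cone.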

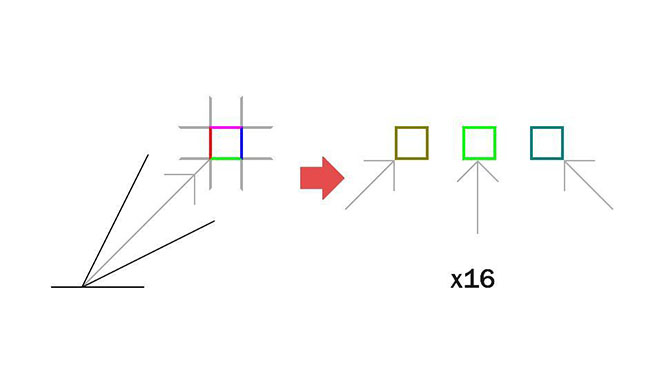

If we instead fix the trace directions (we used 16 directions over the sphere), we can make a highly coherent pass over the voxel cascade and very quickly build radiance voxel cascades that describe how our anisotropic voxels look from each of the 16 directions. We then use these to speed up cone tracing, so that instead of three texture samples we need only one.
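
A minimal sketch of that pre-resolve step is shown below. The article does not say how the 16 directions are distributed, so the Fibonacci spiral here is purely an assumption, as are the function names, the scalar face values and the squared-component face weights.

```python
import math


def fibonacci_sphere(count):
    """Spread `count` unit vectors roughly evenly over the sphere (assumed layout)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    directions = []
    for i in range(count):
        z = 1.0 - (2.0 * i + 1.0) / count
        radius = math.sqrt(1.0 - z * z)
        angle = golden_angle * i
        directions.append((radius * math.cos(angle), radius * math.sin(angle), z))
    return directions


def blend_faces(voxel, direction):
    """Three-face weighted average of one anisotropic voxel seen from `direction`."""
    x, y, z = direction
    faces = ("+x" if x >= 0 else "-x", "+y" if y >= 0 else "-y", "+z" if z >= 0 else "-z")
    weights = (x * x, y * y, z * z)
    return sum(w * voxel[f] for w, f in zip(weights, faces)) / sum(weights)


def preresolve(voxels, directions):
    """Do the three-face blend once per voxel and direction.

    table[d][i] is voxel i as seen from fixed direction d, so a cone traced
    along direction d later needs a single lookup per voxel.
    """
    return [[blend_faces(voxel, d) for voxel in voxels] for d in directions]


DIRECTIONS = fibonacci_sphere(16)
```

The trade is memory for bandwidth: one extra radiance volume per direction, in exchange for a single fetch per step during the trace.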

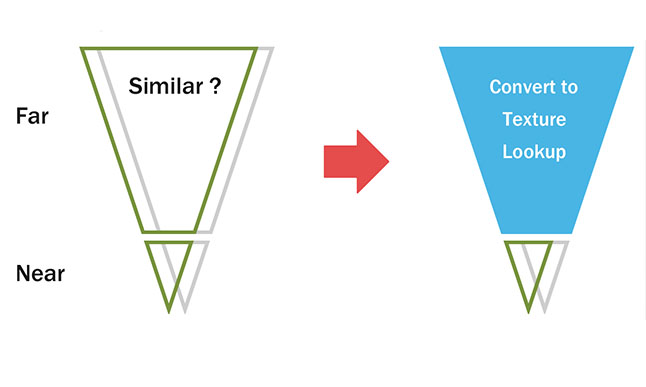

We made another important observation: as we trace a cone and step through the levels of the cascade, the voxels accessed by neighboring pixels or voxels become more and more similar the further we get from the apex of the cone. This is simply the familiar effect of parallax. When you move your head, nearby objects appear to move, while distant objects move much more slowly. With this in mind, I decided to try computing another set of texture cascades, one for each of the 16 directions, which we periodically fill with pre-computed results of tracing the back half of the cone from the center of each voxel.

This data effectively represents the lighting from distant objects, which barely changes with the viewpoint. It can simply be sampled from our texture and combined with a full cone trace of the “near” part of the cone. By tuning the distance at which we switch to the “far” cone data (1-2 meters is enough for our purposes), we get a significant speedup for a slight loss in quality.

Even these optimizations did not get us where we needed to be, so we also had to reduce the amount of data we computed, both temporally and spatially.

It turns out that the human visual system is quite forgiving of latency in updates to bounced lighting. Even with a delay of 0.5-1 seconds, the images still look fine to a viewer, and the “incorrectness” of the calculation is hard to spot. Realizing this, I saw that we did not need to update every level of the cascade every frame. So instead of updating all 6 levels, we pick just one and update it each frame. The fine-detail levels are updated more often than the coarse ones: the first cascade is updated every second frame, the next every fourth frame, the next every eighth, and so on. This way, bounced lighting close to the player is updated at the required rate, while the coarse results in the distance are updated much less often.
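
One way to picture the combination is to treat the cached far result as one final sample behind the near part of the cone. The sketch below assumes scalar radiance and a far cache that stores both light and occlusion. Those details and the names are assumptions made for the example.

```python
def trace_with_far_cache(near_samples, far_light, far_occlusion):
    """Trace the near part of a cone in full, then append the cached far part.

    near_samples: (radiance, occlusion) pairs from the apex up to the switch
    distance. far_light and far_occlusion: the pre-computed result for the
    back half of the cone, read with a single texture lookup.
    """
    light = 0.0
    transmittance = 1.0
    for radiance, occlusion in near_samples:
        light += transmittance * radiance
        transmittance *= 1.0 - occlusion
    # Far light is dimmed by whatever the near part of the cone already blocked.
    light += transmittance * far_light
    transmittance *= 1.0 - far_occlusion
    return light, 1.0 - transmittance


light, occlusion = trace_with_far_cache([(0.2, 0.5)], far_light=1.0, far_occlusion=0.5)
```

Here the near sample blocks half of the far light, so the cone gathers 0.2 + 0.5 * 1.0 = 0.7 in total and ends up 75% occluded.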
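
A schedule with these rates can be derived from the frame counter alone. The sketch below is one possible way to do it, not necessarily how the engine does it: it counts the trailing 1 bits of the frame number, and gives the leftover slot to the coarsest level (an assumption), so the last two levels end up sharing the same rate.

```python
NUM_LEVELS = 6


def level_to_update(frame):
    """Pick the single cascade level to refresh on this frame.

    Level 0 is chosen every 2nd frame, level 1 every 4th, level 2 every 8th,
    and so on. The index is the number of trailing 1 bits in `frame`, capped
    at the coarsest level.
    """
    level = 0
    while frame & 1 and level < NUM_LEVELS - 1:
        frame >>= 1
        level += 1
    return level


schedule = [level_to_update(frame) for frame in range(8)]
```

Over the first eight frames this yields levels 0, 1, 0, 2, 0, 1, 0, 3, so exactly one level is refreshed per frame and the finest level gets half of all updates.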

When computing the final screen-space lighting, we can obviously gain a lot of speed for a small loss of quality by doing the screen-space traces at a lower resolution. Then, using a geometry-aware upsample with some additional correction of shading errors, we obtain the final full-resolution lighting. We found that for The Tomorrow Children we get good results tracing at 1/16 of the screen resolution (1/4 in both height and width).

The results are good because we apply this only to the lighting information. The material properties and normals of the scene are still computed in full-resolution 2D G-buffers and then combined with the lighting data per pixel. It is easy to see why this works: think of the lighting data gathered from the 16 directions around the sphere as a tiny environment map. If we move a small distance (from pixel to pixel), then, provided there are no sharp depth discontinuities, this map changes very little. It can therefore be reconstructed from low-resolution textures.

Putting all of this together, we managed to update the voxel cascades every frame in about 3 ms. The per-pixel diffuse lighting also dropped to about 3 ms, which makes the technique usable in a 30 Hz game.

With this system in place, we were able to add several other effects. We developed a variant of Screen Space Directional Occlusion that computes a visibility cone for each pixel and uses it to modulate the lighting gathered from the 16 cone tracing directions. We also added something similar for the shadows of our characters (because the characters move too fast and are too detailed to voxelize), using sphere trees derived from the characters' collision volumes to compute directional occlusion.

For particles, we created a simplified four-component version of the lighting cascade textures, which is of much lower quality but still looks good enough for lighting particles. We were also able to use this texture to create sharp reflections using accelerated ray tracing over distance fields, and to write effects such as simple subsurface scattering. Describing all of this in detail would take a long time, so if you want to know more, I recommend reading my GDC 2015 presentation, where the topic is covered in more depth.
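
A common way to do this kind of reconstruction is a depth-aware (joint bilateral) upsample. The sketch below is a generic version under stated assumptions, not the game's filter: it works on one scalar lighting value, takes the surrounding low-resolution taps as input, and uses a hypothetical `epsilon` to avoid division by zero.

```python
def upsample_light(full_res_depth, low_res_taps, epsilon=1e-3):
    """Reconstruct lighting for one full-resolution pixel.

    low_res_taps: (bilinear_weight, depth, light) for each low-resolution
    texel around the pixel, with bilinear weights summing to one. Taps whose
    depth differs from the pixel's own depth are strongly down-weighted, so
    lighting does not bleed across depth discontinuities.
    """
    weighted_light = 0.0
    total_weight = 0.0
    for bilinear_weight, depth, light in low_res_taps:
        weight = bilinear_weight / (epsilon + abs(depth - full_res_depth))
        weighted_light += weight * light
        total_weight += weight
    return weighted_light / total_weight


# On a flat surface the filter reduces to plain bilinear interpolation.
flat = upsample_light(1.0, [(0.25, 1.0, 0.0), (0.75, 1.0, 1.0)])
# At a depth edge the tap on the far surface is almost ignored.
edge = upsample_light(1.0, [(0.5, 1.0, 0.2), (0.5, 10.0, 1.0)])
```

On the flat surface the result is the bilinear value 0.75, while at the depth edge it stays close to 0.2, the lighting of the tap that lies on the same surface as the pixel.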

Result

As you can see, the result is a system that is radically different from traditional engines. It took a lot of work, but I think the results speak for themselves. By going our own way, we achieved a completely unique look for the game. We created something new that we could never have achieved with standard techniques or someone else's technology.