How to avoid jumps in response time and memory consumption when taking snapshots of the state in a DBMS in RAM

- Transfer

Remember my recent article “What is a DBMS in RAM and how does it efficiently save data” ? In it, I gave a brief overview of the mechanisms used in the DBMS in RAM to ensure the safety of data. It was about two main mechanisms: writing transactions to the log and taking snapshots of the state. I gave a general description of the principles of working with a transaction log and only touched on the topic of snapshots. Therefore, in this article I will talk about snapshots in more detail: I’ll start with the simplest way to take snapshots of the state in the DBMS in RAM, highlight several problems associated with this method, and dwell on how this mechanism is implemented in Tarantool .

So, we have a DBMS that stores all the data in RAM. As I mentioned in my previous article, to take a snapshot of the state, you need to write all this data to disk. This means that we need to go through all the tables and all the lines in each table and write all this to disk with one file via the write system call . Pretty simple at first glance. However, the problem is that the data in the database is constantly changing. Even if you freeze data structures when taking a snapshot, as a result, you can get a non-consistent database state on disk.

How to achieve a consistent state? The easiest (and coarsest) way is to pre-freeze the entire database, take a snapshot of the state and unfreeze it again. And it will work. The database can be frozen for quite some time. For example, with a data size of 256 GB and a maximum hard drive capacity of 100 MB / s, taking a picture will take 256 GB / (100 MB / s) - approximately 2560 seconds, or (again approximately) 40 minutes. The DBMS will still be able to handle read requests, but it will not be able to fulfill data change requests. "Seriously?" - you will exclaim. Let's count: with 40 minutes of downtime, say, on a day, the DBMS is fully operational 97% of the time in the best case (in fact, of course, this percentage will be lower,

What options can there be? Let's take a closer look at what is happening. We froze all the data only because it was necessary to copy them to a slow device. But what if you sacrifice memory to increase speed? The bottom line is: we copy all the data into a separate area of RAM, and then write the copy to a slow disk. This method seems to be better, but it entails at least three problems of varying severity:

- We still need to freeze all data. Suppose we copy data to a memory area at a speed of 1 GB / s (which is again too optimistic, because in fact the speed can be 200-500 MB / s for more or less advanced data structures). 256 GB / (1 GB / s) is 256 seconds, or about 4 minutes. We get 4 minutes of downtime per day, or 99.7% of the system’s availability time. This, of course, is better than 97%, but not by much.

- As soon as we copied the data to a separate buffer in RAM, we need to write it to disk. While the copy is being written, the original data in memory continues to change. Some changes need to be tracked somehow, for example, saving the transaction identifier along with the snapshot so that it is clear which transaction was in the last snapshot. There is nothing complicated about it, but nevertheless it is necessary to do it.

- The requirements for the amount of RAM are doubled. In fact, we constantly need twice as much memory as the size of the data; I emphasize: not only for taking snapshots of the state, but constantly , because you can’t just increase the amount of memory in the server, take a picture, and then pull the memory bar again.

One way to solve this problem is to use the copy-on-write mechanism (hereinafter for brevity I will use the English abbreviation COW , from copy-on-write ) provided by the fork system call. As a result of this call, a separate process is created with its virtual address space and a read-only copy of all data. A read-only copy is used because all changes occur in the parent process. So, we create a copy of the process and slowly write the data to disk. The question remains: what is the difference from the previous copy algorithm? The answer lies in the COW mechanism used by Linux. As mentioned a little above, COW is an abbreviation meaning copy-on-write, i.e. copying during recording. The essence of the mechanism is that the child process initially uses page memoryin conjunction with the parent process. As soon as one of the processes changes any data in RAM, a copy of the corresponding page is created.

Naturally, copying a page leads to an increase in response time, because, in addition to the actual copy operation, several more things happen. Typically, the page size is 4 KB. Suppose you change a small value in a database. First, there is an interruption due to the absence of a page , because after making a fork callall pages of the parent and child processes are read-only. After that, the system switches to kernel mode, selects a new page, copies 4 KB from the old page and returns to user mode again. This is a very simplified description, more about what is actually happening, you can read the link .

If the change you make affects not just one, but several pages (which is very likely when using data structures such as trees), the above sequence of events will be repeated again and again, which can significantly degrade the performance of the DBMS. At high loads, this can lead to a sharp increase in response time and even short downtime; in addition, a large number of pages will be arbitrarily updated in the parent process, as a result of which almost the entire database can be copied, which, in turn, can double the required amount of RAM. In general, if you are lucky, there will be no jumps in the response time and database downtime, if not, get ready for both; oh yes, and do not forget about double the memory consumption.

Another problem with fork is that this system call copies the page descriptor table . Say, when using 256 GB of memory, the size of this table can reach hundreds of megabytes, so your process may freeze for a second or two, which again will increase the response time.

Using fork , of course, is not a panacea, but this is so far the best we have. In fact, some popular in-memory DBMSs still use fork to take snapshots of the state - for example, Redis .

Can anything be improved here? Let's take a look at the COW mechanism. Copying takes place at 4 KB. If only one byte is changed, the whole page is copied anyway (in the case of trees, many pages, even if rebalancing is not required). But what if we implement our own COW mechanism, which will copy only actually changed sections of memory, or rather, changed values? Naturally, such an implementation will not serve as a full replacement for the system mechanism, but will only be used to take snapshots of the state.

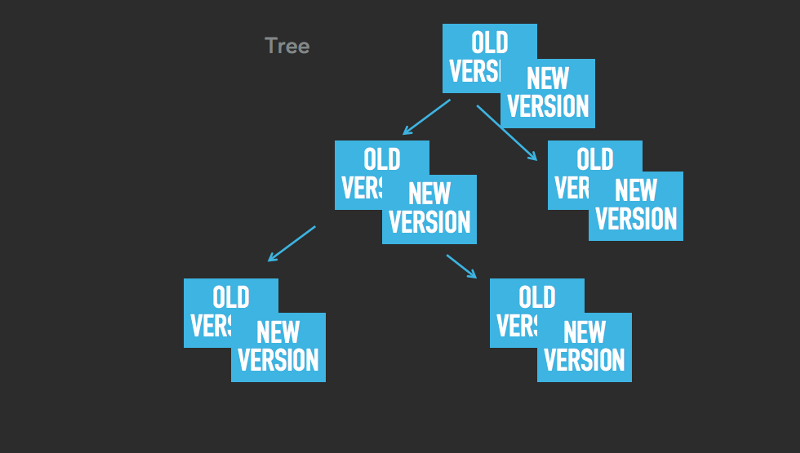

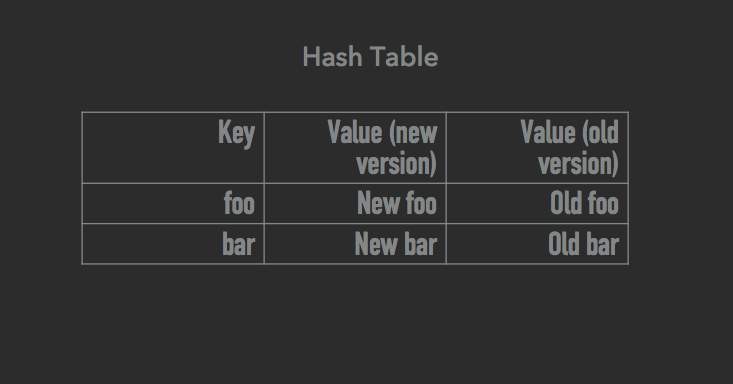

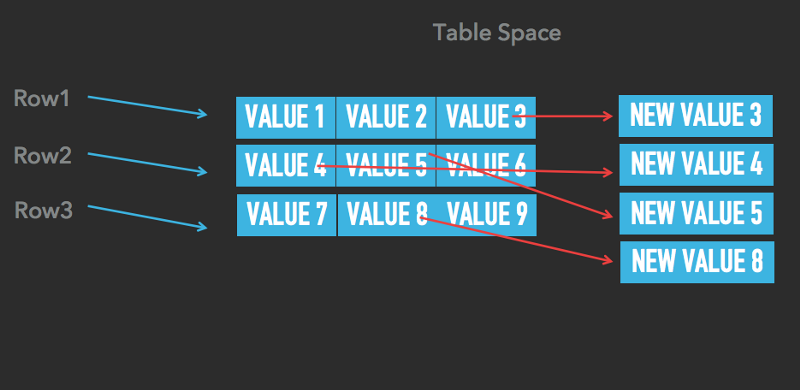

The essence of the improvement is the following: to make sure that all our data structures (trees, hash tables, table spaces) can store many versions of each element. The idea is close to multi-version concurrent access control. The difference is that here this improvement is not used for concurrent access control itself, but only for taking snapshots of the state. As soon as we started to take a snapshot, all data modification operations create a new version of the elements to be changed, while all old versions remain active and are used to create the snapshot. Take a look at the images below. This logic applies to trees, hash tables, and table spaces:

As you can see, elements can have both old and new versions. For example, in the last image in the table space, values 3, 4, 5, and 8 have two versions (the old and the corresponding new one), while the rest (1, 2, 6, 7, 9) have one each.

Changes occur only in newer versions. The older versions are used for read operations when taking a snapshot. The main difference between our implementation of the COW mechanism and the system mechanism is that we do not copy the entire 4 KB page, but only a small part of the really changed data. Say, if you update an integer four-byte number, our mechanism will create a copy of this number, and only these 4 bytes will be copied (plus a few more bytes as a fee for maintaining two versions of one element). And now for comparison, look at how the system COW behaves: 4096 bytes are copied, a page is interrupted, the context switches (each such switch is equivalent to copying about 1 KB of memory) - and all this is repeated several times.

We use our own implementation of the COW mechanism for taking snapshots in Tarantool starting from version 1.6.6 (before that we used fork ).

New articles on this topic are coming up with interesting details and graphs from working Mail.Ru Group servers. Follow the news.

All questions related to the content of the article can be addressed to the author of the original danikin , technical director of mail and cloud services Mail.Ru Group.