The QUIC protocol: the Web's transition from TCP to UDP

- Translation

The QUIC protocol (the name stands for Quick UDP Internet Connections) is a completely new way of transmitting information on the Internet, built on top of UDP instead of the generally accepted TCP. Some people jokingly call it TCP/2. The move to UDP is the most interesting and powerful feature of the protocol, and several of its other features follow from it.

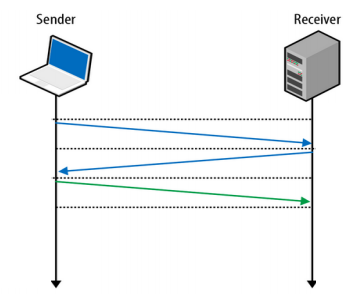

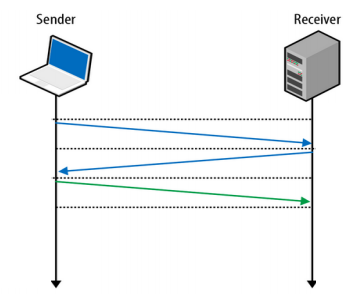

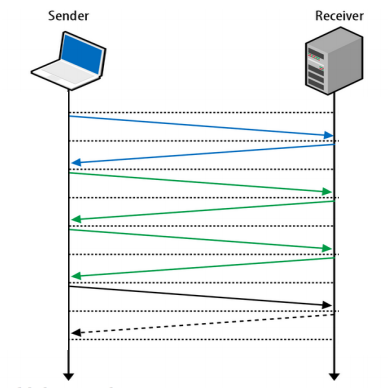

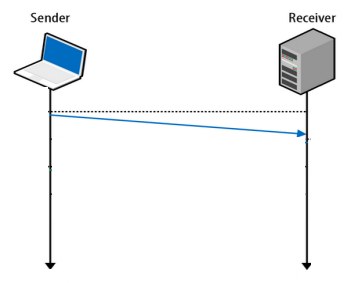

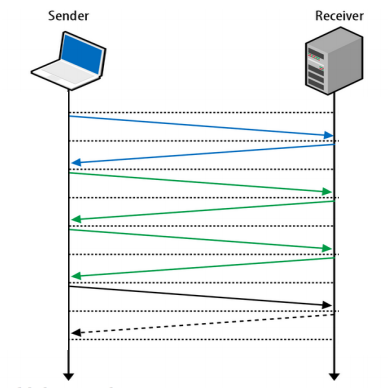

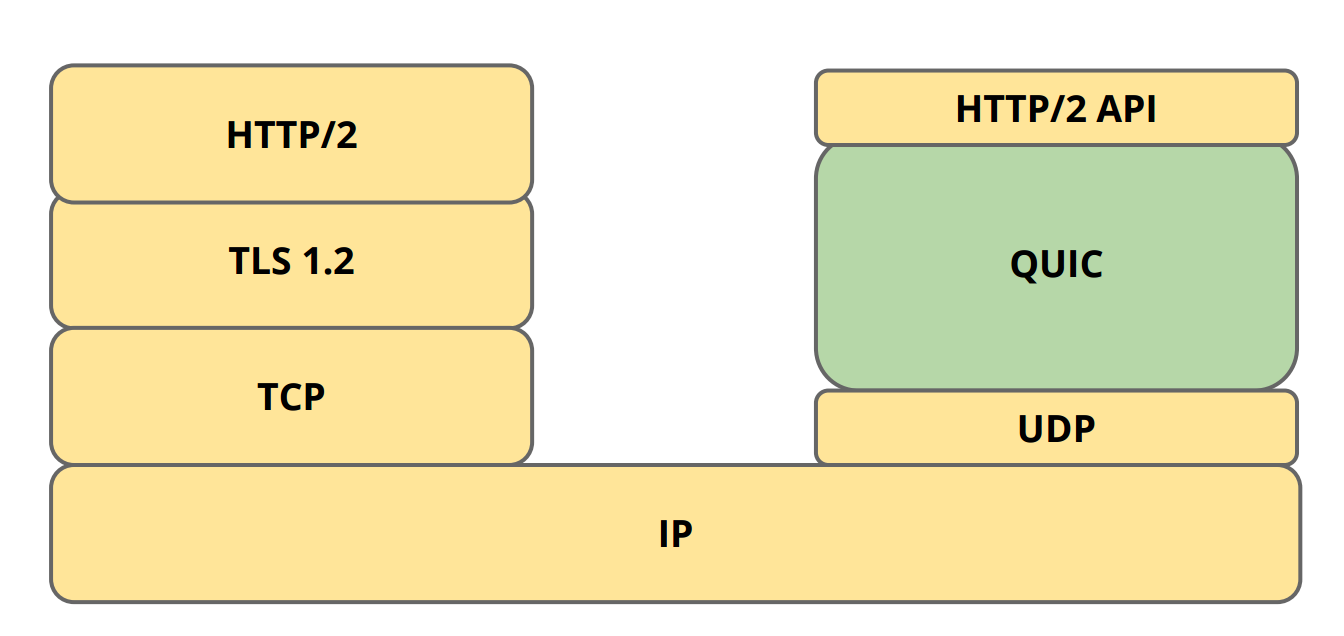

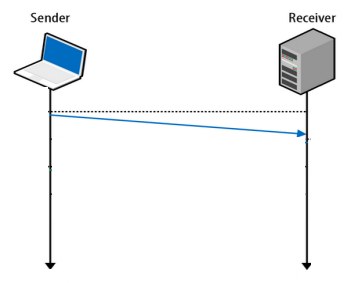

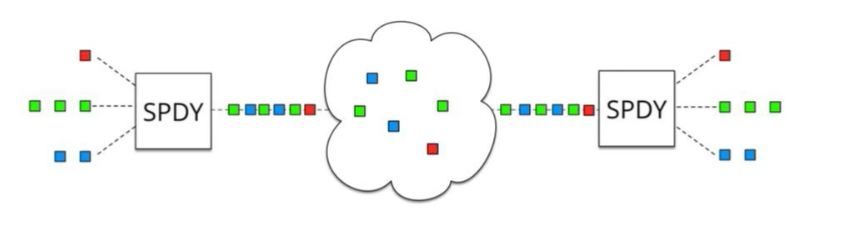

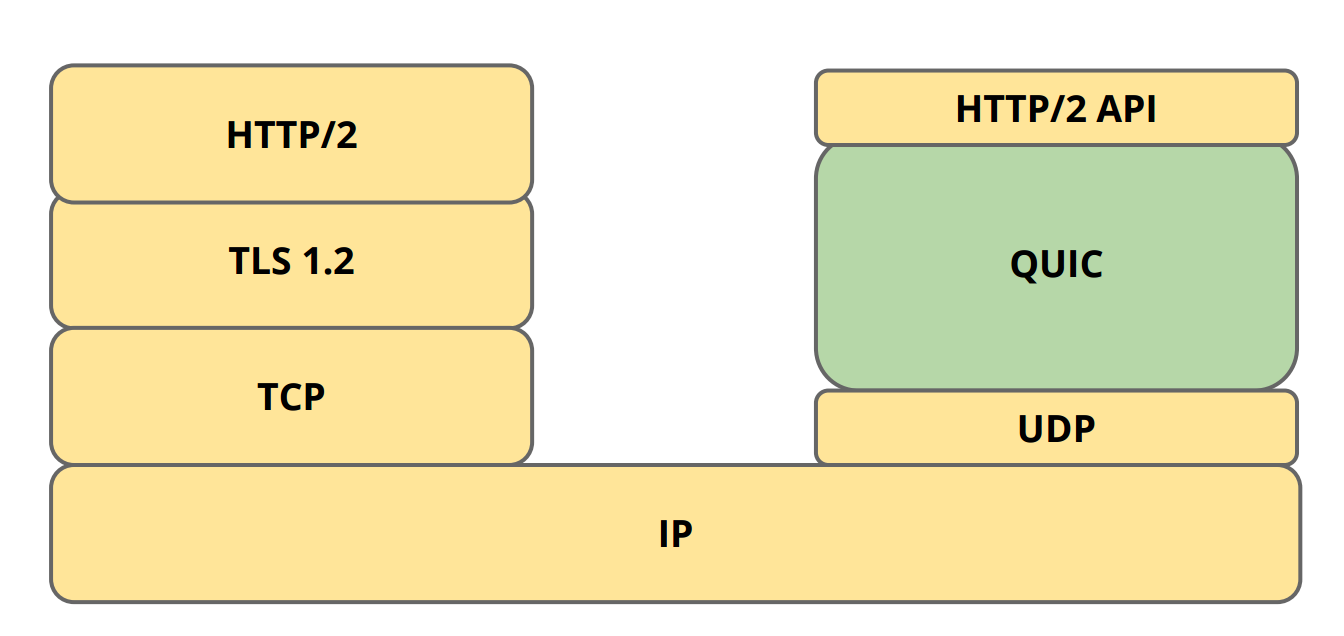

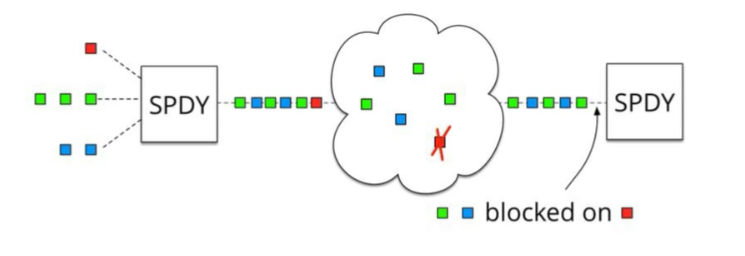

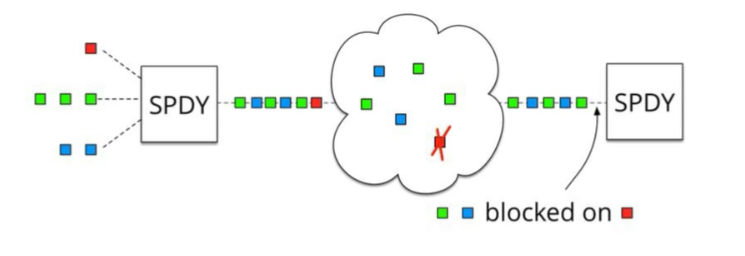

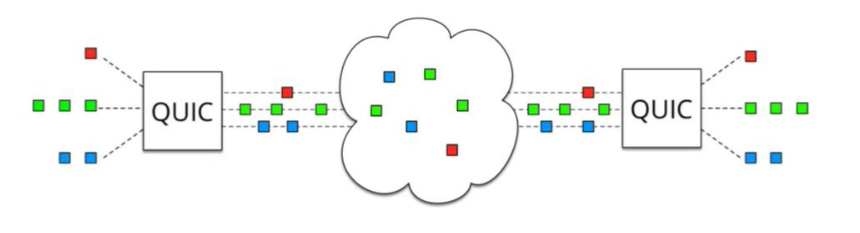

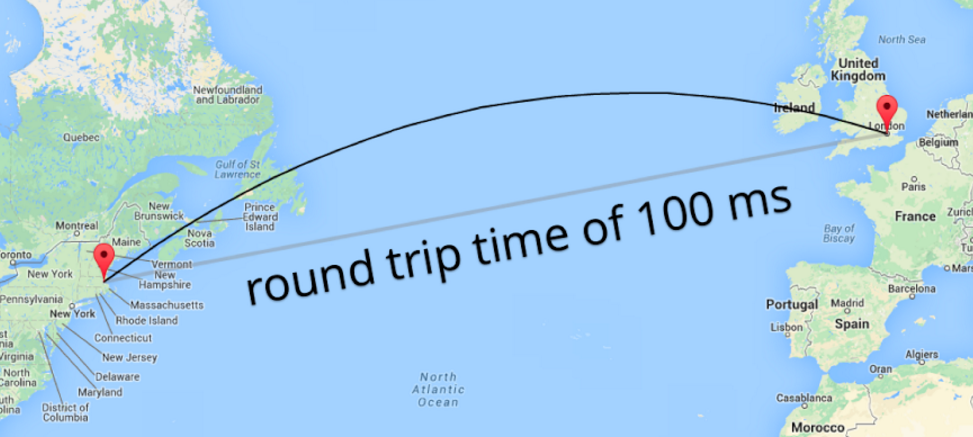

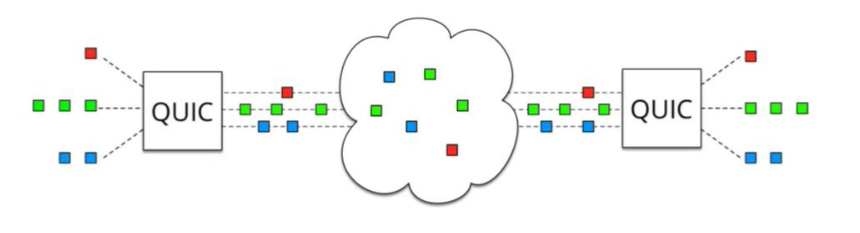

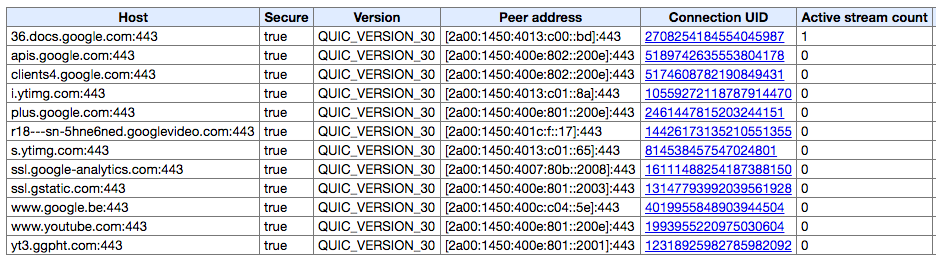

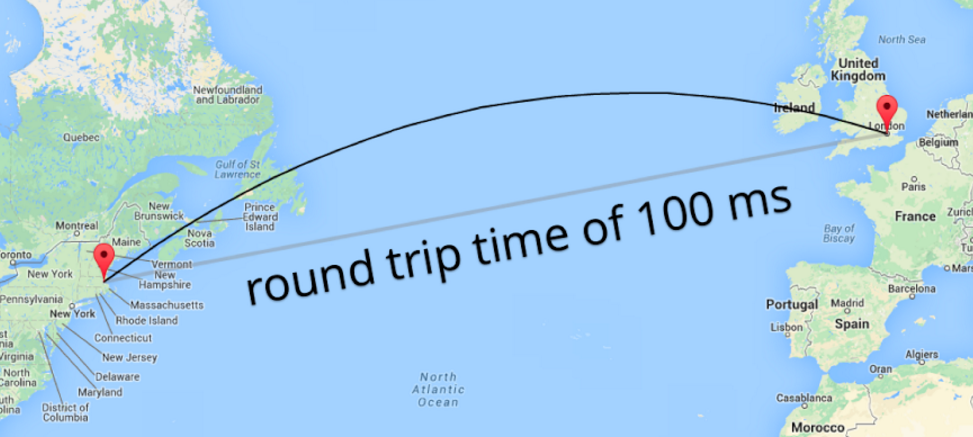

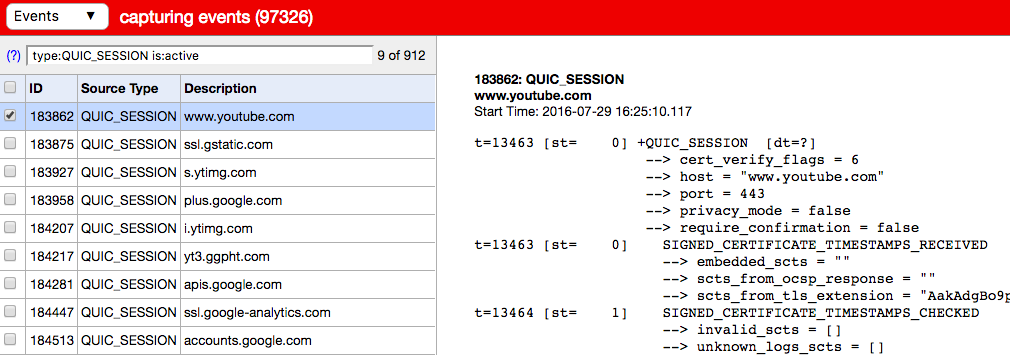

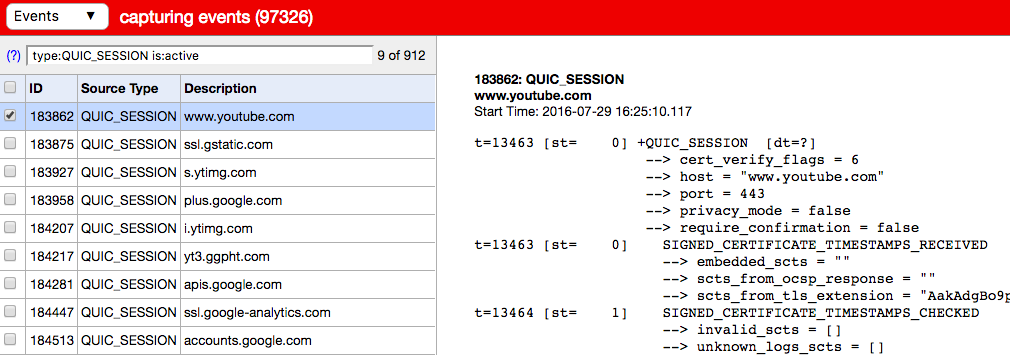

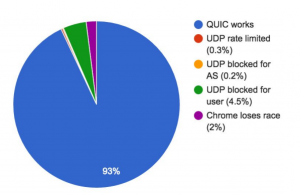

Today's Web is built on TCP, which was chosen for its reliability and guaranteed packet delivery. Opening a TCP connection requires the so-called three-way handshake, which means extra send/receive cycles for every new connection and therefore extra latency.

If you want to establish a secure TLS connection, even more packets have to be exchanged.

Some innovations, such as TCP Fast Open, improve parts of this picture, but the technology is not yet widely deployed.

UDP, on the other hand, is built on the idea of "send a packet and forget about it." A message sent over UDP will probably reach the recipient, but delivery is not guaranteed. The obvious advantage is the shorter connection setup time (there is no handshake to wait for); the equally obvious drawback is that neither delivery nor the order in which packets arrive is guaranteed. To get reliability, you have to build a mechanism on top of UDP that guarantees packet delivery.
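The "send and forget" behaviour can be seen with a few lines of Python. This is only a minimal sketch over the loopback interface; the function name `udp_roundtrip` and the 2-second timeout are assumptions made for the illustration, and a real network could drop or reorder the datagram.

```python
import socket


def udp_roundtrip(payload: bytes) -> bytes:
    """Send one datagram over loopback and return what the receiver got."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        receiver.settimeout(2.0)         # delivery is not guaranteed, so never wait forever
        # No connect() and no handshake: the very first packet already carries data.
        sender.sendto(payload, receiver.getsockname())
        data, _addr = receiver.recvfrom(2048)
        return data
    finally:
        sender.close()
        receiver.close()


if __name__ == "__main__":
    print(udp_roundtrip(b"hello over UDP"))
```

Note that nothing in this code tells the sender whether the datagram arrived. Acknowledgements, retransmission and ordering are exactly what a protocol layered on UDP has to add itself.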

And here comes QUIC from Google.

The QUIC protocol can open a connection and negotiate all TLS parameters (HTTPS) in 1 or 2 packets, depending on whether the connection is to a new server or to one the client already knows.

This dramatically speeds up opening the connection and starting to load data.

Why do you need QUIC?

The plans of the QUIC protocol development team look very ambitious: the protocol will try to combine UDP speed with TCP reliability.

Here's what Wikipedia writes about it:

Improving the TCP protocol is a long-term goal for Google, and QUIC is designed to be the equivalent of an independent TCP connection, but with reduced latency and SPDY-enhanced multiplexing support. If QUIC shows its effectiveness, then these features may be included in the next version of the TCP and TLS protocols (the development of which takes more time).

There is an important point in this quote: if QUIC proves effective, there is a chance that the ideas tested in it will become part of the next version of TCP.

TCP is thoroughly standardized. Its implementations live in the Windows and Linux kernels, in every mobile OS, and in many simpler devices. Improving TCP is not easy, because all of these implementations have to support the change.

UDP is a relatively simple protocol. It is much faster to develop a new protocol on top of UDP in order to test theoretical ideas: behavior in congested networks, handling of streams blocked by a lost packet, and so on. Once these points are clarified, work can begin on porting the best parts of QUIC to the next version of TCP.

Where is QUIC's place today?

If you look at the layers that make up a modern HTTPS connection, you will see that QUIC replaces the entire TLS stack and part of HTTP/2.

Yes, the QUIC protocol implements its own crypto layer, which avoids the use of TLS 1.2.

On top of QUIC sits a thin HTTP/2 API layer used to communicate with remote servers. It is smaller than a full HTTP/2 implementation, since multiplexing and connection setup are already implemented in QUIC. All that remains is the implementation of the HTTP protocol itself.

Head-of-line blocking

The SPDY and HTTP/2 protocols use a single TCP connection to the server instead of several separate connections. This single connection carries independent requests and the responses for individual resources.

Since all data exchange now runs over one TCP connection, we automatically get one drawback: head-of-line blocking. TCP requires packets to be processed in the correct order. If a packet is lost on the way to or from the server, it must be resent. Meanwhile the TCP connection has to wait (block), and only once the lost packet has been received again does processing of the queued packets continue. That is the only way to preserve the correct processing order.

The QUIC protocol solves this problem at the root by abandoning TCP in favor of UDP, which does not impose any processing order on received packets. Packet loss is of course still possible, but it only affects the resources (individual HTML/CSS/JS files) to which the lost packet belongs.

QUIC very elegantly combines the best part of SPDY and HTTP/2 (multiplexing) with a non-blocking transport protocol.
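The difference can be shown with a toy model. The sketch below is not how any real stack is implemented; the packet layout `(connection sequence number, resource, per-resource offset)` and both function names are assumptions chosen for the illustration. It asks one question: if a single packet is lost, which of the already received packets can be handed to the application right away?

```python
# Each packet: (connection-wide sequence number, resource name, offset within that resource)
PACKETS = [
    (0, "html", 0), (1, "css", 0), (2, "js", 0),
    (3, "html", 1), (4, "css", 1), (5, "js", 1),
]


def deliverable_single_ordered_stream(packets, lost_seq):
    """TCP-like: one ordered stream, so everything behind the lost packet waits."""
    return [p for p in packets if p[0] < lost_seq]


def deliverable_per_stream(packets, lost_seq):
    """QUIC-like: ordering is kept per resource, so only the affected resource stalls."""
    _, lost_stream, lost_offset = next(p for p in packets if p[0] == lost_seq)
    return [
        p for p in packets
        if p[0] != lost_seq and not (p[1] == lost_stream and p[2] > lost_offset)
    ]


if __name__ == "__main__":
    print("TCP-like :", deliverable_single_ordered_stream(PACKETS, lost_seq=1))
    print("QUIC-like:", deliverable_per_stream(PACKETS, lost_seq=1))
```

With packet 1 (the first piece of the CSS file) lost, the single ordered stream can release only packet 0, while the per-resource model still delivers both HTML packets and both JS packets and holds back only the rest of the CSS.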

Why reducing the number of packets exchanged is so important

If you have a fast Internet connection, packet delays between your computer and a remote server are around 10-50 ms. Every packet you send reaches the server after that much time. At this order of magnitude the advantages of QUIC may not be obvious. But once we consider a server on another continent, or a mobile network, delays are already on the order of 100-150 ms.

As a result, on a mobile device accessing a faraway server, the difference between 4 TCP + TLS packets and one QUIC packet can be about 300 ms, which is already noticeable to the naked eye.
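The 300 ms figure follows from simple arithmetic, sketched below. The function names are made up for the example, and the model assumes that each setup packet has to wait for the previous one, so the delays simply add up.

```python
def setup_delay_ms(packets_before_data: int, one_way_delay_ms: float) -> float:
    """Time spent on connection setup when each packet waits for the previous one."""
    return packets_before_data * one_way_delay_ms


def quic_saving_ms(one_way_delay_ms: float, tcp_tls_packets: int = 4, quic_packets: int = 1) -> float:
    """Difference between the TCP + TLS setup and the QUIC setup for a given delay."""
    return (setup_delay_ms(tcp_tls_packets, one_way_delay_ms)
            - setup_delay_ms(quic_packets, one_way_delay_ms))


if __name__ == "__main__":
    for delay in (20, 100, 150):
        print(f"{delay} ms per packet -> QUIC saves {quic_saving_ms(delay)} ms")
```

At 100 ms per packet the saving is (4 - 1) x 100 = 300 ms, the number quoted above. At 20 ms it shrinks to 60 ms, which is why the benefit is hard to notice on a fast connection.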

Forward Error Correction

An elegant feature of the QUIC protocol is Forward Error Correction (FEC). Each packet sent carries some data from other packets, which makes it possible to reconstruct any lost packet from the data in its neighbors, without requesting a retransmission and waiting for it. This is essentially RAID 5 at the network level.

But you can already see the drawback of this solution: every packet gets a little larger. The current implementation sets this overhead to 10%. In other words, by making every packet 10% larger, we gain the ability to recover data without retransmission as long as no more than one packet in ten is lost.

This redundancy trades network bandwidth for lower latency. That seems reasonable: connection speeds and channel bandwidth keep growing, but the hundred milliseconds it takes data to reach the other side of the planet is unlikely to change without a fundamental revolution in physics.
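The RAID 5 comparison suggests XOR parity, and that is what the sketch below uses. It is a simplification and an assumption, not QUIC's actual wire format: instead of spreading redundancy across every packet, it adds one separate parity packet per group of equal-length packets. With groups of ten this gives roughly the 10% overhead mentioned above.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: List[bytes]) -> bytes:
    """Parity packet: XOR of every packet in the group."""
    return reduce(xor_bytes, packets)


def recover(received: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one missing packet (marked None) from the rest plus parity."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("XOR parity can repair only one loss per group")
    repaired = list(received)
    if missing:
        present = [p for p in received if p is not None]
        # XOR of the parity with all surviving packets leaves exactly the lost one.
        repaired[missing[0]] = reduce(xor_bytes, present, parity)
    return repaired


if __name__ == "__main__":
    group = [bytes([i]) * 4 for i in range(10)]  # ten 4-byte packets
    parity = make_parity(group)
    damaged = list(group)
    damaged[3] = None                            # packet 3 is lost in transit
    print(recover(damaged, parity) == group)
```

The limit of the scheme is visible in `recover`: one loss per group is repaired without any retransmission, while two losses in the same group still require asking the sender again.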

Session Resume and Parallel Downloads

Another interesting consequence of using UDP is that you are no longer tied to specific IP addresses. In TCP, a connection is defined by four parameters: the server and client IP addresses and the server and client ports. On Linux, you can see these parameters for each established connection with the netstat command:

    $ netstat -anlp | grep ':443'
    ...
    tcp6 0 0 2a03:a800:a1:1952::f:443 2604:a580:2:1::7:57940 TIME_WAIT -
    tcp 0 0 31.193.180.217:443 81.82.98.95:59355 TIME_WAIT -
    ...

If any of these four parameters has to change, a new TCP connection must be opened. That is why it is hard to keep a stable connection on mobile devices when switching between WiFi and 3G/LTE.

With QUIC running over UDP, this set of four parameters no longer defines the connection. QUIC introduces a connection identifier called the Connection UUID. It becomes possible to switch from WiFi to LTE while keeping the Connection UUID, thus avoiding the cost of re-creating the connection. Mosh Shell works in a similar way, keeping an SSH connection alive when the IP address changes.
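The two ways of identifying a connection can be compared in a small sketch. Everything here is hypothetical: the class `QuicLikeServer`, the identifier value and the addresses (taken from the documentation ranges) exist only for the illustration, and a real server would also have to verify that a packet from a new address is legitimate.

```python
def tcp_key(client_ip, client_port, server_ip, server_port):
    """TCP-style identity: the four-tuple itself is the connection."""
    return (client_ip, client_port, server_ip, server_port)


class QuicLikeServer:
    """Looks sessions up by connection ID instead of by address."""

    def __init__(self):
        self.sessions = {}  # connection ID -> session state

    def on_packet(self, connection_id, source_addr, payload):
        session = self.sessions.setdefault(connection_id, {"addr": source_addr, "bytes": 0})
        session["addr"] = source_addr   # the client may have moved to another network
        session["bytes"] += len(payload)
        return session


if __name__ == "__main__":
    wifi = ("192.0.2.10", 59355)     # client address on WiFi
    lte = ("198.51.100.7", 41000)    # same client after switching to LTE

    # TCP: a different client address means a different key, hence a new connection.
    print(tcp_key(*wifi, "203.0.113.1", 443) == tcp_key(*lte, "203.0.113.1", 443))

    # Connection ID: the same session continues across the address change.
    server = QuicLikeServer()
    first = server.on_packet("hypothetical-id-42", wifi, b"GET /")
    second = server.on_packet("hypothetical-id-42", lte, b"more data")
    print(first is second, second["bytes"], second["addr"])
```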

This approach also opens the door to fetching content over several paths at once. If the Connection UUID lets us switch from WiFi to a mobile network, then in theory we can use both at the same time and receive data in parallel. More communication channels mean more bandwidth.

Practical Implementations of QUIC

The Chrome browser has had experimental QUIC support since 2014. If you want to test QUIC, you can enable it in Chrome and try it with the Google services that support it. This is a strong advantage for Google: the ability to combine its own browser with its own web properties. By enabling QUIC in the world's most popular browser (Chrome) and on heavily loaded sites (Youtube.com, Google.com), they can collect large, clear statistics on the use of the protocol, which will reveal all the significant problems with using QUIC in practice.

There is a Chrome extension that shows, as an icon, whether the server supports the HTTP/2 and QUIC protocols.

You can also see open QUIC connections right now by opening the chrome://net-internals/#quic tab (note the Connection UUID parameter in the table, mentioned earlier).

You can go even further and see all open connections and all the packets transferred over them: chrome://net-internals/#events&q=type:QUIC_SESSION%20is:active.

What about firewalls?

If you are a sysadmin or network engineer, you may have jerked a bit when you heard that QUIC uses UDP instead of TCP. Yes, you probably have your own reasons. It is possible for you (as, for example, in our company), the settings for accessing the web server look something like this:

The most important thing here, of course, is the protocol column, which clearly says “TCP”. Similar settings are used by thousands of web servers around the world, as they are reasonable. 80 and 443 ports, only TCP - and nothing more should be allowed on the production-web server. No UDP.

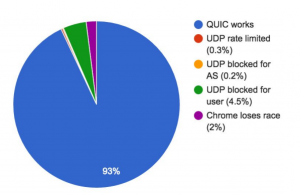

Well, if we want to use QUIC, we will have to allow UDP connections to port 443. In large enterprise networks this can be a problem. As Google's statistics show, UDP is blocked in some places:

These figures were obtained in a recent study in Sweden. A few key points:

- Since QUIC was tested only with Google services, we can assume that no failures were caused by a misconfigured firewall on the server side.

- The numbers reflect the success of outgoing requests from users to UDP port 443.

- QUIC can be disabled in Chrome for various reasons. I bet that in some enterprise environments it was turned off proactively, just in case.

- Since the QUIC protocol uses encryption by default, we only need to worry about access to port 443; whether port 80 is reachable should make no difference.

The advantage of encryption by default is that Deep Packet Inspection tools cannot decrypt the encrypted data or modify it. They see a binary stream and (I want to believe) simply let it through.
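The rule change discussed in this section can be modelled as a plain allow-list. This is only an illustration in Python, not the syntax of any real firewall; the names `ALLOW_RULES`, `is_allowed` and `with_quic` are assumptions made for the example.

```python
# Typical production web server policy from the text: TCP 80 and 443, nothing else.
ALLOW_RULES = [("tcp", 80), ("tcp", 443)]


def is_allowed(rules, protocol, port):
    """Return True if the (protocol, port) pair is explicitly allowed."""
    return (protocol.lower(), port) in rules


def with_quic(rules):
    """Return a copy of the rules that also admits QUIC traffic on UDP port 443."""
    extra = ("udp", 443)
    return list(rules) if extra in rules else list(rules) + [extra]


if __name__ == "__main__":
    print(is_allowed(ALLOW_RULES, "udp", 443))             # QUIC is blocked by the TCP-only policy
    print(is_allowed(with_quic(ALLOW_RULES), "udp", 443))  # and passes after the change
    print(is_allowed(with_quic(ALLOW_RULES), "udp", 80))   # port 80 is irrelevant for QUIC
```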

Using QUIC on the server side

QUIC is currently supported by the Caddy web server (since version 0.9). Both the client and the server implementations of QUIC are still experimental, so be careful about using QUIC in production. Since no one has QUIC enabled by default, it is probably safe to enable it on your server and experiment with your own browser (Update: since version 52, QUIC is enabled by default in Chrome).

QUIC performance

In 2015, Google published some QUIC performance measurements.

As expected, QUIC outshines classic TCP on poor connections, shaving half a second off the load time of the www.google.com start page for the slowest 1% of connections. The gain is even more noticeable on video services like YouTube: users reported 30% fewer buffering delays when watching videos over QUIC.

The YouTube statistics are especially interesting. If improvements of this scale are really possible, we will see very rapid adoption of QUIC, at least among video services like Vimeo, as well as in the "adult video" market.

Conclusions

Personally, I find the QUIC protocol absolutely charming! The huge amount of work done by its developers was not in vain: the mere fact that the largest sites on the Internet already support QUIC today is a little overwhelming. I can’t wait for the final QUIC specification and its subsequent implementation by all browsers and web servers.

Comment on the article from Jim Roskind, one of the developers of QUIC

I spent many years researching, designing and implementing the QUIC protocol, and I would like to add a few thoughts to the article. The text correctly notes that QUIC is probably unavailable to some users because of strict corporate policies regarding UDP. This is why we saw an average protocol availability of 93%.

If we look back a little, we will see that not so long ago corporate networks often blocked even outgoing traffic to port 80, on the grounds that “this will reduce the amount of time that employees spend surfing to the detriment of work”. Later, as you know, the benefits of access to websites (including for work purposes) forced most corporations to revise their rules and allow Internet access from an ordinary employee's workplace. I expect something similar with QUIC: as soon as it becomes clear that connections over the new protocol are faster and tasks get done sooner, it will make its way into the enterprise.

I hope that QUIC will replace TCP on a massive scale, in addition to contributing a number of ideas to the next version of TCP. The point is that TCP is implemented in operating system kernels and in hardware, which means that adoption of a new version can take 5-15 years, while QUIC can be implemented on top of the publicly available and universally supported UDP, in a single product or service, in just a few weeks or months.

More information on the topic: