How ABBYY Technologies Help Improve Data Leak Detection Systems

Today we will describe how the ABBYY FineReader Engine SDK was integrated into one such data leak detection system, SearchInform Information Security Circuit, and what came of it.

We assume that not all readers of the ABBYY blog are well versed in information security, so we will first briefly describe how the Information Security Circuit works. If you are a regular of the Information Security hub and are familiar with the principles of DLP systems, you can skip this section.

How DLP works

The SearchInform Information Security Circuit is designed to control information flows within the local area network. Control is possible in two ways, depending on the server component used: at the network level or on users' workstations. Server components are platforms on which data interception modules run. Each interception module acts as a traffic analyzer and controls its own data channel.

The first component intercepts and, if necessary, blocks information flows at the network level. It works with mirrored traffic, proxy servers, mail servers and other corporate software, for example, Lync. Network traffic is intercepted at the level of network protocols (mail, Internet, instant messengers, FTP, cloud storage). The second component intercepts and blocks information using agents installed on employees' computers. The agents control Internet traffic, corporate and personal e-mail, all popular instant messengers (Viber, ICQ, etc.), Skype, cloud storage, FTP, SharePoint, printing of documents and the use of external storage devices. They also monitor the file system, the activity of processes and sites, the information displayed on PC monitors and captured by microphones, and keystrokes.

The system can also index documents “at rest”, on user workstations or network devices, as well as textual information from any source that has an API or supports connection via ODBC.

The system searches for confidential information that should not be leaked in several ways: by keywords, taking morphology and synonyms into account; by phrases, taking into account word order and the distance between words; and by document attributes (format, name of the sender or recipient, etc.). The analysis algorithms are sensitive enough to find even a heavily modified document if it is close in meaning or content to the reference document (the “standard”).
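
To make the idea of phrase search "taking into account word order and distance" more concrete, here is a minimal sketch in Python. It is not SearchInform's algorithm: the function names and the `max_gap` parameter are assumptions made for illustration, and morphology and synonyms are deliberately left out.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def phrase_matches(text, phrase, max_gap=2):
    """Return True if the words of `phrase` occur in `text` in the same order,
    with at most `max_gap` other words between consecutive phrase words.

    Hypothetical helper for illustration only (no morphology, no synonyms).
    """
    tokens = tokenize(text)
    words = tokenize(phrase)
    if not words:
        return False

    def search(start, word_index):
        # Every phrase word has been matched.
        if word_index == len(words):
            return True
        # The first word may appear anywhere; each later word must appear
        # within `max_gap` tokens of the previously matched word.
        if word_index == 0:
            end = len(tokens)
        else:
            end = min(len(tokens), start + max_gap + 1)
        for pos in range(start, end):
            if tokens[pos] == words[word_index] and search(pos + 1, word_index + 1):
                return True
        return False

    return search(0, 0)
```

For instance, `phrase_matches("The passport was issued by the department", "passport issued", max_gap=1)` matches, while the same phrase does not match the text "issued a passport" because the word order is reversed.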

You can define security policies in the system and monitor compliance with them. The DLP system also collects statistics and generates reports on security policy violations.

The system architecture, for those interested, is well described in this review, so we will not repeat it here.

Most DLP tasks are solved by text analysis. However, many companies store large numbers of document scans (images) and transmit them through various channels. If an image is left “as is”, the DLP system cannot work with it.

For the system to understand what information an image contains and whether it may be stored or transmitted in a particular way, the image must be recognized and its text extracted. The input images vary widely, and so does their quality. If a blurry picture is intercepted, the system has to work with what it has; it cannot ask the user to send the picture again in higher quality.

SearchInform DLP had an OCR engine before, but it had serious flaws in both recognition quality and speed. Developing a proprietary engine did not make sense for SearchInform, so the company started looking for ready-made technologies. Now ABBYY technologies can be used as the full-text recognition engine in the SearchServer module.

How FineReader Engine was integrated into the system

The development was divided into two tasks. First, ABBYY technology had to be integrated seamlessly to create a user-friendly recognition module for the DLP system. Second, ABBYY document recognition and classification technologies had to be adapted to applied tasks.

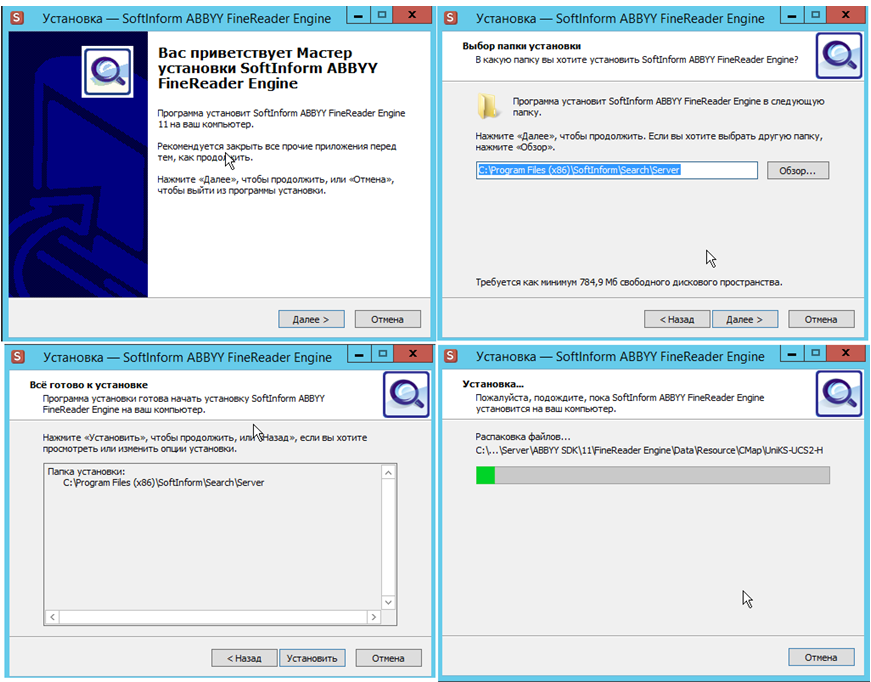

The first task was solved quickly by means of a dedicated pre-configured installer in the DLP system. Deploying the OCR module based on ABBYY technology boils down to installing an additional component in the “Next, Next” style and ticking the corresponding checkbox in the DLP system. The component is installed on the DLP server, so no configuration is needed to connect DLP and OCR, and the user does not need to do anything else.

FineReader Engine is enabled through the DLP interface in one click, by selecting the appropriate item from a drop-down list. More detailed settings are available in the same place if needed.

Moreover, the user does not need to deal with ABBYY directly: FineReader Engine is licensed by SearchInform as part of the DLP license. The implementation turned out to be genuinely user-friendly, and SearchInform clients liked it.

The second task, adapting ABBYY technology to applied tasks, required much more work.

When SearchInform programmers began to study the technology, they discovered many capabilities that are potentially useful for solving information security problems. Everyone is used to thinking of ABBYY as, first of all, OCR. But FineReader Engine can also classify documents by their appearance and content. To set up classification, you first specify the categories into which documents will be sorted (for example, “Passport”, “Agreement”, “Receipt”). Then the program needs to be trained: you “show” it documents that belong to each class, in all possible design variants.

How the classifier works in the FineReader Engine

For this mechanism to work, the classifier must first be trained. You assemble a small database of documents representing each category you want to identify and train the classifier on it. Then you take another database and check the classifier's performance on it. If the results suit you, you can put the classifier into production.

During both training and operation, the classifier uses a set of features that help separate documents of one type from documents of another. All features can be divided into graphic and text features.

Graphic features work well for groups of documents that look very different from each other. Roughly speaking, if you can tell what type a document is by looking at it from a distance at which the text is unreadable, graphic features will work well.

For example, graphic features separate continuous text from non-continuous text well, such as letters from payment receipts. They take into account the size of the image, the density of colors in its different parts, and other characteristic elements such as vertical and horizontal lines.

If the documents look alike, or one group cannot be separated from another without reading the text, text features help. They are very similar to those used in spam filters and use characteristic words to determine whether a document belongs to a particular type. Text features are convenient for separating letters from agreements, or receipts from business cards.

Text features also help separate documents of a similar type that differ in the value of one or more fields. For example, receipts from McDonald's and Teremok look very similar, but if you treat them as text, the differences are very noticeable.

As a result, for each training sample the classifier gives more weight to those text or graphic features that best separate the documents in that sample by type.
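
Since text features are compared here to those used in spam filters, a tiny word-based classifier in that spirit may help illustrate the idea. The sketch below is a plain naive Bayes classifier with add-one smoothing written in Python. It is an assumption chosen for illustration and does not describe the actual algorithm inside FineReader Engine; the class and method names are hypothetical.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


class WordClassifier:
    """Naive Bayes over word counts, the classic spam-filter approach.

    Illustration only: not the FineReader Engine classifier.
    """

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # category -> word frequencies
        self.doc_counts = Counter()              # category -> number of training documents
        self.vocabulary = set()

    def train(self, category, text):
        words = tokenize(text)
        self.word_counts[category].update(words)
        self.doc_counts[category] += 1
        self.vocabulary.update(words)

    def classify(self, text):
        """Return the most probable category, or None if nothing was trained."""
        words = tokenize(text)
        total_docs = sum(self.doc_counts.values())
        best_category, best_score = None, -math.inf
        for category, n_docs in self.doc_counts.items():
            counts = self.word_counts[category]
            total_words = sum(counts.values())
            # Log prior plus log likelihood of each word (add-one smoothing).
            score = math.log(n_docs / total_docs)
            for word in words:
                score += math.log((counts[word] + 1) / (total_words + len(self.vocabulary)))
            if score > best_score:
                best_category, best_score = category, score
        return best_category
```

Words that are frequent in one category and rare in the others dominate the score, which is the same effect as the classifier "giving more weight" to the features that separate the training documents best.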

How classification is implemented in the DLP + ABBYY bundle

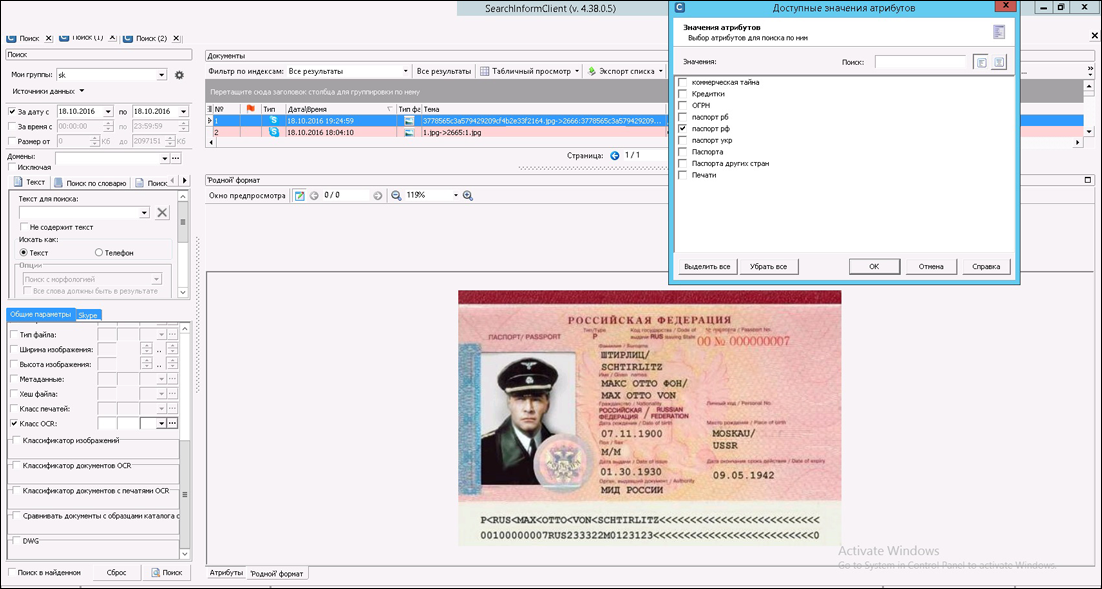

SearchInform developers predefined several document categories that users are most likely to need first: different types of passports and bank cards.

Categorization of passports and credit cards starts working without any training, almost immediately after FineReader Engine is deployed. The user only needs to turn on the classifier in the settings, and all new documents will be sorted into categories. To classify documents from the archive, you just need to rerun the check on them.

However, users can also create additional categories. They can define any classes (categories) of their own or add their documents to the existing ones (passports and credit cards). To do this, they create subfolders named after the classes in a special folder, put reference documents (“standards”) there and start the training process. This procedure is usually performed together with SearchInform engineers, who explain and demonstrate the requirements for images, help the customer build a database of reference documents, and advise which documents are best suited as references for a particular category. Immediately after setup, the classifier is checked on real data. Often the check shows that a slight adjustment of the settings or reference documents is needed. The whole procedure usually takes half an hour to an hour.
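
The folder convention described above (one subfolder per class, with sample documents inside) can be sketched as follows. This is only an illustration in Python of how such a layout maps to a training set: the function name, the list of image extensions and the folder names in the usage note are assumptions, not the actual SearchInform or ABBYY conventions.

```python
from pathlib import Path

# Assumed set of image formats; the real list of supported formats may differ.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".tif", ".tiff", ".bmp"}


def collect_training_set(root):
    """Map each class name (the subfolder name) to a sorted list of its sample images.

    Subfolders without any images are skipped, because a class with no
    samples cannot be trained.
    """
    training_set = {}
    for class_dir in sorted(Path(root).iterdir()):
        if not class_dir.is_dir():
            continue
        samples = sorted(
            path for path in class_dir.iterdir()
            if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
        )
        if samples:
            training_set[class_dir.name] = samples
    return training_set
```

With a hypothetical layout such as `standards/Passport/1.png` and `standards/Agreement/a.jpg`, calling `collect_training_set("standards")` yields one entry per class, keyed by the subfolder name.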

Classification accuracy depends on how well the system can “see” the difference between documents of different categories. If you train two different categories on documents that look alike and contain many identical keywords, the technology will start to confuse them and classification accuracy will drop.

For example, when detecting scans of only the main page of passports, SearchInform engineers encountered a large number of false positives. In an archive of 10,000 images, the ABBYY algorithm found 300 pictures that looked like passports, while there were actually only a few passports.

For arbitrary categories this level of relevance may be acceptable, but for key objects such as credit cards the error is too large. To improve relevance, SearchInform engineers developed their own validation algorithms, which are applied to the ABBYY classification results. A separate validator was created for each data category and works only with its own group.

How the DLP + ABBYY system works with images

Data processing in the DLP system is handled by a special component, SearchServer. When this component finds an image in the intercepted data (whether it is part of a document or a separate image), it passes the image to the FineReader Engine module, which performs optical recognition and classifies the image into one of the specified categories. Along with the category, FineReader Engine also returns the “percentage of similarity” of the image to the corresponding class: for example, image No. 1 looks like a passport by 83%, and image No. 2 looks like a driver's license by 35%. The result is passed back to SearchServer. SearchServer has a special parameter, which for simplicity we will call the “minimum possible percentage”; by default it is 40%. If FineReader Engine has assigned a percentage below this value, the class is removed and the document continues through the DLP system without a class. Otherwise, there are two possible cases:

1. The SearchInform validator works for passports and credit cards. It checks the document against both the recognized text and the graphic component. For passports, for example, it determines whether there is a face in the image, where it is located and how much space it occupies, and whether the image contains graphic elements inherent only to passports (for the Russian Federation, a special pattern) and other characteristic elements. The more “markers” are confirmed, the higher the final “multiplier”. For example, the FineReader Engine classifier is 63% sure that the image is a passport, and the validator has found the keywords “Passport of the Russian Federation”, “Last Name” and “Issued By” in it, as well as a photo in which a face is detected. In this case the multiplier is, say, 1.5, and the final relevance is 63% × 1.5 = 94.5%.

With this post-check, relevance has grown significantly while performance has remained the same. The ABBYY algorithm is fast and is applied to large data arrays, which speeds up the program several times. The SearchInform category validator is slower, but thanks to the ABBYY classification it is used only when necessary and is applied to a much smaller data array.

The difficulty of this implementation was that validators had to be created from scratch for many data types. Passports of different countries have their own unique features, and the algorithm for finding a passport of the Russian Federation is completely unsuitable for finding a passport of a citizen of Ukraine. The same goes for credit cards: the specifications for VISA differ significantly from those for MasterCard.

2. If the document is similar to some category other than passports or credit cards, the relevance assigned by the FineReader Engine module remains unchanged: the SearchInform validator is not applied to such categories at all.

After the final relevance is calculated, one more rule is applied, in which the threshold is no longer the “minimum possible percentage” but a value configured by the user. The default is 70%. If the relevance is greater than or equal to this value, the document's class is confirmed and the document can be found by searching for that class. If it is lower, the class label is removed and the document is considered to have no class.
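
The whole chain of thresholds described in this section can be summarized in a short Python sketch. The default values (40% and 70%) and the 63% × 1.5 example come from the text; everything else, including the function and constant names, the category identifiers and the cap at 100%, is an assumption made for illustration and not the actual SearchServer code.

```python
from dataclasses import dataclass
from typing import Optional

MIN_SIMILARITY = 40.0   # default "minimum possible percentage"
USER_THRESHOLD = 70.0   # default user-configured threshold
# Categories that have a dedicated validator (names are hypothetical).
VALIDATED_CATEGORIES = {"passport", "credit_card"}


@dataclass
class Classification:
    category: Optional[str]  # None means "without class"
    relevance: float


def final_classification(category, similarity, multiplier=1.0,
                         min_similarity=MIN_SIMILARITY,
                         user_threshold=USER_THRESHOLD):
    """Apply the two thresholds and, where applicable, the validator multiplier."""
    # Step 1: drop the class if the classifier's similarity is too low.
    if similarity < min_similarity:
        return Classification(None, similarity)

    # Step 2: the validator multiplier applies only to validated categories.
    relevance = similarity
    if category in VALIDATED_CATEGORIES:
        # Capping at 100% is an assumption, not stated in the article.
        relevance = min(100.0, similarity * multiplier)

    # Step 3: compare the final relevance with the user-configured threshold.
    if relevance >= user_threshold:
        return Classification(category, relevance)
    return Classification(None, relevance)
```

With the numbers from the passport example, `final_classification("passport", 63.0, multiplier=1.5)` keeps the class with a relevance of 94.5, while a driver's license scored at 35% loses its class at the first step.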

Solution of applied problems

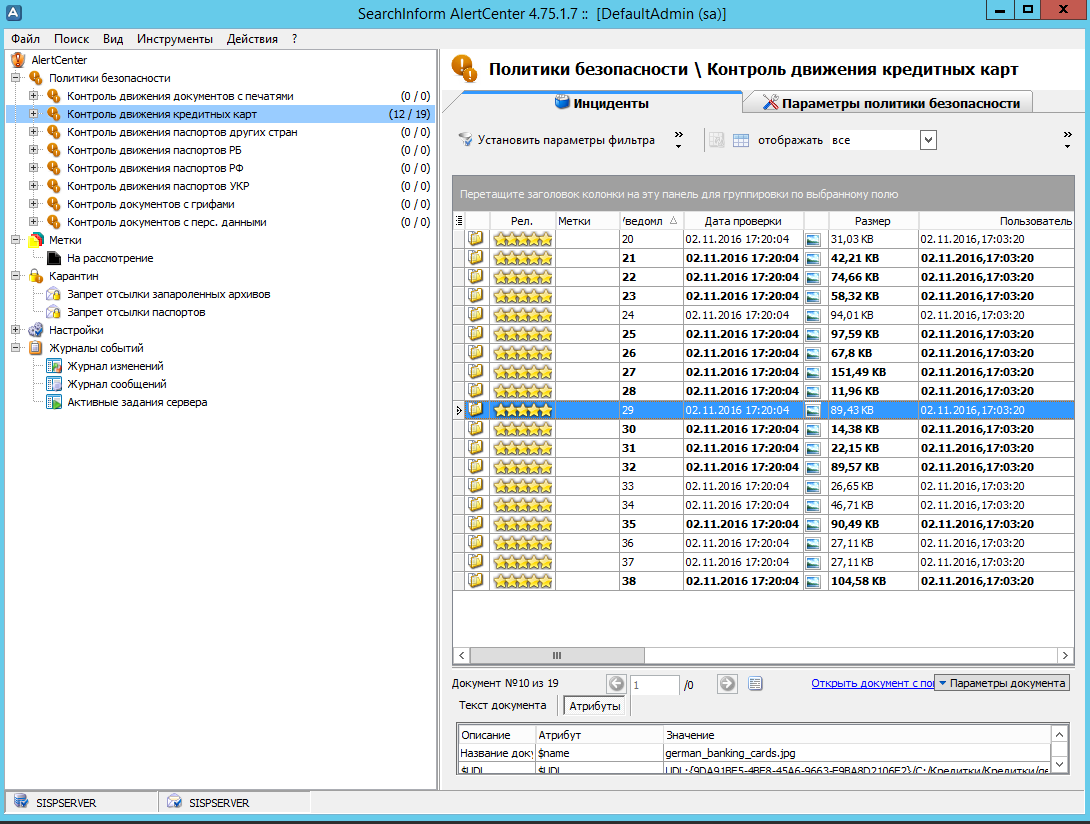

The Information Security Circuit includes specialized analytics tools for working with the data archive and enforcing the specified security policies.

Passports and credit cards are of course critical data because they contain personal data, so controlling them is very important to customers. At the same time, it is usually extremely difficult to develop a blocking rule that “cuts off” such data: when such rules are created in real projects, many subtleties come to light only gradually, and each such case disrupts the client's business processes because the block is triggered. Therefore, customers most often just want to see all movements of such documents within the organization and, especially, beyond it. And since this applies not only to passports and credit cards but to any other type of data, the method gives great flexibility in analysis.

Consider a real case. The customer's company works with passport scans. It has a set of rules governing where and how such scans must be stored, and over which electronic channels and to whom they may be transmitted. Some data is blocked in transit, for example, outgoing mail addressed to someone other than a company employee that contains a passport scan, the text of passport data, or anything even remotely resembling passport data. A passport scan can be sent outside only with the approval of the information security (IS) department.

Information security specialists also monitor the transfer of such data via “personal” channels and its storage on media. Storage is regulated as well, but while transfer to third parties is considered a critical violation, copying to the “wrong folder” is a minor one.

In normal operation, the IS department relies on DLP automation and works exclusively with system reports. When an investigation takes place, close checks begin and the data flow is examined manually. If all actions involving passports need to be identified, the policy relevance is set to 70-80%, 500 out of a million events are selected, and they are reviewed manually and appropriate measures are taken. If something serious has happened, the policy is narrowed to “employee \ date \ time” and the relevance threshold is lowered sharply (down to 1%). Far more irrelevant data is returned, by an order of magnitude, but the likelihood of missing critical events is greatly reduced.
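
The trade-off between a broad policy with a high relevance threshold and a narrow policy with a low one can be illustrated with a small Python sketch. The `Event` structure and the `select_events` function are hypothetical simplifications introduced here; they do not reflect how policies are actually stored or evaluated in the DLP system.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Event:
    employee: str
    timestamp: datetime
    category: str
    relevance: float  # percent


def select_events(events, category, min_relevance,
                  employee=None, start=None, end=None):
    """Return events of the given category at or above the relevance threshold,
    optionally narrowed to one employee and a time window."""
    selected = []
    for event in events:
        if event.category != category or event.relevance < min_relevance:
            continue
        if employee is not None and event.employee != employee:
            continue
        if start is not None and event.timestamp < start:
            continue
        if end is not None and event.timestamp > end:
            continue
        selected.append(event)
    return selected
```

Routine monitoring would correspond to a call such as `select_events(events, "passport", 70.0)`, while an investigation would add the `employee`, `start` and `end` filters and drop `min_relevance` to 1.0, accepting more noise in exchange for fewer missed events.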

Svetlana Luzgina, ABBYY Corporate Communications Service

Alexey Parfentiev, Technical Analyst, SearchInform