As a programmer, I bought a car. Part II

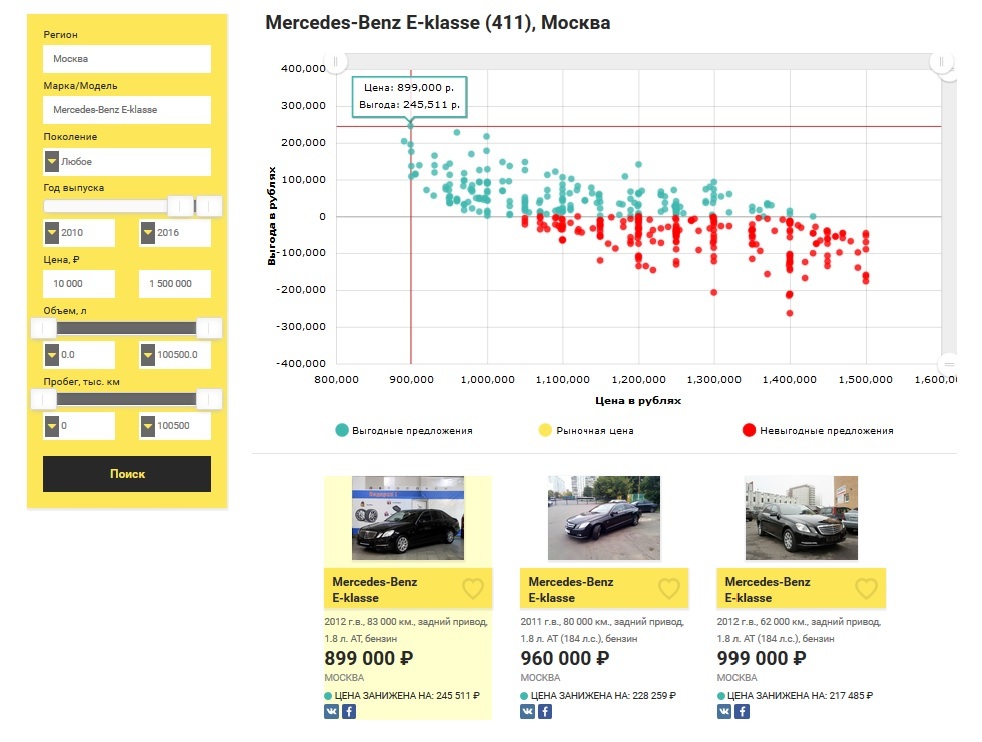

In a previous article, by the example of buying a Mercedes-Benz E-class no older than 2010, worth up to 1.5 million rubles in Moscow, the problem of finding profitable cars was considered. By “profitable” you should understand offers, the price of which is lower than the current market price among ads collected from all the most reputable sites for the sale of used cars in the Russian Federation.

At the first stage, multiple linear regression was chosen as a machine learning method, the legitimacy of its use, as well as the pros and cons, were considered. Simple linear regression was chosen as a familiarization algorithm. Obviously, there are many more machine learning methods to solve the regression problem. In this article, I would like to tell you exactly how I chose the most optimal machine learning algorithm for the model under study, which is currently used in the service I implemented - robasta.ru .

Applicants for the title of "champion":

Before making a choice, all of the above algorithms were investigated, so I wanted to tell you in detail about each of them. However, such a brute-force search path is not entirely optimal; it is more reasonable to conduct additional research of the task at first.

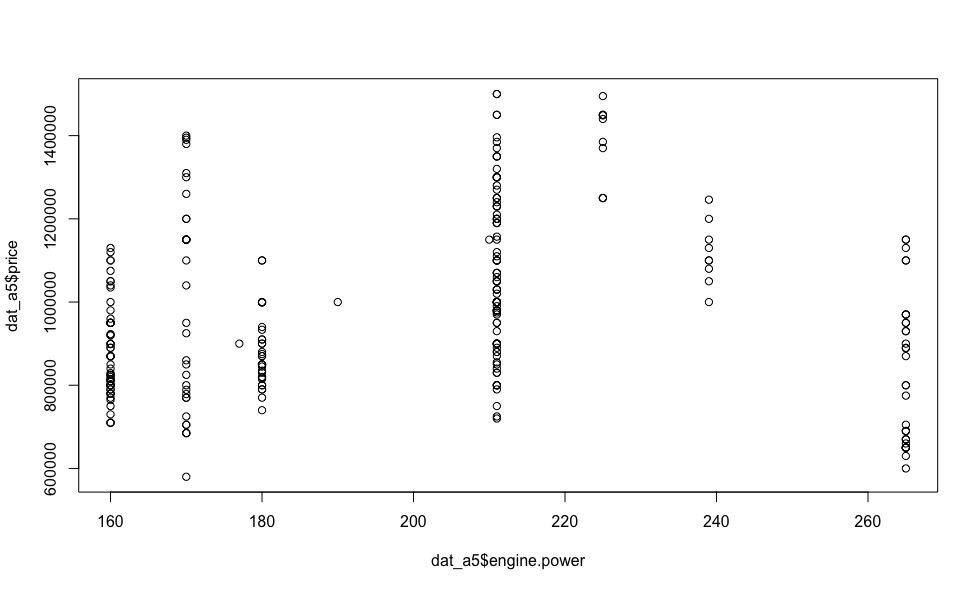

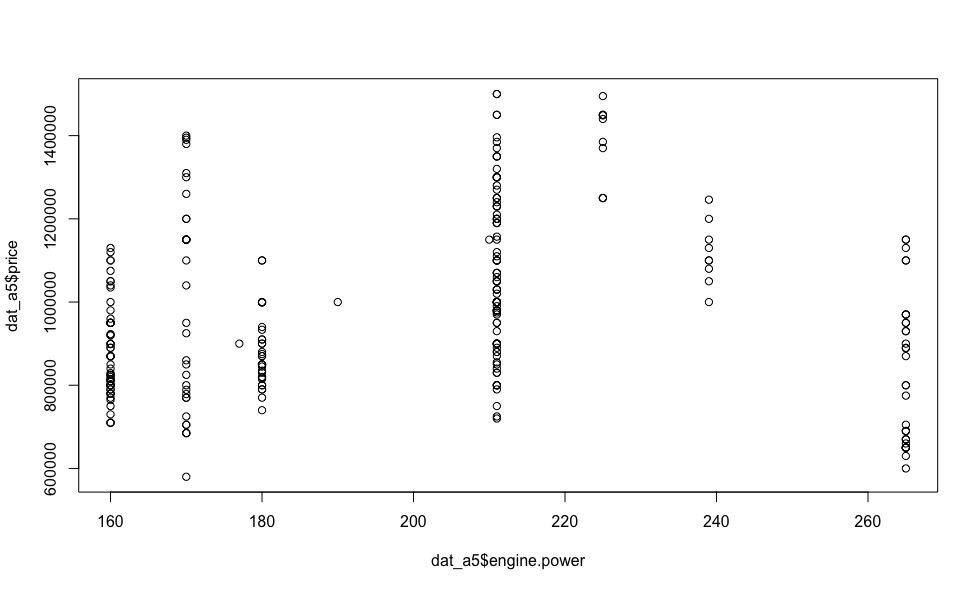

In addition to the Mercedes-Benz E-klasse, I was impressed by the Audi A5, especially with a 239 hp diesel engine, which has good dynamics (6 seconds to 100 km / h) and an acceptable tax. Looking at the dependence of price on the engine power of this creation of German engineers (visualization below), many questions disappear by themselves.

There is no question of linear dependence here, therefore algorithms based on the linear dependence of the explained variable (cost, in our case) on the regressors can be safely discarded. The use of polynomial and non-linear models is unlawful for the reason that the type of dependence of a particular regressor on the price for each individual car model taken is not known in advance.

Thus, taking the above considerations into account, we can only consider the algorithms based on decision trees - Random forest and Xgboost (with two types of boosting - xgbDart, xgbTree), and choose the optimal one from them.

It should be noted that the optimal algorithm is the one that will show its best performance (min RMSE ) during cross-validation and delayed sampling.

Before proceeding to the “blind” application of the selected algorithms, in the next chapter I would like to cover in more detail the question of their settings.

Cross-validation (CV) is often used to assess the real capabilities of the model and adjust its parameters in machine learning tasks. A number of partitions of the initial sample are distinguished into the training and control subsamples. For each of the partitions, the algorithm is configured according to the training subsample, then its average error is estimated on the control one.

Cross-validation assessment refers to the average across all partitions of the error in the control subsamples.

For bias estimates of the probability of errors obtained through cross-validation, it is necessary that the training and control samples form a disjoint subset, in order to avoid the phenomenon of retraining .

Varieties of cross-validation:

Each of the cross validation methods described above can be characterized using bias and variance. The bias characterizes the accuracy of the assessment. Dispersion characterizes the accuracy of the estimate.

In general, the cross validation method bias depends on the size of the validation sample. If the size of the validation sample is 50% of the initial data (2-block cross-validation), the final estimate of the standard deviation will be more biased than when this size is 10% of the initial data. On the other hand, a smaller size of the validation sample increases the variance, since each validation sample contains less data to obtain a stable standard deviation.

Thus, when it comes to k-block cross-validation, to minimize bias, choose the maximum k, and to reduce variance, use the multiple k-block method, which copes with this task better than a single one.

As for the MKKV, for this type of cross-validation, the size of the validation sample has a slightly larger effect on variance than the number of repetitions of the process. It should also be noted that the number of repetitions of the process does not significantly affect the bias.

Thus, it is possible to use the small size validation sample (for example, 10%) for the MQW method and perform a large number of repetitions in order to reduce the variance.

However, ceteris paribus, the use of multiple 10-block HF provides less variance, which is primarily due to the fact that for this method, the same data element cannot be found in different samples, unlike the MQW.

At the end of our reasoning, I would like to make a reservation that with large amounts of data, a 10-block or even 5-block single-shot KB gives quite acceptable results, in our task, we will use multiple 10-block cross-validation to configure the model.

“Random Forest” is an algorithm that randomly creates a lot of decision trees for the received data and then averages the results of their predictions. The tree-building algorithm is very fast, so it’s easy to make as many trees as you need.

From a practical point of view, the method described above has one huge advantage: it requires almost no configuration. If we take any other machine learning algorithm, whether it be a regression or a neural network, they all have many parameters, and they need to be able to select for a specific task. RF, in fact, has only one important parameter that needs to be configured - mtry (the size of a random subset selected at each step of the tree construction). However, even using the default value, very acceptable results can be obtained.

As in the previous article , we replace the missing values (N / A) with the median for all regressors, exclude the engine volume from the sample (due to the strong correlation of the parameter with power) and look at the capabilities of this algorithm.

For cross-validation, we will use the caret package , which has more options for evaluating the quality of the model than rfcv .

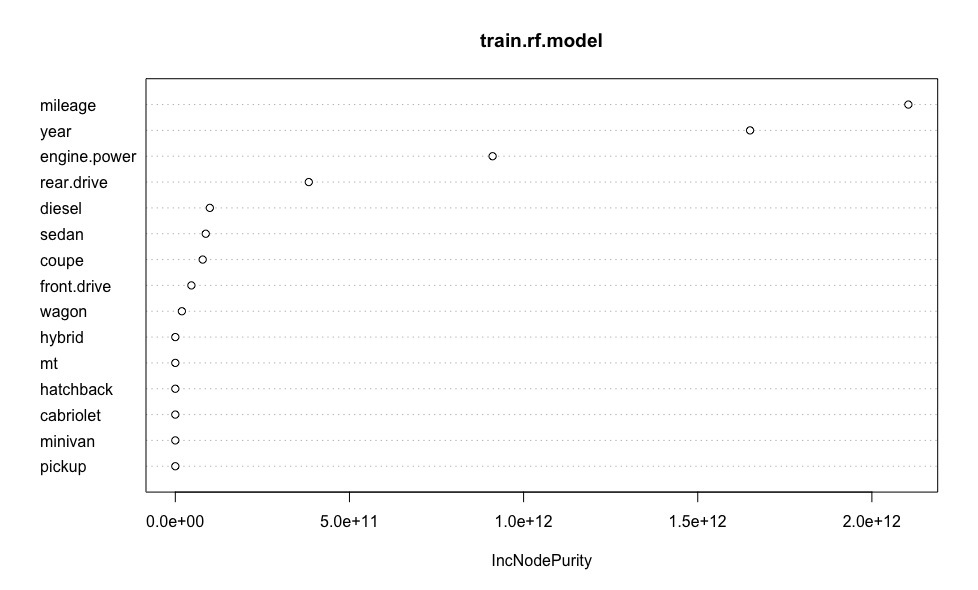

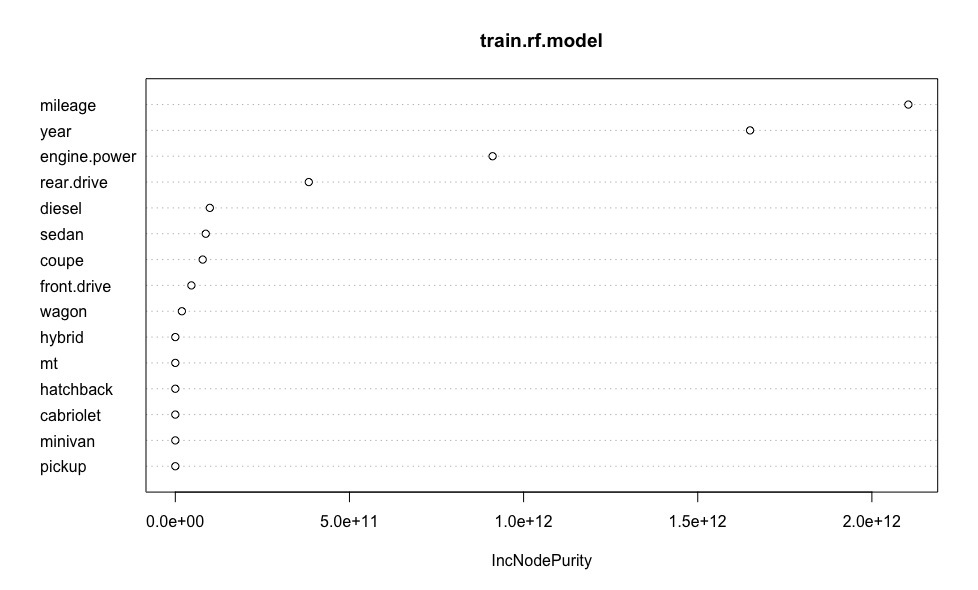

We construct a graph that clearly illustrates the importance of each of the predictors of the model.

The obtained average error in estimating the value of the car is equivalent to the same value obtained for linear regression. Let me remind you that when building a linear model, unlike RF, we got rid of emissions , which led to additional inaccuracies in estimating the cost of cars. Thus, one can argue about the robustness of the "random forest" to emissions.

The idea of gradient boosting is to build an ensemble of elementary models that sequentially refine each other. Each subsequent elementary model is trained on the “mistakes” of the ensemble from the previous elementary models, the responses of the models are weighted summarized.

You can “launch” almost any model - general linear, generalized linear, decision trees, K-nearest neighbors and many others.

The features of the implementation of the boosting algorithm in xgboost include, firstly, the use of the loss function in addition to the first and second derivatives, which increases the efficiency of the algorithm. Secondly, the presence of built-in regularization , which helps to combat retraining. Finally, the ability to define custom loss functions and quality metrics.

Thanks to the experimental parameter num_parallel_tree, you can set the number of trees to be created at the same time and represent Random Forest as a special case of a boosting model with one iteration. And if you use more than one iteration, you will get a boost of “random forests”, when each “random forest” acts as an elementary model.

As part of the article, we will consider only one type of boost - xgbTree, because xgbDart gives similar results.

We construct a graph demonstrating the importance of each of the predictors of the model.

XGboost for this particular case showed slightly more accurate estimates of the cost of cars. A large number of hyperparameters that require reconfiguration depending on the selected make and model of the car are of concern. In this regard, for use on the robasta.ru service , the Random Forest algorithm was preferred.

Now that the choice of “champion" is over, it's time to look at him in action.

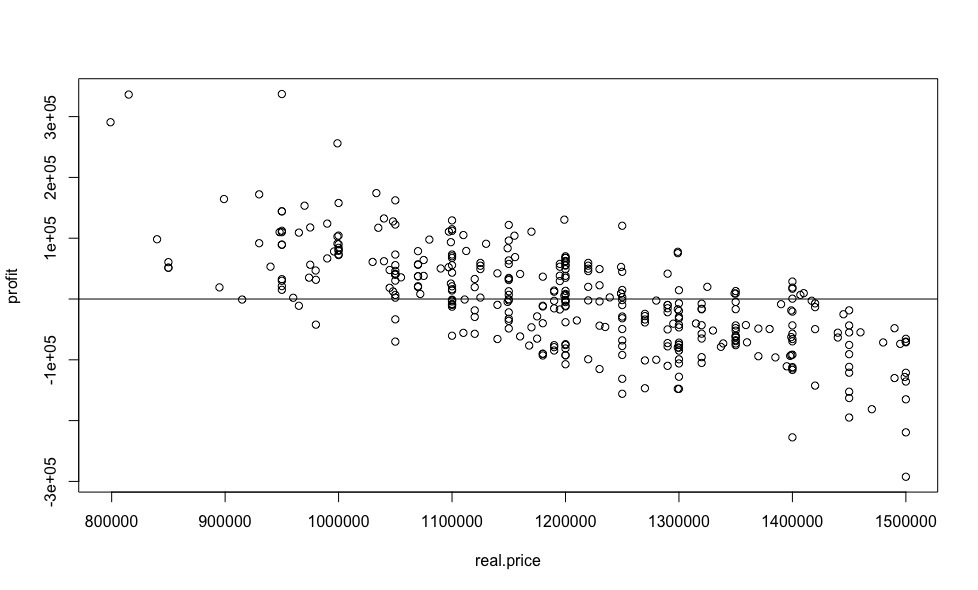

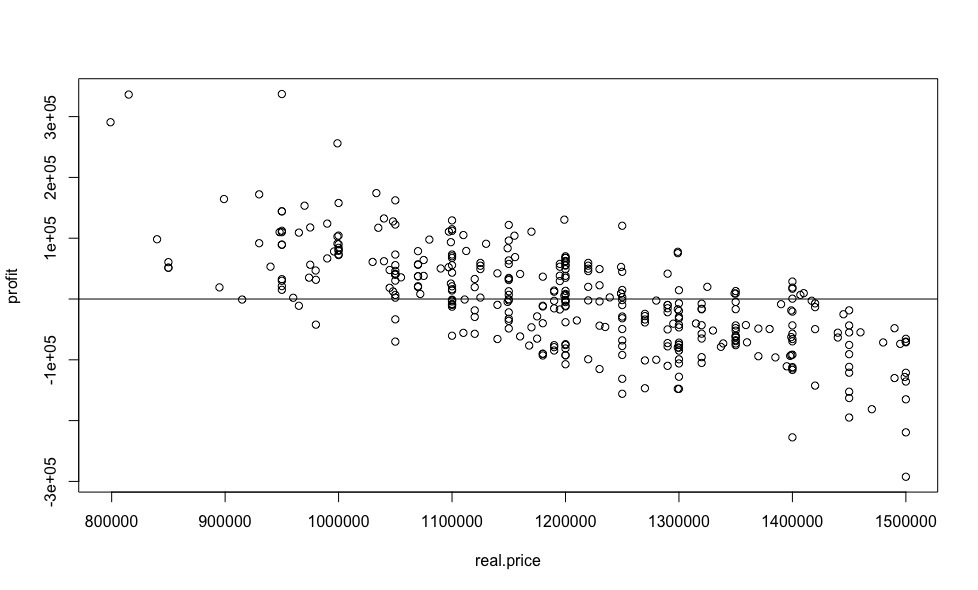

As for the linear regression in the previous article , we plot the gain versus price.

And now let's calculate the percentage benefit.

The results obtained are very weakly similar to the results obtained using linear regression, and look more plausible, despite the almost identical standard deviation for both models.

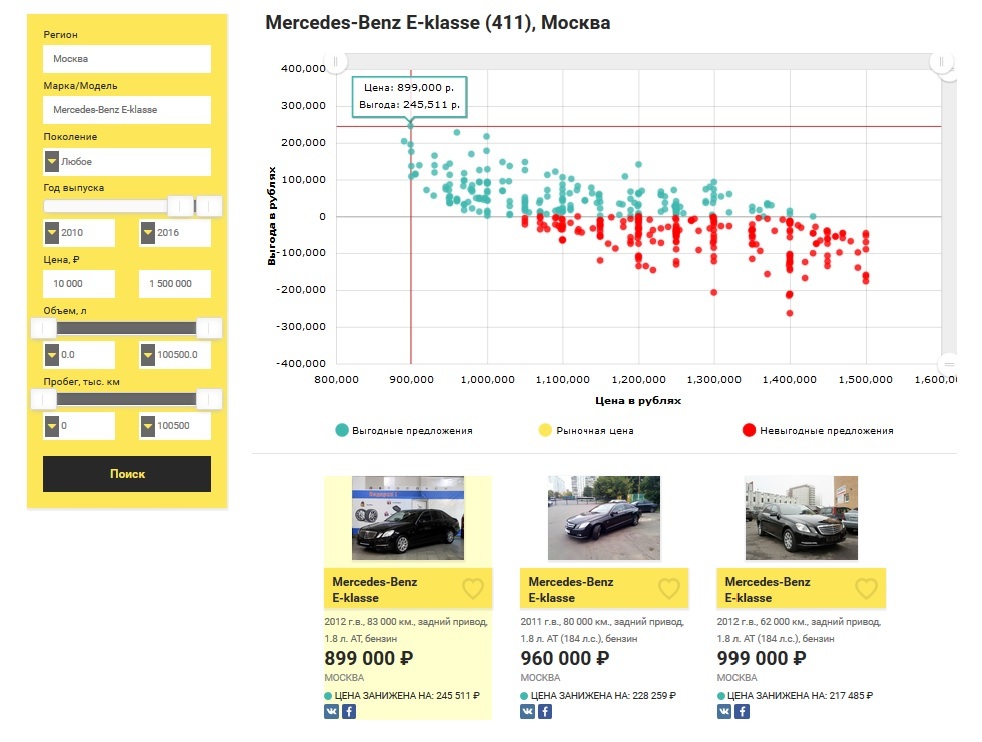

To compare the results in this article, we used a sample from a previous publication , so let's see how many profitable offers Mercedes-Benz E-class are not older than 2010, costing up to 1.5 million rubles in Moscow on the market now.

Summarizing all of the above, I can confidently say that for the selection of used cars we got a powerful tool that is not sensitive to “fake” ads, working in real time. You no longer need to spend hours on several sites with advertisements for the sale of cars and drive to watch potentially unprofitable offers.

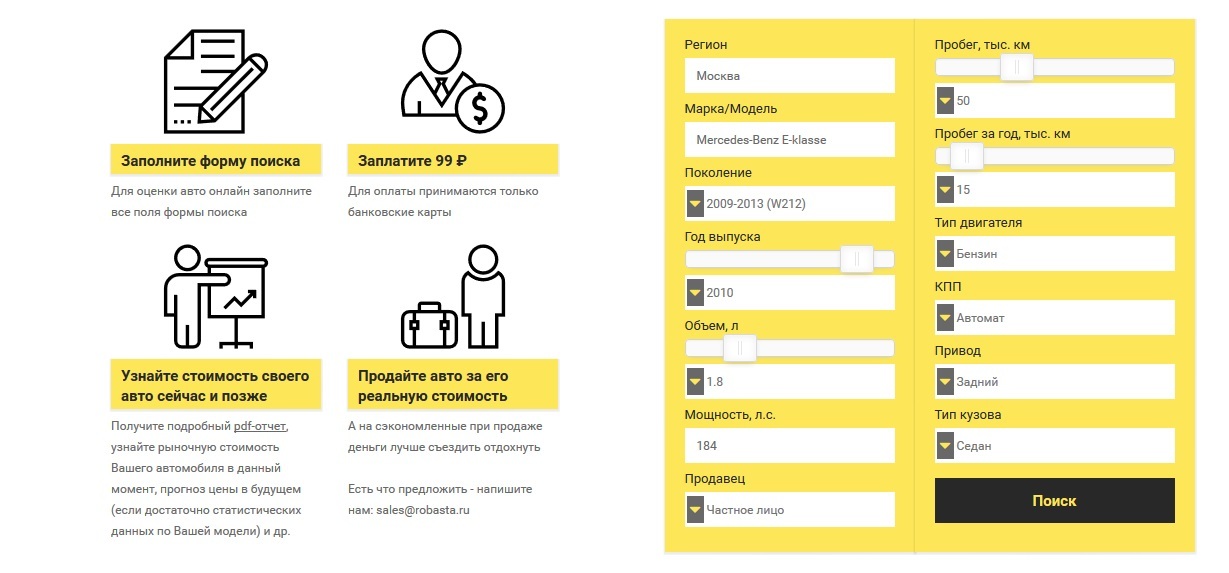

But that’s not all, now, using the considered mathematical apparatus, Robast can help not only those who want to buy, but also those who want to sell their car.

When selling your car, you certainly want to at least not cheapen and sell it in a short time. For a quick and profitable sale of your car, you need to understand the contribution of various characteristics to its value.

To solve this problem, on the basis of the same “random forest", a service was developed to evaluate cars. You fill out all the fields of the search form, in accordance with the parameters of your car, after which the model is trained based on market offers at the moment. If there are five or more ads on the market, the algorithm for the data you fill out predicts the price and gives out some interesting features depending on the overall market picture. It is worth emphasizing that in order to achieve the greatest accuracy, only cars of the same generation as yours are selected for analysis. The results of the assessment of your car are generated in the form of a pdf report , the cost of which is 99 ₽.

Various directions of further development are currently being worked out, among which the main ones can be called the following:

Relatively new cars (mileage up to 100 thousand km) are often sold in front of large expensive MOTs, it is useful to take these data into account in the model. Therefore, now I am in search of reliable partners among medium and large car dealers.

Opening an offline center for the selection and evaluation of cars in Moscow, which, thanks to the implemented algorithm, will be much less costly than that of competitors.

Creation of a convenient API for providing functionality to “intelligent resellers”.

Do you want something to help in the implementation of the tasks voiced by me or to offer your ideas? Write, I am always ready to consider any kind of cooperation.

At the first stage, multiple linear regression was chosen as a machine learning method, the legitimacy of its use, as well as the pros and cons, were considered. Simple linear regression was chosen as a familiarization algorithm. Obviously, there are many more machine learning methods to solve the regression problem. In this article, I would like to tell you exactly how I chose the most optimal machine learning algorithm for the model under study, which is currently used in the service I implemented - robasta.ru .

Algorithm selection

Applicants for the title of "champion":

- Multiple Linear (Simple) Regression

- Lasso regression

- Ridge regression

- Robust regression

- Vowpal wabbit

- Polynomial and nonlinear regression

- Random forest

- Xgboost

Before making a choice, all of the above algorithms were investigated, so I wanted to tell you in detail about each of them. However, such a brute-force search path is not entirely optimal; it is more reasonable to conduct additional research of the task at first.

In addition to the Mercedes-Benz E-klasse, I was impressed by the Audi A5, especially with a 239 hp diesel engine, which has good dynamics (6 seconds to 100 km / h) and an acceptable tax. Looking at the dependence of price on the engine power of this creation of German engineers (visualization below), many questions disappear by themselves.

There is no question of linear dependence here, therefore algorithms based on the linear dependence of the explained variable (cost, in our case) on the regressors can be safely discarded. The use of polynomial and non-linear models is unlawful for the reason that the type of dependence of a particular regressor on the price for each individual car model taken is not known in advance.

Thus, taking the above considerations into account, we can only consider the algorithms based on decision trees - Random forest and Xgboost (with two types of boosting - xgbDart, xgbTree), and choose the optimal one from them.

It should be noted that the optimal algorithm is the one that will show its best performance (min RMSE ) during cross-validation and delayed sampling.

Before proceeding to the “blind” application of the selected algorithms, in the next chapter I would like to cover in more detail the question of their settings.

Cross validation

Cross-validation (CV) is often used to assess the real capabilities of the model and adjust its parameters in machine learning tasks. A number of partitions of the initial sample are distinguished into the training and control subsamples. For each of the partitions, the algorithm is configured according to the training subsample, then its average error is estimated on the control one.

Cross-validation assessment refers to the average across all partitions of the error in the control subsamples.

For bias estimates of the probability of errors obtained through cross-validation, it is necessary that the training and control samples form a disjoint subset, in order to avoid the phenomenon of retraining .

Varieties of cross-validation:

- k-fold cross-validation More detailsThis method randomly splits data into k disjoint blocks of approximately the same size. In turn, each block is considered as a validation sample, and the remaining k-1 blocks are considered as a training sample. The model is trained on k-1 blocks and predicts a validation block. The forecast of the model is estimated using the selected indicator: accuracy, standard deviation (RMSE), etc. The process is repeated k times, and we get k estimates for which the average value is calculated, which is the final estimate of the model. Usually k is chosen equal to 10, sometimes 5. If k is equal to the number of elements in the original data set, this method is called cross-validation for individual elements (this article is not considered).

- Repeated k-fold cross-validation. More detailsIn this method, k-block cross-validation is performed several times. For example, a 5x 10-block cross-validation will give 50 ratings, based on which the average rating will then be calculated. Please note that this is not the same as 50-block cross-validation.

- Monte Carlo cross-validation (MKKV, Monte Carlo cross-validation, leave-group-out cross-validation). More detailsThis method randomly splits the original data set into a training and validation sample in a predetermined proportion a specified number of times.

Each of the cross validation methods described above can be characterized using bias and variance. The bias characterizes the accuracy of the assessment. Dispersion characterizes the accuracy of the estimate.

In general, the cross validation method bias depends on the size of the validation sample. If the size of the validation sample is 50% of the initial data (2-block cross-validation), the final estimate of the standard deviation will be more biased than when this size is 10% of the initial data. On the other hand, a smaller size of the validation sample increases the variance, since each validation sample contains less data to obtain a stable standard deviation.

Thus, when it comes to k-block cross-validation, to minimize bias, choose the maximum k, and to reduce variance, use the multiple k-block method, which copes with this task better than a single one.

As for the MKKV, for this type of cross-validation, the size of the validation sample has a slightly larger effect on variance than the number of repetitions of the process. It should also be noted that the number of repetitions of the process does not significantly affect the bias.

Thus, it is possible to use the small size validation sample (for example, 10%) for the MQW method and perform a large number of repetitions in order to reduce the variance.

However, ceteris paribus, the use of multiple 10-block HF provides less variance, which is primarily due to the fact that for this method, the same data element cannot be found in different samples, unlike the MQW.

At the end of our reasoning, I would like to make a reservation that with large amounts of data, a 10-block or even 5-block single-shot KB gives quite acceptable results, in our task, we will use multiple 10-block cross-validation to configure the model.

Random forest

“Random Forest” is an algorithm that randomly creates a lot of decision trees for the received data and then averages the results of their predictions. The tree-building algorithm is very fast, so it’s easy to make as many trees as you need.

From a practical point of view, the method described above has one huge advantage: it requires almost no configuration. If we take any other machine learning algorithm, whether it be a regression or a neural network, they all have many parameters, and they need to be able to select for a specific task. RF, in fact, has only one important parameter that needs to be configured - mtry (the size of a random subset selected at each step of the tree construction). However, even using the default value, very acceptable results can be obtained.

As in the previous article , we replace the missing values (N / A) with the median for all regressors, exclude the engine volume from the sample (due to the strong correlation of the parameter with power) and look at the capabilities of this algorithm.

dat <- read.csv("dataset.txt") # загружаем выборку в R

dat$mileage[is.na(dat$mileage)] <- median(na.omit(dat$mileage)) # заменяем NA на примере пробега

dat <- dat[-c(1,11)] # исключаем номер строки и объем двигателя из выборки

set.seed(1) # инициализируем генератор случайных чисел (для воспроизводимости)

split <- runif(dim(dat)[1]) > 0.2 # разделяем нашу выборку

train <- dat[split,] # выборка для обучения и настройки (cross-validation) параметров

test <- dat[!split,] # отложенная (hold-out) выборкаFor cross-validation, we will use the caret package , which has more options for evaluating the quality of the model than rfcv .

library(caret) # подключаем библиотеку caret

fit.control <- trainControl(method = "repeatedcv", number = 10, repeats = 10)

train.rf.model <- train(price~., data=train, method="rf", trControl=fit.control , metric = "RMSE") # применим 10-ти кратную 10-ти блочную кросс-валидацию для настройки модели

train.rf.model # посмотрим на результаты кросс-валидации

More details

Random Forest

292 samples

15 predictor

No pre-processing

Resampling: Cross-Validated (10 fold, repeated 10 times)

Summary of sample sizes: 262, 262, 262, 263, 263, 263, ...

Resampling results across tuning parameters:

mtry RMSE Rsquared

2 134565.8 0.4318963

8 117451.8 0.4378768

15 122897.6 0.3956822

RMSE was used to select the optimal model using the smallest value.

The final value used for the model was mtry = 8.

292 samples

15 predictor

No pre-processing

Resampling: Cross-Validated (10 fold, repeated 10 times)

Summary of sample sizes: 262, 262, 262, 263, 263, 263, ...

Resampling results across tuning parameters:

mtry RMSE Rsquared

2 134565.8 0.4318963

8 117451.8 0.4378768

15 122897.6 0.3956822

RMSE was used to select the optimal model using the smallest value.

The final value used for the model was mtry = 8.

library(randomForest) # подключаем библиотеку random forest

train.rf.model <- randomForest(price ~ ., train,mtry=8) # построим модель на основе полученных с помощью кросс-валидации параметров

We construct a graph that clearly illustrates the importance of each of the predictors of the model.

varImpPlot(train.rf.model) # оценим важность предикторов

rf.model.predictions <- predict(train.rf.model, test) # проверим точность оценки на отложенной выборке

print(sqrt(sum((as.vector(rf.model.predictions - test$price))^2)/length(rf.model.predictions))) # средняя ошибка прогноза цены (в рублях)

[1] 121760.5The obtained average error in estimating the value of the car is equivalent to the same value obtained for linear regression. Let me remind you that when building a linear model, unlike RF, we got rid of emissions , which led to additional inaccuracies in estimating the cost of cars. Thus, one can argue about the robustness of the "random forest" to emissions.

Xgboost

The idea of gradient boosting is to build an ensemble of elementary models that sequentially refine each other. Each subsequent elementary model is trained on the “mistakes” of the ensemble from the previous elementary models, the responses of the models are weighted summarized.

You can “launch” almost any model - general linear, generalized linear, decision trees, K-nearest neighbors and many others.

The features of the implementation of the boosting algorithm in xgboost include, firstly, the use of the loss function in addition to the first and second derivatives, which increases the efficiency of the algorithm. Secondly, the presence of built-in regularization , which helps to combat retraining. Finally, the ability to define custom loss functions and quality metrics.

Thanks to the experimental parameter num_parallel_tree, you can set the number of trees to be created at the same time and represent Random Forest as a special case of a boosting model with one iteration. And if you use more than one iteration, you will get a boost of “random forests”, when each “random forest” acts as an elementary model.

As part of the article, we will consider only one type of boost - xgbTree, because xgbDart gives similar results.

fit.control <- trainControl(method = "repeatedcv", number = 10, repeats = 10)

train.xgb.model <- train(price ~., data = train, method = "xgbTree", trControl = fit.control, metric = "RMSE") # применим 10-ти кратную 10-ти блочную кросс-валидацию

train.xgb.model # посмотрим на результаты кросс-валидации

More details

eXtreme Gradient Boosting

292 samples

15 predictor

No pre-processing

Resampling: Cross-Validated (10 fold, repeated 10 times)

Summary of sample sizes: 263, 262, 262, 263, 264, 263, ...

Resampling results across tuning parameters:

eta max_depth colsample_bytree nrounds RMSE Rsquared

0.3 1 0.6 50 114131.1 0.4705512

0.3 1 0.6 100 113639.6 0.4745488

0.3 1 0.6 150 113821.3 0.4734121

0.3 1 0.8 50 114234.6 0.4694687

0.3 1 0.8 100 113960.5 0.4712563

0.3 1 0.8 150 114337.1 0.4685121

0.3 2 0.6 50 115364.6 0.4604643

0.3 2 0.6 100 117576.4 0.4472452

0.3 2 0.6 150 119443.6 0.4358365

0.3 2 0.8 50 116560.3 0.4494750

0.3 2 0.8 100 119054.2 0.4350078

0.3 2 0.8 150 121035.4 0.4222440

0.3 3 0.6 50 117883.2 0.4422659

0.3 3 0.6 100 121916.7 0.4162103

0.3 3 0.6 150 125206.7 0.3968248

0.3 3 0.8 50 119331.3 0.4296062

0.3 3 0.8 100 124385.7 0.3987044

0.3 3 0.8 150 128396.6 0.3753334

0.4 1 0.6 50 113771.6 0.4727520

0.4 1 0.6 100 113951.6 0.4717968

0.4 1 0.6 150 114135.0 0.4710503

0.4 1 0.8 50 114055.0 0.4700165

0.4 1 0.8 100 114345.5 0.4680938

0.4 1 0.8 150 114715.8 0.4655844

0.4 2 0.6 50 116982.1 0.4499777

0.4 2 0.6 100 119511.9 0.4347406

0.4 2 0.6 150 122337.9 0.4163611

0.4 2 0.8 50 118384.6 0.4379478

0.4 2 0.8 100 121302.6 0.4201654

0.4 2 0.8 150 124283.7 0.4015380

0.4 3 0.6 50 118843.2 0.4356722

0.4 3 0.6 100 124315.3 0.4017282

0.4 3 0.6 150 128263.0 0.3796033

0.4 3 0.8 50 122043.1 0.4135415

0.4 3 0.8 100 128164.0 0.3782641

0.4 3 0.8 150 132538.2 0.3567702

Tuning parameter 'gamma' was held constant at a value of 0

Tuning parameter 'min_child_weight' was held constant at a value of 1

RMSE was used to select the optimal model using the smallest value.

The final values used for the model were nrounds = 100, max_depth = 1, eta = 0.3, gamma = 0, colsample_bytree = 0.6 and min_child_weight = 1.

292 samples

15 predictor

No pre-processing

Resampling: Cross-Validated (10 fold, repeated 10 times)

Summary of sample sizes: 263, 262, 262, 263, 264, 263, ...

Resampling results across tuning parameters:

eta max_depth colsample_bytree nrounds RMSE Rsquared

0.3 1 0.6 50 114131.1 0.4705512

0.3 1 0.6 100 113639.6 0.4745488

0.3 1 0.6 150 113821.3 0.4734121

0.3 1 0.8 50 114234.6 0.4694687

0.3 1 0.8 100 113960.5 0.4712563

0.3 1 0.8 150 114337.1 0.4685121

0.3 2 0.6 50 115364.6 0.4604643

0.3 2 0.6 100 117576.4 0.4472452

0.3 2 0.6 150 119443.6 0.4358365

0.3 2 0.8 50 116560.3 0.4494750

0.3 2 0.8 100 119054.2 0.4350078

0.3 2 0.8 150 121035.4 0.4222440

0.3 3 0.6 50 117883.2 0.4422659

0.3 3 0.6 100 121916.7 0.4162103

0.3 3 0.6 150 125206.7 0.3968248

0.3 3 0.8 50 119331.3 0.4296062

0.3 3 0.8 100 124385.7 0.3987044

0.3 3 0.8 150 128396.6 0.3753334

0.4 1 0.6 50 113771.6 0.4727520

0.4 1 0.6 100 113951.6 0.4717968

0.4 1 0.6 150 114135.0 0.4710503

0.4 1 0.8 50 114055.0 0.4700165

0.4 1 0.8 100 114345.5 0.4680938

0.4 1 0.8 150 114715.8 0.4655844

0.4 2 0.6 50 116982.1 0.4499777

0.4 2 0.6 100 119511.9 0.4347406

0.4 2 0.6 150 122337.9 0.4163611

0.4 2 0.8 50 118384.6 0.4379478

0.4 2 0.8 100 121302.6 0.4201654

0.4 2 0.8 150 124283.7 0.4015380

0.4 3 0.6 50 118843.2 0.4356722

0.4 3 0.6 100 124315.3 0.4017282

0.4 3 0.6 150 128263.0 0.3796033

0.4 3 0.8 50 122043.1 0.4135415

0.4 3 0.8 100 128164.0 0.3782641

0.4 3 0.8 150 132538.2 0.3567702

Tuning parameter 'gamma' was held constant at a value of 0

Tuning parameter 'min_child_weight' was held constant at a value of 1

RMSE was used to select the optimal model using the smallest value.

The final values used for the model were nrounds = 100, max_depth = 1, eta = 0.3, gamma = 0, colsample_bytree = 0.6 and min_child_weight = 1.

library(xgboost) # подключаем библиотеку xgboost

xgb_train <- xgb.DMatrix(as.matrix(train[-c(1)] ), label=train$price) # тренировочная выборка

xgb_test <- xgb.DMatrix(as.matrix(test[-c(1)]), label=test$price) # тестовая выборка

xgb.param <- list(booster = "gbtree",

max.depth = 1,

eta = 0.3,

gamma = 0,

subsample = 0.5,

colsample_bytree = 0.6,

min_child_weight = 1,

eval_metric = "rmse")

train.xgb.model <- xgb.train(data = xgb_train, nrounds = 100, params = xgb.param) # построим модель на основе полученных с помощью кросс-валидации параметров

We construct a graph demonstrating the importance of each of the predictors of the model.

importance.frame <- xgb.importance(colnames(train[-c(1)]), model = train.xgb.model) # оценим важность предикторов

library(Ckmeans.1d.dp) # подключим библиотеку для xgb.plot

xgb.plot.importance(importance.frame)

xgb.model.predictions <- predict(train.xgb.model, xgb_test) # проверим точность оценки на отложенной выборке

print(sqrt(sum((as.vector(xgb.model.predictions - test$price))^2)/length(xgb.model.predictions))) # средняя ошибка прогноза цены (в рублях)

[1] 118742.8

XGboost for this particular case showed slightly more accurate estimates of the cost of cars. A large number of hyperparameters that require reconfiguration depending on the selected make and model of the car are of concern. In this regard, for use on the robasta.ru service , the Random Forest algorithm was preferred.

Testing the selected algorithm

Now that the choice of “champion" is over, it's time to look at him in action.

library(randomForest) # подключаем библиотеку random forest

rf.model <- randomForest(price ~ ., dat,mtry=8) # построим модель на основе полученных с помощью кросс-валидации параметров

predicted.price <- predict(rf.model, dat) # предскажем цену для каждого автомобиля

real.price <- dat$price # вектор цен на автомобили полученный из объявлений

profit <- predicted.price - real.price # выгода между предсказанной нами ценой и ценой из объявлений

As for the linear regression in the previous article , we plot the gain versus price.

plot(real.price,profit)

abline(0,0)

And now let's calculate the percentage benefit.

sorted <- sort(predicted.price /real.price, decreasing = TRUE)

sorted[1:10]

69 42 122 15 168 248 346 109 231 244

1.412597 1.363876 1.354881 1.256323 1.185104 1.182895 1.168575 1.158208 1.157928 1.154557

The results obtained are very weakly similar to the results obtained using linear regression, and look more plausible, despite the almost identical standard deviation for both models.

To compare the results in this article, we used a sample from a previous publication , so let's see how many profitable offers Mercedes-Benz E-class are not older than 2010, costing up to 1.5 million rubles in Moscow on the market now.

Summarizing all of the above, I can confidently say that for the selection of used cars we got a powerful tool that is not sensitive to “fake” ads, working in real time. You no longer need to spend hours on several sites with advertisements for the sale of cars and drive to watch potentially unprofitable offers.

But that’s not all, now, using the considered mathematical apparatus, Robast can help not only those who want to buy, but also those who want to sell their car.

Car sale

When selling your car, you certainly want to at least not cheapen and sell it in a short time. For a quick and profitable sale of your car, you need to understand the contribution of various characteristics to its value.

To solve this problem, on the basis of the same “random forest", a service was developed to evaluate cars. You fill out all the fields of the search form, in accordance with the parameters of your car, after which the model is trained based on market offers at the moment. If there are five or more ads on the market, the algorithm for the data you fill out predicts the price and gives out some interesting features depending on the overall market picture. It is worth emphasizing that in order to achieve the greatest accuracy, only cars of the same generation as yours are selected for analysis. The results of the assessment of your car are generated in the form of a pdf report , the cost of which is 99 ₽.

In the end

Various directions of further development are currently being worked out, among which the main ones can be called the following:

Relatively new cars (mileage up to 100 thousand km) are often sold in front of large expensive MOTs, it is useful to take these data into account in the model. Therefore, now I am in search of reliable partners among medium and large car dealers.

Opening an offline center for the selection and evaluation of cars in Moscow, which, thanks to the implemented algorithm, will be much less costly than that of competitors.

Creation of a convenient API for providing functionality to “intelligent resellers”.

Do you want something to help in the implementation of the tasks voiced by me or to offer your ideas? Write, I am always ready to consider any kind of cooperation.