Locks aren't that slow

- Transfer

- Tutorial

Locks in general and mutexes, as their private implementation, have a long history of incorrectly evaluating the speed of their work. Back in 1986, in a Usenet conference, Matthew Dillon wrote : "Most people mistakenly realized that locks were slow." Today, after many years, we can say that nothing has changed.

Indeed, locks may work slowly on some platforms, or in highly competitive code. And, if you are developing a multi-threaded application, then it is quite possible that sooner or later you will come across a situation where any one lock will eat up a lot of resources (most likely due to an error in the code that leads to its frequent calling). But all these are special cases that are not generally related to the statement "locks work slowly." As we will see below, code with locks can work quite productively.

One of the reasons for misconceptions about the speed of locks is that many programmers do not distinguish between the concepts of “lightweight mutex” and “mutex as an object of the OS kernel”. Always use lightweight mutexes.. For example, if you program in C ++ under Windows, then your choice is the critical sections.

The second cause of errors can serve, paradoxically, as benchmarks. For example, later in this article we will measure the performance of locks under high load: each thread will require a lock to perform any action, and the locks themselves will be very short (and, as a result, very frequent). This is fine for an experiment, but this way of writing code is not what you need in a real application.

The second cause of errors can serve, paradoxically, as benchmarks. For example, later in this article we will measure the performance of locks under high load: each thread will require a lock to perform any action, and the locks themselves will be very short (and, as a result, very frequent). This is fine for an experiment, but this way of writing code is not what you need in a real application.

Locks are criticized for other reasons. There is a whole family of algorithms and technologies called lock-free"programming. This is an incredibly exciting and challenging development method that can bring a huge performance boost to a number of applications. I know programmers who spent weeks polishing their" lock-free "algorithms, wrote a million tests on them - and only for to catch a rare bug associated with a certain combination of timings a few months later, this combination of danger and reward for it can be very attractive for some programmers (including me). With the power of “lock-fr ee "classic locks begin to seem boring, outdated and slow.

But do not rush to discount them. One of the good examples where locks are often used and show good performance are memory allocators. Popular implementationDoug Lea's malloc is often used in game devs. But it is single-threaded, so we need to protect it with locks. During an active game, we may well have a situation where several threads access the allocator with a frequency of, say, 15,000 times per second. During game loading, this figure can reach up to 100,000 times per second. As you will see later, this is not at all a problem for code with locks.

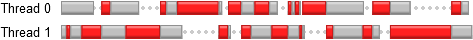

In this test, we will run a stream that will generate random numbers using the Mersenne vortex algorithm . In the process, he will regularly capture and release the synchronization object. The time between capture and release will be random, but on average it will tend to the value we set. For example, suppose we want to use a lock 15,000 times per second and hold it for 50% of the time. Here's how the timeline will look in this case. Red means the time when the lock is captured, gray - when it is free.

This is essentially a Poisson process. If we know the average amount of time to generate one random number (and this, for example, 6.349 nanoseconds on a 2.66 GHz quad-core Xeon processor) - we can measure the amount of work done in some units, not seconds. We can use the technique described in another article of mine to determine the number of units of work between capturing and releasing a synchronization object. Here is a C ++ implementation. I omitted some irrelevant details, but you can download the full source code here .

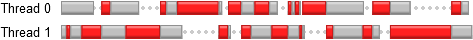

Now let's imagine that we launched two such threads, each on its own processor core. Each thread will hold a lock of 50% of its operating time, but if one thread tries to access the critical section while it is being held by another thread, it will be forced to wait. The classic case of the struggle for a shared resource.

I think this is an approximate example of how locks are used in real applications. When we run the above code in two threads, we find that each thread spends about 25% of the time waiting and only 75% of the time doing the real work. Together, two threads increase productivity by 1.5 times compared to a single-threaded solution.

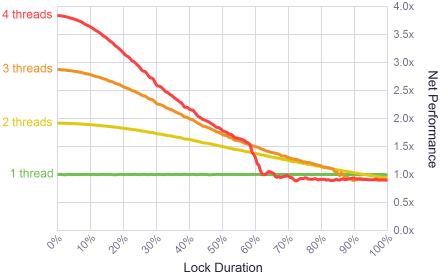

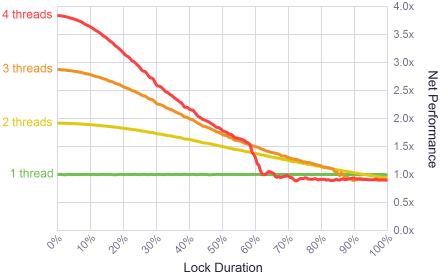

I ran this test with different variations on a 2.66 GHz quad-core Xeon processor - in 1 thread, in 2 threads, in 4 threads, each on its own core. I also varied the duration of the lock capture from a degenerate case where the lock was released immediately, to the opposite side, when the lock took 100% of the thread’s time. In all cases, the capture frequency remained constant - 15,000 times per second.

The results were quite interesting. For a short capture duration (I would say up to 10% of the time), the system showed an extremely high degree of parallelism. Not perfect, but close to that. Locks work fast!

To evaluate the results in terms of practical application, I analyzed the operation of the memory allocator in a multi-threaded game using a profiler. During the game, there were about 15,000 calls of the allocator from three threads, blocking occupied about 2% of the overall application performance. This value is located in the “comfort zone” on the left side of the graph.

These results also show that as soon as the duration of the code inside the lock passes gracina to 90% of the total time, it makes no sense to write multi-threaded code. A single-threaded application in this case will work faster. What is even more surprising is a sharp jump downward in the performance of 4 streams in the region of 60%. It was like an anomaly, so I repeated the test several times, tried to run the tests in a different order. But each time it turned out the same thing. My best guess is that this combination of the number of threads, the duration of the locks, and the CPU load brought the Windows Scheduler to some extreme mode of operation, but I did not investigate it further.

Even a lightweight mutex carries some overhead. A pair of lock / unlock operations for the critical section in Windows runs about 23.5 nanoseconds (on the above processor). Thus, even 15,000 locks per second do not carry any significant load affecting performance. But what happens if we increase the frequency?

The above algorithm offers very convenient means of controlling the amount of work performed between the locks. I ran another series of tests: from 10 nanoseconds to 31 microseconds between locks, which corresponds to approximately 32,000 locks per second. In each test, two threads were used.

As you might have expected, at very high frequencies, the overhead of locking starts to affect overall performance. At such frequencies, only a few instructions are executed inside the lock, which is comparable in time to the capture / release of the synchronization object itself. The good news is that for such short (and therefore simple) operations, it is quite possible to develop and use some kind of lock-free algorithm.

At the same time, the results showed that calling locks up to 320,000 times per second (3.1 microseconds between locks) can be quite effective. In gamedev, a memory allocator can work normally at similar frequencies during game loading. You still get up to 1.5x gain from multithreading in this case (but only if the duration of the lock itself is short).

We examined a wide range of different cases of using locks: from their very high performance to cases where the idea of multithreading itself makes no sense. An example with a memory allocator in a game engine showed that in real applications a high frequency of using locks is quite acceptable under certain conditions. With such arguments, no one will be able to simply unfoundedly say that "locks work slowly." Yes, locks can be used in an inefficient way, but living with this fear is not worth it - fortunately we have profilers that easily identify such problems. Every time you want to rush headlong into the maelstrom of exciting and dangerous lock-free programming - remember this article and the fact that locks can work quite quickly.

The purpose of this article was to return the locks a little of the respect that they rightly deserve. I understand that with all the wealth of options for using locks in industrial software, programmers were accompanied by both successes and painful failures in the process of finding a balance of performance. If you have an example of this or that, tell us about it in the comments.

Indeed, locks may work slowly on some platforms, or in highly competitive code. And, if you are developing a multi-threaded application, then it is quite possible that sooner or later you will come across a situation where any one lock will eat up a lot of resources (most likely due to an error in the code that leads to its frequent calling). But all these are special cases that are not generally related to the statement "locks work slowly." As we will see below, code with locks can work quite productively.

One of the reasons for misconceptions about the speed of locks is that many programmers do not distinguish between the concepts of “lightweight mutex” and “mutex as an object of the OS kernel”. Always use lightweight mutexes.. For example, if you program in C ++ under Windows, then your choice is the critical sections.

The second cause of errors can serve, paradoxically, as benchmarks. For example, later in this article we will measure the performance of locks under high load: each thread will require a lock to perform any action, and the locks themselves will be very short (and, as a result, very frequent). This is fine for an experiment, but this way of writing code is not what you need in a real application.

The second cause of errors can serve, paradoxically, as benchmarks. For example, later in this article we will measure the performance of locks under high load: each thread will require a lock to perform any action, and the locks themselves will be very short (and, as a result, very frequent). This is fine for an experiment, but this way of writing code is not what you need in a real application. Locks are criticized for other reasons. There is a whole family of algorithms and technologies called lock-free"programming. This is an incredibly exciting and challenging development method that can bring a huge performance boost to a number of applications. I know programmers who spent weeks polishing their" lock-free "algorithms, wrote a million tests on them - and only for to catch a rare bug associated with a certain combination of timings a few months later, this combination of danger and reward for it can be very attractive for some programmers (including me). With the power of “lock-fr ee "classic locks begin to seem boring, outdated and slow.

But do not rush to discount them. One of the good examples where locks are often used and show good performance are memory allocators. Popular implementationDoug Lea's malloc is often used in game devs. But it is single-threaded, so we need to protect it with locks. During an active game, we may well have a situation where several threads access the allocator with a frequency of, say, 15,000 times per second. During game loading, this figure can reach up to 100,000 times per second. As you will see later, this is not at all a problem for code with locks.

Benchmark

In this test, we will run a stream that will generate random numbers using the Mersenne vortex algorithm . In the process, he will regularly capture and release the synchronization object. The time between capture and release will be random, but on average it will tend to the value we set. For example, suppose we want to use a lock 15,000 times per second and hold it for 50% of the time. Here's how the timeline will look in this case. Red means the time when the lock is captured, gray - when it is free.

This is essentially a Poisson process. If we know the average amount of time to generate one random number (and this, for example, 6.349 nanoseconds on a 2.66 GHz quad-core Xeon processor) - we can measure the amount of work done in some units, not seconds. We can use the technique described in another article of mine to determine the number of units of work between capturing and releasing a synchronization object. Here is a C ++ implementation. I omitted some irrelevant details, but you can download the full source code here .

QueryPerformanceCounter(&start);

for (;;)

{

// Выполняем некоторую работу без блокировки

workunits = (int) (random.poissonInterval(averageUnlockedCount) + 0.5f);

for (int i = 1; i < workunits; i++)

random.integer(); // выполняем один юнит работы

workDone += workunits;

QueryPerformanceCounter(&end);

elapsedTime = (end.QuadPart - start.QuadPart) * ooFreq;

if (elapsedTime >= timeLimit)

break;

// Выполняем некоторую работу с блокировкой

EnterCriticalSection(&criticalSection);

workunits = (int) (random.poissonInterval(averageLockedCount) + 0.5f);

for (int i = 1; i < workunits; i++)

random.integer(); // выполняем один юнит работы

workDone += workunits;

LeaveCriticalSection(&criticalSection);

QueryPerformanceCounter(&end);

elapsedTime = (end.QuadPart - start.QuadPart) * ooFreq;

if (elapsedTime >= timeLimit)

break;

}

Now let's imagine that we launched two such threads, each on its own processor core. Each thread will hold a lock of 50% of its operating time, but if one thread tries to access the critical section while it is being held by another thread, it will be forced to wait. The classic case of the struggle for a shared resource.

I think this is an approximate example of how locks are used in real applications. When we run the above code in two threads, we find that each thread spends about 25% of the time waiting and only 75% of the time doing the real work. Together, two threads increase productivity by 1.5 times compared to a single-threaded solution.

I ran this test with different variations on a 2.66 GHz quad-core Xeon processor - in 1 thread, in 2 threads, in 4 threads, each on its own core. I also varied the duration of the lock capture from a degenerate case where the lock was released immediately, to the opposite side, when the lock took 100% of the thread’s time. In all cases, the capture frequency remained constant - 15,000 times per second.

The results were quite interesting. For a short capture duration (I would say up to 10% of the time), the system showed an extremely high degree of parallelism. Not perfect, but close to that. Locks work fast!

To evaluate the results in terms of practical application, I analyzed the operation of the memory allocator in a multi-threaded game using a profiler. During the game, there were about 15,000 calls of the allocator from three threads, blocking occupied about 2% of the overall application performance. This value is located in the “comfort zone” on the left side of the graph.

These results also show that as soon as the duration of the code inside the lock passes gracina to 90% of the total time, it makes no sense to write multi-threaded code. A single-threaded application in this case will work faster. What is even more surprising is a sharp jump downward in the performance of 4 streams in the region of 60%. It was like an anomaly, so I repeated the test several times, tried to run the tests in a different order. But each time it turned out the same thing. My best guess is that this combination of the number of threads, the duration of the locks, and the CPU load brought the Windows Scheduler to some extreme mode of operation, but I did not investigate it further.

Benchmark Locking Capture Frequency

Even a lightweight mutex carries some overhead. A pair of lock / unlock operations for the critical section in Windows runs about 23.5 nanoseconds (on the above processor). Thus, even 15,000 locks per second do not carry any significant load affecting performance. But what happens if we increase the frequency?

The above algorithm offers very convenient means of controlling the amount of work performed between the locks. I ran another series of tests: from 10 nanoseconds to 31 microseconds between locks, which corresponds to approximately 32,000 locks per second. In each test, two threads were used.

As you might have expected, at very high frequencies, the overhead of locking starts to affect overall performance. At such frequencies, only a few instructions are executed inside the lock, which is comparable in time to the capture / release of the synchronization object itself. The good news is that for such short (and therefore simple) operations, it is quite possible to develop and use some kind of lock-free algorithm.

At the same time, the results showed that calling locks up to 320,000 times per second (3.1 microseconds between locks) can be quite effective. In gamedev, a memory allocator can work normally at similar frequencies during game loading. You still get up to 1.5x gain from multithreading in this case (but only if the duration of the lock itself is short).

We examined a wide range of different cases of using locks: from their very high performance to cases where the idea of multithreading itself makes no sense. An example with a memory allocator in a game engine showed that in real applications a high frequency of using locks is quite acceptable under certain conditions. With such arguments, no one will be able to simply unfoundedly say that "locks work slowly." Yes, locks can be used in an inefficient way, but living with this fear is not worth it - fortunately we have profilers that easily identify such problems. Every time you want to rush headlong into the maelstrom of exciting and dangerous lock-free programming - remember this article and the fact that locks can work quite quickly.

The purpose of this article was to return the locks a little of the respect that they rightly deserve. I understand that with all the wealth of options for using locks in industrial software, programmers were accompanied by both successes and painful failures in the process of finding a balance of performance. If you have an example of this or that, tell us about it in the comments.