More coffee, less caffeine: Intel 9th Gen (Part 2)

Part 1 → Part 2 → Part 3 → Part 4

In our test, we take version 1.3.3 of the software with a large data set: 84 photographs of 18 megapixels each. We run the test on a fairly fast set of algorithms, but one that is still more stringent than our 2017 test. We report the total time to complete the process.

PhotoScan is a workload that rewards high single-threaded throughput, and in this case the presence of HT is a hindrance.

In a professional environment, rendering is often the primary concern for processor workloads. It takes various forms, from 3D rendering to rasterization, in tasks such as games or ray tracing, and relies on the software's ability to manage meshes, textures, collisions, aliasing, and physics (in animation). Most renderers offer CPU code paths, a few use GPUs, and select environments use FPGAs or specialized ASICs. For large studios, however, CPUs are still the main hardware.

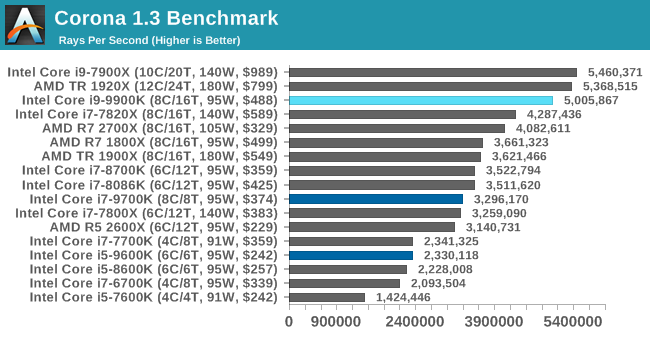

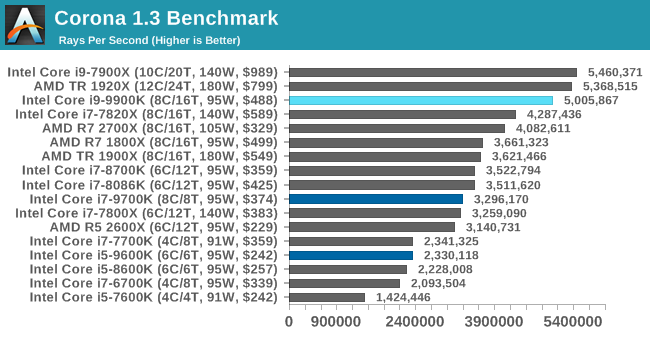

Corona is a performance-oriented renderer for software such as 3ds Max and Cinema 4D, and its benchmark renders a generated scene as a standard under version 1.3 of the software. Typically, the benchmark's GUI shows the scene being built and lets the user see the result as a “time to complete.”

We contacted the developer who gave us the command line version of the test. It provides direct output of results. Instead of reporting the scene construction time, we report the average number of rays per second over six runs, since the ratio of actions performed to units of time is visually easier to understand.

Corona is a fully multi-threaded test, so processors without HT lag a little. The Core i9-9900K shoots to the top, overtaking AMD's 8-core parts by a 25 percent margin, and is second only to the 12-core Threadripper.

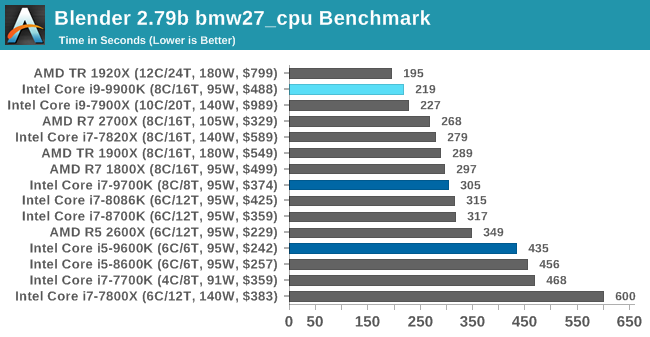

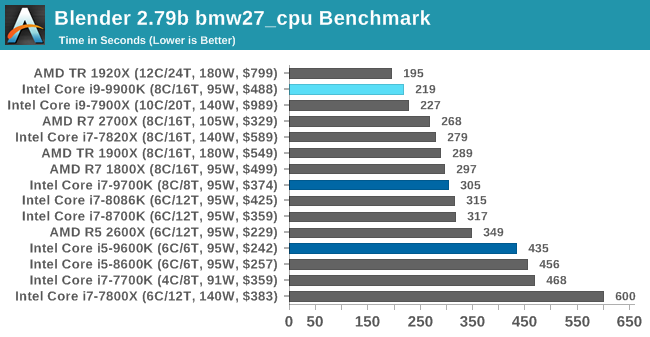

A high-end rendering tool, Blender is an open source product with many settings and configurations, used by many high-end animation studios around the world. The organization recently released a Blender benchmark package, a couple of weeks after we had settled on the Blender test for our new suite, but the full package can take more than an hour to run. To get our results, we launch one of the subtests in this package from the command line, the standard bmw27 scene in “CPU only” mode, and measure the time to complete the render.

Blender has an eclectic mix of requirements, from memory bandwidth to raw performance, but, as in Corona, non-HT processors lag a bit in it. The high frequency of 9900K raises it above 10C Skylake-X and AMD 2700X, but not above 1920X.

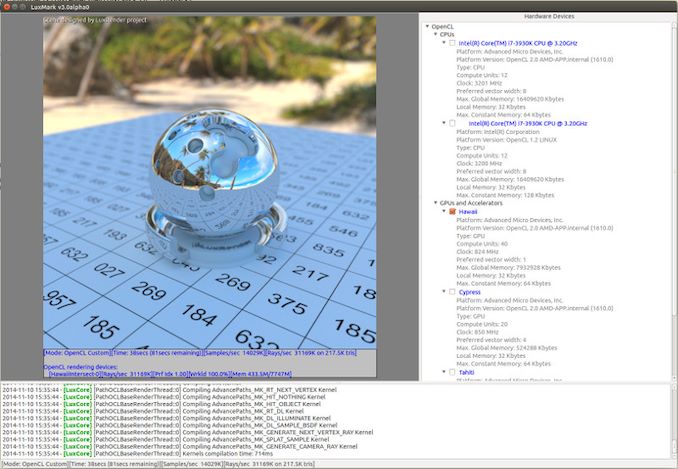

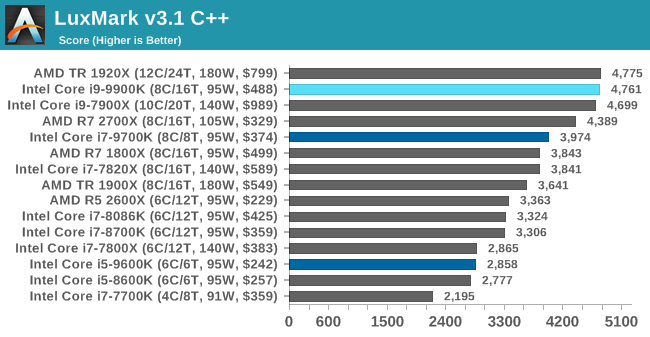

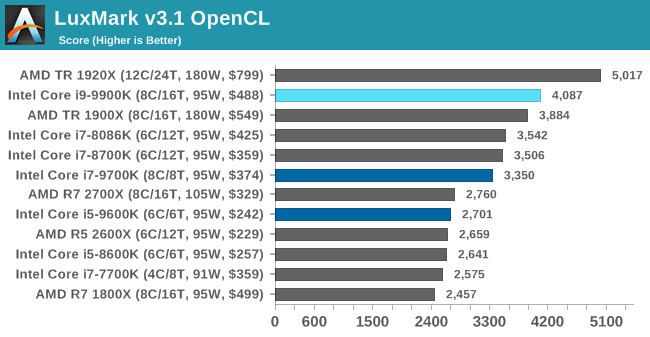

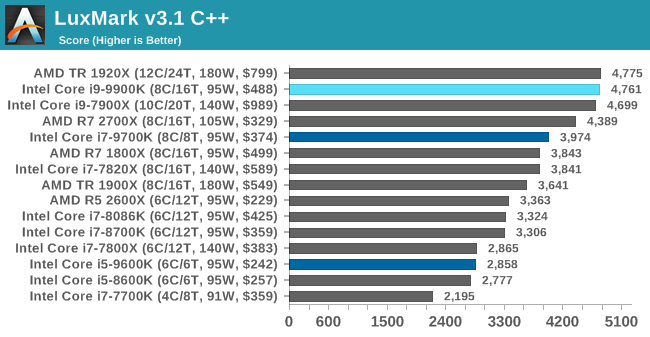

As stated above, there are many different ways to process rendering data: CPU, GPU, accelerator, and others. In addition, there are many frameworks and APIs to program against, depending on how the software will be used. LuxMark, a benchmark built on the LuxRender engine, offers several different scenes and APIs.

taken from the Linux version of LuxMark

In our test, we run the simple “Ball” scene on both the C++ and OpenCL code paths, but in CPU mode. The scene starts with a rough render and slowly improves in quality over two minutes, giving a final result in what is essentially average kilorays per second.

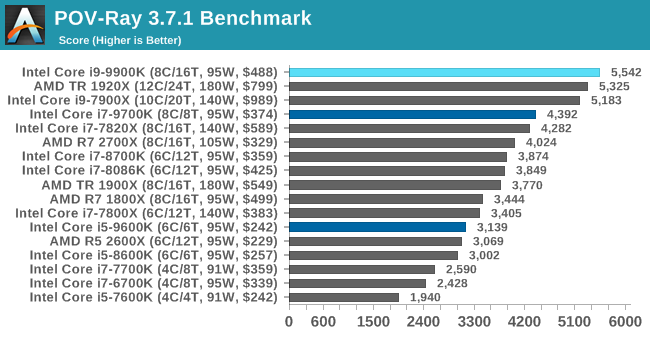

The Persistence of Vision ray tracing engine is another well-known benchmarking tool that was dormant for a while until AMD released its Zen processors when suddenly both Intel and AMD started pushing code into the main branch of the open source project. For our test, we use the built-in test for all cores, called from the command line.

The Office Test Suite is designed to focus on more industry standard benchmarks that focus on office workflows. These are more synthetic tests, but we also check the compiler performance in this section. For users who need to evaluate the equipment as a whole, these are usually the most important criteria.

Futuremark, now known as UL, has been developing tests that have become industry standards for two decades. The latest system test suite is PCMark 10, where several tests have been improved compared to PCMark 8, and more attention has been paid to OpenCL, specifically in such cases as video streaming.

PCMark splits its assessments into approximately 14 different areas, including application launch, web pages, spreadsheets, photo editing, rendering, video conferencing and physics. We publish all these data in our Bench database, but the key indicator for the current review is the overall score.

Here, where many tests are mixed, the new processors from Intel occupy the top three positions, in order. Even the i5-9600K is ahead of the i7-8086K.

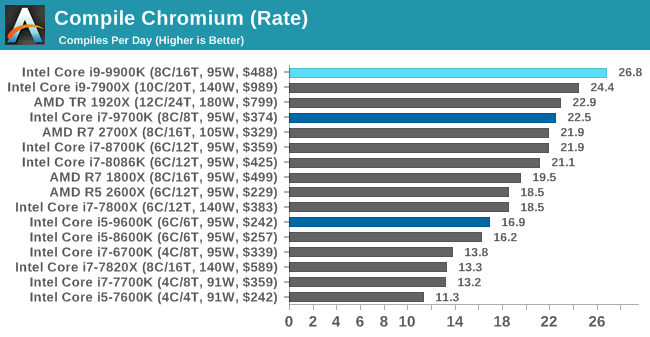

A large number of AnandTech readers are software engineers who look at how the hardware performs. Although compiling the Linux kernel is “standard” for reviewers who compile often, our test is a little more varied: we use the Windows instructions to compile Chrome, specifically the Chrome 56 build from March 2017, as that was current when we created the test. Google gives detailed instructions on how to compile under Windows after downloading 400,000 files from the repository.

In our test, following Google's instructions, we use the MSVC compiler and ninja to manage the compilation. As you would expect, this is a test with variable multithreading and variable DRAM requirements that benefits from faster caches. The result of our test is the time taken to compile, which we convert to the number of compilations per day.
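The conversion from compile time to compilations per day is simple arithmetic; a minimal sketch follows. The function name and the one-hour example value are hypothetical and are not taken from the review's data.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def compiles_per_day(compile_time_s: float) -> float:
    """Convert one measured compile time (seconds) to compilations per day."""
    if compile_time_s <= 0:
        raise ValueError("compile time must be positive")
    return SECONDS_PER_DAY / compile_time_s


# Hypothetical example: a build that takes exactly one hour.
print(compiles_per_day(3600))
```

Reporting a rate rather than a duration means that higher is better, which matches most of the other charts in the review.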

High all-core turbo frequencies seem to work well in our compilation test.

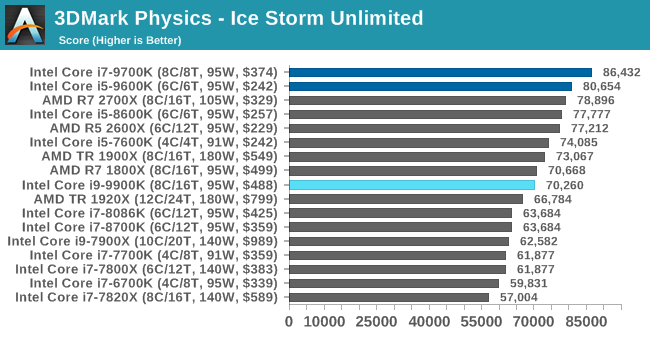

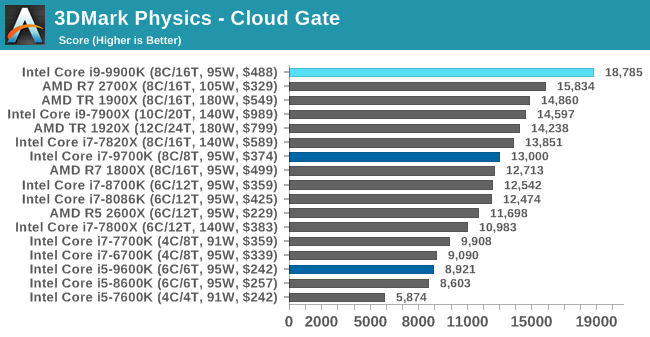

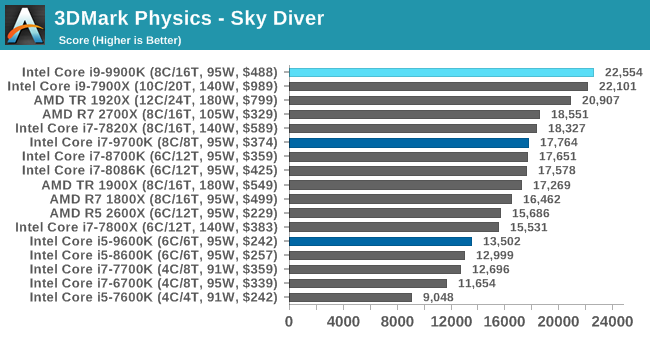

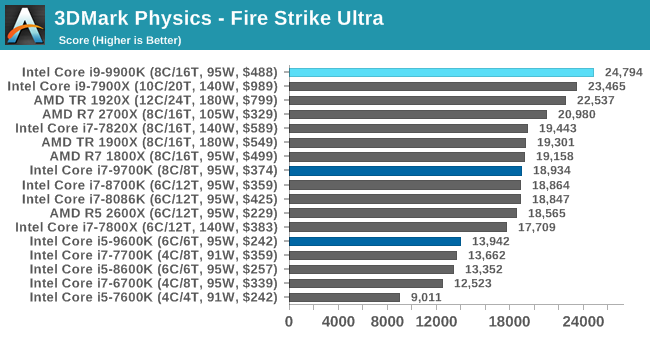

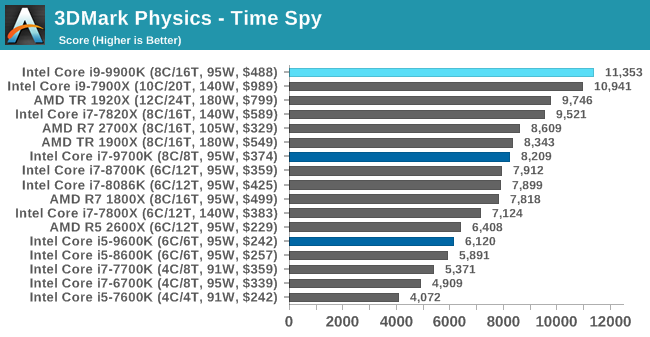

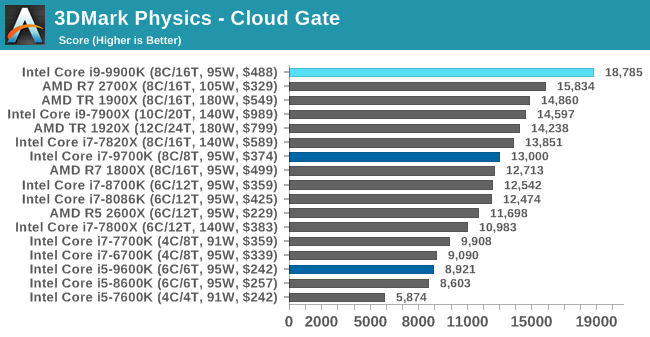

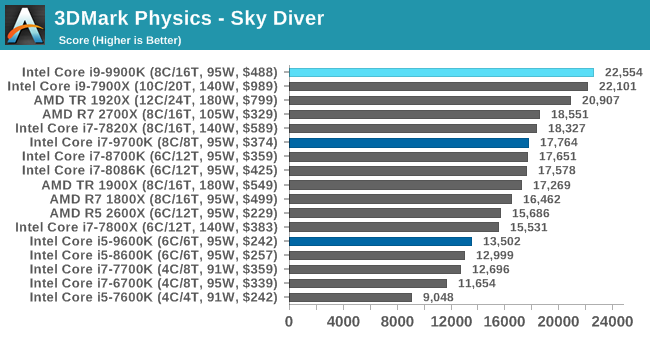

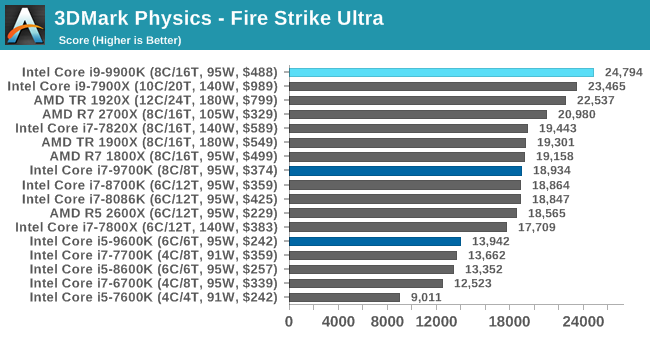

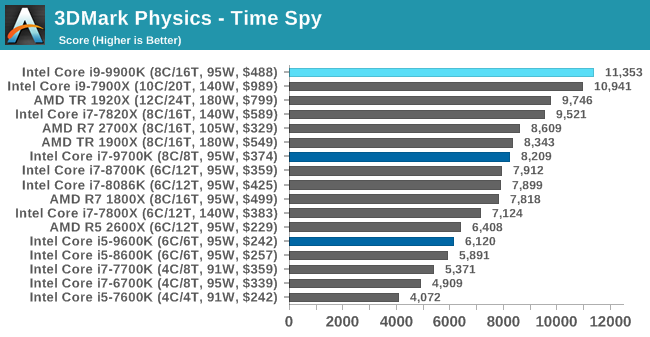

Alongside PCMark is 3DMark, Futuremark (UL)'s set of gaming tests. Each gaming test consists of one or two GPU-heavy scenes plus a physics test, depending on when the test was written and which platform it targets. The main tests, in order of increasing complexity, are Ice Storm, Cloud Gate, Sky Diver, Fire Strike and Time Spy.

Some of the subtests offer other variants, such as Ice Storm Unlimited (designed for mobile platforms, with off-screen rendering) or Fire Strike Ultra (designed for high-performance 4K systems, with many added features). It is worth noting that Time Spy currently has an AVX-512 mode (which we may use in the future).

For our tests, we send the results of each physics test to Bench, but for the review we follow the results of the most demanding scenes: Ice Storm Unlimited, Cloud Gate, Sky Diver, Fire Strike Ultra and Time Spy.

The older Ice Storm test did not like the new Core i9-9900K, pushing it behind the R7 1800X. In the more advanced PC-oriented tests, the 9900K wins. The lack of HT keeps the other two processors in the lineup from posting high results.

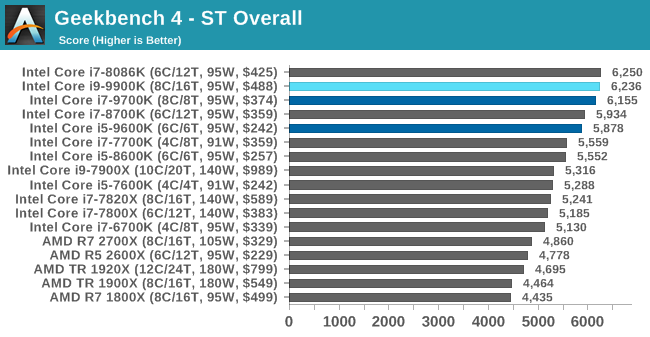

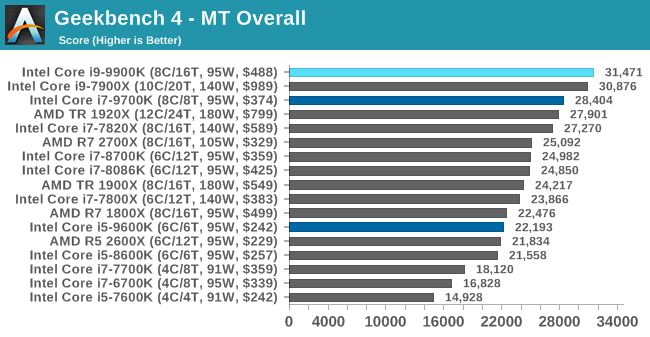

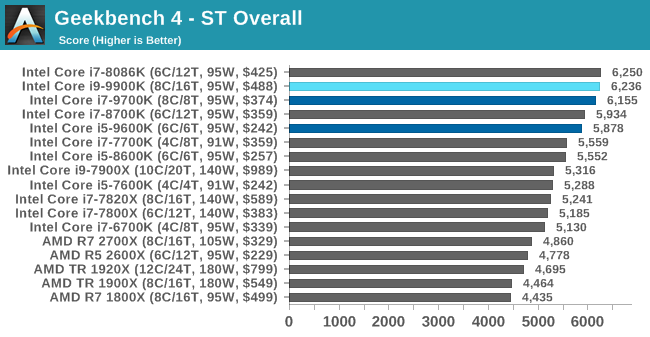

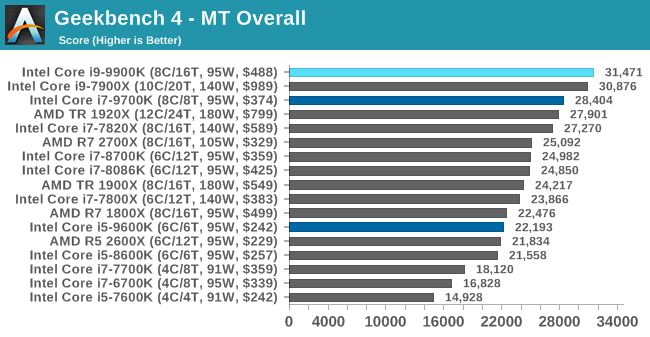

A common tool for cross-platform testing across mobile devices, PCs and Macs, GeekBench 4 is the quintessential synthetic system test, using a range of algorithms that demand peak throughput. Tests include encryption, compression, fast Fourier transform, memory operations, n-body physics, matrix operations, histogram manipulation, and HTML parsing.

I include this test because of the popularity of the request, although its results are very much synthetic. Many users often attach great importance to its results due to the fact that it is compiled on different platforms (albeit by different compilers).

We write evaluations of the main subtests (Crypto, Integer, Floating Point, Memory) into our database of test results, but for review we publish only general single-threaded and multi-threaded results.

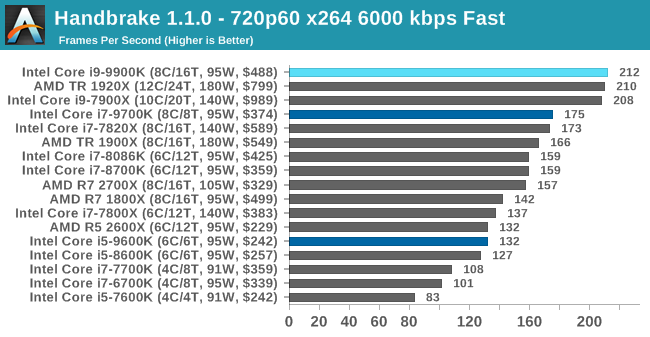

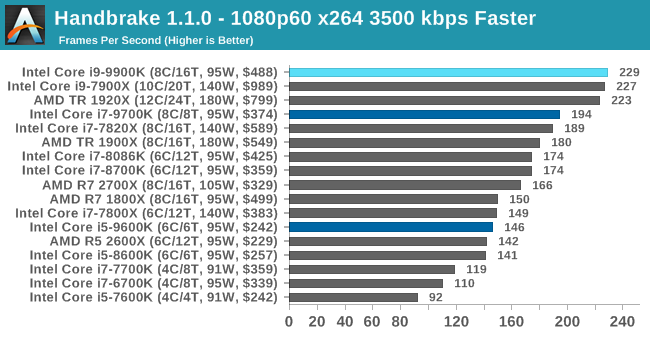

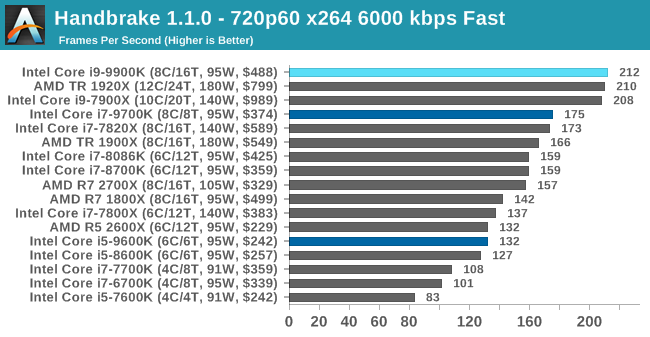

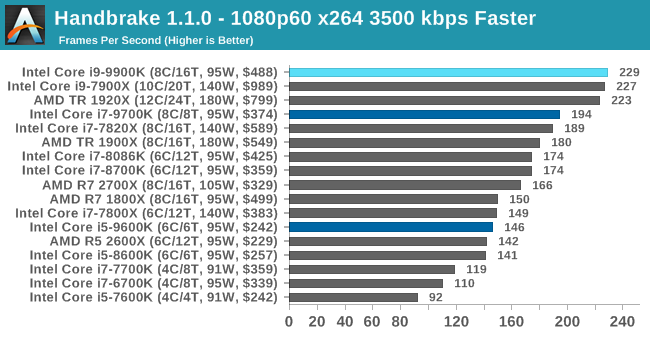

With the growing number of streams, vlogs and video content in general, encoding and transcoding tests are becoming increasingly important. Not only are more and more home users and gamers converting video files and video streams, but servers that process data streams also need on-the-fly encryption, as well as compression and decompression of logs. Our encoding tests are aimed at such scenarios and take community feedback into account to provide the most relevant results.

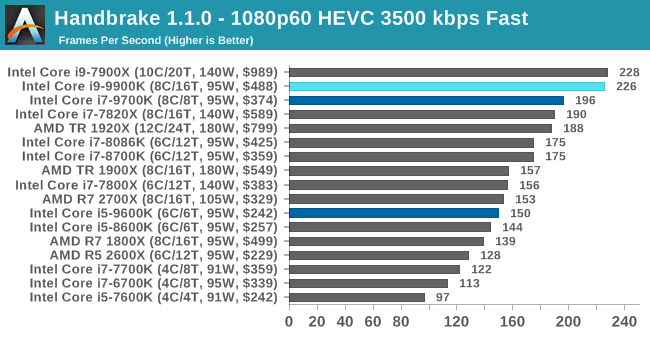

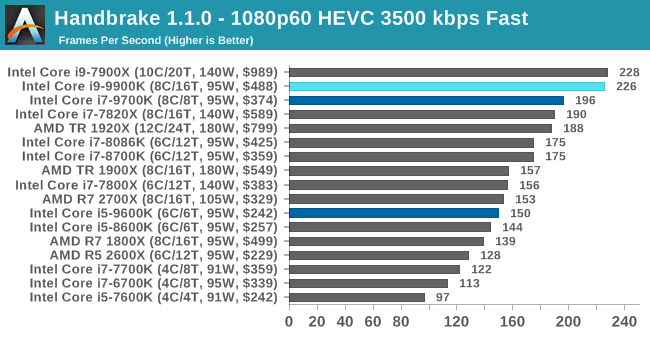

A popular open source tool, Handbrake is anything-to-anything video conversion software that, in a sense, serves as a reference point. The danger here lies in version numbers and optimization. For example, the latest versions of the software can take advantage of AVX-512 and OpenCL to accelerate certain types of transcoding and certain algorithms. The version we use is a pure CPU workload with standard transcoding options.

We split Handbrake into several tests, using a native 1080p60 recording from a Logitech C920 webcam (essentially a stream recording). The recording is converted to two streaming formats and one archival format. The output parameters used:

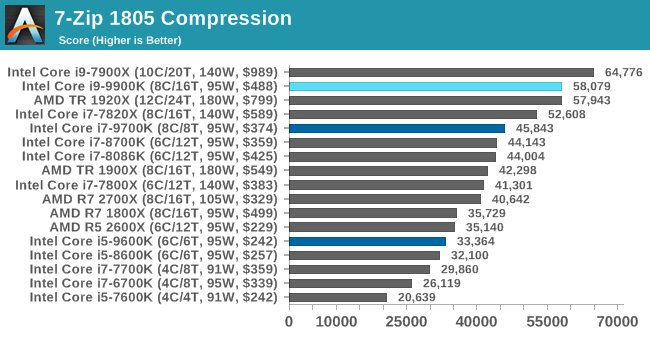

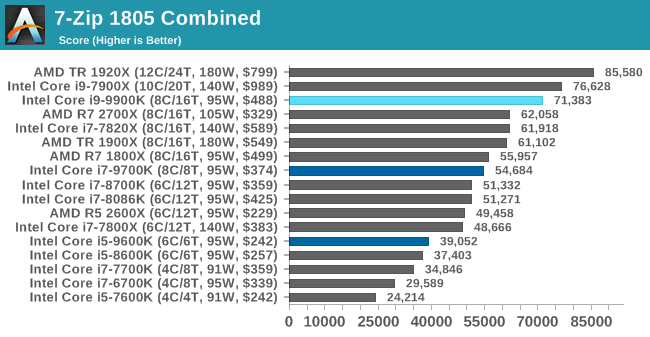

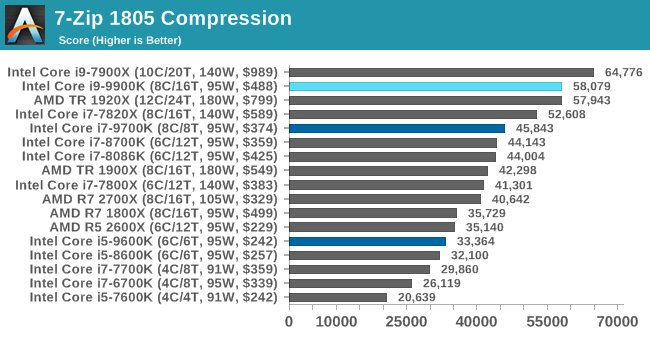

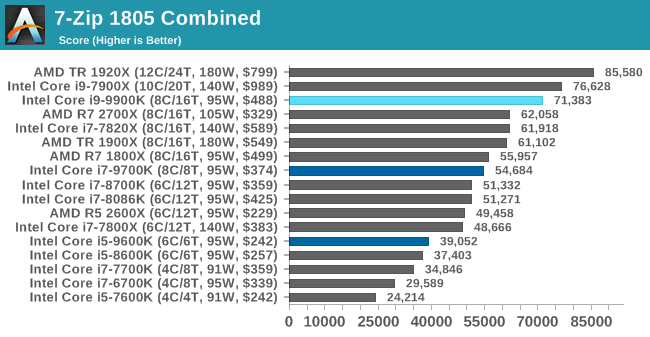

Of all our compression / decompression tests, 7-zip is the most requested, and it has a built-in benchmark. Our test suite includes the latest version of the software, and we run the benchmark from the command line. The compression and decompression results are reported as a single combined score.

This test clearly shows that modern multi-die processors have a large performance gap between compression and decompression: they do well in one and badly in the other. In addition, we are actively discussing how the Windows scheduler places each thread. When we have more results, we will be happy to share our thoughts on the matter.

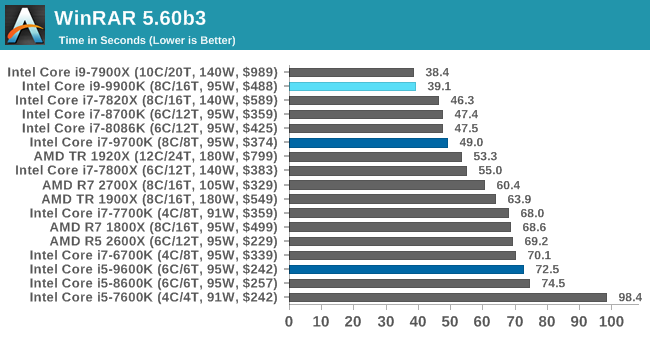

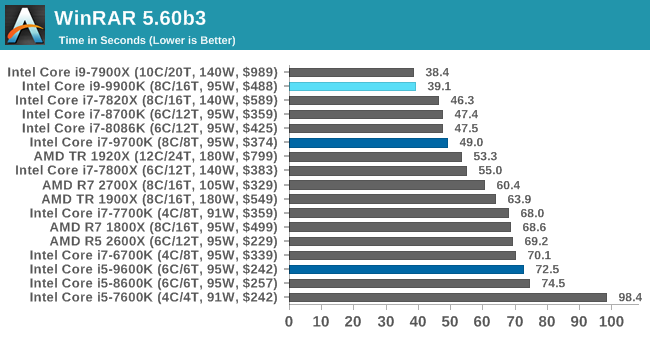

When I need a compression tool, I usually choose WinRAR. Many users of my generation were using it more than two decades ago. The interface has not changed much, although the integration with Windows right-click commands is a very nice plus. It has no built-in benchmark, so we compress a directory containing more than thirty 60-second video files and 2,000 small web files at the normal compression rate.

WinRAR is variably multithreaded and sensitive to caching, so in our test we run it 10 times and take the average of the last five runs, so that the result reflects processor performance alone.

A number of platforms, especially mobile devices, encrypt file systems by default to protect their contents. Windows-based devices often use BitLocker or third-party software. In our AES encryption test, we use the benchmark built into the discontinued TrueCrypt, which tests several encryption algorithms directly in memory.

The data we take from this test is the combined AES encryption / decryption performance, measured in gigabytes per second. The software uses AES instructions if the processor supports them, but does not use AVX-512.

Because they focus on low-end or small form factor systems, web tests are usually difficult to standardize. Modern web browsers update frequently, with no option to disable the updates, so it is hard to maintain a common platform. The rapid pace of browser development means that versions (and performance figures) can change from week to week. Despite this, web tests are often an important indicator for users: much of modern office work revolves around web applications, especially email and office apps, as well as interfaces and development environments. Our web test suite includes several industry standard tests, as well as several popular but somewhat outdated ones.

We also included our outdated, but still popular tests in this section.

The company behind the XPRT test suites, Principled Technologies, recently released its newest web test, and instead of adding the release year to the name, simply called it "3". This newest test (at least for now) builds on its predecessors: user interaction tests, office computing, graphing, list sorting, HTML5, image manipulation, and in some cases even AI tests.

For our benchmark, we run the standard test, which goes through the benchmark list seven times and gives a final result. We run this test four times and take the average.

An older version of WebXPRT is the 2015 edition, which focuses on a slightly different set of web technologies and frameworks that are in use today. It is still a relevant test, especially for users working with web applications that are not the latest on the market, and there are many such users. Web framework development moves very fast and has high turnover. Frameworks are quickly developed, built into applications, used, and then the developers immediately move on to the next one. Adapting an application to a new framework is a difficult task, especially with development cycles this fast. For this reason, many applications are “stuck in time” and remain relevant to users for many years.

As in the case of WebXPRT3, the main benchmark runs the control set seven times, displaying the final result. We repeat this four times, display the average and show the final results.

Our newest web test is Speedometer 2, which runs through a variety of JavaScript frameworks to do just three simple things: build a list, enable each item in the list, and delete the list. All the frameworks implement the same visual cues, but, obviously, they do it in different ways.

Our test passes the entire list of frameworks and gives the final score called “rpm”, one of the internal benchmark indicators. We display this figure as the final result.

A popular web test for several years, but no longer updated, is Google's Octane. Version 2.0 performs a couple of dozen compute-related tasks, such as regular expressions, cryptography, ray tracing, emulation, and solving the Navier-Stokes equations.

The test gives each subtest a score and returns the geometric mean as the final result. We run the full benchmark four times and average the final results.
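To illustrate why a geometric mean is a sensible way to combine subtest scores, here is a small sketch. The scores are made-up values, not Octane output, and the helper is written out by hand for clarity instead of mirroring the benchmark's own code.

```python
import math


def geometric_mean(scores):
    """Return the n-th root of the product of n positive scores."""
    if not scores or any(s <= 0 for s in scores):
        raise ValueError("scores must be a non-empty list of positive numbers")
    # Summing logarithms avoids overflow when many large scores are multiplied.
    return math.exp(sum(math.log(s) for s in scores) / len(scores))


# Hypothetical subtest scores: one subtest is 100x faster than the other.
scores = [100, 10_000]
print(geometric_mean(scores))     # close to 1000
print(sum(scores) / len(scores))  # arithmetic mean: 5050.0
```

With these made-up scores, the arithmetic mean is dominated by the single large value, while the geometric mean weights a given percentage change in any subtest equally.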

Even older than Octane is Kraken, this time developed by Mozilla. This is an old test that performs relatively monotonous computational work, such as audio processing or image filtering. Kraken seems to produce highly variable results depending on the browser version, as it is a test that browsers optimize for heavily.

The main benchmark passes through each of the subtests ten times, and returns the average completion time for each cycle in milliseconds. We run a full benchmark four times, and measure the average result.

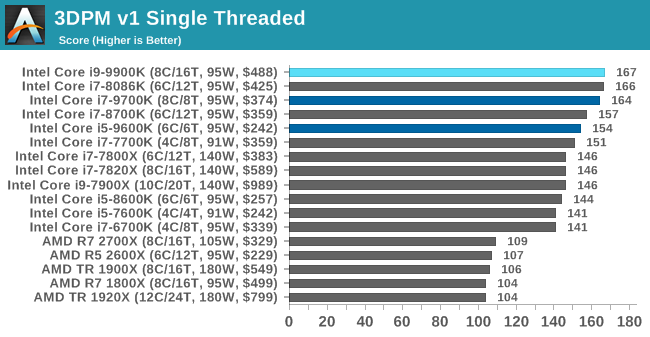

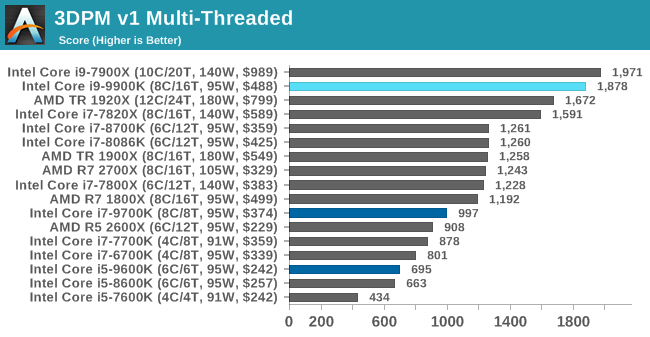

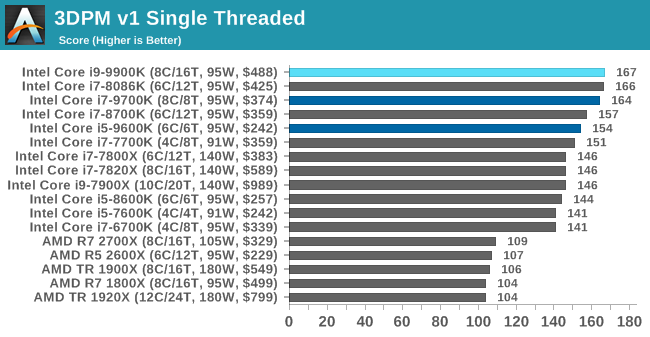

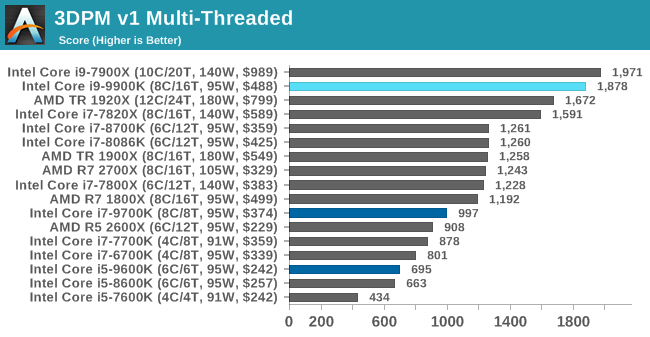

The first “legacy” test in the suite is the first version of our 3DPM test. This is the ultimate naive version of the code, as if it had been written by a scientist with no knowledge of how computer hardware, compilers, or optimization work (which, at the very beginning, it was). The test represents a large body of scientific modeling in the wild, where getting an answer matters more than the speed of the calculation (getting a result in 4 days is acceptable if it is correct; spending a year learning to program in order to get the result in 5 minutes is not).

In this version, the only real optimization was in the compiler flags (-O2, -fp:fast): compiling in release mode and enabling OpenMP in the main compute loops. The loops were not tuned for function size, and the most serious slowdown is false sharing in the caches. The code also has long dependency chains based on random number generation, which reduces performance on some compute micro-architectures.

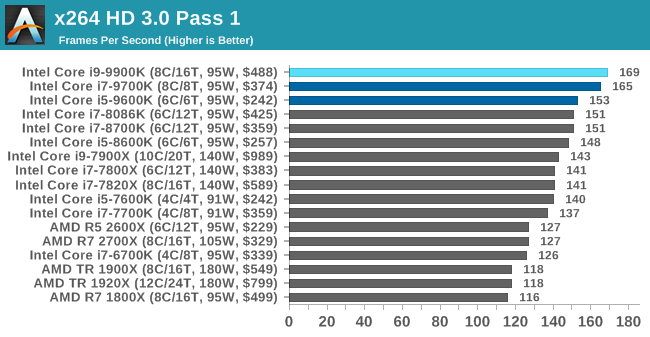

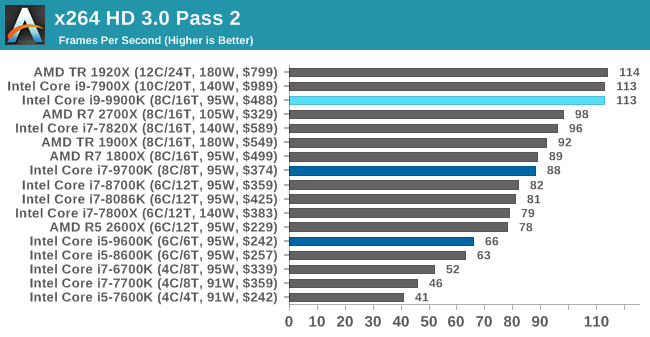

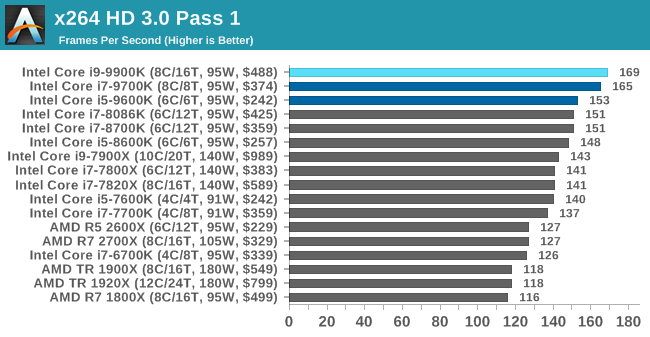

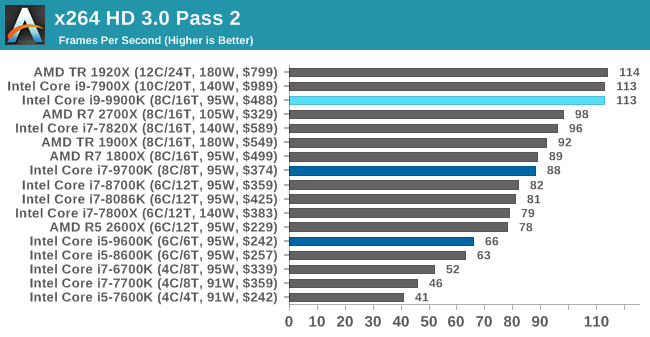

This transcoding test is very old; AnandTech used it back in the days of the Pentium 4 and Athlon II processors. In it, a standardized 720p video is transcoded with a two-pass conversion, and the benchmark reports the frames per second of each pass. The test is single-threaded, and on some architectures we run into the IPC (instructions per clock) limit.

System tests

The “System Tests” section focuses on testing under real-world conditions faced by the consumer, with a slight bias toward throughput. In this section, we cover application load time, image processing, simple physics, emulation, neural simulation, optimized computation, and 3D model development, using readily available and customizable software. Although some of the simpler tests overlap with what large packages such as PCMark can do (we publish those values in the office test section), it is still worth considering different perspectives. In all tests we will explain in detail what is being tested and exactly how we test it.

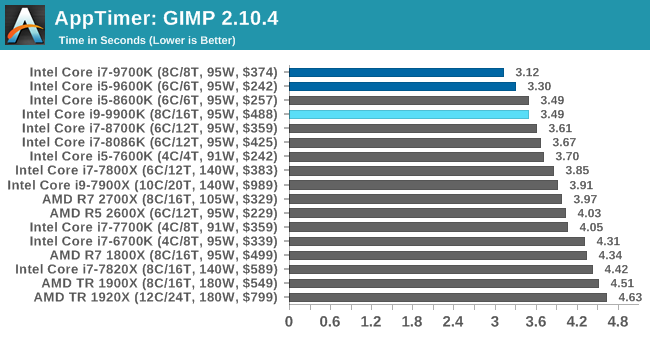

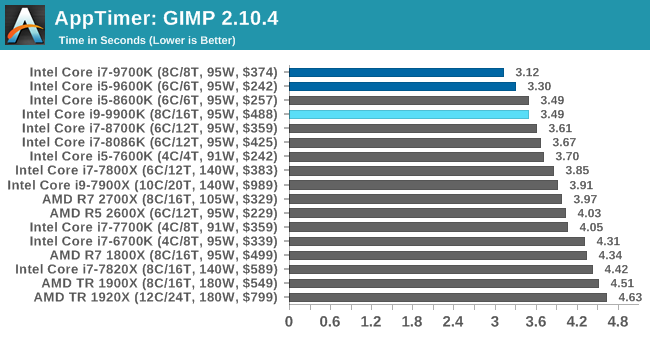

Application Load: GIMP 2.10.4

One of the most important aspects of user experience and workflow is how quickly the system responds. A good test of this is application load time. Most programs nowadays, when stored on an SSD, load almost instantly, but some office tools require assets to be preloaded before they are ready to go. Most operating systems also use caching, so software that is loaded frequently (web browser, office tools) can be initialized much faster.

In the previous test suite, we checked how long it took to load a large PDF document in Adobe Acrobat. Unfortunately, this test was a nightmare to script and refused to move to Win10 RS3 without a fight. In the meantime, we found an application that can automate this test, and we decided to use it with GIMP, a popular, free and open source photo editor that is the main alternative to Adobe Photoshop. We configured it to load a large 50 MB design template and performed the load 10 times, with 10 seconds between runs. Because of caching, the first 3-5 results are often slower than the rest, and the time to cache can be inconsistent, so we take the average of the last five results.
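The averaging rule described above (ten runs, keep only the last five) can be sketched as follows. The function name and the timings are hypothetical; the review does not publish its automation script.

```python
from statistics import mean


def steady_state_load_time(times_s, keep_last=5):
    """Average the last `keep_last` load times, discarding cache warm-up runs."""
    if len(times_s) < keep_last:
        raise ValueError("not enough runs to average")
    return mean(times_s[-keep_last:])


# Hypothetical timings (seconds) for ten consecutive loads; the first few are
# slower because the operating system's file cache is still cold.
runs = [6.0, 5.0, 4.0, 3.5, 3.5, 3.0, 3.0, 3.0, 3.0, 3.0]
print(steady_state_load_time(runs))
```

Dropping the early runs trades realism on a cold start for repeatability, which is what matters when comparing processors.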

Loading an application is usually limited to a single thread, but evidently at some point it also becomes limited by core resources. With access to more resources per thread (in a non-HT environment), the 8C / 8T and 6C / 6T processors outperform both 5.0 GHz processors in our testing.

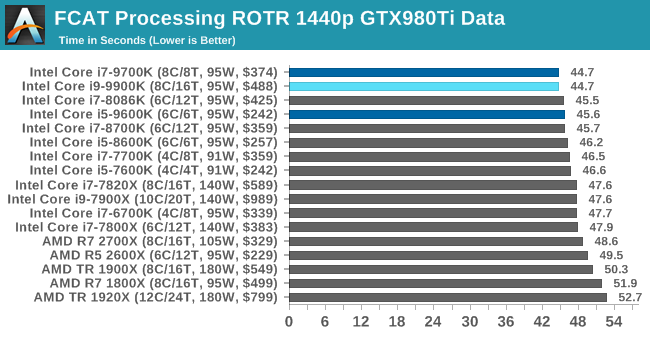

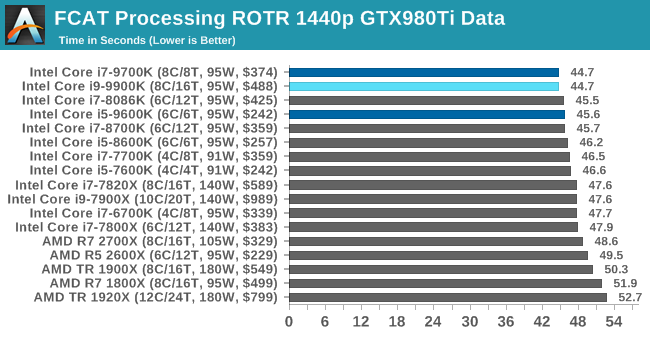

FCAT: image processing

The FCAT software was developed to help detect micro-stuttering, dropped frames and runt frames in graphics tests when two video cards are combined to render a scene. Because of game engines and graphics drivers, not all GPU combinations behaved ideally, which led to software that captures a color for each rendered frame and dynamically records the raw data using a video capture device.

The FCAT software takes the recorded video, in our case 90 seconds of 1440p footage from Rise of the Tomb Raider, and converts the color data into frame time data, so the system can display the “observed” frame rate and correlate it with the power consumption of the video accelerators. Because of how quickly it was put together, this test is single-threaded. We run the process and take the time to completion as the result.

FCAT is another scenario limited by single-threaded performance, and the new 9th generation processors seem to do well here. The 9700K and 9900K posted times within milliseconds of each other.

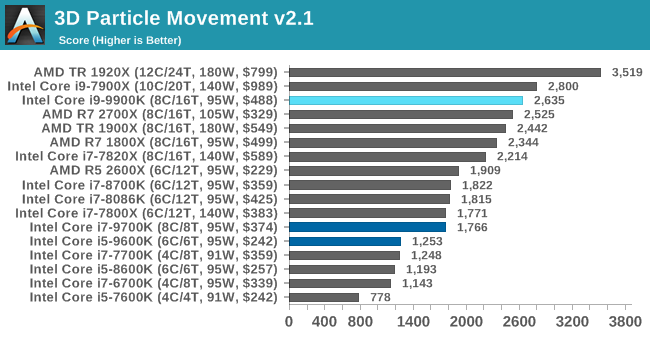

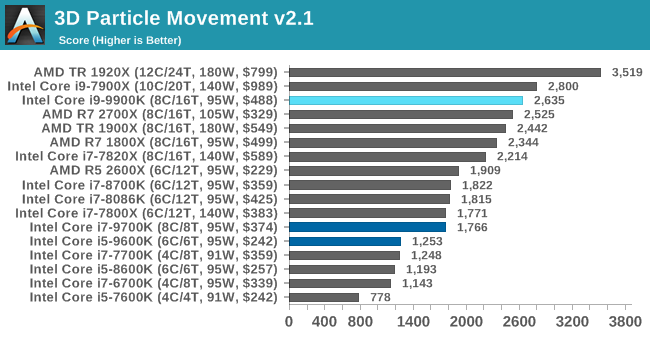

3D Particle Movement v2.1: Brownian Motion

Our 3DPM test is a custom benchmark designed to simulate six different algorithms for the movement of particles in three-dimensional space. The algorithms were developed as part of my PhD thesis; they ultimately work best on a GPU, but they give a good idea of how instruction streams are interpreted by different micro-architectures.

A key part of the algorithms is random number generation: we use relatively fast generation, which ends up creating dependency chains in the code. The main update over the primitive first version of this code is that the false sharing problem in the caches, which was the main bottleneck, has been solved. We are also considering using the AVX2 and AVX512 versions of this test in future reviews.

For this test, we run a stock set of particles through the six algorithms for 20 seconds each, with 10-second pauses, and report the total rate of particle movement in millions of operations (movements) per second.
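To give a feel for what a "movement" and a "millions of movements per second" score mean, here is a rough sketch of a single particle-movement algorithm. It is not one of the six 3DPM algorithms, whose code is not shown in the review; the uniform-direction unit step, the function names, and the fixed step count (the real test runs for a fixed time instead) are all assumptions made for illustration.

```python
import math
import random
import time


def random_unit_step(rng):
    """Return a unit vector with a uniformly random direction in 3D."""
    z = rng.uniform(-1.0, 1.0)             # uniform height on the sphere
    phi = rng.uniform(0.0, 2.0 * math.pi)  # uniform angle around the z axis
    r = math.sqrt(1.0 - z * z)
    return r * math.cos(phi), r * math.sin(phi), z


def run_benchmark(particles, steps, seed=0):
    """Move every particle `steps` times; return positions and Mmovements/s."""
    rng = random.Random(seed)
    positions = [[0.0, 0.0, 0.0] for _ in range(particles)]
    start = time.perf_counter()
    for _ in range(steps):
        for p in positions:
            # Each movement depends on freshly generated random numbers,
            # which is where the dependency chains come from.
            dx, dy, dz = random_unit_step(rng)
            p[0] += dx
            p[1] += dy
            p[2] += dz
    elapsed = max(time.perf_counter() - start, 1e-9)
    return positions, particles * steps / elapsed / 1e6


positions, rate = run_benchmark(particles=1000, steps=100)
print(f"{rate:.2f} million movements per second")
```

Pure Python is far slower than the compiled benchmark, so the absolute number is meaningless; the sketch only shows how the score is derived from work done and elapsed time.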

Based on the non-AVX code, the 9900K shows slightly better IPC and frequencies compared to the R7 2700X, although in reality it is not as large a percentage jump as we might have expected. Non-HT processors lose in this test.

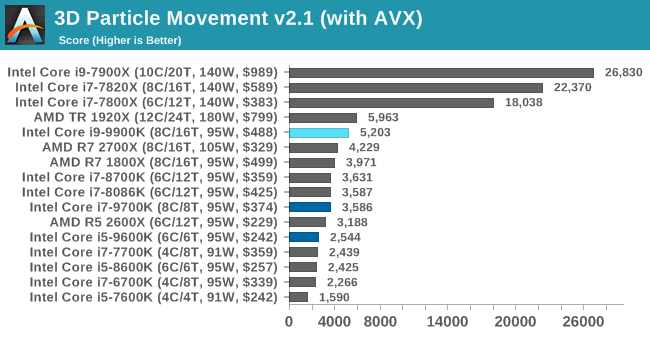

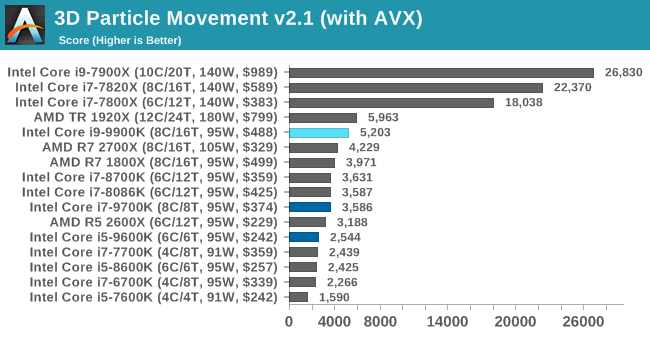

But when we use AVX2 / AVX512, the Skylake-X processors are in their element. The 9900K is now far ahead of the R7 2700X, by even more than we expected, and the Core i7-9700K also pulls ahead.

Dolphin 5.0: console emulation

One of the most requested tests in our suite is console emulation. Being able to pick up a game from an older system and run it is very attractive, and it depends on the effort put into the emulator: it takes a much more powerful x86 system to accurately emulate an old non-x86 console, especially if the code for that console was written to account for certain physical flaws and hardware bugs.

For our test, we use the popular Dolphin emulation software and run a compute project through it to determine how closely our processors can emulate a console. In this test, a Nintendo Wii would take about 1050 seconds.

Dolphin is another scenario limited by single-thread performance, so Intel processors have historically been among the leaders. Here the 9900K beats the 9700K by just one second.

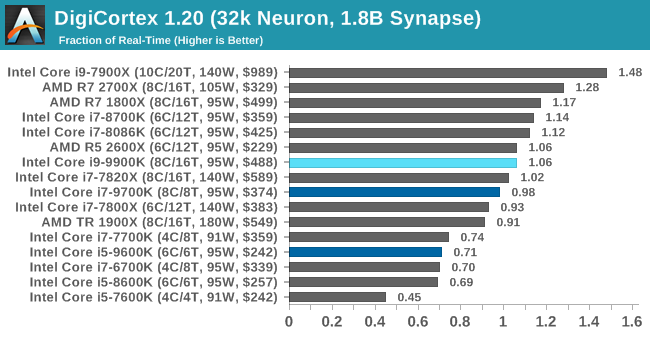

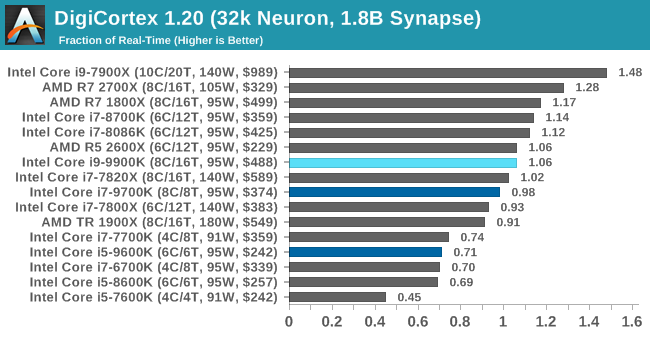

DigiCortex 1.20: Modeling the Brain of a Sea Slug

This benchmark was originally designed to simulate and visualize the activity of neurons and synapses in the brain. The software comes with various preset modes, and we chose a small benchmark that runs a brain simulation of 32 thousand neurons / 1.8 billion synapses, equivalent to the brain of a sea slug.

We report the results as the ability to simulate the data in real time, so any result above one is suitable for real-time work. Of the two modes, a “non-firing” mode that is heavy on DRAM and a “firing” mode that loads the processor, we choose the latter. Despite this choice, the test is still affected by DRAM speed.
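One way to read a score where "above one" means real time is as the ratio of simulated time to wall-clock time. The sketch below assumes that interpretation; the function name and the example numbers are hypothetical and are not DigiCortex output.

```python
def real_time_factor(simulated_s: float, wall_clock_s: float) -> float:
    """Ratio of simulated time to elapsed wall-clock time (assumed metric)."""
    if wall_clock_s <= 0:
        raise ValueError("wall-clock time must be positive")
    return simulated_s / wall_clock_s


# Hypothetical run: 10 s of brain activity simulated in 8 s of real time.
factor = real_time_factor(10.0, 8.0)
print(factor, "real-time capable" if factor >= 1.0 else "slower than real time")
```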

DigiCortex depends heavily on processor performance and memory bandwidth, yet it looks like the 6-core Ryzen can easily compete with the 8-core 9900K. The 8700K / 8086K seem to handle this test better.

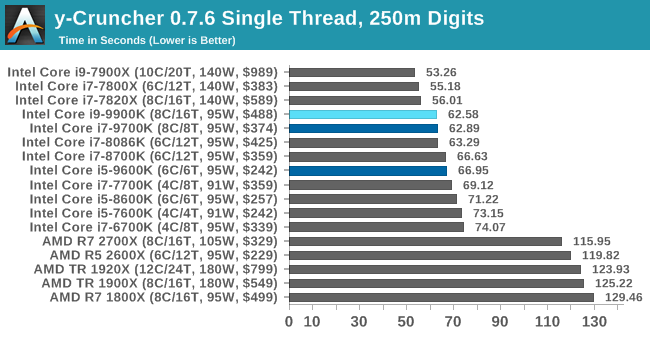

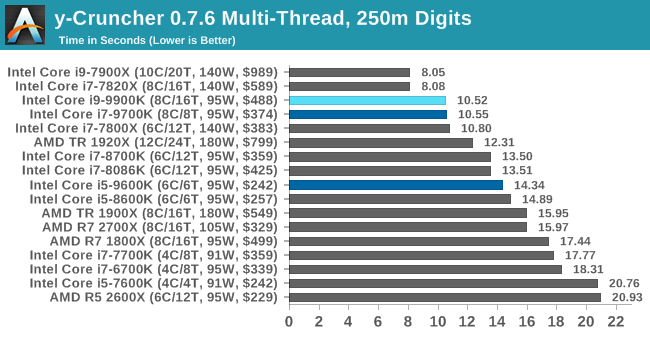

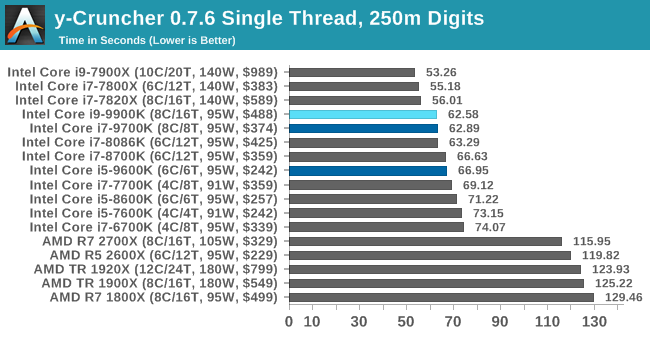

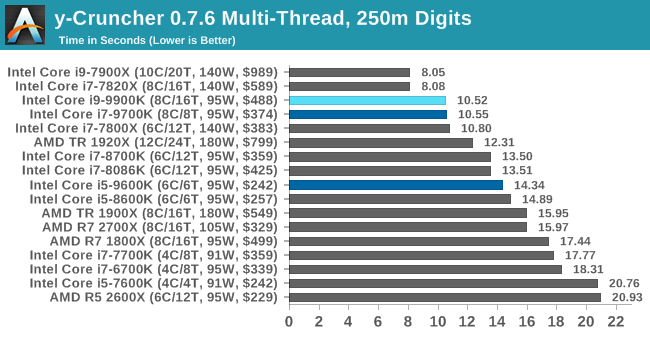

y-Cruncher v0.7.6: Calculations Optimized for Micro-Architecture

I had once heard of y-Cruncher as a tool for calculating various mathematical constants. But after I started talking to its developer, Alex Yee, a researcher from NWU and now a software optimization developer, I realized that he had optimized the software extraordinarily well to get the best performance. Naturally, any simulation that takes 20+ days will benefit from a 1% performance boost! Alex started y-Cruncher as a high-school project, but the project is now kept up to date: Alex constantly works on it to take advantage of the latest instruction sets, even before they are available in hardware.

For our test, we run y-Cruncher v0.7.6 through all of its optimized binary variants, single-threaded and multi-threaded, including the binaries optimized for AVX-512. The test is to calculate 250 million digits of Pi, and we use both the single-threaded and multi-threaded versions of this test.

Since y-Cruncher benefits from AVX2 / AVX-512, the Skylake-X processors are back in their comfort zone. In multi-threaded mode, the 9900K / 9700K need all 8 cores to overtake a 6-core processor that supports AVX-512.

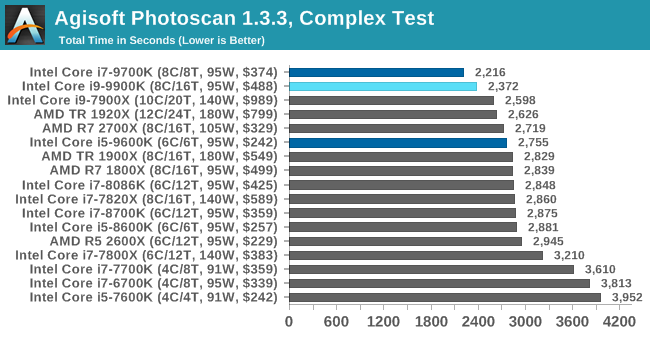

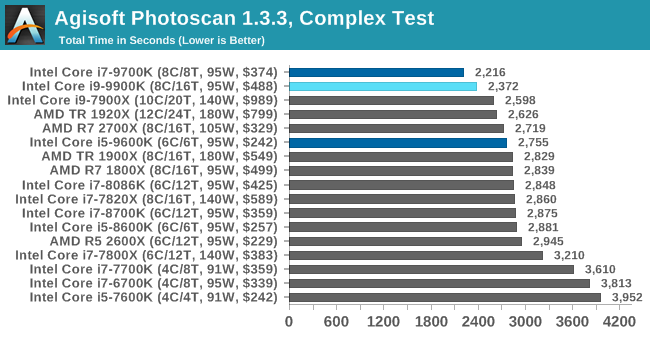

Agisoft Photoscan 1.3.3: 2D image conversion to 3D model

One of the ISVs we have been working with for several years is Agisoft. The company develops software called PhotoScan, which converts a series of 2D images into a 3D model. It is an important tool in model development and archiving, and relies on a number of single-threaded and multi-threaded algorithms to get from one end of the computation to the other.

In our test, we take version 1.3.3 of the software with a large data set: 84 photographs of 18 megapixels each. We run the test on a fairly quick set of algorithms, but one that is still more stringent than our 2017 test. We report the total time to complete the process.

Photoscan is a workload that benefits most from high bandwidth and single-threaded performance, and in this case the presence of HT is a hindrance.

Rendering Tests

In a professional environment, rendering is often the primary concern for processor workloads. It is used in various formats, from 3D rendering to rasterization, in tasks such as games or ray tracing, and relies on the software's ability to manage meshes, textures, collisions, aliasing, and physics (in animation). Most renderers offer CPU code paths, while a few use GPUs, and select environments use FPGAs or dedicated ASICs. For large studios, however, processors are still the main hardware.

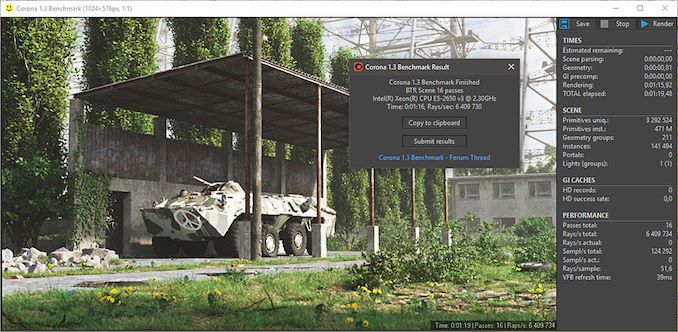

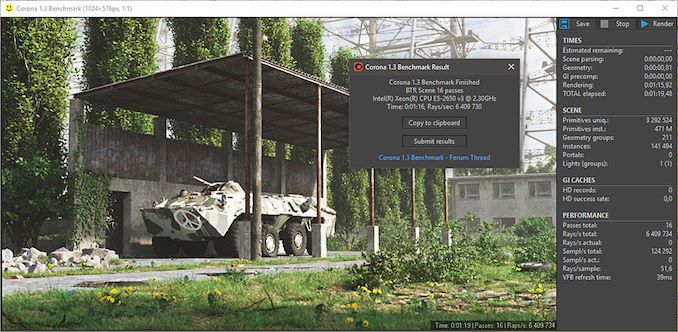

Corona 1.3: Performance Render

An advanced performance-oriented renderer for software such as 3ds Max and Cinema 4D, Corona provides a benchmark that renders a generated scene as a standard under version 1.3 of the software. Normally, the GUI implementation of the benchmark shows the scene being built and lets the user see the result as a “time to complete.”

We contacted the developer, who gave us a command-line version of the benchmark that outputs results directly. Instead of reporting the time to build the scene, we report the average number of rays per second over six runs, since a ratio of work done to units of time is easier to interpret.

Corona is a fully multi-threaded test, so processors without HT lag a little. The Core i9-9900K shoots to the top, beating AMD's 8-core parts by a 25 percent margin and trailing only the 12-core Threadripper.
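The reported metric can be sketched as follows: each run yields a ray count and a duration, which are turned into rays per second and then averaged across runs. The helper names and the sample values below are hypothetical; the actual output format of the command-line benchmark is not described here.

```python
from statistics import mean


def rays_per_second(rays_traced, elapsed_seconds):
    """Throughput of a single run."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return rays_traced / elapsed_seconds


def average_rays_per_second(runs):
    """Mean throughput over a list of (rays_traced, elapsed_seconds) runs."""
    return mean([rays_per_second(rays, secs) for rays, secs in runs])


# Hypothetical data for six runs of the same scene.
six_runs = [(3.0e8, 100.0), (3.0e8, 98.0), (3.0e8, 101.0),
            (3.0e8, 99.0), (3.0e8, 100.0), (3.0e8, 102.0)]
print(f"{average_rays_per_second(six_runs):,.0f} rays/s")
```

Unlike a completion time, a higher value is better here, which keeps the chart direction consistent with most other throughput tests.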

Blender 2.79b: 3D Creation Suite

A high-end rendering tool, Blender is an open source product with a multitude of settings and configurations, used by many high-end animation studios around the world. The organization recently released a Blender benchmark package, a couple of weeks after we had narrowed down the Blender test for our new suite, but that benchmark can take more than an hour. To get our results, we run one of the subtests in that package from the command line, the standard bmw27 scene in “CPU only” mode, and measure the time to complete the render.

Blender has an eclectic mix of requirements, from memory bandwidth to raw performance, but, as in Corona, non-HT processors lag a bit. The high frequency of the 9900K lifts it above the 10C Skylake-X and the AMD 2700X, but not above the 1920X.

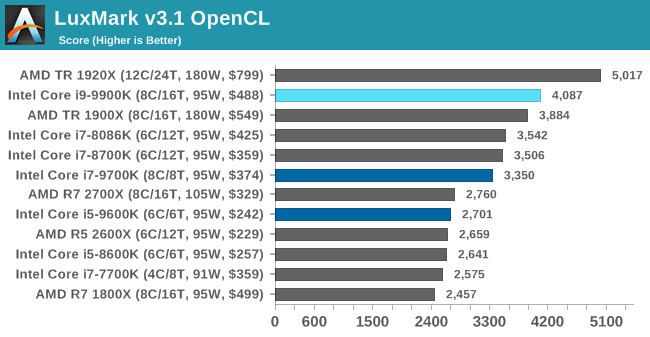

LuxMark v3.1: LuxRender via various code paths

As stated above, there are many different ways to process rendering data: CPU, GPU, accelerator, and others. In addition, there are many frameworks and APIs to program against, depending on how the software will be used. LuxMark, a benchmark built on the LuxRender engine, offers several different scenes and APIs.

(Screenshot taken from the Linux version of LuxMark)

In our test, we run the simple “Ball” scene on both the C++ and OpenCL code paths, but in CPU mode. The scene starts with a rough render and slowly improves in quality over two minutes, giving a final result in what is essentially average kilo-rays per second.

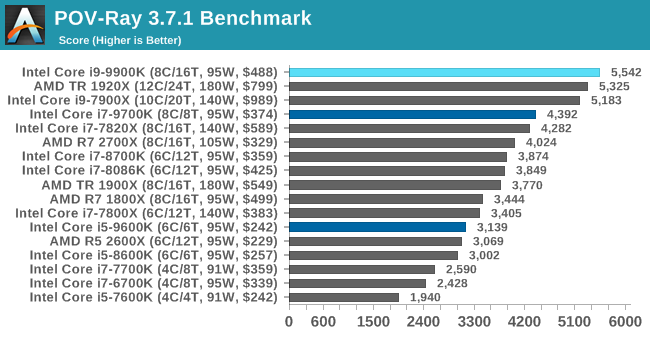

POV-Ray 3.7.1: ray tracing

The Persistence of Vision ray tracing engine is another well-known benchmarking tool. It was dormant for a while until AMD released its Zen processors, when suddenly both Intel and AMD started pushing code into the main branch of the open source project. For our test, we use the built-in all-core benchmark, called from the command line.

Office tests

The Office Test Suite focuses on industry-standard benchmarks that target office workflows. These are more synthetic tests, but we also check compiler performance in this section. For users who need to evaluate hardware as a whole, these are usually the most important criteria.

PCMark 10: Industry Standard

Futuremark, now known as UL, has been developing tests that have become industry standards for two decades. Its latest system test suite is PCMark 10, in which several tests have been improved over PCMark 8 and more attention has been paid to OpenCL, specifically for use cases such as video streaming.

PCMark splits its scores into approximately 14 different areas, including application launch, web pages, spreadsheets, photo editing, rendering, video conferencing and physics. We publish all of this data in our Bench database, but the key indicator for the current review is the overall score.

Here, where many tests are mixed, the new processors from Intel occupy the top three positions, in order. Even the i5-9600K is ahead of the i7-8086K.

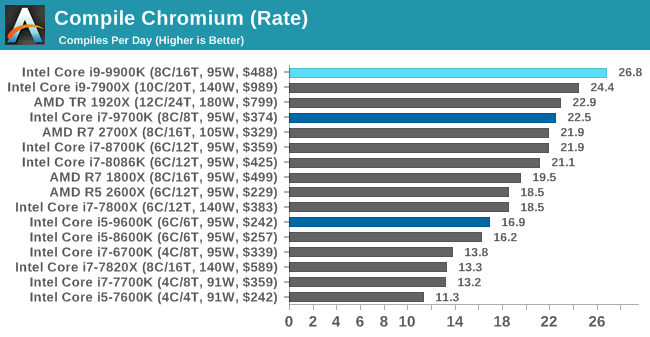

Chromium Compile: Windows VC++ Compiling Chrome 56

A large number of AnandTech readers are software engineers who look at how the hardware performs. Although compiling the Linux kernel is the “standard” for reviewers who compile often, our test is a bit more varied: we use the Windows instructions to compile Chrome, specifically the Chrome 56 build from March 2017, as that was the current version when we built the test. Google gives detailed instructions on how to compile under Windows after downloading 400,000 files from the repository.

In our test, following Google's instructions, we use the MSVC compiler, with ninja managing the compilation. As you would expect, this is a variably threaded test with variable DRAM requirements that benefits from faster caches. The result of our test is the time taken to compile, which we convert to the number of compiles per day.

The high all-core turbo frequencies seem to work well in our compilation test.
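The conversion from compile time to compiles per day is simple arithmetic: divide the number of seconds in a day by the seconds one compile takes. A minimal sketch, with a hypothetical function name and a made-up sample time:

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def compiles_per_day(compile_seconds):
    """How many back-to-back compiles of this length fit into 24 hours."""
    if compile_seconds <= 0:
        raise ValueError("compile_seconds must be positive")
    return SECONDS_PER_DAY / compile_seconds


# Hypothetical example: a compile that takes exactly one hour.
print(compiles_per_day(3600))
```

Expressing the result as a rate means a higher number is better, and halving the compile time doubles the score.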

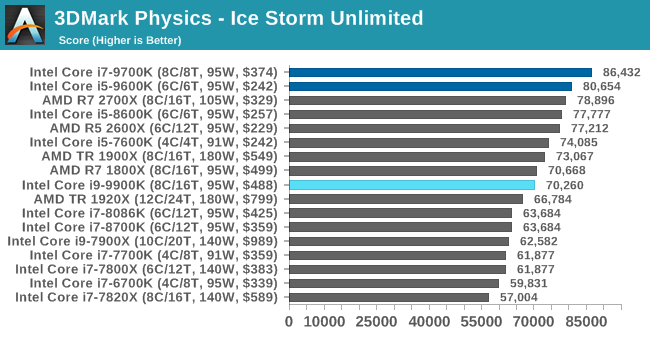

3DMark Physics: calculating physics in games

Alongside PCMark, Futuremark (UL) also offers 3DMark, a set of gaming tests. Each gaming test consists of one or two GPU-heavy scenes plus a physics test, depending on when the test was written and which platform it targets. The main tests, in order of increasing complexity, are Ice Storm, Cloud Gate, Sky Diver, Fire Strike and Time Spy.

Some of the subtests offer other options, such as Ice Storm Unlimited (designed for mobile platforms, with off-screen rendering), or Fire Strike Ultra (designed for high-performance 4K systems, with many added features). It is worth noting that Time Spy currently has an AVX-512 mode (which we may use in the future).

For our tests, we submit the results of each physics test to Bench, but for the review we look at the results of the most demanding scenes: Ice Storm Unlimited, Cloud Gate, Sky Diver, Fire Strike Ultra and Time Spy.

The older Ice Storm test did not favor the new Core i9-9900K, pushing it behind the R7 1800X. In the more advanced PC-oriented tests, the 9900K wins. The lack of HT keeps the other two processors in the lineup from posting high results.

GeekBench4: Synthetic Test

A common tool for cross-platform testing across mobile devices, PCs and Macs, GeekBench 4 is the ultimate exercise in synthetic testing, using a variety of algorithms that look for peak throughput. Tests include encryption, compression, fast Fourier transform, memory operations, n-body physics, matrix operations, histogram manipulation, and HTML parsing.

I include this test due to popular demand, although its results are very much synthetic. Many users attach great importance to its results because it is compiled for different platforms (albeit by different compilers).

We write evaluations of the main subtests (Crypto, Integer, Floating Point, Memory) into our database of test results, but for review we publish only general single-threaded and multi-threaded results.

Encoding Tests

With the growing number of streams, video blogs and video content in general, encoding and transcoding tests are becoming increasingly important. Not only are more and more home users and gamers converting video files and video streams, but servers that process data streams also need on-the-fly encryption, as well as compression and decompression of logs. Our encoding tests target such scenarios and take community feedback into account to provide the most relevant results.

Handbrake 1.1.0: streaming and archiving video transcoding

A popular open source tool, Handbrake is anything-to-anything video conversion software that, in a sense, serves as a reference point. The danger here lies in version numbers and optimization. For example, the latest versions of the software can take advantage of AVX-512 and OpenCL to speed up certain types of transcoding and certain algorithms. The version we use is pure CPU work with standard transcoding options.

We split Handbrake into several tests, using a native 1080p60 recording from a Logitech C920 webcam (essentially a stream recording). The recording is converted into two streaming formats and one archival format. The output settings used are:

- 720p60 at 6000 kbps

- 1080p60 at 3500 kbps, faster bit rate, faster setting, main profile

- 1080p60 HEVC at 3500 kbps variable bit rate, main setting

7-zip v1805: the popular open source archiver

Of all our compression / decompression tests, 7-zip is the most requested, and it has a built-in benchmark. Our test suite includes the latest version of the software, and we run the benchmark from the command line. The compression and decompression results are reported as a single combined score.

This test clearly shows that modern multi-die processors have a large performance gap between compression and decompression: they do well in one and badly in the other. In addition, we are actively discussing how the Windows scheduler places each thread. When we have more results, we will be happy to share our thoughts on the matter.

WinRAR 5.60b3: Archiver

When I need a compression tool, I usually choose WinRAR. Many users of my generation were already using it more than two decades ago. The interface has not changed much, although the integration with Windows right-click commands is a very nice plus. It has no built-in benchmark, so we compress a directory containing more than 30 60-second video files and 2000 small web files at the normal compression rate.

WinRAR is variably threaded and sensitive to caching, so in our test we run it 10 times and take the average of the last five runs, which means we are testing processor performance only.
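The "run 10 times, average the last five" procedure can be sketched as below. The idea is that the early runs warm up the caches, so only the later, steadier runs are averaged. The function name and the timings are hypothetical and only illustrate the calculation.

```python
from statistics import mean


def mean_of_last_runs(run_times, keep=5):
    """Average the last `keep` timings, discarding earlier warm-up runs."""
    if len(run_times) < keep:
        raise ValueError(f"need at least {keep} runs, got {len(run_times)}")
    return mean(run_times[-keep:])


# Hypothetical timings in seconds: the first runs are slower and noisier
# because the files are not yet cached.
timings = [41.2, 38.9, 36.5, 35.1, 34.8, 34.0, 34.2, 33.9, 34.1, 33.8]
print(f"{mean_of_last_runs(timings):.2f} s")
```

The same approach applies to any test in which caching makes the first few results inconsistent.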

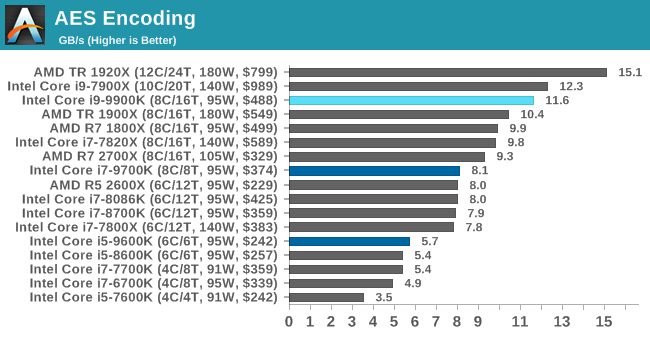

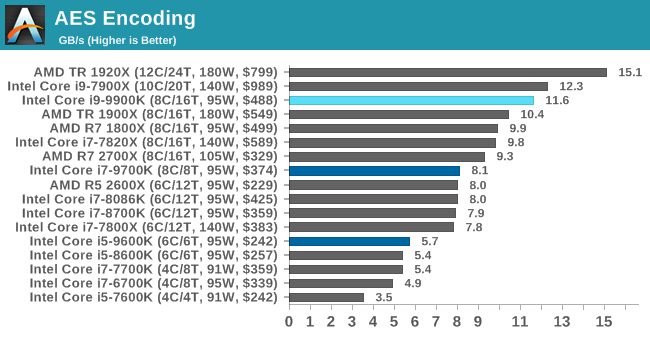

AES Encryption: File Protection

A number of platforms, especially mobile devices, encrypt filesystems by default to protect content. Windows-based devices often use BitLocker or third-party software. In the AES encryption test, we use the built-in benchmark of the discontinued TrueCrypt, which tests several encryption algorithms directly in memory.

The data we take from this test is the combined AES encryption / decryption performance, measured in gigabytes per second. The software uses AES instructions if the processor supports them, but does not use AVX-512.

Web Tests and Outdated Tests

Although they are more the focus of low-end and small form factor systems, web tests are notoriously difficult to standardize. Modern web browsers are updated frequently, often with no way to disable those updates, so it is difficult to maintain a common platform. The rapid pace of browser development means that versions (and performance figures) can change from week to week. Despite this, web tests are often an important indicator for users: much of modern office work revolves around web applications, especially email and office apps, as well as interfaces and development environments. Our web test suite includes several industry-standard tests, as well as several popular but somewhat outdated ones.

We also included our outdated, but still popular tests in this section.

WebXPRT 3: web tasks of the modern world, including AI

The company behind the XPRT test suites, Principled Technologies, recently released its newest web test, and instead of adding the release year to the name, simply called it “3”. This newest test (at least for now) builds on its predecessors: user interaction tests, office computing, graphing, list sorting, HTML5, image manipulation, and in some cases even AI tests.

For our benchmark, we run the standard test, which goes through the benchmark list seven times and produces a final score. We run this test four times and take the average.

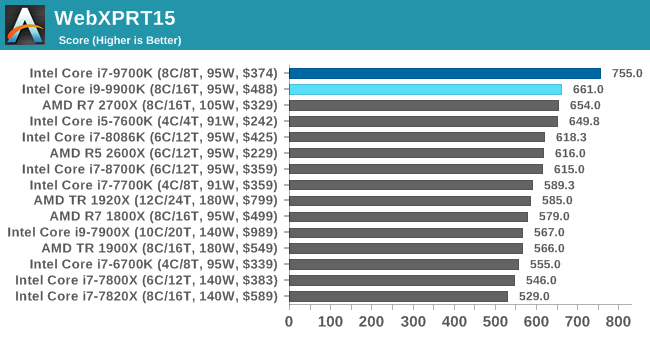

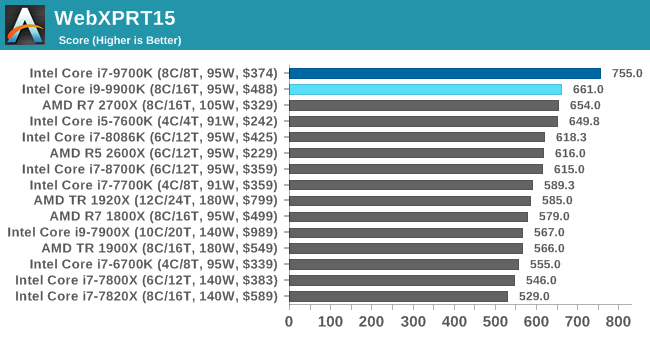

WebXPRT 2015: Testing HTML5 and Javascript Web UX

An older version of WebXPRT is the 2015 edition, which focuses on a slightly different set of web technologies and frameworks that are still in use today. It is still a relevant test, especially for users who work with web applications that are not the newest on the market, and there are many such users. Web framework development moves very fast and has high turnover. Frameworks are quickly developed, built into applications, used, and then the developers move straight on to the next one. Adapting an application to a new framework is a difficult task, especially with development cycles this fast. For this reason, many applications are “stuck in time” and remain relevant to users for many years.

As with WebXPRT 3, the main benchmark runs the test set seven times and produces a final score. We repeat this four times, take the average, and report it as the final result.

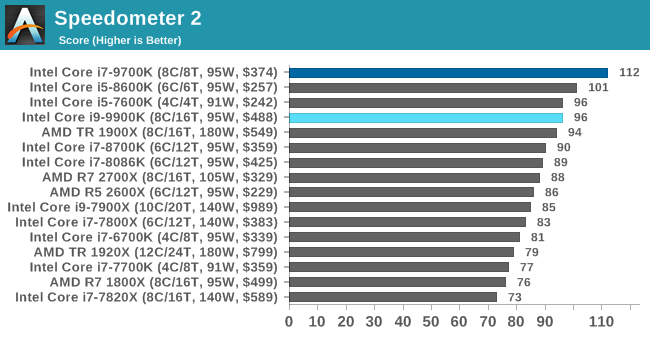

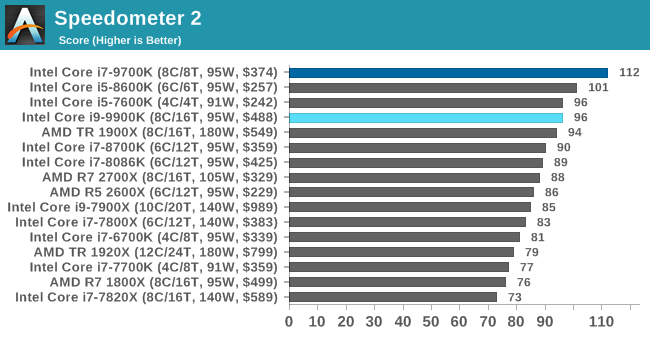

Speedometer 2: Javascript Frameworks

Our newest web test is Speedometer 2, which runs through a variety of JavaScript frameworks to do just three simple things: build a list, enable each item in the list, and remove the list. All the frameworks implement the same visual cues, but, obviously, they do so in different ways.

Our test passes the entire list of frameworks and gives the final score called “rpm”, one of the internal benchmark indicators. We display this figure as the final result.

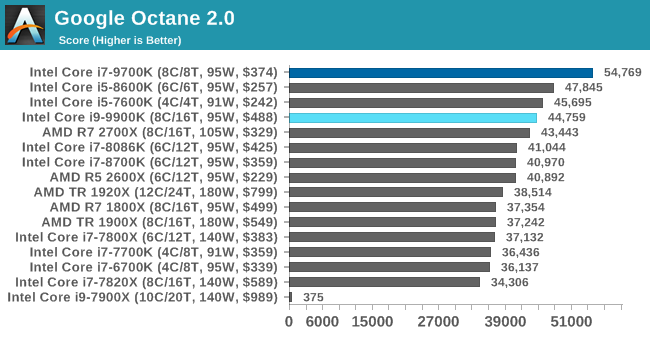

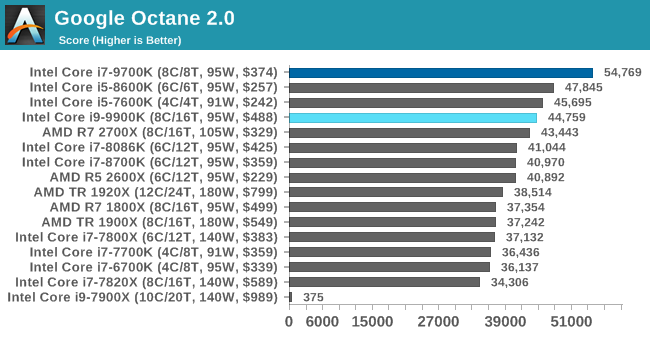

Google Octane 2.0: Core Web Compute

A popular web test for several years, but one that is no longer updated, is Google's Octane. Version 2.0 performs a couple of dozen compute-related tasks, such as regular expressions, cryptography, ray tracing, emulation, and Navier-Stokes physics calculations.

The test gives each subtest a score and returns the geometric mean as the final result. We run the full benchmark four times and average the final results.
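To show why a geometric mean is used for combining subtest scores, here is a minimal sketch. The function name and the subtest values are hypothetical and are not Octane's actual scores; only the formula (the n-th root of the product, computed here through logarithms) is standard.

```python
import math


def geometric_mean_score(subtest_scores):
    """n-th root of the product of the scores, computed via logarithms."""
    if not subtest_scores:
        raise ValueError("at least one score is required")
    if any(score <= 0 for score in subtest_scores):
        raise ValueError("scores must be positive")
    log_sum = sum(math.log(score) for score in subtest_scores)
    return math.exp(log_sum / len(subtest_scores))


# Hypothetical subtest scores: one outlier dominates an arithmetic mean
# far more than it dominates the geometric mean.
scores = [20000.0, 25000.0, 30000.0, 120000.0]
print(f"arithmetic: {sum(scores) / len(scores):,.0f}")
print(f"geometric:  {geometric_mean_score(scores):,.0f}")
```

With the geometric mean, doubling any single subtest score raises the final result by the same factor, regardless of how large that subtest's score is in absolute terms.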

Mozilla Kraken 1.1: Core Web Compute

Even older than Octane is Kraken, this time developed by Mozilla. It is an old test that performs relatively monotonous computational mechanics, such as audio processing or image filtering. Kraken seems to produce highly variable results depending on the browser version, since this test is heavily optimized.

The main benchmark passes through each of the subtests ten times, and returns the average completion time for each cycle in milliseconds. We run a full benchmark four times, and measure the average result.

3DPM v1: 3DPM v2.1 variant with naive code

The first “legacy” test in the suite is the first version of our 3DPM test. This is the ultimate naive version of the code, as if it had been written by a scientist with no knowledge of how computer hardware, compilers, or optimization work (which is how it was at the very beginning). The test represents a large body of scientific modeling in the wild, where getting an answer is more important than getting it quickly (a result in 4 days is acceptable if it is correct; spending a year learning to program in order to get the result in 5 minutes is not).

In this version, the only real optimization was in the compiler flags (-O2, -fp:fast), compiling in release mode, and enabling OpenMP in the main compute loops. The loops were not tuned for function size, and the most serious slowdown is false sharing in the cache. The code also has long dependency chains based on random number generation, which reduces performance on some compute micro-architectures.

x264 HD 3.0: outdated transcoding test

This transcoding test is very old; it was used by AnandTech in the days of the Pentium 4 and Athlon II processors. In it, a standardized 720p video is transcoded in two passes, and the benchmark reports the frames per second of each pass. The test is single-threaded, and on some architectures we run into the IPC (instructions-per-clock) limit.
