My experience using WebRTC in an iOS app

WebRTC (Web Real-Time Communication) is an open-source project for streaming data between browsers or other applications that support it, using peer-to-peer connections.

To follow this article fully, I recommend first getting familiar with the basic principles of the technology.

How I came to need WebRTC

Project objective:

Imagine that we need to connect two random users so that they can stream real-time video to each other.

What are the possible solutions to the problem?

Option 1:

Connect both users to a server that acts as both source and receiver of the video stream: each user sends video to the server and waits for it to deliver the interlocutor's video.

Cons:

- Expensive server hardware

- Poor scalability due to limited server resources

- Higher video latency because of the intermediary

Pros:

- Choosing a user and connecting to them is not a problem: the server does this for us

Option 2:

Connect to the other user directly, forming a tunnel for data transfer between the two parties, after first obtaining information about that user from the server.

Cons:

- Connecting two users takes a long time

- Creating a peer-to-peer connection is difficult

- Traversing NAT to transfer data directly is difficult

- Several servers are required to establish a connection

Pros:

- Less load on the server

- If the server goes down, an established video call keeps running

- Image quality depends directly on the quality of the users' network connection

- Ability to use end-to-end encryption

- Connection management is in the hands of users

The task also had to be done as cheaply as possible, without putting serious load on the server.

We decided to go with option 2.

After a diligent search on the Internet, I arrived at WebRTC.

Implementation

Then the second stage of the search began. I came across many paid libraries that promised to solve my problem and found a lot of material about WebRTC, but I could not find an implementation for iOS.

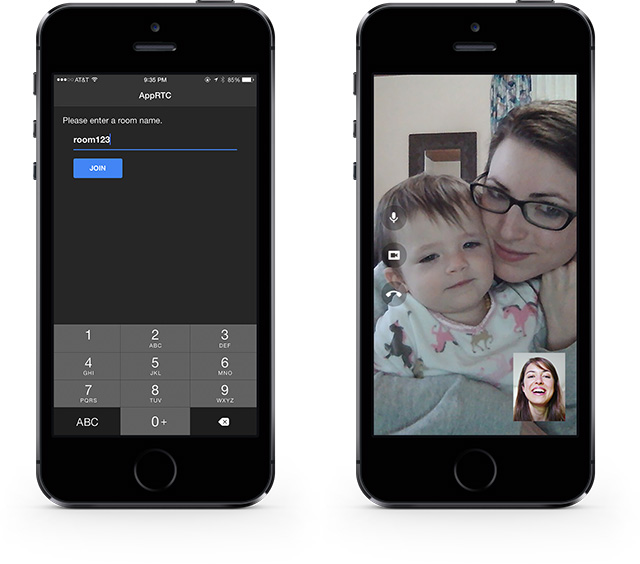

Then, by chance, I browsed the Git repository of Google's browser core and noticed a folder there called “WebRTC”, which contained several files and two folders: “Android” and “iOS”. Opening the one I liked better of the two, I found many library source files and an “Example” folder containing a project called “AppRTC”.

I immediately ran this project on my device and on a second iOS device I had at hand, and managed to connect them and chat with myself across two phones.

My application already had some functionality (registration, authorization), and the fastest way to add video chat seemed to be adapting the “AppRTC” example to it, so that is what I started doing.

At that point the project reached the stage where the server side of the application had to be implemented. We decided to look for an example server for “AppRTC”, and the server-side developer and I spent a lot of time debugging it and getting the server and the client to work together.

In the end, the service was divided into two parts:

- Authorization, registration, search filter for interlocutors, settings, purchases and so on;

- Video conference.
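
The first part is where two interlocutors get paired before any video is exchanged. Below is a minimal sketch of such a pairing step, written in Python for brevity. The `Matchmaker` class, the first-come pairing order, and the random room identifier are all assumptions made for illustration; they are not the code the service actually used.

```python
import secrets
from collections import deque
from typing import Deque, Optional, Tuple


class Matchmaker:
    """Pairs waiting users and gives both the same room identifier (hypothetical)."""

    def __init__(self) -> None:
        self._waiting: Deque[str] = deque()

    def join(self, user_id: str) -> Optional[Tuple[str, str, str]]:
        """Queue a user; return (room_id, user_a, user_b) once a pair forms."""
        if user_id in self._waiting:
            return None  # already queued, nothing to do
        if not self._waiting:
            self._waiting.append(user_id)  # nobody to pair with yet
            return None
        partner = self._waiting.popleft()
        # Hypothetical room name that both clients would use to find each other.
        room_id = secrets.token_hex(8)
        return room_id, partner, user_id

    def leave(self, user_id: str) -> None:
        """Remove a user who closed the app before being matched."""
        try:
            self._waiting.remove(user_id)
        except ValueError:
            pass  # the user was not waiting


matchmaker = Matchmaker()
matchmaker.join("alice")        # alice waits, returns None
pair = matchmaker.join("bob")   # returns (room_id, "alice", "bob")
```

The server only has to keep a short queue of identifiers in memory; the video itself never passes through it.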

Development continued, but once debugging started I found that the library was very unstable: constant disconnections, poor video quality, CPU usage on the device at 120%, a low frame rate, and more. A lot of time went into optimization without any real result: performance improved slightly but was still far from good. In addition, the VP8 codec consumed a lot of resources, and no other codecs were available at that time.

The idea came up to update the library completely. Even after I had reworked 80% of the code written before the update, it still did not work, and the example had not been updated to the current version. The server side also refused to work with the new version of the library.

The solution was to stop using our own server for WebRTC. The application's own server now only looked at the users connected to it and suggested that they connect; after that, the applications worked with the “AppRTC” server itself, and all packet exchange and all interaction with the STUN and TURN servers took place there. I also had to rewrite all of the application code for the current library version myself. Along the way I noticed that encryption of the data exchanged between users had been added and that the H264 codec had been integrated, which significantly reduced the load on the device and increased the frame rate.

I then fully refactored the video chat code, and the application became even faster and more usable. As a result of this code cleanup, the library was moved into a separate “Pod”.
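
Which codec two clients end up using is negotiated through the session description (SDP) they exchange, where the payload types on the `m=video` line are listed in order of preference. One common way to favor a codec is to move its payload types to the front of that line. The sketch below, in Python for brevity, illustrates the technique on a hand-written sample offer. The function name and the sample SDP are assumptions for illustration, and the library may select its codec differently.

```python
def prefer_video_codec(sdp: str, codec: str = "H264") -> str:
    """Move the payload types of `codec` to the front of the first m=video line."""
    lines = sdp.split("\r\n")
    video_index = None  # position of the first m=video line
    preferred = []      # payload types mapped to the requested codec
    in_video = False
    for i, line in enumerate(lines):
        if line.startswith("m="):
            # A new media section starts; only the first video section is tracked.
            in_video = line.startswith("m=video") and video_index is None
            if in_video:
                video_index = i
        elif in_video and line.startswith("a=rtpmap:"):
            # Format: a=rtpmap:<payload type> <encoding name>/<clock rate>
            payload, _, encoding = line[len("a=rtpmap:"):].partition(" ")
            if encoding.split("/")[0].upper() == codec.upper():
                preferred.append(payload)
    if video_index is None or not preferred:
        return sdp  # no video section or codec not offered: leave the SDP untouched
    # Format: m=video <port> <proto> <payload type> <payload type> ...
    parts = lines[video_index].split(" ")
    header, payloads = parts[:3], parts[3:]
    reordered = [p for p in preferred if p in payloads]
    reordered += [p for p in payloads if p not in preferred]
    lines[video_index] = " ".join(header + reordered)
    return "\r\n".join(lines)


# Hand-written sample offer, reduced to the lines that matter here.
SAMPLE_OFFER = "\r\n".join([
    "v=0",
    "m=audio 9 UDP/TLS/RTP/SAVPF 111",
    "a=rtpmap:111 opus/48000/2",
    "m=video 9 UDP/TLS/RTP/SAVPF 96 97 100",
    "a=rtpmap:96 VP8/90000",
    "a=rtpmap:97 rtx/90000",
    "a=rtpmap:100 H264/90000",
    "",
])

munged = prefer_video_codec(SAMPLE_OFFER)
```

In the sample, the video line lists VP8 (96) first; after the call, the H264 payload type (100) leads the list and the audio section is left unchanged.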

Conclusion

The problem was solved: we got an application that connects two random users who have downloaded and launched it. Video chat was implemented on modern technology at almost no material cost, and video quality depends directly on the bandwidth of the users' Internet connections.

Several optimizations for the AppRTC library itself were also developed and sent to its developers.