Development of a class for working with Markov chains

Today I would like to tell you about writing a class to simplify working with Markov chains.

I ask for cat.

Initial knowledge:

Representation of graphs in the form of an adjacency matrix, knowledge of basic concepts about graphs. Knowledge of C ++ for the practical part.

In simple terms, the Markov Chain is a weighted graph. There are events at its vertices, and the weight of the edge connecting the vertices A and B is the probability that event B will follow after event B. A

lot of articles have been written on the application of Markov chains, but we will continue to develop the class.

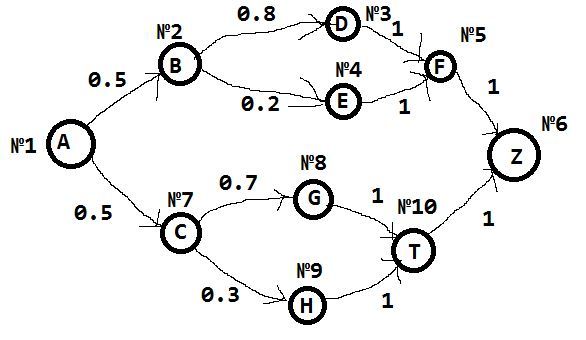

Let me give an example of a Markov chain:

In what follows, we will consider this scheme as an example.

Obviously, if there is only one outgoing edge from the vertex A, then its weight will be 1.

At the tops we have events (from A, B, C, D, E ...). On the edges, the probability that after the i-th event there will be an event j> i. For convenience and convenience, I numbered the peaks (No. 1, No. 2, etc.).

A matrix is an adjacency matrix of an oriented weighted graph, with which we represent a Markov chain. (more on this later). In this particular case, this matrix is also called the transition probability matrix or simply the transition matrix.

We will represent Markov chains with the help of a matrix, namely the adjacency matrix with which we represent the graphs.

Let me remind you:

More about adjacency matrices - in the course of discrete mathematics.

In our case, the matrix will have a size of 10x10, we will write it:

Take a closer look at our matrix. In each row, we have nonzero values in those columns whose number coincides with the subsequent event, and the nonzero value itself is the probability that this event will occur.

Thus, we have the probability values of the occurrence of an event with a number equal to the column number after an event with a number equal to a row .

Those who know the theory of probability could guess that each line is a probability distribution function.

1) initialize the initial position k with a zero vertex.

2) If the vertex is not finite, then we generate the number m from 0 ... n-1 based on the probability distribution in the row k of the matrix, where n is the number of vertices and m is the number of the next event (!). Otherwise, we exit

3) We equate the number of the current position k with the number of the generated vertex

4) At step 2

Note: the vertex is finite if the probability distribution is zero (see the 6th row in the matrix).

Pretty elegant algorithm, right?

In this article I want to separately make the implementation code for the described workaround. Initialization and filling in the Markov chain are of little interest (see the full code at the end).

This algorithm looks especially simple due to the features of the discrete_distribution container . In words, it’s quite difficult to describe how this container works, so let's take the 0th row of our matrix as an example:

0 50 0 0 0 0 50 0 0 0

As a result of the generator’s work, it will return either 1 or 6 with probabilities of 0.5 for each. That is, it returns the column number (which is equivalent to the vertex number in the chain) where to continue moving further.

An example program that uses the class:

Example output that the program generates:

We see that the algorithm works pretty well. We can specify anything as values.

It can be seen from the illustration of the Markov chain that ABDFZ is the most likely sequence of events. It is most often manifested. Event E is least likely to occur. The

configuration file used in the example:

Thus, we see that the algorithms on graphs are applicable to Markov chains and these algorithms look pretty nice. If someone knows a better implementation, then please unsubscribe in the comments.

Full code and readme on github: code

Thank you all for your attention.

I ask for cat.

Initial knowledge:

Representation of graphs in the form of an adjacency matrix, knowledge of basic concepts about graphs. Knowledge of C ++ for the practical part.

Theory

Markov chain - a sequence of random events with a finite or countable number of outcomes, characterized by the property that, speaking non-rigid, with a fixed present, the future is independent of the past. Named after A.A. Markov (Sr.).

In simple terms, the Markov Chain is a weighted graph. There are events at its vertices, and the weight of the edge connecting the vertices A and B is the probability that event B will follow after event B. A

lot of articles have been written on the application of Markov chains, but we will continue to develop the class.

Let me give an example of a Markov chain:

In what follows, we will consider this scheme as an example.

Obviously, if there is only one outgoing edge from the vertex A, then its weight will be 1.

Designations

At the tops we have events (from A, B, C, D, E ...). On the edges, the probability that after the i-th event there will be an event j> i. For convenience and convenience, I numbered the peaks (No. 1, No. 2, etc.).

A matrix is an adjacency matrix of an oriented weighted graph, with which we represent a Markov chain. (more on this later). In this particular case, this matrix is also called the transition probability matrix or simply the transition matrix.

Matrix representation of a Markov chain

We will represent Markov chains with the help of a matrix, namely the adjacency matrix with which we represent the graphs.

Let me remind you:

The adjacency matrix of a graph G with a finite number of vertices n (numbered from 1 to n) is a square matrix A of size n in which the value of aij is equal to the number of edges from the ith vertex of the graph to the jth vertex.

More about adjacency matrices - in the course of discrete mathematics.

In our case, the matrix will have a size of 10x10, we will write it:

0 50 0 0 0 0 50 0 0 0

0 0 80 20 0 0 0 0 0 0

0 0 0 0 100 0 0 0 0 0

0 0 0 0 100 0 0 0 0 0

0 0 0 0 0 100 0 0 0 0

0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 70 30 0

0 0 0 0 0 0 0 0 0 100

0 0 0 0 0 0 0 0 0 100

0 0 0 0 0 100 0 0 0 0

Idea

Take a closer look at our matrix. In each row, we have nonzero values in those columns whose number coincides with the subsequent event, and the nonzero value itself is the probability that this event will occur.

Thus, we have the probability values of the occurrence of an event with a number equal to the column number after an event with a number equal to a row .

Those who know the theory of probability could guess that each line is a probability distribution function.

Markov circuit traversal algorithm

1) initialize the initial position k with a zero vertex.

2) If the vertex is not finite, then we generate the number m from 0 ... n-1 based on the probability distribution in the row k of the matrix, where n is the number of vertices and m is the number of the next event (!). Otherwise, we exit

3) We equate the number of the current position k with the number of the generated vertex

4) At step 2

Note: the vertex is finite if the probability distribution is zero (see the 6th row in the matrix).

Pretty elegant algorithm, right?

Implementation

In this article I want to separately make the implementation code for the described workaround. Initialization and filling in the Markov chain are of little interest (see the full code at the end).

Implementation of the bypass algorithm

template Element *Markov::Next(int StartElement = -1)

{

if (Markov::Initiated) // если матрица смежности создана

{

if (StartElement == -1) // если стартовый элемент по умолчанию

StartElement = Markov::Current; // то продолжаем ( в конструкторе Current = 0 )

std::random_device rd;

std::mt19937 gen(rd());

std::discrete_distribution<> dicr_distr(Markov::AdjacencyMatrix.at(Current).begin(),

Markov::AdjacencyMatrix.at(Current).end()); // инициализируем контейнер для генерации числа на основе распределения вероятности

int next = dicr_distr(gen); // генерируем следующую вершину

if (next == Markov::size()) // тонкости работы генератора, если распределение вероятностей нулевое, то он возвращает количество элементов

return NULL;

Markov::Current = next; // меняем текущую вершину

return &(Markov::elems.at(next)); // возвращаем значение в вершине

}

return NULL;

}

This algorithm looks especially simple due to the features of the discrete_distribution container . In words, it’s quite difficult to describe how this container works, so let's take the 0th row of our matrix as an example:

0 50 0 0 0 0 50 0 0 0

As a result of the generator’s work, it will return either 1 or 6 with probabilities of 0.5 for each. That is, it returns the column number (which is equivalent to the vertex number in the chain) where to continue moving further.

An example program that uses the class:

Implementation of a program that does a bypass of the Markov chain from an example

#include

#include "Markov.h"

#include

#include

using namespace std;

int main()

{

Markov chain;

ofstream outs;

outs.open("out.txt");

ifstream ins;

ins.open("matrix.txt");

int num;

double Prob = 0;

(ins >> num).get(); // количество вершин

string str;

for (int i = 0; i < num; i++)

{

getline(ins, str);

chain.AddElement(str); // добавляем вершину

}

if (chain.InitAdjacency()) // инициализируем матрицу нулями

{

for (int i = 0; i < chain.size(); i++)

{

for (int j = 0; j < chain.size(); j++)

{

(ins >> Prob).get();

if (!chain.PushAdjacency(i, j, Prob)) // вводим матрицу

{

cerr << "Adjacency matrix write error" << endl;

}

}

}

outs << chain.At(0) << " "; // выводим 0-ю вершину

for (int i = 0; i < 20 * chain.size() - 1; i++) // генерируем 20 цепочек

{

string *str = chain.Next();

if (str != NULL) // если предыдущая не конечная

outs << (*str).c_str() << " "; // выводим значение вершины

else

{

outs << std::endl; // если конечная, то начинаем с начала

chain.Current = 0;

outs << chain.At(0) << " ";

}

}

chain.UninitAdjacency(); // понятно

}

else

cerr << "Can not initialize Adjacency matrix" << endl;;

ins.close();

outs.close();

cin.get();

return 0;

}

Example output that the program generates:

Output

A B D F Z

A C G T Z

A C G T Z

A B D F Z

A C H T Z

A B D F Z

A C G T Z

A C G T Z

A C G T Z

A B D F Z

A B D F Z

A C G T Z

A B D F Z

A B D F Z

A C H T Z

A C G T Z

A C H T Z

A C H T Z

A B E F Z

A C H T Z

A B E F Z

A C G T Z

A C G T Z

A C H T Z

A B D F Z

A B D F Z

A C H T Z

A B D F Z

A C G T Z

A B D F Z

A B D F Z

A B D F Z

A B D F Z

A C G T Z

A B D F Z

A C H T Z

A C H T Z

A B D F Z

A C G T Z

A B D F Z

We see that the algorithm works pretty well. We can specify anything as values.

It can be seen from the illustration of the Markov chain that ABDFZ is the most likely sequence of events. It is most often manifested. Event E is least likely to occur. The

configuration file used in the example:

file

10

A

B

D

E

F

Z

C

G

H

T

0 50.0 0 0 0 0 50 0 0 0

0 0 80.0 20.0 0 0 0 0 0 0

0 0 0 0 100.0 0 0 0 0 0

0 0 0 0 100.0 0 0 0 0 0

0 0 0 0 0 100.0 0 0 0 0

0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 70.0 30.0 0

0 0 0 0 0 0 0 0 0 100.0

0 0 0 0 0 0 0 0 0 100

0 0 0 0 0 100.0 0 0 0 0

Conclusion

Thus, we see that the algorithms on graphs are applicable to Markov chains and these algorithms look pretty nice. If someone knows a better implementation, then please unsubscribe in the comments.

Full code and readme on github: code

Thank you all for your attention.