Collecting and analyzing daemon logs in Badoo

Introduction

There are dozens of “self-written" demons in Badoo. Most of them are written in C, one left in C ++ and five or six in Go. They work on about a hundred servers in four data centers.

At Badoo, health checks and detection of daemon problems lie on the shoulders of the monitoring department. Colleagues using Zabbix and scripts check whether the service is running, whether it responds to requests, and also monitor versions. In addition, the department analyzes the statistics of demons and scripts working with them for anomalies, sudden jumps, etc.

However, until recently, we did not have a very important part - collecting and analyzing logs that each daemon writes locally to files on the server. Often, it is this information that helps at the earliest stage to catch a problem or post factum to understand the causes of failure.

We have built such a system and are in a hurry to share the details. Surely one of you will have a similar task, and reading this article will save you from mistakes that we managed to make.

Tool selection

From the very beginning, we dismissed the “cloud” systems, because at Badoo, it’s customary not to give your data out, if possible. After analyzing the popular tools, we came to the conclusion that, most likely, one of three systems is suitable for us:

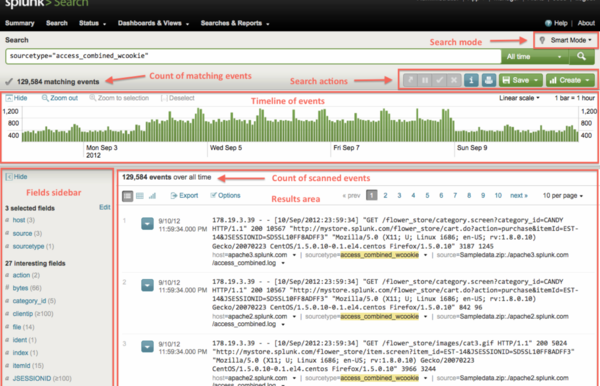

Splunk

First of all, we tried Splunk. Splunk is a turnkey system, a closed and paid solution, the cost of which directly depends on the traffic coming into the system. We already use it for data in the billing department. Colleagues are very satisfied.

We took advantage of their installation for tests and almost immediately faced the fact that our traffic exceeded the available and paid limits.

Another nuance was that during testing, some employees complained about the complexity and "unintuitiveness" of the user interface. Colleagues from billing during this time have already mastered communicating with Splunk and they had no problems, but nevertheless this fact is worth noting, because a nice interface will be of great importance if we want our system to be actively used.

On the technical side of Splunk, apparently, we were completely satisfied. But its cost, closeness and inconvenient interface made us look further.

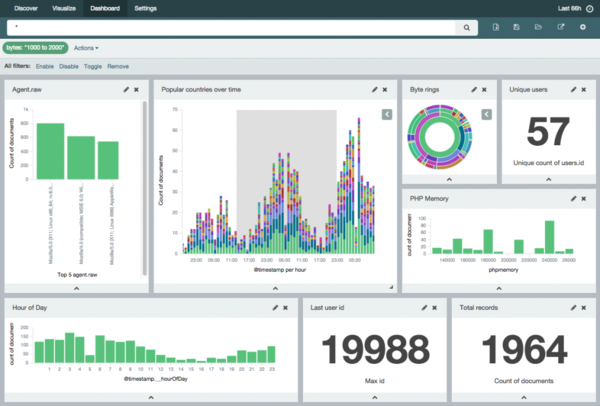

ELK: Elastic Search + Logstash + Kibana

Next on the list was ELK. ELK is probably the most popular system for collecting and analyzing logs today. And I want to say that this is not surprising, because It is free, simple, flexible and powerful.

ELK consists of three components:

- Elastic Search. Data storage and retrieval system based on the Lucene engine;

- Logstash. A “pipe” with a bunch of features through which data (possibly processed) gets into Elastic Search;

- Kibana. Web interface for searching and visualizing data from Elastic Search.

Getting started with ELK is very simple: just download three archives from the official site, unzip and run several binaries. This simplicity allowed us to test the system in a matter of days and understand how it suits us.

And overall she came up. Technically, we could implement everything we needed, if necessary, write our own solutions and build them into a common infrastructure.

Despite the fact that ELK completely suited us, there was a third challenger.

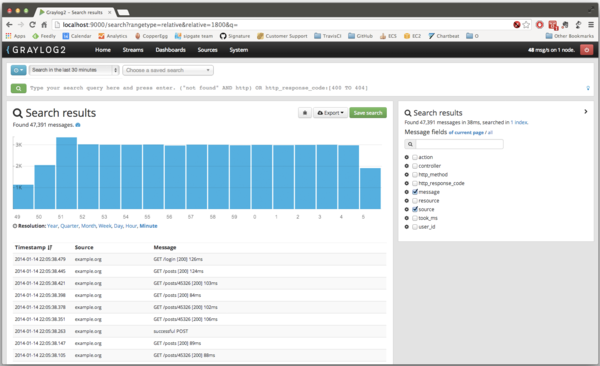

Graylog 2

In general, Graylog 2 is very similar to ELK: open source, easy to install, also uses Elastic Search and Logstash can be used. The main difference is that Graylog 2 is a ready-to-use system that is tailored specifically for collecting logs. By its readiness for the end user, it is very reminiscent of Splunk. There is also a convenient graphical interface with the ability to customize the parsing of lines directly in the browser, and access restriction, and notifications.

But we came to the conclusion that ELK will allow us to make a much more flexible system, customized to our needs; will expand, change components. As a constructor. I did not like one part - replaced with another. They didn’t want to pay for watcher - they made their own system. If in ELK all parts can be easily removed and replaced, in Graylog 2 there was a feeling that some parts would have to be torn up, and some simply could not be implemented.

It is decided. We will do on ELK.

Log delivery

At the very early stage, we made a mandatory requirement that the logs should both get into our collector and remain on disk. A system for collecting and analyzing logs is good, but any system gives some delay, can fail, and nothing can replace the capabilities that standard unix utilities like grep, AWK, sort, etc. give. The programmer should have the opportunity to go to the server and see with his own eyes what is happening there.

We could deliver logs to Logstash as follows:

- use existing utilities from the ELK suite (logstash-forwarder, and now beats). They are a separate daemon that monitors the file on disk and uploads it to Logstash;

- use your own development under the name LSD, which delivers PHP logs to us. In fact, this is also a separate daemon that monitors log files in the file system and uploads them somewhere. On the one hand, LSD took into account and resolved all the problems that can occur when uploading a huge number of logs from a huge number of servers, but the system is too “tuned” to PHP scripts. We would have to finish it;

- in parallel with writing to disk, write logs to the syslog standard for the UNIX world.

Despite the shortcomings of the latter, this approach was very simple, and we decided to try it.

Architecture

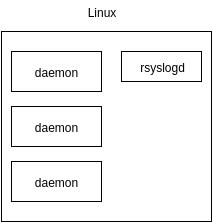

Servers and rsyslogd

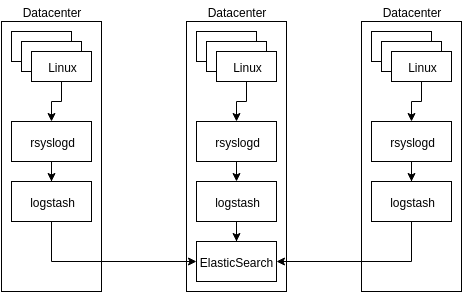

Together with system administrators, we sketched an architecture that seemed reasonable to us: we put one rsyslogd daemon on each server, one main rsyslogd daemon on the site, one Logstash on the site and one Elastic Search cluster closer to us, to Moscow, i.e. . to the Prague data center.

In the pictures, one of the servers looked something like this:

Because Badoo uses docker in some places, we planned to throw the / dev / log socket inside the container using the built-in tools.

The final chart was something like this:

The scheme invented above looked, for starters, quite resistant to data loss: each of the rsyslogd daemons, if it is not possible to send messages further, will save them to disk and send them when it “next” works.

The only data loss was possible if the very first rsyslog daemon did not work. But at that moment we did not pay too much attention to this problem. Still, the logs are not so important information that you should spend a lot of time from the very beginning.

Log line format and Logstash

Logstash is a pipe for data into which lines are sent. Inside, they are parsed and go to Elastic Search in the form of fields and tags ready for indexing.

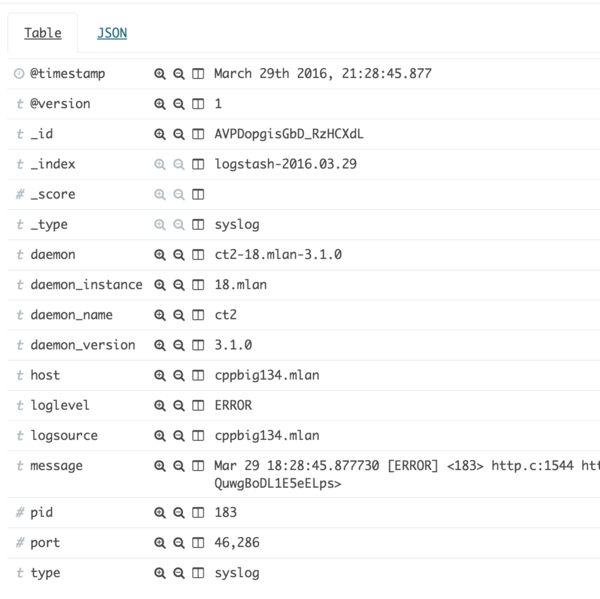

Almost all of our services are built using our own libangel library, which means that they have the same log format and looks like this:

Mar 04 04:00:14.609331 [NOTICE] <16367> storage_file.c:1212 storage___update_dump_data(): starting dump (threaded, update)

The format consists of the general part, which is unchanged, and the part that the programmer sets himself when he calls one of the functions for logging.

In the general part we see the date, time with microseconds, log level, label, PID, file name and line number in the source, function name. The most common things.

Syslog adds information from itself to this message: time, PID, server hostname and so-called ident. This is usually just the name of the program, but anything can be passed there.

We standardized this ident and pass the name, secondary name, and version of the daemon there. For example, meetmaker-ru.mlan-1.0.0 . Thus, we can distinguish logs from different demons, from different types of one daemon (for example, country, replica) and have information about the running version of the daemon.

The analysis of such a message is quite straightforward. I will not cite pieces from the configuration file in the article, but it all comes down to the gradual “biting” and parsing of parts of the string using regular regular expressions.

If some stage of parsing was unsuccessful, we add a special tag to the message, which later allows us to find such messages and monitor their number.

Mention about the analysis of time. We tried to take into account different options, and the default message time will be the time from the libangel message, i.e. essentially the time that this message was generated. If for some reason this time was not found, we will take the time from syslog, i.e. time when the message went to the first local syslog daemon. If for some reason this time is also unavailable, the time of the message will be the time of reception of this message in Logstash.

The resulting fields go to Elastic Search for indexing.

Elasticsearch

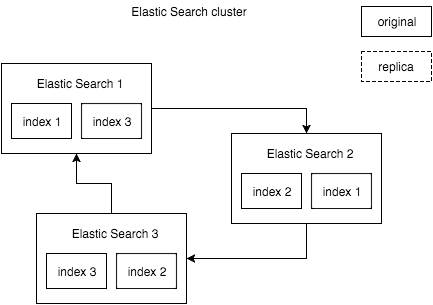

Elastic Search supports working in cluster mode, when several nodes are combined into one network and work together. Due to the fact that replication to another node can be configured for each index, the cluster remains operational in the event of failure of some nodes.

The minimum number of nodes in a failover cluster is three, the first odd number that is greater than one. This is due to the fact that for the operation of internal algorithms it is necessary that, when a cluster is divided into parts, it is possible to isolate the majority. An even number of nodes is not suitable for this.

We selected three servers for the Elastic Search cluster and configured it so that each index had one replica, as in the diagram.

In such an architecture, failure of any of the cluster nodes is not fatal and does not lead to inaccessibility of the cluster.

In addition to fault tolerance itself, with such a scheme it is convenient to update Elastic Search itself: stop one of the nodes, update it, start, update the other.

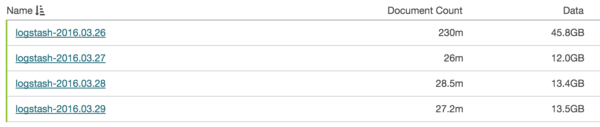

The fact that we store logs in Elastic Search allows us to easily divide all the data into indexes by day. Such a partition provides several advantages:

- in case the servers run out of disk space, it is very easy to delete old data. This is a quick operation, and moreover, there is a ready-made Curator tool to delete old data;

- during a search in the interval of more than one day, the search can be conducted in parallel. Moreover, it can be run in parallel both on one server, and on several.

As already mentioned, we configured Curator to automatically delete old indexes when there is not enough disk space.

In setting up Elastic Search, there are many subtleties related to both Java and simply the fact that Lucene is used internally. But all these subtleties are described both in official documentation, and in numerous articles, therefore I will not go deep. Let me just briefly mention that on the Elastic Search server you need to remember to allocate memory both for Java Heap and outside Heap (it will be used by Lucene), as well as prescribe “mappings” specifically for your fields in the indexes to speed up work and reduce space consumption on disk.

Kibana

There’s nothing to talk about at all :-) They put it and it works. Fortunately, in the latest version, developers added the ability to change the time zone in the settings. Previously, the user’s local time zone was taken by default, which is very inconvenient, because on our servers everywhere and always UTC, and we are used to communicating on it.

Notification system

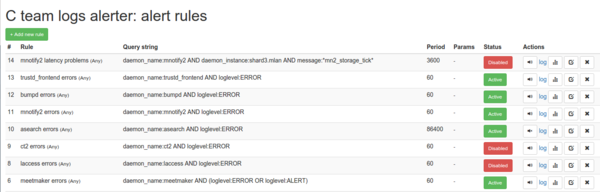

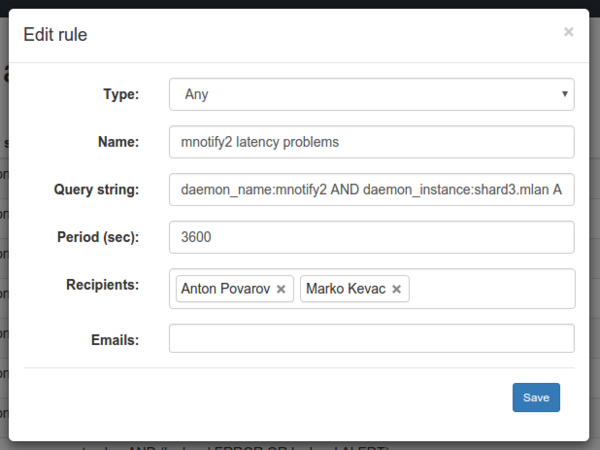

A very important part of the log collection system and one of the main requirements was the availability of a notification system. A system that, based on rules or filters, would send letters notifying of the rule being triggered with a link to a page where you can see the details.

There were two similar off-the-shelf products in the ELK world:

- Watcher from Elastic;

- Elastalert by Yelp .

Watcher is a closed Elastic product requiring an active subscription. Elastalert is an open source product written in Python. We cast off Watcher almost immediately for the same reason as before - the closeness and complexity of expanding and adapting to us. According to tests, Elastalert proved to be a cool product, but it also had several disadvantages (though not very critical):

- It is written in Python. We love Python as a language for writing fast “knee-high” scripts, but we don’t really want to see it as a final product at production;

- the possibilities of constructing letters that the system sends in response to an event are completely rudimentary. And the beauty and convenience of writing is very important if we want others to have a desire to use the system.

Having played with Elastalert and studied its source code, we decided to write the product in PHP using the platform department. As a result, Denis Karasik Battlecat for 2 weeks wrote a product “tailored” for us: it is integrated into backoffice and has only the necessary functionality.

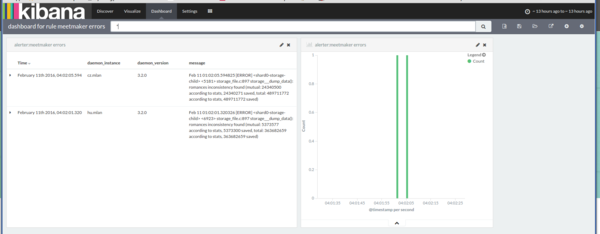

For each rule, the system automatically creates a basic dashboard in Kibana, the link to which will be in the letter. When you click on the link, you will see the messages and the schedule exactly for the period specified in the notification.

"Rake"

At this stage, the first release of the system was ready, it worked and could be used. But, as we promised, the "rake" was not long in coming.

Problem 1 (syslog + docker)

The standard way to communicate between a syslog daemon and a program is unix socket / dev / log. As mentioned above, we threw it into the container using standard docker tools . This bundle worked fine until we needed to reload the syslog daemon.

Apparently, if a specific file is transferred, not a directory, then when you delete or recreate the file on the host system, it will no longer be accessible inside the container. It turns out that any reboot of the syslog daemon leads to the termination of logs from docker containers.

If you forward the entire directory, then inside there can be a unix socket without problems, and rebooting the daemon will not break anything. But then it becomes more complicated to configure all this wealth, because libc expects the socket to be in / dev / log.

The second option that we considered is to use UDP or TCP to send logs out. But here is the same problem as in the previous case: libc can only write in / dev / log. We would have to write our syslog client, but at this stage we did not want to do this.

In the end, we decided to start one syslog daemon in each container and continue to write in / dev / log with the standard libc openlog () / syslog () functions.

This was not a big problem since our system administrators still use the init system in each container, rather than starting only one daemon.

Problem 2 (blocking syslog)

On the devel cluster, we noticed that one of the daemons hangs periodically. Turning on the daemon’s internal watchdog, we got several backtrace, which showed that the daemon hangs in syslog () -> write ().

==== WATCHDOG ====

tag: IPC_SNAPSHOT_SYNC_STATE

start: 3991952 sec 50629335 nsec

now: 3991953 sec 50661797 nsec

Backtrace:

/lib64/libc.so.6(__send+0x79)[0x7f3163516069]

/lib64/libc.so.6(__vsyslog_chk+0x3ba)[0x7f3163510b8a]

/lib64/libc.so.6(syslog+0x8f)[0x7f3163510d8f]

/local/meetmaker/bin/meetmaker-3.1.0_2782 | shard1: running(zlog1+0x225)[0x519bc5]

/local/meetmaker/bin/meetmaker-3.1.0_2782 | shard1: running[0x47bf7f]

/local/meetmaker/bin/meetmaker-3.1.0_2782 | shard1: running(storage_save_sync_done+0x68)[0x47dce8]

/local/meetmaker/bin/meetmaker-3.1.0_2782 | shard1: running(ipc_game_loop+0x7f9)[0x4ee159]

/local/meetmaker/bin/meetmaker-3.1.0_2782 | shard1: running(game+0x25b)[0x4efeab]

/local/meetmaker/bin/meetmaker-3.1.0_2782 | shard1: running(service_late_init+0x193)[0x48f8f3]

/local/meetmaker/bin/meetmaker-3.1.0_2782 | shard1: running(main+0x40a)[0x4743ea]

/lib64/libc.so.6(__libc_start_main+0xf5)[0x7f3163451b05]

/local/meetmaker/bin/meetmaker-3.1.0_2782 | shard1: running[0x4751e1]

==== WATCHDOG ====

Having quickly downloaded the libc sources and looked at the implementation of the syslog client, we realized that the syslog () function is synchronous and any delays on the rsyslog side will affect the daemons.

Something had to be done with this, and the sooner the better. But we didn’t have time ...

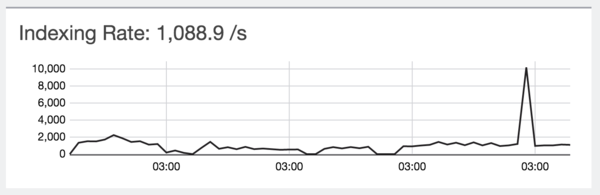

After a couple of days, we stepped on the most unpleasant rake of modern architecture - a cascading failure.

Rsyslog is configured by default so that if the internal queue is filled for some reason, it starts to “throttle” (eng. Throttle), i.e. slow down the "record in yourself" of new messages.

It turned out that, due to a programmer’s oversight, one of the test servers started sending a huge amount of messages to the log. Logstash could not cope with such a stream, the main rsyslog queue was full and he read messages from other rsyslog very slowly. Because of this, the queues of other rsyslogs also overflowed and they very slowly read messages from daemons.

And demons, as I said above, write to / dev / log synchronously and without any timeout.

The result is predictable: because of one flooding test daemon, all daemons that write to syslog with at least some significant frequency started to slow down.

Another mistake was that we did not tell the system administrators about the potential problem, and it took more than an hour to find out the reason and disable rsyslog.

Not we alone stepped on this rake, it turns out. And not only with rsyslog . Synchronous calls in the daemon's event loop are an inadmissible luxury.

Before us were several options:

- get away from syslog. Returning to one of the other options, which suggest that the daemon is writing to disk, and already some other daemon is absolutely independently reading from the disk;

- continue to write to syslog synchronously, but in a separate thread;

- write your syslog client and send data to syslog via UDP.

The most correct option, perhaps, is the first. But we did not want to waste time on it and quickly made a third one, i.e. started writing to syslog over UDP.

As for Logstash, two launch parameters solved all problems: increasing the number of handlers and the number of simultaneously processed lines ( -w 24 -b 1250 ).

Future plans

In the near future, make a dashboard for our demons. Such a dashboard that combines existing and some new features:

- viewing the daemon’s health (“traffic light”), its basic statistics;

- graphs of the number of lines ERROR and WARNING in the logs, their viewing;

- triggered alert system rules;

- SLA monitoring (latency response monitoring) with the display of problematic services or requests;

- selection of various stages from the demon logs. For example, a mark on what stage of loading it is at, loading time, duration of some periodic processes, etc.

The presence of such a dashboard, in my opinion, will appeal to managers, and programmers, and administrators, and monitors.

Conclusion

We built a simple system that collects the logs of all our demons, allows us to conveniently search for them, build graphs and visualizations, and notifies us of problems by mail.

The success of the system is evidenced by the fact that during its existence we quickly found those problems that we would not have discovered at all or found after a long time, and also that other teams are starting to use the infrastructure.

If we talk about the load, then at the moment during the day comes from 600 to 2000 lines with logs per second, with periodic bursts of up to 10 thousand lines. The system digests this load without any problems.

The size of the daily index varies from a dozen to hundreds of gigabytes.

Someone may say that there are drawbacks in this system and that some “rakes” could be circumvented by doing something different. It's true. But we are not programming for the sake of programming. Our goal was achieved in a reasonably minimal amount of time and the system is so flexible that the parts that do not align us in the future can be quite easily improved or changed.

Marco Kevats, programmer in the C / C ++ development department