Web file manager Sprut.IO in OpenSource

In Beget, we have been successfully engaged in virtual hosting for a long time, we use many OpenSource solutions, and now it is time to share our development with the community: the Sprut.IO file manager , which we developed for our users and which is used in our control panel. We invite everyone to join its development. We will tell about how it was developed and why we were not satisfied with the existing analogues, which crutches of technology we used and to whom it may be useful, in this article.

Project site: https://sprut.io

Demo is available at: https://demo.sprut.io:9443

Source code: https://github.com/LTD-Beget/sprutio

In 2010, we used NetFTP, which quite tolerably solved the problems of opening / loading / correcting several files.

However, users sometimes wanted to learn how to transfer sites between hosts or between our accounts, but the site was large, and the Internet was not the best for users. In the end, either we did it ourselves (which was clearly faster), or explained what SSH, MC, SCP, and other terrible things are.

Then we got the idea to make WEB a two-panel file manager that works on the server side and can copy between different sources at the server speed, and also in which there will be: search by files and directories, analysis of occupied space (analogue of ncdu), simple file uploads, well and a lot of interesting things. In general, everything that would make life easier for our users and us.

In May 2013 we posted it in production on our hosting. At some points, it turned out even better than we originally wanted - to download files and access the local file system, we wrote a Java applet that allows you to select files and copy everything at once to the hosting or vice versa from the hosting (where copying is not so important, he knew how to work with remote FTP and with the user's home directory, but, unfortunately, browsers will not support it soon).

Having read about the analogue on Habré , we decided to put our product in OpenSource, which turned out, it seems to us,to work great and can be useful. It took another nine months to separate it from our infrastructure and bring it to an appropriate appearance. Before the new year 2016, we released Sprut.IO.

We made for ourselves and used the most, in our opinion, new, stylish, youth tools and technologies. Often used what was already done for something.

There is some difference in the implementation of Sprut.IO and the version for our hosting, due to the interaction with our panel. For ourselves, we use: full-fledged queues, MySQL, an additional authorization server, which is also responsible for choosing the final server on which the client is located, transport between our servers on the internal network, and so on.

Sprut.IO consists of several logical components:

1) web-muzzle,

2) nginx + tornado, accepting all calls from the web,

3) end agents that can be hosted on one or on many servers.

In fact, by adding a separate layer with authorization and server selection, you can make a multi-server file manager (as in our implementation). All elements can logically be divided into two parts: Frontend (ExtJS, nginx, tornado) and Backend (MessagePack Server, Sqlite, Redis).

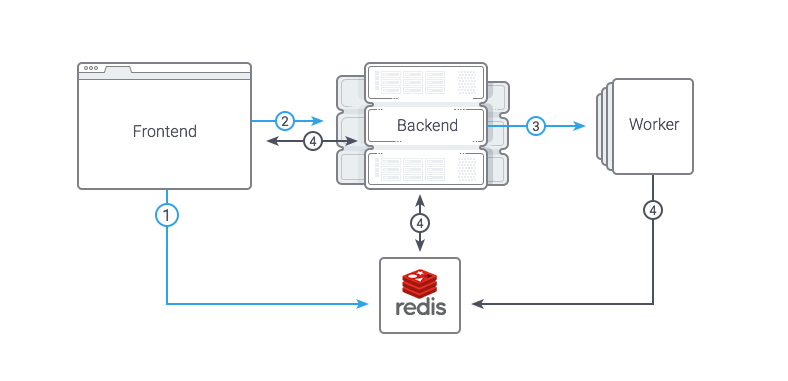

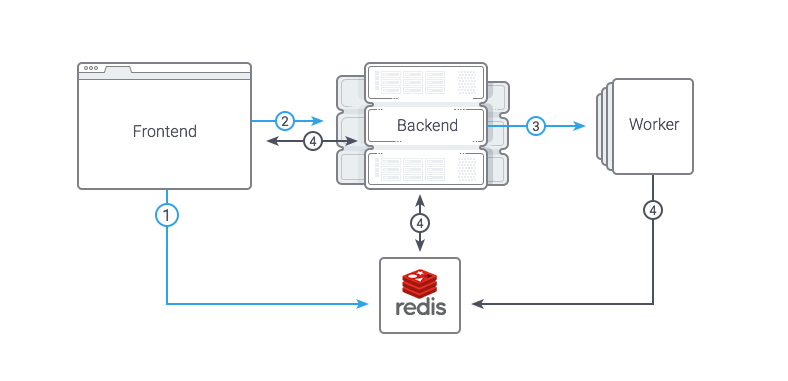

The interaction scheme is presented below:

Web interface - everything is quite simple, ExtJS and a lot of code. The code was written in CoffeeScript. In the first versions, they used LocalStorage for caching, but in the end they refused, because the number of bugs exceeded the benefit. Nginx is used to render statics, JS code, and files through X-Accel-Redirect (detailed below). He simply proxies the rest to Tornado, which, in turn, is a kind of router, redirecting requests to the desired Backend. Tornado scales well and, hopefully, we cut out all the locks that we ourselves made.

Backend consists of several daemons, which, as usual, can accept requests from Frontend. Daemons are located on each target server and work with the local file system, upload files via FTP, perform authentication and authorization, work with SQLite (editor settings, access to the user's FTP servers).

There are two types of requests sent to Backend: synchronous, which are executed relatively quickly (for example, listing files, reading a file), and requests for performing any long tasks (uploading a file to a remote server, deleting files / directories, etc.).

Synchronous requests are a regular RPC. As a way to serialize data, msgpack is used, which has proven itself in terms of speed of data serialization / deserialization and support among other languages. We also considered the python-specific rfoo and the Google protobuf, but the first one did not fit because of the binding to python (and its versions), and protobuf, with its code generators, seemed redundant to us, because the number of remote procedures is not measured in tens and hundreds, and there was no need to transfer the API to separate proto-files.

We decided to implement requests for performing long operations as simple as possible: between Frontend and Backend there is a common Redis, which stores the executed task, its status and any other data. To start a task, a regular synchronous RPC request is used. Flow turns out like this:

We took the path of least resistance and, instead of manually installing it, prepared Docker images. The installation is essentially performed by several commands:

run.sh will check for images, if they aren’t downloaded, and launch 5 containers with system components. To update images, you must run

Stopping and deleting images, respectively, are performed via the stop and rm parameters. The assembly dockerfile is in the project code, the assembly takes 10-20 minutes.

How to raise the development environment in the near future we will write on the site and in the wiki on github .

There are many obvious opportunities for further improvement of the file manager.

As the most useful for users, we see:

If you have add-ons that may be useful to users, tell us about them in the comments or on the sprutio-ru@groups.google.com mailing list .

We will begin to implement them, but I will not be afraid to say this: it will take years,if not decades, on our own . Therefore, if you want to learn how to program, you know Python and ExtJS and want to get development experience in an open project, we invite you to join the development of Sprut.IO. Moreover, for each realized feature, we will pay a fee, since we do not have to implement it ourselves.

The list of TODOs and the status of the tasks can be seen on the project website in the TODO section .

Thanks for attention! If it will be interesting, then with pleasure we will write even more details about the organization of the project and answer your questions in the comments.

Project site: https://sprut.io

Demo is available at: https://demo.sprut.io:9443

Source code: https://github.com/LTD-Beget/sprutio

Russian mailing list: sprutio-ru @ groups.google.com

English mailing list: sprutio@groups.google.com

Project site: https://sprut.io

Demo is available at: https://demo.sprut.io:9443

Source code: https://github.com/LTD-Beget/sprutio

Why reinvent your file manager

In 2010, we used NetFTP, which quite tolerably solved the problems of opening / loading / correcting several files.

However, users sometimes wanted to learn how to transfer sites between hosts or between our accounts, but the site was large, and the Internet was not the best for users. In the end, either we did it ourselves (which was clearly faster), or explained what SSH, MC, SCP, and other terrible things are.

Then we got the idea to make WEB a two-panel file manager that works on the server side and can copy between different sources at the server speed, and also in which there will be: search by files and directories, analysis of occupied space (analogue of ncdu), simple file uploads, well and a lot of interesting things. In general, everything that would make life easier for our users and us.

In May 2013 we posted it in production on our hosting. At some points, it turned out even better than we originally wanted - to download files and access the local file system, we wrote a Java applet that allows you to select files and copy everything at once to the hosting or vice versa from the hosting (where copying is not so important, he knew how to work with remote FTP and with the user's home directory, but, unfortunately, browsers will not support it soon).

Having read about the analogue on Habré , we decided to put our product in OpenSource, which turned out, it seems to us,

How does he work

We made for ourselves and used the most, in our opinion, new, stylish, youth tools and technologies. Often used what was already done for something.

There is some difference in the implementation of Sprut.IO and the version for our hosting, due to the interaction with our panel. For ourselves, we use: full-fledged queues, MySQL, an additional authorization server, which is also responsible for choosing the final server on which the client is located, transport between our servers on the internal network, and so on.

Sprut.IO consists of several logical components:

1) web-muzzle,

2) nginx + tornado, accepting all calls from the web,

3) end agents that can be hosted on one or on many servers.

In fact, by adding a separate layer with authorization and server selection, you can make a multi-server file manager (as in our implementation). All elements can logically be divided into two parts: Frontend (ExtJS, nginx, tornado) and Backend (MessagePack Server, Sqlite, Redis).

The interaction scheme is presented below:

Frontend

Web interface - everything is quite simple, ExtJS and a lot of code. The code was written in CoffeeScript. In the first versions, they used LocalStorage for caching, but in the end they refused, because the number of bugs exceeded the benefit. Nginx is used to render statics, JS code, and files through X-Accel-Redirect (detailed below). He simply proxies the rest to Tornado, which, in turn, is a kind of router, redirecting requests to the desired Backend. Tornado scales well and, hopefully, we cut out all the locks that we ourselves made.

Backend

Backend consists of several daemons, which, as usual, can accept requests from Frontend. Daemons are located on each target server and work with the local file system, upload files via FTP, perform authentication and authorization, work with SQLite (editor settings, access to the user's FTP servers).

There are two types of requests sent to Backend: synchronous, which are executed relatively quickly (for example, listing files, reading a file), and requests for performing any long tasks (uploading a file to a remote server, deleting files / directories, etc.).

Synchronous requests are a regular RPC. As a way to serialize data, msgpack is used, which has proven itself in terms of speed of data serialization / deserialization and support among other languages. We also considered the python-specific rfoo and the Google protobuf, but the first one did not fit because of the binding to python (and its versions), and protobuf, with its code generators, seemed redundant to us, because the number of remote procedures is not measured in tens and hundreds, and there was no need to transfer the API to separate proto-files.

We decided to implement requests for performing long operations as simple as possible: between Frontend and Backend there is a common Redis, which stores the executed task, its status and any other data. To start a task, a regular synchronous RPC request is used. Flow turns out like this:

- Frontend puts a wait task in a radish

- Frontend makes a synchronous request in the backend, passing the task id there

- Backend accepts the task, sets the status to "running", makes the fork and executes the task in the child process, immediately returning the answer to the backend

- Frontend looks at the status of a task or tracks changes in any data (for example, the number of copied files, which is periodically updated from Backend).

Interesting cases worth mentioning

Download files from Frontend

Task:

Upload the file to the destination server, while Frontend does not have access to the file system of the destination server.

Solution:

msgpack-server was not suitable for transferring files, the main reason was that the package could not be transferred by byte, but only in its entirety (it must first be fully loaded into memory and only then serialized and transferred, with a large file size there will be OOM ), in the end, it was decided to use a separate daemon for this.

The operation process turned out as follows:

We get the file from nginx, write it to the socket of our daemon with the header, where the temporary location of the file is indicated. And after the file is completely transferred, we send a request to RPC to move the file to the final location (already to the user). To work with the socket, we use the packagepysendfile , the server itself, based on the standard python library asyncoreEncoding Definition

Task:

Open the file for editing with the definition of the encoding, write taking into account the original encoding.

Problems:

If the user did not correctly recognize the encoding, then when making changes to the file with subsequent recording, we can get a UnicodeDecodeError and the changes will not be recorded.

All the “crutches” that were eventually made are the result of work on tickets with files received from users, all the “problem” files we also use for testing after making changes to the code.

Solution:

After exploring the Internet in search of this solution, we found the chardet library . This library, in turn, is a port of the uchardet libraryfrom Mozilla. For example, it is used in the well-known editor https://notepad-plus-plus.org

Having tested it with real examples, we realized that in reality it could be wrong. Instead of CP-1251, for example, “MacCyrillic” or “ISO-8859-7” may be issued, and instead of UTF-8, there may be “ISO-8859-2” or a special case of “ascii”.

In addition, some of the files on the hosting were utf-8, but they contained strange characters, either from editors who could not work correctly with UTF, or from where, especially for such cases, crutches had to be added.An example of encoding recognition and reading files, with comments# Для определения кодировки используем порт uchardet от Mozilla - python chardet # https://github.com/chardet/chardet # # Используем dev версию, там все самое свежее. # Данный код постоянно улучшается благодаря обратной связи с пользователями # чем больше - тем точнее определяется кодировка, но медленнее. 50000 - выбрано опытным путем self.charset_detect_buffer = 50000 # Берем часть файла part_content = content[0:self.charset_detect_buffer] + content[-self.charset_detect_buffer:] chardet_result = chardet.detect(part_content) detected = chardet_result["encoding"] confidence = chardet_result["confidence"] # костыль для тех, кто использует кривые редакторы в windows # из-за этого в файлах utf-8 имеем cp-1251 из-за чего библиотека ведет себя непредсказуемо при детектировании re_utf8 = re.compile('.*charset\s*=\s*utf\-8.*', re.UNICODE | re.IGNORECASE | re.MULTILINE) html_ext = ['htm', 'html', 'phtml', 'php', 'inc', 'tpl', 'xml'] # Все вероятности выбраны опытным путем, на основе набора файлов для тестирования if confidence > 0.75 and detected != 'windows-1251' and detected != FM.DEFAULT_ENCODING: if detected == "ISO-8859-7": detected = "windows-1251" if detected == "ISO-8859-2": detected = "utf-8" if detected == "ascii": detected = "utf-8" if detected == "MacCyrillic": detected = "windows-1251" # если все же ошиблись - костыль на указанный в файле charset if detected != FM.DEFAULT_ENCODING and file_ext in html_ext: result_of_search = re_utf8.search(part_content) self.logger.debug(result_of_search) if result_of_search is not None: self.logger.debug("matched utf-8 charset") detected = FM.DEFAULT_ENCODING else: self.logger.debug("not matched utf-8 charset") elif confidence > 0.60 and detected != 'windows-1251' and detected != FM.DEFAULT_ENCODING: # Тут отдельная логика # Код убран для краткости из примера elif detected == 'windows-1251' or detected == FM.DEFAULT_ENCODING: pass # Если определилось не очень верно, то тогда, скорее всего, это ошибка и берем UTF-8 )) else: detected = FM.DEFAULT_ENCODING encoding = detected if (detected or "").lower() in FM.encodings else FM.DEFAULT_ENCODING answer = { "item": self._make_file_info(abs_path), "content": content, "encoding": encoding }Parallel search for text in files based on file encoding

Objective:

To organize a search for text in files with the possibility of using “shell-style wildcards” in the name, that is, for example, 'pupkin@*.com' '$ * = 42;' etc.

Problems: The

user enters the word “Contacts” - the search shows that there are no files with the given text, but in reality they are, but on the hosting we encounter many encodings even within the framework of one project. Therefore, the search should also take this into account.

Several times we encountered the fact that users could mistakenly enter any lines and perform several search operations on a large number of folders, in the future this led to an increase in the load on the servers.

Solution:

Multitasking was organized quite standardly using the multiprocessing moduleand two queues (a list of all files, a list of found files with the required entries). One worker builds a list of files, and the rest, working in parallel, parse it and perform a direct search.

The desired string can be represented as a regular expression using the fnmatch package . Link to the final search implementation.

To solve the problem with encodings, an example of code with comments is given, the familiar chardet package is used there .Worker implementation example# Приведен пример воркера self.re_text = re.compile('.*' + fnmatch.translate(self.text)[:-7] + '.*', re.UNICODE | re.IGNORECASE) # remove \Z(?ms) from end of result expression def worker(re_text, file_queue, result_queue, logger, timeout): while int(time.time()) < timeout: if file_queue.empty(): continue f_path = file_queue.get() try: if is_binary(f_path): continue mime = mimetypes.guess_type(f_path)[0] # исключаем некоторые mime типы из поиска if mime in ['application/pdf', 'application/rar']: continue with open(f_path, 'rb') as fp: for line in fp: try: # преобразуем в unicode line = as_unicode(line) except UnicodeDecodeError: # видимо, файл не unicode, определим кодировку charset = chardet.detect(line) # бывает не всегда правильно if charset.get('encoding') in ['MacCyrillic']: detected = 'windows-1251' else: detected = charset.get('encoding') if detected is None: # не получилось ( break try: # будем искать в нужной кодировке line = str(line, detected, "replace") except LookupError: pass if re_text.match(line) is not None: result_queue.put(f_path) logger.debug("matched file = %s " % f_path) break except UnicodeDecodeError as unicode_e: logger.error( "UnicodeDecodeError %s, %s" % (str(unicode_e), traceback.format_exc())) except IOError as io_e: logger.error("IOError %s, %s" % (str(io_e), traceback.format_exc()))

In the final implementation, the ability to set the execution time in seconds (timeout) is added - the default is 1 hour. In the processes of the workers themselves, the execution priority is reduced to reduce the load on the disk and on the processor.Unpacking and creating file archives

Task:

To give users the opportunity to create archives (zip, tar.gz, bz2, tar are available) and unpack them (gz, tar.gz, tar, rar, zip, 7z)

Problems:

We encountered many problems with “real” archives, this both cp866 (DOS) encoded file names and backslashes in file names (windows). Some libraries (standard ZipFile python3, python-libarchive) did not work with Russian names inside the archive. Some library implementations, in particular SevenZip, RarFile, are not able to unpack empty folders and files (they are constantly found in archives with CMS). Also, users always want to see the process of performing the operation, but how can this be done if the library does not allow it (for example, the call to extractall () is just made)?

Decision:

Libraries ZipFile, as well as libarchive-python had to be fixed and connected as separate packages to the project. For libarchive-python, I had to fork the library and adapt it to python 3.

Creating files and folders with zero size (a bug was noticed in the SevenZip and RarFile libraries) had to be done in a separate cycle at the very beginning of the file headers in the archive. For all bugs, the developers were unsubscribed, as we find the time, we will send a pull request to them, apparently, they are not going to fix it themselves.

Separately, gzip processing of compressed files was done (for sql dumps, etc.), there were no crutches using the standard library.

Operation progress is monitored using an IN_CREATE system call overnight using the pyinotify library. It doesn’t work, of course, very accurately (it doesn’t always work when the files are heavily nested, so a magic coefficient of 1.5 is added), but it performs the task of displaying at least something similar for users. A good solution, given that there is no way to track this without rewriting all the libraries for archives.

Code for unpacking and creating archives.Sample code with comments# Пример работы скрипта для распаковки ахивов # Мы не ожидали, что везде придется вносить костыли, работа с русскими буквами, архивы windows и т.д # Сами библиотеки также нуждались в доработке, в том числе и стандартная ZipFile из python 3 from lib.FileManager.ZipFile import ZipFile, is_zipfile from lib.FileManager.LibArchiveEntry import Entry if is_zipfile(abs_archive_path): self.logger.info("Archive ZIP type, using zipfile (beget)") a = ZipFile(abs_archive_path) elif rarfile.is_rarfile(abs_archive_path): self.logger.info("Archive RAR type, using rarfile") a = rarfile.RarFile(abs_archive_path) else: self.logger.info("Archive 7Zip type, using py7zlib") a = SevenZFile(abs_archive_path) # Бибилиотека не распаковывает файлы, если они пусты (не создает пустые файлы и папки) for fileinfo in a.archive.header.files.files: if not fileinfo['emptystream']: continue name = fileinfo['filename'] # Костыли для windows - архивов try: unicode_name = name.encode('UTF-8').decode('UTF-8') except UnicodeDecodeError: unicode_name = name.encode('cp866').decode('UTF-8') unicode_name = unicode_name.replace('\\', '/') # For windows name in rar etc. file_name = os.path.join(abs_extract_path, unicode_name) dir_name = os.path.dirname(file_name) if not os.path.exists(dir_name): os.makedirs(dir_name) if os.path.exists(dir_name) and not os.path.isdir(dir_name): os.remove(dir_name) os.makedirs(dir_name) if os.path.isdir(file_name): continue f = open(file_name, 'w') f.close() infolist = a.infolist() # Далее алгоритм отличается по скорости. В зависимости от того есть ли # not-ascii имена файлов - выполним по файлам, а если нет вызовем extractall() # Классическая проверка not_ascii = False try: abs_extract_path.encode('utf-8').decode('ascii') for name in a.namelist(): name.encode('utf-8').decode('ascii') except UnicodeDecodeError: not_ascii = True except UnicodeEncodeError: not_ascii = True # ========== # Алгоритм по распаковке скрыт для краткости - там ничего интересного # ========== t = threading.Thread(target=self.progress, args=(infolist, self.extracted_files, abs_extract_path)) t.daemon = True t.start() # Прогресс операции отслеживается с помошью вотчера на системный вызов IN_CREATE # Неплохое решение, учитывая, что нет возможности отследить это, не переписывая все библиотеки для архивов def progress(self, infolist, progress, extract_path): self.logger.debug("extract thread progress() start") next_tick = time.time() + REQUEST_DELAY # print pprint.pformat("Clock = %s , tick = %s" % (str(time.time()), str(next_tick))) progress["count"] = 0 class Identity(pyinotify.ProcessEvent): def process_default(self, event): progress["count"] += 1 # print("Has event %s progress %s" % (repr(event), pprint.pformat(progress))) wm1 = pyinotify.WatchManager() wm1.add_watch(extract_path, pyinotify.IN_CREATE, rec=True, auto_add=True) s1 = pyinotify.Stats() # Stats is a subclass of ProcessEvent notifier1 = pyinotify.ThreadedNotifier(wm1, default_proc_fun=Identity(s1)) notifier1.start() total = float(len(infolist)) while not progress["done"]: if time.time() > next_tick: count = float(progress["count"]) * 1.5 if count <= total: op_progress = { 'percent': round(count / total, 2), 'text': str(int(round(count / total, 2) * 100)) + '%' } else: op_progress = { 'percent': round(99, 2), 'text': '99%' } self.on_running(self.status_id, progress=op_progress, pid=self.pid, pname=self.name) next_tick = time.time() + REQUEST_DELAY time.sleep(REQUEST_DELAY) # иначе пользователям кажется, что распаковалось не полностью op_progress = { 'percent': round(99, 2), 'text': '99%' } self.on_running(self.status_id, progress=op_progress, pid=self.pid, pname=self.name) time.sleep(REQUEST_DELAY) notifier1.stop()Increased Security Requirements

Task:

Do not give the user the opportunity to access the destination server.

Problems:

Everyone knows that hundreds of sites and users can simultaneously be on the hosting server. In the first versions of our product, workers could perform some operations with root privileges, in some cases it was theoretically (probably) possible to access other people's files, folders, read unnecessary or break something.

Unfortunately, we cannot give specific examples, there were bugs, but they did not affect the server as a whole, and they were more of our errors than a security hole. In any case, within the framework of the hosting infrastructure there are means of reducing the load and monitoring, and in the version for OpenSource we decided to seriously improve security.

Decision:

All operations were submitted to the so-called workers (createFile, extractArchive, findText), etc. Each worker, before starting to work, performs PAM authentication, as well as the setuid of the user.

At the same time, all the workers work each in a separate process and differ only in wrappers (we are waiting or not waiting for an answer). Therefore, even if the algorithm for performing a particular operation may contain a vulnerability, there will be isolation at the level of system rights.

The application architecture also does not allow direct access to the file system, for example, through a web server. This solution allows you to effectively take into account the load and monitor user activity on the server by any third-party means.

Installation

We took the path of least resistance and, instead of manually installing it, prepared Docker images. The installation is essentially performed by several commands:

user@host:~$ wget https://raw.githubusercontent.com/LTD-Beget/sprutio/master/run.sh

user@host:~$ chmod +x run.sh

user@host:~$ ./run.shrun.sh will check for images, if they aren’t downloaded, and launch 5 containers with system components. To update images, you must run

user@host:~$ ./run.sh pullStopping and deleting images, respectively, are performed via the stop and rm parameters. The assembly dockerfile is in the project code, the assembly takes 10-20 minutes.

How to raise the development environment in the near future we will write on the site and in the wiki on github .

Help us make Sprut.IO better

There are many obvious opportunities for further improvement of the file manager.

As the most useful for users, we see:

- Add SSH / SFTP Support

- Add WebDav Support

- Add terminal

- Add the ability to work with Git

- Add the ability to share files

- Add switching themes design and creation of various themes

- Make a universal interface for working with modules

If you have add-ons that may be useful to users, tell us about them in the comments or on the sprutio-ru@groups.google.com mailing list .

We will begin to implement them, but I will not be afraid to say this: it will take years,

The list of TODOs and the status of the tasks can be seen on the project website in the TODO section .

Thanks for attention! If it will be interesting, then with pleasure we will write even more details about the organization of the project and answer your questions in the comments.

Project site: https://sprut.io

Demo is available at: https://demo.sprut.io:9443

Source code: https://github.com/LTD-Beget/sprutio

Russian mailing list: sprutio-ru @ groups.google.com

English mailing list: sprutio@groups.google.com