Lost art of proof of security. Part 1 of 2

Yuri Pashkov, Kuzma Pashkov - Lead InfoSec , EMC , VMWare trainer @ training.muk.ua

Many years of experience in teaching in the field of "Information Security" (hereinafter referred to as IS) allow us to state positive trends in this area:

At the same time, there are negative ones:

As a result, whole teams of IS support divisions appear, which at all levels of the hierarchy, beginning with the security administrator and ending with the head, thoughtlessly fulfill the requirements of security standards without thinking about proving the security of the automated system after they are completed.

This article demonstrates the capabilities of the evidence-based approach to creating secure automated systems and is educational in nature.

1. Technology for working with data

Suppose that in some organization the following technology is used:

To create documents using multitasking operating system. The operating system (hereinafter referred to as the OS) allows the user to run several programs. Document data is stored in files and processed by OS programs.

Collective work is provided with the help of the workstation of employees, LAN network equipment, a server, and network operating system tools.

2. Security policy

Consider an electoral security policy that can be used in our case:

Security policies and mechanisms to support its implementation form a single, secure information processing environment. This environment has a hierarchical architecture, where the upper levels are represented by security policy requirements, then the user interface follows, then several software protection levels (including OS levels), and finally, the lower level of this structure is represented by hardware protection. At all levels except the top, the requirements of the security policy must be implemented, for which, in fact, the protection mechanisms are responsible.

In different systems, protection mechanisms can be implemented in different ways; their design is determined by the general concept of the system.

However, one requirement must be strictly observed: these mechanisms must adequately implement the requirements of the security policy.

As an example, consider how this security policy can be implemented using the model of our automated system.

3. Model of an automated system

Let us construct a model of an automated system that operates with valuable information. Valuable information is stored in the system in the form of information objects. In addition to valuable information, objects can contain other information, for example, program texts, system service information, etc.

Let time be discrete and take values from N - the set of time values, N = (1,2, ...). Let t∈N denote the current time value.

It is generally accepted that the state of a system at a given moment can be represented as states of a finite set of objects. Therefore, we assume that the state of the system is a set of states of its objects. Objects can be created and destroyed, so we can talk about the set of system objects at some point in time t∈N, which we define as Ot, | Ot | <∞ - the set of system objects at time t. Many objects of course. By an object we mean an arbitrary finite set of words of a certain language I.

For each t∈N from the set Ot of objects of the system, we select a subset St, that is, the set of subjects of the system at time t. The set St consists of the subjects of the system S. By the subject we mean the object that describes the transformation to which the domain is allocated (the domain refers to the resources of the system allocated for conversion) and control is transferred. The transformation to which control is transferred is called a process. Thus, the subject is a pair (domain, process). Each subject can be in two states: in the form of a description in which it is called inactive, and in the form (domain, process) in which it is called activated.

Only another activated subject can activate a subject. On the set S of subjects of the system for each moment of time t∈N we define the graph Гt, the graph of the functioning of the system. The vertices S1 and S2 of the graph are connected by an arc S1 → S2 if and only if, in the case of activation of S1, activation of S2 is possible. If at any time t∈N in the graph Гt, arcs do not enter the vertex S and no arcs exit, then such subjects are excluded from consideration. We denote through the activation procedure of subject S2 subject S1. By activation, we mean the transfer of control by subject S2 to subject S1.

the activation procedure of subject S2 subject S1. By activation, we mean the transfer of control by subject S2 to subject S1.

From the set of St subjects of the system, we single out the subset U - the set of users of the system. By a user we mean a subject S from a set of subjects for which arcs do not enter the vertex S in all the graphs Гt. Users are activated by definition and can activate other system entities. We introduce the concept of a user group, which we will designate as G. A user group is a subset of the set of users U, which includes users who have equal rights to access the objects of collective use of the group. In addition to activation in the system, other types of access are possible. Define the set R - the set of access types. The set is finite, i.e. | R | <∞. Examples of access types are “reading”, “writing”, “execution”.

We denote S → O as the access of the subject S to the object O. Direct access of the subject S of the system to the object O of the system is not always possible.

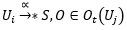

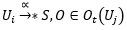

Then we define S → * O - access on behalf of subject S to object O. This means that a sequence of accesses is implemented at a certain time interval [t, t + τ].

Since the functioning of the system is described by the accesses of the activated subjects of the system to the objects of the system, for each moment of time t∈N, we can define

Dt = {O | S → * O at time t}

- the set of objects that are accessed at time t∈N.

In this set, select

Dt (U) = {O | U → * O at time t}

- the set of objects that are accessed on behalf of user U at time t∈N, and also

Dt (G) = {O | Ui → * O, Ui ∈G, i = 1 (1) n at time t}

is the set of objects that can be accessed by a group of users at time t∈N.

From the set of objects that can be accessed, we select a group of objects to which all users access

D = ⋂tDt (Ui), i = 1 (1) n

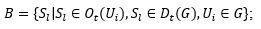

These are general objects of the system. In particular, using tools from D, the user can create objects and destroy objects that do not belong to D. For each user group, you can also select many objects

Dg (G) = ⋂tDt (G)

- objects for collective use of group G.

We assume that some subsystem is constructed from the objects of the system that implements accesses. We will assume that any appeal of a subject for access to an object in this subsystem begins with a request, which we denote .

.

When an object is generated, the subject, activated on behalf of the user, refers to the corresponding procedure, as a result of which an object with a unique name is created. We assume that the corresponding user spawned this object. Denote by Ot (U) the set of objects generated by the user. In a similar way, Ot (G), the set of objects generated by a group of users, can also be defined.

Among all users, let us single out the privileged user of Uadm - the system administrator. This user has a full set of privileges to access shared system objects. At any moment in time t∈N this user is unique.

Now we will express in terms of the model a security policy that defines allowed and unauthorized accesses in the system.

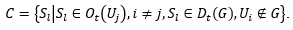

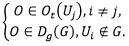

We will consider permissible accesses to objects created by the user himself, as well as belonging to the shared objects of the user group to which this user belongs. Mathematically, this can be formulated as follows:

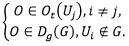

We consider unauthorized access on behalf of a user to objects created by another user, or belonging to shared objects of a user group to which this user does not belong.

4. Proof of the need for security conditions

So, we have built a model of a system that processes valuable information, and formulated statements whose validity in the general case is controversial.

Now it is necessary to prove that, under certain conditions, these statements will be true, in other words, the security policy will be implemented, the system model will be protected and information processing in the system will be safe.

First, we intuitively introduce a number of assumptions that, in our opinion, ensure the security of information processing. Further, we prove that the fulfillment of these assumptions will ensure the implementation of the security policy. From assumptions, we will move on to “services” of a lower level, put forward the conditions under which the system model will be protected, and also prove this fact.

Thus, having proved the security of the model, we can say that the model of the system we built is protected. After the evidence, it can be argued that if the system model is protected, then the system implemented in strict accordance with the conditions formulated in the simulation will also be protected.

Assumption 1. If subject S is activated at time t, then there is a single activated subject S 'in St that activated S. At time t = 0, only users are activated.

Lemma 1. If at the moment t the subject S is activated, then there is a single user U on whose behalf the subject S is activated, that is, there exists a chain

Proof . By Assumption 1, there exists a unique subject Sk that activates S. If Sk = Ui, Ui∈ {U}, then the lemma is proved.

If Sk ≠ Ui, then there is a single subject Sk-1> that activated Sk. Due to the finite time of the system Σ and the fact that only users can be activated at the initial time, we find at the beginning of the chain of one of them. On this, according to the definition of users, the chain breaks. The lemma is proved.

We will model the functioning of the system by the sequence of accesses of the activated subjects of the system to the objects of the system.

Assumption 2. The functioning of the system Σ is described by the sequence of accesses of sets of subjects to sets of objects at each moment of time t∈N.

Lemma 2. For each t∈N, for each O∈Ot, O∉D, there is a unique user U such that O∈Ot (U), that is, who generated it.

Evidence. Since O0 = {Ui} ∪D, then the object O∈Ot, O∉D is generated at some moment s, 0≤s≤t. Then in Os there was an activated subject S, who created O. Then, as noted earlier, there is a single user U, who gave birth to O. The lemma is proved.

One of the drawbacks of a discretionary security policy is the free flow of rights. Suppose that only one user in the system has all the rights to access the general objects of the system, thus being their owner, and he does not transfer these rights in full to anyone.

Assumption 3. In the system Σ at each moment in time t∈N there is a single user Uadm who has all rights with respect to the general objects of the system.

Suppose that it is not possible to store any valuable information in shared objects.

Assumption 4 . If O∈D, then accesses of the form , for any ρ1, ρ2⊆R, cannot create an information leakage channel.

, for any ρ1, ρ2⊆R, cannot create an information leakage channel.

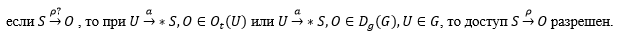

Suppose that the order of the system when trying to contact a subject activated on behalf of a user to an object of the system is as follows.

Assumption 5 . If some subject S, S∈D is activated on behalf of the user Ui (i.e. ), in turn, subject S is granted access to object O at time t, then either O∈D or O∈Ot (Ui) or O ∈Dg (G), Ui∈G, or the system crashes access.

), in turn, subject S is granted access to object O at time t, then either O∈D or O∈Ot (Ui) or O ∈Dg (G), Ui∈G, or the system crashes access.

Theorem 1. Let assumptions 1-5 and security policy rules be fulfilled in the constructed system. Then unauthorized access is impossible in the system.

Proof . Assume the opposite, i.e., that at some point in time t there is such a kind of access p, in which, on behalf of a user Ui who is not a member of the group, access is made to object O of another user Uj or to common objects of another group:

for

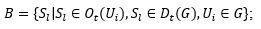

Let S1, S2 , ..., Sm - all activated subjects with access βi⊆ρ, i = 1,2, ..., m at time t to the object O. Then, according to Lemma 2, the set of these subjects is divided into three subsets:

General objects:

Subjects being objects generated on behalf of user Ui or from group G:

Unauthorized access entities (by assumption):

According to Lemma 1, for any Sl, l = 1,2, ..., m, there is a single user on whose behalf the subject Sl is activated. If Sl∈A, then, according to Assumption 5 and the condition of Theorem 1, that access is allowed, we obtain that Sl is activated on behalf of Uj or Ui∈G. This contradicts the assumption.

allowed, we obtain that Sl is activated on behalf of Uj or Ui∈G. This contradicts the assumption.

If Sl∈B, then access is not possible according to the security policy.

not possible according to the security policy.

Therefore, if , then there exists a chain of length (k + 1):

, then there exists a chain of length (k + 1):

and a subject S (k) ∈C.

Then there exists a chain of length k such that

Repeating these arguments, through k steps we get that

Last access is not possible if the security policy is being implemented. Therefore, the assumption is false and Theorem 1 is proved.

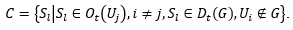

Now we’ll build a lot of “services” of a lower level that are more convenient for implementation in an automated system that support a security policy, that is, we want to define many conditions implemented in the system, such that it is possible to prove the theorem on the sufficiency of the implementation of the rules of the security policy.

Condition 1 (identification and authentication) . If for any, then the membership functions of S and O to the sets Ot (Ui), i = 1 (1) n; D; Dg (Gk), k = 1 (1) g.

then the membership functions of S and O to the sets Ot (Ui), i = 1 (1) n; D; Dg (Gk), k = 1 (1) g.

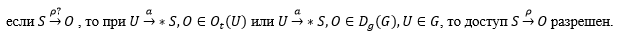

Condition 2 (permissive subsystem) . If at time t, then it follows from t = j

at time t, then it follows from t = j  and from t ≠ j it follows

and from t ≠ j it follows  . Similarly, if

. Similarly, if at time t, then follows

at time t, then follows  from U∈G and from U∉G follows

from U∈G and from U∉G follows  .

.

Condition 3 (no workarounds) . For any t∈N, ρ⊆R, if the subject S, activated at time t, got access at time t , then at time t there was an access request

, then at time t there was an access request  .

.

Theorem 2. If conditions 1-3 are fulfilled in the constructed system, then the security policy is fulfilled (the sufficiency of conditions for the implementation of the policy).

Evidence. The statement of the theorem consists of two statements:

a) if for arbitrary ρ⊆R,

then access is allowed;

allowed;

b) if for an arbitrary ρ⊆R,

then any access at the moment t of the subject S to the object O is impossible.

Let us prove assertion a). If then, by condition 1, membership functions are calculated and the membership of subject S and object O is determined to the sets Ot (Ui), i = 1 (1) n; D; Dg (Gk), k = 1 (1) g. If Ui * S, O∈Ot (Uj) and i = j, then condition 2 is sent and access is allowed. If the object O∈Dg (G), i.e., is the object of collective use of a group of users, and also the user U∈G is a member of this group, then, according to condition 2, the user is also allowed.

then, by condition 1, membership functions are calculated and the membership of subject S and object O is determined to the sets Ot (Ui), i = 1 (1) n; D; Dg (Gk), k = 1 (1) g. If Ui * S, O∈Ot (Uj) and i = j, then condition 2 is sent and access is allowed. If the object O∈Dg (G), i.e., is the object of collective use of a group of users, and also the user U∈G is a member of this group, then, according to condition 2, the user is also allowed.

We now prove assertion b). If membership functions are calculated and it is determined that

membership functions are calculated and it is determined that  i ≠ j, then by condition 2 access is

i ≠ j, then by condition 2 access is  not allowed. Similarly, if it is determined

not allowed. Similarly, if it is determined  that access is not allowed, according to the premise of condition 2.

that access is not allowed, according to the premise of condition 2.

If access became possible bypassing the request

became possible bypassing the request and subject S is activated at time t, then the assumption is contrary to condition 3. If subject S is not activated, then having access

and subject S is activated at time t, then the assumption is contrary to condition 3. If subject S is not activated, then having access  contradicts the definition of access. The theorem is proved.

contradicts the definition of access. The theorem is proved.

A consequence of Theorem 2 is the fact that, having ensured that conditions 1–3 are satisfied when the system is functioning, we guarantee that the security policy is fulfilled.

Thus, if assumptions 1-5 are true and conditions 1-3 are fulfilled during the functioning of the system, then unauthorized access to system objects is impossible.

Since both assumptions and conditions were formulated in relation to the model of an automated system, mechanisms should be implemented in a real system to ensure their unconditional fulfillment.

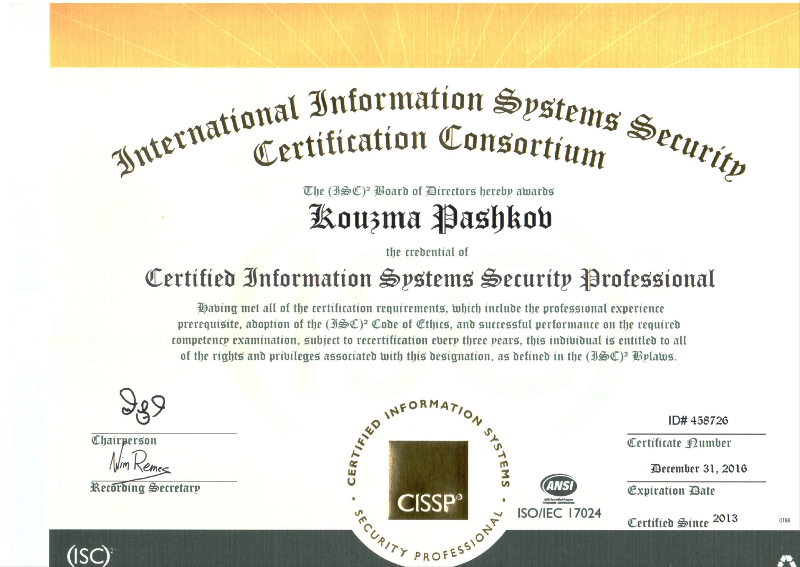

To be continued. We are waiting for questions on training and certification of CISSP / CISM / CISA / Security + at PashkovK@muk.com.ua

Training courses on information security conducted by one of the authors of the article Kuzma Pashkov (CC MUK - Kiev)

Next courses on Information Security (conducted by Kuzma Pashkov)

January 18 - 22, 2016 - MUK-S0101 SecurityPlus

February 1 - 5, 2016 - MUK-S0102 CISA

Many years of experience in teaching in the field of "Information Security" (hereinafter referred to as IS) allow us to state positive trends in this area:

- Business owners, finally, began to consider IS risks as significant as financial and operational, and increasingly they seek not only trusted, but qualified advisers for positions of the CSO level (Chief Security Officer)

- The normative approach to building security systems allowed IS to become a mass-consumed service

- Explosive growth of IS risks supports stable high demand and supply of training services in the relevant field in the market

At the same time, there are negative ones:

- It’s also difficult to confirm your qualification in information security today, especially if successful work experience is gained in the CIS countries and the potential employer is in the USA / Europe

- Massiveness leads to the problem of consumerization of information security

- The quality of training services and the qualifications of specialists are falling

As a result, whole teams of IS support divisions appear, which at all levels of the hierarchy, beginning with the security administrator and ending with the head, thoughtlessly fulfill the requirements of security standards without thinking about proving the security of the automated system after they are completed.

This article demonstrates the capabilities of the evidence-based approach to creating secure automated systems and is educational in nature.

1. Technology for working with data

Suppose that in some organization the following technology is used:

- In the organization, employees work in several different departments that solve interrelated but different tasks;

- the solution to the problems of each department is automated; To solve the problems of the department, a common database is used, accessible to all employees of the department as part of teamwork. The content of the database is not a secret, but its availability is a critical parameter for the activities of the department and the organization as a whole. In other words, if the database is not available, then the organization will suffer damage;

- when employees perform official duties, confidential information contained in documents is used. In other words, if the content of the documents becomes known to an unlimited number of people, then the organization may be damaged;

- documents are developed and printed by employees using automation tools at their automated workstations (hereinafter - AWP) connected to a local area network (hereinafter - LAN).

To create documents using multitasking operating system. The operating system (hereinafter referred to as the OS) allows the user to run several programs. Document data is stored in files and processed by OS programs.

Collective work is provided with the help of the workstation of employees, LAN network equipment, a server, and network operating system tools.

2. Security policy

Consider an electoral security policy that can be used in our case:

- Organization documents and databases are valuable. A system should be implemented to differentiate access to documents and databases on the basis of a set of organizational and technical measures and means of protection;

- closed automation system - only organization officials have access to documents and databases;

- the management of the organization represented by the system administrator has the right to access all documents and databases;

- the head of the organization with the help of the administrator determines the rights for employees to access documents and databases;

- employees have the right to access objects created by themselves, as well as being an object of collective use of the user group to which this employee belongs;

- access on behalf of a user to objects created by another user or belonging to shared objects of a group of users to which this user does not belong is prohibited.

Security policies and mechanisms to support its implementation form a single, secure information processing environment. This environment has a hierarchical architecture, where the upper levels are represented by security policy requirements, then the user interface follows, then several software protection levels (including OS levels), and finally, the lower level of this structure is represented by hardware protection. At all levels except the top, the requirements of the security policy must be implemented, for which, in fact, the protection mechanisms are responsible.

In different systems, protection mechanisms can be implemented in different ways; their design is determined by the general concept of the system.

However, one requirement must be strictly observed: these mechanisms must adequately implement the requirements of the security policy.

As an example, consider how this security policy can be implemented using the model of our automated system.

3. Model of an automated system

Let us construct a model of an automated system that operates with valuable information. Valuable information is stored in the system in the form of information objects. In addition to valuable information, objects can contain other information, for example, program texts, system service information, etc.

Let time be discrete and take values from N - the set of time values, N = (1,2, ...). Let t∈N denote the current time value.

It is generally accepted that the state of a system at a given moment can be represented as states of a finite set of objects. Therefore, we assume that the state of the system is a set of states of its objects. Objects can be created and destroyed, so we can talk about the set of system objects at some point in time t∈N, which we define as Ot, | Ot | <∞ - the set of system objects at time t. Many objects of course. By an object we mean an arbitrary finite set of words of a certain language I.

For each t∈N from the set Ot of objects of the system, we select a subset St, that is, the set of subjects of the system at time t. The set St consists of the subjects of the system S. By the subject we mean the object that describes the transformation to which the domain is allocated (the domain refers to the resources of the system allocated for conversion) and control is transferred. The transformation to which control is transferred is called a process. Thus, the subject is a pair (domain, process). Each subject can be in two states: in the form of a description in which it is called inactive, and in the form (domain, process) in which it is called activated.

Only another activated subject can activate a subject. On the set S of subjects of the system for each moment of time t∈N we define the graph Гt, the graph of the functioning of the system. The vertices S1 and S2 of the graph are connected by an arc S1 → S2 if and only if, in the case of activation of S1, activation of S2 is possible. If at any time t∈N in the graph Гt, arcs do not enter the vertex S and no arcs exit, then such subjects are excluded from consideration. We denote through

the activation procedure of subject S2 subject S1. By activation, we mean the transfer of control by subject S2 to subject S1.

the activation procedure of subject S2 subject S1. By activation, we mean the transfer of control by subject S2 to subject S1.From the set of St subjects of the system, we single out the subset U - the set of users of the system. By a user we mean a subject S from a set of subjects for which arcs do not enter the vertex S in all the graphs Гt. Users are activated by definition and can activate other system entities. We introduce the concept of a user group, which we will designate as G. A user group is a subset of the set of users U, which includes users who have equal rights to access the objects of collective use of the group. In addition to activation in the system, other types of access are possible. Define the set R - the set of access types. The set is finite, i.e. | R | <∞. Examples of access types are “reading”, “writing”, “execution”.

We denote S → O as the access of the subject S to the object O. Direct access of the subject S of the system to the object O of the system is not always possible.

Then we define S → * O - access on behalf of subject S to object O. This means that a sequence of accesses is implemented at a certain time interval [t, t + τ].

Since the functioning of the system is described by the accesses of the activated subjects of the system to the objects of the system, for each moment of time t∈N, we can define

Dt = {O | S → * O at time t}

- the set of objects that are accessed at time t∈N.

In this set, select

Dt (U) = {O | U → * O at time t}

- the set of objects that are accessed on behalf of user U at time t∈N, and also

Dt (G) = {O | Ui → * O, Ui ∈G, i = 1 (1) n at time t}

is the set of objects that can be accessed by a group of users at time t∈N.

From the set of objects that can be accessed, we select a group of objects to which all users access

D = ⋂tDt (Ui), i = 1 (1) n

These are general objects of the system. In particular, using tools from D, the user can create objects and destroy objects that do not belong to D. For each user group, you can also select many objects

Dg (G) = ⋂tDt (G)

- objects for collective use of group G.

We assume that some subsystem is constructed from the objects of the system that implements accesses. We will assume that any appeal of a subject for access to an object in this subsystem begins with a request, which we denote

.

. When an object is generated, the subject, activated on behalf of the user, refers to the corresponding procedure, as a result of which an object with a unique name is created. We assume that the corresponding user spawned this object. Denote by Ot (U) the set of objects generated by the user. In a similar way, Ot (G), the set of objects generated by a group of users, can also be defined.

Among all users, let us single out the privileged user of Uadm - the system administrator. This user has a full set of privileges to access shared system objects. At any moment in time t∈N this user is unique.

Now we will express in terms of the model a security policy that defines allowed and unauthorized accesses in the system.

We will consider permissible accesses to objects created by the user himself, as well as belonging to the shared objects of the user group to which this user belongs. Mathematically, this can be formulated as follows:

We consider unauthorized access on behalf of a user to objects created by another user, or belonging to shared objects of a user group to which this user does not belong.

4. Proof of the need for security conditions

So, we have built a model of a system that processes valuable information, and formulated statements whose validity in the general case is controversial.

Now it is necessary to prove that, under certain conditions, these statements will be true, in other words, the security policy will be implemented, the system model will be protected and information processing in the system will be safe.

First, we intuitively introduce a number of assumptions that, in our opinion, ensure the security of information processing. Further, we prove that the fulfillment of these assumptions will ensure the implementation of the security policy. From assumptions, we will move on to “services” of a lower level, put forward the conditions under which the system model will be protected, and also prove this fact.

Thus, having proved the security of the model, we can say that the model of the system we built is protected. After the evidence, it can be argued that if the system model is protected, then the system implemented in strict accordance with the conditions formulated in the simulation will also be protected.

Assumption 1. If subject S is activated at time t, then there is a single activated subject S 'in St that activated S. At time t = 0, only users are activated.

Lemma 1. If at the moment t the subject S is activated, then there is a single user U on whose behalf the subject S is activated, that is, there exists a chain

Proof . By Assumption 1, there exists a unique subject Sk that activates S. If Sk = Ui, Ui∈ {U}, then the lemma is proved.

If Sk ≠ Ui, then there is a single subject Sk-1> that activated Sk. Due to the finite time of the system Σ and the fact that only users can be activated at the initial time, we find at the beginning of the chain of one of them. On this, according to the definition of users, the chain breaks. The lemma is proved.

We will model the functioning of the system by the sequence of accesses of the activated subjects of the system to the objects of the system.

Assumption 2. The functioning of the system Σ is described by the sequence of accesses of sets of subjects to sets of objects at each moment of time t∈N.

Lemma 2. For each t∈N, for each O∈Ot, O∉D, there is a unique user U such that O∈Ot (U), that is, who generated it.

Evidence. Since O0 = {Ui} ∪D, then the object O∈Ot, O∉D is generated at some moment s, 0≤s≤t. Then in Os there was an activated subject S, who created O. Then, as noted earlier, there is a single user U, who gave birth to O. The lemma is proved.

One of the drawbacks of a discretionary security policy is the free flow of rights. Suppose that only one user in the system has all the rights to access the general objects of the system, thus being their owner, and he does not transfer these rights in full to anyone.

Assumption 3. In the system Σ at each moment in time t∈N there is a single user Uadm who has all rights with respect to the general objects of the system.

Suppose that it is not possible to store any valuable information in shared objects.

Assumption 4 . If O∈D, then accesses of the form

, for any ρ1, ρ2⊆R, cannot create an information leakage channel.

, for any ρ1, ρ2⊆R, cannot create an information leakage channel. Suppose that the order of the system when trying to contact a subject activated on behalf of a user to an object of the system is as follows.

Assumption 5 . If some subject S, S∈D is activated on behalf of the user Ui (i.e.

), in turn, subject S is granted access to object O at time t, then either O∈D or O∈Ot (Ui) or O ∈Dg (G), Ui∈G, or the system crashes access.

), in turn, subject S is granted access to object O at time t, then either O∈D or O∈Ot (Ui) or O ∈Dg (G), Ui∈G, or the system crashes access.Theorem 1. Let assumptions 1-5 and security policy rules be fulfilled in the constructed system. Then unauthorized access is impossible in the system.

Proof . Assume the opposite, i.e., that at some point in time t there is such a kind of access p, in which, on behalf of a user Ui who is not a member of the group, access is made to object O of another user Uj or to common objects of another group:

for

Let S1, S2 , ..., Sm - all activated subjects with access βi⊆ρ, i = 1,2, ..., m at time t to the object O. Then, according to Lemma 2, the set of these subjects is divided into three subsets:

General objects:

Subjects being objects generated on behalf of user Ui or from group G:

Unauthorized access entities (by assumption):

According to Lemma 1, for any Sl, l = 1,2, ..., m, there is a single user on whose behalf the subject Sl is activated. If Sl∈A, then, according to Assumption 5 and the condition of Theorem 1, that access is

allowed, we obtain that Sl is activated on behalf of Uj or Ui∈G. This contradicts the assumption.

allowed, we obtain that Sl is activated on behalf of Uj or Ui∈G. This contradicts the assumption. If Sl∈B, then access is

not possible according to the security policy.

not possible according to the security policy. Therefore, if

, then there exists a chain of length (k + 1):

, then there exists a chain of length (k + 1):

and a subject S (k) ∈C.

Then there exists a chain of length k such that

Repeating these arguments, through k steps we get that

Last access is not possible if the security policy is being implemented. Therefore, the assumption is false and Theorem 1 is proved.

Now we’ll build a lot of “services” of a lower level that are more convenient for implementation in an automated system that support a security policy, that is, we want to define many conditions implemented in the system, such that it is possible to prove the theorem on the sufficiency of the implementation of the rules of the security policy.

Condition 1 (identification and authentication) . If for any,

then the membership functions of S and O to the sets Ot (Ui), i = 1 (1) n; D; Dg (Gk), k = 1 (1) g.

then the membership functions of S and O to the sets Ot (Ui), i = 1 (1) n; D; Dg (Gk), k = 1 (1) g. Condition 2 (permissive subsystem) . If

at time t, then it follows from t = j

at time t, then it follows from t = j  and from t ≠ j it follows

and from t ≠ j it follows  . Similarly, if

. Similarly, if at time t, then follows

at time t, then follows  from U∈G and from U∉G follows

from U∈G and from U∉G follows  .

. Condition 3 (no workarounds) . For any t∈N, ρ⊆R, if the subject S, activated at time t, got access at time t

, then at time t there was an access request

, then at time t there was an access request  .

. Theorem 2. If conditions 1-3 are fulfilled in the constructed system, then the security policy is fulfilled (the sufficiency of conditions for the implementation of the policy).

Evidence. The statement of the theorem consists of two statements:

a) if for arbitrary ρ⊆R,

then access is

allowed;

allowed; b) if for an arbitrary ρ⊆R,

then any access at the moment t of the subject S to the object O is impossible.

Let us prove assertion a). If

then, by condition 1, membership functions are calculated and the membership of subject S and object O is determined to the sets Ot (Ui), i = 1 (1) n; D; Dg (Gk), k = 1 (1) g. If Ui * S, O∈Ot (Uj) and i = j, then condition 2 is sent and access is allowed. If the object O∈Dg (G), i.e., is the object of collective use of a group of users, and also the user U∈G is a member of this group, then, according to condition 2, the user is also allowed.

then, by condition 1, membership functions are calculated and the membership of subject S and object O is determined to the sets Ot (Ui), i = 1 (1) n; D; Dg (Gk), k = 1 (1) g. If Ui * S, O∈Ot (Uj) and i = j, then condition 2 is sent and access is allowed. If the object O∈Dg (G), i.e., is the object of collective use of a group of users, and also the user U∈G is a member of this group, then, according to condition 2, the user is also allowed. We now prove assertion b). If

membership functions are calculated and it is determined that

membership functions are calculated and it is determined that  i ≠ j, then by condition 2 access is

i ≠ j, then by condition 2 access is  not allowed. Similarly, if it is determined

not allowed. Similarly, if it is determined  that access is not allowed, according to the premise of condition 2.

that access is not allowed, according to the premise of condition 2. If access

became possible bypassing the request

became possible bypassing the request and subject S is activated at time t, then the assumption is contrary to condition 3. If subject S is not activated, then having access

and subject S is activated at time t, then the assumption is contrary to condition 3. If subject S is not activated, then having access  contradicts the definition of access. The theorem is proved.

contradicts the definition of access. The theorem is proved. A consequence of Theorem 2 is the fact that, having ensured that conditions 1–3 are satisfied when the system is functioning, we guarantee that the security policy is fulfilled.

Thus, if assumptions 1-5 are true and conditions 1-3 are fulfilled during the functioning of the system, then unauthorized access to system objects is impossible.

Since both assumptions and conditions were formulated in relation to the model of an automated system, mechanisms should be implemented in a real system to ensure their unconditional fulfillment.

To be continued. We are waiting for questions on training and certification of CISSP / CISM / CISA / Security + at PashkovK@muk.com.ua

Training courses on information security conducted by one of the authors of the article Kuzma Pashkov (CC MUK - Kiev)

Next courses on Information Security (conducted by Kuzma Pashkov)

January 18 - 22, 2016 - MUK-S0101 SecurityPlus

February 1 - 5, 2016 - MUK-S0102 CISA