New network architectures: open or closed solutions?

Modern organizations want to introduce new services and applications, but outdated network infrastructure that cannot support innovation often becomes a stumbling block. Technologies built on open standards are meant to solve this problem.

Since the era of mainframe dominance ended, the standards-based approach has become firmly established in IT: customers almost always prefer standard solutions. Standards let you combine equipment from different manufacturers, choose best-in-class products, and optimize the cost of the solution. In the network industry, however, things are not so simple.

Closed systems still dominate the network market, and compatibility between solutions from different manufacturers is ensured, at best, at the interface level. Despite the standardization of interfaces, protocol stacks, and network architectures, the network and communication equipment of various vendors is often proprietary. For example, even deploying modern network fabrics such as Brocade Virtual Cluster Switch, Cisco FabricPath, or Juniper QFabric involves replacing existing switches, which is far from cheap. And that says nothing of the "last-century" technologies that still work but hold back the further development of networks and the applications running on them.

The evolution of networks: from proprietary to open solutions.

Recent studies show a gap between what network equipment vendors offer and what their customers prefer. For example, according to one survey, 67% of customers believe that proprietary products should be avoided whenever possible, while 32% accept their use. Only 1% of respondents believe that proprietary products and tools provide better integration and compatibility than standard ones. In other words, most customers prefer standards-based solutions in principle, yet vendors mostly offer proprietary networking products.

In practice, when buying new equipment or expanding their network infrastructure, customers often choose solutions from the same vendor or the same product family. The reasons are inertia and a desire to minimize risk when upgrading critical systems. However, standards-based products are much easier to replace, even with products from other manufacturers. Moreover, under certain conditions, combining systems from different vendors can provide a functional network solution at a reasonable price and reduce the total cost of ownership.

This does not mean you should avoid proprietary technologies, that is, technologies that are not described by an open standard and are unique to a particular vendor. They usually implement innovative functions and tools, and proprietary solutions and protocols often deliver better performance than open standards. When choosing such technologies, however, you should minimize (or better, exclude) their use at the boundaries of individual segments or technological nodes of the network infrastructure, which is especially important in multi-vendor networks. Examples of such segments are the access, aggregation, and core layers, the boundary between the local and wide area networks, and segments that implement network applications (for example, load balancing or traffic optimization).

Simply put, proprietary technologies should be confined to segments that implement specialized network functions and/or applications (the typical “building blocks” of a network). When non-standard proprietary technologies form the basis of the entire corporate network or of large network domains, the risk of customer lock-in to a single manufacturer increases.
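The boundary rule above can be expressed as a simple design check. The sketch below is a hypothetical illustration, not a vendor tool: it assumes each link is tagged with the segments (building blocks) at its two ends and the protocol it runs, and it treats a hand-picked set of protocol names (`STANDARD_PROTOCOLS`, an assumption you would replace with your own policy) as open standards. Links inside a single segment may use anything; only inter-segment links are checked.

```python
from dataclasses import dataclass

# Assumed policy for this sketch: protocol names treated as open standards.
STANDARD_PROTOCOLS = {"OSPFv2", "OSPFv3", "BGP", "LACP", "VXLAN", "TRILL", "SPB"}


@dataclass(frozen=True)
class Link:
    """A link between two segments ("building blocks") and the protocol it runs."""
    segment_a: str
    segment_b: str
    protocol: str


def boundary_violations(links: list[Link]) -> list[Link]:
    """Return inter-segment links that run a protocol outside STANDARD_PROTOCOLS.

    Links whose ends are in the same segment are skipped: proprietary
    protocols are tolerated inside a building block.
    """
    return [
        link
        for link in links
        if link.segment_a != link.segment_b
        and link.protocol not in STANDARD_PROTOCOLS
    ]


links = [
    Link("core", "core", "vendor-cluster"),    # hypothetical proprietary protocol inside one segment
    Link("core", "aggregation", "OSPFv2"),     # standard protocol at a boundary
    Link("aggregation", "wan-edge", "EIGRP"),  # proprietary protocol at a boundary: flagged
]
for link in boundary_violations(links):
    print(link)  # Link(segment_a='aggregation', segment_b='wan-edge', protocol='EIGRP')
```

The check deliberately ignores intra-segment links, mirroring the text's advice that proprietary features are acceptable inside a block but risky at its edges.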

Hierarchical and flat networks

The purpose of building a corporate data network, whether for a geographically distributed company or for a data center, is to support business applications. The corporate network is one of the most important tools for business development: in a geographically distributed company, the business often depends on how reliably and flexibly its divisions work together. Corporate networks are built on the principle of dividing the network into "building blocks", each with its own functions and implementation specifics. Industry-accepted standards make it possible to use network equipment from different vendors as such building blocks. Private (proprietary) protocols limit customers' freedom of choice, which ultimately limits business flexibility and increases costs. Using standardized solutions,

Modern large networks are very complex because they involve many protocols, configurations, and technologies. A hierarchy lets you organize all these components into a model that is easy to analyze. The hierarchical model helps in designing, implementing, and maintaining scalable, reliable, and cost-effective interconnected networks.

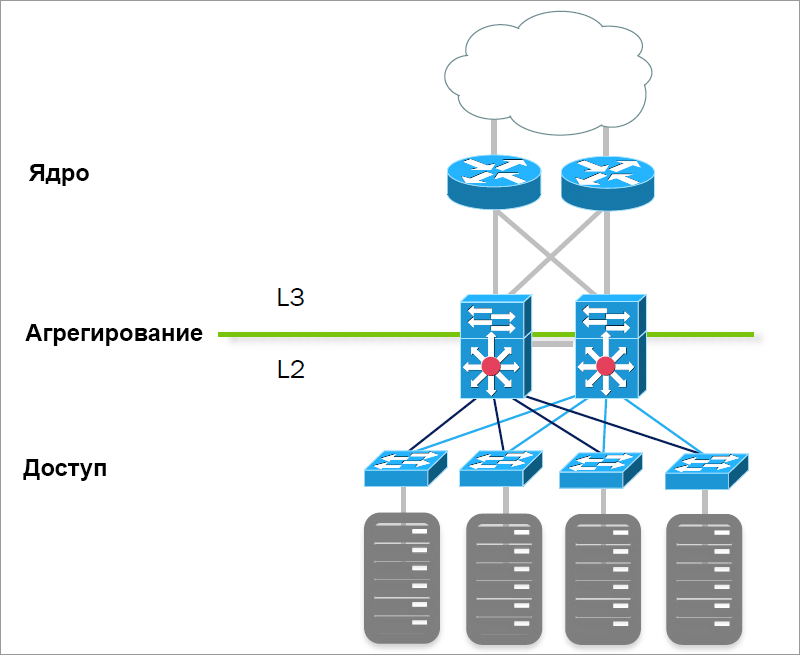

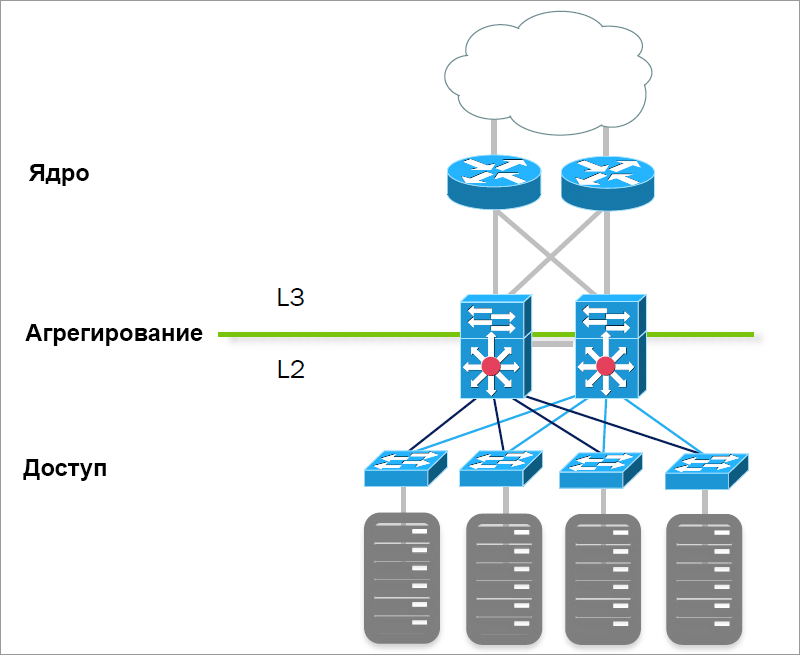

Three-tier corporate network architecture.

The traditional corporate network architecture has three layers: access, aggregation/distribution, and core. Each performs specific network functions.

The core layer is the foundation of the entire network. To achieve maximum performance, routing functions and traffic management policies are moved to the aggregation/distribution layer, which is responsible for correct packet routing and for traffic policies. The task of the distribution layer is to aggregate all access-layer switches into a single network, which can significantly reduce the number of connections. As a rule, the most important network services and other network modules are connected to the distribution switches. The access layer connects clients to the network. Data center networks were built according to a similar scheme.
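To make "significantly reduce the number of connections" concrete, here is a rough count under stated assumptions: the alternative is taken to be a full mesh of direct links between N access switches, while the hierarchical design dual-homes each access switch to a pair of distribution switches that are linked to each other. The function names are hypothetical, and real designs vary in redundancy and link counts.

```python
def full_mesh_links(n_access: int) -> int:
    """Links needed to connect every access switch directly to every other one."""
    return n_access * (n_access - 1) // 2


def distribution_links(n_access: int, n_distribution: int = 2) -> int:
    """Links when every access switch uplinks to every distribution switch.

    Assumes the distribution switches are fully meshed among themselves.
    """
    uplinks = n_access * n_distribution
    inter_distribution = n_distribution * (n_distribution - 1) // 2
    return uplinks + inter_distribution


for n in (10, 50, 200):
    print(n, full_mesh_links(n), distribution_links(n))
# 10 45 21
# 50 1225 101
# 200 19900 401
```

The full mesh grows quadratically with the number of access switches, while the hierarchical design grows linearly, which is the saving the distribution layer provides.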

Outdated three-tier network architecture in the data center.

Traditional three-tier architectures are designed around the client-server traffic paradigm. As virtualization and application integration technologies develop further, the volume of traffic between servers grows. Analysts say that the dominant traffic pattern is shifting from north-south to east-west, i.e., traffic between servers now significantly predominates over traffic between servers and clients.
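One rough way to see the north-south versus east-west split in a given network is to classify flow records by whether both endpoints sit inside the data center. The sketch below assumes flow records as simple `(src, dst, bytes)` tuples and a hypothetical data-center prefix (`10.0.0.0/8`); real flow data, for example from a flow collector, would need its own parsing.

```python
import ipaddress
from collections import Counter

# Hypothetical data-center address space, used only for this example.
DC_PREFIXES = [ipaddress.ip_network("10.0.0.0/8")]


def in_dc(addr: str) -> bool:
    """True if the address belongs to one of the data-center prefixes."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in DC_PREFIXES)


def direction(src: str, dst: str) -> str:
    """East-west if both endpoints are inside the data center, otherwise north-south."""
    return "east-west" if in_dc(src) and in_dc(dst) else "north-south"


def traffic_mix(flows) -> Counter:
    """Sum bytes per direction for (src, dst, bytes) flow records."""
    totals = Counter()
    for src, dst, nbytes in flows:
        totals[direction(src, dst)] += nbytes
    return totals


flows = [
    ("10.1.0.5", "10.2.0.9", 800),      # VM to database: east-west
    ("10.1.0.5", "10.3.0.7", 600),      # VM to storage: east-west
    ("203.0.113.20", "10.1.0.5", 100),  # external client to web tier: north-south
]
mix = traffic_mix(flows)
print(mix["east-west"] / sum(mix.values()))  # 1400 / 1500, i.e. about 0.93
```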

In a data center network, the access layer corresponds to the edge of the server farm. Here the three-tier architecture is poorly optimized for traffic between individual physical servers: instead of crossing one (or at most two) network layers, a packet traverses all three, and the unnecessary up-and-down path adds latency in both directions.

That is, traffic between servers passes through the access, aggregation, and core layers and back again suboptimally, which needlessly lengthens the path and increases the number of packet-processing stages in network devices. Hierarchical networks are poorly suited to data exchange between servers and do not fully meet the requirements of modern data centers with dense server farms and intensive inter-server traffic. Such a network typically relies on traditional protocols for loop prevention, device redundancy, and link aggregation. Its drawbacks include high latency, slow convergence, rigidity, and limited scalability. Instead of the traditional tree topology, more efficient topologies (Clos / leaf-spine / collapsed) are needed.
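The path-length argument can be checked on toy topologies. This sketch counts the switches a packet crosses between two servers using a breadth-first shortest path. The topologies are hypothetical and simplified (single uplinks in the three-tier tree, which does not change the hop count), and the two servers are placed in different aggregation blocks, the case the text describes.

```python
from collections import deque


def build_graph(edges):
    """Turn a list of (a, b) links into an undirected adjacency list."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    return graph


def switches_on_path(graph, src, dst):
    """Count switches on the shortest path between two servers (BFS).

    Nodes named 'srv...' are servers; every other node is a switch.
    Assumes dst is reachable from src.
    """
    parent = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for nxt in graph[node]:
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    # Walk back from dst to src and count the switch nodes on the way.
    path, node = [], dst
    while node is not None:
        path.append(node)
        node = parent[node]
    return sum(1 for name in path if not name.startswith("srv"))


# Three-tier tree: servers in different aggregation blocks.
three_tier = build_graph([
    ("srv1", "acc1"), ("acc1", "agg1"), ("agg1", "core"),
    ("core", "agg2"), ("agg2", "acc2"), ("acc2", "srv2"),
])

# Leaf-spine: every leaf connects to every spine.
leaf_spine = build_graph([
    ("srv1", "leaf1"), ("srv2", "leaf2"),
    ("leaf1", "spine1"), ("leaf1", "spine2"),
    ("leaf2", "spine1"), ("leaf2", "spine2"),
])

print(switches_on_path(three_tier, "srv1", "srv2"))  # 5: acc, agg, core, agg, acc
print(switches_on_path(leaf_spine, "srv1", "srv2"))  # 3: leaf, spine, leaf
```

In the leaf-spine case, every server pair on different leaves is the same distance apart, which is what makes east-west latency predictable.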

HP simplifies network architecture from three-tier (typical of traditional Cisco network architectures) to two-tier or single-tier.

The current trend is that more and more customers build flat Layer 2 (L2) networks. In data center networks, this shift is driven by the growing number of server-to-server and server-to-storage flows. This approach simplifies network planning and implementation, reduces operating and capital costs, and improves network performance.

In the data center, a flat L2 network better meets the needs of application virtualization, allowing virtual machines to move efficiently between physical hosts. Another advantage, achieved with effective clustering/stacking technologies, is that STP/RSTP/MSTP are no longer needed. Combined with virtual switches, this architecture protects against loops without STP, and after a failure the network converges an order of magnitude faster than with traditional STP-family protocols.
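Why avoiding STP matters becomes clearer if you count what a spanning tree disables. STP-family protocols reduce the active L2 topology to a tree, so in a connected network of V switches and E links, E - (V - 1) links end up blocked. The sketch below builds one spanning tree by breadth-first search as a stand-in for STP; real STP elects the root and port roles by bridge ID and path cost, so the specific blocked links may differ, but their number does not. It assumes no parallel links between the same pair of switches, and the topology is a hypothetical pair of aggregation switches with four dual-homed access switches.

```python
from collections import deque


def spanning_tree_blocked_links(edges):
    """Return the links left blocked once the topology is reduced to one spanning tree.

    BFS from the first switch stands in for STP's root bridge. Assumes a
    connected topology without parallel links.
    """
    graph = {}
    for a, b in edges:
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    root = edges[0][0]
    seen = {root}
    tree = set()
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                tree.add(frozenset((node, nxt)))  # this link forwards
                queue.append(nxt)
    return [link for link in edges if frozenset(link) not in tree]


# Hypothetical topology: two aggregation switches, four dual-homed access switches.
access = ["acc1", "acc2", "acc3", "acc4"]
edges = [("agg1", "agg2")] + [(a, agg) for a in access for agg in ("agg1", "agg2")]
blocked = spanning_tree_blocked_links(edges)
print(len(edges), len(blocked))  # 9 4
print(blocked)  # [('acc1', 'agg2'), ('acc2', 'agg2'), ('acc3', 'agg2'), ('acc4', 'agg2')]
```

Here every access switch loses one of its two uplinks to blocking. When the two aggregation switches are clustered or stacked into one logical device, as the text describes, both uplinks can typically be bundled into a single aggregated link, so both stay active.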

The network architecture of a modern data center must efficiently carry large volumes of dynamic traffic. This traffic results from a significant increase in the number of virtual machines and in the level of application integration. The growing role of IT infrastructure virtualization technologies based on the concept of software-defined networking (SDN) is worth noting here.

The SDN concept now extends not only to the network infrastructure of individual sites but also to computing resources and storage systems, both within individual data centers and across geographically distributed ones (examples of the latter are HP Virtual Cloud Networking (VCN) and HP Distributed Cloud Networking (DCN)).

A key feature of the SDN concept is combining physical and virtual network resources and their functionality within a single virtual network. It is important to understand, however, that although network virtualization solutions (the overlay) can run on top of any network, the performance and availability of applications and services depend largely on the performance and parameters of the physical infrastructure (the underlay). Combining an optimized physical architecture with an adaptive virtual one therefore makes it possible to build unified network infrastructures that efficiently carry large flows of dynamic traffic as applications demand.
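One concrete way the underlay constrains the overlay is packet size. VXLAN, one of the overlay technologies this article lists for HP devices, wraps each Ethernet frame in new VXLAN, UDP, IP, and Ethernet headers (RFC 7348), so the underlay must carry larger packets or overlay traffic may be dropped or fragmented. A minimal calculation, assuming an IPv4 or IPv6 underlay without VLAN tags or IP options and untagged inner frames:

```python
# Header sizes in bytes for VXLAN encapsulation (no VLAN tags, no IP options).
INNER_ETHERNET = 14   # inner MAC header carried inside the tunnel (FCS not carried)
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IP = {"ipv4": 20, "ipv6": 40}
OUTER_ETHERNET = 14   # not part of the IP MTU, but present on the wire


def required_underlay_ip_mtu(overlay_ip_mtu: int, underlay: str = "ipv4") -> int:
    """Smallest underlay IP MTU that carries full-size overlay packets unfragmented."""
    return overlay_ip_mtu + INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IP[underlay]


def encapsulation_overhead(underlay: str = "ipv4") -> int:
    """Extra bytes on the wire per frame, including the outer Ethernet header."""
    return OUTER_ETHERNET + OUTER_IP[underlay] + OUTER_UDP + VXLAN_HEADER


print(required_underlay_ip_mtu(1500))          # 1550
print(required_underlay_ip_mtu(1500, "ipv6"))  # 1570
print(encapsulation_overhead())                # 50
```

In practice this is why underlay links in overlay designs are usually configured with a larger MTU than the virtual networks they carry.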

HP FlexNetwork Architecture

To build flat networks, vendors develop the corresponding equipment, technologies, and services. Examples include Cisco Nexus, Juniper QFabric, and HP FlexFabric. HP's solution is based on the open, standards-based HP FlexNetwork architecture.

HP FlexNetwork includes four interconnected components: FlexFabric, FlexCampus, FlexBranch, and FlexManagement. HP FlexFabric, HP FlexCampus, and HP FlexBranch solutions optimize network architectures for data centers, campuses, and branch offices, allowing you to gradually migrate from traditional hierarchical infrastructures to unified virtual, high-performance, converged networks as you grow, or immediately build such networks based on reference architectures recommended by HP.

HP FlexManagement provides comprehensive monitoring; automated deployment, configuration, and control of multi-vendor networks; and unified management of virtual and physical networks from a single console. This speeds up service deployment, simplifies management, improves network availability, and eliminates the difficulties of working with many separate administration systems. The system can also manage devices from dozens of other network equipment manufacturers.
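As an example of the kind of standard interface that makes multi-vendor management possible, here is the request a manager sends to read a device's running configuration over NETCONF (RFC 6241), one of the protocols the article lists for HP switches and routers. This minimal sketch only builds the XML message; the SSH transport, the hello exchange, and reply handling are omitted, and it does not describe how HP FlexManagement works internally. The helper names are hypothetical.

```python
import xml.etree.ElementTree as ET

NETCONF_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"
# Serialize the NETCONF namespace as the default namespace (no "ns0:" prefixes).
ET.register_namespace("", NETCONF_NS)


def q(tag: str) -> str:
    """Qualify a tag name with the NETCONF base namespace."""
    return f"{{{NETCONF_NS}}}{tag}"


def get_config_rpc(message_id: int, datastore: str = "running") -> str:
    """Build a NETCONF <get-config> request for the given datastore."""
    rpc = ET.Element(q("rpc"), {"message-id": str(message_id)})
    source = ET.SubElement(ET.SubElement(rpc, q("get-config")), q("source"))
    ET.SubElement(source, q(datastore))
    return ET.tostring(rpc, encoding="unicode")


print(get_config_rpc(101))
```

Because the message format is standardized, the same request works against any device that implements NETCONF, regardless of vendor; only the returned configuration data models differ.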

HP FlexFabric supports switching at up to 100GbE at the core layer and up to 40GbE at the access layer, using HP Virtual Connect technology. By implementing the FlexFabric architecture, organizations can move from three-tier networks to optimized two-tier and single-tier networks in stages.

With HP Technology Services, customers can seamlessly transition from proprietary legacy networks to the HP FlexNetwork architecture. HP offers migration from proprietary routing protocols such as Cisco EIGRP (although Cisco calls this protocol an “open standard”) to the truly standard OSPFv2 and OSPFv3 routing protocols. In addition, HP offers FlexManagement administration services and a set of services covering the life cycle of each modular “building block” of HP FlexNetwork, including planning, design, implementation, and maintenance of corporate networks.
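One practical detail when moving routing to OSPF in a fabric with 40GbE and 100GbE links: interface cost is commonly derived as reference bandwidth divided by interface bandwidth, and a 100 Mbit/s reference is a widespread vendor default (Cisco IOS uses it, for example), while RFC 2328 itself leaves cost assignment to the operator. The sketch below, with a hypothetical `ospf_cost` helper, shows how a low reference makes all fast links look identical. How to change the reference bandwidth is vendor-specific and not shown.

```python
DEFAULT_REFERENCE_BPS = 100_000_000  # 100 Mbit/s, a common vendor default (assumption)


def ospf_cost(interface_bps: int, reference_bps: int = DEFAULT_REFERENCE_BPS) -> int:
    """Interface cost as reference / interface bandwidth, clamped to 1..65535.

    OSPF link metrics are 16-bit and must be greater than zero, so fast
    links bottom out at 1.
    """
    return max(1, min(65535, reference_bps // interface_bps))


speeds = {"1GbE": 10**9, "10GbE": 10**10, "40GbE": 4 * 10**10, "100GbE": 10**11}
for name, bps in speeds.items():
    # Default reference vs. a 1 Tbit/s reference.
    print(name, ospf_cost(bps), ospf_cost(bps, reference_bps=10**12))
# 1GbE 1 1000
# 10GbE 1 100
# 40GbE 1 25
# 100GbE 1 10
```

With the default reference, 1GbE and 100GbE links tie, so OSPF cannot prefer the faster path; raising the reference consistently on every router in the OSPF domain restores the distinction.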

HP continues to improve the capabilities of its equipment, both at the hardware platform level and on the basis of the software-defined networking (SDN) concept, introducing various protocols for the dynamic management of switches and routers (OpenFlow, NETCONF, OVSDB). To build scalable Ethernet fabrics, a number of HP network device models support technologies such as TRILL, SPB, and VXLAN (the list of devices supporting these protocols keeps growing). In addition to standard DCI protocols (in particular VPLS), HP has developed, and continues to develop, proprietary technologies for efficiently combining geographically distributed data centers into a single L2 network. For example, the current implementation of the HP EVI (Ethernet Virtual Interconnect) protocol can integrate up to 64 data center sites in this way. … provides additional opportunities for expansion, improving the reliability and security of distributed virtualized L2 networks.
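Part of what makes VXLAN suitable for large fabrics is its segment ID space: the VXLAN Network Identifier (VNI) is 24 bits wide, versus the 12-bit 802.1Q VLAN ID. The sketch below packs and parses the 8-byte VXLAN header as laid out in RFC 7348 (8 flag bits, 24 reserved bits, 24-bit VNI, 8 reserved bits). It covers only this header, not the outer UDP/IP encapsulation, and the helper names are hypothetical.

```python
import struct

VXLAN_FLAG_I = 0x08   # "I" flag: the VNI field is valid
MAX_VNI = 2**24 - 1   # 24-bit VXLAN Network Identifier
MAX_VLAN_ID = 4094    # usable 802.1Q VLAN IDs (12-bit field, 0 and 4095 reserved)


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags(8) reserved(24) VNI(24) reserved(8)."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from an 8-byte VXLAN header."""
    word0, word1 = struct.unpack("!II", header)
    if not (word0 >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set")
    return word1 >> 8


hdr = vxlan_header(5000)
print(hdr.hex())                                # 0800000000138800
print(parse_vni(hdr), MAX_VNI + 1, MAX_VLAN_ID)  # 5000 16777216 4094
```

About 16.7 million possible segment IDs versus 4094 VLANs is the main reason overlays such as VXLAN are used for multi-tenant data centers.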

Conclusions

In each case, the choice of network architecture depends on many factors: the technical requirements for the data center, the wishes of end users, infrastructure development plans, experience and competencies, and so on. As for proprietary versus standard solutions, the former sometimes handle tasks for which standard solutions are unsuitable. However, at the boundaries between network segments built on equipment from different vendors, their applicability is extremely limited.

Large-scale use of proprietary protocols as the basis of a corporate network can seriously limit freedom of choice, which ultimately hampers business agility and increases costs.

Open, standards-based solutions help companies move from legacy architectures to modern, flexible network architectures that meet current challenges such as cloud computing, virtual machine migration, unified communications and video delivery, and high-performance mobile access. Organizations can choose best-in-class solutions that meet their business needs. Using open, standard protocol implementations reduces the risks and costs of changing the network infrastructure. In addition, open networks that combine physical and virtual network resources and their functionality simplify moving applications to private and public clouds.

Our previous publications:

» Implementing MSA in a virtualized enterprise environment

» HP MSA disk arrays as the basis for data consolidation

» Multi-vendor corporate network: myths and reality

» Available HP ProLiant server models (10 and 100 series)

» Convergence based on HP Networking. Part 1

» HP ProLiant ML350 Gen9 - server with incredible extensibility

Thank you for your attention, we are ready to answer your questions in the comments.
