Mobile Application Testing Process

Testing is a very important stage in the development of mobile applications.

The cost of an error in a mobile app release is high. An update reaches Google Play within a few hours, but getting into the App Store can take several weeks, and there is no telling how long users will take to update. Errors provoke a storm of negative reactions: users leave low ratings and furious reviews, and new users who see them do not install the app.

Mobile testing is a complex process: dozens of screen resolutions, hardware differences, several operating system versions, different types of Internet connection, and sudden disconnections.

That is why our testing department has 8 people (0.5 testers per programmer), and a dedicated test lead oversees the department's development and processes.

Under the cut, I’ll tell you how we test mobile applications.

Requirements testing

Testing begins before development. The design department hands the navigation scheme and screen layouts to the testers, and the project manager supplies the requirements that the design does not show. If the design comes from the customer, our designers review the mockups before they reach the testing department. The tester checks the requirements for completeness and consistency. In every project, the initial requirements contain contradictions, and we resolve them before development starts. Requirements are also incomplete in every project: layouts for secondary screens, input field restrictions and error displays are missing, and some buttons lead nowhere. Things that cannot be seen on layouts are easy to overlook: animations, caching of images and screen contents, behavior in unusual situations.

Weak spots in the requirements are discussed with the project manager, developers, and designers. After 2-3 iterations, the whole team understands the project much better, recalls forgotten functionality, and settles decisions on contentious issues.

At this stage we mostly use Basecamp.

Once the requirements are complete and consistent, the tester writes smoke tests and functional tests that cover the initial specification. Tests are divided into general ones and ones specific to each platform. We store tests and run them in Sitechco.

For example, 1856 tests were written for the Trava project at this stage.
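To make the split between general and platform-specific tests concrete, here is a minimal Python sketch of picking the cases for one platform's run. The `Case` type, its `kind` and `platform` fields, and the sample titles are hypothetical; Sitechco has its own data model, which this does not try to reproduce.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    title: str
    kind: str       # "smoke" or "functional"
    platform: str   # "all" for general cases, otherwise "android" or "ios"


def cases_for(cases, platform, kind=None):
    """General cases plus the ones specific to `platform`, optionally of one kind."""
    return [
        c for c in cases
        if c.platform in ("all", platform) and (kind is None or c.kind == kind)
    ]


# Hypothetical sample suite.
suite = [
    Case("Log in with valid credentials", "smoke", "all"),
    Case("Hardware Back button closes the player", "functional", "android"),
    Case("Swipe-back gesture closes the player", "functional", "ios"),
]

for case in cases_for(suite, "android"):
    print(case.title)
```

Using "all" as a platform value means a general case is written once and lands in both the Android and the iOS run.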

This completes the first stage of testing, and the project moves into development.

Build server

All our projects are built on a TeamCity build server.

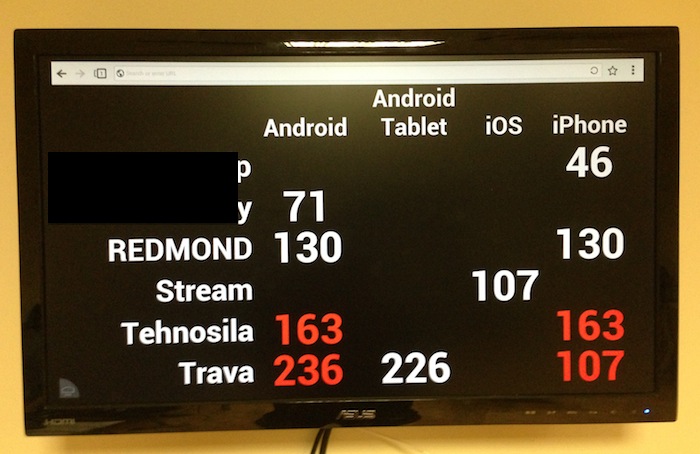

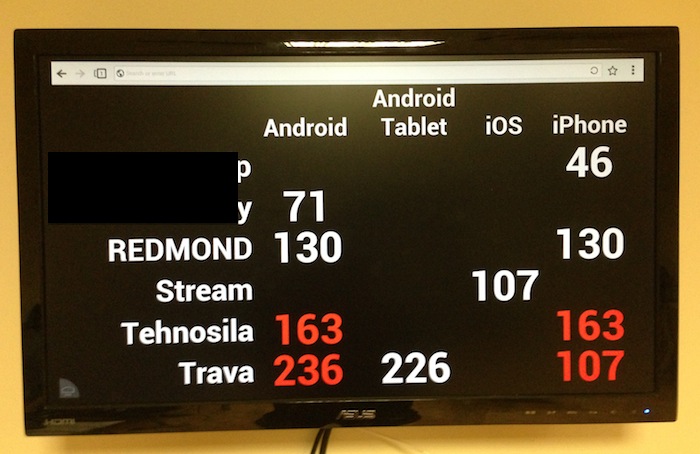

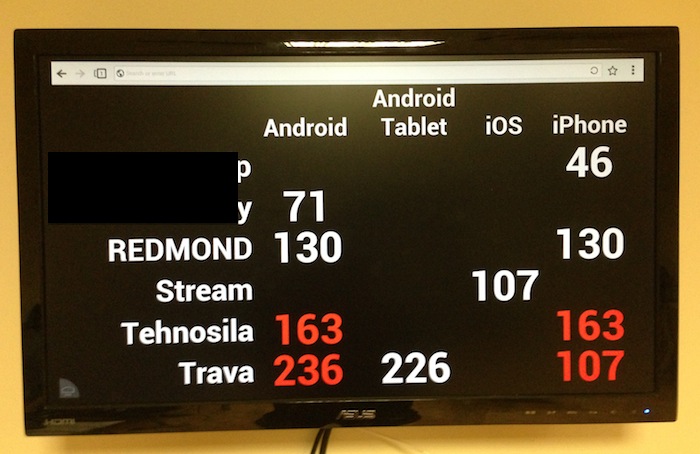

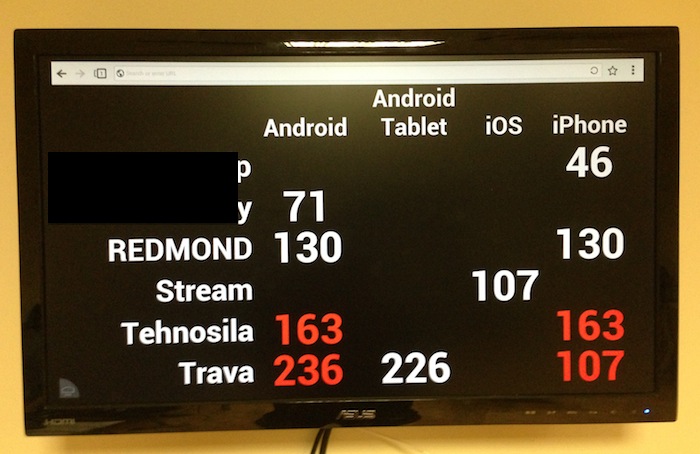



When the project manager ticks “for testing”, the testers receive an email about the new build to test. Its number is shown on a monitor in the testers' office. Builds released within the last day are shown in red: they need more intensive testing than the white ones.

Before we had the "magic monitor" (which, by the way, runs on Android), testers often tested old builds, and a new build with bugs would reach the customer. Now testers just glance at the monitor before running test cases, and the confusion is gone.
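The monitor's highlighting rule can be sketched as a small function. This is an assumption about the logic, not the monitor's actual code: the `Build` record is hypothetical, and "the last day" is taken literally as 24 hours from the release time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

RECENT = timedelta(days=1)  # "released in the last day"


@dataclass
class Build:
    number: int
    released_at: datetime  # timezone-aware release time


def display_color(build, now=None):
    """Red for builds released in the last day (test them harder), white otherwise."""
    now = now or datetime.now(timezone.utc)
    return "red" if now - build.released_at <= RECENT else "white"


now = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)
builds = [
    Build(412, now - timedelta(hours=3)),
    Build(405, now - timedelta(days=2)),
]
# Newest first, as a wall display would list them.
for b in sorted(builds, key=lambda b: b.released_at, reverse=True):
    print(b.number, display_color(b, now))
```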

Builds go through two kinds of testing: quick and full.

Quick testing

Quick testing is done after each development iteration when the build is not going to release.

First, smoke tests are run to decide whether the build is worth testing at all.

Next, all tasks completed and bugs fixed in the iteration are pulled from Jira, and each one is meticulously checked against its description. If a task introduced new interface elements, it is sent to the designers to check against the layouts.

Incorrectly implemented tasks are reopened. Bugs are logged in Jira. Non-UI bugs must include logs from the smartphone; UI bugs get screenshots annotated with what is wrong.
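The attachment rule lends itself to an automatic pre-submit check. A sketch under stated assumptions: the `BugReport` fields are hypothetical (not Jira's issue schema), and screenshots and logs are recognized by file extension only.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    summary: str
    is_ui: bool
    attachments: list = field(default_factory=list)  # attached file names


def missing_evidence(bug):
    """Describe the required attachment that is missing, or return None if the report is complete."""
    names = [a.lower() for a in bug.attachments]
    if bug.is_ui:
        # Hypothetical convention: screenshots are .png or .jpg files.
        if not any(n.endswith((".png", ".jpg")) for n in names):
            return "UI bug: attach a screenshot annotated with what is wrong"
    elif not any(n.endswith((".log", ".txt")) for n in names):
        # Hypothetical convention: device logs are saved as .log or .txt.
        return "non-UI bug: attach logs from the device"
    return None


print(missing_evidence(BugReport("Crash on sync", is_ui=False)))
```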

After that, the functional tests for this iteration are run. If a bug turns up that no test case covers, a new test case is written for it.

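Android applications also get monkey tests:

```shell
# 5000 random events against ru.stream.droid, 50 ms apart, with no system keys
# (Home, Back, volume, ...) and no activity-launch (app switch) events; -v = verbose output
adb shell monkey -p ru.stream.droid --throttle 50 --pct-syskeys 0 --pct-appswitch 0 -v 5000
```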
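To run the same command on every device attached to a test machine, a small Python wrapper can read `adb devices` and target each serial with `adb -s <serial>`. This is a sketch, not the team's tooling: the optional `seed` (monkey's `-s` option) is an addition so that a crashing event sequence can be replayed, and crash detection simply looks for the `// CRASH` and `// NOT RESPONDING` lines monkey prints.

```python
import subprocess

PACKAGE = "ru.stream.droid"


def parse_devices(adb_output):
    """Serials of devices in the 'device' state from `adb devices` output."""
    serials = []
    for line in adb_output.splitlines():
        parts = line.split()
        # Skips the header, daemon messages, and offline/unauthorized devices.
        if len(parts) >= 2 and parts[1] == "device":
            serials.append(parts[0])
    return serials


def monkey_command(serial, package=PACKAGE, events=5000, seed=None):
    """adb argument list for one device, mirroring the command above."""
    cmd = ["adb", "-s", serial, "shell", "monkey", "-p", package]
    if seed is not None:
        cmd += ["-s", str(seed)]  # same seed -> same pseudo-random event sequence
    cmd += ["--throttle", "50", "--pct-syskeys", "0", "--pct-appswitch", "0",
            "-v", str(events)]
    return cmd


def run_on_all_devices(package=PACKAGE, events=5000, seed=42):
    """Run monkey on each attached device; map serial -> True if the app crashed or hung."""
    listing = subprocess.run(["adb", "devices"], capture_output=True, text=True, check=True)
    failures = {}
    for serial in parse_devices(listing.stdout):
        proc = subprocess.run(monkey_command(serial, package, events, seed),
                              capture_output=True, text=True)
        output = proc.stdout + proc.stderr  # monkey may report problems on either stream
        failures[serial] = "// CRASH" in output or "// NOT RESPONDING" in output
    return failures
```

Calling `run_on_all_devices()` requires `adb` on the PATH and at least one authorized device; the two pure helpers can be checked without any hardware.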

At the end of testing, the “Bug testing passed” checkbox is ticked on the build server (yes, the checkbox name is not quite accurate :).

If no blocker, critical, or major bugs were found during testing, the “can be shown to the customer” checkbox is ticked as well. No build goes to the customer without the testing department's approval. (By agreement with the customer, builds with major bugs are sometimes sent.)

Bug severity is assigned according to a severity table. When testing is complete, the PM receives a detailed report by email.
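The two checkboxes amount to a simple gate. In this hypothetical sketch, severities use Jira's classic priority names and are assumed to be already assigned from the table; the function only decides whether the "can be shown to the customer" box may be ticked.

```python
from enum import IntEnum


class Severity(IntEnum):
    TRIVIAL = 1
    MINOR = 2
    MAJOR = 3
    CRITICAL = 4
    BLOCKER = 5


def can_show_to_customer(open_bugs, testing_passed, majors_agreed=False):
    """True if the 'can be shown to the customer' box may be ticked."""
    if not testing_passed:  # "Bug testing passed" is not ticked yet
        return False
    # Majors block the build unless the customer has agreed to accept them.
    threshold = Severity.CRITICAL if majors_agreed else Severity.MAJOR
    return all(bug < threshold for bug in open_bugs)


print(can_show_to_customer([Severity.MINOR, Severity.MAJOR], testing_passed=True))
```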

Full testing

Full testing is done before a release. It includes quick testing, regression testing, monkey testing on 100 devices, and update testing.

Regression testing means running ALL of the project's test cases: not only those for the latest iteration, but also those from all previous iterations and the general test cases based on the requirements. Depending on the project, it takes one to three days per device.

Update testing is a very important step. Almost every application stores data locally (even if it is just a login cookie), and we must make sure all user data survives an update. The tester installs the current version from the store, creates stored data (logs in, builds playlists, enters financial transactions), updates the app to the build under test, and verifies that everything is still in place. Then the tester runs a smoke test. The process is repeated on 2-3 devices.

Developers often forget about migrating data from older versions, and update testing has let us catch many critical errors, from crashes to deleted purchase data. This has saved more than one app from angry reviews and lost users.
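Update testing is done by hand on devices, but the failure it catches, a missing migration, can also be guarded by an automated check. The sketch below assumes a hypothetical storage format with a `schema_version` field and a made-up v1 to v2 change; only the shape of the test (old data in, nothing the user created lost) carries over.

```python
import copy

CURRENT_VERSION = 2


def migrate(stored):
    """Upgrade locally stored data to CURRENT_VERSION without losing user data."""
    data = copy.deepcopy(stored)  # never mutate what the old version saved
    version = data.get("schema_version", 1)
    if version < 2:
        # Hypothetical v1 -> v2 change: the "token" field moved into a "session" dict.
        data["session"] = {"token": data.pop("token", None)}
        version = 2
    data["schema_version"] = version
    return data


def test_update_keeps_user_data():
    old = {"token": "abc123", "playlists": ["Road trip"],
           "purchases": [{"sku": "pro", "paid": True}]}
    new = migrate(old)
    assert new["session"]["token"] == "abc123"   # user is still logged in
    assert new["playlists"] == old["playlists"]  # user content intact
    assert new["purchases"] == old["purchases"]  # purchase data not deleted
    assert migrate(new) == new                   # migrating again changes nothing


test_update_keeps_user_data()
```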

We run monkey tests on 10 iOS and 80 Android devices using the AppThwack service.

At the end of full testing, a detailed report is also prepared by hand, in addition to the email.

A build goes to release only when 100% of the test cases have been completed.
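A sketch of the release rule over a test run's results. The status names and case IDs are hypothetical, and "100% completion" is read here, as an assumption, as every case executed and passed; drop the pass check if completion only means executed.

```python
from collections import Counter


def run_summary(statuses):
    """Count statuses and compute the share of cases that have been executed."""
    counts = Counter(statuses.values())
    executed = len(statuses) - counts["untested"]
    pct = 100.0 * executed / len(statuses) if statuses else 0.0
    return counts, pct


def release_allowed(statuses):
    """Assumed reading of the rule: every case executed and every case passed."""
    counts, pct = run_summary(statuses)
    return pct == 100.0 and counts["passed"] == len(statuses)


run = {"TC-1": "passed", "TC-2": "passed", "TC-3": "untested"}
print(run_summary(run)[1], release_allowed(run))
```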

Testing external services

Testing integration with Google Analytics, Flurry, or the customer's own statistics system is not easy. Builds with broken Google Analytics have gone to release without anyone noticing.

So for every external service we always create a test account and check it during full testing. In addition, every statistics event sent is written to the logs, which the testers review. At release, the test account is swapped for the production account.
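A hypothetical sketch of the account swap and the event logging testers check. The account IDs, `BuildType`, and `track` are made up for illustration; in an app this would wrap the actual Google Analytics or Flurry SDK call, which is not shown.

```python
import logging
from enum import Enum

log = logging.getLogger("analytics")


class BuildType(Enum):
    DEBUG = "debug"
    RELEASE = "release"


# Hypothetical IDs: a test account for pre-release builds, a production account for release.
ACCOUNTS = {BuildType.DEBUG: "TEST-0000", BuildType.RELEASE: "PROD-0000"}


def account_for(build_type):
    return ACCOUNTS[build_type]


def track(build_type, event, **params):
    """Log every statistics event so testers can verify it, then return the payload."""
    payload = {"account": account_for(build_type), "event": event, **params}
    log.info("analytics event sent: %s", payload)
    # The real SDK call (Google Analytics, Flurry, ...) would go here.
    return payload
```

Running with `logging.basicConfig(level=logging.INFO)` prints each event to the log, which is the record testers compare against what should have been sent.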

Time tracking

Testers' time is tracked in a separate Jira project. A separate task is created for preparing test cases, for test runs, and for writing reports on each project, and the time spent is logged against it in the standard way.

UPD: Tell us how testing works at your company, or at least how many testers you have per developer.

Subscribe to our Habr blog: useful articles on mobile development, marketing, and the mobile studio business every Thursday.