On the limitations in the applicability of the Minkowski metric in digital data processing

Once, a long time ago, I came across an article on a hub, in which people write how cool everything is and how well the Minkowski metric works. Time passed and went, and I wanted and wanted everything. Finally, the task turned up to which I wanted to apply this miracle, and this is what happened:

No, no, no, if I do it now, then you will not read the article. It will be. but later. And before that I want to describe the task with which it all began:

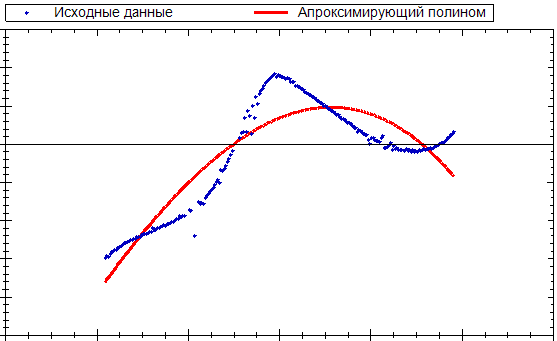

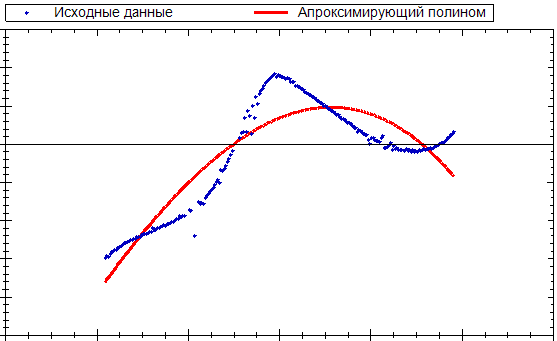

Right before you is a fairly high-quality approximation according to the algorithm. There is nothing to blame for it: the convergence to the resulting polynomial is extremely slow, the standard deviation is reluctant to change, and due to the small number of starting points (only about two hundred), the distribution of residuals looks to a first approximation as normal.

And so, in search of a universal criterion. which would allow me to distinguish a random sequence from a regular one (I know that this is a whole problem, but still), the idea came up using fractals. The fact is that the dimension of a random walk = 1.5. It is hoped that by calculating the dimension of the residual curve, we get 1.5 + - reasonable values.

Calculating the Hausdorff dimension is still an undertaking, but with Minkowski everything is much simpler, although I had to sweat with it.

We apply the approach that is used in the starting article: changing the scale, we consider the number of rectangles N through which the curve passes. Having obtained the dependence log (N (e)) / log (e), we approximate the direct using the least squares method. The metric we are looking for is the slope coefficient.

Unlike the original article, I change the scale not in geometric progression, but in arithmetic, there is too little data for the first. m_linearApproximator is a wrapper over OLS, nothing smart and complicated, but OLS can be found either in the original article. either in MathNet.Numerics. The line if (count == 0) count = 1 arose due to implementation specifics. It covers the case when we have only one point.

All the magic is in the fillBoxes method, this is where the squares are filled:

The source data for me is a set of points. Nothing that is known is that the process being measured is continuous, but in the process itself it is completely arbitrary: to random interference of different powers, additive interference may occur and disappear. In this case, the only way out is linear interpolation. As a consequence of the smallness of the input data, errors associated with passing a straight line through a lattice node are unacceptable.

Given these conditions, a universal solution that can be extended to any dimension is a step-by-step transition from square to square. (I warn you right away, in the demonstrated code, cases when the line is vertical or horizontal are not taken into account)

Let's test it on all possible straight lines:

It works and it’s good. Let's take a square now. Argh, ran into what I warned. But it doesn’t matter, let's turn the square over, and then add degenerate cases:

Result 1.1 . Brr, what a strangeness. But no, of course, 2 is for a flat figure, for a rectangle, and not for its outline. Well, that’s understandable. Let's add the number of scales; 1.05; add more; strive for unity.

It turns out that for a finite union of sets, the Minkowski dimension is the maximum for the dimension of each of the sets. Those. for a set of lines, dimension 1 . In other words, our result is completely correct.

Well now you can prove that Popes is no different from a square. Because If we represent the contour as straight, then our area is divided either into a triangle or a square. we all know about the square. What Minkowski dimension for a square do we know - 2. And for a triangle?

}{\ln(\epsilon)}=\lim\limits_{\epsilon\to0}\frac{\ln&space;0.5&space;a-\ln\epsilon^2}{-\ln\epsilon}=2)

It is not strange, but also two. This, by the way, breaks the assumption in the original article that the fractal dimension reflects the nonsmoothness of the curve. But by the way, the main thing is that as a result, the digitized priest Popes has a maximum dimension of 2 and 2.

Another interesting example. What is the dimension of the circle?

}{\ln(\epsilon)}=\lim\limits_{\epsilon\to0}\frac{\ln&space;\pi&space;a-\ln\epsilon^2}{-\ln\epsilon}=2)

Also two. And the circle?

}{\ln(\epsilon)}=\lim\limits_{\epsilon\to0}\frac{\ln&space;\pi&space;a-\ln\epsilon}{-\ln\epsilon}=1)

Total: the circle, square and triangle (and Lopez priest) are indistinguishable from us, this introduces serious difficulties in the model interpretation for fractal dimension. Classical interpolation between the data in a straight line leads to the fact that the dimensions of the contour tend to 1, and the area to two. Hence it is obvious that without any a priori knowledge, its calculation loses its meaning. Well, also our little test showed that the orientation of a complex curve introduces strong perturbations into our calculations, and their estimation is extremely difficult without understanding what the parameter itself shows.

No, no, no, if I do it now, then you will not read the article. It will be. but later. And before that I want to describe the task with which it all began:

Right before you is a fairly high-quality approximation according to the algorithm. There is nothing to blame for it: the convergence to the resulting polynomial is extremely slow, the standard deviation is reluctant to change, and due to the small number of starting points (only about two hundred), the distribution of residuals looks to a first approximation as normal.

And so, in search of a universal criterion. which would allow me to distinguish a random sequence from a regular one (I know that this is a whole problem, but still), the idea came up using fractals. The fact is that the dimension of a random walk = 1.5. It is hoped that by calculating the dimension of the residual curve, we get 1.5 + - reasonable values.

Calculating the Hausdorff dimension is still an undertaking, but with Minkowski everything is much simpler, although I had to sweat with it.

We apply the approach that is used in the starting article: changing the scale, we consider the number of rectangles N through which the curve passes. Having obtained the dependence log (N (e)) / log (e), we approximate the direct using the least squares method. The metric we are looking for is the slope coefficient.

public double calculate(IEnumerable> dataList)

{

double minY = Double.MaxValue, maxY = Double.MinValue;

double minX = minY, maxX = maxY;

foreach (var pair in dataList)

{

if (minY > pair.Value)

minY = pair.Value;

if (maxY < pair.Value)

maxY = pair.Value;

if (minX > pair.Key)

minX = pair.Key;

if (maxX < pair.Key)

maxX = pair.Key;

}

m_bottomLeftY = minY;

m_bottomLeftX = minX;

return calculate(dataList, maxX, maxY);

}

public double calculate(IEnumerable> dataList, double maxX, double maxY)

{

if( dataList.Count() < 2) return 0;

for(int scaleNumber = StartSize; scaleNumber !=FinishSize; ++scaleNumber){

double XScale = (maxX - m_bottomLeftX) / scaleNumber;

double YScale = (maxY - m_bottomLeftY) / scaleNumber;

var enumerator = dataList.GetEnumerator();

fillBoxes( (ref double x, ref double y) => { var res = enumerator.MoveNext(); if (res) { x = enumerator.Current.Key; y = enumerator.Current.Value; } return res; }, XScale, YScale);

int count = calculatedNumberBoxesAndReset(scaleNumber);

if (count == 0) count = 1;

m_boxesNumber[scaleNumber - StartSize] = Math.Log(count);

}

m_linearApproximator.approximate(m_scaleArgument, m_boxesNumber);

return m_linearApproximator.resultPolinomal.a[1];

}

double m_bottomLeftX, m_bottomLeftY;

Unlike the original article, I change the scale not in geometric progression, but in arithmetic, there is too little data for the first. m_linearApproximator is a wrapper over OLS, nothing smart and complicated, but OLS can be found either in the original article. either in MathNet.Numerics. The line if (count == 0) count = 1 arose due to implementation specifics. It covers the case when we have only one point.

All the magic is in the fillBoxes method, this is where the squares are filled:

void fillBoxes(GetDataDelegate dataIterator, double stepX, double stepY)

{

double prevX=0, prevY=0, targetX=0, targetY=0;

dataIterator(ref prevX, ref prevY);

int indexY = FinishSize, indexX = FinishSize;

int currentIndexX= calculateIndex(m_bottomLeftX, stepX, prevX), currentIndexY =calculateIndex(m_bottomLeftY, stepY, prevY) ;

m_Boxes[currentIndexY, currentIndexX] = true;

double[] CrossPosition = new double[2];

while (dataIterator(ref targetX, ref targetY))

{

if(prevX == targetX && prevY == targetY)

continue;

bool isBottom = targetY - prevY < 0;

bool isLeft = targetX - prevX < 0;

double a = (targetY - prevY) / (targetX - prevX), fracA=1/a;

double b = targetY - a * targetX;

double leftBorder = m_bottomLeftX + currentIndexX * stepX, bottomBorder = m_bottomLeftY + currentIndexY * stepY;

CrossPosition[0] = (leftBorder + (isLeft ? 0 : stepX)) * a + b;

CrossPosition[1] = (bottomBorder + (isBottom ? 0 : stepY) - b) * fracA;

while ( (targetY < CrossPosition[0] == isBottom && Math.Abs(targetY - CrossPosition[0]) / stepY > 1E-9) ||

(targetX < CrossPosition[1] == isLeft && Math.Abs(targetX - CrossPosition[1]) / stepX > 1E-9) )//Нужно ли делать следующий шаг?

{

if ( (bottomBorder - CrossPosition[0])/stepY <= 1E-9 && (CrossPosition[0] - bottomBorder - stepY)/stepY <= 1E-9)

currentIndexX += isLeft ? -1 : 1;

if ( (leftBorder-CrossPosition[1])/stepX <= 1E-9 && (CrossPosition[1] -leftBorder - stepX)/stepX <= 1E-9 )

currentIndexY += isBottom ? -1 : 1;

m_Boxes[currentIndexY, currentIndexX] = true;

leftBorder = m_bottomLeftX + currentIndexX * stepX;

bottomBorder = m_bottomLeftY + currentIndexY * stepY;

CrossPosition[0] = (leftBorder + (isLeft ? 0 : stepX)) * a + b;

CrossPosition[1] = (bottomBorder + (isBottom ? 0 : stepY) - b) * fracA;

}

prevY = targetY;

prevX = targetX;

}

}

The source data for me is a set of points. Nothing that is known is that the process being measured is continuous, but in the process itself it is completely arbitrary: to random interference of different powers, additive interference may occur and disappear. In this case, the only way out is linear interpolation. As a consequence of the smallness of the input data, errors associated with passing a straight line through a lattice node are unacceptable.

Given these conditions, a universal solution that can be extended to any dimension is a step-by-step transition from square to square. (I warn you right away, in the demonstrated code, cases when the line is vertical or horizontal are not taken into account)

Whole class

public class MinkowskiDimension

{

public MinkowskiDimension(int startSize, int finishSzie)

{

StartSize = startSize; FinishSize = finishSzie;

}

public MinkowskiDimension()

{

}

public int StartSize

{

get { return m_startSize; }

set

{

m_startSize = value;

if (m_startSize < m_finishSize)

{

m_scaleArgument = new double[m_finishSize - m_startSize];

for (int i = 0; i != m_scaleArgument.Count(); ++i) m_scaleArgument[i] = - Math.Log(m_startSize + i);

m_boxesNumber = new double[m_scaleArgument.Count()];

}

}

}

int m_startSize;

public int FinishSize

{

get { return m_finishSize; }

set

{

m_finishSize = value;

m_Boxes = new bool[value, value];

if (m_startSize < m_finishSize)

{

m_scaleArgument = new double[m_finishSize - m_startSize];

for (int i = 0; i != m_scaleArgument.Count(); ++i) m_scaleArgument[i] = Math.Log(m_startSize + i);

m_boxesNumber = new double[m_scaleArgument.Count()];

}

}

}

int m_finishSize;

double[] m_scaleArgument;

double[] m_boxesNumber;

public double calculate(IEnumerable> dataList)

{

double minY = Double.MaxValue, maxY = Double.MinValue;

double minX = minY, maxX = maxY;

foreach (var pair in dataList)

{

if (minY > pair.Value)

minY = pair.Value;

if (maxY < pair.Value)

maxY = pair.Value;

if (minX > pair.Key)

minX = pair.Key;

if (maxX < pair.Key)

maxX = pair.Key;

}

m_bottomLeftY = minY;

m_bottomLeftX = minX;

return calculate(dataList, maxX, maxY);

}

public double calculate(IEnumerable> dataList, double maxX, double maxY)

{

if( dataList.Count() < 2) return 0;

for(int scaleNumber = StartSize; scaleNumber !=FinishSize; ++scaleNumber){

double XScale = (maxX - m_bottomLeftX) / scaleNumber;

double YScale = (maxY - m_bottomLeftY) / scaleNumber;

var enumerator = dataList.GetEnumerator();

fillBoxes( (ref double x, ref double y) => { var res = enumerator.MoveNext(); if (res) { x = enumerator.Current.Key; y = enumerator.Current.Value; } return res; }, XScale, YScale);

int count = calculatedNumberBoxesAndIbit(scaleNumber);

if (count == 0) count = 1;

m_boxesNumber[scaleNumber - StartSize] = Math.Log(count);

}

m_linearApproximator.approximate(m_scaleArgument, m_boxesNumber);

return m_linearApproximator.resultPolinomal.a[1];

}

double m_bottomLeftX, m_bottomLeftY;

void fillBoxes(GetDataDelegate dataIterator, double stepX, double stepY)

{

double prevX=0, prevY=0, targetX=0, targetY=0;

dataIterator(ref prevX, ref prevY);

int indexY = FinishSize, indexX = FinishSize;

int currentIndexX= calculateIndex(m_bottomLeftX, stepX, prevX), currentIndexY =calculateIndex(m_bottomLeftY, stepY, prevY) ;

m_Boxes[currentIndexY, currentIndexX] = true;

double[] CrossPosition = new double[2];

while (dataIterator(ref targetX, ref targetY))

{

if(prevX == targetX && prevY == targetY)

continue;

bool isBottom = targetY - prevY < 0;

bool isLeft = targetX - prevX < 0;

double a = (targetY - prevY) / (targetX - prevX), fracA=1/a;

double b = targetY - a * targetX;

double leftBorder = m_bottomLeftX + currentIndexX * stepX, bottomBorder = m_bottomLeftY + currentIndexY * stepY;

CrossPosition[0] = (leftBorder + (isLeft ? 0 : stepX)) * a + b;

CrossPosition[1] = (bottomBorder + (isBottom ? 0 : stepY) - b) * fracA;

while ( (targetY < CrossPosition[0] == isBottom && Math.Abs(targetY - CrossPosition[0]) / stepY > 1E-9) ||

(targetX < CrossPosition[1] == isLeft && Math.Abs(targetX - CrossPosition[1]) / stepX > 1E-9) )//Нужно ли делать следующий шаг?

{

if ( (bottomBorder - CrossPosition[0])/stepY <= 1E-9 && (CrossPosition[0] - bottomBorder - stepY)/stepY <= 1E-9)

currentIndexX += isLeft ? -1 : 1;

if ( (leftBorder-CrossPosition[1])/stepX <= 1E-9 && (CrossPosition[1] -leftBorder - stepX)/stepX <= 1E-9 )

currentIndexY += isBottom ? -1 : 1;

m_Boxes[currentIndexY, currentIndexX] = true;

leftBorder = m_bottomLeftX + currentIndexX * stepX;

bottomBorder = m_bottomLeftY + currentIndexY * stepY;

CrossPosition[0] = (leftBorder + (isLeft ? 0 : stepX)) * a + b;

CrossPosition[1] = (bottomBorder + (isBottom ? 0 : stepY) - b) * fracA;

}

prevY = targetY;

prevX = targetX;

}

}

int calculateIndex(double startvalue, double scale, double value)

{

double index = (value - startvalue) / scale;

int intIndex = (int) index;

return Math.Abs(index - intIndex) > 1E-9 || intIndex ==0 ? intIndex: intIndex -1;

}

int calculatedNumberBoxesAndIbit(int currentScaleSize)

{

int result=0;

for (int i = 0; i != currentScaleSize; ++i)

{

for (int j = 0; j != currentScaleSize; ++j)

{

if (m_Boxes[i, j]){

++result;

m_Boxes[i, j] = false;

}

}

}

return result;

}

bool[,] m_Boxes;

PolinomApproximation m_linearApproximator = new PolinomApproximation(1);

}

Let's test it on all possible straight lines:

[TestMethod]

public void lineDimensionTest()

{

var m_calculator = new MinkowskiDimension(3, 10);

var data = new List >();

data.Add(new KeyValuePair(0, 1));

data.Add(new KeyValuePair(1, 5));

double result = m_calculator.calculate(data);

if (Math.Abs(result - 1) > 1E-9) Assert.Fail();

data.Add(new KeyValuePair(2, 9));

result = m_calculator.calculate(data);

if (Math.Abs(result - 1) > 1E-9) Assert.Fail();

data.Clear();

data.Add(new KeyValuePair(0, -1));

data.Add(new KeyValuePair(1, -5));

data.Add(new KeyValuePair(2, -9));

result = m_calculator.calculate(data);

if (Math.Abs(result - 1) > 1E-9) Assert.Fail();

data.Clear();

data.Add(new KeyValuePair(0, -1));

data.Add(new KeyValuePair(0.5, -3));

data.Add(new KeyValuePair(2, -9));

result = m_calculator.calculate(data);

if (Math.Abs(result - 1) > 1E-9) Assert.Fail();

}

It works and it’s good. Let's take a square now. Argh, ran into what I warned. But it doesn’t matter, let's turn the square over, and then add degenerate cases:

[TestMethod]

public void squareDimensiontest()

{

var m_calculator = new MinkowskiDimension(3, 15);

var data = new List>();

data.Add(new KeyValuePair(0, 1));

data.Add(new KeyValuePair(1, 0));

data.Add(new KeyValuePair(0, -1));

data.Add(new KeyValuePair(-1, 0));

data.Add(new KeyValuePair(0, 1));

double result = m_calculator.calculate(data);

if (Math.Abs(result - 2) > 1E-9) Assert.Fail();

}

Result 1.1 . Brr, what a strangeness. But no, of course, 2 is for a flat figure, for a rectangle, and not for its outline. Well, that’s understandable. Let's add the number of scales; 1.05; add more; strive for unity.

It turns out that for a finite union of sets, the Minkowski dimension is the maximum for the dimension of each of the sets. Those. for a set of lines, dimension 1 . In other words, our result is completely correct.

Well now you can prove that Popes is no different from a square. Because If we represent the contour as straight, then our area is divided either into a triangle or a square. we all know about the square. What Minkowski dimension for a square do we know - 2. And for a triangle?

It is not strange, but also two. This, by the way, breaks the assumption in the original article that the fractal dimension reflects the nonsmoothness of the curve. But by the way, the main thing is that as a result, the digitized priest Popes has a maximum dimension of 2 and 2.

Another interesting example. What is the dimension of the circle?

Also two. And the circle?

Total: the circle, square and triangle (and Lopez priest) are indistinguishable from us, this introduces serious difficulties in the model interpretation for fractal dimension. Classical interpolation between the data in a straight line leads to the fact that the dimensions of the contour tend to 1, and the area to two. Hence it is obvious that without any a priori knowledge, its calculation loses its meaning. Well, also our little test showed that the orientation of a complex curve introduces strong perturbations into our calculations, and their estimation is extremely difficult without understanding what the parameter itself shows.