Qihoo 360 and Go


This is a translation of a guest post from the Go blog, written by Yang Zhou, currently an engineer at Qihoo 360.

Qihoo 360 is China’s leading provider of antivirus products for the Internet and mobile devices, and operates a major distribution platform (app store) for Android mobile applications. At the end of June 2014, Qihoo had 500 million monthly active PC users and over 640 million mobile device users. Qihoo also has its own browser and search engine, both popular among Chinese users.

My team, the push messaging department, provides a fundamental messaging service for more than 50 applications among the company's products (both for PC and mobile devices), as well as thousands of third-party applications that use our open platform.

Our “romance” with Go dates back to 2012, when we first set out to build our push service. The very first version was a combination of nginx + lua + redis, but its performance under serious load did not meet our requirements. While looking for a better stack, our attention was drawn to the recently released Go 1.0.3. We built a prototype in just a few weeks, thanks in large part to goroutines (lightweight threads) and channels (typed queues), which are primitives of the language.

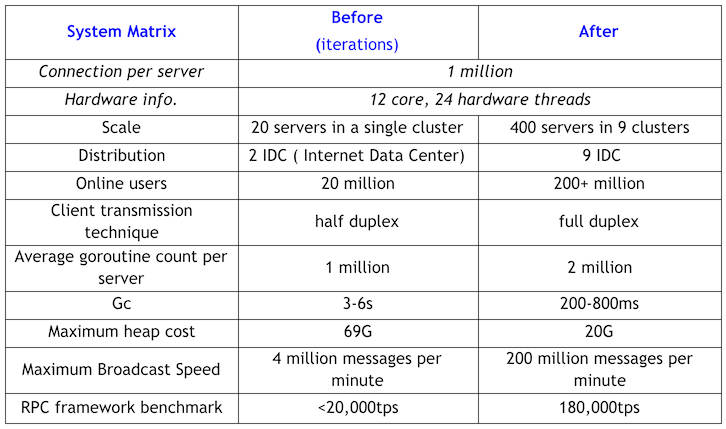

Initially, our Go system was deployed on 20 servers, serving a total of 20 million active connections. It handled only 2 million messages a day. Now the system is deployed on 400 servers, supports more than 200 million active connections and sends more than 10 billion messages daily.

As the business grew rapidly and the demands on the push messaging service increased, the original Go system quickly ran into limits: the heap size reached 69 GB, and GC pauses lasted 3-6 seconds. Worse, we had to reboot our servers every week to free up memory. To be honest, we even considered abandoning Go and rewriting the entire core in C. However, the plans soon changed: we got stuck while porting the business logic. It was impossible for one person (me) to keep maintaining the Go system while simultaneously porting the business logic to C.

So I decided to stay with Go (in my opinion, the wisest decision I could have made at the time), and substantial progress came pretty soon.

Here are a few tricks and optimizations:

- Reuse TCP connections (connection pool) to avoid creating new objects and buffers during interaction;

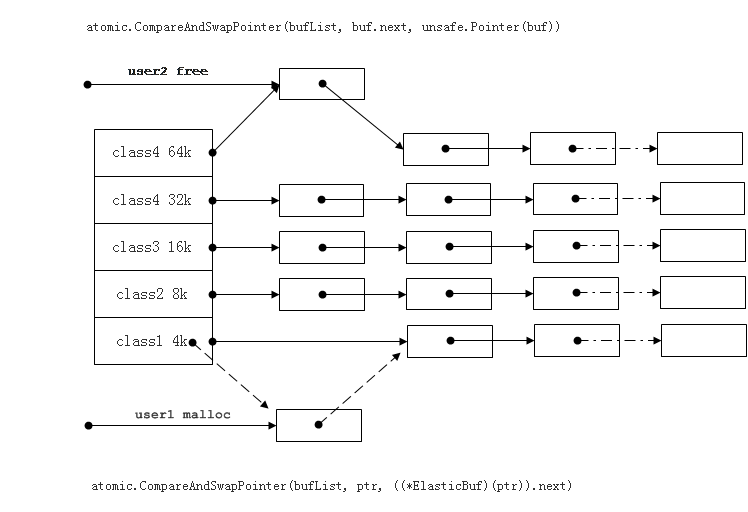

- Reuse objects and allocated memory to reduce the load on the GC;

- Use groups of long-lived goroutines to process task queues or messages received from other goroutines, i.e. the classic pub/sub pattern, instead of spawning a new goroutine for each incoming request;

- Monitor and control the number of goroutines in the process. Lack of control can put excessive load on the GC when the number of goroutines spikes. For example, if goroutines are created without limit to handle external requests, then goroutines that block while calling internal services cause still more goroutines to be created, and so on;

- Do not forget to set read and write deadlines on network connections when working over a mobile network, otherwise goroutines may block. However, use them carefully on a LAN, otherwise the efficiency of RPC communication may suffer;

- Use pipelining for calls where appropriate (when full-duplex TCP is available).
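To make the advice about long-lived goroutines and memory reuse more concrete, here is a minimal sketch. It is not our production code: the `task` type, the `runPool` function and the `send` callback are hypothetical names invented for this illustration. A fixed number of workers drain a bounded queue, and each worker reuses one scratch buffer for every task it handles.

```go
package main

import (
	"fmt"
	"strconv"
	"sync"
)

// task is a hypothetical unit of work, for example one message to push.
type task struct {
	id      int
	payload string
}

// runPool starts a fixed number of long-lived workers that drain the
// tasks channel. It blocks until the channel is closed and drained,
// then returns how many tasks were handled.
func runPool(workers int, tasks <-chan task, send func(frame []byte)) int {
	var (
		wg      sync.WaitGroup
		mu      sync.Mutex
		handled int
	)
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			// One scratch buffer per worker, allocated once and reused,
			// so most tasks need no new allocation.
			buf := make([]byte, 0, 512)
			for tk := range tasks {
				buf = buf[:0]
				buf = strconv.AppendInt(buf, int64(tk.id), 10)
				buf = append(buf, ':')
				buf = append(buf, tk.payload...)
				send(buf) // send must not keep buf after it returns

				mu.Lock()
				handled++
				mu.Unlock()
			}
		}()
	}
	wg.Wait()
	return handled
}

func main() {
	// A bounded queue: producers block when it is full instead of
	// spawning more goroutines.
	tasks := make(chan task, 64)
	go func() {
		for i := 0; i < 1000; i++ {
			tasks <- task{id: i, payload: "hello"}
		}
		close(tasks)
	}()

	n := runPool(8, tasks, func(frame []byte) {})
	fmt.Println("handled:", n)
}
```

The number of goroutines stays constant no matter how many requests arrive, and the bounded channel pushes back on producers when the workers fall behind.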

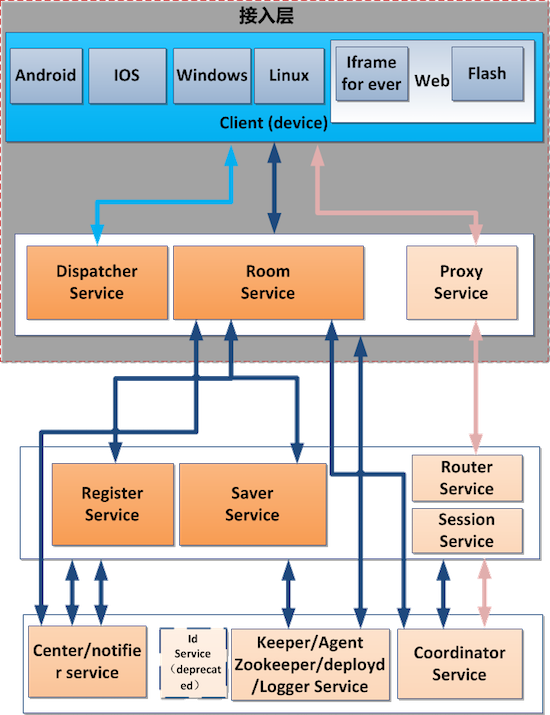
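The point about deadlines in the list above can be sketched with the standard `net.Conn` interface. This is only an illustration under assumptions: `readWithDeadline` and `silentPeerTimesOut` are hypothetical helpers, the 50 ms timeout is arbitrary, and `net.Pipe` stands in for a real mobile client that has gone silent.

```go
package main

import (
	"errors"
	"fmt"
	"net"
	"time"
)

// readWithDeadline reads once from conn but gives up after timeout,
// so a silent peer cannot park the calling goroutine forever.
func readWithDeadline(conn net.Conn, buf []byte, timeout time.Duration) (int, error) {
	if err := conn.SetReadDeadline(time.Now().Add(timeout)); err != nil {
		return 0, err
	}
	return conn.Read(buf)
}

// silentPeerTimesOut simulates a client that never sends anything and
// reports whether the read ended with a timeout error.
func silentPeerTimesOut() bool {
	server, client := net.Pipe() // in-memory connection pair
	defer server.Close()
	defer client.Close()

	_, err := readWithDeadline(server, make([]byte, 16), 50*time.Millisecond)

	var netErr net.Error
	return errors.As(err, &netErr) && netErr.Timeout()
}

func main() {
	fmt.Println("timed out:", silentPeerTimesOut())
}
```

Writes can be guarded the same way with `SetWriteDeadline`. A deadline is an absolute point in time, so it has to be set again before each read or write it should cover.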

The result is three iterations of our architecture and two iterations of the RPC framework, all with a very limited number of people. I attribute this achievement to how convenient development in Go is. Here is a recent diagram of our architecture:

The results of these continuous improvements, in table form:

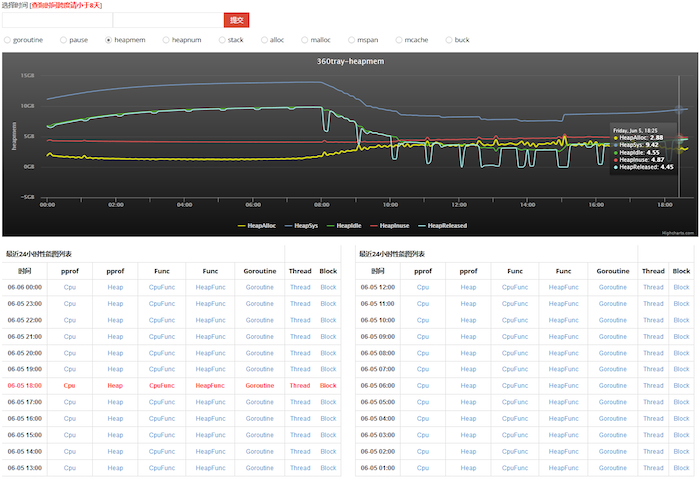

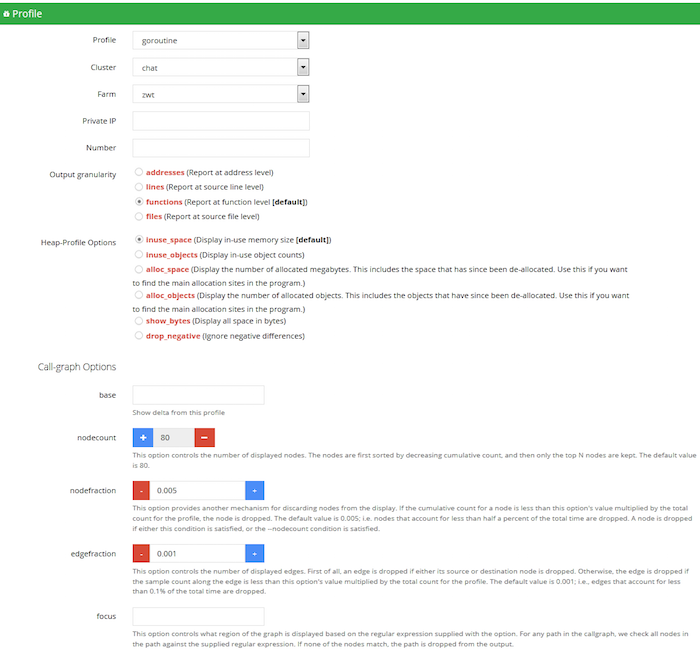

Just as exciting, we also developed a platform for profiling Go programs in real time (the Visibility Platform). We now have access to system status and diagnostic information, which lets us anticipate problems before they occur.

Screenshots:

One notable feature: with this tool we can simulate the connections and behavior of millions of users using the Distributed Stress Test Tool module (also written in Go) and observe the results in real time, with visualization. This lets us evaluate the effect of any change or optimization and prevent performance problems in production. We have already tried almost every system optimization we could think of. We look forward to good news from the team responsible for Go's GC, which could spare us from having to optimize our code for the GC in the future. I also expect that our tricks will simply become obsolete as Go continues to evolve.

I would like to end this story with thanks for the opportunity to take part in Gopher China. It was a great event that allowed us to learn a lot, share our knowledge, and see firsthand how popular and successful Go has become in China. Many teams at Qihoo have already become familiar with Go and even tried it out. I am convinced that more Chinese Internet companies will soon join the trend and rewrite their systems in Go, so the efforts of the team behind Go will benefit a huge number of developers and companies in the near future.