Metaprogramming: what it is and what it should be

Metaprogramming is a type of programming associated with the creation of programs that generate other programs as a result of their work ( wiki ). This is a fairly general term, which, according to the same Wikipedia, refers to the generation of source code by external tools, and various preprocessors, and “plugins to the compiler” - macros with the ability to modify the syntax tree directly in the compilation process, and even such an exotic opportunity, as self-modifying code - modification of a program by the program itself at runtime.

Although, of course, self-modifying code is rather a separate big topic, worthy of a separate study; here, by metaprogramming, we mean the processes that occur during compilation of the program.

Metaprogramming is implemented to one degree or another in very different languages; if you do not consider exotic and similar languages, then the most famous example of metaprogramming is C ++ with its template system. Of the "new" languages, D and Nim can be considered. One of the most successful attempts to implement metaprogramming is the Nemerle language. Actually, on the example of this four we will consider the subject.

Metaprogramming is an interesting topic; in this article I will try to figure out what it is and what it should be in the ideal case.

Compilation steps

Before we start discussing the topic, we should look at how the compiler works.

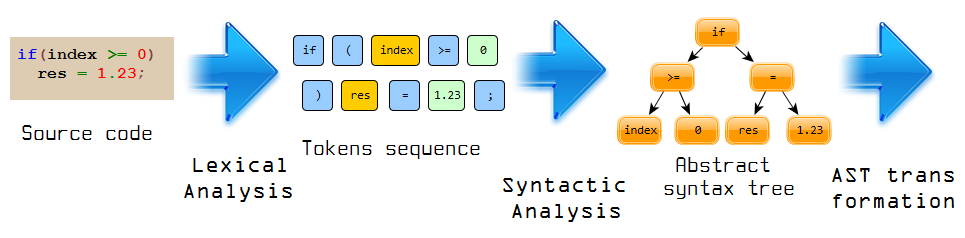

So, at the input of the compiler is the source code in the form of program text.

The first stage of compilation is lexical analysis . At this stage, the program is split from solid text into tokens (tokens) - each variable, literal, operator, keyword, comment becomes a separate object. Of course, working with such objects is much more convenient than directly with source lines.

The next step is parsingbuilding a syntax tree. At this stage, the linear structure becomes hierarchical; into the one that we actually represent when writing programs. Classes, functions, code blocks, operations become nodes of the abstract syntax tree (AST). Parsing itself consists of many steps; which includes working with types (including type inference), and various optimizations.

The final stage is code generation . Based on the syntax tree, virtual and / or machine code is generated; it all depends on the target architecture - registers and memory are allocated, tree nodes turn into a sequence of commands, additional optimization is carried out.

We will be primarily interested in lexical and syntactic analysis (although metaprogramming is also possible at the stage of code generation ... but this is a separate, big and completely unexplored topic).

Lexical macros

One of the oldest metaprogramming tools that has survived to this day is a system preprocessor. Probably, the first preprocessors were really separate programs, and after preprocessing they returned the result of their work back to the source text format. The preprocessor is lexical, because it works at the token level - that is, after receiving a sequence of tokens (although in fact the preprocessor still performs the simplest parsing for its purposes - for example, for its own macros with arguments). The preprocessor can replace one sequence of tokens with others; he knows nothing about the syntactic structure of the program - so it’s so easy to make famous

#define true falseThe problem with the “system-wide” preprocessor is that, on the one hand, it works at an too early stage (after lexical analysis, when the program is still not much different from plain text); on the other hand, it is not a full-fledged programming language, but just a system of conditional compilation and replacement of some sequences of tokens with others. Of course, much can be done on this (see boost.preprocessor ), but nevertheless, it does not reach full-fledged - and most importantly understandable and convenient - metaprogramming.

C ++ Templates

The next best-known metaprogramming tool is C ++ templates - constructs that allow you to create parameterized classes and functions. Patterns, unlike large macros, already work with the syntax tree. Consider the most common template in C ++

template

struct Array

{

T data[N];

}; and its application (instantiation):

Array arr; What is going on here from the point of view of the compiler? The template structure is a separate, special AST assembly; the template has two parameters - type and integer constant. At the template instantiation point, template parameters (which are also actually AST nodes) are substituted into the template body instead of formal parameter names; as a result, a node is created (or searched for previously created), which is used in the main syntax tree. The following is important here: both the template itself and the template parameters at the instantiation point are not just a type and number, they are nodes of the syntax tree. That is, by passing the int type and the number 100, you actually construct and transfer two small syntax trees (in this case, with one single node) to a larger syntax tree (template body) and as a result you get a new tree that is inserted into the main syntax tree. It looks like a mechanism for substituting sichen macros, but already at the level of syntax trees.

Of course, template parameters can also be more complex constructs (for example, std :: vector <std :: set <int>> can be passed as a type). But here is the time to pay attention to the fundamental incompleteness of the capabilities of C ++ templates. According to clause 14.1 of the standard, template parameters can only be types and some non-types: integers, enumeration elements, pointers to class members, pointers to global objects, and pointers to functions. In general, the logic is clear - there is something in the list that can be determined at the compilation stage. But for example, for unknown reasons, there are no strings and floating-point numbers in it. And if you remember that the parameters are AST nodes, it becomes obvious that there are not many other useful things. So, what prevents us from passing an arbitrary AST as a parameter, e.g. variable name or code block? Similarly, the patterns themselves can only be classes (structures) or functions. And what prevents an arbitrary block of code from making a template - both imperative (control operators, expressions) and declarative (for example, a fragment of a structure or enumeration)? Nothing but the lack of such features in C ++ itself.

From templates to syntax macros

The template engine, even in the form in which it exists in C ++, provides a fairly wide range of metaprogramming capabilities. Nevertheless, this is just a system of substituting some AST fragments for others. But what if we go further and, in addition to substitutions, allow something else - in particular, performing arbitrary actions on AST nodes using a script? These are syntax macros, the most powerful metaprogramming tool to date. Arbitrary code written by a programmer and executed at the compilation stage of the main program, with access to the compiler API and the structure of the compiled program in the form of AST, in fact, full-fledged plugins for the compiler built into the compiled program. Not many programming languages realize this feature; one of the best implementations in my opinion is in the languageNemerle , therefore, consider it in more detail.

Here is the simplest example from an almost official documentation:

macro TestMacro()

{

WriteLine("compile-time\n");

<[ WriteLine("run-time\n") ]>;

}If you insert a macro call into another file (which by the way is no different from a function call)

TestMacro();then during compilation we will get a “compile-time” message in the compiler log. And when the program starts, the message “run-time” will be displayed in the console.

As we can see, a macro is ordinary code (in this case, in the same Nemerle language as the main program); the difference is that this code is executed at the compilation stage of the main program. Thus, compilation is divided into two phases: first, macros are compiled, and then the main program, when compiled, previously compiled macros can be called. The first line is executed at compile time. The second line contains an interesting syntax - special brackets <[]>. Using such brackets, you can take code fragments as if in quotation marks, by analogy with regular strings. But unlike strings, these are AST fragments, and they are inserted into the main syntax tree of the program - just like templates at instantiation.

And special brackets are needed because macros, unlike templates, are somehow in a different context, in a different dimension; and we need to somehow separate the macro code and the code with which the macro operates. Such strings in Nemerle terms are called quasi-quotes. “Quasi” - because they can be constructed on the fly using interpolation - a feature known to everyone who writes in scripting languages, when the names of various variables can be inserted into a string using special syntax, and they turn into the values of these variables. Another example from the documentation:

macro TestMacro(myName)

{

WriteLine("Compile-time. myName = " + myName.ToString());

<[ WriteLine("Run-time.\n Hello, " + $myName) ]>;

}Argument - AST node (as well as for templates); to insert a node into a quasi-quote, use the $ symbol in front of its name.

Of course, instead of such a string, it was possible to construct an inserted AST fragment manually - using the compiler API and the types available in the macro context that correspond to AST nodes. Something like

new FunctionCall( new Literal("run-time\n") )but it’s much easier to write the code “as is” and entrust the work of building the AST to the compiler - for this is what it is intended for!

In D, metaprogramming is represented using templates (which are generally similar to C ++ templates, although there are some improvements) and “mixins”. Let's consider them in more detail. The first type is template mixins; the same expansion of templates with the ability to make arbitrary code fragments template. For example, this program will output the number 5.

mixin template testMixin()

{

int x = 5;

}

int main(string [] argv)

{

mixin testMixin!();

writeln(x);

return 0;

}the variable declared in the mixin becomes available after the mixin is included in the code.

The second type of mixins is string mixins; in this case, the argument to the mixin keyword becomes a line with the code for D:

mixin (`writeln("hello world");`);The string, of course, must be known at the time of compilation; and this can be not only a constant explicitly defined line (otherwise this would not make any sense), but also a line formed programmatically at compile time! At the same time, the usual functions of the D language can be used to form the string - the same ones that can be used in runtime. Of course, with certain limitations, the compiler must be able to execute the code of these functions during compilation (yes, a rather powerful interpreter of the D language itself is built into the D compiler).

In the case of string mixins, we do not work with AST nodes as quasicitats; instead, we work with the source code of the language, which is formed explicitly (for example, by concatenating strings) and goes through the full path of lexical and parsing. This method has both advantages and disadvantages. Personally, direct work with AST seems to be more “clean” and ideologically correct than generating lines of source code; however, working with strings can be useful in some situations.

You can also very briefly mention the Nim language. In it, the template keyword works similar to the mixin template from D (and for classic templates in the early C ++ style, a different concept is used - generic). Using the macro keyword, syntactic macros are declared, somewhat similar to Nemerle macros. Nim made an attempt to formalize the phases of calling templates - using special pragmas you can specify whether to call the template before resolving the names of variables or after. Unlike D, there is some compiler API with which you can explicitly create AST nodes. It touches on the issues of “hygiene” of macros (a macro is “hygienic” if it is guaranteed not to affect identifiers at the point of its application ... I should have considered these issues in more detail, but probably some other time).

conclusions

Looking at the implementation of metaprogramming in different languages, there are some thoughts about how it should look in the ideal case. Here are some thoughts:

Metaprogramming should be explicit

Calling a macro is a very specific thing (actually a VERY specific thing!), And the programmer must uniquely visually identify such macros in the code (even without syntax highlighting). Therefore, the syntax of macros must be clearly different from the syntax of functions. More or less, this requirement is met only in D (the special keyword mixin at the dial peer); in Nemerle and Nim, macros are indistinguishable from functions. Moreover, in Nemerle there are several more ways to call a macro - macro attributes and the ability to override the language syntax ... here you can get a little distracted and note that the syntax, unlike functions and classes, is global; and I rather have a negative attitude to such an opportunity, because it leads to the erosion of the language and its transformation into a language generator, which means

Using the same language for programs and metaprograms is not necessary.

In all the examples discussed, metaprograms (macros) were written in the same language as the main program. It seems that the language developers did not think about the possibility of using a different programming language for macros, more suitable for interpretation than for compilation.

Meanwhile, an example of an alternative approach always lay on the surface: web programming uses the html markup language and the javascript programming language; javascript is executed during html rendering (compilation analogue), the html document object model (HTML DOM) is available from scripts - a fairly close analogue of AST. Using the appropriate functions, you can add, modify and delete HTML DOM nodes, at different levels, both in the form of html source code and in the form of nodes of the DOM tree.

// формируем html в виде текста, аналогично mixin в D

document.getElementById('myDiv').innerHTML = '- html data

';

// формируем html в виде узлов дерева, аналогично Nim

var link = document.createElement('a');

link.setAttribute('href', 'mypage.htm');

document.getElementById('myDiv').appendChild(link);What are the advantages and disadvantages of this approach?

Obviously, the metaprogram should not be able to crash or suspend the compiler. Obviously, if you write metaprograms in SI - with pointers and other low-level things, then it will be very simple to bring it down. On the other hand, using common code in programs and metaprograms is convenient. Although this "common code" will still be limited to some very general things like pure algorithms: the compiler API is not applicable in programs, and the libraries of the "real OS" (including graphics, window system, network ...) are very inapplicable in metaprograms . Of course, you can create a couple of your windows during compilation and display them on the screen, but why?

Thus, it is not necessary that the programs and metaprograms be in the same language. Programs and metaprograms have completely different tasks and completely different runtimes. Probably the best solution is to leave the programmer freedom and use several languages: both a safe subset of the main language and some common scripting language - the same javascript is quite suitable.

Compiler API Standardization

The emergence and dissemination of full-fledged metaprogramming in some language will inevitably require standardization of the compiler API. Of course, this would have a positive effect on the quality of the compilers themselves, on their compliance with the Standard and compatibility among themselves. And it seems that the example of html and browsers in itself is pretty good. Although the AST structure is more complicated than html markup (incompatibility of some nodes and other features), it would be nice to take the experience of browser technologies in combination with the experience of existing metaprogramming implementations as the basis for building such an API.

IDE Support

Metaprogramming can be quite complicated. Until now, all the implementations I know have not suggested any means to facilitate the work of a programmer: compiling in your mind is still an idea (of course, there are amateurs ...). Although metaprogrammers in C ++, for example, do just that. Therefore, I consider it necessary to introduce such tools as expanding templates and macros in a special IDE mode - both in debug mode and in code editing mode; some analogue of executing code "from the command line" REPLfor macros. The programmer should have a full set of tools for visual access to the AST, for separate compilation and test run of macros, for "compilation by steps" (just like that - to see in a special debugger how the macro works when compiling the main code in step-by-step mode).

Well, perhaps that's all. Many questions remained behind the scenes, but I think even this is enough to appreciate the incredible power of metaprogramming. Now imagine that this would all be in C ++ now. Look at the Boost library, at those amazing and incredible things that people do even on existing templates and lexical macros ...

If that were the case ... what truly hacking opportunities would open before us!