How face recognition helps you find test phones

Hi, Habr readers! EastBanc Technologies runs a large number of mobile development projects, so we need a whole zoo of devices for testing at every stage. Typically, every single device is constantly needed by very different people, and finding it even within one mobile development department of several dozen people is a saga of its own. And then there are the testers, the designers, and the PMs!

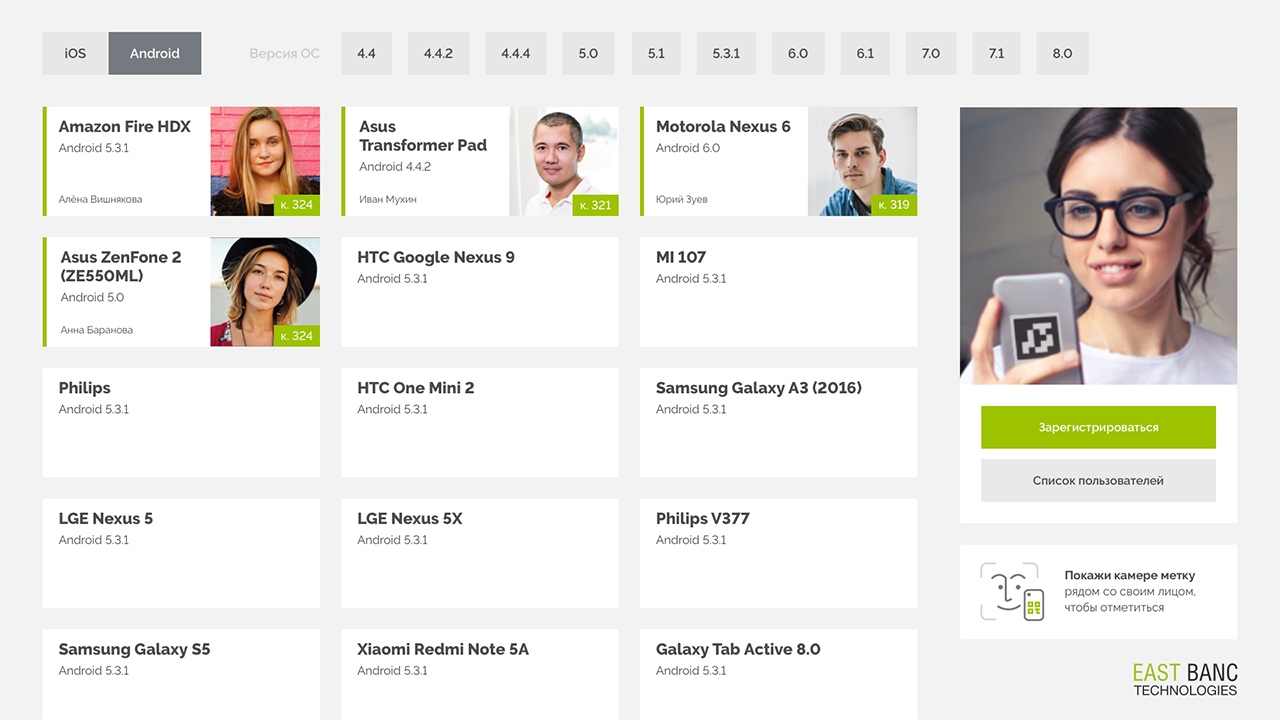

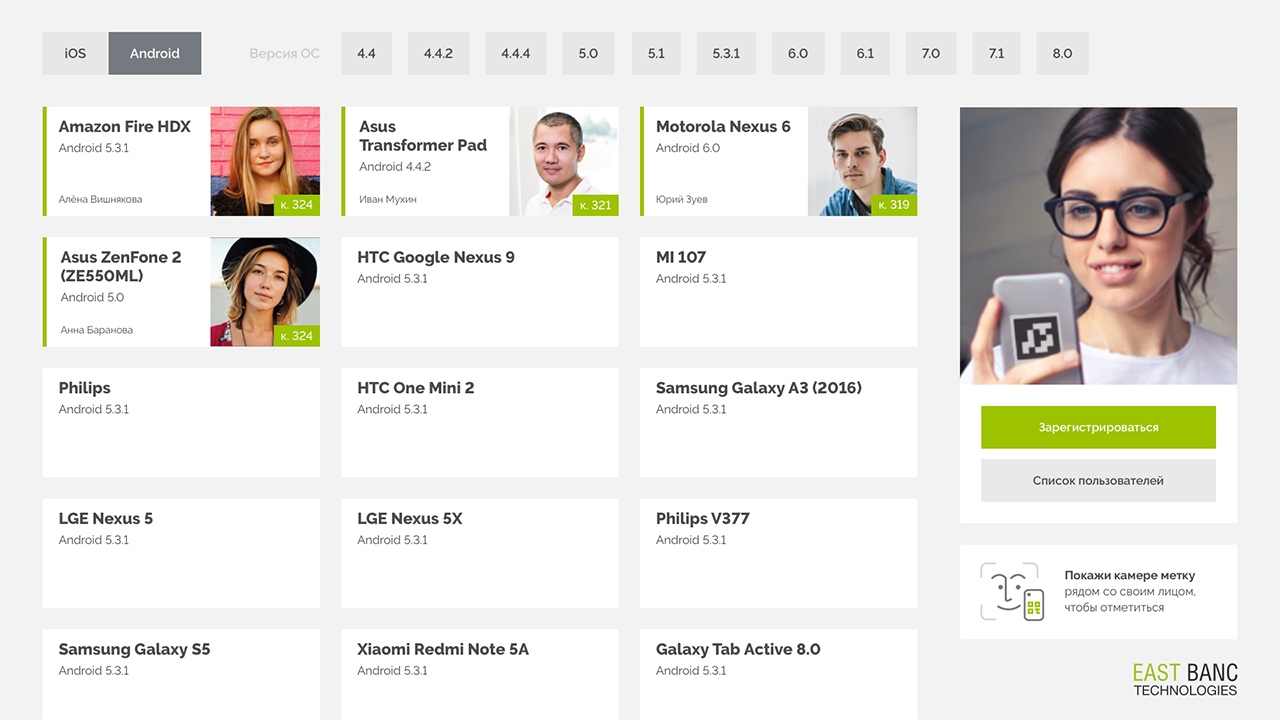

So that phones do not get lost and we always know where each one is and who has it, we use an online database that recognizes employees by their faces. Here is how we arrived at this solution and how we implemented it.

Historical context

We used to have a board with device “cards” showing basic information and a spot for a magnet identifying an employee. Whoever took a device marked it by placing their magnet on its card.

This system had its drawbacks, not critical but inconvenient overall:

- Magnets are not easy to move from one place to another.

- To look at the board, you have to walk to another office.

- Someone may need several devices at once, so each employee needs several magnets.

- Oh, and employees sometimes leave and new ones join, and the newcomers need magnets made too.

Mobile app

For a company that automates business processes, relying on the “analog” solution described above is not a great look. Naturally, we decided to automate the search for the right device. The first step was a mobile application that determines which room the device is in from nearby Wi-Fi access points and reports it. Along the way, for convenience, we also had the device report its OS version to the server and show such an important characteristic as battery charge.

It would seem the problem was solved: look up in the database where the device last saw Wi-Fi, go there, and...

In practice, things turned out not to be so simple. We installed the application on the test devices and used it for several months. The approach proved convenient but far from perfect.

Devices run out of battery or are simply switched off, Wi-Fi access points get moved from place to place, and geolocation by itself only tells you that the device is in the office. Thanks, Captain Obvious!

We could, of course, have tried to optimize the existing system, but why not reinvent it with 21st-century technology? No sooner said than done.

How we wanted it to be

We came up with the concept of a system that recognizes employees by their faces and test devices by special markers, asks for confirmation of the change in device status, and then updates an online database that any employee can view without getting up from their chair.

Face recognition

By 2018, face recognition is, by and large, a solved problem. So we did not reinvent the wheel by training our own models and used a ready-made solution instead. The most convenient option turned out to be the face_recognition module, since it requires no additional training and works very quickly, even without GPU acceleration.

With the face_locations function we detected faces in employees' photos, and with face_encodings we extracted the facial features.

The resulting data was stored in a database. To identify a specific employee, we used the face_distance function to compute the “difference” between the encoding of the detected face and the encodings in the database.

At this stage we could have gone further and built a classifier, for example one based on KNN, to make the system less sensitive to changes in employees' faces over time. In practice, however, that takes considerably more time. Besides, simply averaging the encoding stored in the database with the one the system captured when the device status changed proved enough to keep errors from accumulating.
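The averaging update mentioned above can be sketched roughly as follows. This is a minimal illustration in plain Python, assuming encodings are equal-length sequences of floats (face_recognition produces 128-dimensional vectors, and its face_distance is a Euclidean distance). The helper names `euclidean_distance` and `average_encoding` are hypothetical and not part of the library or of the original system.

```python
import math
from typing import List, Sequence


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two encodings of equal length."""
    if len(a) != len(b):
        raise ValueError("encodings must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def average_encoding(stored: Sequence[float], observed: Sequence[float]) -> List[float]:
    """Element-wise mean of the stored encoding and a newly observed one.

    Hypothetical helper: the result would replace the stored encoding so
    that it slowly follows changes in the employee's appearance.
    """
    if len(stored) != len(observed):
        raise ValueError("encodings must have the same length")
    return [(s + o) / 2.0 for s, o in zip(stored, observed)]
```

After one such update the stored encoding sits halfway between its old value and the new observation, so a gradual change in appearance never pushes the distance past the matching threshold in a single step.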

Face recognition code

```python
import face_recognition
import numpy as np

# Locate faces in the downscaled RGB frame, then compute an encoding for each
face_locations = face_recognition.face_locations(rgb_small_frame)
face_encodings = face_recognition.face_encodings(rgb_small_frame, face_locations)

face_names = []
for face_encoding in face_encodings:
    # Distance from this face to every known encoding (smaller means more similar)
    matches = face_recognition.face_distance(known_face_encodings, face_encoding)
    name = "Unknown"
    if np.min(matches) <= 0.45:
        best_match_index = np.argmin(matches)
        name = known_face_info[str(best_match_index)]['name']
    else:
        best_match_index = None
    face_names.append(name)
```

Device recognition

Our first idea was to recognize devices by QR codes, which could also encode information about the device. However, for a QR code to be recognized reliably it had to be held very close to the camera, which is inconvenient.

So we turned to augmented reality markers. They carry less information but are recognized much more reliably. In our experiments, a 30 mm marker was recognized by the camera at a distance of up to two and a half meters with small deviations from the vertical (3-5 degrees).

This already looked much better. Among the many kinds of augmented reality markers we chose ArUco, since all the tools needed to work with them ship in the opencv-contrib-python package.

ArUco marker recognition code

```python
import cv2

self.video_capture = cv2.VideoCapture(0)  # default webcam
ret, frame = self.video_capture.read()
# Marker detection works on a grayscale image
gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
# Returns a tuple: (corners, ids, rejected candidates)
markers = cv2.aruco.detectMarkers(gray, self.dictionary)
```

As a result, each device was assigned a numeric index, and the marker attached to it encodes that same index.
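As an illustration of the index-to-device mapping, here is a minimal sketch. It assumes the marker IDs arrive in the shape that cv2.aruco.detectMarkers reports them (an N×1 array of integers, or None when nothing is found). The registry contents and the function name `devices_from_marker_ids` are hypothetical.

```python
from typing import Dict, List

# Hypothetical registry: ArUco marker index -> device name
DEVICE_REGISTRY: Dict[int, str] = {
    0: "Example iPhone",
    1: "Example Android phone",
}


def devices_from_marker_ids(ids, registry: Dict[int, str]) -> List[str]:
    """Translate detected marker IDs into device names.

    `ids` is expected to be None or a sequence of one-element rows,
    e.g. [[1], [7]]; unknown marker IDs are silently ignored.
    """
    if ids is None:
        return []
    found = []
    for row in ids:
        device = registry.get(int(row[0]))
        if device is not None:
            found.append(device)
    return found
```

Ignoring unknown IDs is a design choice made for this sketch: a stray marker in the frame should not trigger a registration dialog.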

In the bag?

It might seem that once we had learned to recognize both devices and faces, the work was done. Fanfare, ovations! What more could we need?

In fact, the work was only beginning. Now all the components of the system had to be made to work reliably and quickly in real-world use.

We had to minimize server resource usage while idle, think through the use cases, and decide how it should all look on screen.

Interface

Perhaps the most important aspect of developing a system like this is the interface. Some may disagree, but the user is the central element here.

The fastest way to build the frontend turned out to be Tkinter.

A few notes about Tkinter

- Pay attention to the units in which paddings and element sizes are specified (relative or absolute).

- Remember that relative and absolute units can be used together (their values are simply added).

- The call .attributes("-fullscreen", True) on the window switches it to full-screen mode.
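To make these notes concrete, here is a small sketch. It assumes the notes refer to Tkinter's place geometry manager, where a relative value (such as relx) and an absolute one (such as x) given together are summed. The names `effective_offset` and `build_demo` are hypothetical, and the demo window is not taken from the original application.

```python
def effective_offset(parent_size: int, rel: float, absolute: int) -> float:
    """Pixel offset that place() uses when both a relative and an
    absolute value are given: the two contributions are added."""
    return rel * parent_size + absolute


def build_demo() -> None:
    """Open a full-screen window with a single label.

    Needs a display, so it is not called automatically.
    """
    import tkinter as tk  # imported lazily so the helper above works headless

    root = tk.Tk()
    root.attributes("-fullscreen", True)
    label = tk.Label(root, text="Device catalog")
    # relx and x are summed: the label's left edge ends up 10 px
    # to the right of the horizontal center of the window
    label.place(relx=0.5, x=10, rely=0.1, y=0)
    root.bind("<Escape>", lambda event: root.destroy())
    root.mainloop()
```

For an 800-pixel-wide parent, `effective_offset(800, 0.5, 10)` gives the 410-pixel position that the label's left edge would take in `build_demo`.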

The interface consists of cards with information about each device and the user who currently has it. Most of the screen is taken up by the catalog of cards, the main tool for keeping track of devices. Above it is a filter that narrows the catalog by platform or operating system version.

Here are the key components of the interface:

- Device

The screen displays device cards with the OS version, the device name and ID, and the user the device is currently registered to.

- Photo capture

On the right is the control panel, which shows the webcam image along with buttons for registering and editing personal information.

The image gives the user feedback: you can see that the device's marker is actually in the frame.

- Choosing the OS version

We added a list for choosing the OS version of interest, because testing often calls not for a specific device but for a specific version of Android or iOS. The version filter is horizontal to save space and to keep the whole list of versions visible on one screen without scrolling.
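The catalog filter described above could be modeled along these lines. This is only a sketch of the idea, not the application's code: the `DeviceCard` fields and the `filter_cards` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DeviceCard:
    """Hypothetical model of one card in the catalog."""
    device_id: int
    name: str
    platform: str      # e.g. "Android" or "iOS"
    os_version: str
    holder: Optional[str] = None  # user the device is registered to


def filter_cards(cards: List[DeviceCard],
                 platform: Optional[str] = None,
                 os_version: Optional[str] = None) -> List[DeviceCard]:
    """Keep the cards matching every filter that is set; None means 'any'."""
    return [
        card for card in cards
        if (platform is None or card.platform == platform)
        and (os_version is None or card.os_version == os_version)
    ]
```

Treating an unset filter as "any" lets the same function serve the platform filter, the version filter, or both at once.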

Optimization

A single pass of any one component does not take long. However, if markers and faces are recognized simultaneously, trying to process all 30 frames per second that the camera provides will completely exhaust the resources of a computer without a GPU.

Clearly, 99% of the time the system would be doing this work for nothing.

To avoid this, we made the following optimizations:

- Only every eighth frame is submitted for processing.

```python
# Skip recognition while lastseen + rec_threshold is still in the future,
# or until the frame counter reaches 8
if self.lastseen + self.rec_threshold > time.time() or self.frame_number != 8:
    ret, frame = self.video_capture.read()
    self.frame_number += 1
    if self.frame_number > 8:
        self.frame_number = 8
    return frame, None, None, None
```

The response delay grows to about 8/30 of a second (roughly 0.27 s), while human reaction time is approximately one second, so the user will not notice the delay. Meanwhile, the load on the system drops eightfold.

- First the frame is searched for a device marker, and face recognition is started only when one is found. Since searching a frame for markers is about 300 times cheaper than searching for faces, in standby mode we check only for the presence of a marker.

- To reduce slowdowns when searching for faces in images that contain none, upsampling was disabled by setting the number_of_times_to_upsample parameter of the face_locations function to 0.

```python
face_locations = face_recognition.face_locations(rgb_small_frame, number_of_times_to_upsample=0)
```

Thanks to this, processing a frame with no faces takes the same time as processing a frame in which faces are easily detected.
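The first two optimizations can be combined into a small gate, sketched below under stated assumptions. The detectors are injected as plain callables so the logic can be followed without a camera. The class name `FrameGate` and its interface are hypothetical, and the cooldown based on lastseen and rec_threshold from the original snippet is deliberately left out.

```python
from typing import Any, Callable, List, Optional, Tuple

FRAME_STEP = 8    # process every eighth frame, as in the article
CAMERA_FPS = 30   # frame rate quoted in the article
WORST_CASE_DELAY = FRAME_STEP / CAMERA_FPS  # seconds, about 0.27


class FrameGate:
    """Run the cheap marker search on every Nth frame and the
    expensive face search only when a marker was found."""

    def __init__(self,
                 find_markers: Callable[[Any], List[Any]],
                 find_faces: Callable[[Any], List[Any]],
                 step: int = FRAME_STEP) -> None:
        self.find_markers = find_markers
        self.find_faces = find_faces
        self.step = step
        self.frame_number = 0

    def process(self, frame: Any) -> Tuple[Optional[List[Any]], Optional[List[Any]]]:
        """Return (markers, faces); both are None for skipped frames,
        and faces is None when no marker is visible."""
        self.frame_number += 1
        if self.frame_number < self.step:
            return None, None
        self.frame_number = 0
        markers = self.find_markers(frame)
        if not markers:
            return markers, None
        return markers, self.find_faces(frame)
```

With real detectors, `find_markers` would wrap the ArUco search and `find_faces` the face_recognition calls; in standby the face search is never invoked at all.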

What is the result?

At the moment the system is successfully deployed on a Mac mini (Late 2009) that happened to be at hand, running on two Core 2 Duo cores. In testing, it worked quite well even on a single virtual core with 1024 MB of RAM and 4 GB of persistent storage in a Docker container. A touchscreen display was connected to the Mac mini for a minimalist look.

Now even users who never used the old board register their devices with enthusiasm and a smile, and the number of unsuccessful searches has dropped considerably!

What's next?

The current system undoubtedly still has plenty that can and should be improved:

- Prevent OS window controls from appearing when dialog boxes are shown (currently these are messagebox dialogs from the Tkinter package).

- Move computations and server requests into threads separate from interface handling (they currently run in the main thread, inside Tkinter's mainloop, which freezes the interface while requests are sent to the online database).

- Bring the interface design in line with other corporate resources.

- Build a full-fledged web interface for viewing the data remotely.

- Use voice recognition to confirm or cancel actions and to fill in text fields.

- Support registering several devices at once.
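For the threading item in the list above, one common pattern is a worker thread that hands results back through a queue, which the interface thread polls without blocking. The sketch below uses only the standard library and no Tkinter. The names `run_in_background` and `drain` are hypothetical, and the task stands in for a request to the online database.

```python
import queue
import threading
from typing import Callable, List


def run_in_background(task: Callable[[], str],
                      results: "queue.Queue[str]") -> threading.Thread:
    """Run `task` in a worker thread and put its result on the queue."""
    def worker() -> None:
        results.put(task())

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread


def drain(results: "queue.Queue[str]") -> List[str]:
    """Collect finished results without blocking; meant to be called
    from the interface thread."""
    finished: List[str] = []
    while True:
        try:
            finished.append(results.get_nowait())
        except queue.Empty:
            return finished
```

In a Tkinter application, `drain` could be called periodically from a callback scheduled with the widget method `after`, so that mainloop keeps handling events while the request is in flight.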

P.S. Here is how it works.

The video was recorded with a beta version of the GUI.