How to Build a Scalable Infrastructure on Storage Spaces

We have often written about cluster-in-a-box systems and Windows Storage Spaces, and the most common question we get is how to choose the right disk configuration.

The traditional question usually looks like this:

- I want 100500 IOPS!

or:

- I want 20 terabytes.

Then it turns out that IOPS really do not need so much and you can get by with a few SSDs, and they want to remove decent 20 decibels from 20 terabytes (4 disks at present), and in reality it will turn out multi-level storage.

How to approach this correctly and plan ahead?

You need to consistently answer several key questions, for example:

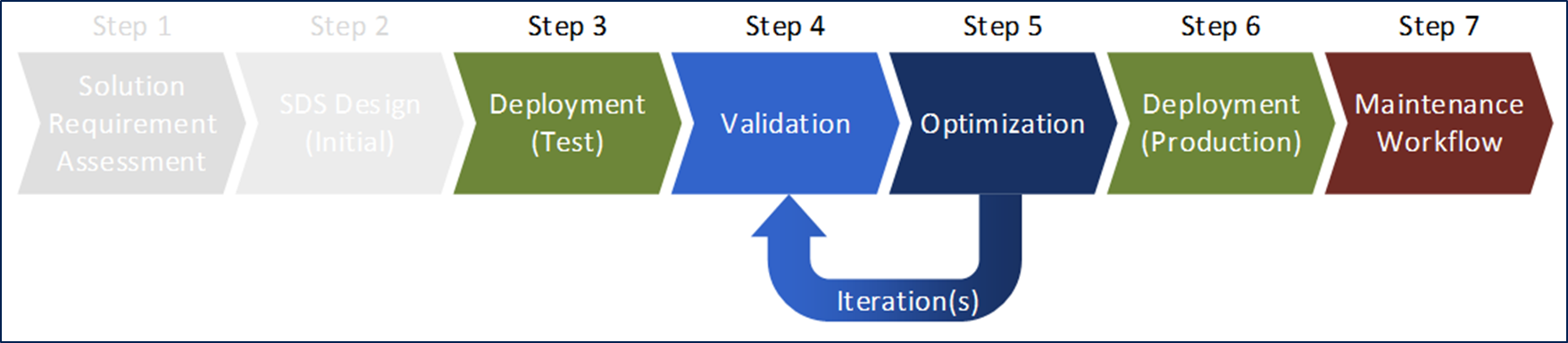

The process of creating storage on Storage Spaces

Key technologies:

Advantages of Storage Spaces: Flexible and inexpensive data storage solution, based entirely on mass equipment and Microsoft software.

The material assumes familiarity with the basics of Storage Spaces (available here and here ).

The steps:

The first step has been described above.

Software Defined Storage Architecture Definition (Preliminary)

Highlights:

General principles You

must install all updates and patches (as well as firmware).

Try to make the configuration symmetrical and holistic.

Consider possible failures and create a plan to eliminate the consequences.

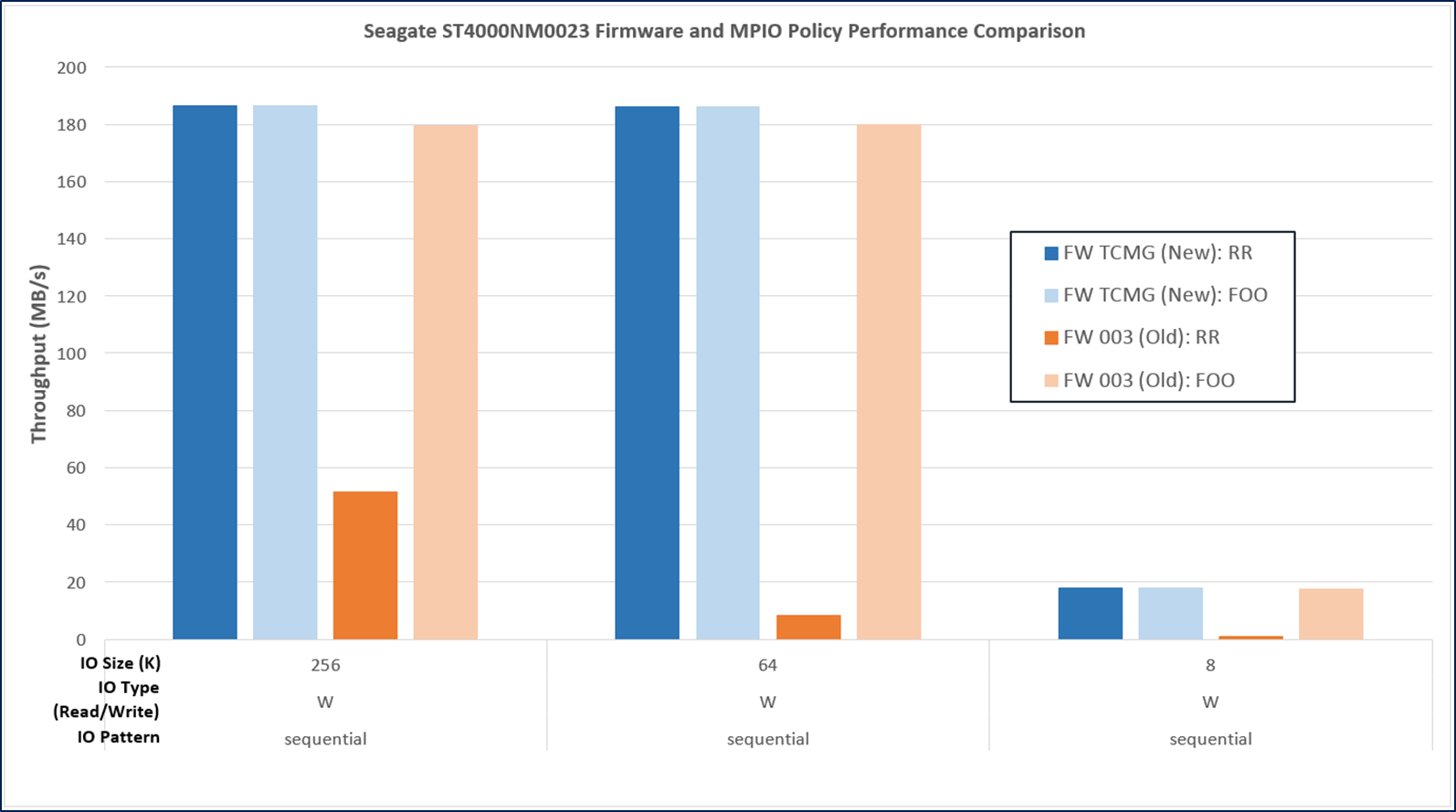

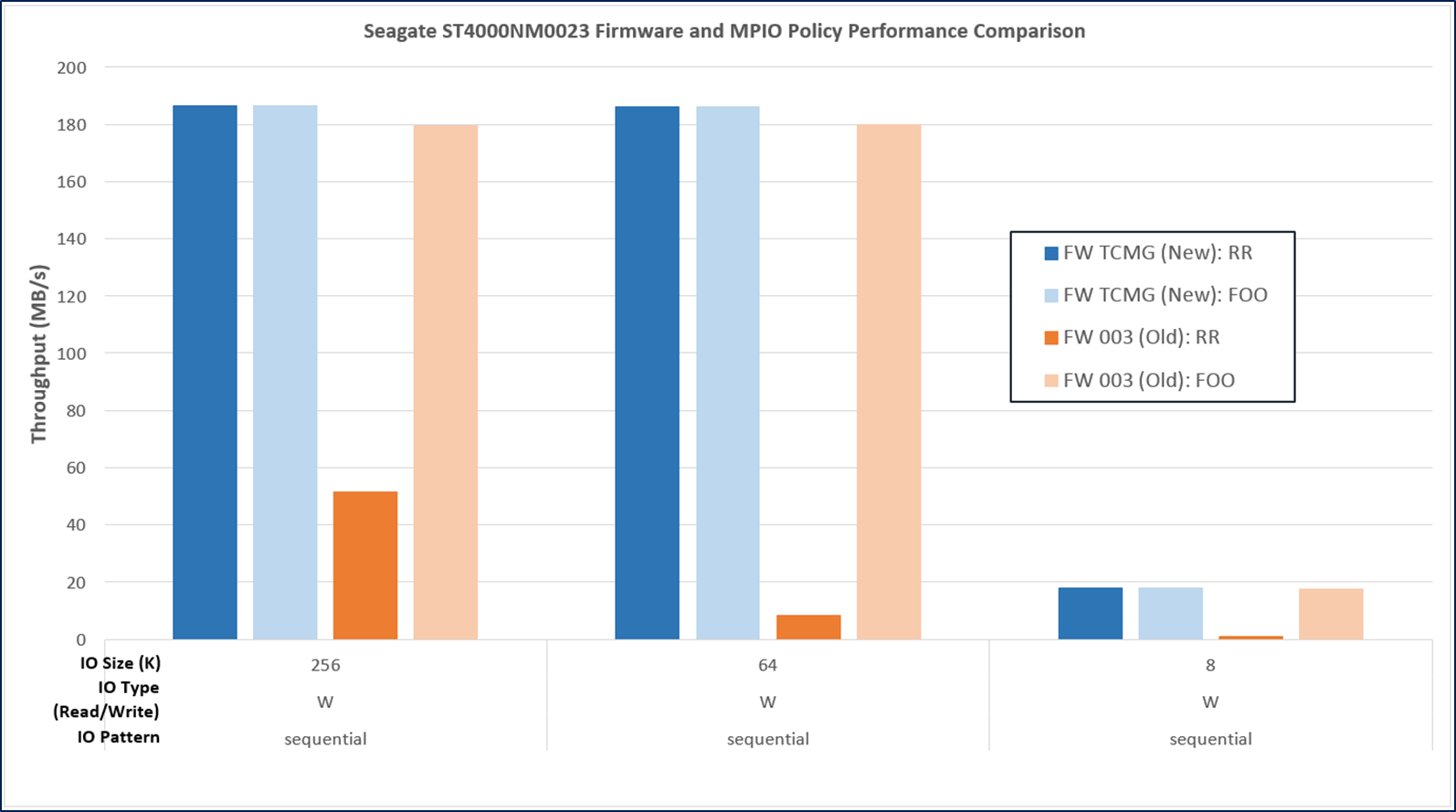

Why firmware is important

Key requirements: tearing

Multilevel storage (tearing) significantly increases the overall performance of the storage system. However, SSDs have a relatively small capacity and high price per gigabyte and slightly increase the complexity of managing storage.

Usually tearing is still used, and the "hot" data is placed on the productive level of SSD drives.

Key requirements: type and number of hard drives

The type, capacity and number of disks should reflect the desired storage capacity. SAS and NL-SAS disks are supported, but expensive SAS 10 / 15K disks are not so often needed when using tiering with storage level on SSD.

Typical choice *: 2-4 TB NL-SAS, a model from one manufacturer with the latest stable firmware version.

* Typical values reflect current recommendations for working with virtualized tasks. Other types of load require additional study.

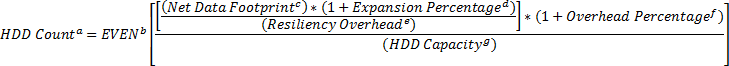

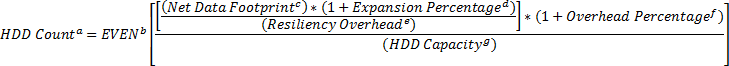

Capacity calculation:

Where:

a) The number of hard drives.

b) The number of disks - the closest even upper value to the resulting number.

c) Typical data block size (in gigabytes).

d) Stock for capacity expansion.

e) Losses to ensure data integrity. Two-way mirror, three-way mirror, parity and other types of array offer a different margin of "strength".

f) Each disk in Storage Spaces stores a copy of metadata (about pools, virtual disks) + if necessary, provide a reserve for fast rebuild technology.

g) Disk capacity in gigabytes.

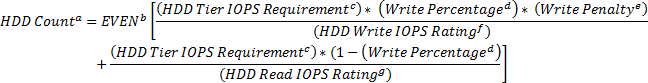

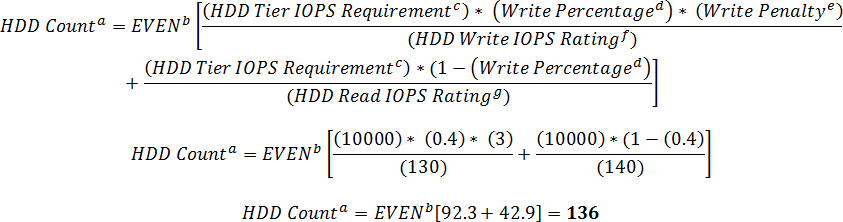

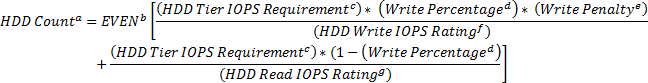

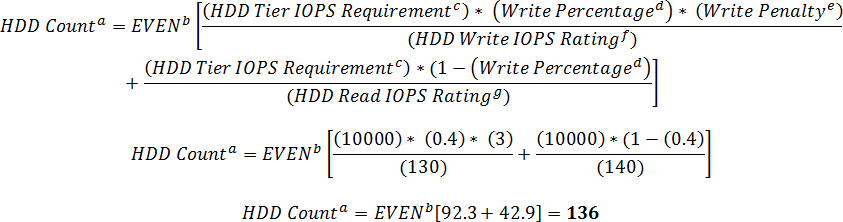

Performance calculation:

Where:

a) The number of hard drives.

b) The number of disks - the closest even upper value to the resulting number.

c) The number of IOPS that is planned to be received from the level of hard drives.

d) Percentage of record.

e) Penalties for recording, which is different for the different levels of the array used, which must be taken into account (for the system as a whole, for example, for a 2-way mirror, 1 write operation causes 2 writes to discs, for 3-way mirror records already 3).

f) Evaluation of the performance of the disc for writing.

g) Evaluation of disk read performance.

* Calculations are given for the starting point of system planning .

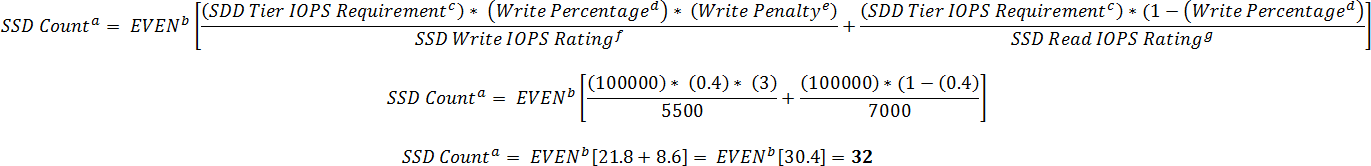

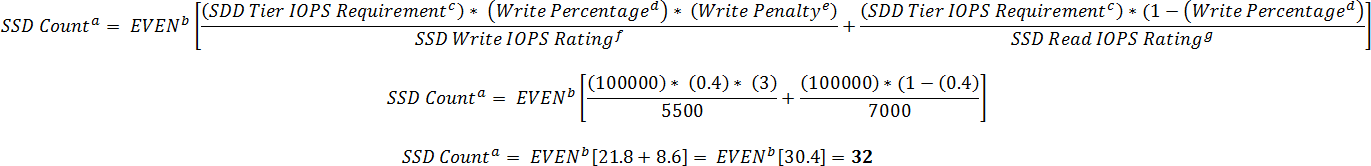

Key requirements: type and number of SSDs

With hard drives sorted out, it's time to calculate the SSD. Their type, quantity and volume is based on the desired maximum performance of the disk subsystem.

Increasing the capacity of the SSD tier allows you to move more tasks to the faster tier with the built-in tiering engine.

Since the number of columns (Columns, parallelization technology for working with data) in a virtual disk must be the same for both levels, increasing the number of SSDs usually allows you to create more columns and increase the performance of the HDD level.

Typical choice: 200-1600 GB on MLC-memory, a model from one manufacturer with the latest stable version of the firmware.

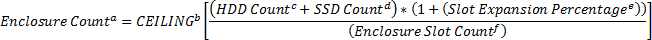

Performance calculation *:

Where:

a) The number of SSDs.

b) The number of SSDs - the closest even upper value to the resulting number.

c) The number of IOPS to be received from the SSD level.

d) Percentage of record.

e) Penalty penalties differing for different levels of the array, must be taken into account when planning.

f) Record SSD performance evaluation.

g) Read SSD performance.

* For starting point only. Usually, the number of SSDs significantly exceeds the minimum required for performance due to additional factors.

Recommended minimum number of SSDs in shelves:

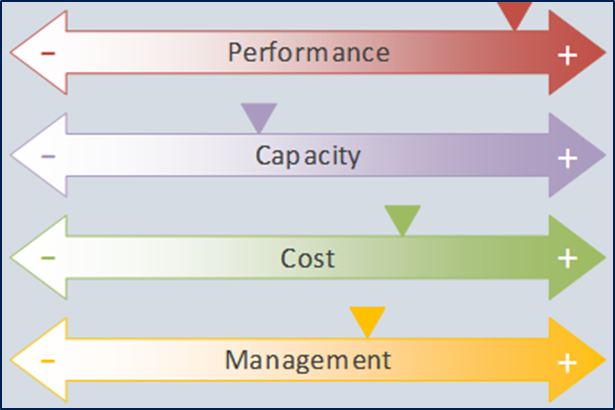

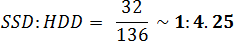

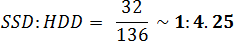

Key requirements: ratio SSD: HDD

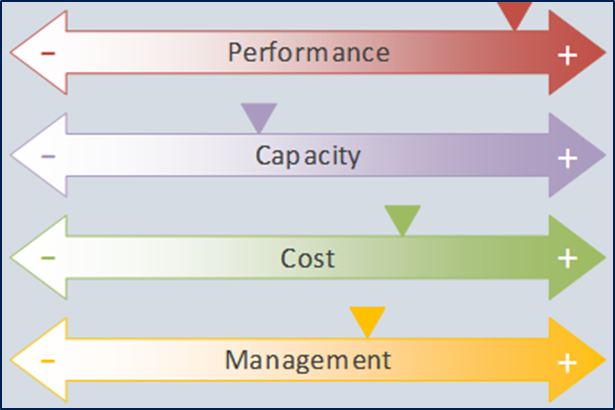

Selection of a ratio is a balance of performance, capacity and cost. Adding an SSD improves performance (most operations take place at the SSD level, the number of columns in virtual disks increases, etc.), but significantly increases the cost and reduces the potential capacity (a small SSD is used instead of a capacious disk).

Typical choice: SSD: HDD *: 1: 4 - 1: 6

* By quantity, not capacity.

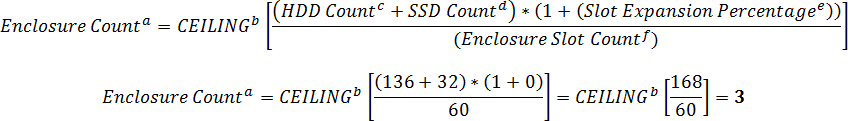

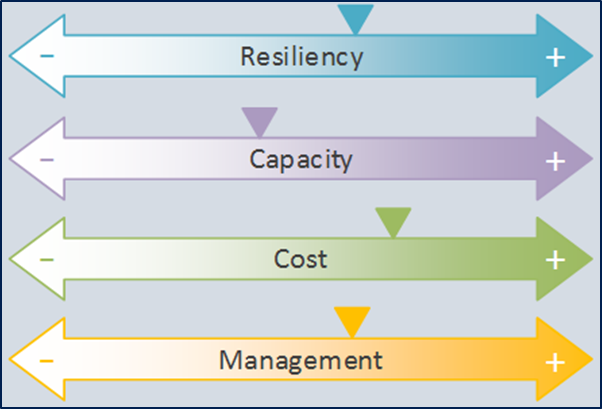

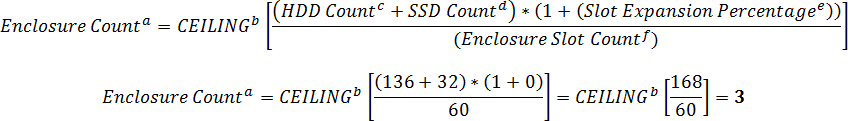

Key requirements: number and configuration of disk shelves (JBOD)

Disk shelves differ in many ways - the number of installed disks, the number of SAS ports, etc.

The use of several shelves allows you to take into account their presence in fault tolerance schemes (using the enclosure awareness function), but also increases the disk space required for Fast Rebuild.

The configuration should be symmetrical in all shelves (meaning cable connection and disk location).

A typical choice: the number of shelves> = 2, IO modules in the shelf - 2, a single model, the latest firmware versions and symmetrical disk layout in all shelves.

Typically, the number of disk shelves is selected from the total number of disks, taking into account the stock for expansion and fault tolerance, taking into account the shelves (using the enclosure awareness function) and / or adding SAS paths for throughput and fault tolerance.

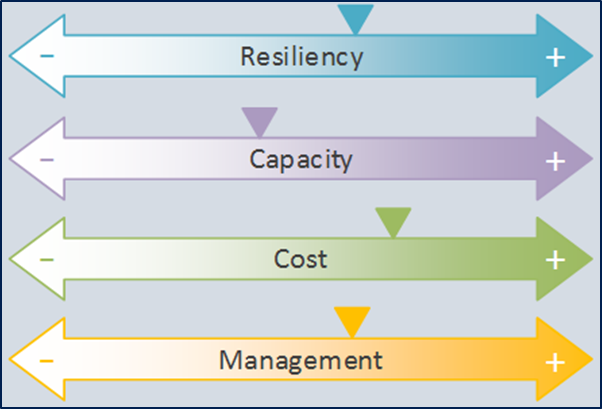

Calculation:

Where:

a) Number of JBOD.

b) Rounded up.

c) Number of HDDs.

d) Number of SSDs.

e) Free disk slots.

f) The maximum number of disks in a JBOD.

Key requirements: SAS HBA and cables

The SAS cable topology must ensure that each storage server connects to the JBOD shelves using at least one SAS path (that is, one SAS port in the server corresponds to one SAS port in each JBOD).

Depending on the number and type of disks in the JBOD shelves, the total performance can easily reach the limit of one 4x 6G SAS port (~ 2.2 GB / s).

Recommended:

Key requirements: storage server configuration

Here you need to take into account such features as server performance, the number in the cluster for fault tolerance and ease of maintenance, load characteristics (total IOPS, throughput, etc.), application of unloading technologies (RDMA), number of ports SAS on JBOD and multipath requirements.

2-4 servers recommended; 2 x processors 6+ cores; memory> = 64 GB; two local hard drives in the mirror; two 1+ GbE ports for management and two 10+ GbE RDMA ports for data exchange; BMC port is either dedicated or combined with 1GbE and supports IPMI 2.0 and / or SMASH; SAS HBA from the previous paragraph.

Key requirements: number of disk pools

At this step, we take into account that pools are units for both management and fault tolerance. A failed disk in the pool affects all virtual disks located on the pool, each disk in the pool contains metadata.

Increase the number of pools:

Typical choice:

Key requirements: pool configuration The pool

contains default data for the corresponding VDs and several settings that affect the storage behavior:

Typical choice:

Key requirements: number of virtual disks (VD)

We take into account the following features:

Typical selection of the number of VDs: 2-4 per storage server.

Key requirements: virtual disk configuration (VD)

Three types of array are available - Simply, Mirror and Parity, but only Mirror is recommended for virtualization tasks.

3-way mirroring, compared to 2-way, doubles the protection against disk failure at the cost of a slight decrease in performance (higher than the penalty for recording), a decrease in available space and a corresponding increase in cost.

Comparison of mirroring types:

Microsoft for the Cloud Platform System (CPS) recommends a 3-way mirror.

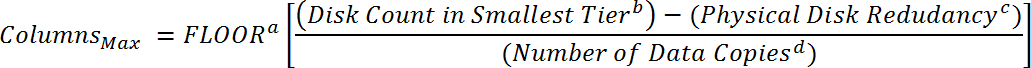

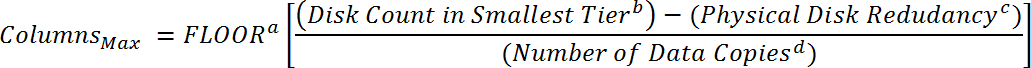

Key requirements: number of columns

Usually, increasing the number of columns increases VD performance, but delays can also increase (more columns - more disks that must confirm the operation). The formula for calculating the maximum number of columns in a particular mirrored VD is:

Where:

a) Rounding down.

b) The number of disks in a lower level (usually this is the number of SSDs).

c) Disk fault tolerance. For a 2-way mirror, this is 1 disc; for a 3-way mirror, this is 2 discs.

d) Number of copies of data. For 2-way mirror it is 2, for 3-way mirror it is 3.

If the maximum number of columns is selected and the disk fails, then the pool does not find the required number of disks to match the number of columns and, accordingly, fast rebuild will be unavailable.

The usual choice is 4-6 (1 less than the calculated maximum for Fast Rebuild). This feature is available only in PowerShell, when building through a graphical interface, the system itself will choose the maximum possible number of columns, up to 8.

Key requirements: virtual disk options

Typical choice:

Interleave: 256K (default)

WBC Size: 1GB (default)

IsEnclosureAware: use whenever possible.

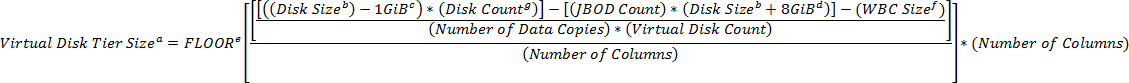

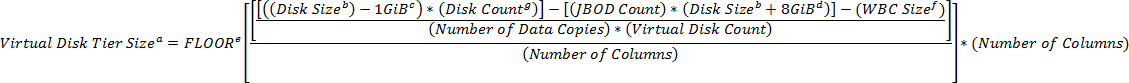

Key requirements: virtual disk size

Obtaining the optimal VD size on the pool requires calculations for each storage level and summarizing the optimal size of the VD levels. It is also necessary to reserve space for Fast Rebuild and metadata, as well as for internal rounding during calculations (buried in the depths of the Storage Spaces stack, so we just reserve it).

Formula:

Where:

a) A conservative approach leaves a little more unallocated space in the pool than is necessary for Fast Rebuild to work. Value in GiB (power of 2, GB is a derivative of power of 10).

b) Value in GiB.

c) Reserve space for Storage Spaces metadata (all disks in the pool contain metadata for both the pool and virtual disks).

d) Reserve space for Fast Rebuild:> = the size of one disk (+ 8GiB) for each level in the pool in the disk shelf.

e) The size of the storage level is already rounded up into several “tiles” (Storage Spaces breaks each disk in the pool into pieces called “tiles”, which are used to build the array), the size of the tile is equal to the size of the Storage Spaces unit (1GiB) times number of columns therefore, we round the size down to the nearest integer so as not to highlight the excess.

f) The size of the write cache, in GiB, for the desired level (1 for the SSD level, 0 for the HDD level).

g) The number of disks in a particular tier of a particular pool.

Spaces-Based SDS: the next steps

With the initial estimates have been decided, move on. Changes and improvements (and they will certainly appear) in the results will be made according to the results of testing.

Example!

What do you want to get?

Equipment:

Disks with a capacity of 2/4/6 / 8TB

IOPS (R / W): 140/130 R / W IOPS (declared characteristics: 175 MB / s, 4.16ms)

SSD with a capacity of 200/400/800 / 1600GB

IOPS

Read: 7000 IOPS @ 460MB / s (stated: 120K)

Write: 5500 IOPS @ 360MB / s (stated: 40K)

SSDs in real tasks show significantly different numbers than stated :)

Disk shelves - 60 disks, two SAS modules, 4 ports on each ETegro Fastor JS300 .

Inputs

Tiering is necessary because high performance is required.

High reliability and not very large capacity are required, we use 3-way mirroring.

Disk selection (based on performance and budget requirements): 4TB HDD and 800GB MLC SSD

Calculations:

Spindles by capacity :

Spindles by capacity (we want to get 10K IOPS) : The

total capacity significantly exceeds the initial estimates, since it is necessary to meet the performance requirements.

SSD level:

The ratio of SSD: HDD: The

number of disk shelves: The

location of disks in the shelves:

Increase the number of disks to get a symmetrical distribution and the optimal ratio of SSD: HDD. For ease of expansion, it is also recommended that the disk shelves be full.

SSD: 32 -> 36

HDD: 136 -> 144 (SSD: HDD 1: 4)

SSD / Enclosure: 12

HDD / Enclosure: 48

SAS cables

For a fail-safe connection and maximizing throughput, you need to connect each disk shelf with two cables (two paths from the server to each shelf). Total 6 SAS ports per server.

Number of servers

Based on the requirements for fault tolerance, IO, budget and multipath organization requirements, we take 3 servers.

The number of pools The

number of disks in the pool should be equal to or less than 80, we take 3 pools (180/80 = 2.25).

HDDs / Pool: 48

SSDs / Pool: 12

Pool configuration

Hot Spares: No

Fast Rebuild: Yes (reserve enough space)

RepairPolicy: Parallel (default)

RetireMissingPhysicalDisks: Always

IsPowerProtected: False (default)

Number of virtual disks

Based on the requirements, we use 2 VD per server, total 6 evenly distributed across pools (2 per pool).

Configuration of virtual disks

Based on the requirements for resistance to data loss (for example, there is no way to replace a failed disk in a few days) and load, we use the following settings:

Resiliency: 3-way mirroring

Interleave: 256K (default)

WBC Size: 1GB (default)

IsEnclosureAware: $ true

Number of columns

Virtual disk size and storage levels

Total:

Storage servers: 3

SAS ports / server: 6

SAS paths from server to each shelf: 2

Disk shelves: 3

Number of pools: 3

Number of VD: 6

Virtual Disks / Pool: 2

HDD: 144 @ 4TB (~ 576TB of raw space), 48 / shelf, 48 / pool, 16 / shelf / pool

SSD: 36 @ 800GB (~ 28TB of raw space), 12 / shelf , 12 / pool, 4 / shelf / pool

Virtual Disk size: SSD Tier + HDD Tier = 1110GB + 27926GB = 28.4TB

Total usable capacity: (28.4) * 6 = 170TB

Overhead: (1 - 170 / (576 + 28) ) = 72%

The traditional question usually looks like this:

- I want 100500 IOPS!

or:

- I want 20 terabytes.

Then it turns out that not that many IOPS are actually needed and a few SSDs would be enough, while those 20 terabytes (4 disks these days) are also expected to deliver decent performance, so in reality the result is tiered storage.

How do you approach this correctly and plan ahead?

You need to work through several key questions in order, for example:

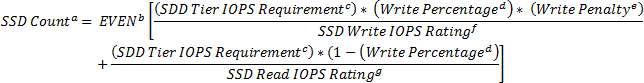

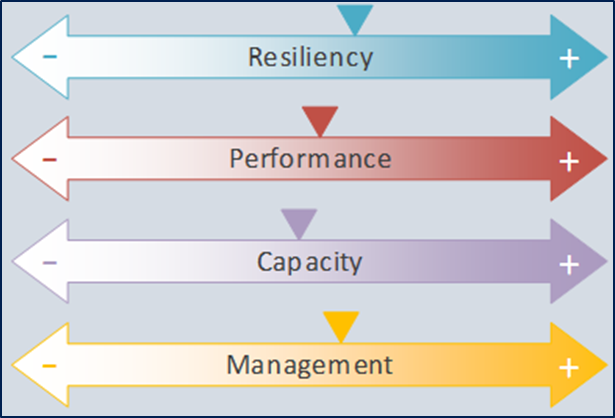

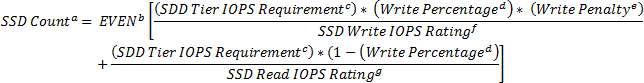

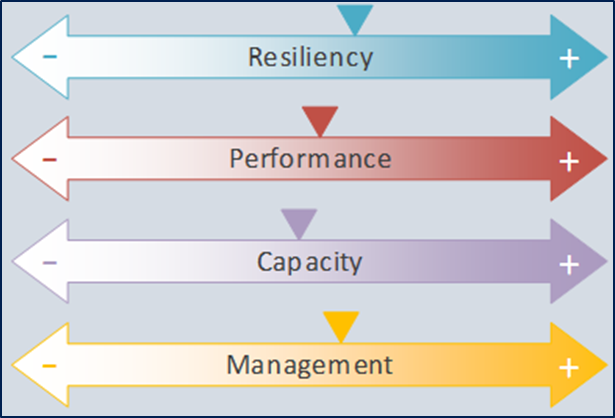

- Data integrity protection priority (for example, if a disk fails).

- Performance is required, but not at the cost of protection.

- Moderate capacity requirement.

- Budget size for building a mixed solution.

- Convenience of management and monitoring.

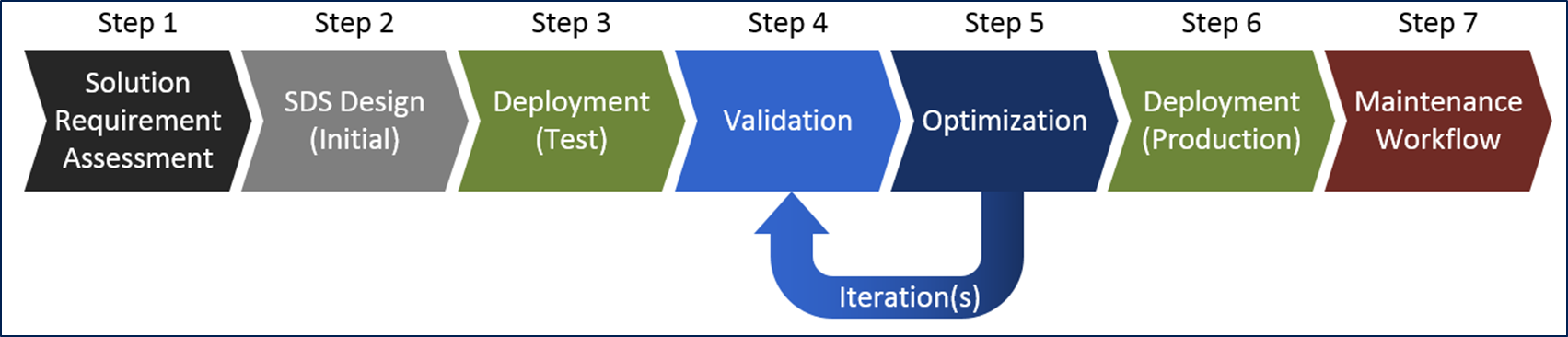

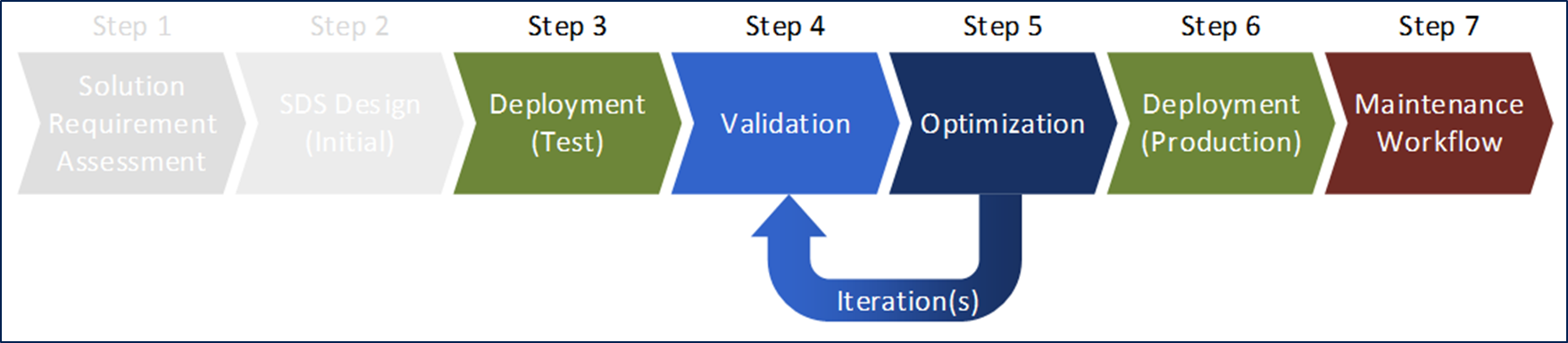

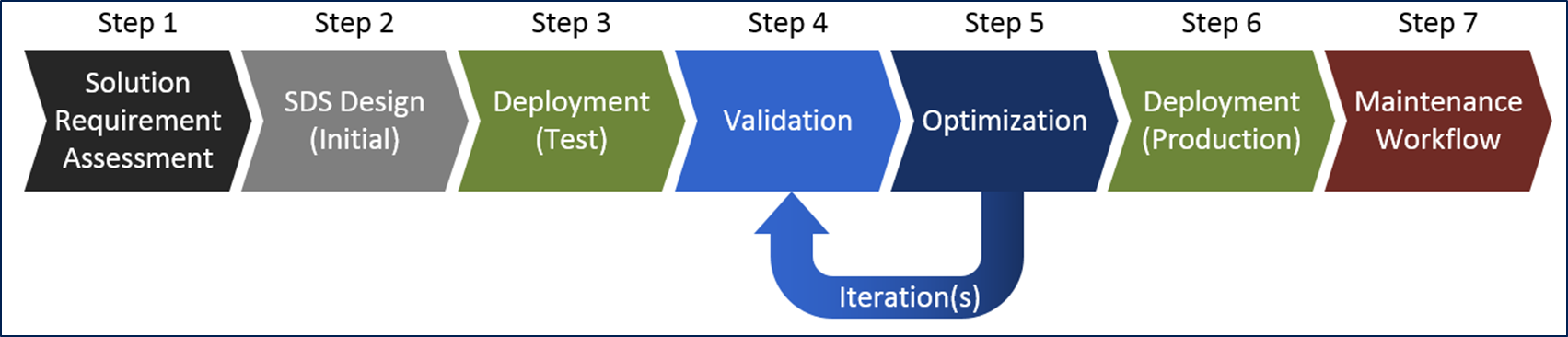

The process of creating storage on Storage Spaces

Key technologies:

- Storage Spaces: virtualized storage that provides fault tolerance and performance.

- Failover Clustering: highly available storage access.

- Scale-Out File Server (SOFS) and Cluster Shared Volumes (CSV): scalable and unified storage access.

- SMB3: a fault-tolerant, high-performance protocol using SMB Multichannel, SMB Direct, and SMB Client Redirection.

- System Center, PowerShell, and in-box Windows tooling: management, configuration, and maintenance.

Advantages of Storage Spaces: a flexible and inexpensive storage solution based entirely on commodity hardware and Microsoft software.

The material assumes familiarity with the basics of Storage Spaces (available here and here).

The steps:

| Step | Stage | Description |
|---|---|---|
| 1 | Solution assessment | Identify the key requirements for the solution and the criteria for a successful installation. |
| 2 | SDS design (preliminary) | Select building blocks (hardware and software) that meet the requirements; choose the topology and configuration. |
| 3 | Test deployment | Test the selected solution in a real environment, possibly at reduced scale (proof of concept, PoC). |
| 4 | Validation | Check compliance with the requirements from step 1. Usually starts with a synthetic load (SQLIO, Iometer, etc.); the final check is carried out in a real environment. |
| 5 | Optimization | Based on the results of the previous steps and the difficulties identified, tune and optimize the solution (add, remove, or replace hardware, modify the topology, reconfigure the software, etc.), then return to step 4. |
| 6 | Deployment (production) | Once the initial solution has been optimized to meet the stated requirements, roll the final version out into the production environment. |
| 7 | Operation | Operation phase: monitoring, troubleshooting, upgrading, scaling, etc. |

The first step has been described above.

Software Defined Storage Architecture Definition (Preliminary)

Highlights:

- How tiering works.

- Counting the number of HDD and SSD.

- Optimizing the ratio of SSD: HDD.

- Determining the required number of disk shelves.

- Requirements for SAS HBA and cable infrastructure.

- Determining the number of storage servers and configurations.

- Determining the number of disk pools (Pool).

- Configuring pools.

- Counting the number of virtual disks.

- Defining virtual disk configurations.

- Calculation of the optimal virtual disk size.

General principles

You must install all updates and patches (as well as firmware).

Try to keep the configuration symmetrical and consistent.

Consider possible failures and prepare a recovery plan for each.

Why firmware is important

Key requirements: tiering

Tiered storage (tiering) significantly increases the overall performance of the storage system. However, SSDs have a relatively small capacity and a high price per gigabyte, and tiering slightly increases the complexity of managing storage.

Tiering is usually used anyway, with the "hot" data placed on the high-performance SSD tier.

Key requirements: type and number of hard drives

The type, capacity, and number of disks should reflect the desired storage capacity. SAS and NL-SAS disks are supported, but expensive 10K/15K SAS disks are rarely needed when tiering with an SSD tier is used.

Typical choice *: 2-4 TB NL-SAS, a single model from one manufacturer with the latest stable firmware version.

* Typical values reflect current recommendations for working with virtualized tasks. Other types of load require additional study.

Capacity calculation:

Where:

a) The number of hard drives.

b) Rounding up to the nearest even number.

c) Typical data block size (in gigabytes).

d) Reserve for capacity growth.

e) Capacity lost to resiliency. Two-way mirror, three-way mirror, parity, and other layouts offer different margins of safety.

f) Each disk in Storage Spaces stores a copy of the metadata (for pools and virtual disks); if necessary, also include a reserve for Fast Rebuild.

g) Disk capacity in gigabytes.
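
The formula itself did not survive in this copy of the article. As a minimal sketch only, assuming the terms above combine as (data to store × growth reserve × number of data copies) divided by the usable capacity of one disk, rounded up to an even number, the estimate could look like this. The function names, the `units` parameter, and the way the per-disk reserve is subtracted are assumptions made for illustration, not the original formula.

```python
import math


def round_up_even(x: float) -> int:
    """Round up to the nearest even integer."""
    n = math.ceil(x)
    return n if n % 2 == 0 else n + 1


def hdd_count_by_capacity(units, unit_size_gb, growth, copies,
                          disk_gb, reserve_gb_per_disk=0.0):
    """Estimate the HDD count needed for capacity (assumed formula).

    units               -- number of workload units, e.g. VMs (assumption)
    unit_size_gb        -- typical size of one unit, GB
    growth              -- reserve for expansion, e.g. 0.15 for 15%
    copies              -- data copies kept by the layout (2 or 3 for mirrors)
    disk_gb             -- capacity of one disk, GB
    reserve_gb_per_disk -- metadata / Fast Rebuild reserve per disk, GB
    """
    required_gb = units * unit_size_gb * (1 + growth) * copies
    usable_per_disk_gb = disk_gb - reserve_gb_per_disk
    return round_up_even(required_gb / usable_per_disk_gb)


# 1000 VMs of 40 GB, 15% reserve, 3-way mirror, 4 TB disks
print(hdd_count_by_capacity(1000, 40, 0.15, 3, 4000))  # 36
```

With these inputs the raw requirement is 138,000 GB, or 34.5 disks, which rounds up to 36. Space for Fast Rebuild is not included unless it is passed in as the per-disk reserve.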

Performance calculation:

Where:

a) The number of hard drives.

b) Rounding up to the nearest even number.

c) The number of IOPS expected from the HDD tier.

d) Percentage of writes.

e) Write penalty, which differs by resiliency type and must be taken into account (for the system as a whole: with a 2-way mirror, 1 write operation causes 2 disk writes; with a 3-way mirror, 3).

f) Estimated write performance of one disk.

g) Estimated read performance of one disk.

* These calculations are only a starting point for system planning.
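
The formula is missing from this copy, so the following is a reconstruction from the term definitions above rather than the original expression: the read load and the penalty-weighted write load are each divided by the per-disk rate, summed, and rounded up to an even number. The function and parameter names are illustrative. With the example figures from later in the article (10K IOPS, 60/40 read/write, 3-way mirror, 140/130 R/W IOPS per disk) it gives the 136 HDDs quoted there.

```python
import math


def disks_for_iops(target_iops, write_fraction, write_penalty,
                   disk_write_iops, disk_read_iops):
    """Minimum disk count for a target IOPS figure (starting point only)."""
    # Every logical write turns into `write_penalty` physical writes.
    write_load = target_iops * write_fraction * write_penalty / disk_write_iops
    read_load = target_iops * (1 - write_fraction) / disk_read_iops
    n = math.ceil(write_load + read_load)
    return n + (n % 2)  # bump odd results up to the next even number


# 10K IOPS, 40% writes, 3-way mirror, HDD at 130 write / 140 read IOPS
print(disks_for_iops(10_000, 0.4, 3, 130, 140))  # 136
```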

Key requirements: type and number of SSDs

With the hard drives sorted out, it's time to size the SSDs. Their type, number, and capacity are based on the desired maximum performance of the disk subsystem.

Increasing the capacity of the SSD tier lets the built-in tiering engine place more of the workload on the faster tier.

Since the number of columns (a parallelization mechanism for data access) in a virtual disk must be the same for both tiers, increasing the number of SSDs usually allows more columns to be created, which also increases the performance of the HDD tier.

Typical choice: 200-1600 GB MLC SSDs, a single model from one manufacturer with the latest stable firmware version.

Performance calculation *:

Where:

a) The number of SSDs.

b) Rounding up to the nearest even number.

c) The number of IOPS expected from the SSD tier.

d) Percentage of writes.

e) Write penalty, which differs by resiliency type and must be taken into account when planning.

f) Estimated write performance of one SSD.

g) Estimated read performance of one SSD.

* A starting point only. In practice, the number of SSDs usually significantly exceeds the minimum required for performance because of additional factors.
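
As a worked illustration, assuming the terms above combine as read load plus penalty-weighted write load (the formula is not shown in this copy, so this is a reconstruction), take the example figures used later in the article: 100K IOPS, 40% writes, a 3-way mirror (write penalty 3), and measured SSD performance of 7000 read and 5500 write IOPS. The notation $\lceil\cdot\rceil_{\text{even}}$ for rounding up to an even number is introduced here for convenience.

```latex
N_{\mathrm{SSD}}
  = \left\lceil
      \frac{100000 \cdot 0.4 \cdot 3}{5500}
      + \frac{100000 \cdot (1 - 0.4)}{7000}
    \right\rceil_{\text{even}}
  = \left\lceil 21.8 + 8.6 \right\rceil_{\text{even}}
  = \left\lceil 30.4 \right\rceil_{\text{even}}
  = 32
```

This agrees with the starting count of 32 SSDs in the example, before it is raised to 36 for a symmetrical layout.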

Recommended minimum number of SSDs in shelves:

| Array type | 24-disk JBOD (2 columns) | 60-disk JBOD (4 columns) |
|---|---|---|
| Two-way mirror | 4 | 8 |
| Three-way mirror | 6 | 12 |

Key requirements: SSD:HDD ratio

Choosing the ratio is a balance of performance, capacity, and cost. Adding SSDs improves performance (more operations are served from the SSD tier, the number of columns in virtual disks increases, etc.), but significantly increases cost and reduces potential capacity (a small SSD takes a slot that could hold a high-capacity disk).

Typical choice: SSD:HDD *: 1:4 to 1:6

* By quantity, not capacity.

Key requirements: number and configuration of disk shelves (JBOD)

Disk shelves differ in many ways: the number of disk slots, the number of SAS ports, etc.

Using several shelves lets the fault tolerance scheme take shelf boundaries into account (the enclosure awareness feature), but it also increases the disk space required for Fast Rebuild.

The configuration should be symmetrical across all shelves (both cabling and disk placement).

Typical choice: number of shelves >= 2, 2 IO modules per shelf, a single model, the latest firmware versions, and a symmetrical disk layout in all shelves.

Typically, the number of disk shelves is derived from the total number of disks, allowing for expansion headroom, shelf-level fault tolerance (enclosure awareness), and/or additional SAS paths for throughput and fault tolerance.

Calculation:

Where:

a) Number of JBOD.

b) Rounded up.

c) Number of HDDs.

d) Number of SSDs.

e) Free disk slots.

f) The maximum number of disks in a JBOD.
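
The formula is not present in this copy. A minimal sketch, assuming the terms above combine as the total number of occupied and reserved slots divided by the shelf size, rounded up (the function name is illustrative):

```python
import math


def jbod_count(hdds, ssds, free_slots, max_disks_per_jbod):
    """Number of disk shelves needed for the given disks and spare slots."""
    return math.ceil((hdds + ssds + free_slots) / max_disks_per_jbod)


# 136 HDDs + 32 SSDs, no spare slots, 60-disk shelves
print(jbod_count(136, 32, 0, 60))  # 3
```

Note that the result is only a lower bound: enclosure awareness may require more shelves than the disk count alone does.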

Key requirements: SAS HBA and cables

The SAS cable topology must ensure that each storage server connects to the JBOD shelves using at least one SAS path (that is, one SAS port in the server corresponds to one SAS port in each JBOD).

Depending on the number and type of disks in the JBOD shelves, the total performance can easily reach the limit of one 4x 6G SAS port (~2.2 GB/s).

Recommended:

- Number of SAS ports per server >= 2.

- Number of SAS HBAs per server >= 1.

- Latest SAS HBA firmware versions.

- Several SAS paths to the JBOD shelves.

- Windows MPIO setting: Round-Robin.

Key requirements: storage server configuration

Here you need to take into account server performance, the number of servers in the cluster (for fault tolerance and ease of maintenance), load characteristics (total IOPS, throughput, etc.), the use of offload technologies (RDMA), the number of SAS ports on the JBODs, and multipath requirements.

Recommended: 2-4 servers; 2 processors with 6+ cores each; memory >= 64 GB; two local hard drives in a mirror; two 1+ GbE ports for management and two 10+ GbE RDMA ports for data traffic; a BMC port, either dedicated or shared with a 1 GbE port, that supports IPMI 2.0 and/or SMASH; SAS HBAs as described in the previous section.

Key requirements: number of disk pools

At this step, keep in mind that a pool is a unit of both management and fault tolerance. A failed disk affects all virtual disks located in its pool, and every disk in the pool contains metadata.

Increasing the number of pools:

- Increases the reliability of the system, since the number of fault domains increases.

- Increases the number of disks set aside as reserve space (an additional overhead), since Fast Rebuild works at the pool level.

- Increases system management complexity.

- Reduces the number of columns in a virtual disk (VD), which reduces performance, since a VD cannot span multiple pools.

- Reduces the time needed to process pool metadata, for example when rebuilding a virtual disk or transferring pool ownership during a cluster failover (a performance improvement).

Typical choice:

- The number of pools from 1 to the number of disk shelves.

- Disks / Pool <= 80

Key requirements: pool configuration

The pool holds default settings for its VDs and several options that affect storage behavior:

| Option | Description |
|---|---|
| RepairPolicy | Sequential vs. Parallel: lower I/O load but slow, or higher I/O load but fast, respectively. |
| RetireMissingPhysicalDisks | With Fast Rebuild, a dropped disk does not trigger a pool repair when the value is Auto. With Always, a rebuild using a spare disk always starts, though not immediately: it begins 5 minutes after the first failed write to the disk. |
| IsPowerProtected | True means that all write operations are considered complete without confirmation from the disk. Without power protection, a power outage can cause data corruption (hard drives have a similar technology). |

Typical choice:

- Hot Spares: No

- Fast Rebuild: Yes

- RepairPolicy: Parallel (default)

- RetireMissingPhysicalDisks: Auto (default, MS recommended)

- IsPowerProtected: False (default, but if enabled, performance increases significantly)

Key requirements: number of virtual disks (VD)

We take into account the following features:

- The ratio of SMB shares serving clients to CSV volumes to the underlying VDs should be 1:1:1.

- Each tiered virtual disk has its own dedicated write-back cache (WBC); increasing the number of VDs can improve performance for some workloads (all else being equal).

- Increasing the number of VDs increases management complexity.

- Having more loaded VDs makes it easier to redistribute the load of a failed node across the remaining cluster nodes.

Typical selection of the number of VDs: 2-4 per storage server.

Key requirements: virtual disk configuration (VD)

Three resiliency types are available (Simple, Mirror, and Parity), but only Mirror is recommended for virtualization workloads.

Compared to 2-way mirroring, 3-way mirroring doubles the protection against disk failure at the cost of a slight decrease in performance (a higher write penalty), a decrease in available space, and a corresponding increase in cost.

Comparison of mirroring types:

| Number of pools | Mirror type | Overhead | Pool resiliency | System resiliency |
|---|---|---|---|---|
| 1 | 2-way | 50% | 1 disk | 1 disk |
| 1 | 3-way | 67% | 2 disks | 2 disks |
| 2 | 2-way | 50% | 1 disk | 2 disks |
| 2 | 3-way | 67% | 2 disks | 4 disks |
| 3 | 2-way | 50% | 1 disk | 3 disks |
| 3 | 3-way | 67% | 2 disks | 6 disks |
| 4 | 2-way | 50% | 1 disk | 4 disks |
| 4 | 3-way | 67% | 2 disks | 8 disks |

Microsoft recommends a 3-way mirror for the Cloud Platform System (CPS).
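
The numbers in the comparison follow a simple pattern, which can be sketched as below. This is an illustration of the table's arithmetic, not a statement about Storage Spaces internals; the function name is made up, and the system-wide figure is a best case that assumes the failed disks are spread evenly across pools.

```python
def mirror_summary(pools, copies):
    """Return (overhead %, disks tolerated per pool, disks tolerated overall)."""
    overhead_pct = round((1 - 1 / copies) * 100)  # space spent on extra copies
    per_pool = copies - 1                         # one copy must survive
    system_wide = pools * per_pool                # best case: failures spread out
    return overhead_pct, per_pool, system_wide


for pools in (1, 2, 3, 4):
    for copies in (2, 3):
        print(pools, f"{copies}-way", mirror_summary(pools, copies))
```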

Key requirements: number of columns

Usually, increasing the number of columns increases VD performance, but latency can also increase (more columns means more disks that must confirm each operation). The formula for calculating the maximum number of columns in a particular mirrored VD is:

Where:

a) Rounding down.

b) The number of disks in the smaller tier (usually this is the number of SSDs).

c) Disk fault tolerance. For a 2-way mirror, this is 1 disk; for a 3-way mirror, this is 2 disks.

d) Number of copies of data. For a 2-way mirror it is 2; for a 3-way mirror it is 3.

If the maximum number of columns is selected and a disk fails, the pool cannot find enough disks to match the number of columns, and Fast Rebuild will therefore be unavailable.

The usual choice is 4-6 (1 less than the calculated maximum, to leave room for Fast Rebuild). This setting is available only in PowerShell; when a VD is created through the graphical interface, the system itself chooses the maximum possible number of columns, up to 8.
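
The formula is missing from this copy. A plausible reading of the term definitions above, offered as an assumption rather than the original expression, is: subtract the disk fault tolerance from the number of disks in the smaller tier, divide by the number of data copies, and round down. The function name is illustrative.

```python
def max_columns(disks_in_smaller_tier, copies):
    """Maximum column count for a mirrored VD (assumed formula)."""
    fault_tolerance = copies - 1  # 1 for a 2-way mirror, 2 for a 3-way mirror
    return (disks_in_smaller_tier - fault_tolerance) // copies


print(max_columns(16, 3))  # (16 - 2) // 3
print(max_columns(12, 3))  # (12 - 2) // 3
```

For 16 SSDs in a pool with a 3-way mirror this gives 4 columns; for 12 SSDs it gives 3.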

Key requirements: virtual disk options

| Virtual disk option | Considerations |
|---|---|
| Interleave | For random workloads (virtualized ones, for example), the interleave size must be greater than or equal to the largest active request, since any larger request is split into several operations, which reduces performance. |
| WBC size | The default value is 1 GB, a reasonable balance between performance and fault tolerance for most workloads. A larger cache increases failover time: when a CSV volume is moved, the cache must be flushed and recovered, which can also lead to denial-of-service problems. |
| IsEnclosureAware | Increases the level of protection against failures; recommended whenever possible. To enable it, the number of disk shelves must match the resiliency type (available only through PowerShell): a 3-way mirror needs 3 shelves, dual parity needs 4, etc. This feature allows the system to survive the complete loss of a disk shelf. |

Typical choice:

Interleave: 256K (default)

WBC Size: 1GB (default)

IsEnclosureAware: use whenever possible.

Key requirements: virtual disk size

Obtaining the optimal VD size for a pool requires a calculation for each storage tier and then summing the optimal tier sizes. It is also necessary to reserve space for Fast Rebuild and metadata, as well as for internal rounding during calculations (this is buried deep in the Storage Spaces stack, so we simply reserve it).

Formula:

Where:

a) A conservative approach leaves a little more unallocated space in the pool than Fast Rebuild strictly needs. Value in GiB (GiB is a power-of-2 unit; GB is a power-of-10 unit).

b) Value in GiB.

c) Reserved space for Storage Spaces metadata (all disks in the pool contain metadata for both the pool and the virtual disks).

d) Reserved space for Fast Rebuild: >= the size of one disk (+ 8 GiB) for each tier in the pool in each disk shelf.

e) The tier size is rounded to a whole number of “tiles” (Storage Spaces breaks each disk in the pool into pieces called “tiles”, which are used to build the array). The tile size equals the Storage Spaces allocation unit (1 GiB) times the number of columns, so we round the size down to the nearest whole tile so as not to allocate the excess.

f) The size of the write cache, in GiB, for the given tier (1 for the SSD tier, 0 for the HDD tier).

g) The number of disks in a particular tier of a particular pool.

Spaces-Based SDS: the next steps

With the initial estimates settled, we move on. Changes and improvements (and there will certainly be some) are made based on the results of testing.

Example!

What do you want to get?

- High level of fault tolerance.

- Target performance: 100K IOPS from the SSD tier and 10K IOPS from the HDD tier under random load with 64K blocks and a 60/40 read/write ratio.

- Capacity: 1000 virtual machines of 40 GB each, plus a 15% reserve.

Equipment:

HDDs with a capacity of 2/4/6/8 TB

IOPS (R/W): 140/130 (declared characteristics: 175 MB/s, 4.16 ms)

SSDs with a capacity of 200/400/800/1600 GB

IOPS:

Read: 7000 IOPS @ 460 MB/s (stated: 120K)

Write: 5500 IOPS @ 360 MB/s (stated: 40K)

SSDs in real workloads show significantly different numbers than stated :)

Disk shelves: ETegro Fastor JS300, 60 disks, two SAS modules with 4 ports each.

Inputs

Tiering is necessary because high performance is required.

High reliability is required and the capacity is not very large, so we use 3-way mirroring.

Disk selection (based on performance and budget requirements): 4TB HDD and 800GB MLC SSD

Calculations:

Spindles by capacity:

Spindles by performance (we want to get 10K IOPS):

The total capacity significantly exceeds the initial estimates, since the number of disks is driven by the performance requirements.

SSD tier:

SSD:HDD ratio:

Number of disk shelves:

Location of disks in the shelves:

Increase the number of disks to get a symmetrical distribution and the optimal ratio of SSD: HDD. For ease of expansion, it is also recommended that the disk shelves be full.

SSD: 32 -> 36

HDD: 136 -> 144 (SSD:HDD 1:4)

SSD / Enclosure: 12

HDD / Enclosure: 48
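
The article does not state the rule used to go from 32 to 36 and from 136 to 144. One way to arrive at these numbers, offered here as an assumption, is to require that every pool holds the same number of disks in every shelf, so each count must be a multiple of shelves × pools (3 × 3, using the 3 pools chosen further below). The helper name is illustrative.

```python
import math


def round_up_to_multiple(count, multiple):
    """Smallest multiple of `multiple` that is >= count."""
    return math.ceil(count / multiple) * multiple


shelves, pools, slots_per_shelf = 3, 3, 60
group = shelves * pools  # disks are spread evenly over 9 shelf/pool cells

ssd = round_up_to_multiple(32, group)
hdd = round_up_to_multiple(136, group)

print(ssd, hdd)                                # 36 144
print(ssd // shelves, hdd // shelves)          # 12 48 per shelf
print((ssd + hdd) // shelves == slots_per_shelf)  # True: shelves are full
```

The resulting 36 SSDs and 144 HDDs also give exactly 1:4 and fill all three 60-slot shelves.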

SAS cables

For a fault-tolerant connection and maximum throughput, each disk shelf must be connected with two cables (two paths from the server to each shelf). That gives a total of 6 SAS ports per server.

Number of servers

Based on the fault tolerance, I/O, budget, and multipath requirements, we take 3 servers.

Number of pools

The number of disks in a pool should not exceed 80, so we take 3 pools (180 / 80 = 2.25, rounded up).

HDDs / Pool: 48

SSDs / Pool: 12

Pool configuration

Hot Spares: No

Fast Rebuild: Yes (reserve enough space)

RepairPolicy: Parallel (default)

RetireMissingPhysicalDisks: Always

IsPowerProtected: False (default)

Number of virtual disks

Based on the requirements, we use 2 VDs per server, 6 in total, evenly distributed across the pools (2 per pool).

Configuration of virtual disks

Based on the load and the requirements for resistance to data loss (for example, a failed disk may not be replaceable for several days), we use the following settings:

Resiliency: 3-way mirroring

Interleave: 256K (default)

WBC Size: 1GB (default)

IsEnclosureAware: $true

Number of columns

Virtual disk size and storage tiers

Total:

Storage servers: 3

SAS ports / server: 6

SAS paths from server to each shelf: 2

Disk shelves: 3

Number of pools: 3

Number of VD: 6

Virtual Disks / Pool: 2

HDD: 144 @ 4 TB (~576 TB of raw space), 48/shelf, 48/pool, 16/shelf/pool

SSD: 36 @ 800 GB (~28 TB of raw space), 12/shelf, 12/pool, 4/shelf/pool

Virtual disk size: SSD tier + HDD tier = 1110 GiB + 27926 GiB = 28.4 TiB

Total usable capacity: 28.4 * 6 = 170 TiB

Overhead: 1 - 170 / (576 + 28) = 72%
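
The totals can be re-derived with a few lines of arithmetic. The sketch below assumes the two tier sizes are in GiB, as in the sizing formula (1110 + 27926 only comes to 28.4 when divided by 1024), while the raw capacities are in decimal TB. The variable names are illustrative.

```python
GIB_PER_TIB = 1024

hdd_raw_tb = 144 * 4    # 576 TB
ssd_raw_tb = 36 * 0.8   # 28.8 TB
raw_tb = hdd_raw_tb + ssd_raw_tb

vd_tib = (1110 + 27926) / GIB_PER_TIB  # one virtual disk, about 28.4 TiB
usable_tib = vd_tib * 6                # six virtual disks, about 170 TiB

# Overhead as computed in the summary (TiB divided by TB, mixed units)
overhead_mixed = 1 - usable_tib / raw_tb

# Same figure with usable capacity converted to decimal TB first
usable_tb = usable_tib * 2**40 / 10**12
overhead_same_units = 1 - usable_tb / raw_tb

print(f"{vd_tib:.1f} TiB per VD, {usable_tib:.0f} TiB usable")
print(f"overhead: {overhead_mixed:.0%} (mixed units), "
      f"{overhead_same_units:.0%} (same units)")
```

The mixed-unit calculation reproduces the 72% above; converting both sides to the same unit gives roughly 69%.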